Knowledge Transferring Assessment

A Knowledge Transferring Assessment combines knowledge assessment directly with knowledge transfer. In this sense it is a specific form of Online Assessment (also called e-assessment or computer-based assessment) that implements a "Pull Learning Approach".[1] In contrast to an Online Assessment that concludes a formal training in order to assess the knowledge gained (as part of a "Push Learning Approach" [2]), a Knowledge Transferring Assessment replaces the formal training: by means of a specific design, the required or desired knowledge is provided fully integrated into the assessment.

Advantages

- Each trainee spends only the time required to gain the knowledge still missing, i.e. no time is wasted on knowledge that is already sufficient to answer the related assessment questions.

- The assessment itself becomes the means to "pull" the information required (see "pull based learning" [3]).

- Performers become aware of the availability of the web-based material, which supports their "Self-efficacy" [4] in their day-to-day work.

- The desired or required knowledge is gained in passing during the assessment.

- Any existing knowledge base or knowledge material can be integrated, provided it meets the following minimum requirements:

  - It is reachable via a URL.

  - Its granularity matches the assessed questions, so that the knowledge required to answer a question can be gathered from one or two web pages, i.e. the knowledge is not spread over too many pages.

  - Its content provides the required knowledge in a simple and efficient manner.

  - Its content is structured in correspondence with the assessed questions.

Primary design principles

The principles below are mandatory for a Knowledge Transferring Assessment and distinguish it from the usual Online Assessments performed, e.g., at the end of a formal training.

- The Knowledge Transferring Assessment is web-based and can thus be used like web-based teaching materials.

- The Knowledge Transferring Assessment primarily makes use of multiple choice questions. However, other forms such as a "sequence of steps" or a so-called Click Assessment are alternatives. The latter is a means to assess a user's ability to use application software correctly when performing a certain task.

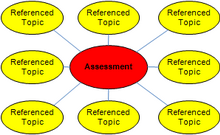

- In a Knowledge Transferring Assessment each question refers (via a URL) to the source(s) providing the required knowledge, i.e. the knowledge that enables the performer to answer the question correctly.

- In a multiple choice question, all answer options but one are correct statements, so the one and only incorrect statement is the answer to be selected (see Objective Assessment). Sample question: "Which of the tasks named below is not part of process x?" Because all options but one name correct steps, the performer obtains a maximum of correct information, an essential design principle for transferring knowledge through the assessment itself.

- For each question a "negative feedback" (shown when the selected answer is not correct) additionally refers to the source of knowledge, in order to reinforce the impression that the information required for a correct answer is available effortlessly.

- For each question a "positive feedback" confirms and amplifies the knowledge gained.
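
The question design described in this list can be sketched as a small data structure. The following Python sketch is illustrative only: the class name `Question`, its field names, the feedback wording and the `example.com` URL are assumptions made for this example and do not come from any particular authoring tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    """Hypothetical model of an 'all options but one are correct' question."""

    prompt: str
    true_statements: tuple[str, ...]  # options that state correct facts
    false_statement: str              # the single option the performer must find
    source_urls: tuple[str, ...]      # where the required knowledge can be read
    positive_feedback: str            # confirms and amplifies the knowledge

    def options(self) -> list[str]:
        """All answer options (unshuffled)."""
        return [*self.true_statements, self.false_statement]

    def feedback(self, chosen: str) -> str:
        """Positive feedback for the right choice, otherwise a pointer to the source."""
        if chosen == self.false_statement:
            return self.positive_feedback
        sources = ", ".join(self.source_urls)
        return f"Not quite. The required information is available at: {sources}"


# Placeholder content modelled on the sample question in the text.
question = Question(
    prompt="Which of the tasks named below is not part of process x?",
    true_statements=("Task A", "Task B", "Task C"),
    false_statement="Task D",
    source_urls=("https://example.com/process-x",),
    positive_feedback="Correct: tasks A, B and C make up process x.",
)

print(question.feedback("Task A"))  # negative feedback, names the source URL
print(question.feedback("Task D"))  # positive feedback
```

Keeping the source URLs on the question itself means that both the question text and the negative feedback can point to the same knowledge material.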

Efficiency and efficacy

The efficiency of a Knowledge Transferring Assessment results from combining knowledge transfer with knowledge assessment, which minimizes the required training effort. Individuals who already have a certain level of know-how and knowledge benefit in particular. Independence of location, time, mobility, resource availability, etc. is a benefit of e-learning in general and of Online Assessments specifically. The efficacy depends on:

- The relevance and completeness of the assessment questions.

- The form of the assessment questions, which must make it possible to convey a maximum of relevant knowledge (only one answer among many is incorrect).

- The online availability of both the assessment itself and all required knowledge material.

- The coherence between the assessment questions and the structure and design of the knowledge material.

Secondary (general) design principles

The properties below are worth considering for an Online Assessment, but they depend on the authoring tool used. Which properties and features matter, and how much, should be decided as part of the tool selection process. Many of the properties below increase the value of the tool and thus ultimately the value of the Knowledge Transferring Assessment it provides.

- The assessment itself does not store any user-related data and thus does not conflict with data privacy rules. As a result, an assessment has to be finished within one session.

- The final result is presented together with the answer to the last question. It indicates how many questions were answered correctly and/or how many points of the possible maximum were reached.

- Only the first answer chosen counts toward the result; a second choice is not possible. A trial-and-error approach is therefore ruled out, even as a way to find out the correct answers for a later attempt.

- Each start of the assessment presents not only the questions in random order but also the answers belonging to each question. Helping each other thus becomes very time-consuming, even when several individuals sitting next to each other take the assessment.

- The result reached so far is displayed permanently. Example: n of m questions answered, p % of the required result reached.

- It is displayed how many questions still need to be answered and to what extent they contribute to the final result when answered correctly. The performer can thus choose to stop a session that can no longer be finished with the required minimum result.

- The major properties of the assessment should be presented to the performer at the very beginning in order to avoid frustration.
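
Several of these properties (random order of questions and answers, only the first answer counting, a permanently displayed progress line, and the option to stop a session that can no longer succeed) can be illustrated with a minimal in-memory sketch. The `Session` class, the dictionary keys and the wording of the progress line are assumptions chosen for this example; real authoring tools may behave differently.

```python
import random


class Session:
    """In-memory state for one assessment run; nothing is persisted."""

    def __init__(self, questions, required_points, rng=None):
        # questions: dicts with "id", "options", "correct" and "points" keys
        rng = rng or random.Random()
        self.questions = list(questions)
        rng.shuffle(self.questions)  # random question order per session
        self.options = {
            q["id"]: rng.sample(q["options"], len(q["options"]))  # random answer order
            for q in self.questions
        }
        self.required_points = required_points
        self.results = {}  # question id -> points earned

    def answer(self, question_id, chosen):
        """Record only the first answer; return False if a later choice is ignored."""
        if question_id in self.results:
            return False
        q = next(q for q in self.questions if q["id"] == question_id)
        self.results[question_id] = q["points"] if chosen == q["correct"] else 0
        return True

    def points(self):
        return sum(self.results.values())

    def progress(self):
        percent = 100 * self.points() / self.required_points
        return (f"{len(self.results)} of {len(self.questions)} questions answered, "
                f"{percent:.0f} % of the required result reached")

    def can_still_pass(self):
        """True while the open questions could still lift the result to the minimum."""
        open_points = sum(q["points"] for q in self.questions
                          if q["id"] not in self.results)
        return self.points() + open_points >= self.required_points


session = Session(
    [
        {"id": "q1", "options": ["A", "B", "C"], "correct": "C", "points": 2},
        {"id": "q2", "options": ["A", "B"], "correct": "A", "points": 1},
    ],
    required_points=2,
    rng=random.Random(0),
)
session.answer("q1", "A")  # wrong first choice: 0 points
session.answer("q1", "C")  # ignored, the first choice already counted
print(session.progress())
print(session.can_still_pass())
```

Because the state lives only in the `Session` object, closing the session discards it, which matches the requirement that an assessment be finished within one session.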

Properties of the authoring tool

There are many tools available to design an Online Assessment, and they differ in their design and implementation features.[5] The properties below are useful examples and may support the tool selection process.

- It can be defined to what extent (with how many points) a certain question contributes to the final result. Tools may automate this based on the number of answers from which the user has to pick the correct one.

- It can be defined how many questions are randomly selected from a larger question pool. This adds some difficulty for the performer because at least some questions differ between sessions.

- It can be defined from which of several question pools the questions are selected and assembled into one specific assessment. This enables the design of role-specific or task-specific assessments. In addition, question pooling ensures that an assessment contains a certain number of questions for each knowledge aspect. When all questions are randomly selected from one pool only, a certain knowledge aspect may not be covered.

- The assembled assessment should be a compiled result from an editable source. This prevents a user from finding the correct answer by inspecting the HTML source.

- A SCORM export should be supported in order to enable the assessment's integration into an LMS (Learning Management System).
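
The pool-based selection and the automated weighting mentioned in this list can be sketched as follows. Both function names and the pool names are hypothetical, and the weighting rule (one point per answer option beyond the first) is only one conceivable way a tool might derive points from the number of answers; it is an assumption made for illustration.

```python
import random


def assemble(pools, quota, rng=None):
    """Draw quota[name] questions at random from each named pool.

    Drawing per pool guarantees that every knowledge aspect is represented,
    which a single draw from one large pool cannot ensure.
    """
    rng = rng or random.Random()
    selected = []
    for name, count in quota.items():
        selected.extend(rng.sample(pools[name], count))  # no duplicates within a pool
    rng.shuffle(selected)  # mix the aspects in the final assessment
    return selected


def points_for(option_count):
    """Assumed weighting rule: more answer options, more points."""
    return option_count - 1


# Placeholder pools holding question identifiers.
pools = {
    "safety": ["s1", "s2", "s3"],
    "billing": ["b1", "b2"],
}
picked = assemble(pools, {"safety": 2, "billing": 1}, random.Random(1))
print(picked)
print(points_for(4))
```

A role-specific or task-specific assessment then corresponds to a particular `quota` mapping, for example one that draws only from the pools relevant to that role.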

Disadvantages and risks

Besides the general pitfalls described in "Where Organizations Go Wrong With e-Learning",[6] a risk specific to this approach is that the random order of the assessment questions potentially conflicts with a didactic concept. Either all didactic aspects have to be covered by the knowledge material, or one dedicated question pool is exempted from the random ordering of questions.

References

- "The Pull Approach" in http://www.articulate.com/rapid-elearning/are-your-e-learning-courses-pushed-or-pulled/

- "The Push Approach" in http://www.articulate.com/rapid-elearning/are-your-e-learning-courses-pushed-or-pulled/

- Put your learners on a diet - consider a pull-based learning approach, http://www.learninggeneralist.com/2009/11/put-your-learners-on-diet-consider-pull.html, referred to also in eLearningLearning (http://www.elearninglearning.com)

- Mun Y. Yi, Yujong Hwang, Predicting the use of web-based information systems: self-efficacy, enjoyment, learning goal orientation, and the technology acceptance model, International Journal of Human-Computer Studies, Volume 59, Issue 4, Zhang and Dillon Special Issue on HCI and MIS, October 2003, Pages 431-449, ISSN 1071-5819, doi:10.1016/S1071-5819(03)00114-9.

- E-assessment for open learning, Stephen J Swithenby, Centre for Open Learning of Mathematics, Science, Computing and Technology, The Open University, Milton Keynes MK7 6AA, UK, http://www.eadtu.nl/academic-networks/eltan/files/Stephen%20Swithenby%20-%20E-assessment%20for%20open%20learning.pdf (archived 2011-07-20 at the Wayback Machine)

- Where Organizations Go Wrong With e-Learning, http://minutebio.com/blog/2009/06/20/where-organizations-go-wrong-with-e-learning/