Sigmoid function


A sigmoid function is a mathematical function having an "S"-shaped curve (sigmoid curve). Often, "sigmoid function" refers to the special case of the logistic function shown in the first figure and defined by the formula

$$S(t) = \frac{1}{1 + e^{-t}} = \frac{e^{t}}{e^{t} + 1}.$$
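
As a minimal illustration (a Python sketch, not taken from any particular library), the logistic function S(t) = 1/(1 + e^(−t)) can be evaluated as below. The branch on the sign of t is a common numerical safeguard assumed here, not part of the definition: for negative t it uses the equivalent form e^t/(1 + e^t), so `math.exp` never receives a large positive argument.

```python
import math


def logistic(t: float) -> float:
    """Standard logistic sigmoid S(t) = 1 / (1 + e^(-t))."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    # For negative t use the equivalent form e^t / (1 + e^t) so that
    # math.exp only ever sees a non-positive argument and cannot overflow.
    z = math.exp(t)
    return z / (1.0 + z)


for t in (-1000.0, -2.0, 0.0, 2.0, 1000.0):
    print(f"S({t}) = {logistic(t):.6f}")
```

Without the branch, an input of −1000 would require `math.exp(1000.0)`, which raises `OverflowError` in Python.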

Other examples of similar shapes include the Gompertz curve (used in modeling systems that saturate at large values of t) and the ogee curve (used in the spillways of some dams). Sigmoid functions have finite limits as t approaches negative and positive infinity, most often running either from 0 to 1 or from −1 to 1, depending on convention.

A wide variety of sigmoid functions, including the logistic and hyperbolic tangent functions, have been used as the activation function of artificial neurons. Sigmoid curves are also common in statistics as cumulative distribution functions (which go from 0 to 1), such as the integrals of the logistic, normal, and Student's t probability density functions.
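
The logistic and hyperbolic tangent activations are rescaled versions of each other: tanh(t) = 2S(2t) − 1, where S is the logistic function. The Python sketch below (function names are illustrative) checks this identity numerically against `math.tanh`.

```python
import math


def logistic(t: float) -> float:
    """Logistic sigmoid S(t) = 1 / (1 + e^(-t)), range (0, 1)."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)  # overflow-safe form for negative t
    return z / (1.0 + z)


def tanh_via_logistic(t: float) -> float:
    """Hyperbolic tangent rebuilt from the logistic: tanh(t) = 2*S(2t) - 1.

    Doubling the input steepens the curve, and 2*S - 1 maps the
    logistic's (0, 1) range onto tanh's (-1, 1) range.
    """
    return 2.0 * logistic(2.0 * t) - 1.0


for t in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(t, math.tanh(t), tanh_via_logistic(t))
```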

Definition

A sigmoid function is a bounded differentiable real function that is defined for all real input values and has a positive derivative at each point.[1]

Properties

In general, a sigmoid function is real-valued and differentiable, having either a non-negative or non-positive first derivative[citation needed] which is bell-shaped. There is also a pair of horizontal asymptotes as $t \to \pm\infty$. The differential equation $\frac{d}{dt} S(t) = c_1 S(t) \left( c_2 - S(t) \right)$, with the inclusion of a boundary condition providing a third degree of freedom, $c_3$, provides a class of functions of this type.
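
To make the differential equation dS/dt = c1·S(t)·(c2 − S(t)) concrete, the Python sketch below compares a forward-Euler integration with the closed-form solution S(t) = c2 / (1 + e^(−c1·c2·(t − c3))). Here the third degree of freedom is assumed to be the boundary condition S(c3) = c2/2, which places the curve's midpoint at t = c3; the parameter values are arbitrary choices for illustration.

```python
import math


def closed_form(t: float, c1: float, c2: float, c3: float) -> float:
    """Solution of dS/dt = c1*S*(c2 - S) under the assumed boundary
    condition S(c3) = c2/2: a logistic curve of height c2 centred at c3."""
    return c2 / (1.0 + math.exp(-c1 * c2 * (t - c3)))


def euler(t_end: float, c1: float, c2: float, c3: float,
          steps: int = 100_000) -> float:
    """Forward-Euler integration of the ODE from t = c3 (where S = c2/2)
    to t_end; a negative step size integrates backwards in time."""
    h = (t_end - c3) / steps
    s = c2 / 2.0
    for _ in range(steps):
        s += h * c1 * s * (c2 - s)
    return s


c1, c2, c3 = 0.5, 4.0, 1.0
for t in (-3.0, 0.0, 2.0, 5.0):
    print(t, closed_form(t, c1, c2, c3), euler(t, c1, c2, c3))
```

With c1 = c2 = 1 and c3 = 0 the closed form reduces to the logistic function S(t) = 1/(1 + e^(−t)).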

The logistic function has the further important property that its derivative can be expressed in terms of the function itself:

$$S'(t) = S(t)\left(1 - S(t)\right).$$
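
The identity S'(t) = S(t)(1 − S(t)) is one reason the logistic function is convenient in backpropagation: once S(t) has been computed in the forward pass, the derivative costs only one subtraction and one multiplication. The Python sketch below (helper names are illustrative) compares the identity with a central finite-difference estimate.

```python
import math


def logistic(t: float) -> float:
    """Logistic sigmoid S(t) = 1 / (1 + e^(-t)), overflow-safe."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def logistic_derivative(t: float) -> float:
    """S'(t) = S(t) * (1 - S(t)): reuses S(t), no extra exponential."""
    s = logistic(t)
    return s * (1.0 - s)


def central_difference(f, t: float, h: float = 1e-5) -> float:
    """Numerical derivative (f(t + h) - f(t - h)) / (2h) for comparison."""
    return (f(t + h) - f(t - h)) / (2.0 * h)


for t in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(t, logistic_derivative(t), central_difference(logistic, t))
```

The derivative peaks at t = 0, where S(0) = 1/2 gives S'(0) = 1/4.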

Examples

Many natural processes, such as those of complex system learning curves, exhibit a progression from small beginnings that accelerates and approaches a climax over time. When a more detailed model is lacking, a sigmoid function is often used to describe such a progression.[2]

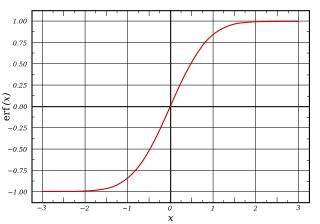

Besides the logistic function, sigmoid functions include the ordinary arctangent, the hyperbolic tangent, the Gudermannian function, and the error function, as well as the generalized logistic function and algebraic functions such as $f(x) = \frac{x}{\sqrt{1 + x^2}}$.
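
The Python sketch below evaluates several of these functions using only the standard `math` module, rescaling the arctangent and the Gudermannian function (computed here as gd(x) = arctan(sinh x)) by 2/π so that every curve runs from −1 to 1. It then checks on a sample grid that each function is strictly increasing and stays strictly between its asymptotes. The grid stops at |x| = 5 because in double precision `math.erf` rounds to exactly 1.0 for inputs of about 6 and above; this is a sampled sanity check, not a proof, and the curves are not normalized to a common slope at the origin.

```python
import math

# Each function is scaled (where needed) so that it runs from -1 to 1.
SIGMOIDS = {
    "arctangent": lambda x: (2.0 / math.pi) * math.atan(x),
    "hyperbolic tangent": math.tanh,
    "Gudermannian": lambda x: (2.0 / math.pi) * math.atan(math.sinh(x)),
    "error function": math.erf,
    "x / sqrt(1 + x^2)": lambda x: x / math.sqrt(1.0 + x * x),
}

grid = [i / 10.0 for i in range(-50, 51)]  # x from -5 to 5 in steps of 0.1

for name, f in SIGMOIDS.items():
    values = [f(x) for x in grid]
    increasing = all(a < b for a, b in zip(values, values[1:]))
    bounded = all(-1.0 < v < 1.0 for v in values)
    print(f"{name:20s} f(-5)={values[0]:+.6f} f(5)={values[-1]:+.6f} "
          f"increasing={increasing} bounded={bounded}")
```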

The integral of any smooth, positive, "bump-shaped" function is sigmoidal, so the cumulative distribution functions of many common probability distributions are sigmoidal. The best-known example is the error function, which is related to the cumulative distribution function (CDF) of the normal distribution.
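
As a check of both statements, the Python sketch below integrates the standard normal density (a smooth, positive bump) with the trapezoid rule and compares the result with the standard identity Φ(x) = ½[1 + erf(x/√2)] for the normal CDF. The lower limit of −10 stands in for −∞ and is an assumption of the sketch; the probability mass below it is negligible at the tolerance used.

```python
import math


def normal_pdf(x: float) -> float:
    """Standard normal density: a smooth, positive, bump-shaped function."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_cdf(x: float) -> float:
    """Standard normal CDF expressed through the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def integrate_bump(x: float, lower: float = -10.0, steps: int = 20_000) -> float:
    """Trapezoid-rule integral of normal_pdf from `lower` up to x."""
    h = (x - lower) / steps
    total = 0.5 * (normal_pdf(lower) + normal_pdf(x))
    total += sum(normal_pdf(lower + i * h) for i in range(1, steps))
    return total * h


for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(x, normal_cdf(x), integrate_bump(x))
```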

Hard sigmoid

Simpler approximations of smooth sigmoid functions, especially piecewise linear or piecewise constant functions, are preferred in applications where speed of computation matters more than precision; the extreme forms are known as hard sigmoids.[3][4] They are found particularly in artificial intelligence, especially computer vision and artificial neural networks. The most extreme examples are the sign function and the Heaviside step function, which jump at 0 from −1 to 1 and from 0 to 1 respectively (which to use depends on normalization).[5] For example, the Theano library provides two approximations: ultra_fast_sigmoid and hard_sigmoid, the latter a 3-part piecewise linear approximation (output 0, a line with slope 0.2, output 1).[6]
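
A minimal Python sketch of these approximations follows. The hard sigmoid is written as clip(0.2·t + 0.5, 0, 1); the slope 0.2 and shift 0.5 are assumptions that, as far as we know, mirror the constants in Theano's hard_sigmoid source, and are not a definition given in this article. The value of the step functions exactly at 0 is likewise a convention chosen here.

```python
import math


def logistic(t: float) -> float:
    """Smooth reference curve S(t) = 1 / (1 + e^(-t)), overflow-safe."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def hard_sigmoid(t: float, slope: float = 0.2, shift: float = 0.5) -> float:
    """3-part piecewise linear approximation: 0, then a line, then 1.

    slope=0.2 and shift=0.5 are assumed (Theano-style) constants; with
    them the linear part covers -2.5 <= t <= 2.5.
    """
    return min(1.0, max(0.0, slope * t + shift))


def heaviside(t: float) -> float:
    """Step from 0 to 1; returning 1.0 at t == 0 is a convention."""
    return 1.0 if t >= 0 else 0.0


def sign(t: float) -> float:
    """Step from -1 to 1, with sign(0) == 0."""
    return float((t > 0) - (t < 0))


grid = [i / 100.0 for i in range(-600, 601)]  # t from -6 to 6
max_error = max(abs(hard_sigmoid(t) - logistic(t)) for t in grid)
print(f"largest |hard_sigmoid - logistic| on the grid: {max_error:.4f}")
```

With these constants the largest deviation from the logistic curve, about 0.076, occurs at the corners t = ±2.5, where the line meets the constant parts; the trade is a cheap multiply, add, and clip in place of an exponential.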

See also

- Cumulative distribution function

- Generalized logistic curve

- Gompertz function

- Heaviside step function

- Hyperbolic function

- Logistic distribution

- Logistic function

- Logistic regression

- Logit

- Modified hyperbolic tangent

- Softplus function

- Smoothstep function (Graphics)

- Softmax function

- Weibull distribution

- Netoid function

References

- ^ Han, Jun; Moraga, Claudio (1995). "The influence of the sigmoid function parameters on the speed of backpropagation learning". In Mira, José; Sandoval, Francisco (eds.). From Natural to Artificial Neural Computation. pp. 195–201.

- ^ Gibbs, M.N. (Nov 2000). "Variational Gaussian process classifiers". IEEE Transactions on Neural Networks. 11 (6): 1458–1464. doi:10.1109/72.883477.

- ^ Quora, "What is hard sigmoid in artificial neural networks? Why is it faster than standard sigmoid? Are there any disadvantages over the standard sigmoid?", Leo Mauro's answer

- ^ How is Hard Sigmoid defined

- ^ Curves and Surfaces in Computer Vision and Graphics, Volume 1610, SPIE, 1992, "hard+sigmoid" p. 301

- ^ nnet – Ops for neural networks

- Mitchell, Tom M. (1997). Machine Learning. WCB–McGraw–Hill. ISBN 0-07-042807-7. In particular see "Chapter 4: Artificial Neural Networks" (especially pp. 96–97), where Mitchell uses the terms "logistic function" and "sigmoid function" synonymously (he also calls this function the "squashing function") and uses the sigmoid (aka logistic) function to compress the outputs of the "neurons" in multi-layer neural nets.

- Humphrys, Mark. "Continuous output, the sigmoid function". Properties of the sigmoid, including how it can shift along axes and how its domain may be transformed.