Audio deepfake

An '''audio deepfake''' is a product of [[artificial intelligence]] used to create convincing speech that sounds like specific people saying things they did not say.<ref>{{Cite web |title=Deepfake Detection: Current Challenges and Next Steps |url=https://ieeexplore.ieee.org/document/9105991/ |access-date=2022-06-29 |website=ieeexplore.ieee.org |language=en-US |doi=10.1109/icmew46912.2020.9105991}}</ref><ref name=":0">{{Cite journal |last=Diakopoulos |first=Nicholas |last2=Johnson |first2=Deborah |date=June 2020 |title=Anticipating and addressing the ethical implications of deepfakes in the context of elections |url=http://journals.sagepub.com/doi/10.1177/1461444820925811 |journal=New Media & Society |language=en |publication-date=2020-06-05 |volume=23 |issue=7 |pages=2072–2098 |doi=10.1177/1461444820925811 |issn=1461-4448}}</ref> This technology was initially developed for various applications intended to improve human life. For example, it can be used to produce audiobooks,<ref name=":10">{{Citation |last=Chadha |first=Anupama |title=Deepfake: An Overview |date=2021 |url=https://link.springer.com/10.1007/978-981-16-0733-2_39 |work=Proceedings of Second International Conference on Computing, Communications, and Cyber-Security |volume=203 |pages=557–566 |editor-last=Singh |editor-first=Pradeep Kumar |place=Singapore |publisher=Springer Singapore |language=en |doi=10.1007/978-981-16-0733-2_39 |isbn=978-981-16-0732-5 |access-date=2022-06-29 |last2=Kumar |first2=Vaibhav |last3=Kashyap |first3=Sonu |last4=Gupta |first4=Mayank |editor2-last=Wierzchoń |editor2-first=Sławomir T. |editor3-last=Tanwar |editor3-first=Sudeep |editor4-last=Ganzha |editor4-first=Maria}}</ref> and to help people who have lost their voices to throat disease or other medical problems get them back.<ref name=":11">{{Cite news |title=AI gave Val Kilmer his voice back. But critics worry the technology could be misused. |language=en-US |work=Washington Post |url=https://www.washingtonpost.com/technology/2021/08/18/val-kilmer-ai-voice-cloning/ |access-date=2022-06-29 |issn=0190-8286}}</ref><ref>{{Cite web |last=Etienne |first=Vanessa |date=2021-08-19 |title=Val Kilmer Gets His Voice Back After Throat Cancer Battle Using AI Technology: Hear the Results |url=https://people.com/movies/val-kilmer-gets-his-voice-back-after-throat-cancer-battle-using-ai-technology-hear-the-results/ |access-date=2022-07-01 |website=PEOPLE.com |language=en}}</ref> Commercially, it has opened the door to several opportunities: it can be used to create a brand mascot or to add variety to broadcast content such as weather and sports news.<ref>{{Cite web |title=Text to Speech Newscaster AI Voices |url=https://play.ht/text-to-speech-voices/newscaster-voices/ |access-date=2022-06-29 |website=play.ht}}</ref> Entertainment companies and apps can bring back past talent or incorporate the voice of a historical figure into their programming.<ref name=":13">{{Cite web |date=2021-06-23 |title=Recreate Famous Voices with AI Audio Tool Uberduck |url=https://influence.digital/recreate-famous-voices-with-ai-audio-tool-uberduck/ |access-date=2022-06-29 |website=Influence Digital |language=en-GB}}</ref> The technology can also enable more personalized digital assistants and natural-sounding speech translation services.

Audio deepfakes, also called audio manipulations, are becoming widely accessible through simple mobile devices and personal [[Personal computer|computers]].<ref name=":2">{{Cite journal |last=Almutairi |first=Zaynab |last2=Elgibreen |first2=Hebah |date=2022-05-04 |title=A Review of Modern Audio Deepfake Detection Methods: Challenges and Future Directions |url=https://www.mdpi.com/1999-4893/15/5/155 |journal=Algorithms |language=en |volume=15 |issue=5 |pages=155 |doi=10.3390/a15050155 |issn=1999-4893}}</ref> However, these tools have also been used to spread misinformation around the world,<ref name=":0" /> and their malicious use has raised [[Computer security|cybersecurity]] concerns among the global public. Audio deepfakes can be used as a [[Logical access control|logical access]] voice [[Spoofing attack|spoofing]] technique,<ref>{{Cite journal |last=Chen |first=Tianxiang |last2=Kumar |first2=Avrosh |last3=Nagarsheth |first3=Parav |last4=Sivaraman |first4=Ganesh |last5=Khoury |first5=Elie |date=2020-11-01 |title=Generalization of Audio Deepfake Detection |url=https://www.isca-speech.org/archive/odyssey_2020/chen20_odyssey.html |journal=The Speaker and Language Recognition Workshop (Odyssey 2020) |language=en |publisher=ISCA |pages=132–137 |doi=10.21437/Odyssey.2020-19}}</ref> and to manipulate public opinion for propaganda, defamation, or terrorism. Because vast amounts of voice recordings are transmitted over the Internet every day, detecting spoofing is challenging.<ref name=":1">{{Cite journal |last=Ballesteros |first=Dora M. |last2=Rodriguez-Ortega |first2=Yohanna |last3=Renza |first3=Diego |last4=Arce |first4=Gonzalo |date=2021-12-01 |title=Deep4SNet: deep learning for fake speech classification |url=https://www.sciencedirect.com/science/article/pii/S0957417421008770 |journal=Expert Systems with Applications |language=en |volume=184 |pages=115465 |doi=10.1016/j.eswa.2021.115465 |issn=0957-4174}}</ref> Audio deepfake attackers have targeted not only individuals and organizations but also politicians and governments.<ref>{{Cite journal |last=Suwajanakorn |first=Supasorn |last2=Seitz |first2=Steven M. |last3=Kemelmacher-Shlizerman |first3=Ira |date=2017-07-20 |title=Synthesizing Obama: learning lip sync from audio |url=https://doi.org/10.1145/3072959.3073640 |journal=ACM Transactions on Graphics |volume=36 |issue=4 |pages=95:1–95:13 |doi=10.1145/3072959.3073640 |issn=0730-0301}}</ref> In early 2020, scammers used artificial intelligence-based software to impersonate the voice of a company director and authorize a money transfer of about $35 million through a phone call.<ref name=":15">{{Cite web |last=Brewster |first=Thomas |title=Fraudsters Cloned Company Director’s Voice In $35 Million Bank Heist, Police Find |url=https://www.forbes.com/sites/thomasbrewster/2021/10/14/huge-bank-fraud-uses-deep-fake-voice-tech-to-steal-millions/ |access-date=2022-06-29 |website=Forbes |language=en}}</ref> It is therefore necessary to authenticate distributed audio recordings to avoid spreading misinformation.

== Categories ==

Audio deepfakes can be divided into three different categories:

=== Replay-based ===

Replay-based deepfakes are malicious attacks that play back a recording of the interlocutor's voice.<ref name=":3">{{Cite journal |last=Khanjani |first=Zahra |last2=Watson |first2=Gabrielle |last3=Janeja |first3=Vandana P. |date=2021-11-28 |title=How Deep Are the Fakes? Focusing on Audio Deepfake: A Survey |url=http://arxiv.org/abs/2111.14203 |journal=arXiv:2111.14203 [cs, eess] |doi=10.48550/arxiv.2111.14203}}</ref>

There are two types of replay attack: ''far-field'' and ''cut-and-paste''. In a far-field attack, a microphone recording of the victim is played back as a test segment through a hands-free phone.<ref>{{Cite journal |last=Pradhan |first=Swadhin |last2=Sun |first2=Wei |last3=Baig |first3=Ghufran |last4=Qiu |first4=Lili |date=2019-09-09 |title=Combating Replay Attacks Against Voice Assistants |url=https://doi.org/10.1145/3351258 |journal=Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies |volume=3 |issue=3 |pages=100:1–100:26 |doi=10.1145/3351258}}</ref> A cut-and-paste attack instead fakes the sentence requested by a text-dependent system by splicing together segments of recorded speech.<ref name=":1" /> Text-dependent speaker verification can be used to defend against replay-based attacks.<ref name=":3" /><ref>{{Cite web |title=Preventing replay attacks on speaker verification systems |url=https://ieeexplore.ieee.org/document/6095943 |access-date=2022-06-29 |website=ieeexplore.ieee.org |language=en-US}}</ref> One current technique for end-to-end replay attack detection uses [[Convolutional neural network|deep convolutional neural networks]].<ref>{{Cite journal |last=Tom |first=Francis |last2=Jain |first2=Mohit |last3=Dey |first3=Prasenjit |date=2018-09-02 |title=End-To-End Audio Replay Attack Detection Using Deep Convolutional Networks with Attention |url=https://www.isca-speech.org/archive/interspeech_2018/tom18_interspeech.html |journal=Interspeech 2018 |language=en |publisher=ISCA |pages=681–685 |doi=10.21437/Interspeech.2018-2279}}</ref>

=== Synthetic-based ===

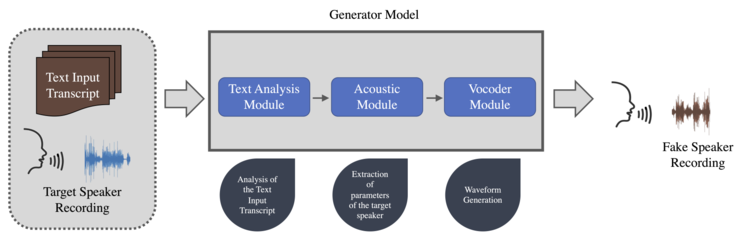

[[File:TTS_diagram.png|alt=A block diagram illustrating the synthetic-based approach for generating audio deepfakes|thumb|The Synthetic-based approach diagram.|740x740px]] |

|||

This category is based on [[speech synthesis]], the artificial production of human speech using software or hardware systems. Speech synthesis includes text-to-speech, which aims to transform text into acceptable and natural speech in real time,<ref>{{Cite journal |last=Tan |first=Xu |last2=Qin |first2=Tao |last3=Soong |first3=Frank |last4=Liu |first4=Tie-Yan |date=2021-07-23 |title=A Survey on Neural Speech Synthesis |url=http://arxiv.org/abs/2106.15561 |journal=arXiv:2106.15561 [cs, eess] |doi=10.48550/arxiv.2106.15561}}</ref> making the speech consistent with the text input by applying the rules of linguistic description of the text.

A classical system of this type consists of three modules: a text analysis model, an acoustic model, and a [[vocoder]]. Generation usually follows two essential steps. First, clean and well-structured raw audio must be collected together with the transcribed text of the original speech. Second, the text-to-speech model must be trained on these data to build a synthetic audio generation model.

Specifically, the input to the generation model is the transcribed text together with the target speaker's voice. The text analysis module processes the input text and converts it into linguistic features. Then, the acoustic module extracts the parameters of the target speaker from the audio data, based on the linguistic features generated by the text analysis module.<ref name=":2" /> Finally, the vocoder learns to generate vocal waveforms from the acoustic feature parameters. The result is a synthetic audio file in waveform format; such systems can produce speech in the voices of many speakers, including speakers not seen during training.

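The three-module flow described above can be illustrated with a deliberately toy Python sketch. It is not a neural text-to-speech system: `text_analysis`, `acoustic_model`, and `vocoder` are hypothetical stand-ins for trained models, using a made-up letter-to-pitch mapping and a sine-wave "vocoder" only to show how linguistic units become acoustic parameters and then a waveform. The `speaker_f0_hz` argument is an assumed proxy for the target speaker's characteristics.

```python
import math
from dataclasses import dataclass
from typing import List

SAMPLE_RATE_HZ = 16_000  # assumed output sampling rate for this toy example


@dataclass
class AcousticFrame:
    """Hypothetical per-frame acoustic parameters (pitch, loudness, length)."""
    f0_hz: float
    amplitude: float
    duration_s: float


def text_analysis(text: str) -> List[str]:
    """Toy text analysis module: keeps letters as stand-in linguistic units.

    A real module would output phonemes, stress, and prosodic features.
    """
    return [ch for ch in text.lower() if ch.isalpha()]


def acoustic_model(units: List[str], speaker_f0_hz: float) -> List[AcousticFrame]:
    """Toy acoustic model: maps each unit to frame parameters.

    `speaker_f0_hz` stands in for the target speaker's characteristics,
    which a trained model would learn from that speaker's recordings.
    """
    frames = []
    for unit in units:
        pitch_offset = (ord(unit) - ord("a")) * 5.0  # made-up pitch contour
        frames.append(AcousticFrame(speaker_f0_hz + pitch_offset, 0.5, 0.05))
    return frames


def vocoder(frames: List[AcousticFrame], sample_rate: int = SAMPLE_RATE_HZ) -> List[float]:
    """Toy vocoder: renders each frame as a phase-continuous sine segment."""
    samples: List[float] = []
    phase = 0.0
    for frame in frames:
        n_samples = round(frame.duration_s * sample_rate)
        step = 2.0 * math.pi * frame.f0_hz / sample_rate
        for _ in range(n_samples):
            samples.append(frame.amplitude * math.sin(phase))
            phase += step
    return samples


def synthesize(text: str, speaker_f0_hz: float = 120.0) -> List[float]:
    """Chains the modules: text -> linguistic units -> acoustic frames -> waveform."""
    units = text_analysis(text)
    frames = acoustic_model(units, speaker_f0_hz)
    return vocoder(frames)


waveform = synthesize("Hello world")
print(len(waveform))  # 10 letters x 800 samples each = 8000
```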
The first breakthrough in this area was [[WaveNet]],<ref name=":6">{{Cite journal |last=Oord |first=Aaron van den |last2=Dieleman |first2=Sander |last3=Zen |first3=Heiga |last4=Simonyan |first4=Karen |last5=Vinyals |first5=Oriol |last6=Graves |first6=Alex |last7=Kalchbrenner |first7=Nal |last8=Senior |first8=Andrew |last9=Kavukcuoglu |first9=Koray |date=2016-09-19 |title=WaveNet: A Generative Model for Raw Audio |url=http://arxiv.org/abs/1609.03499 |journal=arXiv:1609.03499 [cs] |doi=10.48550/arxiv.1609.03499}}</ref> a [[neural network]] for generating raw audio [[Waveform|waveforms]] capable of emulating the characteristics of many different speakers. Over the years, this network has been surpassed by other systems<ref>{{Cite journal |last=Kuchaiev |first=Oleksii |last2=Li |first2=Jason |last3=Nguyen |first3=Huyen |last4=Hrinchuk |first4=Oleksii |last5=Leary |first5=Ryan |last6=Ginsburg |first6=Boris |last7=Kriman |first7=Samuel |last8=Beliaev |first8=Stanislav |last9=Lavrukhin |first9=Vitaly |last10=Cook |first10=Jack |last11=Castonguay |first11=Patrice |date=2019-09-13 |title=NeMo: a toolkit for building AI applications using Neural Modules |url=http://arxiv.org/abs/1909.09577 |journal=arXiv:1909.09577 [cs, eess] |doi=10.48550/arxiv.1909.09577}}</ref><ref>{{Cite journal |last=Wang |first=Yuxuan |last2=Skerry-Ryan |first2=R. J. |last3=Stanton |first3=Daisy |last4=Wu |first4=Yonghui |last5=Weiss |first5=Ron J. |last6=Jaitly |first6=Navdeep |last7=Yang |first7=Zongheng |last8=Xiao |first8=Ying |last9=Chen |first9=Zhifeng |last10=Bengio |first10=Samy |last11=Le |first11=Quoc |date=2017-04-06 |title=Tacotron: Towards End-to-End Speech Synthesis |url=http://arxiv.org/abs/1703.10135 |journal=arXiv:1703.10135 [cs] |doi=10.48550/arxiv.1703.10135}}</ref><ref name=":7">{{Cite journal |last=Prenger |first=Ryan |last2=Valle |first2=Rafael |last3=Catanzaro |first3=Bryan |date=2018-10-30 |title=WaveGlow: A Flow-based Generative Network for Speech Synthesis |url=http://arxiv.org/abs/1811.00002 |journal=arXiv:1811.00002 [cs, eess, stat] |doi=10.48550/arxiv.1811.00002}}</ref><ref>{{Cite journal |last=Vasquez |first=Sean |last2=Lewis |first2=Mike |date=2019-06-04 |title=MelNet: A Generative Model for Audio in the Frequency Domain |url=http://arxiv.org/abs/1906.01083 |journal=arXiv:1906.01083 [cs, eess, stat] |doi=10.48550/arxiv.1906.01083}}</ref><ref name=":8">{{Cite journal |last=Ping |first=Wei |last2=Peng |first2=Kainan |last3=Gibiansky |first3=Andrew |last4=Arik |first4=Sercan O. |last5=Kannan |first5=Ajay |last6=Narang |first6=Sharan |last7=Raiman |first7=Jonathan |last8=Miller |first8=John |date=2018-02-22 |title=Deep Voice 3: Scaling Text-to-Speech with Convolutional Sequence Learning |url=http://arxiv.org/abs/1710.07654 |journal=arXiv:1710.07654 [cs, eess] |doi=10.48550/arxiv.1710.07654}}</ref><ref>{{Cite journal |last=Ren |first=Yi |last2=Ruan |first2=Yangjun |last3=Tan |first3=Xu |last4=Qin |first4=Tao |last5=Zhao |first5=Sheng |last6=Zhao |first6=Zhou |last7=Liu |first7=Tie-Yan |date=2019-11-20 |title=FastSpeech: Fast, Robust and Controllable Text to Speech |url=http://arxiv.org/abs/1905.09263 |journal=arXiv:1905.09263 [cs, eess] |doi=10.48550/arxiv.1905.09263}}</ref> that synthesize highly realistic artificial voices within everyone’s reach.<ref>{{Cite journal |last=Ning |first=Yishuang |last2=He |first2=Sheng |last3=Wu |first3=Zhiyong |last4=Xing |first4=Chunxiao |last5=Zhang |first5=Liang-Jie |date=January 2019 |title=A Review of Deep Learning Based Speech Synthesis |url=https://www.mdpi.com/2076-3417/9/19/4050 |journal=Applied Sciences |language=en |volume=9 |issue=19 |pages=4050 |doi=10.3390/app9194050 |issn=2076-3417}}</ref>

However, the quality of text-to-speech depends heavily on the voice corpus used to build the system, and creating a complete voice corpus is expensive.<ref name=":4">{{Cite journal |last=Kuligowska |first=Karolina |last2=Kisielewicz |first2=Paweł |last3=Włodarz |first3=Aleksandra |date=2018-05-16 |title=Speech synthesis systems: disadvantages and limitations |url=https://www.sciencepubco.com/index.php/ijet/article/view/12933 |journal=International Journal of Engineering & Technology |volume=7 |issue=2.28 |pages=234 |doi=10.14419/ijet.v7i2.28.12933 |issn=2227-524X}}</ref> Another disadvantage is that speech synthesis systems do not recognize periods or special characters. Ambiguity problems also persist, since two words written the same way can have different meanings.<ref name=":4" />

=== Imitation-based ===

[[File:Imitation-based_approach.png|alt=A block diagram illustrating the imitation-based approach for generating audio deepfakes|thumb|The Imitation-based approach diagram.|738x738px]] |

|||

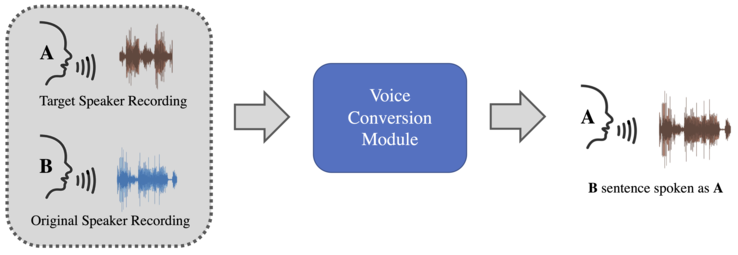

An imitation-based audio deepfake transforms speech from one speaker (the source) so that it sounds as if it were spoken by another speaker (the target).<ref name=":5">{{Cite journal |last=Rodríguez-Ortega |first=Yohanna |last2=Ballesteros |first2=Dora María |last3=Renza |first3=Diego |date=2020 |editor-last=Florez |editor-first=Hector |editor2-last=Misra |editor2-first=Sanjay |title=A Machine Learning Model to Detect Fake Voice |url=https://link.springer.com/chapter/10.1007/978-3-030-61702-8_1 |journal=Applied Informatics |language=en |location=Cham |publisher=Springer International Publishing |pages=3–13 |doi=10.1007/978-3-030-61702-8_1 |isbn=978-3-030-61702-8}}</ref> An imitation-based algorithm takes a speech signal as input and alters its style, intonation, or prosody to mimic the target voice without changing the linguistic information.<ref>{{Cite journal |last=Zhang |first=Mingyang |last2=Wang |first2=Xin |last3=Fang |first3=Fuming |last4=Li |first4=Haizhou |last5=Yamagishi |first5=Junichi |date=2019-04-07 |title=Joint training framework for text-to-speech and voice conversion using multi-source Tacotron and WaveNet |url=http://arxiv.org/abs/1903.12389 |journal=arXiv:1903.12389 [cs, eess, stat] |doi=10.48550/arxiv.1903.12389}}</ref> This technique is also known as voice conversion.

This method is often confused with the synthetic-based method, as there is no clear separation between the two approaches in the generation process. Both methods modify the acoustic-spectral and style characteristics of the speech signal, but the imitation-based method usually keeps the input and output text unaltered, changing only how the sentence is spoken so that it matches the target speaker's characteristics.<ref name=":21">{{Cite journal |last=Arık |first=Sercan Ö. |last2=Chen |first2=Jitong |last3=Peng |first3=Kainan |last4=Ping |first4=Wei |last5=Zhou |first5=Yanqi |title=Neural Voice Cloning with a Few Samples |url=https://papers.nips.cc/paper/2018/hash/4559912e7a94a9c32b09d894f2bc3c82-Abstract.html |journal=Advances in Neural Information Processing Systems (NeurIPS 2018) |publication-date=12 October 2018 |volume=31 |pages=10040–10050 |arxiv=1802.06006}}</ref>

Voices can be imitated in several ways, for example by using humans with similar voices who can mimic the original speaker. In recent years, the most popular approach has been to use particular neural networks called [[Generative adversarial network|Generative Adversarial Networks (GAN)]] because of their flexibility and high-quality results.<ref name=":3" /><ref name=":5" />

The original audio signal is then transformed into speech in the target voice by an imitation generation method, which produces a new, fake speech signal.

== Detection methods ==

The audio deepfake detection task determines whether a given speech audio recording is real or fake.

This has recently become an active topic in the [[Forensic science|forensic]] research community, which is trying to keep up with the rapid evolution of counterfeiting techniques.

In general, deepfake detection methods can be divided into two categories based on the aspect they leverage to perform the detection task. The first focuses on low-level aspects, looking for artifacts that the generators introduce at the sample level. The second focuses on higher-level features that represent more complex aspects, such as the semantic content of the speech recording.

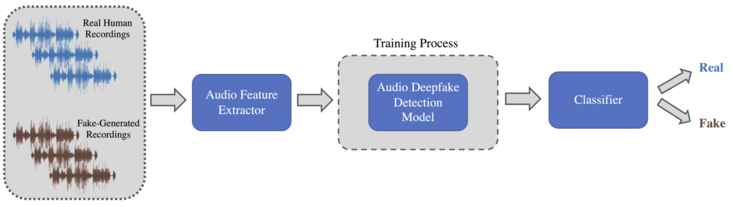

[[File:Audio deepfake detection.png|thumb|A generic audio deepfake detection framework. |alt=A diagram illustrating the usual framework used to perform the audio deepfake detection task.|733x733px]] |

|||

Many [[machine learning]] and [[deep learning]] models have been developed, using different strategies, to detect fake audio. Most of these algorithms follow a three-step procedure:

# Each speech audio recording is preprocessed and transformed into appropriate audio features;
# The computed features are fed into the detection model, which performs the necessary operations, such as training, to discriminate between real and fake speech audio;
# The output is fed into a final module that produces the predicted probability of the ''Fake'' class or the ''Real'' class. Following the ASVspoof<ref name=":14">{{Cite web |title={{!}} ASVspoof |url=https://www.asvspoof.org/ |access-date=2022-07-01 |website=www.asvspoof.org}}</ref> challenge nomenclature, fake audio is labeled ''"spoof"'' and real audio is labeled ''"bonafide."''

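A minimal Python sketch of this three-step procedure follows. The hand-crafted features (log-energy statistics and zero-crossing rate), the logistic-regression-style scorer, and its weights are all illustrative assumptions; the weights are placeholders rather than trained values, so the resulting label only shows how features flow into a ''spoof''/''bonafide'' decision.

```python
import math
from typing import List, Sequence, Tuple


def extract_features(signal: Sequence[float], frame_len: int = 400) -> List[float]:
    """Step 1: turn raw samples into a small, fixed-size feature vector.

    Toy hand-crafted features (an assumption, not a standard front end):
    mean and standard deviation of per-frame log energy, plus zero-crossing rate.
    """
    if len(signal) < frame_len:
        raise ValueError("signal shorter than one frame")
    log_energies = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        energy = sum(x * x for x in frame) / frame_len
        log_energies.append(math.log(energy + 1e-10))  # epsilon avoids log(0)
    mean = sum(log_energies) / len(log_energies)
    std = math.sqrt(sum((e - mean) ** 2 for e in log_energies) / len(log_energies))
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a < 0) != (b < 0))
    zcr = crossings / max(len(signal) - 1, 1)
    return [mean, std, zcr]


def detection_model(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Step 2: a linear (logistic-regression-style) scorer returning a logit."""
    return sum(w * f for w, f in zip(weights, features)) + bias


def output_module(logit: float, threshold: float = 0.5) -> Tuple[float, str]:
    """Step 3: convert the logit into P(spoof) and an ASVspoof-style label."""
    if logit >= 0:
        p_spoof = 1.0 / (1.0 + math.exp(-logit))
    else:  # numerically stable branch for large negative logits
        z = math.exp(logit)
        p_spoof = z / (1.0 + z)
    label = "spoof" if p_spoof >= threshold else "bonafide"
    return p_spoof, label


# Placeholder parameters: a real detector would learn these during training.
WEIGHTS, BIAS = [0.1, -0.8, 2.0], 0.0
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 16_000) for n in range(16_000)]
prob_spoof, label = output_module(detection_model(extract_features(tone), WEIGHTS, BIAS))
```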
Over the years, many researchers have shown that machine learning approaches are more accurate than deep learning methods, regardless of the features used.<ref name=":2" /> However, the scalability of machine learning methods has not been confirmed, because they require extensive training and manual feature extraction, especially when there are many audio files. Deep learning algorithms, on the other hand, require specific transformations of the audio files so that the algorithms can handle them.

There are several open-source implementations of different detection methods,<ref>{{Citation |title=resemble-ai/Resemblyzer |date=2022-06-30 |url=https://github.com/resemble-ai/Resemblyzer |publisher=Resemble AI |access-date=2022-07-01}}</ref><ref>{{Citation |last=mendaxfz |title=Synthetic-Voice-Detection |date=2022-06-28 |url=https://github.com/mendaxfz/Synthetic-Voice-Detection |access-date=2022-07-01}}</ref><ref>{{Citation |last=HUA |first=Guang |title=End-to-End Synthetic Speech Detection |date=2022-06-29 |url=https://github.com/ghuawhu/end-to-end-synthetic-speech-detection |access-date=2022-07-01}}</ref> and many research groups release them on public hosting services such as [[GitHub]].

== Open challenges and future research direction ==

Audio deepfakes are a very recent field of research. For this reason, there are many possibilities for development and improvement, as well as possible threats that adopting this technology can bring to daily life. The most important ones are listed below.

=== Deepfake generation ===

Regarding generation, the most significant aspect is how credible the deepfake is to the victim, i.e., the perceptual quality of the audio deepfake.

Several metrics measure the accuracy of audio deepfake generation; the most widely used is the [[Mean opinion score|MOS (Mean Opinion Score)]], which is the arithmetic average of user ratings. Usually, the rating test involves perceptual evaluation of sentences produced by different speech generation algorithms. This index has shown that audio generated by algorithms trained on a single speaker achieves a higher MOS.<ref name=":21" /><ref name=":6" /><ref>{{Cite journal |last=Kong |first=Jungil |last2=Kim |first2=Jaehyeon |last3=Bae |first3=Jaekyoung |date=2020-10-23 |title=HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis |url=http://arxiv.org/abs/2010.05646 |journal=arXiv:2010.05646 [cs, eess] |doi=10.48550/arxiv.2010.05646}}</ref><ref>{{Cite journal |last=Kumar |first=Kundan |last2=Kumar |first2=Rithesh |last3=de Boissiere |first3=Thibault |last4=Gestin |first4=Lucas |last5=Teoh |first5=Wei Zhen |last6=Sotelo |first6=Jose |last7=de Brebisson |first7=Alexandre |last8=Bengio |first8=Yoshua |last9=Courville |first9=Aaron |date=2019-12-08 |title=MelGAN: Generative Adversarial Networks for Conditional Waveform Synthesis |url=http://arxiv.org/abs/1910.06711 |journal=arXiv:1910.06711 [cs, eess] |doi=10.48550/arxiv.1910.06711}}</ref><ref name=":8" />

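As a worked example of the arithmetic, the sketch below computes a MOS from listener ratings, assuming the common 1 (bad) to 5 (excellent) rating scale. It also adds an approximate 95% confidence interval under a normal approximation, a common reporting convention that is an addition here rather than part of the MOS definition. The ratings are hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_opinion_score(ratings: Sequence[float], low: float = 1, high: float = 5) -> float:
    """MOS: arithmetic mean of listener ratings on a fixed scale (1 to 5 here)."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not low <= r <= high for r in ratings):
        raise ValueError(f"ratings must lie in [{low}, {high}]")
    return sum(ratings) / len(ratings)


def mos_with_ci(ratings: Sequence[float], z: float = 1.96) -> Tuple[float, float]:
    """MOS plus the half-width of an approximate 95% confidence interval.

    Uses a normal approximation with the sample standard deviation, an
    assumption that is only reasonable when there are many ratings.
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings for a confidence interval")
    mos = mean_opinion_score(ratings)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, half_width


# Hypothetical ratings from five listeners for two generation systems.
system_a = [4, 5, 3, 4, 4]
system_b = [3, 3, 2, 4, 3]
print(mos_with_ci(system_a))
print(mean_opinion_score(system_b))  # 3.0
```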
The sampling rate also plays an essential role in detecting and generating audio deepfakes. Currently available datasets have a [[Sampling (signal processing)|sampling rate]] of around 16 kHz, which significantly reduces speech quality. Increasing the sampling rate could lead to higher-quality generation.<ref name=":7" />

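The quality cost of a 16 kHz rate follows from the Nyquist limit: a signal sampled at a rate f<sub>s</sub> can only represent frequencies up to f<sub>s</sub>/2, i.e. 8 kHz here, so higher-frequency speech content is either filtered out before sampling or folded into lower frequencies (aliasing). The short Python check below illustrates this arithmetic; the 10 kHz test tone is an arbitrary example.

```python
import math


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a sampled signal can represent without aliasing."""
    return sample_rate_hz / 2


def alias_frequency_hz(tone_hz: float, sample_rate_hz: float) -> float:
    """Apparent frequency of a pure tone after sampling (spectral folding)."""
    folded = tone_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


FS = 16_000  # Hz, the typical dataset rate mentioned above
print(nyquist_hz(FS))                  # 8000.0
print(alias_frequency_hz(10_000, FS))  # 6000

# Numerical check: samples of a 10 kHz sine taken at 16 kHz equal those of an
# inverted 6 kHz sine, so the two tones are indistinguishable after sampling.
for n in range(32):
    high = math.sin(2 * math.pi * 10_000 * n / FS)
    low = -math.sin(2 * math.pi * 6_000 * n / FS)
    assert math.isclose(high, low, abs_tol=1e-9)
```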
=== Deepfake detection ===

Regarding detection, one principal weakness of recent models is the language they are developed for.

Most studies focus on detecting audio deepfakes in English, paying little attention to other widely spoken languages such as Chinese and Spanish,<ref>{{Cite web |last=Babbel.com |last2=GmbH |first2=Lesson Nine |title=The 10 Most Spoken Languages In The World |url=https://www.babbel.com/en/magazine/the-10-most-spoken-languages-in-the-world |access-date=2022-06-30 |website=Babbel Magazine |language=en}}</ref> as well as Hindi and Arabic.

It is also essential to consider factors related to different accents, i.e., ways of pronunciation associated with a particular individual, location, or nation. In other audio fields, such as [[speaker recognition]], accent has been found to influence performance significantly,<ref>{{Cite journal |last=Najafian |first=Maryam |last2=Russell |first2=Martin |date=September 2020 |title=Automatic accent identification as an analytical tool for accent robust automatic speech recognition |url=https://linkinghub.elsevier.com/retrieve/pii/S0167639317300043 |journal=Speech Communication |language=en |volume=122 |pages=44–55 |doi=10.1016/j.specom.2020.05.003}}</ref> so this feature is expected to affect model performance in the detection task as well.

In addition, excessive preprocessing of audio data has led to a very high and often unsustainable computational cost. For this reason, many researchers have suggested following a [[Self-supervised learning|Self-Supervised Learning]] approach,<ref>{{Cite journal |last=Liu |first=Xiao |last2=Zhang |first2=Fanjin |last3=Hou |first3=Zhenyu |last4=Mian |first4=Li |last5=Wang |first5=Zhaoyu |last6=Zhang |first6=Jing |last7=Tang |first7=Jie |date=2021 |title=Self-supervised Learning: Generative or Contrastive |url=https://ieeexplore.ieee.org/document/9462394/ |journal=IEEE Transactions on Knowledge and Data Engineering |pages=1–1 |doi=10.1109/TKDE.2021.3090866 |issn=1558-2191}}</ref> which uses unlabeled data to work effectively in detection tasks, improving model scalability while decreasing computational cost.

Training and testing models on real-world audio data is still an underdeveloped area. Using audio with real-world background noise can increase the robustness of fake audio detection models.

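One common way to obtain such training data, assumed here rather than taken from the cited studies, is augmentation: mixing background noise into clean recordings at a chosen signal-to-noise ratio (SNR). The sketch scales the noise by √(P<sub>speech</sub> / (P<sub>noise</sub> · 10<sup>SNR/10</sup>)) so that the mix reaches the target SNR; the sine "speech" and Gaussian "noise" are synthetic stand-ins for real recordings, and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def signal_power(x: Sequence[float]) -> float:
    """Mean squared amplitude of a signal."""
    return sum(v * v for v in x) / len(x)


def mix_at_snr(speech: Sequence[float], noise: Sequence[float], snr_db: float) -> List[float]:
    """Adds `noise` to `speech`, scaled so the mixture has the requested SNR in dB."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[: len(speech)]
    p_speech, p_noise = signal_power(speech), signal_power(noise)
    if p_noise == 0:
        raise ValueError("noise must not be silent")
    # Solve 10 * log10(p_speech / (scale**2 * p_noise)) = snr_db for scale.
    scale = math.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(speech, noise)]


def measured_snr_db(speech: Sequence[float], mixed: Sequence[float]) -> float:
    """Recovers the SNR by treating (mixed - speech) as the added noise."""
    added = [m - s for m, s in zip(mixed, speech)]
    return 10 * math.log10(signal_power(speech) / signal_power(added))


rng = random.Random(0)  # fixed seed so the example is reproducible
speech = [math.sin(2 * math.pi * 200 * n / 16_000) for n in range(16_000)]  # stand-in for clean speech
noise = [rng.gauss(0.0, 1.0) for _ in range(16_000)]  # stand-in for recorded background noise
noisy = mix_at_snr(speech, noise, snr_db=10.0)
print(round(measured_snr_db(speech, noisy), 6))  # 10.0
```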
In addition, most effort has focused on detecting synthetic-based audio deepfakes, and few studies analyze imitation-based ones because of their intrinsic difficulty in the generation process.<ref name=":1" />

=== Defense against deepfakes ===

Over the years, an increasing number of techniques have been developed to defend against malicious actions that audio deepfakes could enable, such as identity theft and the manipulation of speeches by national leaders.

To prevent deepfakes, some suggest using blockchain and other [[distributed ledger]] technologies (DLT) to establish the provenance of data and track information.<ref name=":2" /><ref name=":17">{{Cite journal |last=Rashid |first=Md Mamunur |last2=Lee |first2=Suk-Hwan |last3=Kwon |first3=Ki-Ryong |date=2021 |title=Blockchain Technology for Combating Deepfake and Protect Video/Image Integrity |url=http://koreascience.or.kr/article/JAKO202125761199587.page |journal=Journal of Korea Multimedia Society |volume=24 |issue=8 |pages=1044–1058 |doi=10.9717/kmms.2021.24.8.1044 |issn=1229-7771}}</ref><ref name=":18">{{Cite journal |last=Fraga-Lamas |first=Paula |last2=Fernández-Caramés |first2=Tiago M. |date=2019-10-20 |title=Fake News, Disinformation, and Deepfakes: Leveraging Distributed Ledger Technologies and Blockchain to Combat Digital Deception and Counterfeit Reality |url=http://arxiv.org/abs/1904.05386 |journal=arXiv:1904.05386 [cs] |doi=10.48550/arxiv.1904.05386}}</ref><ref name=":19">{{Cite journal |last=Chan |first=Christopher Chun Ki |last2=Kumar |first2=Vimal |last3=Delaney |first3=Steven |last4=Gochoo |first4=Munkhjargal |date=September 2020 |title=Combating Deepfakes: Multi-LSTM and Blockchain as Proof of Authenticity for Digital Media |url=https://ieeexplore.ieee.org/document/9311067 |journal=2020 IEEE / ITU International Conference on Artificial Intelligence for Good (AI4G) |pages=55–62 |doi=10.1109/AI4G50087.2020.9311067}}</ref>

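The core idea behind these provenance proposals can be sketched as an append-only hash chain: each registered recording's SHA-256 digest is stored in an entry that also commits to the previous entry's hash, so altering any past record breaks verification. This is a single-process, in-memory illustration with hypothetical function names, not a distributed ledger (there is no replication or consensus), and a byte-exact hash will not match a recording that has merely been re-encoded.

```python
import hashlib
import json
from typing import Dict, List, Optional

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(index: int, audio_sha256: str, source: str, prev_hash: str) -> str:
    """Hashes an entry's fields in a canonical (sorted-key JSON) form."""
    payload = json.dumps(
        {"index": index, "audio_sha256": audio_sha256, "source": source, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def register_recording(ledger: List[Dict[str, object]], audio_bytes: bytes, source: str) -> Dict[str, object]:
    """Appends an entry linking the recording's digest to the previous entry."""
    prev_hash = str(ledger[-1]["entry_hash"]) if ledger else GENESIS_HASH
    index = len(ledger)
    audio_sha256 = hashlib.sha256(audio_bytes).hexdigest()
    entry: Dict[str, object] = {
        "index": index, "audio_sha256": audio_sha256, "source": source, "prev_hash": prev_hash,
    }
    entry["entry_hash"] = _entry_hash(index, audio_sha256, source, prev_hash)
    ledger.append(entry)
    return entry


def verify_chain(ledger: List[Dict[str, object]]) -> bool:
    """Recomputes every link; any edited field or reordering breaks the chain."""
    prev = GENESIS_HASH
    for i, e in enumerate(ledger):
        if e["index"] != i or e["prev_hash"] != prev:
            return False
        if e["entry_hash"] != _entry_hash(i, str(e["audio_sha256"]), str(e["source"]), prev):
            return False
        prev = str(e["entry_hash"])
    return True


def find_provenance(ledger: List[Dict[str, object]], audio_bytes: bytes) -> Optional[Dict[str, object]]:
    """Returns the entry registering exactly these bytes, or None if unknown."""
    digest = hashlib.sha256(audio_bytes).hexdigest()
    return next((e for e in ledger if e["audio_sha256"] == digest), None)


ledger: List[Dict[str, object]] = []
register_recording(ledger, b"<original interview audio bytes>", "Broadcaster A")
print(verify_chain(ledger))                               # True
print(find_provenance(ledger, b"<edited audio bytes>"))   # None
```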
Extracting and comparing affective cues corresponding to perceived emotions from digital content has also been proposed to combat deepfakes.<ref>{{Citation |last=Mittal |first=Trisha |title=Emotions Don't Lie: An Audio-Visual Deepfake Detection Method using Affective Cues |date=2020-10-12 |url=https://doi.org/10.1145/3394171.3413570 |work=Proceedings of the 28th ACM International Conference on Multimedia |pages=2823–2832 |place=New York, NY, USA |publisher=Association for Computing Machinery |doi=10.1145/3394171.3413570 |isbn=978-1-4503-7988-5 |access-date=2022-06-29 |last2=Bhattacharya |first2=Uttaran |last3=Chandra |first3=Rohan |last4=Bera |first4=Aniket |last5=Manocha |first5=Dinesh}}</ref><ref>{{Cite journal |last=Conti |first=Emanuele |last2=Salvi |first2=Davide |last3=Borrelli |first3=Clara |last4=Hosler |first4=Brian |last5=Bestagini |first5=Paolo |last6=Antonacci |first6=Fabio |last7=Sarti |first7=Augusto |last8=Stamm |first8=Matthew C. |last9=Tubaro |first9=Stefano |date=2022-05-23 |title=Deepfake Speech Detection Through Emotion Recognition: A Semantic Approach |url=https://ieeexplore.ieee.org/document/9747186/ |journal=ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) |location=Singapore, Singapore |publisher=IEEE |pages=8962–8966 |doi=10.1109/ICASSP43922.2022.9747186 |isbn=978-1-6654-0540-9}}</ref><ref>{{Cite journal |last=Hosler |first=Brian |last2=Salvi |first2=Davide |last3=Murray |first3=Anthony |last4=Antonacci |first4=Fabio |last5=Bestagini |first5=Paolo |last6=Tubaro |first6=Stefano |last7=Stamm |first7=Matthew C. |date=June 2021 |title=Do Deepfakes Feel Emotions? A Semantic Approach to Detecting Deepfakes Via Emotional Inconsistencies |url=https://ieeexplore.ieee.org/document/9523100/ |journal=2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) |location=Nashville, TN, USA |publisher=IEEE |pages=1013–1022 |doi=10.1109/CVPRW53098.2021.00112 |isbn=978-1-6654-4899-4}}</ref>

Another critical aspect concerns the mitigation of this problem. It has been suggested that some proprietary detection tools be kept only for those who need them, such as fact-checkers for journalists.<ref name=":3" /> That way, those who create generation models, perhaps for nefarious purposes, would not know precisely which features facilitate the detection of a deepfake,<ref name=":3" /> which would discourage potential attackers.

To improve detection, researchers are trying to make it generalize better,<ref>{{Cite journal |last=Müller |first=Nicolas M. |last2=Czempin |first2=Pavel |last3=Dieckmann |first3=Franziska |last4=Froghyar |first4=Adam |last5=Böttinger |first5=Konstantin |date=2022-04-21 |title=Does Audio Deepfake Detection Generalize? |url=http://arxiv.org/abs/2203.16263 |journal=arXiv:2203.16263 [cs, eess] |doi=10.48550/arxiv.2203.16263}}</ref> looking for preprocessing techniques that improve performance and testing different loss functions for training.<ref>{{Cite journal |last=Chen |first=Tianxiang |last2=Kumar |first2=Avrosh |last3=Nagarsheth |first3=Parav |last4=Sivaraman |first4=Ganesh |last5=Khoury |first5=Elie |date=2020-11-01 |title=Generalization of Audio Deepfake Detection |url=https://www.isca-speech.org/archive/odyssey_2020/chen20_odyssey.html |journal=The Speaker and Language Recognition Workshop (Odyssey 2020) |language=en |publisher=ISCA |pages=132–137 |doi=10.21437/Odyssey.2020-19}}</ref><ref>{{Cite journal |last=Zhang |first=You |last2=Jiang |first2=Fei |last3=Duan |first3=Zhiyao |date=2021 |title=One-Class Learning Towards Synthetic Voice Spoofing Detection |url=https://ieeexplore.ieee.org/document/9417604 |journal=IEEE Signal Processing Letters |volume=28 |pages=937–941 |doi=10.1109/LSP.2021.3076358 |issn=1558-2361}}</ref>

=== Research programs ===

Numerous research groups worldwide are working to detect media manipulations, including not only audio deepfakes but also image and video deepfakes. These projects are usually supported by public or private funding and work closely with universities and research institutions.

For this purpose, the [[Defense Advanced Research Projects Agency (DARPA)]] runs the Semantic Forensics (SemaFor) program.<ref name=":9">{{Cite web |title=SAM.gov |url=https://sam.gov/opp/a8883be78ac1442e8a22924011fc13c4/view |access-date=2022-06-29 |website=sam.gov}}</ref><ref>{{Cite web |title=The SemaFor Program |url=https://www.darpa.mil/program/semantic-forensics |access-date=2022-07-01 |website=www.darpa.mil}}</ref> Leveraging some of the research from DARPA's Media Forensics (MediFor) program,<ref>{{Cite web |title=The DARPA MediFor Program |url=https://govtribe.com/file/government-file/darpabaa1558-darpa-baa-15-58-medifor-dot-pdf |access-date=2022-06-29 |website=govtribe.com}}</ref><ref>{{Cite web |title=The MediFor Program |url=https://www.darpa.mil/program/media-forensics |access-date=2022-07-01 |website=www.darpa.mil}}</ref> SemaFor's semantic detection algorithms are intended to determine whether a media object has been generated or manipulated, in order to automate the analysis of media provenance and uncover the intent behind the falsification of various content.<ref name=":12" /><ref name=":9" />

Another research program is the Preserving Media Trustworthiness in the Artificial Intelligence Era (PREMIER)<ref>{{Cite web |title=PREMIER |url=https://sites.google.com/unitn.it/premier/ |access-date=2022-07-01 |website=sites.google.com |language=en-US}}</ref> program, funded by the Italian [[Ministry of Education, University and Research|Ministry of Education, University and Research (MIUR)]] and run by five Italian universities. PREMIER will pursue novel hybrid approaches to obtain forensic detectors that are more interpretable and secure.<ref name=":20">{{Cite web |title=PREMIER - Project |url=https://sites.google.com/unitn.it/premier/project |access-date=2022-06-29 |website=sites.google.com |language=en-US}}</ref>

=== Public challenges ===

In recent years, numerous challenges have been organized to push audio deepfake research further.

The best-known challenge worldwide is ASVspoof,<ref name=":14" /> the Automatic Speaker Verification Spoofing and Countermeasures Challenge, a biennial community-led initiative that aims to promote the consideration of spoofing and the development of countermeasures.<ref>{{Cite journal |last=Yamagishi |first=Junichi |last2=Wang |first2=Xin |last3=Todisco |first3=Massimiliano |last4=Sahidullah |first4=Md |last5=Patino |first5=Jose |last6=Nautsch |first6=Andreas |last7=Liu |first7=Xuechen |last8=Lee |first8=Kong Aik |last9=Kinnunen |first9=Tomi |last10=Evans |first10=Nicholas |last11=Delgado |first11=Héctor |date=2021-09-01 |title=ASVspoof 2021: accelerating progress in spoofed and deepfake speech detection |url=http://arxiv.org/abs/2109.00537 |journal=arXiv:2109.00537 [cs, eess]}}</ref>

Another recent challenge is ADD (Audio Deepfake Detection),<ref>{{Cite web |date=2021-12-17 |title=Audio Deepfake Detection: ICASSP 2022 |url=https://signalprocessingsociety.org/publications-resources/data-challenges/audio-deepfake-detection-icassp-2022 |access-date=2022-07-01 |website=IEEE Signal Processing Society |language=en}}</ref> which considers fake audio in more realistic scenarios.<ref>{{Cite journal |last=Yi |first=Jiangyan |last2=Fu |first2=Ruibo |last3=Tao |first3=Jianhua |last4=Nie |first4=Shuai |last5=Ma |first5=Haoxin |last6=Wang |first6=Chenglong |last7=Wang |first7=Tao |last8=Tian |first8=Zhengkun |last9=Bai |first9=Ye |last10=Fan |first10=Cunhang |last11=Liang |first11=Shan |date=2022-02-26 |title=ADD 2022: the First Audio Deep Synthesis Detection Challenge |url=http://arxiv.org/abs/2202.08433 |journal=arXiv:2202.08433 [cs, eess]}}</ref>

The Voice Conversion Challenge<ref>{{Cite web |title=Joint Workshop for the Blizzard Challenge and Voice Conversion Challenge 2020 - SynSIG |url=https://www.synsig.org/index.php/Joint_Workshop_for_the_Blizzard_Challenge_and_Voice_Conversion_Challenge_2020 |access-date=2022-07-01 |website=www.synsig.org}}</ref> is also a biennial challenge, created to compare different voice conversion systems and approaches using the same voice data.

== See Also ==

{{div col|colwidth=30em}}
* [[Deepfake]]
* [[Speech synthesis]]
* [[Digital cloning]]
* [[Deep learning]]
* [[Artificial intelligence]]
* [[Speech analysis]]
* [[Speech recognition]]
* [[Digital signal processing]]
{{div col end}}

== References ==

<references />

[[Category:Deepfakes]]
[[Category:Digital signal processing]]
[[Category:Digital forensics]]

Revision as of 09:35, 1 July 2022

Audio deepfake technology is a type of artificial intelligence used to create convincing speech that sounds like specific people saying things they did not say.[1][2] This technology was initially developed for various applications to improve human life. For example, it can be used to produce audiobooks,[3] and to help people who have lost their voices due to throat disease or other medical problems to regain them.[4][5] Commercially, it has opened the door to several opportunities: it can be used to create a brand mascot or to give variety to content such as weather and sports news in the broadcast world.[6] Entertainment companies or apps can bring back past talent or incorporate the voice of a historical figure into their programming.[7] The technology can also create more personalized digital assistants and natural-sounding speech translation services.

Audio deepfakes, also called audio manipulations, are becoming widely accessible through simple mobile devices or personal computers.[8] These tools have also been used to spread misinformation around the world,[2] and their malicious use has raised cybersecurity concerns among the global public. People can use audio deepfakes as a logical access voice spoofing technique,[9] for example to manipulate public opinion for propaganda, defamation, or terrorism. Vast numbers of voice recordings are transmitted over the Internet daily, and spoofing detection is challenging.[10] Audio deepfake attackers have targeted not only individuals and organizations but also politicians and governments.[11] In early 2020, scammers used artificial intelligence-based software to impersonate the voice of a company director in a phone call and authorize a money transfer of about $35 million.[12] It is therefore necessary to authenticate distributed audio recordings to avoid spreading misinformation.

Categories

Audio deepfakes can be divided into three different categories:

Replay-based

Replay-based deepfakes are malicious attacks that aim to reproduce a recording of the interlocutor's voice.[13]

Two scenarios are distinguished: far-field and cut-and-paste. In the far-field scenario, a microphone recording of the victim is played as a test segment on a hands-free phone.[14] Cut-and-paste, on the other hand, involves faking the sentence requested by a text-dependent system.[10] Text-dependent speaker verification can be used to defend against replay-based attacks.[13][15] One current technique for end-to-end detection of replay attacks uses deep convolutional neural networks.[16]

Synthetic-based

This category is based on speech synthesis, the artificial production of human speech by software or hardware systems. Speech synthesis includes Text-To-Speech, which aims to transform text into acceptable, natural-sounding speech in real time,[17] so that the speech matches the text input, using the rules of linguistic description of the text.

A classical system of this type consists of three modules: a text analysis module, an acoustic model, and a vocoder. Generation usually follows two essential steps. First, clean and well-structured raw audio must be collected together with the transcribed text of each original speech sentence. Second, the Text-To-Speech model must be trained on these data to build a synthetic audio generation model.

Specifically, the transcribed text, together with the target speaker's voice, is the input of the generation model. The text analysis module processes the input text and converts it into linguistic features. Then, the acoustic module extracts the parameters of the target speaker from the audio data based on those linguistic features.[8] Finally, the vocoder learns to create vocal waveforms from the acoustic parameters. The result is a final audio file containing the synthetic speech in waveform format; such systems can create speech in the voices of many speakers, even speakers not seen during training.
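The three-module chain can be made concrete with a deliberately toy Python sketch. Everything in it is an illustrative assumption, not a real Text-To-Speech system: the "text analysis" step emits one unit per letter, the "acoustic model" maps each unit to a pitch and loudness derived from a single hypothetical speaker parameter (`speaker_f0`), and the "vocoder" renders those parameters as sine tones. It only shows how data flows from text to linguistic features to acoustic parameters to a waveform.

```python
import numpy as np

SAMPLE_RATE = 16_000  # Hz (assumed)
FRAME_LEN = 1_600     # samples per linguistic unit, i.e. 100 ms at 16 kHz (assumed)


def text_analysis(text: str) -> list[str]:
    """Toy text analysis: normalize the text and emit one 'linguistic unit' per letter.
    A real front end would produce phonemes, stress, and prosodic context."""
    return [ch for ch in text.lower() if ch.isalpha()]


def acoustic_model(units: list[str], speaker_f0: float) -> list[tuple[float, float]]:
    """Toy acoustic model: map each unit to (pitch in Hz, amplitude).
    `speaker_f0` is a hypothetical stand-in for the target speaker's parameters."""
    params = []
    for unit in units:
        is_vowel = unit in "aeiou"
        pitch = speaker_f0 * (1.2 if is_vowel else 1.0)
        amplitude = 0.8 if is_vowel else 0.3
        params.append((pitch, amplitude))
    return params


def vocoder(params: list[tuple[float, float]], sr: int = SAMPLE_RATE) -> np.ndarray:
    """Toy vocoder: render each parameter frame as a sine wave, keeping phase continuous."""
    chunks, phase = [], 0.0
    steps = np.arange(1, FRAME_LEN + 1)
    for pitch, amplitude in params:
        phases = phase + 2 * np.pi * pitch * steps / sr
        chunks.append(amplitude * np.sin(phases))
        phase = phases[-1]
    return np.concatenate(chunks) if chunks else np.zeros(0)


def synthesize(text: str, speaker_f0: float = 120.0) -> np.ndarray:
    """Text -> linguistic features -> acoustic parameters -> waveform."""
    return vocoder(acoustic_model(text_analysis(text), speaker_f0))
```

In a neural system each stub would be a trained network (for example, an acoustic model predicting spectrogram frames followed by a neural vocoder), but the division into three stages is the same.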

The first breakthrough in this regard was WaveNet,[18] a neural network for generating raw audio waveforms that can emulate the characteristics of many different speakers. Over the years, it has been surpassed by other systems[19][20][21][22][23][24] that synthesize highly realistic artificial voices within everyone's reach.[25]
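WaveNet treats raw audio as a sequence of discrete samples: as described in its paper,[18] each sample is passed through μ-law companding and quantized to 256 values, so predicting the next sample becomes a 256-way classification. The NumPy sketch below shows only that encode/decode step, not the network itself; it assumes the input is a float waveform scaled to [-1, 1].

```python
import numpy as np

MU = 255  # 8-bit mu-law: 256 quantization levels


def mu_law_encode(x: np.ndarray, mu: int = MU) -> np.ndarray:
    """Compress amplitudes logarithmically, then quantize to integers 0..mu."""
    x = np.clip(x, -1.0, 1.0)
    y = np.sign(x) * np.log1p(mu * np.abs(x)) / np.log1p(mu)  # still in [-1, 1]
    return np.floor((y + 1) / 2 * mu + 0.5).astype(np.int64)


def mu_law_decode(q: np.ndarray, mu: int = MU) -> np.ndarray:
    """Map integer codes back to [-1, 1] by inverting the companding curve."""
    y = 2 * (q.astype(np.float64) / mu) - 1
    return np.sign(y) * np.expm1(np.abs(y) * np.log1p(mu)) / mu
```

The logarithmic curve spends more of the 256 levels on quiet amplitudes, so the round trip is much more precise near zero than near full scale.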

However, Text-To-Speech depends heavily on the quality of the voice corpus used to build the system, and creating an entire voice corpus is expensive.[26] Another disadvantage is that speech synthesis systems do not recognize periods or special characters. Ambiguity problems also persist, as two words written the same way can have different meanings; for example, "lead" (the metal) and "lead" (to guide) are spelled alike but pronounced differently.[26]

Imitation-based

An imitation-based audio deepfake transforms speech from one speaker (the original) so that it sounds as if spoken by another speaker (the target).[27] An imitation-based algorithm takes a spoken signal as input and alters its style, intonation, or prosody, trying to mimic the target voice without changing the linguistic information.[28] This technique is also known as voice conversion.

This method is often confused with the synthetic-based method, as there is no clear separation between the two approaches in the generation process. Both modify the acoustic-spectral and style characteristics of the speech signal, but the imitation-based method usually keeps the input and output text unaltered, changing only how the sentence is spoken to match the target speaker's characteristics.[29]

Voices can be imitated in several ways, such as by having a person with a similar voice mimic the speaker being impersonated. In recent years, the most popular approach has been to use neural networks called generative adversarial networks (GANs), owing to their flexibility and high-quality results.[13][27]

The original audio signal is then transformed by the imitation generation method into new, fake speech in the target speaker's voice.
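One classical, non-neural way to change intonation without touching the words is to map the source speaker's log-F0 (pitch) statistics onto the target speaker's, a common baseline in voice conversion. This is not the GAN-based approach mentioned above, and a full system would also convert the spectral envelope. The sketch assumes F0 contours have already been extracted by some pitch tracker (not shown), with 0 marking unvoiced frames.

```python
import numpy as np


def log_f0_stats(f0: np.ndarray) -> tuple[float, float]:
    """Mean and standard deviation of log-F0 over voiced frames (F0 > 0)."""
    log_f0 = np.log(f0[f0 > 0])
    return float(log_f0.mean()), float(log_f0.std())


def convert_f0(f0_src, src_stats, tgt_stats) -> np.ndarray:
    """Map the source pitch contour onto the target speaker's pitch range.

    Each voiced frame is standardized with the source log-F0 statistics and
    rescaled with the target ones. Unvoiced frames (F0 == 0) stay 0, and the
    number and order of frames are unchanged.
    """
    mu_s, sigma_s = src_stats
    mu_t, sigma_t = tgt_stats
    f0_src = np.asarray(f0_src, dtype=float)
    out = np.zeros_like(f0_src)
    voiced = f0_src > 0
    z = (np.log(f0_src[voiced]) - mu_s) / sigma_s
    out[voiced] = np.exp(mu_t + sigma_t * z)
    return out
```

Working in the log domain matches how pitch differences are perceived (as ratios), which is why this mapping is usually applied to log-F0 rather than to F0 in hertz.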

Detection methods

Audio deepfake detection is the task of determining whether a given speech recording is real or fake.

Recently, this has become a hot topic in the forensic research community, which is trying to keep up with the rapid evolution of counterfeiting techniques.

In general, deepfake detection methods can be divided into two categories based on the aspect they leverage to perform the detection task. The first focuses on low-level aspects, looking for artifacts introduced by the generators at the sample level. The second focuses on higher-level features representing more complex aspects, such as the semantic content of the speech recording.

Many machine learning and deep learning models have been developed using different strategies to detect fake audio. Most of the time, these algorithms follow a three-step procedure:

- Each speech audio recording must be preprocessed and transformed into appropriate audio features;

- The computed features are fed into the detection model, which performs the operations needed to discriminate between real and fake speech audio, such as training;

- The output is fed into a final module that produces the predicted probability of the Fake or the Real class. Following the ASVspoof[30] challenge nomenclature, fake audio is called "Spoof" and real audio "Bonafide".
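The three steps can be illustrated with a deliberately small NumPy sketch: log band energies as the features (step 1), logistic regression trained by gradient descent as the detection model (step 2), and a final function that turns the score into a spoof probability and an ASVspoof-style label (step 3). The feature set, band count, learning rate, and 0.5 threshold are illustrative assumptions; published detectors typically use richer features such as cepstral coefficients and much larger models.

```python
import numpy as np


def log_band_energies(signal: np.ndarray, n_fft: int = 512, hop: int = 256,
                      n_bands: int = 20) -> np.ndarray:
    """Step 1: frame the signal, take a windowed FFT, average the power in
    n_bands equal-width frequency bands, and return the mean log energy per band."""
    if len(signal) < n_fft:
        signal = np.pad(signal, (0, n_fft - len(signal)))
    n_frames = 1 + (len(signal) - n_fft) // hop
    window = np.hanning(n_fft)
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2           # (n_frames, n_fft // 2 + 1)
    bands = np.array_split(power, n_bands, axis=1)              # list of (n_frames, k)
    energies = np.stack([b.mean(axis=1) for b in bands], axis=1)
    return np.log(energies + 1e-10).mean(axis=0)                # (n_bands,)


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_detector(X: np.ndarray, y: np.ndarray, lr: float = 0.1, epochs: int = 500):
    """Step 2: logistic regression on standardized features; y = 1 means spoof."""
    mean, std = X.mean(axis=0), X.std(axis=0) + 1e-8
    Xn = (X - mean) / std
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        err = _sigmoid(Xn @ w + b) - y       # gradient of the mean log-loss w.r.t. the logits
        w -= lr * Xn.T @ err / len(y)
        b -= lr * err.mean()
    return w, b, mean, std


def classify(signal: np.ndarray, model, threshold: float = 0.5):
    """Step 3: spoof probability plus an ASVspoof-style label."""
    w, b, mean, std = model
    features = (log_band_energies(signal) - mean) / std
    p_spoof = float(_sigmoid(features @ w + b))
    return p_spoof, ("spoof" if p_spoof >= threshold else "bonafide")
```

Keeping the standardization statistics inside the returned model means the same scaling learned during training is applied at prediction time.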

Over the years, many researchers have reported that classical machine learning approaches are more accurate than deep learning methods, regardless of the features used.[8] However, the scalability of classical machine learning methods has not been confirmed, because they require extensive training and manual feature extraction, especially with large numbers of audio files. Deep learning algorithms, by contrast, require specific transformations of the audio files so that the algorithms can handle them.

Several open-source implementations of detection methods exist,[31][32][33] and many research groups release their code on public hosting services such as GitHub.

Open challenges and future research direction

Audio deepfakes are a very recent field of research. For this reason, there is much room for development and improvement, as well as possible threats that adopting this technology can bring to daily life. The most important ones are described below.

Deepfake generation

Regarding generation, the most significant aspect is how credible the impersonation of the victim is, i.e., the perceptual quality of the audio deepfake.

Several metrics measure the quality of audio deepfake generation; the most widely used is the mean opinion score (MOS), the arithmetic average of listener ratings. Usually, listeners perceptually evaluate sentences produced by different speech generation algorithms. MOS evaluations have shown that audio generated by algorithms trained on a single speaker achieves a higher MOS.[29][18][34][35][23]
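Because the MOS is an arithmetic mean, computing it is straightforward. The sketch below assumes ratings on the common 1-to-5 scale (the scale is an assumption; the text above does not fix it) and ranks hypothetical systems by their scores. A real listening test would usually also report a confidence interval.

```python
def mean_opinion_score(ratings: list[int]) -> float:
    """Arithmetic mean of listener ratings, assumed to be on a 1-5 scale."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("ratings must lie between 1 and 5")
    return sum(ratings) / len(ratings)


def rank_systems(ratings_by_system: dict[str, list[int]]) -> list[tuple[str, float]]:
    """Rank generation systems from highest to lowest MOS."""
    scores = {name: mean_opinion_score(r) for name, r in ratings_by_system.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

For example, `rank_systems({"system_a": [3, 3, 4], "system_b": [5, 4, 5]})` places the hypothetical `system_b` first.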

The sampling rate also plays an essential role in both detecting and generating audio deepfakes. Currently available datasets have a sampling rate of around 16 kHz, which significantly limits speech quality. Increasing the sampling rate could lead to higher-quality generation.[21]
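By the Nyquist-Shannon sampling theorem, a signal sampled at rate f_s can only represent frequencies below f_s/2, which makes the 16 kHz limitation concrete:

```latex
f_{\max} = \frac{f_s}{2} = \frac{16\ \text{kHz}}{2} = 8\ \text{kHz}
```

Any spectral content of natural speech above 8 kHz is therefore absent from such datasets, for generators and detectors alike.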

Deepfake detection

On the detection side, one principal weakness of recent models is language coverage.

Most studies focus on detecting audio deepfakes in English, paying little attention to other widely spoken languages such as Chinese and Spanish,[36] as well as Hindi and Arabic.

It is also essential to consider factors related to accent, that is, the way of pronouncing that is strictly associated with a particular individual, location, or nation. In other areas of audio processing, such as speech recognition, accent has been found to influence performance significantly,[37] so it is expected to affect model performance in this detection task as well.

In addition, excessive preprocessing of audio data has led to a very high and often unsustainable computational cost. For this reason, many researchers have suggested a self-supervised learning approach,[38] which works with unlabeled data, can be effective in detection tasks, and improves model scalability while decreasing computational cost.

Training and testing models with real-world audio data is still an underdeveloped area. Using audio with real-world background noise can increase the robustness of fake audio detection models.
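A common way to expose a detector to such conditions during training is data augmentation: mixing recorded background noise into clean training clips at a chosen signal-to-noise ratio (SNR). The function below is a minimal sketch of that mixing step only; which noise recordings and SNR values to use are open choices of any particular setup.

```python
import numpy as np


def add_noise_at_snr(clean: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Mix background noise into a clean recording at a target SNR in decibels.

    The noise is repeated cyclically or truncated to match the clean signal's
    length, then scaled so that 10 * log10(P_clean / P_noise) equals snr_db.
    """
    noise = np.resize(noise, clean.shape)
    p_clean = np.mean(clean ** 2)
    p_noise = np.mean(noise ** 2)
    if p_noise == 0:
        raise ValueError("noise signal is silent")
    scale = np.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10)))
    return clean + scale * noise
```

Drawing a different SNR for each training clip is one way to cover a range of noise conditions rather than a single fixed level.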

In addition, most effort focuses on detecting synthetic-based audio deepfakes; few studies analyze imitation-based ones, owing to the intrinsic difficulty of their generation process.[10]

Defense against deepfakes

Over the years, more and more techniques have been developed to defend against malicious uses of audio deepfakes, such as identity theft and the manipulation of speeches by national leaders.

To prevent deepfakes, some suggest using blockchain and other distributed ledger technologies (DLT) to identify the provenance of data and track information.[8][39][40][41]
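The provenance idea can be illustrated with a hash chain, the data structure underlying blockchain ledgers: each entry stores a fingerprint of a recording plus the hash of the previous entry, so altering any registered entry breaks the chain. This is a single-process toy rather than a distributed ledger (there is no replication or consensus), and `ProvenanceLog` is a hypothetical name. An exact hash only shows that a file is byte-identical to a registered one; it does not by itself say whether unregistered audio is fake.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass(frozen=True)
class Entry:
    audio_sha256: str   # fingerprint of the exact audio bytes
    metadata: str       # e.g. who recorded it and when (free text here)
    prev_hash: str      # hash of the previous entry
    entry_hash: str     # hash over this entry's fields


def _entry_hash(audio_sha256: str, metadata: str, prev_hash: str) -> str:
    payload = json.dumps({"audio": audio_sha256, "meta": metadata, "prev": prev_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ProvenanceLog:
    """Hypothetical append-only log of registered recordings."""

    def __init__(self) -> None:
        self.entries: list[Entry] = []

    def register(self, audio: bytes, metadata: str) -> Entry:
        prev = self.entries[-1].entry_hash if self.entries else GENESIS
        digest = hashlib.sha256(audio).hexdigest()
        entry = Entry(digest, metadata, prev, _entry_hash(digest, metadata, prev))
        self.entries.append(entry)
        return entry

    def chain_is_intact(self) -> bool:
        """Recompute every hash and check that each entry links to its predecessor."""
        prev = GENESIS
        for e in self.entries:
            expected = _entry_hash(e.audio_sha256, e.metadata, e.prev_hash)
            if e.prev_hash != prev or e.entry_hash != expected:
                return False
            prev = e.entry_hash
        return True

    def is_registered(self, audio: bytes) -> bool:
        digest = hashlib.sha256(audio).hexdigest()
        return any(e.audio_sha256 == digest for e in self.entries)
```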

Extracting and comparing affective cues corresponding to perceived emotions from digital content has also been proposed to combat deepfakes.[42][43][44]

Another critical aspect concerns mitigation. It has been suggested that some proprietary detection tools be reserved for those who need them, such as journalistic fact-checkers.[13] That way, those who create generation models, perhaps for nefarious purposes, would not know precisely which features make a deepfake detectable,[13] which discourages possible attackers.

To improve detection, researchers are trying to make it generalize better,[45] looking for preprocessing techniques that improve performance and testing different loss functions for training.[46][47]
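As an example of such a loss, the one-class learning approach[47] trains embeddings of bonafide speech to cluster around a single learned direction while pushing spoofed speech away from it, using a loss that paper calls OC-Softmax. The NumPy sketch below computes a loss of that form for a batch of embeddings; it is our reading of the published formula, and the default hyperparameters (alpha = 20, margins 0.9 and 0.2) are assumptions that should be checked against the paper. Labels follow the convention 0 = bonafide, 1 = spoof.

```python
import numpy as np


def oc_softmax_loss(embeddings: np.ndarray, labels: np.ndarray, w: np.ndarray,
                    alpha: float = 20.0, m_real: float = 0.9, m_fake: float = 0.2) -> float:
    """One-class softmax-style loss: mean of log(1 + exp(alpha * (m_y - cos) * (-1)^y)).

    embeddings: (N, D) feature vectors; labels: (N,) with 0 = bonafide, 1 = spoof;
    w: (D,) learnable target direction for bonafide speech.
    Bonafide samples are penalized when their cosine similarity to w falls below
    m_real; spoofed samples are penalized when it rises above m_fake.
    """
    w_hat = w / np.linalg.norm(w)
    x_hat = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    cos = x_hat @ w_hat                                    # (N,) cosine scores
    margin = np.where(labels == 0, m_real, m_fake)
    sign = np.where(labels == 0, 1.0, -1.0)                # (-1)^y
    # log(1 + exp(z)) computed in a numerically stable way as logaddexp(0, z)
    return float(np.mean(np.logaddexp(0.0, alpha * (margin - cos) * sign)))
```

Using two different margins lets bonafide speech form a compact cluster while spoofed speech only has to stay outside a looser boundary, which is the intuition behind treating detection as a one-class problem.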

Research programs

Numerous research groups worldwide are working to detect media manipulations, including not only audio deepfakes but also image and video deepfakes. These projects are usually supported by public or private funding and work closely with universities and research institutions.

For this purpose, the Defense Advanced Research Projects Agency (DARPA) runs the Semantic Forensics (SemaFor) program.[48][49] Leveraging some of the research from DARPA's Media Forensics (MediFor) program,[50][51] SemaFor's semantic detection algorithms are intended to determine whether a media object has been generated or manipulated, in order to automate the analysis of media provenance and uncover the intent behind the falsification of various content.[52][48]

Another research program is the Preserving Media Trustworthiness in the Artificial Intelligence Era (PREMIER)[53] program, funded by the Italian Ministry of Education, University and Research (MIUR) and run by five Italian universities. PREMIER will pursue novel hybrid approaches to obtain forensic detectors that are more interpretable and secure.[54]

Public challenges

In recent years, numerous challenges have been organized to push audio deepfake research further.

The best-known challenge worldwide is ASVspoof,[30] the Automatic Speaker Verification Spoofing and Countermeasures Challenge, a biennial community-led initiative that aims to promote the consideration of spoofing and the development of countermeasures.[55]

Another recent challenge is ADD (Audio Deepfake Detection),[56] which considers fake audio in more realistic scenarios.[57]

The Voice Conversion Challenge[58] is also a biennial challenge, created to compare different voice conversion systems and approaches using the same voice data.

See Also

References

- ^ "Deepfake Detection: Current Challenges and Next Steps". ieeexplore.ieee.org. doi:10.1109/icmew46912.2020.9105991. Retrieved 2022-06-29.

- ^ a b Diakopoulos, Nicholas; Johnson, Deborah (June 2020). "Anticipating and addressing the ethical implications of deepfakes in the context of elections". New Media & Society. 23 (7) (published 2020-06-05): 2072–2098. doi:10.1177/1461444820925811. ISSN 1461-4448.

- ^ Chadha, Anupama; Kumar, Vaibhav; Kashyap, Sonu; Gupta, Mayank (2021), Singh, Pradeep Kumar; Wierzchoń, Sławomir T.; Tanwar, Sudeep; Ganzha, Maria (eds.), "Deepfake: An Overview", Proceedings of Second International Conference on Computing, Communications, and Cyber-Security, vol. 203, Singapore: Springer Singapore, pp. 557–566, doi:10.1007/978-981-16-0733-2_39, ISBN 978-981-16-0732-5, retrieved 2022-06-29

- ^ "AI gave Val Kilmer his voice back. But critics worry the technology could be misused". Washington Post. ISSN 0190-8286. Retrieved 2022-06-29.

- ^ Etienne, Vanessa (2021-08-19). "Val Kilmer Gets His Voice Back After Throat Cancer Battle Using AI Technology: Hear the Results". PEOPLE.com. Retrieved 2022-07-01.

- ^ "Text to Speech Newscaster AI Voices". play.ht. Retrieved 2022-06-29.

- ^ "Recreate Famous Voices with AI Audio Tool Uberduck". Influence Digital. 2021-06-23. Retrieved 2022-06-29.

- ^ a b c d Almutairi, Zaynab; Elgibreen, Hebah (2022-05-04). "A Review of Modern Audio Deepfake Detection Methods: Challenges and Future Directions". Algorithms. 15 (5): 155. doi:10.3390/a15050155. ISSN 1999-4893.

- ^ Chen, Tianxiang; Kumar, Avrosh; Nagarsheth, Parav; Sivaraman, Ganesh; Khoury, Elie (2020-11-01). "Generalization of Audio Deepfake Detection". The Speaker and Language Recognition Workshop (Odyssey 2020). ISCA: 132–137. doi:10.21437/Odyssey.2020-19.

- ^ a b c Ballesteros, Dora M.; Rodriguez-Ortega, Yohanna; Renza, Diego; Arce, Gonzalo (2021-12-01). "Deep4SNet: deep learning for fake speech classification". Expert Systems with Applications. 184: 115465. doi:10.1016/j.eswa.2021.115465. ISSN 0957-4174.

- ^ Suwajanakorn, Supasorn; Seitz, Steven M.; Kemelmacher-Shlizerman, Ira (2017-07-20). "Synthesizing Obama: learning lip sync from audio". ACM Transactions on Graphics. 36 (4): 95:1–95:13. doi:10.1145/3072959.3073640. ISSN 0730-0301.

- ^ Brewster, Thomas. "Fraudsters Cloned Company Director's Voice In $35 Million Bank Heist, Police Find". Forbes. Retrieved 2022-06-29.

- ^ a b c d e Khanjani, Zahra; Watson, Gabrielle; Janeja, Vandana P. (2021-11-28). "How Deep Are the Fakes? Focusing on Audio Deepfake: A Survey". arXiv:2111.14203 [cs, eess]. doi:10.48550/arxiv.2111.14203.

- ^ Pradhan, Swadhin; Sun, Wei; Baig, Ghufran; Qiu, Lili (2019-09-09). "Combating Replay Attacks Against Voice Assistants". Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. 3 (3): 100:1–100:26. doi:10.1145/3351258.

- ^ "Preventing replay attacks on speaker verification systems". ieeexplore.ieee.org. Retrieved 2022-06-29.

- ^ Tom, Francis; Jain, Mohit; Dey, Prasenjit (2018-09-02). "End-To-End Audio Replay Attack Detection Using Deep Convolutional Networks with Attention". Interspeech 2018. ISCA: 681–685. doi:10.21437/Interspeech.2018-2279.

- ^ Tan, Xu; Qin, Tao; Soong, Frank; Liu, Tie-Yan (2021-07-23). "A Survey on Neural Speech Synthesis". arXiv:2106.15561 [cs, eess]. doi:10.48550/arxiv.2106.15561.

- ^ a b Oord, Aaron van den; Dieleman, Sander; Zen, Heiga; Simonyan, Karen; Vinyals, Oriol; Graves, Alex; Kalchbrenner, Nal; Senior, Andrew; Kavukcuoglu, Koray (2016-09-19). "WaveNet: A Generative Model for Raw Audio". arXiv:1609.03499 [cs]. doi:10.48550/arxiv.1609.03499.

- ^ Kuchaiev, Oleksii; Li, Jason; Nguyen, Huyen; Hrinchuk, Oleksii; Leary, Ryan; Ginsburg, Boris; Kriman, Samuel; Beliaev, Stanislav; Lavrukhin, Vitaly; Cook, Jack; Castonguay, Patrice (2019-09-13). "NeMo: a toolkit for building AI applications using Neural Modules". arXiv:1909.09577 [cs, eess]. doi:10.48550/arxiv.1909.09577.

- ^ Wang, Yuxuan; Skerry-Ryan, R. J.; Stanton, Daisy; Wu, Yonghui; Weiss, Ron J.; Jaitly, Navdeep; Yang, Zongheng; Xiao, Ying; Chen, Zhifeng; Bengio, Samy; Le, Quoc (2017-04-06). "Tacotron: Towards End-to-End Speech Synthesis". arXiv:1703.10135 [cs]. doi:10.48550/arxiv.1703.10135.

- ^ a b Prenger, Ryan; Valle, Rafael; Catanzaro, Bryan (2018-10-30). "WaveGlow: A Flow-based Generative Network for Speech Synthesis". arXiv:1811.00002 [cs, eess, stat]. doi:10.48550/arxiv.1811.00002.

- ^ Vasquez, Sean; Lewis, Mike (2019-06-04). "MelNet: A Generative Model for Audio in the Frequency Domain". arXiv:1906.01083 [cs, eess, stat]. doi:10.48550/arxiv.1906.01083.

- ^ a b Ping, Wei; Peng, Kainan; Gibiansky, Andrew; Arik, Sercan O.; Kannan, Ajay; Narang, Sharan; Raiman, Jonathan; Miller, John (2018-02-22). "Deep Voice 3: Scaling Text-to-Speech with Convolutional Sequence Learning". arXiv:1710.07654 [cs, eess]. doi:10.48550/arxiv.1710.07654.

- ^ Ren, Yi; Ruan, Yangjun; Tan, Xu; Qin, Tao; Zhao, Sheng; Zhao, Zhou; Liu, Tie-Yan (2019-11-20). "FastSpeech: Fast, Robust and Controllable Text to Speech". arXiv:1905.09263 [cs, eess]. doi:10.48550/arxiv.1905.09263.

- ^ Ning, Yishuang; He, Sheng; Wu, Zhiyong; Xing, Chunxiao; Zhang, Liang-Jie (January 2019). "A Review of Deep Learning Based Speech Synthesis". Applied Sciences. 9 (19): 4050. doi:10.3390/app9194050. ISSN 2076-3417.

- ^ a b Kuligowska, Karolina; Kisielewicz, Paweł; Włodarz, Aleksandra (2018-05-16). "Speech synthesis systems: disadvantages and limitations". International Journal of Engineering & Technology. 7 (2.28): 234. doi:10.14419/ijet.v7i2.28.12933. ISSN 2227-524X.

- ^ a b Rodríguez-Ortega, Yohanna; Ballesteros, Dora María; Renza, Diego (2020). Florez, Hector; Misra, Sanjay (eds.). "A Machine Learning Model to Detect Fake Voice". Applied Informatics. Cham: Springer International Publishing: 3–13. doi:10.1007/978-3-030-61702-8_1. ISBN 978-3-030-61702-8.

- ^ Zhang, Mingyang; Wang, Xin; Fang, Fuming; Li, Haizhou; Yamagishi, Junichi (2019-04-07). "Joint training framework for text-to-speech and voice conversion using multi-source Tacotron and WaveNet". arXiv:1903.12389 [cs, eess, stat]. doi:10.48550/arxiv.1903.12389.

- ^ a b Arık, Sercan Ö.; Chen, Jitong; Peng, Kainan; Ping, Wei; Zhou, Yanqi (2018-10-12). "Neural Voice Cloning with a Few Samples". Advances in Neural Information Processing Systems (NeurIPS 2018). 31: 10040–10050. arXiv:1802.06006.

- ^ a b "ASVspoof". www.asvspoof.org. Retrieved 2022-07-01.

- ^ resemble-ai/Resemblyzer, Resemble AI, 2022-06-30, retrieved 2022-07-01

- ^ mendaxfz (2022-06-28), Synthetic-Voice-Detection, retrieved 2022-07-01

- ^ HUA, Guang (2022-06-29), End-to-End Synthetic Speech Detection, retrieved 2022-07-01

- ^ Kong, Jungil; Kim, Jaehyeon; Bae, Jaekyoung (2020-10-23). "HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis". arXiv:2010.05646 [cs, eess]. doi:10.48550/arxiv.2010.05646.

- ^ Kumar, Kundan; Kumar, Rithesh; de Boissiere, Thibault; Gestin, Lucas; Teoh, Wei Zhen; Sotelo, Jose; de Brebisson, Alexandre; Bengio, Yoshua; Courville, Aaron (2019-12-08). "MelGAN: Generative Adversarial Networks for Conditional Waveform Synthesis". arXiv:1910.06711 [cs, eess]. doi:10.48550/arxiv.1910.06711.

- ^ "The 10 Most Spoken Languages In The World". Babbel Magazine. Babbel.com; Lesson Nine GmbH. Retrieved 2022-06-30.

- ^ Najafian, Maryam; Russell, Martin (September 2020). "Automatic accent identification as an analytical tool for accent robust automatic speech recognition". Speech Communication. 122: 44–55. doi:10.1016/j.specom.2020.05.003.

- ^ Liu, Xiao; Zhang, Fanjin; Hou, Zhenyu; Mian, Li; Wang, Zhaoyu; Zhang, Jing; Tang, Jie (2021). "Self-supervised Learning: Generative or Contrastive". IEEE Transactions on Knowledge and Data Engineering: 1–1. doi:10.1109/TKDE.2021.3090866. ISSN 1558-2191.

- ^ Rashid, Md Mamunur; Lee, Suk-Hwan; Kwon, Ki-Ryong (2021). "Blockchain Technology for Combating Deepfake and Protect Video/Image Integrity". Journal of Korea Multimedia Society. 24 (8): 1044–1058. doi:10.9717/kmms.2021.24.8.1044. ISSN 1229-7771.

- ^ Fraga-Lamas, Paula; Fernández-Caramés, Tiago M. (2019-10-20). "Fake News, Disinformation, and Deepfakes: Leveraging Distributed Ledger Technologies and Blockchain to Combat Digital Deception and Counterfeit Reality". arXiv:1904.05386 [cs]. doi:10.48550/arxiv.1904.05386.

- ^ Ki Chan, Christopher Chun; Kumar, Vimal; Delaney, Steven; Gochoo, Munkhjargal (September 2020). "Combating Deepfakes: Multi-LSTM and Blockchain as Proof of Authenticity for Digital Media". 2020 IEEE / ITU International Conference on Artificial Intelligence for Good (AI4G): 55–62. doi:10.1109/AI4G50087.2020.9311067.

- ^ Mittal, Trisha; Bhattacharya, Uttaran; Chandra, Rohan; Bera, Aniket; Manocha, Dinesh (2020-10-12), "Emotions Don't Lie: An Audio-Visual Deepfake Detection Method using Affective Cues", Proceedings of the 28th ACM International Conference on Multimedia, New York, NY, USA: Association for Computing Machinery, pp. 2823–2832, doi:10.1145/3394171.3413570, ISBN 978-1-4503-7988-5, retrieved 2022-06-29

- ^ Conti, Emanuele; Salvi, Davide; Borrelli, Clara; Hosler, Brian; Bestagini, Paolo; Antonacci, Fabio; Sarti, Augusto; Stamm, Matthew C.; Tubaro, Stefano (2022-05-23). "Deepfake Speech Detection Through Emotion Recognition: A Semantic Approach". ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Singapore, Singapore: IEEE: 8962–8966. doi:10.1109/ICASSP43922.2022.9747186. ISBN 978-1-6654-0540-9.

- ^ Hosler, Brian; Salvi, Davide; Murray, Anthony; Antonacci, Fabio; Bestagini, Paolo; Tubaro, Stefano; Stamm, Matthew C. (June 2021). "Do Deepfakes Feel Emotions? A Semantic Approach to Detecting Deepfakes Via Emotional Inconsistencies". 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Nashville, TN, USA: IEEE: 1013–1022. doi:10.1109/CVPRW53098.2021.00112. ISBN 978-1-6654-4899-4.

- ^ Müller, Nicolas M.; Czempin, Pavel; Dieckmann, Franziska; Froghyar, Adam; Böttinger, Konstantin (2022-04-21). "Does Audio Deepfake Detection Generalize?". arXiv:2203.16263 [cs, eess]. doi:10.48550/arxiv.2203.16263.

- ^ Chen, Tianxiang; Kumar, Avrosh; Nagarsheth, Parav; Sivaraman, Ganesh; Khoury, Elie (2020-11-01). "Generalization of Audio Deepfake Detection". The Speaker and Language Recognition Workshop (Odyssey 2020). ISCA: 132–137. doi:10.21437/Odyssey.2020-19.

- ^ Zhang, You; Jiang, Fei; Duan, Zhiyao (2021). "One-Class Learning Towards Synthetic Voice Spoofing Detection". IEEE Signal Processing Letters. 28: 937–941. doi:10.1109/LSP.2021.3076358. ISSN 1558-2361.

- ^ a b "SAM.gov". sam.gov. Retrieved 2022-06-29.

- ^ "The SemaFor Program". www.darpa.mil. Retrieved 2022-07-01.

- ^ "The DARPA MediFor Program". govtribe.com. Retrieved 2022-06-29.

- ^ "The MediFor Program". www.darpa.mil. Retrieved 2022-07-01.

- ^ Cite error: The named reference ":12" was invoked but never defined.

- ^ "PREMIER". sites.google.com. Retrieved 2022-07-01.

- ^ "PREMIER - Project". sites.google.com. Retrieved 2022-06-29.

- ^ Yamagishi, Junichi; Wang, Xin; Todisco, Massimiliano; Sahidullah, Md; Patino, Jose; Nautsch, Andreas; Liu, Xuechen; Lee, Kong Aik; Kinnunen, Tomi; Evans, Nicholas; Delgado, Héctor (2021-09-01). "ASVspoof 2021: accelerating progress in spoofed and deepfake speech detection". arXiv:2109.00537 [cs, eess].

- ^ "Audio Deepfake Detection: ICASSP 2022". IEEE Signal Processing Society. 2021-12-17. Retrieved 2022-07-01.

- ^ Yi, Jiangyan; Fu, Ruibo; Tao, Jianhua; Nie, Shuai; Ma, Haoxin; Wang, Chenglong; Wang, Tao; Tian, Zhengkun; Bai, Ye; Fan, Cunhang; Liang, Shan (2022-02-26). "ADD 2022: the First Audio Deep Synthesis Detection Challenge". arXiv:2202.08433 [cs, eess].

- ^ "Joint Workshop for the Blizzard Challenge and Voice Conversion Challenge 2020 - SynSIG". www.synsig.org. Retrieved 2022-07-01.