Bootstrap aggregating

Revision as of 17:26, 22 June 2008

Bootstrap aggregating (bagging) is a meta-algorithm that improves the stability and accuracy of machine learning models for classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree models, it can be used with any type of model. Bagging is a special case of the model averaging approach.

Given a standard training set D of size n, bagging generates m new training sets of size n' ≤ n by sampling examples from D uniformly and with replacement. Because sampling is done with replacement, some examples are likely to be repeated in each new training set. If n' = n, then for large n each new set is expected to contain about 1 − 1/e ≈ 63.2% of the unique examples of D, the rest being duplicates. (Each example is missed by a single draw with probability 1 − 1/n, so it is absent from all n draws with probability (1 − 1/n)^n, which approaches 1/e ≈ 36.8%.) This kind of sample is known as a bootstrap sample. The m models are fitted using these m bootstrap samples and combined by averaging their outputs (for regression) or by voting (for classification).
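The procedure above can be sketched in Python as follows. This is a minimal, illustrative sketch assuming n' = n; the function names (`bootstrap_sample`, `bagging_fit`, `bagged_regression`, `bagged_classification`) and the toy learner `fit_mean` are hypothetical and not taken from any library. A "learner" is modelled here as a function that takes a training set and returns a predictor.

```python
import random
from collections import Counter
from statistics import mean


def bootstrap_sample(data, rng):
    """Draw len(data) examples from data uniformly and with replacement (n' = n)."""
    return [rng.choice(data) for _ in range(len(data))]


def bagging_fit(data, fit, m, rng):
    """Fit one model per bootstrap sample; `fit` maps a training set to a predictor."""
    return [fit(bootstrap_sample(data, rng)) for _ in range(m)]


def bagged_regression(models, x):
    """Combine regression models by averaging their predictions at x."""
    return mean(model(x) for model in models)


def bagged_classification(models, x):
    """Combine classifiers by plurality vote; ties go to the label seen first."""
    return Counter(model(x) for model in models).most_common(1)[0][0]


def fit_mean(sample):
    """Hypothetical toy regressor: always predicts the mean response of its sample."""
    mu = mean(y for _, y in sample)
    return lambda x: mu


if __name__ == "__main__":
    rng = random.Random(0)
    # Share of distinct examples of D that appear in one bootstrap sample.
    data = list(range(10_000))
    print(len(set(bootstrap_sample(data, rng))) / len(data))  # close to 1 - 1/e
    # Bag the toy regressor on (x, y) pairs and average the m = 25 models.
    pairs = [(x, 2.0 * x) for x in range(10)]
    models = bagging_fit(pairs, fit_mean, 25, rng)
    print(bagged_regression(models, 3))
```

Any learner with the same "training set in, predictor out" shape can be passed as `fit`; only the combination step differs between regression and classification.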

Since the method averages several predictors, it is not useful for improving linear models: an average of linear models is itself a linear model.
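One way to see this: suppose each of the m bootstrap fits is a linear model with intercept a_j and coefficient vector b_j (notation introduced only for this illustration). Averaging their predictions at a point x gives another linear model whose coefficients are simply the averaged coefficients, so bagging cannot make a linear fit any more flexible.

$$
\frac{1}{m}\sum_{j=1}^{m}\left(a_j + \mathbf{b}_j^{\top}\mathbf{x}\right) = \bar{a} + \bar{\mathbf{b}}^{\top}\mathbf{x}, \qquad \bar{a} = \frac{1}{m}\sum_{j=1}^{m} a_j, \quad \bar{\mathbf{b}} = \frac{1}{m}\sum_{j=1}^{m} \mathbf{b}_j
$$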

Example: Ozone data

This example is rather artificial, but illustrates the basic principles of bagging.

Rousseeuw and Leroy (1986) describe a data set concerning ozone levels. The data are available via the classic data sets page. All computations were performed in R.

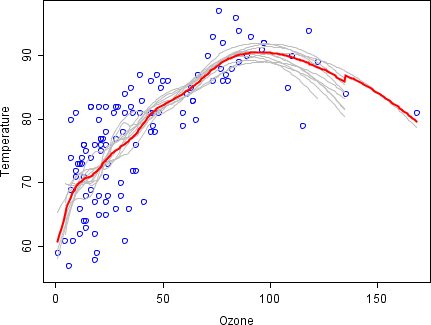

A scatter plot reveals an apparently non-linear relationship between temperature and ozone. One way to model this relationship is with a LOESS smoother, which requires choosing a span parameter. In this example, a span of 0.5 was used.
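To illustrate what the span controls, here is a simplified LOESS-style smoother in Python. It is a sketch under stated assumptions, not R's `loess` (which, among other differences, defaults to local quadratic fits): for each query point it fits a weighted straight line using the ⌈span · n⌉ nearest points, with tricube weights that fall to zero at the edge of that neighbourhood. The names `tricube`, `weighted_line_at` and `local_linear_smoother` are hypothetical. A smaller span uses fewer neighbours and therefore gives a wigglier curve.

```python
import math


def tricube(u):
    """Tricube weight: (1 - |u|^3)^3 for |u| < 1, otherwise 0."""
    u = abs(u)
    return (1 - u ** 3) ** 3 if u < 1 else 0.0


def weighted_line_at(xs, ys, w, x0):
    """Evaluate at x0 the weighted least-squares straight line through (xs, ys)."""
    total = sum(w)
    xbar = sum(wi * x for wi, x in zip(w, xs)) / total
    ybar = sum(wi * y for wi, y in zip(w, ys)) / total
    sxx = sum(wi * (x - xbar) ** 2 for wi, x in zip(w, xs))
    sxy = sum(wi * (x - xbar) * (y - ybar) for wi, x, y in zip(w, xs, ys))
    slope = sxy / sxx if sxx > 0 else 0.0  # all weighted x equal: flat local fit
    return ybar + slope * (x0 - xbar)


def local_linear_smoother(xs, ys, span):
    """Return a predictor that fits a tricube-weighted line around each query point."""
    n = len(xs)
    q = min(n, max(2, math.ceil(span * n)))  # neighbourhood size set by the span

    def predict(x0):
        dists = [abs(x - x0) for x in xs]
        h = sorted(dists)[q - 1]  # distance to the q-th nearest point
        w = [tricube(d / h) for d in dists] if h > 0 else [0.0] * n
        if sum(w) == 0.0:
            # Degenerate neighbourhood (e.g. tied x values): weight the q nearest equally.
            w = [1.0 if d <= h else 0.0 for d in dists]
        return weighted_line_at(xs, ys, w, x0)

    return predict
```

For example, `local_linear_smoother(temps, ozone, 0.5)` would return a function that can be evaluated across the range of the data; `temps` and `ozone` are placeholder names for the two data columns.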

One hundred bootstrap samples of the data were taken, and a LOESS smoother was fitted to each sample. Predictions from these 100 smoothers were then made across the range of the data. The first 10 predicted smooth fits appear as grey lines in the figure below. The lines are clearly very wiggly and overfit the data, a result of the span being too low.

The red line on the plot below represents the mean of the 100 smoothers. The mean is clearly more stable and overfits less. This is the bagged predictor.
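The bagging step itself can be sketched as follows. This is an illustrative sketch, not the computation behind the figure: the ozone data are replaced by synthetic noisy sine-wave data, and, to keep the block short, a hypothetical nearest-neighbour running-mean smoother (`running_mean_smoother`, which averages the ⌈span · n⌉ nearest responses) stands in for LOESS. The helper `bagged_smoother` fits one smoother per bootstrap sample, evaluates every fit on a common grid, and takes the pointwise mean, which plays the role of the bagged predictor. The span of 0.1 and the `total_variation` wiggliness score are likewise assumptions chosen for this illustration.

```python
import math
import random
from statistics import mean


def running_mean_smoother(xs, ys, span):
    """Stand-in for LOESS: predict the mean y of the ceil(span * n) points nearest x0."""
    k = max(1, min(len(xs), math.ceil(span * len(xs))))
    pairs = list(zip(xs, ys))

    def predict(x0):
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x0))[:k]
        return mean(y for _, y in nearest)

    return predict


def bagged_smoother(xs, ys, span, n_boot, grid, rng):
    """Fit the smoother to n_boot bootstrap samples; return (all curves, bagged curve)."""
    n = len(xs)
    curves = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # n indices drawn with replacement
        fit = running_mean_smoother([xs[i] for i in idx], [ys[i] for i in idx], span)
        curves.append([fit(x0) for x0 in grid])
    bagged = [mean(column) for column in zip(*curves)]  # pointwise mean over all fits
    return curves, bagged


def total_variation(curve):
    """Sum of absolute jumps between neighbouring grid values: a crude wiggliness score."""
    return sum(abs(b - a) for a, b in zip(curve, curve[1:]))


if __name__ == "__main__":
    rng = random.Random(42)
    xs = [rng.uniform(0, 10) for _ in range(100)]       # synthetic predictor
    ys = [math.sin(x) + rng.gauss(0, 0.3) for x in xs]  # synthetic noisy response
    grid = [i / 5 for i in range(51)]                   # 0.0, 0.2, ..., 10.0
    curves, bagged = bagged_smoother(xs, ys, 0.1, 100, grid, rng)
    print("mean wiggliness of single fits:", mean(total_variation(c) for c in curves))
    print("wiggliness of bagged fit:", total_variation(bagged))
```

Because total variation obeys the triangle inequality, the averaged curve can never be wigglier by this measure than the individual bootstrap fits are on average.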

History

Bagging (bootstrap aggregating) was proposed by Leo Breiman in 1994 to improve classification by combining the classifications of randomly generated training sets. See Breiman, 1994, Technical Report No. 421.

References

- Leo Breiman (1996). "Bagging predictors". Machine Learning. 24 (2): 123–140. doi:10.1007/BF00058655.
- S. Kotsiantis, P. Pintelas (2004). "Combining Bagging and Boosting" (PDF). International Journal of Computational Intelligence. 1 (4): 324–333.