Optical music recognition: Difference between revisions

Citation bot (minor edit): Alter: url, volume. Add: doi, jstor, pages, issue, volume, journal. Removed URL that duplicated unique identifier. Removed accessdate with no specified URL. Removed parameters. | You can use this bot yourself. Report bugs here. | User-activated.

Completely rewrote the entire article with the latest scientific state of the art.

Optical Music Recognition

{{refimprove|date=November 2015}}

Optical Music Recognition (OMR) is a field of research that investigates how to computationally read music notation in documents.<ref>{{cite thesis|type=PhD|last=Pacha|first=Alexander|date=2019|title=Self-Learning Optical Music Recognition|publisher=TU Wien, Austria|degree=PhD|url=https://alexanderpacha.files.wordpress.com/2019/07/dissertation-self-learning-optical-music-recognition-alexander-pacha.pdf|doi=10.13140/RG.2.2.18467.40484}}</ref> The goal of OMR is to teach the computer to read and interpret [[sheet music]] and produce a machine-readable version of the written music score.

In the past it has, misleadingly, also been called Music [[OCR]].

'''Optical music recognition''' ('''OMR''') or '''Music OCR''' is the application of [[optical character recognition]] to interpret [[sheet music]] or printed scores and convert them into an editable or playable form. Once captured digitally, the music can be saved in commonly used file formats, e.g. [[MIDI]] (for playback) and [[MusicXML]] (for page layout).

= History =

[[File:FirstPublishedDigitalScanOfMusic-Prerau1971.png|thumb|First published digital scan of music scores]]
Research into the automatic recognition of printed sheet music started in the late 1960s at [[Massachusetts Institute of Technology|MIT]], when the first [[Image scanner|image scanners]] became affordable for research institutes.<ref>{{YouTube|id=Mr7simdf0eA|title=Fujinaga, Ichiro (2018). The History of OMR}}</ref><ref>{{cite thesis|type=PhD|last=Pruslin|first=Dennis|date=1966|title=Automatic Recognition of Sheet Music|publisher=Massachusetts Institute of Technology, Cambridge, Massachusetts, USA|degree=PhD}}</ref><ref name=prerau1971>{{cite conference |last=Prerau |first=David S. |date=1971 |title=Computer pattern recognition of printed music |conference=Fall Joint Computer Conference|pages=153-162}}</ref> Due to the memory restrictions of the computers used, the first attempts were limited to only a few measures of music (see the first published scan of music). In 1984, a Japanese research group from [[Waseda University]] developed a specialized robot, called WABOT (WAseda roBOT), which was capable of reading the music sheet in front of it and accompanying a singer on an [[electric organ]].<ref>{{cite web | url=http://www.humanoid.waseda.ac.jp/booklet/kato_2.html | title=WABOT -WAseda roBOT | publisher=Waseda University Humanoid | accessdate=July 14, 2019}}</ref><ref>{{cite web | url=https://robots.ieee.org/robots/wabot/ | title=Wabot’s entry in the IEEE collection of Robots | publisher=IEEE | accessdate=July 14, 2019}}</ref>

Substantial research in the early days of OMR was also conducted by Ichiro Fujinaga, Nicholas Carter, Kia Ng, David Bainbridge, and Tim Bell, who developed many of the techniques that are still used in some systems today.

== History ==

With the widespread availability of cheap flatbed scanners, OMR became more popular among researchers, and a number of projects were started, including the development of the first commercial application, MIDISCAN (now [[SmartScore]]), which was released in 1991 by [[Musitek Corporation]].

Early research into recognition of printed sheet music was performed at the graduate level in the late 1960s at MIT and other institutions.<ref>
{{cite paper
| author = Pruslin, Dennis Howard
| title = Automatic Recognition of Sheet Music
| journal = Perspectives of New Music
| volume = 11
| issue = 1
| pages = 250–254
| date = 1966
| jstor = 832471
}}</ref>
Successive efforts were made to localize and remove musical staff lines, leaving the remaining symbols to be recognized and parsed. The first commercial music-scanning product, MIDISCAN (now [[SmartScore]]), was released in 1991 by [[SmartScore|Musitek]] Corporation.

The availability of [[smartphones]] with good cameras and sufficient computational power paved the way for mobile solutions, where the user takes a picture with the smartphone and the device directly processes the image.

Unlike OCR of text, where words are parsed sequentially, music notation involves parallel elements, as when several voices are present along with unattached performance symbols positioned nearby. Therefore, the spatial relationship between notes, expression marks, dynamics, articulations and other annotations is an important part of the expression of the music.{{citation needed|date=November 2015}}

= Relation to Other Fields =

Optical Music Recognition relates to other fields of research, including [[Computer Vision]], Document Analysis, and [[Music Information Retrieval]]. It is relevant for practicing musicians and composers, who could use OMR systems as a means to enter music into the computer and thus ease the process of [[musical composition|composing]], [[transcription (music)|transcribing]], and editing music. In a library, an OMR system could make music scores searchable,<ref>{{cite conference |last1=Laplante |first1=Audrey |last2=Fujinaga |first2=Ichiro |date=2016 |title=Digitizing Musical Scores: Challenges and Opportunities for Libraries |conference=3rd International Workshop on Digital Libraries for Musicology|pages=45-48}}</ref> and for musicologists it would make it possible to conduct quantitative musicological studies at scale.<ref>{{cite conference |last1=Hajič |first1=Jan jr. |last2=Kolárová |first2=Marta |first3=Alexander |last3=Pacha |first4=Jorge |last4=Calvo-Zaragoza |date=2018 |title=How Current Optical Music Recognition Systems Are Becoming Useful for Digital Libraries |conference=5th International Conference on Digital Libraries for Musicology|pages=57-61|location=Paris, France}}</ref>

[[File:RelationToOtherFields.svg|thumb|Relation of Optical Music Recognition to other fields of research]]

== Proprietary software ==

== OMR vs. OCR ==

{{primarysources|section|date=March 2016}}

Optical Music Recognition has frequently been compared to Optical Character Recognition. The biggest difference is that music notation is a featural writing system. This means that while the alphabet consists of well-defined primitives (e.g., stems, noteheads, or flags), it is their configuration (how they are placed and arranged on the staff) that determines the semantics and how they should be interpreted.
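
As a small illustration of this featural principle, the same filled notehead denotes a quarter, eighth, or sixteenth note depending only on the stem, flags, or beams attached to it. The following Python sketch assumes hypothetical primitive labels (<code>"empty"</code> and <code>"full"</code> noteheads, plus counts of flags and beams) and ignores dots, tuplets, grace notes, and every other modifier; it is not taken from any particular OMR system.

```python
from fractions import Fraction


def note_duration(head: str, has_stem: bool, flags: int = 0, beams: int = 0) -> Fraction:
    """Infer a note's duration, as a fraction of a whole note, from its primitives.

    `head` is a hypothetical label: "empty" (hollow) or "full" (filled notehead).
    Dots, tuplets and all other modifiers are deliberately ignored.
    """
    if head == "empty":
        # Hollow notehead: whole note without a stem, half note with one.
        return Fraction(1, 2) if has_stem else Fraction(1)
    if head != "full":
        raise ValueError(f"unknown notehead label: {head!r}")
    if not has_stem:
        raise ValueError("a filled notehead is expected to carry a stem")
    # A filled notehead with a stem is a quarter note; each flag or beam halves it.
    return Fraction(1, 4) / 2 ** (flags + beams)


# Identical noteheads, different meanings depending on the attached primitives.
print(note_duration("full", has_stem=True))           # 1/4
print(note_duration("full", has_stem=True, flags=1))  # 1/8
print(note_duration("full", has_stem=True, beams=2))  # 1/16
```

Because the duration is only known once all attached primitives have been found, a detector that misses a single flag changes the musical meaning even if every notehead was recognized correctly.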

* [[Capella (notation program)#Companion products|capella-scan]]<ref>[http://www.capella-software.com/capscan.htm Info capella-scan]</ref>
* ForteScan Light by Fortenotation<ref>[http://www.fortenotation.com/en/products/sheet-music-scanning/forte-scan-light/ FORTE Scan Light] {{webarchive|url=https://web.archive.org/web/20130922202552/http://www.fortenotation.com/en/products/sheet-music-scanning/forte-scan-light/ |date=2013-09-22 }}</ref>
* MIDI-Connections Scan by MIDI-Connections<ref>[http://www.midi-connections.com/Product_Scan.htm MIDI-Connections SCAN 2.0] {{webarchive|url=https://web.archive.org/web/20131220015015/http://www.midi-connections.com/Product_Scan.htm |date=2013-12-20 }}</ref>
* MP Scan by Braeburn.<ref>[http://www.braeburn.co.uk/mpsinfo.htm Music Publisher Scanning Edition]</ref> Uses the SharpEye SDK.
* NoteScan bundled with Nightingale<ref>[http://www.ngale.com NoteScan]</ref>
* OMeR (Optical Music easy Reader) Add-on for Harmony Assistant and Melody Assistant: Myriad Software<ref>[http://www.myriad-online.com/en/products/omer.htm OMeR]</ref> (ShareWare)
* PhotoScore by Neuratron.<ref>[http://www.neuratron.com/photoscore.htm PhotoScore Ultimate 7]</ref> The Light version of PhotoScore is used in [[Sibelius notation program|Sibelius]]. PhotoScore uses the SharpEye SDK.
* ScoreMaker by Kawai<ref>[http://www.kawai.co.jp/cmusic/products/scomwinm/ Scoremaker (Japanese)] {{webarchive|url=https://archive.is/20131008005557/http://www.kawai.co.jp/cmusic/products/scomwinm/ |date=2013-10-08 }}</ref>
* Scorscan by npcImaging.<ref>[http://www.npcimaging.com/scscinfo/scscinfo.html ScorScan]</ref> Based on SightReader(?)
* SharpEye by Visiv<ref>[http://www.visiv.co.uk/ SharpEye]</ref>
** VivaldiScan (same as SharpEye)<ref>[http://www.vivaldistudio.com/ENG/VivaldiScan.asp VivaldiScan]</ref>
* [[SmartScore]] by Musitek.<ref>[http://www.musitek.com/smartscre.html SmartScore] {{webarchive|url=https://web.archive.org/web/20120417140717/http://www.musitek.com/smartscre.html |date=2012-04-17 }}</ref> Formerly packaged as "MIDISCAN". (SmartScore Lite is used in [[Finale (software)|Finale]]).

The second major distinction is that while an OCR system does not go beyond recognizing letters and words, an OMR system is expected to also recover the semantics of music. That is, the user expects the vertical position of a note (a graphical concept) to be translated into its pitch (a musical concept) by applying the rules of music notation. There is no proper equivalent of this in text recognition. By analogy, recovering the music from an image of a music sheet can be as challenging as recovering the [[HTML]] [[source code]] from a [[screenshot]] of a [[website]].
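
The step from graphical position to pitch can be sketched as follows, assuming a standard five-line staff, positions counted in lines and spaces upward from the bottom line, and only the treble and bass clefs (the function name and the position convention are choices made for this example). Accidentals, key signatures, and octave-transposing clefs, which a real system must also take into account, are ignored.

```python
STEPS = "CDEFGAB"

# Pitch of the bottom staff line for two common clefs, as (step, octave).
CLEF_BOTTOM_LINE = {"treble": ("E", 4), "bass": ("G", 2)}


def pitch_from_position(position: int, clef: str = "treble") -> str:
    """Map a vertical staff position to a pitch name such as "E4".

    `position` counts lines and spaces upward from the bottom staff line:
    0 is the bottom line, 1 the first space, 2 the second line, and so on;
    negative values lie below the staff (ledger lines).
    """
    step, octave = CLEF_BOTTOM_LINE[clef]
    # Count diatonic steps from C0, move by `position`, then convert back.
    index = 7 * octave + STEPS.index(step) + position
    return f"{STEPS[index % 7]}{index // 7}"


print(pitch_from_position(0))          # E4 (bottom line, treble clef)
print(pitch_from_position(-2))         # C4 (first ledger line below)
print(pitch_from_position(4))          # B4 (middle line, treble clef)
print(pitch_from_position(4, "bass"))  # D3 (same position, bass clef)
```

The last two calls show why the clef must be recognized and associated with the note before any pitch can be assigned: the notehead alone does not determine it.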

== Free/open source software ==

* [[Audiveris]] ([[Java (programming language)|Java]]) (last release December 2018<ref>[https://github.com/Audiveris/audiveris Audiveris - Github page]</ref>)
* [[OpenOMR]] ([[Java (programming language)|Java]]) (last release November 2006<ref>[https://sourceforge.net/projects/openomr/files/ OpenOMR]</ref>)

The third difference comes from the character set used. Although writing systems like Chinese have extraordinarily complex character sets, the set of primitives for OMR spans a much greater range of sizes, from tiny elements such as a dot to big elements that potentially span an entire page, such as a brace. Some symbols, such as slurs, have a nearly unrestricted appearance: they are only defined as more-or-less smooth curves that may be interrupted anywhere.

== Similar but different ==

PDFtoMusic by Myriad is often seen as Music OCR software, but it actually performs no optical character recognition. The program reads PDF files that have been created by a scorewriter and locates the musical glyphs, which have been written directly as characters of a music notation font. The recognition consists of inferring the musical relationships of those glyphs from their relative positions in space, i.e. on the logical page of the PDF document, and combining them into a musical score. Only the Pro version can export the result to a MusicXML file, while the standard version works only with the scorewriters by Myriad.<ref name="Myriad_PDF2music">{{cite web
|url= http://www.myriad-online.com/en/products/pdftomusicpro.htm
|title=PDFtoMusic Pro |work=myriad-online.com |year=2015
|accessdate=13 November 2015}}</ref>

Finally, music notation involves ubiquitous two-dimensional spatial relationships, whereas text can be read as a one-dimensional stream of information once the baseline is established.

== See also ==

= Approaches to OMR =

The process of recognizing music scores is typically broken down into smaller steps that are handled with specialized [[pattern recognition]] algorithms.

Many competing approaches have been proposed, most of them sharing a pipeline architecture in which each step performs a certain operation, such as detecting and removing staff lines, before moving on to the next stage. A common problem with that approach is that errors and artifacts made in one stage are propagated through the system and can heavily affect the overall performance. For example, if the staff line detection stage fails to correctly identify the existence of the music staffs, subsequent steps will probably ignore that region of the image, leading to missing information in the output.
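
A toy model of this error propagation, in which a "page" is a dictionary listing staffs and the ink of individual symbols (all names and the data layout are hypothetical, chosen only for illustration). The symbol detector only looks inside staffs reported by the staff detector, so a single missed staff silently removes every symbol written on it, and no later stage can recover them.

```python
from typing import Callable, Dict, List

Page = Dict[str, list]
Stage = Callable[[Page], Page]


def run_pipeline(page: Page, stages: List[Stage]) -> Page:
    """Feed each stage the output of the previous one, as in a classic OMR pipeline."""
    for stage in stages:
        page = stage(page)
    return page


def detect_staffs(page: Page) -> Page:
    # Toy detector that misses staffs marked as faint (simulating a detection error).
    found = [staff for staff in page["staffs"] if not staff.get("faint", False)]
    return {**page, "detected_staffs": found}


def detect_symbols(page: Page) -> Page:
    # Symbols are only searched for inside staffs reported by the previous stage.
    staff_ids = {staff["id"] for staff in page["detected_staffs"]}
    found = [sym for sym in page["ink"] if sym["staff"] in staff_ids]
    return {**page, "symbols": found}


page = {
    "staffs": [{"id": 1}, {"id": 2, "faint": True}],
    "ink": [{"staff": 1, "name": "quarter note"}, {"staff": 2, "name": "half note"}],
}
result = run_pipeline(page, [detect_staffs, detect_symbols])
print([sym["name"] for sym in result["symbols"]])  # ['quarter note']
```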

|||

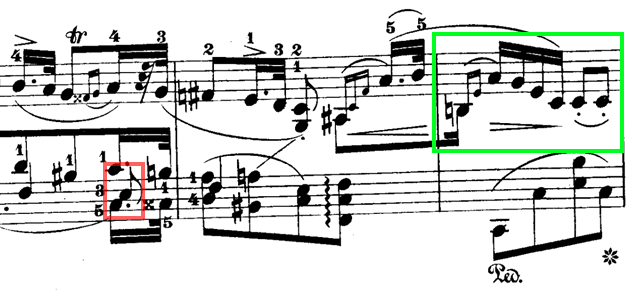

Optical Music Recognition is frequently underestimated due to the seemingly easy nature of the problem: if provided with a perfect scan of typeset music, the visual recognition can be solved with a sequence of fairly simple algorithms, such as projections and template matching. However, the process gets significantly harder for poor scans or handwritten music, which many systems fail to recognize altogether. And even if all symbols were detected perfectly, it would still be challenging to recover the musical semantics due to ambiguities and frequent violations of the rules of music notation (see the example of Chopin’s Nocturne below). Donald Byrd and Jakob Simonsen argue that OMR is difficult because modern music notation is extremely complex.<ref>{{cite journal |last1=Byrd |first1=Donald |last2=Simonsen |first2=Jakob Grue |date=2015 |title=Towards a Standard Testbed for Optical Music Recognition: Definitions, Metrics, and Page Images |journal=Journal of New Music Research |volume=44 |issue=3 |pages=169–195 |doi=10.1080/09298215.2015.1045424}}</ref>
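
The "projections" mentioned above can be illustrated with a horizontal projection profile, a classic way to find staff lines on a clean, binarized, unskewed scan: long horizontal lines produce rows that are mostly black. The Python sketch below assumes the image is given as a list of 0/1 pixel rows and uses an arbitrary 50% threshold; it breaks down on exactly the poor scans and handwritten pages described above (skew, curved lines, noise), which is why practical systems need more robust methods.

```python
from typing import List

Image = List[List[int]]  # binarized image: rows of pixels, 1 = black, 0 = white


def staff_line_rows(image: Image, threshold: float = 0.5) -> List[int]:
    """Horizontal projection: rows whose share of black pixels reaches `threshold`."""
    width = len(image[0])
    return [y for y, row in enumerate(image) if sum(row) / width >= threshold]


def merge_adjacent(rows: List[int]) -> List[float]:
    """Merge runs of consecutive rows (lines thicker than one pixel) into their center."""
    lines: List[float] = []
    run: List[int] = []
    for y in rows:
        if run and y != run[-1] + 1:
            lines.append(sum(run) / len(run))
            run = []
        run.append(y)
    if run:
        lines.append(sum(run) / len(run))
    return lines


# Synthetic 20x24 page: five staff lines (the middle one two pixels thick)
# plus a small 3x3 "notehead" that must not be mistaken for a line.
W, H = 20, 24
page = [[0] * W for _ in range(H)]
for y in (2, 6, 10, 11, 14, 18):
    page[y] = [1] * W
for y in (7, 8, 9):
    for x in (5, 6, 7):
        page[y][x] = 1

print(merge_adjacent(staff_line_rows(page)))  # [2.0, 6.0, 10.5, 14.0, 18.0]
```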

[[File:Excerpt from Nocturne Op. 15, no. 2 by Frédéric Chopin.png|Excerpt of Nocturne Op. 15, no. 2 by Frédéric Chopin, which demonstrates the challenges encountered in Optical Music Recognition.]]

Donald Byrd also collected a number of interesting examples<ref>{{cite web | url=http://homes.sice.indiana.edu/donbyrd/InterestingMusicNotation.html | title=Gallery of Interesting Music Notation | publisher=Donald Byrd | accessdate=July 14, 2019}}</ref> as well as extreme examples<ref>{{cite web | url=http://homes.sice.indiana.edu/donbyrd/CMNExtremes.htm | title=Extremes of Conventional Music Notation | publisher=Donald Byrd | accessdate=July 14, 2019}}</ref> of music notation that demonstrate its sheer complexity.

== Outputs of OMR Systems ==

Typical applications for OMR systems include the creation of an audible version of the music score (referred to as Replayability). A common way to create such a version is to generate a [[MIDI]] file, which can be [[Synthesizer|synthesised]] into an audio file. MIDI files, though, are not capable of storing engraving information (how the notes were laid out) or enharmonic spelling.
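
The loss of enharmonic spelling follows directly from how MIDI identifies notes: a note event carries a single key number (0 to 127) rather than a letter name and an accidental. A minimal Python sketch of the mapping, assuming the common convention that middle C (C4) is key 60 (some software labels that key C3 instead):

```python
# Semitone offset of each natural step above C.
STEP_SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def midi_number(step: str, alter: int, octave: int) -> int:
    """MIDI key number, using the common convention that C4 (middle C) is 60."""
    return 12 * (octave + 1) + STEP_SEMITONES[step] + alter


c_sharp = midi_number("C", +1, 4)  # C-sharp 4 as written in the score
d_flat = midi_number("D", -1, 4)   # D-flat 4, a different spelling of the same key
print(c_sharp, d_flat)             # 61 61: the spelling is lost in MIDI
```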

If the music scores are recognized with the goal of human readability (referred to as Reprintability), the Structured Encoding has to be recovered, which includes precise information on the layout and engraving. Suitable formats to store this information include [[Music Encoding Initiative|MEI]] and [[MusicXML]].
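
By contrast, a structured encoding keeps the spelling explicit. The sketch below uses Python's standard <code>xml.etree.ElementTree</code> module to build a single MusicXML <code>note</code> element for D-flat 4, which would share its MIDI key number with C-sharp 4. It is only a fragment: a valid MusicXML file also needs the surrounding score, part, and measure elements and an <code>attributes</code> block whose <code>divisions</code> value gives <code>duration</code> its meaning, and layout details would be stored in further elements and attributes not shown here.

```python
import xml.etree.ElementTree as ET


def musicxml_note(step: str, alter: int, octave: int, duration: int, note_type: str) -> ET.Element:
    """Build a MusicXML <note> element; <alter> keeps the enharmonic spelling."""
    note = ET.Element("note")
    pitch = ET.SubElement(note, "pitch")
    ET.SubElement(pitch, "step").text = step
    if alter:  # omitted for natural notes
        ET.SubElement(pitch, "alter").text = str(alter)
    ET.SubElement(pitch, "octave").text = str(octave)
    # Duration in divisions per quarter note (assumed to be declared as 1 elsewhere).
    ET.SubElement(note, "duration").text = str(duration)
    ET.SubElement(note, "type").text = note_type
    return note


print(ET.tostring(musicxml_note("D", -1, 4, 1, "quarter"), encoding="unicode"))
```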

|||

Apart from those two applications, it might also be interesting to just extract metadata from the image or enable searching. In contrast to the first two applications, a lower level of comprehension of the music score might be sufficient to perform these tasks. |

|||

== General Framework (2001) == |

|||

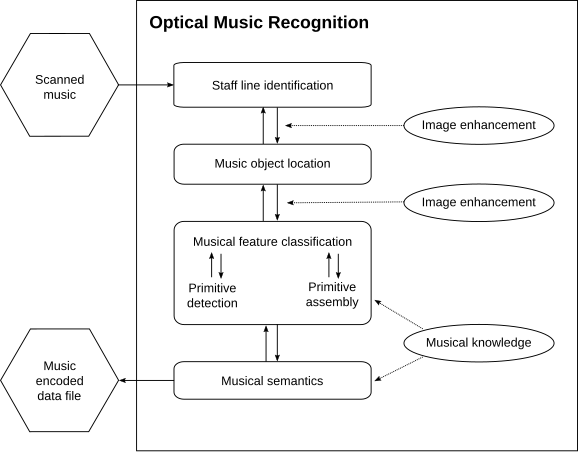

In 2001, David Bainbridge and Tim Bell published their work on the challenges of OMR, where they reviewed previous research and extracted a general framework for OMR which has served as a template for many systems that were developed after 2001. |

|||

<ref name=Bainbridge2001/> |

|||

It contained four distinct stages with heavy emphasis on the visual detection of objects. They noticed that the reconstruction of the musical semantics was often omitted from published articles, because the used operations were specific to the output format. |

|||

[[File:Optical Music Recognition Architecture by Bainbridge and Bell (2001).svg|Optical Music Recognition Architecture by Bainbridge and Bell (2001)]] |

|||

== Refined Framework (2012) == |

|||

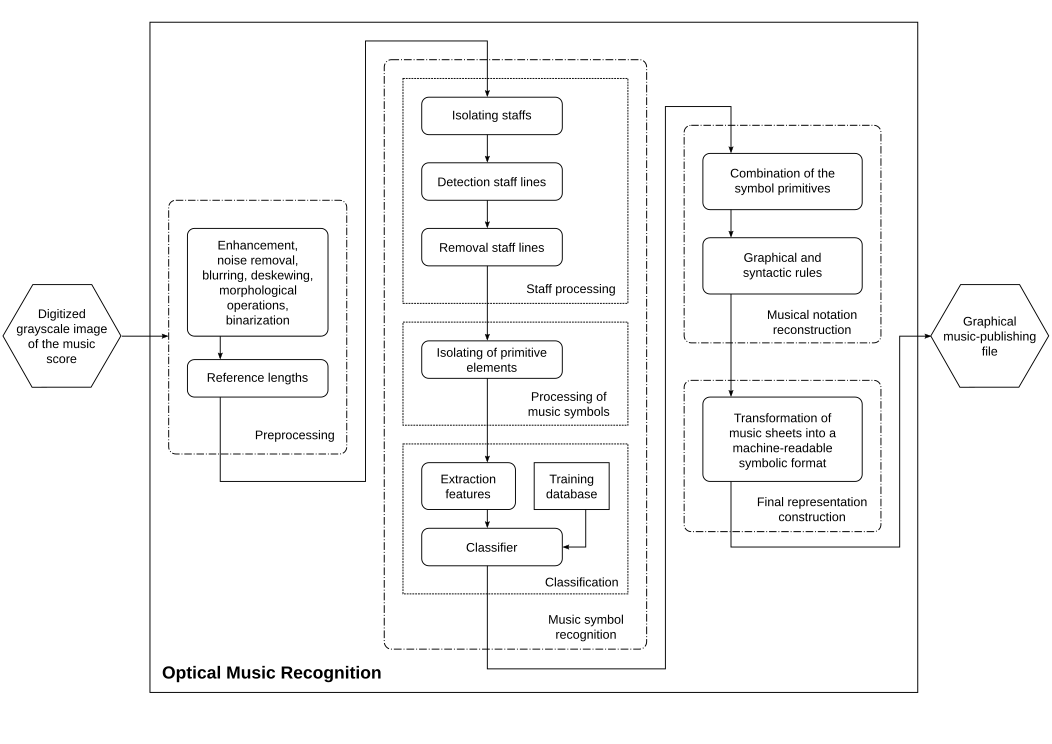

In 2012, Ana Rebelo et al. compiled a survey of techniques used for Optical Music Recognition. |

|||

<ref>{{cite journal |last1=Rebelo |first1=Ana |last2=Fujinaga |first2=Ichiro |last3=Paszkiewicz |first3=Filipe |last4=Marcal |first4=Andre R.S. |last5=Guedes |first5=Carlos |last6=Cardoso |first6=Jamie dos Santos |date=2012 |title=Optical music recognition: state-of-the-art and open issues |journal=International Journal of Multimedia Information Retrieval |volume=1 |issue=3 |pages=173–190 |doi=10.1007/s13735-012-0004-6 |url=https://link.springer.com/content/pdf/10.1007%2Fs13735-012-0004-6.pdf}}</ref> |

|||

They categorized the published research and refined the OMR pipeline into the four stages: Preprocessing, Music symbols recognition, Musical notation reconstruction and Final representation construction. This framework became the de-facto standard for OMR and is still being used today (although sometimes with a slightly different terminology). For each block, they give an overview of techniques that are used to tackle that problem. This publication is the most cited paper on OMR research as of 2019. |

|||

[[File:Optical Music Recognition Architecture by Rebelo (2012).svg|Reproduction of the general framework for Optical Music Recognition proposed by Ana Rebelo et al. in 2012.]] |

|||

== Deep Learning (since 2016) ==

With the advent of [[Deep learning]], many computer vision problems have seen a shift from imperative programming with hand-crafted heuristics and feature engineering towards machine learning. In Optical Music Recognition, the staff processing stage,<ref>{{cite conference |last1=Castellanos |first1=Francisco J. |last2=Calvo-Zaragoza |first2=Jorge |first3=Gabriel |last3=Vigliensoni |first4=Ichiro |last4=Fujinaga |date=2018 |title=Document Analysis of Music Score Images with Selectional Auto-Encoders |conference=19th International Society for Music Information Retrieval Conference|pages=256-263|location=Paris, France |url=http://ismir2018.ircam.fr/doc/pdfs/93_Paper.pdf}}</ref> the music object detection stage,<ref>{{cite conference |last1=Tuggener |first1=Lukas |last2=Elezi |first2=Ismail |first3=Jürgen |last3=Schmidhuber |first4=Thilo |last4=Stadelmann |date=2018 |title=Deep Watershed Detector for Music Object Recognition |conference=19th International Society for Music Information Retrieval Conference|pages=271-278|location=Paris, France |url=http://ismir2018.ircam.fr/doc/pdfs/225_Paper.pdf}}</ref><ref>{{cite conference |last1=Hajič |first1=Jan jr. |last2=Dorfer |first2=Matthias |first3=Gerhard |last3=Widmer |first4=Pavel |last4=Pecina |date=2018 |title=Towards Full-Pipeline Handwritten OMR with Musical Symbol Detection by U-Nets |conference=19th International Society for Music Information Retrieval Conference|pages=225-232|location=Paris, France |url=http://ismir2018.ircam.fr/doc/pdfs/175_Paper.pdf}}</ref><ref>{{cite journal |last1=Pacha |first1=Alexander |last2=Hajič |first2=Jan jr. |last3=Calvo-Zaragoza |first3=Jorge |date=2018 |title=A Baseline for General Music Object Detection with Deep Learning |journal=Applied Sciences |volume=8 |issue=9 |pages=1488–1508 |doi=10.3390/app8091488 |url=https://www.mdpi.com/2076-3417/8/9/1488}}</ref><ref>{{cite conference |last1=Pacha |first1=Alexander |last2=Choi |first2=Kwon-Young |last3=Coüasnon |first3=Bertrand |last4=Ricquebourg |first4=Yann |last5=Zanibbi |first5=Richard |last6=Eidenberger |first6=Horst |date=2018 |title=Handwritten Music Object Detection: Open Issues and Baseline Results |conference=13th International Workshop on Document Analysis Systems |pages=163–168 |doi=10.1109/DAS.2018.51}}</ref> as well as the music notation reconstruction stage<ref>{{cite conference |last1=Pacha |first1=Alexander |last2=Calvo-Zaragoza |first2=Jorge |last3=Hajič |first3=Jan jr. |date=2019 |title=Learning Notation Graph Construction for Full-Pipeline Optical Music Recognition |conference=20th International Society for Music Information Retrieval Conference (in press)}}</ref> have all seen successful deep learning approaches.

Even completely new approaches have been proposed, including solving OMR in an end-to-end fashion with sequence-to-sequence models, which take an image of music scores and directly produce the recognized music in a simplified format.<ref>{{cite conference |last1=van der Wel |first1=Eelco |last2=Ullrich |first2=Karen |date=2017 |title=Optical Music Recognition with Convolutional Sequence-to-Sequence Models |conference=18th International Society for Music Information Retrieval Conference |location=Suzhou, China |url=https://archives.ismir.net/ismir2017/paper/000069.pdf}}</ref><ref>{{cite journal |last1=Calvo-Zaragoza |first1=Jorge |last2=Rizo |first2=David |date=2018 |title=End-to-End Neural Optical Music Recognition of Monophonic Scores |journal=Applied Sciences |volume=8 |issue=4 |doi=10.3390/app8040606 |url=https://www.mdpi.com/2076-3417/8/4/606}}</ref><ref>{{cite conference |last1=Baró |first1=Arnau |last2=Riba |first2=Pau |last3=Calvo-Zaragoza |first3=Jorge |last4=Fornés |first4=Alicia |date=2017 |title=Optical Music Recognition by Recurrent Neural Networks |conference=14th International Conference on Document Analysis and Recognition |pages=25-26 |doi=10.1109/ICDAR.2017.260}}</ref>
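
Some of these end-to-end systems, for example the monophonic system of Calvo-Zaragoza and Rizo, predict one label distribution per horizontal slice (frame) of the staff image and are trained with connectionist temporal classification (CTC); attention-based decoders work differently. Assuming a CTC-trained model, its per-frame scores can be turned into a symbol sequence with greedy (best-path) decoding, sketched below in Python. The vocabulary tokens and scores are hypothetical, made up for illustration.

```python
from itertools import groupby
from typing import List, Sequence

BLANK = 0  # assumed index of the CTC "blank" label in the vocabulary


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]], vocabulary: List[str]) -> List[str]:
    """Greedy (best-path) CTC decoding of per-frame label scores."""
    # 1. Pick the highest-scoring label for every frame.
    best = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    # 2. Collapse consecutive repeats of the same label.
    collapsed = [label for label, _ in groupby(best)]
    # 3. Drop blanks; a blank between two equal labels keeps them as separate symbols.
    return [vocabulary[label] for label in collapsed if label != BLANK]


# Hypothetical vocabulary and scores for seven frames of a monophonic staff.
vocabulary = ["<blank>", "clef-G2", "note-C4_quarter", "note-D4_quarter"]
frames = [
    [0.1, 0.8, 0.05, 0.05],
    [0.2, 0.7, 0.05, 0.05],
    [0.9, 0.05, 0.03, 0.02],
    [0.1, 0.1, 0.7, 0.1],
    [0.6, 0.1, 0.2, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]
print(ctc_greedy_decode(frames, vocabulary))
# ['clef-G2', 'note-C4_quarter', 'note-C4_quarter', 'note-D4_quarter']
```

The blank frame between the two C4 predictions is what lets the decoder emit two consecutive identical notes instead of merging them into one.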

= Notable Scientific Projects =

== Staff Removal Challenge ==

For systems developed before 2016, staff detection and removal posed a significant obstacle. A scientific competition was organized to improve the state of the art and advance the field.<ref>{{cite journal |last1=Fornés |first1=Alicia |last2=Dutta |first2=Anjan |last3=Gordo |first3=Albert |last4=Lladós |first4=Josep |date=2013 |title=The 2012 Music Scores Competitions: Staff Removal and Writer Identification |journal=Graphics Recognition. New Trends and Challenges |publisher=Springer |pages=173–186 |doi=10.1007/978-3-642-36824-0_17}}</ref> Due to excellent results, and to modern techniques that made the staff removal stage obsolete, this competition was discontinued.

However, the freely available CVC-MUSCIMA dataset that was developed for this challenge is still highly relevant for OMR research, as it contains 1000 high-quality images of handwritten music scores, transcribed by 50 different musicians. It has been further extended into the MUSCIMA++ dataset, which contains detailed annotations for 140 of the 1000 pages.

== SIMSSA ==

The Single Interface for Music Score Searching and Analysis project (SIMSSA)<ref>{{cite web | url=https://simssa.ca/ | title=The SIMSSA project website | publisher=McGill University | accessdate=July 14, 2019}}</ref> is probably the largest project that attempts to teach computers to recognize musical scores and make them accessible. Several sub-projects have already been successfully completed, including the Liber Usualis<ref>{{cite web | url=http://liber.simssa.ca/ | title=The Liber Usualis project website | publisher=McGill University | accessdate=July 14, 2019}}</ref> and Cantus Ultimus.<ref>{{cite web | url=https://cantus.simssa.ca/ | title=The Cantus Ultimus project website | publisher=McGill University | accessdate=July 14, 2019}}</ref>

== TROMPA ==

Towards Richer Online Music Public-domain Archives (TROMPA) is an international research project, sponsored by the European Union, that investigates how to make public-domain digital music resources more accessible.<ref>{{cite web | url=https://trompamusic.eu/ | title=The TROMPA project website | publisher=Trompa consortium | accessdate=July 14, 2019}}</ref>

= Datasets =

The development of OMR systems greatly benefits from a dataset of sufficient size and diversity to ensure that the system works under various conditions. However, for legal reasons, including potential copyright violations, it is challenging to compile and publish such a dataset. The most notable datasets for OMR are referenced and summarized by the OMR Datasets project<ref>{{cite web | url=https://apacha.github.io/OMR-Datasets/ | title=The OMR Datasets Project (Github Repository) | publisher=Pacha, Alexander | accessdate=July 14, 2019}}</ref> and include CVC-MUSCIMA,<ref>{{cite journal |last1=Fornés |first1=Alicia |last2=Dutta |first2=Anjan |last3=Gordo |first3=Albert |last4=Lladós |first4=Josep |date=2012 |title=CVC-MUSCIMA: A Ground-truth of Handwritten Music Score Images for Writer Identification and Staff Removal |journal=International Journal on Document Analysis and Recognition |volume=15 |issue=3 |pages=243–251 |doi=10.1007/s10032-011-0168-2}}</ref> MUSCIMA++,<ref>{{cite conference |last1=Hajič |first1=Jan jr. |last2=Pecina |first2=Pavel |date=2017 |title=The MUSCIMA++ Dataset for Handwritten Optical Music Recognition |conference=14th International Conference on Document Analysis and Recognition |pages=39-46 |doi=10.1109/ICDAR.2017.16 |location=Kyoto, Japan}}</ref> DeepScores,<ref>{{cite conference |last1=Tuggener |first1=Lukas |last2=Elezi |first2=Ismail |last3=Schmidhuber |first3=Jürgen |last4=Pelillo |first4=Marcello |last5=Stadelmann |first5=Thilo |date=2018 |title=DeepScores - A Dataset for Segmentation, Detection and Classification of Tiny Objects |conference=24th International Conference on Pattern Recognition |doi=10.21256/zhaw-4255 |location=Beijing, China}}</ref> PrIMuS,<ref>{{cite conference |last1=Calvo-Zaragoza |first1=Jorge |last2=Rizo |first2=David |date=2018 |title=Camera-PrIMuS: Neural End-to-End Optical Music Recognition on Realistic Monophonic Scores |conference=19th International Society for Music Information Retrieval Conference |pages=248–255 |url=http://ismir2018.ircam.fr/doc/pdfs/33_Paper.pdf |location=Paris, France}}</ref> HOMUS,<ref>{{cite conference |last1=Calvo-Zaragoza |first1=Jorge |last2=Oncina |first2=Jose |date=2014 |title=Recognition of Pen-Based Music Notation: The HOMUS Dataset |conference=22nd International Conference on Pattern Recognition |pages=3038–3043 |doi=10.1109/ICPR.2014.524}}</ref> and the SEILS dataset,<ref>{{cite conference |last1=Parada-Cabaleiro |first1=Emilia |last2=Batliner |first2=Anton |last3=Baird |first3=Alice |last4=Schuller |first4=Björn |date=2017 |title=The SEILS Dataset: Symbolically Encoded Scores in Modern-Early Notation for Computational Musicology |conference=18th International Society for Music Information Retrieval Conference |pages=575-581 |url=https://ismir2017.smcnus.org/wp-content/uploads/2017/10/14_Paper.pdf |location=Suzhou, China}}</ref> as well as the Universal Music Symbol Collection.<ref>{{cite conference |last1=Pacha |first1=Alexander |last2=Eidenberger |first2=Horst |date=2017 |title=Towards a Universal Music Symbol Classifier |conference=14th International Conference on Document Analysis and Recognition |pages=35-36 |doi=10.1109/ICDAR.2017.265 |location=Kyoto, Japan}}</ref>

= Software =

== Academic and Open-Source Software ==

A large number of OMR projects have been realized in academia, but only a few of them reached a mature state and were successfully deployed to users. These systems are:
* Gamera<ref>[https://gamera.informatik.hsnr.de/addons/musicstaves/ Gamera]</ref>
* Aruspix<ref>[http://www.aruspix.net/ Aruspix]</ref>
* Rodan<ref>[https://github.com/DDMAL/Rodan/wiki Rodan]</ref>
* DMOS<ref>{{cite conference |last1=Coüasnon |first1=Bertrand |date=2001 |title=DMOS: a generic document recognition method, application to an automatic generator of musical scores, mathematical formulae and table structures recognition systems |conference=Sixth International Conference on Document Analysis and Recognition |pages=215-220 |doi=10.1109/ICDAR.2001.953786}}</ref>
* Audiveris<ref>[https://github.com/audiveris Audiveris]</ref>
* CANTOR<ref>[https://www.cs.waikato.ac.nz/~davidb/home.html CANTOR]</ref>

== Commercial Software ==

Most of the commercial desktop applications that were developed in the last 20 years have since been shut down due to a lack of commercial success, leaving only a few vendors that are still developing, maintaining, and selling OMR products. These are:
* [[Capella (notation program)#Companion products|capella-scan]]<ref name=capella-scan>[https://www.capella-software.com/us/index.cfm/products/capella-scan/eighth-rest-or-smudge/ Info capella-scan]</ref>
* PhotoScore<ref name=photoscore>[https://www.neuratron.com/photoscore.htm PhotoScore]</ref>
* PDFtoMusic<ref>[http://www.myriad-online.com/en/products/pdftomusicpro.htm PDFtoMusic]</ref>

Some of these products claim extremely high recognition rates of up to 100% accuracy<ref name=capella-scan/><ref name=photoscore/> but fail to disclose how those numbers were obtained, making it nearly impossible to verify them or to compare different OMR systems.
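
Such claims can only be verified when both the metric and the test data are disclosed. For systems that output a sequence of symbols, one commonly reported measure is the symbol error rate: the edit distance between the recognized and the reference sequence, divided by the length of the reference. A minimal Python sketch with hypothetical symbol names; it captures neither layout nor two-dimensional relationships, so it is only a partial measure of OMR quality.

```python
from typing import Sequence


def edit_distance(reference: Sequence[str], hypothesis: Sequence[str]) -> int:
    """Levenshtein distance between two symbol sequences (unit costs)."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_symbol in enumerate(reference, start=1):
        current = [i]
        for j, hyp_symbol in enumerate(hypothesis, start=1):
            current.append(min(
                previous[j] + 1,                               # deletion
                current[j - 1] + 1,                            # insertion
                previous[j - 1] + (ref_symbol != hyp_symbol),  # substitution or match
            ))
        previous = current
    return previous[-1]


def symbol_error_rate(reference: Sequence[str], hypothesis: Sequence[str]) -> float:
    """Edit distance normalized by the length of the reference sequence."""
    return edit_distance(reference, hypothesis) / len(reference)


reference = ["clef-G2", "note-C4_quarter", "note-D4_quarter", "note-E4_half"]
hypothesis = ["clef-G2", "note-C4_quarter", "note-E4_half"]  # one note missed
print(symbol_error_rate(reference, hypothesis))  # 0.25
```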

Apart from the desktop applications, a range of mobile applications have emerged as well, but they received mixed reviews on the Google Play store and were probably discontinued (or at least have not received any update since 2017).<ref>[https://play.google.com/store/apps/details?id=uk.co.dolphin_com.camrascore PlayScore Pro]</ref><ref>[https://play.google.com/store/apps/details?id=com.gearup.iseenotes iSeeNotes]</ref><ref>[https://play.google.com/store/apps/details?id=com.neuratron.notatemenow NotateMe Now]</ref>

= See also =

* [[Music information retrieval]] (MIR) is the broader problem of retrieving music information from media including music scores and audio.
* [[Optical character recognition]] (OCR) is the recognition of text which can be applied to [[document retrieval]], analogously to OMR and MIR. However, a complete OMR system must faithfully represent text that is present in music scores, so OMR is in fact a superset of OCR.<ref name=Bainbridge2001>{{Cite journal|last=Bainbridge|first=David|last2=Bell|first2=Tim|year=2001|title=The challenge of optical music recognition|url=https://www.researchgate.net/publication/220147775|journal=Computers and the Humanities|volume=35|issue=2|pages=95–121|accessdate=23 February 2017|via=|doi=10.1023/A:1002485918032}}</ref>

= References =

{{Reflist}}

= External links = |

|||

* [https://omr-research.net/ Website on Optical Music Recognition research] |

|||

* [https://github.com/omr-research Github page for open-source projects on Optical Music Recognition] |

|||

* [https://omr-research.github.io/ Bibliography on OMR-Research] |

|||

* [https://www.youtube.com/playlist?list=PL1jvwDVNwQke-04UxzlzY4FM33bo1CGS0 Recording of the ISMIR 2018 tutorial “Optical Music Recognition for Dummies”] |

* [http://www.music-notation.info/en/compmus/omr.html Optical Music Recognition (OMR): Programs and scientific papers] |

* [http://ddmal.music.mcgill.ca/wiki/Optical_Music_Recognition_Bibliography Optical Music Recognition Bibliography]: A comprehensive list of papers published in OMR. |

* [http://www.informatics.indiana.edu/donbyrd/OMRSystemsTable.html OMR (Optical Music Recognition) Systems]: Comprehensive table of OMR (Last updated: 30 Jan. 2007). |

* [http://dl.acm.org/citation.cfm?id=1245321 Assessing Optical Music Recognition Tools] Authors: Pierfrancesco Bellini, Ivan Bruno, Paolo Nesi |

* [http://www.leeds.ac.uk/icsrim/omr/ Optical Manuscript Analysis] University of Leeds research project. |

[[Category:Music OCR software| ]] |

Revision as of 15:07, 15 July 2019

Optical Music Recognition

Optical Music Recognition (OMR) is a field of research that investigates how to computationally read music notation in documents.[1] The goal of OMR is to teach the computer to read and interpret sheet music and produce a machine-readable version of the written music score.

In the past it has, misleadingly, also been called Music OCR.

History

Research into the automatic recognition of printed sheet music started in the late 1960s at MIT, when the first image scanners became affordable for research institutes.[2][3][4] Due to the memory limitations of the computers used, the first attempts were limited to only a few measures of music (see first published scan of music). In 1984, a Japanese research group from Waseda University developed a specialized robot, called WABOT (WAseda roBOT), which was capable of reading a sheet of music placed in front of it and accompanying a singer on an electric organ.[5][6]

Substantial research in the early days of OMR has also been conducted by Ichiro Fujinaga, Nicholas Carter, Kia Ng, David Bainbridge, and Tim Bell, who developed many of the techniques that are still being used in some systems today.

With the widespread availability of cheap flatbed scanners, OMR became more popular among researchers and a number of projects were started, including the development of the first commercial application MIDISCAN (now SmartScore), which was released in 1991 by Musitek Corporation.

The availability of smartphones with good cameras and sufficient computational power paved the way to mobile solutions, where the user takes a picture with the smartphone and the device directly processes the image.

Relation to Other Fields

Optical Music Recognition relates to other fields of research, including Computer Vision, Document Analysis, and Music Information Retrieval. It is relevant for practicing musicians and composers, who could use OMR systems as a means to enter music into the computer and thus ease the process of composing, transcribing, and editing music. In a library, an OMR system could make music scores searchable,[7] and it would allow musicologists to conduct quantitative musicological studies at scale.[8]

OMR vs. OCR

Optical Music Recognition has frequently been compared to Optical Character Recognition. The biggest difference is that music notation is a featural writing system. This means that while the alphabet consists of well-defined primitives (e.g., stems, noteheads, or flags), it is their configuration (how they are placed and arranged on the staff) that determines the semantics and how they should be interpreted.
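
To make the idea of a featural writing system concrete, the following minimal Python sketch derives a note's duration purely from which primitives are attached to a notehead. The `Notehead` class and `duration_in_quarters` function are hypothetical illustrations, not part of any OMR system; dots, tuplets, grace notes, and rests are deliberately ignored.

```python
from dataclasses import dataclass


@dataclass
class Notehead:
    """A detected notehead together with the primitives attached to it (hypothetical model)."""
    filled: bool            # True for a black (filled) notehead, False for a hollow one
    has_stem: bool = False  # whether a stem is attached
    flags: int = 0          # number of flags or beams attached to the stem


def duration_in_quarters(head: Notehead) -> float:
    """Derive a note's duration, in quarter notes, from the configuration of its primitives."""
    if not head.filled:
        # Hollow notehead: a whole note without a stem, a half note with one.
        return 2.0 if head.has_stem else 4.0
    if not head.has_stem:
        # Outside the simple cases handled by this sketch.
        raise ValueError("filled notehead without a stem")
    # Filled notehead with a stem: a quarter note, halved once per flag or beam.
    return 1.0 / (2 ** head.flags)


print(duration_in_quarters(Notehead(filled=True, has_stem=True, flags=1)))  # 0.5
```

The same filled notehead means a quarter, eighth, or sixteenth note depending only on what is attached to it, which is why recognizing the primitives alone is not enough.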

The second major distinction is that while an OCR system does not go beyond recognizing letters and words, an OMR system is expected to also recover the semantics of music. That is, the user expects the vertical position of a note (a graphical concept) to be translated into its pitch (a musical concept) by applying the rules of music notation. There is no proper equivalent in text recognition. By analogy, recovering the music from an image of a music sheet can be as challenging as recovering the HTML source code from a screenshot of a website.
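
As a simplified sketch of this graphical-to-musical translation, the function below maps a notehead's vertical staff position to a pitch name for a given clef. The names `staff_position_to_pitch` and `CLEF_BOTTOM_LINE` are hypothetical. A real system would also have to apply key signatures, accidentals that carry through a measure, octave lines, and clef changes, none of which are handled here.

```python
LETTERS = "CDEFGAB"

# Pitch of the bottom staff line for a few common clefs (scientific pitch notation).
CLEF_BOTTOM_LINE = {
    "treble": ("E", 4),
    "bass": ("G", 2),
    "alto": ("F", 3),
}


def staff_position_to_pitch(step: int, clef: str = "treble") -> str:
    """Translate a vertical staff position into a pitch name.

    `step` counts lines and spaces upwards from the bottom staff line:
    0 is the bottom line, 1 the space above it, 2 the second line, and so on;
    negative values lie below the staff (ledger lines).
    """
    letter, octave = CLEF_BOTTOM_LINE[clef]
    # Convert to an absolute diatonic index (C0 = 0), move by `step`, convert back.
    index = octave * 7 + LETTERS.index(letter) + step
    new_octave, letter_index = divmod(index, 7)
    return f"{LETTERS[letter_index]}{new_octave}"


print(staff_position_to_pitch(-2))         # C4 (middle C, one ledger line below)
print(staff_position_to_pitch(8, "bass"))  # A3 (top line of the bass staff)
```

The same vertical position yields different pitches under different clefs, so the clef must be recognized and associated with the staff before any pitch can be assigned.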

The third difference lies in the character set. Although writing systems like Chinese have extraordinarily complex character sets, the set of primitives for OMR spans a much greater range of sizes, from tiny elements such as a dot to large elements such as a brace, which can potentially span an entire page. Some symbols, such as slurs, have a nearly unrestricted appearance: they are only defined as more-or-less smooth curves that may be interrupted anywhere.

Finally, music notation involves ubiquitous two-dimensional spatial relationships, whereas text can be read as a one-dimensional stream of information, once the baseline is established.

Approaches to OMR

The process of recognizing music scores is typically broken down into smaller steps that are handled with specialized pattern recognition algorithms.

Many competing approaches have been proposed, most of which share a pipeline architecture in which each step performs a certain operation, such as detecting and removing staff lines, before the next stage is run. A common problem with this approach is that errors and artifacts introduced in one stage propagate through the system and can heavily affect the performance. For example, if the staff line detection stage fails to correctly identify a staff, subsequent steps will probably ignore that region of the image, leading to missing information in the output.

Optical Music Recognition is frequently underestimated due to the seemingly easy nature of the problem: given a perfect scan of typeset music, the visual recognition can be solved with a sequence of fairly simple algorithms, such as projections and template matching. However, the process gets significantly harder for poor scans or handwritten music, which many systems fail to recognize altogether. Even if all symbols were detected perfectly, it would still be challenging to recover the musical semantics due to ambiguities and frequent violations of the rules of music notation (see the example of Chopin’s Nocturne below). Donald Byrd and Jakob Simonsen argue that OMR is difficult because modern music notation is extremely complex.[9]
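
The projection technique mentioned above can be illustrated with a minimal sketch that finds staff-line candidates in a binary image by counting black pixels per row. It assumes a clean, deskewed scan stored as a list of rows of 0/1 values, and the 80% threshold (`min_fraction`) is an illustrative assumption rather than a recommended value. The function names are hypothetical, and template matching for the symbols themselves is not shown.

```python
from typing import List

Image = List[List[int]]  # binary image: 1 = black (ink), 0 = white


def horizontal_projection(image: Image) -> List[int]:
    """Count the black pixels in each row of a binary image."""
    return [sum(row) for row in image]


def find_staff_line_rows(image: Image, min_fraction: float = 0.8) -> List[int]:
    """Return the indices of rows that are mostly black, i.e. staff-line candidates."""
    width = len(image[0])
    profile = horizontal_projection(image)
    return [y for y, count in enumerate(profile) if count >= min_fraction * width]


# Synthetic 20 x 40 image: five staff lines plus a small notehead-like blob.
image = [[0] * 40 for _ in range(20)]
for y in (2, 6, 10, 14, 18):
    image[y] = [1] * 40
for y in range(7, 10):
    for x in range(5, 9):
        image[y][x] = 1
print(find_staff_line_rows(image))  # [2, 6, 10, 14, 18]
```

On a skewed, warped, or handwritten page the rows of a staff line no longer align, and a simple per-row count like this one breaks down, which is one reason such pages are much harder.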

Donald Byrd also collected a number of interesting examples [10] as well as extreme examples [11] of music notation that demonstrate the sheer complexity of music notation.

Outputs of OMR Systems

Typical applications for OMR systems include the creation of an audible version of the music score (referred to as Replayability). A common way to create such a version is by generating a MIDI file, which can be synthesised into an audio file. MIDI files, though, are not capable of storing engraving information (how the notes were laid out) or enharmonic spelling.
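
The loss of enharmonic spelling can be seen directly in how pitches map to MIDI note numbers. In this sketch, which assumes the common convention that middle C (C4) is note number 60, C#4 and Db4 produce the same number, so a MIDI file alone cannot tell a reader which spelling was printed. The function name `to_midi_number` is hypothetical.

```python
# Semitone offsets of the natural pitch classes above C.
SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
# Supported accidentals and the number of semitones they add.
ALTER = {"": 0, "#": 1, "b": -1, "##": 2, "bb": -2}


def to_midi_number(letter: str, accidental: str, octave: int) -> int:
    """Map a spelled pitch to a MIDI note number, assuming C4 (middle C) = 60."""
    return 12 * (octave + 1) + SEMITONES[letter] + ALTER[accidental]


# Different spellings collapse to the same number once written to MIDI.
print(to_midi_number("C", "#", 4), to_midi_number("D", "b", 4))  # 61 61
print(to_midi_number("B", "#", 3), to_midi_number("C", "", 4))   # 60 60
```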

If the music scores are recognized with the goal of human readability (referred to as Reprintability), the Structured Encoding has to be recovered, which includes precise information on the layout and engraving. Suitable formats to store this information include MEI and MusicXML.
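
For comparison, MusicXML keeps the spelling explicit: a pitch is stored as a `step`, an optional `alter` (in semitones), and an `octave`. The following sketch builds a single MusicXML `<note>` element with Python's standard `xml.etree.ElementTree`. `musicxml_note` is a hypothetical helper; a complete MusicXML file would additionally need the surrounding `score-partwise`, `part-list`, `part`, `measure`, and `attributes` (including `divisions`) elements, and layout information is omitted here.

```python
import xml.etree.ElementTree as ET


def musicxml_note(step: str, octave: int, alter: int = 0,
                  duration: int = 1, note_type: str = "quarter") -> ET.Element:
    """Build a MusicXML <note> element whose pitch spelling is stored explicitly."""
    note = ET.Element("note")
    pitch = ET.SubElement(note, "pitch")
    ET.SubElement(pitch, "step").text = step
    if alter:
        # <alter> is given in semitones: -1 for a flat, 1 for a sharp.
        ET.SubElement(pitch, "alter").text = str(alter)
    ET.SubElement(pitch, "octave").text = str(octave)
    # <duration> counts divisions of a quarter note, as declared by <divisions> in <attributes>.
    ET.SubElement(note, "duration").text = str(duration)
    ET.SubElement(note, "type").text = note_type
    return note


# D-flat 4 stays D-flat 4; it is not silently respelled as C-sharp 4.
print(ET.tostring(musicxml_note("D", 4, alter=-1), encoding="unicode"))
```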

Apart from those two applications, it may also be useful to extract only metadata from the image or to enable searching. In contrast to the first two applications, a lower level of comprehension of the music score might be sufficient to perform these tasks.

General Framework (2001)

In 2001, David Bainbridge and Tim Bell published their work on the challenges of OMR, in which they reviewed previous research and extracted a general framework for OMR that has served as a template for many systems developed after 2001.[12] It contained four distinct stages with a heavy emphasis on the visual detection of objects. They noticed that the reconstruction of the musical semantics was often omitted from published articles, because the operations used were specific to the output format.

Refined Framework (2012)

In 2012, Ana Rebelo et al. compiled a survey of techniques used for Optical Music Recognition.[13] They categorized the published research and refined the OMR pipeline into four stages: Preprocessing, Music symbols recognition, Musical notation reconstruction, and Final representation construction. This framework became the de facto standard for OMR and is still used today (although sometimes with slightly different terminology). For each stage, they give an overview of the techniques used to tackle that problem. As of 2019, this publication is the most cited paper on OMR research.
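
The four stages can be chained as a pipeline, which also shows how errors propagate: anything a stage misses is invisible to every later stage. The following toy sketch runs the four stages on an "image" drawn as ASCII text, where `-` is a staff line and `o` is a notehead. All stage functions are hypothetical stand-ins for real algorithms; in particular, staff detection is replaced by the assumption that the bottom line of a single treble-clef staff lies at row 8.

```python
from typing import List, Tuple

LETTERS = "CDEFGAB"
BOTTOM_LINE_ROW = 8  # assumption replacing staff detection: bottom line of one treble staff


def preprocess(image: List[str]) -> List[str]:
    """Stage 1 (toy): normalise the input; here only trailing whitespace is stripped."""
    return [row.rstrip() for row in image]


def recognize_symbols(image: List[str]) -> List[Tuple[int, int]]:
    """Stage 2 (toy): detect noteheads, drawn as 'o', as (row, column) positions."""
    return [(r, c) for r, row in enumerate(image) for c, ch in enumerate(row) if ch == "o"]


def reconstruct_notation(symbols: List[Tuple[int, int]]) -> List[str]:
    """Stage 3 (toy): read noteheads left to right and convert rows into treble-clef pitches."""
    pitches = []
    for row, _col in sorted(symbols, key=lambda s: s[1]):
        step = BOTTOM_LINE_ROW - row               # 0 = bottom line (E4), 1 = space above, ...
        index = 4 * 7 + LETTERS.index("E") + step  # absolute diatonic index, C0 = 0
        octave, letter = divmod(index, 7)
        pitches.append(f"{LETTERS[letter]}{octave}")
    return pitches


def build_output(pitches: List[str]) -> str:
    """Stage 4 (toy): serialise the result into a simple space-separated encoding."""
    return " ".join(pitches)


def run_pipeline(image: List[str]) -> str:
    """Feed each stage's output into the next; whatever a stage misses is lost downstream."""
    data = image
    for stage in (preprocess, recognize_symbols, reconstruct_notation, build_output):
        data = stage(data)
    return data


score = [
    "-----------",  # row 0: top line (F5)
    "           ",
    "-----------",
    "           ",
    "------o----",  # row 4: middle line (B4)
    "           ",
    "--o--------",  # row 6: second line (G4)
    "        o  ",  # row 7: bottom space (F4)
    "-----------",  # row 8: bottom line (E4)
]
print(run_pipeline(score))  # G4 B4 F4
```

If `recognize_symbols` missed a notehead (for example, one drawn as `O`), no later stage could recover it, which is the error-propagation problem of pipeline architectures.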

Deep Learning (since 2016)

With the advent of deep learning, many computer vision problems have seen a shift from imperative programming with hand-crafted heuristics and feature engineering towards machine learning. In Optical Music Recognition, the staff processing stage,[14] the music object detection stage,[15][16][17][18] and the music notation reconstruction stage[19] have all been tackled successfully with deep learning. Completely new approaches have also been proposed, including solving OMR in an end-to-end fashion with sequence-to-sequence models, which take an image of music scores and directly produce the recognized music in a simplified format.[20][21][22]
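
End-to-end recognizers of this kind can output one prediction per horizontal slice (frame) of the image, which must then be collapsed into a symbol sequence. A widely used scheme for models trained with Connectionist Temporal Classification (CTC) is greedy decoding, sketched below. It is an assumption that any particular end-to-end OMR system decodes this way, and the token names in the example vocabulary are hypothetical.

```python
from typing import List, Optional, Sequence

BLANK = "<blank>"  # the CTC "no symbol" label


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]],
                      vocabulary: Sequence[str]) -> List[str]:
    """Greedy CTC decoding: take the best label per frame, merge repeats, drop blanks."""
    decoded: List[str] = []
    previous: Optional[str] = None
    for probs in frame_probs:
        best = vocabulary[max(range(len(probs)), key=lambda i: probs[i])]
        # A label repeated in consecutive frames counts once; a blank in between
        # separates two genuine occurrences of the same symbol.
        if best != previous and best != BLANK:
            decoded.append(best)
        previous = best
    return decoded


vocabulary = [BLANK, "clef-G2", "note-C4_quarter", "note-D4_quarter"]  # hypothetical tokens
frames = [
    [0.1, 0.8, 0.05, 0.05],   # clef
    [0.1, 0.7, 0.1, 0.1],     # clef again (merged)
    [0.9, 0.05, 0.03, 0.02],  # blank
    [0.1, 0.05, 0.8, 0.05],   # C4
    [0.8, 0.1, 0.05, 0.05],   # blank separates two C4s
    [0.1, 0.05, 0.8, 0.05],   # C4
    [0.1, 0.05, 0.05, 0.8],   # D4
]
print(ctc_greedy_decode(frames, vocabulary))
```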

Notable Scientific Projects

Staff Removal Challenge

For systems that were developed before 2016, staff detection and removal posed a significant obstacle. A scientific competition was organized to improve the state of the art and advance the field. [23] Due to excellent results and modern techniques that made the staff removal stage obsolete, this competition was discontinued.
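
A naive version of staff removal can be sketched in a few lines, assuming the staff-line rows are already known (for example from a horizontal projection) and that each line is exactly one pixel thick and perfectly horizontal. A line pixel is erased unless the pixel directly above or below it is also black, which is taken as a sign that a symbol crosses the line. This heuristic is far cruder than the methods submitted to the competition, and `remove_staff_lines` is a hypothetical name.

```python
from typing import List

Image = List[List[int]]  # binary image: 1 = black, 0 = white


def remove_staff_lines(image: Image, staff_rows: List[int]) -> Image:
    """Erase one-pixel-thick staff lines, keeping pixels where a symbol crosses the line."""
    height = len(image)
    cleaned = [row[:] for row in image]  # leave the input image untouched
    for y in staff_rows:
        for x, pixel in enumerate(image[y]):
            if not pixel:
                continue
            above = image[y - 1][x] if y > 0 else 0
            below = image[y + 1][x] if y + 1 < height else 0
            # Ink directly above or below suggests a symbol (stem, notehead, ...) crossing here.
            if not (above or below):
                cleaned[y][x] = 0
    return cleaned


# A 5 x 6 image with one staff line (row 2) crossed by a vertical stem (column 3).
image = [[0] * 6 for _ in range(5)]
image[2] = [1] * 6
for y in range(5):
    image[y][3] = 1
for row in remove_staff_lines(image, [2]):
    print("".join("#" if p else "." for p in row))
```

Every printed row is `...#..`: the line is gone and the stem survives intact.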

However, the freely available CVC-MUSCIMA dataset that was developed for this challenge is still highly relevant for OMR research as it contains 1000 high-quality images of handwritten music scores, transcribed by 50 different musicians. It has been further extended into the MUSCIMA++ dataset, which contains detailed annotations for 140 out of 1000 pages.

SIMSSA

The Single Interface for Music Score Searching and Analysis project (SIMSSA) [24] is probably the largest project that attempts to teach computers to recognize musical scores and make them accessible. Several sub-projects have already been successfully completed, including the Liber Usualis [25] and Cantus Ultimus. [26]

TROMPA

Towards Richer Online Music Public-domain Archives (TROMPA) is an international research project, sponsored by the European Union, that investigates how to make public-domain digital music resources more accessible.[27]

Datasets

The development of OMR systems greatly benefits from a dataset of sufficient size and diversity to ensure the system works under various conditions. However, for legal reasons and because of potential copyright violations, it is challenging to compile and publish such a dataset. The most notable datasets for OMR are referenced and summarized by the OMR Datasets project[28] and include CVC-MUSCIMA,[29] MUSCIMA++,[30] DeepScores,[31] PrIMuS,[32] HOMUS,[33] and the SEILS dataset,[34] as well as the Universal Music Symbol Collection.[35]

Software

Academic and Open-Source Software

A large number of OMR projects have been realized in academia, but only a few of them have reached a mature state and been successfully deployed to users. These systems are:

- Gamera[36]

- Aruspix[37]

- Rodan[38]

- DMOS[39]

- Audiveris[40]

- CANTOR[41]

Commercial Software

Most of the commercial desktop applications developed in the last 20 years have since been shut down due to a lack of commercial success, leaving only a few vendors that are still developing, maintaining, and selling OMR products. These are:

- capella-scan[42]

- PhotoScore[43]

- PDFtoMusic [44]

Some of these products claim extremely high recognition rates of up to 100% accuracy[42][43] but do not disclose how those numbers were obtained, making it nearly impossible to verify them or to compare different OMR systems.
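
One transparent way to report accuracy for systems that output a symbol sequence is the symbol error rate: the edit (Levenshtein) distance between the predicted and reference sequences, divided by the length of the reference. The sketch below is a hypothetical illustration of that metric, not the method used by any of the products above; Byrd and Simonsen discuss evaluation metrics for OMR in more depth.[9]

```python
from typing import Sequence


def edit_distance(predicted: Sequence[str], reference: Sequence[str]) -> int:
    """Levenshtein distance (insertions, deletions, substitutions) between two symbol sequences."""
    previous = list(range(len(reference) + 1))
    for i, p in enumerate(predicted, start=1):
        current = [i]
        for j, r in enumerate(reference, start=1):
            current.append(min(previous[j] + 1,               # delete p
                               current[j - 1] + 1,            # insert r
                               previous[j - 1] + (p != r)))   # substitute, or match for free
        previous = current
    return previous[-1]


def symbol_error_rate(predicted: Sequence[str], reference: Sequence[str]) -> float:
    """Edit distance normalised by the length of the reference sequence."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(predicted, reference) / len(reference)


reference = ["clef-G2", "note-C4", "note-D4", "note-E4"]
predicted = ["clef-G2", "note-C4", "note-F4"]
print(symbol_error_rate(predicted, reference))  # 0.5
```

Reporting such a figure together with the test data it was computed on is what would make claims like "100% accuracy" checkable.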

Apart from the desktop applications, a range of mobile applications have emerged as well, but they received mixed reviews on the Google Play store and were probably discontinued (or at least have not received any updates since 2017).[45][46][47]

See also

- Music information retrieval (MIR) is the broader problem of retrieving music information from media including music scores and audio.

- Optical character recognition (OCR) is the recognition of text which can be applied to document retrieval, analogously to OMR and MIR. However, a complete OMR system must faithfully represent text that is present in music scores, so OMR is in fact a superset of OCR.[12]

References

- ^ Pacha, Alexander (2019). Self-Learning Optical Music Recognition (PDF) (PhD). TU Wien, Austria. doi:10.13140/RG.2.2.18467.40484.

- ^ Fujinaga, Ichiro (2018). The History of OMR on YouTube

- ^ Pruslin, Denis (1966). Automatic Recognition of Sheet Music (PhD). Massachusetts Institute of Technology, Cambridge, Massachusetts, USA.

- ^ Prerau, David S. (1971). Computer pattern recognition of printed music. Fall Joint Computer Conference. pp. 153–162.

- ^ "WABOT -WAseda roBOT". Waseda University Humanoid. Retrieved July 14, 2019.

- ^ "Wabot's entry in the IEEE collection of Robots". IEEE. Retrieved July 14, 2019.

- ^ Laplante, Audrey; Fujinaga, Ichiro (2016). Digitizing Musical Scores: Challenges and Opportunities for Libraries. 3rd International Workshop on Digital Libraries for Musicology. pp. 45–48.

- ^ Hajič, Jan jr.; Kolárová, Marta; Pacha, Alexander; Calvo-Zaragoza, Jorge (2018). How Current Optical Music Recognition Systems Are Becoming Useful for Digital Libraries. 5th International Conference on Digital Libraries for Musicology. Paris, France. pp. 57–61.

- ^ Byrd, Donald; Simonsen, Jakob Grue (2015). "Towards a Standard Testbed for Optical Music Recognition: Definitions, Metrics, and Page Images". Journal of New Music Research. 44 (3): 169–195. doi:10.1080/09298215.2015.1045424.

- ^ "Gallery of Interesting Music Notation". Donald Byrd. Retrieved July 14, 2019.

- ^ "Extremes of Conventional Music Notation". Donald Byrd. Retrieved July 14, 2019.

- ^ a b Bainbridge, David; Bell, Tim (2001). "The challenge of optical music recognition". Computers and the Humanities. 35 (2): 95–121. doi:10.1023/A:1002485918032. Retrieved 23 February 2017.

- ^ Rebelo, Ana; Fujinaga, Ichiro; Paszkiewicz, Filipe; Marcal, Andre R.S.; Guedes, Carlos; Cardoso, Jaime dos Santos (2012). "Optical music recognition: state-of-the-art and open issues" (PDF). International Journal of Multimedia Information Retrieval. 1 (3): 173–190. doi:10.1007/s13735-012-0004-6.

- ^ Castellanos, Francisco J.; Calvo-Zaragoza, Jorge; Vigliensoni, Gabriel; Fujinaga, Ichiro (2018). Document Analysis of Music Score Images with Selectional Auto-Encoders (PDF). 19th International Society for Music Information Retrieval Conference. Paris, France. pp. 256–263.

- ^ Tuggener, Lukas; Elezi, Ismail; Schmidhuber, Jürgen; Stadelmann, Thilo (2018). Deep Watershed Detector for Music Object Recognition (PDF). 19th International Society for Music Information Retrieval Conference. Paris, France. pp. 271–278.

- ^ Hajič, Jan jr.; Dorfer, Matthias; Widmer, Gerhard; Pecina, Pavel (2018). Towards Full-Pipeline Handwritten OMR with Musical Symbol Detection by U-Nets (PDF). 19th International Society for Music Information Retrieval Conference. Paris, France. pp. 225–232.

- ^ Pacha, Alexander; Hajič, Jan jr.; Calvo-Zaragoza, Jorge (2018). "A Baseline for General Music Object Detection with Deep Learning". Applied Sciences. 8 (9): 1488–1508. doi:10.3390/app8091488.

- ^ Pacha, Alexander; Choi, Kwon-Young; Coüasnon, Bertrand; Ricquebourg, Yann; Zanibbi, Richard; Eidenberger, Horst (2018). Handwritten Music Object Detection: Open Issues and Baseline Results. 13th International Workshop on Document Analysis Systems. pp. 163–168. doi:10.1109/DAS.2018.51.

- ^ Pacha, Alexander; Calvo-Zaragoza, Jorge; Hajič, Jan jr. (2019). Learning Notation Graph Construction for Full-Pipeline Optical Music Recognition. 20th International Society for Music Information Retrieval Conference (in press).

- ^ van der Wel, Eelco; Ullrich, Karen (2017). Optical Music Recognition with Convolutional Sequence-to-Sequence Models (PDF). 18th International Society for Music Information Retrieval Conference. Suzhou, China.

- ^ Calvo-Zaragoza, Jorge; Rizo, David (2018). "End-to-End Neural Optical Music Recognition of Monophonic Scores". Applied Sciences. 8 (4). doi:10.3390/app8040606.

- ^ Baró, Arnau; Riba, Pau; Calvo-Zaragoza, Jorge; Fornés, Alicia (2017). Optical Music Recognition by Recurrent Neural Networks. 14th International Conference on Document Analysis and Recognition. pp. 25–26. doi:10.1109/ICDAR.2017.260.

- ^ Fornés, Alicia; Dutta, Anjan; Gordo, Albert; Lladós, Josep (2013). "The 2012 Music Scores Competitions: Staff Removal and Writer Identification". Graphics Recognition. New Trends and Challenges. Springer: 173–186. doi:10.1007/978-3-642-36824-0_17.

- ^ "The SIMSSA project website". McGill University. Retrieved July 14, 2019.

- ^ "The Liber Usualis project website". McGill University. Retrieved July 14, 2019.

- ^ "The Cantus Ultimus project website". McGill University. Retrieved July 14, 2019.

- ^ "The TROMPA project website". Trompa consortium. Retrieved July 14, 2019.

- ^ "The OMR Datasets Project (Github Repository)". Pacha, Alexander. Retrieved July 14, 2019.

- ^ Fornés, Alicia; Dutta, Anjan; Gordo, Albert; Lladós, Josep (2012). "CVC-MUSCIMA: A Ground-truth of Handwritten Music Score Images for Writer Identification and Staff Removal". International Journal on Document Analysis and Recognition. 15 (3): 243–251. doi:10.1007/s10032-011-0168-2.

- ^ Hajič, Jan jr.; Pecina, Pavel (2017). The MUSCIMA++ Dataset for Handwritten Optical Music Recognition. 14th International Conference on Document Analysis and Recognition. Kyoto, Japan. pp. 39–46. doi:10.1109/ICDAR.2017.16.

- ^ Tuggener, Lukas; Elezi, Ismail; Schmidhuber, Jürgen; Pelillo, Marcello; Stadelmann, Thilo (2018). DeepScores - A Dataset for Segmentation, Detection and Classification of Tiny Objects. 24th International Conference on Pattern Recognition. Beijing, China. doi:10.21256/zhaw-4255.

- ^ Calvo-Zaragoza, Jorge; Rizo, David (2018). Camera-PrIMuS: Neural End-to-End Optical Music Recognition on Realistic Monophonic Scores (PDF). 19th International Society for Music Information Retrieval Conference. Paris, France. pp. 248–255.

- ^ Calvo-Zaragoza, Jorge; Oncina, Jose (2014). Recognition of Pen-Based Music Notation: The HOMUS Dataset. 22nd International Conference on Pattern Recognition. pp. 3038–3043. doi:10.1109/ICPR.2014.524.

- ^ Parada-Cabaleiro, Emilia; Batliner, Anton; Baird, Alice; Schuller, Björn (2017). The SEILS Dataset: Symbolically Encoded Scores in Modern-Early Notation for Computational Musicology (PDF). 18th International Society for Music Information Retrieval Conference. Suzhou, China. pp. 575–581.

- ^ Pacha, Alexander; Eidenberger, Horst (2017). Towards a Universal Music Symbol Classifier. 14th International Conference on Document Analysis and Recognition. Kyoto, Japan. pp. 35–36. doi:10.1109/ICDAR.2017.265.

- ^ Gamera

- ^ Aruspix

- ^ Rodan

- ^ Coüasnon, Bertrand (2001). DMOS: a generic document recognition method, application to an automatic generator of musical scores, mathematical formulae and table structures recognition systems. Sixth International Conference on Document Analysis and Recognition. pp. 215–220. doi:10.1109/ICDAR.2001.953786.

- ^ Audiveris

- ^ CANTOR

- ^ a b Info capella-scan

- ^ a b PhotoScore

- ^ PDFtoMusic

- ^ PlayScore Pro

- ^ iSeeNotes

- ^ NotateMe Now

External links

- Website on Optical Music Recognition research

- Github page for open-source projects on Optical Music Recognition

- Bibliography on OMR-Research

- Recording of the ISMIR 2018 tutorial “Optical Music Recognition for Dummies”

- Optical Music Recognition (OMR): Programs and scientific papers

- OMR (Optical Music Recognition) Systems: Comprehensive table of OMR (Last updated: 30 Jan. 2007).