Propagation delay

Networking

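In computer networking, propagation delay is the amount of time it takes for the head of a signal to travel from the sender to the receiver over a medium. It is computed as the distance between the two routers divided by the propagation speed of the medium. It does not depend on how many bytes are sent; the time needed to push a given number of bytes onto the link is the transmission delay, which is a separate quantity.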

Propagation delay = d/s, where d is the distance and s is the propagation speed of the signal in the medium.
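As a quick illustration of the d/s relation, the following minimal sketch computes the delay for one link. The function name `propagation_delay`, the 1000 km distance, and the speed of 2 × 10^8 m/s (roughly two thirds of the speed of light, a figure often quoted for copper and fiber) are assumptions chosen for the example, not values from this article.

```python
def propagation_delay(distance_m: float, speed_m_per_s: float) -> float:
    """Return the propagation delay in seconds, computed as d / s."""
    if speed_m_per_s <= 0:
        raise ValueError("propagation speed must be positive")
    return distance_m / speed_m_per_s


# Hypothetical link: 1000 km long, assumed propagation speed of 2e8 m/s.
link_delay_s = propagation_delay(1_000_000, 2e8)
print(f"{link_delay_s * 1e3:.1f} ms")  # prints "5.0 ms"
```

Note that the packet size does not appear anywhere in the calculation: only the length of the link and the speed of the medium matter.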

Electronics

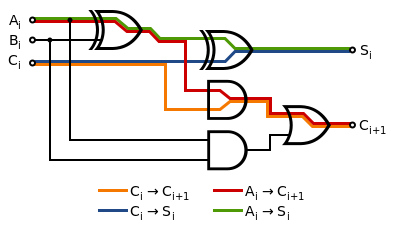

In digital electronics, the propagation delay, or gate delay, is the length of time from when the input to a logic gate becomes stable and valid to when the output of that logic gate becomes stable and valid. Often this refers to the time required for the output to reach 50% of its final output level after the input changes. Reducing gate delays allows digital circuits to process data at a faster rate and improves overall performance.

The difference in propagation delays of logic elements is the major contributor to glitches in asynchronous circuits as a result of race conditions.

The method of logical effort uses propagation delays to compare designs that implement the same logic function.
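A comparison of this kind can be sketched as follows. In the usual textbook formulation of logical effort (an assumption here, since this article does not give the formula), the delay of one stage in units of a process-dependent constant τ is d = g·h + p, where g is the logical effort, h the electrical effort (load capacitance divided by input capacitance), and p the parasitic delay. The g and p values below are the commonly quoted ones for simple CMOS gates, and the electrical effort of 2 per stage is a hypothetical choice.

```python
from typing import Iterable, Tuple

# One stage: (logical effort g, electrical effort h, parasitic delay p)
Stage = Tuple[float, float, float]


def stage_delay(g: float, h: float, p: float) -> float:
    """Delay of one stage in units of tau: d = g * h + p."""
    return g * h + p


def path_delay(stages: Iterable[Stage]) -> float:
    """Total path delay: the sum of the individual stage delays."""
    return sum(stage_delay(g, h, p) for g, h, p in stages)


# Assumed textbook (g, p) values for simple CMOS gates.
INV = (1.0, 1.0)
NAND2 = (4.0 / 3.0, 2.0)
NOR2 = (5.0 / 3.0, 2.0)

H = 2.0  # hypothetical electrical effort, applied to every stage

# Two ways to build a 2-input AND:
#   design A: NAND2 followed by an inverter
#   design B: inverters on the inputs followed by NOR2 (De Morgan)
design_a = [(NAND2[0], H, NAND2[1]), (INV[0], H, INV[1])]
design_b = [(INV[0], H, INV[1]), (NOR2[0], H, NOR2[1])]

delay_a = path_delay(design_a)
delay_b = path_delay(design_b)
print(f"A: {delay_a:.2f} tau, B: {delay_b:.2f} tau")
```

Under these assumed numbers design A comes out faster, because the NAND gate has a lower logical effort than the NOR gate. A real comparison would also size each stage rather than fix the same h everywhere.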

Propagation delay increases with operating temperature, with a marginal supply voltage, and with increased output load capacitance. Of these, load capacitance is the largest contributor. If the output of a logic gate is connected to a long trace or drives many other gates (high fanout), the propagation delay increases substantially.
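The effect of load capacitance can be illustrated with a first-order RC model, which is a simplifying assumption and not a description of any particular gate. If the gate output is modelled as a resistance R charging a capacitance C, the output reaches 50% of its final level after t = ln(2)·R·C, or about 0.69·RC. The drive resistance and capacitance values below are hypothetical.

```python
import math


def rc_delay_50(resistance_ohm: float, capacitance_f: float) -> float:
    """Time for a first-order RC step response to reach 50%: ln(2) * R * C."""
    return math.log(2) * resistance_ohm * capacitance_f


def gate_delay(drive_resistance_ohm: float, self_cap_f: float,
               input_cap_f: float, fanout: int) -> float:
    """Delay of a gate driving `fanout` identical gate inputs (simple RC model)."""
    load_f = self_cap_f + fanout * input_cap_f
    return rc_delay_50(drive_resistance_ohm, load_f)


# Hypothetical values, chosen only for illustration.
R_DRIVE = 10e3   # output drive resistance, ohms
C_SELF = 2e-15   # the gate's own output capacitance, farads
C_IN = 2e-15     # input capacitance of each driven gate, farads

delays_ps = {
    fanout: gate_delay(R_DRIVE, C_SELF, C_IN, fanout) * 1e12
    for fanout in (1, 4, 16)
}
for fanout, delay in delays_ps.items():
    print(f"fanout {fanout:2d}: {delay:6.1f} ps")
```

In this model the delay grows linearly with the total load, so each additional driven gate, or additional trace capacitance, adds directly to the delay.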

Physics

In physics, particularly in electromagnetism, the propagation delay is the length of time it takes for a signal to travel to its destination. For example, for an electric signal it is the time taken for the signal to travel through a wire. See also velocity of propagation.

See also