Latent learning

Latent learning is the subconscious retention of information without reinforcement or motivation. In latent learning, behavior changes only later, once there is sufficient motivation to use the information that was subconsciously retained.[1]

Latent learning is a form of observational learning, in which observing something, rather than experiencing it directly, can affect later behavior. Observational learning can take many forms. For example, a human observes a behavior and repeats it at a later time (not direct imitation), even though no one is rewarding them for doing so.

In social learning theory, humans observe others receiving rewards or punishments, which evokes feelings in the observer and motivates them to change their behavior.

In latent learning particularly, there is no observation of a reward or punishment. Latent learning is simply animals observing their surroundings with no particular motivation to learn their geography; at a later date, however, they are able to exploit this knowledge when there is motivation, such as the biological need to find food or escape trouble.

The lack of reinforcement, associations, or motivation with a stimulus is what differentiates this type of learning from the other learning theories such as operant conditioning or classical conditioning.[2]

Latent Learning vs Other Types of Learning

Latent Learning vs Classical Conditioning

Classical conditioning is when an animal comes to subconsciously anticipate a biological stimulus, such as food, upon experiencing a seemingly unrelated stimulus, because the two have repeatedly occurred together. One significant example of classical conditioning is Ivan Pavlov's experiment, in which dogs showed a conditioned response to a bell that the experimenters had deliberately associated with feeding time. After conditioning, the dogs no longer salivated only for the food, which met a biological need and was therefore an unconditioned stimulus. They also began to salivate at the sound of the bell: the bell had become a conditioned stimulus, and salivating had become a conditioned response to it. They salivated at the sound of the bell because they were anticipating food.

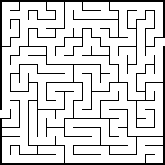

Latent learning is when an animal learns something even though it has no motivation or stimulus associating a reward with learning it. Animals can therefore simply be exposed to information for its own sake and draw on it later. One significant example of latent learning is rats subconsciously creating mental maps and later using that information to find a biological stimulus such as food faster once there is a reward.[3] These rats find the food faster because they already know the layout of the maze, even though they had no motivation to learn it before the food was introduced.

Latent Learning vs Operant Conditioning

Operant conditioning is the shaping of an animal's behavior using rewards and punishments. In latent learning, by contrast, an animal's behavior changes because it has had time to form a mental map before a stimulus is introduced.

Latent Learning vs Social Learning Theory

Social learning theory suggests that behaviors can be learned through observation, but only through actively cognizant observation. In this theory, observation leads to a change in behavior more often when rewards or punishments associated with specific behaviors are observed. Latent learning is similar in that it also involves observation, but it differs in that no reinforcement is needed for learning.

Early studies

In a classic study by Edward C. Tolman, three groups of rats were placed in mazes and their behavior was observed each day for more than two weeks. The rats in Group 1 always found food at the end of the maze; the rats in Group 2 never found food; and the rats in Group 3 found no food for 10 days, but then received food on the eleventh. The Group 1 rats quickly learned to rush to the end of the maze; Group 2 rats wandered in the maze but did not preferentially go to the end. Group 3 acted the same as the Group 2 rats until food was introduced on Day 11; then they quickly learned to run to the end of the maze and did as well as the Group 1 rats by the next day. This showed that the Group 3 rats had learned about the organisation of the maze without the reinforcement of food. Until this study, it was largely believed that reinforcement was necessary for animals to learn such tasks.[4] Other experiments showed that latent learning can happen over shorter periods, e.g. 3–7 days.[5] Among other early studies, it was also found that animals allowed to explore the maze and then detained for one minute in the empty goal box learned the maze much more rapidly than groups not given such goal orientation.[6]

In 1949, John Seward conducted studies in which rats were placed in a T-maze with one arm coloured white and the other black. One group of rats had 30 minutes to explore this maze with no food present, and the rats were not removed as soon as they had reached the end of an arm. Seward then placed food in one of the two arms. Rats in this exploratory group learned to go down the rewarded arm much faster than another group of rats that had not previously explored the maze.[7] Similar results were obtained by Bendig in 1952, in a study where rats that were satiated for food were trained to escape from water in a modified T-maze with food present, then tested while hungry. Upon being returned to the maze while food-deprived, the rats learned where the food was located at a rate that increased with the number of pre-exposures they had been given in the training phase. This indicated varying levels of latent learning.[8]

Most early studies of latent learning were conducted with rats, but a study by Stevenson in 1954 explored this method of learning in children.[9] Stevenson required children to explore a series of objects to find a key, and then he determined the knowledge the children had about various non-key objects in the set-up.[9] The children found non-key objects faster if they had previously seen them, indicating they were using latent learning. Their ability to learn in this way increased as they became older.[9]

In 1982, Wirsig and co-researchers used the taste of sodium chloride to explore which parts of the brain are necessary for latent learning in rats. Decorticate rats were just as able as normal rats to accomplish the latent learning task.[10]

More recent studies

Latent Learning in Infants

The human capacity for latent learning seems to be a major reason why infants can use knowledge they acquired before they had the skills to act on it. For example, infants do not gain the ability to imitate until they are 6 months old. In one experiment, one group of infants was exposed to hand puppets A and B simultaneously at three months of age. A control group of the same age was presented only with puppet A. All of the infants were then periodically presented with puppet A until six months of age. At six months, the experimenters performed a target behavior on the first puppet while all the infants watched. Then all the infants were presented with puppets A and B. The infants that had seen both puppets at three months of age imitated the target behavior on puppet B at a significantly higher rate than the control group, which had not seen the two puppets paired. This suggests that the pre-exposed infants had formed an association between the puppets without any reinforcement. It demonstrates latent learning in infants: they can learn by observation even when they show no indication of learning until they are older.[11]

The Impact of Different Drugs on Latent Learning

Many drugs abused by humans increase synaptic concentrations of dopamine, the neurotransmitter that gives humans motivation to seek rewards.[12] It has been shown that dopamine-deficient mice can still latently learn about rewards if they are given caffeine. Mice given caffeine before learning could later use the knowledge they had acquired to find the reward once dopamine was restored.[13]

Alcohol may impede latent learning. Some zebrafish were exposed to alcohol before exploring a maze and continued to be exposed to alcohol when a reward was introduced into the maze. These zebrafish took much longer to find the reward than a control group that had not been exposed to alcohol, even though they showed the same amount of motivation. However, the longer the zebrafish had been exposed to alcohol, the less effect it had on their latent learning. Another experimental group represented alcohol withdrawal. The zebrafish that performed worst were those that had been exposed to alcohol for a long period and then had it removed before the reward was introduced. These fish showed reduced motivation and motor dysfunction, and did not appear to have latently learned the maze.[14]

Other Factors Impacting Latent Learning

Though the specific area of the brain responsible for latent learning may not have been pinpointed, it was found that patients with medial temporal amnesia had particular difficulty with a latent learning task which required representational processing. [15]

Another study, conducted with mice, found evidence that the absence of a prion protein disrupts latent learning and other memory functions in the water maze latent learning task.[16] Phencyclidine was also found to impair latent learning in mice in a water-finding task.[17]

References

- ^ Tavris, Carol; Wade, Carole (1997). Psychology In Perspective (2nd ed.). New York: Longman. ISBN 978-0-673-98314-5.

- ^ "Latent Learning | Introduction to Psychology". courses.lumenlearning.com. Retrieved 2019-02-05.

- ^ Johnson, A.; Crowe, D. A. (2009). "Revisiting Tolman: theories and cognitive maps". Cogn Crit. 1: 43–72. http://www.cogcrit.umn.edu/docs/Johnson_Crowe_10.pdf

- ^ Tolman, E. C.; Honzik, C. H. (1930). "Introduction and removal of reward, and maze performance in rats". University of California Publications in Psychology.

- ^ Reynolds, B. (1 January 1945). "A repetition of the Blodgett experiment on 'latent learning'". Journal of Experimental Psychology. 35 (6): 504–516. doi:10.1037/h0060742. PMID 21007969.

- ^ Karn, H. W.; Porter, J. M., Jr. (1 January 1946). "The effects of certain pre-training procedures upon maze performance and their significance for the concept of latent learning". Journal of Experimental Psychology. 36 (5): 461–469. doi:10.1037/h0061422. PMID 21000777.

- ^ Seward, John P. (1 January 1949). "An experimental analysis of latent learning". Journal of Experimental Psychology. 39 (2): 177–186. doi:10.1037/h0063169.

- ^ Bendig, A. W. (1 January 1952). "Latent learning in a water maze". Journal of Experimental Psychology. 43 (2): 134–137. doi:10.1037/h0059428. PMID 14927813.

- ^ a b c Stevenson, Harold W. (1 January 1954). "Latent learning in children". Journal of Experimental Psychology. 47 (1): 17–21. doi:10.1037/h0060086. PMID 13130805.

- ^ Wirsig, Celeste R.; Grill, Harvey J. (1 January 1982). "Contribution of the rat's neocortex to ingestive control: I. Latent learning for the taste of sodium chloride". Journal of Comparative and Physiological Psychology. 96 (4): 615–627. doi:10.1037/h0077911. PMID 7119179.

- ^ Campanella, Jennifer; Rovee-Collier, Carolyn (2005-05-01). "Latent Learning and Deferred Imitation at 3 Months". Infancy. 7 (3): 243–262. doi:10.1207/s15327078in0703_2. ISSN 1525-0008.

- ^ Di Chiara, G.; Imperato, A. (1988-07-01). "Drugs abused by humans preferentially increase synaptic dopamine concentrations in the mesolimbic system of freely moving rats". Proceedings of the National Academy of Sciences. 85 (14): 5274–5278. Bibcode:1988PNAS...85.5274D. doi:10.1073/pnas.85.14.5274. ISSN 0027-8424. PMC 281732. PMID 2899326.

- ^ Berridge, Kent C. (2005). "Espresso Reward Learning, Hold the Dopamine: Theoretical Comment on Robinson et al. (2005)". Behavioral Neuroscience. 119 (1): 336–341. doi:10.1037/0735-7044.119.1.336. ISSN 1939-0084.

- ^ Luchiari, Ana C.; Salajan, Diana C.; Gerlai, Robert (2015). "Acute and chronic alcohol administration: Effects on performance of zebrafish in a latent learning task". Behavioural Brain Research. 282: 76–83. doi:10.1016/j.bbr.2014.12.013. ISSN 0166-4328. PMC 4339105. PMID 25557800.

- ^ Myers, Catherine E.; McGlinchey-Berroth, Regina; Warren, Stacey; Monti, Laura; Brawn, Catherine M.; Gluck, Mark A. (1 January 2000). "Latent learning in medial temporal amnesia: Evidence for disrupted representational but preserved attentional processes". Neuropsychology. 14 (1): 3–15. doi:10.1037/0894-4105.14.1.3. PMID 10674794.

- ^ Nishida, Noriyuki; Katamine, Shigeru; Shigematsu, Kazuto; Nakatani, Akira; Sakamoto, Nobuhiro; Hasegawa, Sumitaka; Nakaoke, Ryota; Atarashi, Ryuichiro; Kataoka, Yasufumi; Miyamoto, Tsutomu (1 January 1997). "Prion Protein Is Necessary for Latent Learning and Long-Term Memory Retention". Cellular and Molecular Neurobiology. 17 (5): 537–545. doi:10.1023/A:1026315006619.

- ^ Noda, A. (2001). "Phencyclidine impairs latent learning in mice: interaction between glutamatergic systems and sigma1 receptors". Neuropsychopharmacology. 24 (4): 451–460. doi:10.1016/S0893-133X(00)00192-5. PMID 11182540.