Optimality theory

Optimality theory (frequently abbreviated OT) is a linguistic model proposing that the observed forms of language arise from the optimal satisfaction of conflicting constraints. OT differs from other approaches to phonological analysis, which typically use rules rather than constraints. However, phonological models of representation, such as autosegmental phonology, prosodic phonology, and linear phonology (SPE), are equally compatible with rule-based and constraint-based models. OT views grammars as systems that provide mappings from inputs to outputs; typically, the inputs are conceived of as underlying representations, and the outputs as their surface realizations. It is an approach within the larger framework of generative grammar.

Optimality theory has its origin in a talk given by Alan Prince and Paul Smolensky in 1991,[1] which the same authors later developed into a book manuscript in 1993.[2]

Overview

There are three basic components of the theory:

- Generator (Gen) takes an input, and generates the list of possible outputs, or candidates,

- Constraint component (Con) provides the criteria, in the form of strictly ranked violable constraints, used to decide between candidates, and

- Evaluator (Eval) chooses the optimal candidate based on the constraints, and this candidate is the output.

Optimality theory assumes that these components are universal. Differences in grammars reflect different rankings of the universal constraint set, Con. Part of language acquisition can then be described as the process of adjusting the ranking of these constraints.

Optimality theory as applied to language was originally proposed by the linguists Alan Prince and Paul Smolensky in 1991, and later expanded by Prince and John J. McCarthy. Although much of the interest in OT has been associated with its use in phonology, the area to which OT was first applied, the theory is also applicable to other subfields of linguistics (e.g. syntax and semantics).

Optimality theory is like other theories of generative grammar in its focus on the investigation of universal principles, linguistic typology and language acquisition.

Optimality theory also has roots in neural network research. It arose in part as an alternative to the connectionist theory of harmonic grammar, developed in 1990 by Géraldine Legendre, Yoshiro Miyata and Paul Smolensky. Variants of OT with connectionist-like weighted constraints continue to be pursued in more recent work (Pater 2009).

Input and Gen: the candidate set

Optimality theory supposes that there are no language-specific restrictions on the input. This is called "richness of the base". Every grammar can handle every possible input. For example, a language without complex clusters must be able to deal with an input such as /flask/. Languages without complex clusters differ on how they will resolve this problem; some will epenthesize (e.g. [falasak], or [falasaka] if all codas are banned) and some will delete (e.g. [fas], [fak], [las], [lak]).

Gen is free to generate any number of output candidates, however much they deviate from the input. This is called "freedom of analysis". The grammar (ranking of constraints) of the language determines which of the candidates will be assessed as optimal by Eval.[3]

Con: the constraint set

In optimality theory, every constraint is universal. Con is the same in every language. There are two basic types of constraints:

- Faithfulness constraints require that the observed surface form (the output) match the underlying or lexical form (the input) in some particular way; that is, these constraints require identity between input and output forms.

- Markedness constraints impose requirements on the structural well-formedness of the output.[4]

Each plays a crucial role in the theory. Markedness constraints motivate changes from the underlying form, and faithfulness constraints prevent every input from being realized as some completely unmarked form (such as [ba]).

The universal nature of Con makes some immediate predictions about language typology. If grammars differ only by having different rankings of Con, then the set of possible human languages is determined by the constraints that exist. Optimality theory predicts that there cannot be more grammars than there are permutations of the ranking of Con. The number of possible rankings is equal to the factorial of the total number of constraints, thus giving rise to the term factorial typology. However, it may not be possible to distinguish all of these potential grammars, since not every constraint is guaranteed to have an observable effect in every language. Two total orders on the constraints of Con could generate the same range of input–output mappings, but differ in the relative ranking of two constraints which do not conflict with each other. Since there is no way to distinguish these two rankings they are said to belong to the same grammar. A grammar in OT is equivalent to an antimatroid.[5] If rankings with ties are allowed, then the number of possibilities is an ordered Bell number rather than a factorial, allowing a significantly larger number of possibilities.[6]
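
The difference between the two counts can be made concrete with a short calculation. The following is a minimal sketch, not taken from the cited sources: the function name `ordered_bell` and the choice of sample sizes are illustrative, and the recurrence used is the standard one for ordered Bell numbers.

```python
from math import comb, factorial


def ordered_bell(n: int) -> int:
    """Number of rankings of n constraints when ties are allowed."""
    # Standard recurrence: a(0) = 1, a(m) = sum over k = 1..m of C(m, k) * a(m - k),
    # where k is the size of the top-ranked block of tied constraints.
    a = [1]
    for m in range(1, n + 1):
        a.append(sum(comb(m, k) * a[m - k] for k in range(1, m + 1)))
    return a[n]


# Compare strict rankings (factorial) with rankings that allow ties.
for n in (3, 5, 10):
    print(n, factorial(n), ordered_bell(n))
```

For five constraints this gives 120 strict rankings against 541 rankings with ties; as noted above, either number is only an upper bound on the distinguishable grammars.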

Faithfulness constraints

McCarthy and Prince (1995) propose three basic families of faithfulness constraints:

- Max prohibits deletion (from "maximal").

- Dep prohibits epenthesis (from "dependent").

- Ident(F) prohibits alteration to the value of feature F (from "identical").

Each constraint name may be suffixed with "-IO" or "-BR", standing for input/output and base/reduplicant, respectively; the latter is used in the analysis of reduplication. F in Ident(F) is replaced by the name of a distinctive feature, as in Ident-IO(voice).

Max and Dep replace Parse and Fill proposed by Prince and Smolensky (1993), which stated "underlying segments must be parsed into syllable structure" and "syllable positions must be filled with underlying segments", respectively.[7][8] Parse and Fill serve essentially the same functions as Max and Dep, but differ in that they evaluate only the output and not the relation between the input and output, which is rather characteristic of markedness constraints.[9] This stems from the model adopted by Prince and Smolensky known as containment theory, which assumes the input segments unrealized by the output are not removed but rather "left unparsed" by a syllable.[10] The model put forth by McCarthy and Prince (1995, 1999), known as correspondence theory, has since replaced it as the standard framework.[8]

McCarthy and Prince (1995) also propose:

- I-Contig, violated when a word- or morpheme-internal segment is deleted (from "input-contiguity")

- O-Contig, violated when a segment is inserted word- or morpheme-internally (from "output-contiguity")

- Linearity, violated when the order of some segments is changed (i.e. prohibits metathesis)

- Uniformity, violated when two or more segments are realized as one (i.e. prohibits fusion)

- Integrity, violated when a segment is realized as multiple segments (i.e. prohibits unpacking or vowel breaking—opposite of Uniformity)
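
One way to see how several of these constraints differ is to model correspondence as a set of index pairs and count violations from it. The sketch below is an illustrative assumption rather than a formulation from McCarthy and Prince: the function name, the pair encoding, and the one-violation-per-segment counting are all hypothetical, and Ident and the contiguity constraints are left out because they need feature and edge information.

```python
from itertools import combinations


def faithfulness_violations(n_input, n_output, corr):
    """Count violations given a correspondence relation.

    corr is a set of (input_index, output_index) pairs (an assumed encoding).
    """
    in_used = [i for i, _ in corr]
    out_used = [j for _, j in corr]
    return {
        # Input segments with no output correspondent (deletion)
        "Max": sum(1 for i in range(n_input) if i not in in_used),
        # Output segments with no input correspondent (epenthesis)
        "Dep": sum(1 for j in range(n_output) if j not in out_used),
        # Output segments with more than one input correspondent (fusion)
        "Uniformity": sum(1 for j in set(out_used) if out_used.count(j) > 1),
        # Input segments with more than one output correspondent (splitting)
        "Integrity": sum(1 for i in set(in_used) if in_used.count(i) > 1),
        # Pairs of correspondences whose order is reversed (metathesis)
        "Linearity": sum(
            1
            for (i1, j1), (i2, j2) in combinations(sorted(corr), 2)
            if i1 < i2 and j1 > j2
        ),
    }


# Metathesis of the last two segments of a three-segment input
print(faithfulness_violations(3, 3, {(0, 0), (1, 2), (2, 1)}))
```

Under this encoding, deleting the final segment of a three-segment input is `faithfulness_violations(3, 2, {(0, 0), (1, 1)})`, which reports a single Max violation and nothing else.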

Markedness constraints

Markedness constraints introduced by Prince and Smolensky (1993) include:

| Name | Statement | Other names |
|---|---|---|
| Nuc | Syllables must have nuclei. | |
| −Coda | Syllables must have no codas. | NoCoda |
| Ons | Syllables must have onsets. | Onset |
| HNuc | A nuclear segment must be more sonorous than another (from "harmonic nucleus"). | |
| *Complex | A syllable must not have more than one segment in its onset, nucleus or coda. | |
| CodaCond | Coda consonants cannot have place features that are not shared by an onset consonant. | CodaCondition |
| NonFinality | A word-final syllable (or foot) must not bear stress. | NonFin |
| FtBin | A foot must be two syllables (or moras). | FootBinarity |
| Pk-Prom | Light syllables must not be stressed. | PeakProminence |
| WSP | Heavy syllables must be stressed (from "weight-to-stress principle"). | Weight-to-Stress |

Precise definitions in the literature vary. Some constraints are sometimes used as a "cover constraint", standing in for a set of constraints that are not fully known or not important to the analysis at hand.[11]

Some markedness constraints are context-free and others are context-sensitive. For example, *Vnasal states that vowels must not be nasal in any position and is thus context-free, whereas *VoralN states that vowels must not be oral when preceding a tautosyllabic nasal and is thus context-sensitive.[12]

Local conjunctions

Two constraints may be conjoined as a single constraint, called a local conjunction, which gives only one violation each time both constraints are violated within a given domain, such as a segment, syllable or word. For example, [NoCoda & VOP]segment is violated once per voiced obstruent in a coda ("VOP" stands for "voiced obstruent prohibition"), and may be equivalently written as *VoicedCoda.[13][14] Local conjunctions are used as a way of circumventing the problem of phonological opacity that arises when analyzing chain shifts.[13]
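
The behaviour of the conjoined constraint can be illustrated with a small sketch. Everything here is an assumption made for illustration: syllables are encoded as (onset, nucleus, coda) tuples of single-character segments, the set of voiced obstruents is a toy inventory, and the function names are hypothetical.

```python
# Toy inventory of voiced obstruents (an assumption for this sketch)
VOICED_OBSTRUENTS = {"b", "d", "g", "v", "z"}


def no_coda(syllables):
    """NoCoda: one violation per syllable that has a coda."""
    return sum(1 for _onset, _nucleus, coda in syllables if coda)


def vop(syllables):
    """VOP: one violation per voiced obstruent anywhere in the word."""
    return sum(
        1
        for syllable in syllables
        for part in syllable
        for seg in part
        if seg in VOICED_OBSTRUENTS
    )


def no_coda_and_vop_segment(syllables):
    """[NoCoda & VOP]segment: one violation per segment that is both
    in a coda and a voiced obstruent."""
    return sum(
        1
        for _onset, _nucleus, coda in syllables
        for seg in coda
        if seg in VOICED_OBSTRUENTS
    )


for word in ([("b", "a", "d")], [("b", "a", "t")], [("b", "a", ""), ("d", "a", "")]):
    print(word, no_coda(word), vop(word), no_coda_and_vop_segment(word))
```

In this sketch the hypothetical form "bad" violates the conjunction once, whereas "bat" violates NoCoda and VOP in different segments and "ba.da" violates only VOP, so neither violates the conjunction.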

Eval: definition of optimality

In the original proposal, given two candidates, A and B, A is better, or more "harmonic", than B on a constraint if A incurs fewer violations than B. Candidate A is more harmonic than B on an entire constraint hierarchy if A incurs fewer violations of the highest-ranked constraint distinguishing A and B. A is "optimal" in its candidate set if it is better on the constraint hierarchy than all other candidates. However, this definition of Eval is able to model relations that are not regular, that is, relations beyond the capacity of finite-state transducers.[15]

For example, given the constraints C1, C2, and C3, where C1 dominates C2, which dominates C3 (C1 ≫ C2 ≫ C3), A beats B, or is more harmonic than B, if A has fewer violations than B on the highest ranking constraint which assigns them a different number of violations (A is "optimal" if A beats B and the candidate set comprises only A and B). If A and B tie on C1, but A does better than B on C2, A is optimal, no matter how many more violations of C3 A incurs than B. This comparison is often illustrated with a tableau. The pointing finger marks the optimal candidate, and each cell displays an asterisk for each violation for a given candidate and constraint. Once a candidate does worse than another candidate on the highest ranking constraint distinguishing them, it incurs a fatal violation (marked in the tableau by an exclamation mark and by shaded cells for the lower-ranked constraints). Once a candidate incurs a fatal violation, it cannot be optimal, even if it outperforms the other candidates on the rest of Con.

| | Input | Constraint 1 | Constraint 2 | Constraint 3 |
|---|---|---|---|---|
| a. ☞ | Candidate A | * | * | *** |
| b. | Candidate B | * | **! | |
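
Strict domination amounts to comparing violation profiles lexicographically, with the highest-ranked constraint first. The following is a minimal sketch under that reading; the function name `eval_optimal` and the dictionary encoding of the tableau are illustrative choices, and the candidate set is assumed to be finite and given in advance.

```python
def eval_optimal(candidates, ranking):
    """Return the candidate(s) that are optimal under strict domination.

    candidates: dict mapping candidate name -> dict of constraint -> violations
    ranking: list of constraint names, highest-ranked first
    """
    def profile(name):
        # Violations listed in ranking order; Python compares tuples
        # lexicographically, which mirrors strict domination.
        return tuple(candidates[name].get(c, 0) for c in ranking)

    best = min(profile(name) for name in candidates)
    return [name for name in candidates if profile(name) == best]


# Violation counts copied from the tableau above
tableau = {
    "A": {"C1": 1, "C2": 1, "C3": 3},
    "B": {"C1": 1, "C2": 2, "C3": 0},
}

print(eval_optimal(tableau, ["C1", "C2", "C3"]))  # ['A']
print(eval_optimal(tableau, ["C3", "C1", "C2"]))  # ['B']
```

The second call shows that re-ranking alone changes the winner: once C3 is promoted to the top, the three C3 violations of candidate A become fatal.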

Other notational conventions include dotted lines separating columns of unranked or equally ranked constraints, a check mark ✔ in place of a finger in tentatively ranked tableaux (denoting harmonic but not conclusively optimal), and a circled asterisk ⊛ denoting a violation by a winner; in output candidates, the angle brackets ⟨ ⟩ denote segments elided in phonetic realization, and □ and □́ denote an epenthetic consonant and vowel, respectively.[16] The "much greater than" sign ≫ (sometimes the nested ⪢) denotes the domination of a constraint over another ("C1 ≫ C2" = "C1 dominates C2") while the "succeeds" operator ≻ denotes superior harmony in comparison of output candidates ("A ≻ B" = "A is more harmonic than B").[17]

Constraints are ranked in a hierarchy of strict domination. The strictness of strict domination means that a candidate which violates only a high-ranked constraint does worse on the hierarchy than one that does not, even if the second candidate fared worse on every other lower-ranked constraint. This also means that constraints are violable; the winning (i.e. the most harmonic) candidate need not satisfy all constraints, as long as for any rival candidate that does better than the winner on some constraint, there is a higher-ranked constraint on which the winner does better than that rival. Within a language, a constraint may be ranked high enough that it is always obeyed; it may be ranked low enough that it has no observable effects; or, it may have some intermediate ranking. The term the emergence of the unmarked describes situations in which a markedness constraint has an intermediate ranking, so that it is violated in some forms, but nonetheless has observable effects when higher-ranked constraints are irrelevant.

An early example proposed by McCarthy and Prince (1994) is the constraint NoCoda, which prohibits syllables from ending in consonants. In Balangao, NoCoda is not ranked high enough to be always obeyed, as witnessed in roots like taynan (faithfulness to the input prevents deletion of the final /n/). But, in the reduplicated form ma-tayna-taynan 'repeatedly be left behind', the final /n/ is not copied. Under McCarthy and Prince's analysis, this is because faithfulness to the input does not apply to reduplicated material, and NoCoda is thus free to prefer ma-tayna-taynan over hypothetical ma-taynan-taynan (which has an additional violation of NoCoda).

Some optimality theorists prefer the use of comparative tableaux, as described in Prince (2002b). Comparative tableaux display the same information as the classic or "flyspeck" tableaux, but the information is presented in such a way that it highlights the most crucial information. For instance, the tableau above would be rendered in the following way.

| | Constraint 1 | Constraint 2 | Constraint 3 |
|---|---|---|---|
| A ~ B | e | W | L |

Each row in a comparative tableau represents a winner–loser pair, rather than an individual candidate. In the cells where the constraints assess the winner–loser pairs, "W" is placed if the constraint in that column prefers the winner, "L" if the constraint prefers the loser, and "e" if the constraint does not differentiate between the pair. Presenting the data in this way makes it easier to make generalizations. For instance, in order to have a consistent ranking, each row must contain some W-assigning constraint that dominates all of the L-assigning constraints in that row. Brasoveanu and Prince (2005) describe a process known as fusion and the various ways of presenting data in a comparative tableau in order to achieve the necessary and sufficient conditions for a given argument.
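
A comparative row can be derived mechanically from two violation profiles. The sketch below is an illustration only; the function names and the dictionary encoding are assumptions, and the violation counts are those of candidates A and B from the earlier tableau.

```python
def comparative_row(winner, loser, constraints):
    """Build the W/L/e marks for one winner-loser pair."""
    row = []
    for c in constraints:
        w, l = winner.get(c, 0), loser.get(c, 0)
        # Fewer violations for the winner means the constraint prefers the winner.
        row.append("W" if w < l else "L" if w > l else "e")
    return row


def row_satisfied(row):
    """True if, reading from the highest-ranked constraint down,
    the first mark that is not 'e' is a 'W'."""
    for mark in row:
        if mark != "e":
            return mark == "W"
    return False  # no constraint distinguishes the pair


A = {"C1": 1, "C2": 1, "C3": 3}
B = {"C1": 1, "C2": 2, "C3": 0}

print(comparative_row(A, B, ["C1", "C2", "C3"]))  # ['e', 'W', 'L']
print(row_satisfied(comparative_row(A, B, ["C1", "C2", "C3"])))  # True
print(row_satisfied(comparative_row(A, B, ["C3", "C1", "C2"])))  # False
```

The last line shows the same pair under a ranking in which the L-assigning constraint is on top, so A can no longer be the winner.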

Example

As a simplified example, consider the manifestation of the English plural:

- /dɒɡ/ + /z/ → [dɒɡz] (dogs)

- /kæt/ + /z/ → [kæts] (cats)

- /dɪʃ/ + /z/ → [dɪʃɪz] (dishes)

Also consider the following constraint set, in descending order of domination:

| Type | Name | Description |
|---|---|---|
| Markedness | *SS | Two successive sibilants are prohibited. One violation for every pair of adjacent sibilants in the output. |
| Markedness | Agree(Voice) | Output segments agree in specification of [±voice]. One violation for every pair of adjacent obstruents in the output which disagree in voicing. |
| Faithfulness | Max | Maximizes all input segments in the output. One violation for each segment in the input that does not appear in the output. This constraint prevents deletion. |
| Faithfulness | Dep | Output segments are dependent on having an input correspondent. One violation for each segment in the output that does not appear in the input. This constraint prevents insertion. |
| Faithfulness | Ident(Voice) | Maintains the identity of the [±voice] specification. One violation for each segment that differs in voicing between the input and output. |

| | /dɒɡ/ + /z/ | *SS | Agree | Max | Dep | Ident |
|---|---|---|---|---|---|---|
| a. ☞ | dɒɡz | | | | | |
| b. | dɒɡs | | *! | | | * |
| c. | dɒɡɪz | | | | *! | |
| d. | dɒɡɪs | | | | *! | * |
| e. | dɒɡ | | | *! | | |

| | /kæt/ + /z/ | *SS | Agree | Max | Dep | Ident |
|---|---|---|---|---|---|---|
| a. | kætz | | *! | | | |
| b. ☞ | kæts | | | | | * |
| c. | kætɪz | | | | *! | |
| d. | kætɪs | | | | *! | * |
| e. | kæt | | | *! | | |

| | /dɪʃ/ + /z/ | *SS | Agree | Max | Dep | Ident |
|---|---|---|---|---|---|---|
| a. | dɪʃz | *! | * | | | |
| b. | dɪʃs | *! | | | | * |
| c. ☞ | dɪʃɪz | | | | * | |
| d. | dɪʃɪs | | | | * | *! |
| e. | dɪʃ | | | *! | | |
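
The three tableaux can be reproduced with a short program. This is a sketch under several stated assumptions, not a general implementation: the candidate set is the hand-picked five candidates of each tableau rather than the output of a real Gen, correspondence is encoded as (input segment, output segment) pairs with `None` marking deletion or epenthesis, the segment classes cover only the sounds in these three words, and all function names are hypothetical.

```python
SIBILANTS = set("szʃ")
OBSTRUENTS = set("dɡktʃzs")
VOICED = set("dɡz")  # voiced obstruents only; vowels play no role in these constraints


def candidates(stem, suffix="z"):
    """The five candidates of each tableau, as (input, output) segment pairs."""
    base = [(seg, seg) for seg in stem]
    epenthetic = [(None, "ɪ")]
    return [
        base + [(suffix, "z")],
        base + [(suffix, "s")],
        base + epenthetic + [(suffix, "z")],
        base + epenthetic + [(suffix, "s")],
        base + [(suffix, None)],
    ]


def output(cand):
    return "".join(o for _, o in cand if o is not None)


def adjacent(cand):
    out = output(cand)
    return list(zip(out, out[1:]))


def star_ss(cand):
    return sum(a in SIBILANTS and b in SIBILANTS for a, b in adjacent(cand))


def agree(cand):
    return sum(
        a in OBSTRUENTS and b in OBSTRUENTS and (a in VOICED) != (b in VOICED)
        for a, b in adjacent(cand)
    )


def max_io(cand):
    return sum(o is None for _, o in cand)


def dep_io(cand):
    return sum(i is None for i, _ in cand)


def ident_voice(cand):
    return sum(
        i is not None and o is not None and (i in VOICED) != (o in VOICED)
        for i, o in cand
    )


# Constraints in the descending order of domination given in the table above
RANKING = [star_ss, agree, max_io, dep_io, ident_voice]


def winner(stem):
    best = min(candidates(stem), key=lambda c: tuple(con(c) for con in RANKING))
    return output(best)


for stem in ("dɒɡ", "kæt", "dɪʃ"):
    print(stem, "->", winner(stem))
```

With this ranking the sketch selects [dɒɡz], [kæts] and [dɪʃɪz], matching the winners marked in the tableaux.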

No matter how the constraints are re-ordered, the allomorph [ɪs] will always lose to [ɪz]. This is called harmonic bounding. The violations incurred by the candidate [dɒɡɪz] are a proper subset of the violations incurred by [dɒɡɪs]; specifically, once a vowel is epenthesized, changing the voicing of the morpheme is a gratuitous violation of Ident. In the /dɒɡ/ + /z/ tableau, there is a candidate [dɒɡz] which incurs no violations whatsoever. Within the constraint set of the problem, [dɒɡz] harmonically bounds all other possible candidates. The case of [dɒɡɪz] and [dɒɡɪs] shows that a candidate does not need to be a winner in order to harmonically bound another candidate.
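
Harmonic bounding can be checked directly on violation profiles, and its consequence can be confirmed by trying every ranking. The sketch below is illustrative: the profiles are entered by hand from the constraint definitions for /dɒɡ/ + /z/, and the function names are hypothetical.

```python
from itertools import permutations

CONSTRAINTS = ["*SS", "Agree", "Max", "Dep", "Ident"]

# Violations per constraint, in the order of CONSTRAINTS
PROFILES = {
    "dɒɡz": (0, 0, 0, 0, 0),
    "dɒɡs": (0, 1, 0, 0, 1),
    "dɒɡɪz": (0, 0, 0, 1, 0),
    "dɒɡɪs": (0, 0, 0, 1, 1),
    "dɒɡ": (0, 0, 1, 0, 0),
}


def harmonically_bounds(a, b):
    """True if profile a is never worse than b and strictly better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def winners_over_all_rankings(profiles):
    """Collect the winner under each of the 5! total rankings."""
    found = set()
    for order in permutations(range(len(CONSTRAINTS))):
        found.add(min(profiles, key=lambda name: tuple(profiles[name][i] for i in order)))
    return found


print(harmonically_bounds(PROFILES["dɒɡɪz"], PROFILES["dɒɡɪs"]))  # True
print(winners_over_all_rankings(PROFILES))  # {'dɒɡz'}

# Even with the violation-free candidate removed, [dɒɡɪs] never wins.
rest = {name: p for name, p in PROFILES.items() if name != "dɒɡz"}
print(winners_over_all_rankings(rest))
```

Removing [dɒɡz] lets three different candidates win under different rankings, but [dɒɡɪs] is not among them, because [dɒɡɪz] bounds it without itself being the winner of the full tableau.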

The tableaux from above are repeated below using the comparative tableaux format.

| /dɒɡ/ + /z/ | *SS | Agree | Max | Dep | Ident |
|---|---|---|---|---|---|
| dɒɡz ~ dɒɡs | e | W | e | e | W |
| dɒɡz ~ dɒɡɪz | e | e | e | W | e |
| dɒɡz ~ dɒɡɪs | e | e | e | W | W |
| dɒɡz ~ dɒɡ | e | e | W | e | e |

| /kæt/ + /z/ | *SS | Agree | Max | Dep | Ident |
|---|---|---|---|---|---|
| kæts ~ kætz | e | W | e | e | L |
| kæts ~ kætɪz | e | e | e | W | L |
| kæts ~ kætɪs | e | e | e | W | e |
| kæts ~ kæt | e | e | W | e | L |

| /dɪʃ/ + /z/ | *SS | Agree | Max | Dep | Ident |
|---|---|---|---|---|---|
| dɪʃɪz ~ dɪʃz | W | W | e | L | e |
| dɪʃɪz ~ dɪʃs | W | e | e | L | W |
| dɪʃɪz ~ dɪʃɪs | e | e | e | e | W |
| dɪʃɪz ~ dɪʃ | e | e | W | L | e |

From the comparative tableau for /dɒɡ/ + /z/, it can be observed that any ranking of these constraints will produce the observed output [dɒɡz]. Because there are no loser-preferring comparisons, [dɒɡz] wins under any ranking of these constraints; this means that no ranking can be established on the basis of this input.

The tableau for /kæt/ + /z/ contains rows with a single W and a single L. This shows that Agree, Max, and Dep must all dominate Ident; however, no ranking can be established between those constraints on the basis of this input. Based on this tableau, the following ranking has been established:

- Agree, Max, Dep ≫ Ident

The tableau for /dɪʃ/ + /z/ shows that several more rankings are necessary in order to predict the desired outcome. The third row says nothing; there is no loser-preferring comparison in the third row. The first row reveals that either *SS or Agree must dominate Dep, based on the comparison between [dɪʃɪz] and [dɪʃz]. The fourth row shows that Max must dominate Dep. The second row shows that either *SS or Ident must dominate Dep. From the /kæt/ + /z/ tableau, it was established that Dep dominates Ident; this means that *SS must dominate Dep.

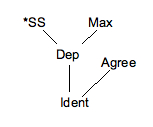

So far, the following rankings have been shown to be necessary:

- *SS, Max ≫ Dep ≫ Ident

While it is possible that Agree can dominate Dep, it is not necessary; the ranking given above is sufficient for the observed [dɪʃɪz] to emerge.

When the rankings from the tableaux are combined, the following ranking summary can be given:

- *SS, Max ≫ Agree, Dep ≫ Ident

- or

- *SS, Max, Agree ≫ Dep ≫ Ident

There are two possible places to put Agree when writing out rankings linearly; neither is truly accurate. The first implies that *SS and Max must dominate Agree, and the second implies that Agree must dominate Dep. Neither implication holds, which is a shortcoming of writing out rankings linearly. For this reason, most linguists use a lattice graph to represent necessary and sufficient rankings.

A diagram that represents the necessary rankings of constraints in this style is a Hasse diagram.
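
The ranking argument of this section can be checked by brute force over all 120 total rankings of the five constraints. The following is a sketch of that check, not a standard learning algorithm: the rows are the L-containing rows of the comparative tableaux above, entered by hand as strings, and the function names are hypothetical. The edges of the Hasse diagram are obtained as the transitive reduction of the dominations that hold in every consistent ranking.

```python
from itertools import permutations

CONSTRAINTS = ["*SS", "Agree", "Max", "Dep", "Ident"]

# Comparative rows containing at least one L, in the order of CONSTRAINTS
ROWS = [
    "eWeeL",  # kæts ~ kætz
    "eeeWL",  # kæts ~ kætɪz
    "eeWeL",  # kæts ~ kæt
    "WWeLe",  # dɪʃɪz ~ dɪʃz
    "WeeLW",  # dɪʃɪz ~ dɪʃs
    "eeWLe",  # dɪʃɪz ~ dɪʃ
]


def consistent(order, row):
    """The highest-ranked constraint that distinguishes the pair must be a W."""
    for i in order:
        if row[i] != "e":
            return row[i] == "W"
    return True


rankings = [
    order
    for order in permutations(range(len(CONSTRAINTS)))
    if all(consistent(order, row) for row in ROWS)
]


def necessary_dominations(rankings):
    """Pairs (a, b) such that a dominates b in every consistent ranking."""
    n = len(CONSTRAINTS)
    return {
        (CONSTRAINTS[a], CONSTRAINTS[b])
        for a in range(n)
        for b in range(n)
        if a != b and all(r.index(a) < r.index(b) for r in rankings)
    }


def hasse_edges(pairs):
    """Transitive reduction: drop a ≫ c when some b has a ≫ b and b ≫ c."""
    return {
        (a, c)
        for a, c in pairs
        if not any((a, b) in pairs and (b, c) in pairs for b in CONSTRAINTS)
    }


print(len(rankings))
for upper, lower in sorted(hasse_edges(necessary_dominations(rankings))):
    print(upper, "≫", lower)
```

Eight total rankings are consistent with the data, and the edges that remain are *SS ≫ Dep, Max ≫ Dep, Dep ≫ Ident and Agree ≫ Ident, with Agree unranked relative to *SS, Max and Dep.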

Criticism

Optimality theory has attracted substantial amounts of criticism, most of which is directed at its application to phonology (rather than syntax or other fields).[18][19][20][21][22][23]

It is claimed that OT cannot account for phonological opacity (see Idsardi 2000, for example). In derivational phonology, effects that are inexplicable at the surface level but are explainable through "opaque" rule ordering may be seen; but in OT, which has no intermediate levels for rules to operate on, these effects are difficult to explain.

For example, in Quebec French, high front vowels triggered affrication of /t/ (e.g. /tipik/ → [tˢpɪk]), but the loss of high vowels (visible at the surface level) has left the affrication with no apparent source. Derivational phonology can explain this by stating that vowel syncope (the loss of the vowel) "counterbled" affrication: rather than syncope applying first and "bleeding" (i.e. preventing) affrication, affrication applies before syncope, which then removes the high vowel and so destroys the environment that had triggered affrication. Such counterbleeding rule orderings are therefore termed opaque (as opposed to transparent), because the environment that conditioned the process is not visible at the surface level.

The opacity of such phenomena finds no straightforward explanation in OT, since theoretical intermediate forms are not accessible (constraints refer only to the surface form and/or the underlying form). There have been a number of proposals designed to account for it, but most of the proposals significantly alter OT's basic architecture and therefore tend to be highly controversial. Frequently, such alterations add new types of constraints (which are not universal faithfulness or markedness constraints), or change the properties of Gen (such as allowing for serial derivations) or Eval. Examples of these include John J. McCarthy's sympathy theory and candidate chains theory.

A relevant issue is the existence of circular chain shifts, i.e. cases where input /X/ maps to output [Y], but input /Y/ maps to output [X]. Many versions of OT predict this to be impossible (see Moreton 2004, Prince 2007).

Optimality theory is also criticized as being an impossible model of speech production/perception: computing and comparing an infinite number of possible candidates would take an infinitely long time to process. Idsardi (2006) argues this position, though other linguists dispute this claim on the grounds that Idsardi makes unreasonable assumptions about the constraint set and candidates, and that more moderate instantiations of OT do not present such significant computational problems (see Kornai (2006) and Heinz, Kobele and Riggle (2009)).[24][25] Another common rebuttal to this criticism of OT is that the framework is purely representational. In this view, OT is taken to be a model of linguistic competence and is therefore not intended to explain the specifics of linguistic performance.[26][27]

Another objection to OT is that it is not technically a theory, in that it does not make falsifiable predictions. The source of this issue may be in terminology: the term theory is used differently here than in physics, chemistry, and other sciences. Specific instantiations of OT may make falsifiable predictions, in the same way specific proposals within other linguistic frameworks can. What predictions are made, and whether they are testable, depends on the specifics of individual proposals (most commonly, this is a matter of the definitions of the constraints used in an analysis). Thus, OT as a framework is best described as a scientific paradigm.[28]

Theories within optimality theory

In practice, implementations of OT often make use of many concepts of phonological theories of representations, such as the syllable, the mora, or feature geometry. Completely distinct from these, there are sub-theories which have been proposed entirely within OT, such as positional faithfulness theory, correspondence theory (McCarthy and Prince 1995), sympathy theory, stratal OT, and a number of theories of learnability, most notably by Bruce Tesar. Other theories within OT are concerned with issues like the need for derivational levels within the phonological domain, the possible formulations of constraints, and constraint interactions other than strict domination.

Use outside of phonology

Optimality theory is most commonly associated with the field of phonology, but has also been applied to other areas of linguistics. Jane Grimshaw, Géraldine Legendre and Joan Bresnan have developed instantiations of the theory within syntax.[29][30] Optimality theoretic approaches are also relatively prominent in morphology (and the morphology–phonology interface in particular).[31][32]

In the domain of semantics, OT is less commonly used. But constraint-based systems have been developed to provide a formal model of interpretation.[33] OT has also been used as a framework for pragmatics.[34]

For orthography, constraint-based analyses have also been proposed by, among others, Richard Wiese[35] and Silke Hamann and Ilaria Colombo.[36] The constraints cover both the relations between sound and letter and preferences for spelling itself.

Notes

1. "Optimality". Proceedings of the talk given at Arizona Phonology Conference, University of Arizona, Tucson, Arizona.

2. Prince, Alan, and Smolensky, Paul (1993) "Optimality Theory: Constraint interaction in generative grammar." Technical Report CU-CS-696-93, Department of Computer Science, University of Colorado at Boulder.

3. Kager (1999), p. 20.

4. Prince, Alan; Smolensky, Paul (2004). Optimality Theory: Constraint Interaction in Generative Grammar. Malden, MA: Blackwell Pub. ISBN 978-0-470-75940-0. OCLC 214281882.

5. Merchant, Nazarré; Riggle, Jason (2016-02-01). "OT grammars, beyond partial orders: ERC sets and antimatroids". Natural Language & Linguistic Theory. 34 (1): 241–269. doi:10.1007/s11049-015-9297-5. ISSN 1573-0859. S2CID 254861452.

6. Ellison, T. Mark; Klein, Ewan (2001), "Review: The Best of All Possible Words (review of Optimality Theory: An Overview, Archangeli, Diana & Langendoen, D. Terence, eds., Blackwell, 1997)", Journal of Linguistics, 37 (1): 127–143, JSTOR 4176645.

7. Prince & Smolensky (1993), p. 94.

8. McCarthy (2008), p. 27.

9. McCarthy (2008), p. 209.

10. Kager (1999), pp. 99–100.

11. McCarthy (2008), p. 224.

12. Kager (1999), pp. 29–30.

13. Kager (1999), pp. 392–400.

14. McCarthy (2008), pp. 214–20.

15. Frank, Robert; Satta, Giorgio (1998). "Optimality theory and the generative complexity of constraint violability". Computational Linguistics. 24 (2): 307–315. Retrieved 5 September 2021.

16. Tesar & Smolensky (1998), pp. 230–1, 239.

17. McCarthy (2001), p. 247.

18. Chomsky (1995)

19. Dresher (1996)

20. Hale & Reiss (2008)

21. Halle (1995)

22. Idsardi (2000)

23. Idsardi (2006)

24. Heinz, Jeffrey; Kobele, Gregory M.; Riggle, Jason (April 2009). "Evaluating the Complexity of Optimality Theory". Linguistic Inquiry. 40 (2): 277–288. doi:10.1162/ling.2009.40.2.277. ISSN 0024-3892. S2CID 14131378.

25. Kornai, András (2006). "Is OT NP-hard?" (PDF).

26. Kager, René (1999). Optimality Theory. Section 1.4.4: Fear of infinity, pp. 25–27.

27. Prince, Alan and Paul Smolensky. (2004): Optimality Theory: Constraint Interaction in Generative Grammar. Section 10.1.1: Fear of Optimization, pp. 215–217.

28. de Lacy (editor). (2007). The Cambridge Handbook of Phonology, p. 1.

29. McCarthy, John (2001). A Thematic Guide to Optimality Theory, Chapter 4: "Connections of Optimality Theory".

30. Legendre, Grimshaw & Vikner (2001)

31. Trommer (2001)

32. Wolf (2008)

33. Hendriks, Petra, and Helen De Hoop. "Optimality theoretic semantics". Linguistics and Philosophy 24.1 (2001): 1–32.

34. Blutner, Reinhard; Bezuidenhout, Anne; Breheny, Richard; Glucksberg, Sam; Happé, Francesca (2003). Optimality Theory and Pragmatics. Springer. ISBN 978-1-349-50764-1.

35. Wiese, Richard (2004). "How to optimize orthography". Written Language and Literacy. 7 (2): 305–331. doi:10.1075/wll.7.2.08wie.

36. Hamann, Silke; Colombo, Ilaria (2017). "A formal account of the interaction of orthography and perception". Natural Language & Linguistic Theory. 35 (3): 683–714. doi:10.1007/s11049-017-9362-3. hdl:11245.1/bab74c16-4f58-4b1f-9507-cd51fbd6ae49. S2CID 254872721.

References

- Brasoveanu, Adrian, and Alan Prince (2005). Ranking & Necessity. ROA-794.

- Chomsky (1995). The Minimalist Program. Cambridge, Massachusetts: The MIT Press.

- Dresher, Bezalel Elan (1996): The Rise of Optimality Theory in First Century Palestine. GLOT International 2, 1/2, January/February 1996, page 8 (a humorous introduction for novices)

- Hale, Mark, and Charles Reiss (2008). The Phonological Enterprise. Oxford University Press.

- Halle, Morris (1995). Feature Geometry and Feature Spreading. Linguistic Inquiry 26, 1-46.

- Heinz, Jeffrey, Greg Kobele, and Jason Riggle (2009). Evaluating the Complexity of Optimality Theory. Linguistic Inquiry 40, 277–288.

- Idsardi, William J. (2006). A Simple Proof that Optimality Theory is Computationally Intractable. Linguistic Inquiry 37:271-275.

- Idsardi, William J. (2000). Clarifying opacity. The Linguistic Review 17:337-50.

- Kager, René (1999). Optimality Theory. Cambridge: Cambridge University Press.

- Kornai, Andras (2006). Is OT NP-hard?. ROA-838.

- Legendre, Géraldine, Jane Grimshaw and Sten Vikner. (2001). Optimality-theoretic Syntax. MIT Press.

- McCarthy, John (2001). A Thematic Guide to Optimality Theory. Cambridge: Cambridge University Press.

- McCarthy, John (2007). Hidden Generalizations: Phonological Opacity in Optimality Theory. London: Equinox.

- McCarthy, John (2008). Doing Optimality Theory: Applying Theory to Data. Blackwell.

- McCarthy, John and Alan Prince (1993): Prosodic Morphology: Constraint Interaction and Satisfaction. Rutgers University Center for Cognitive Science Technical Report 3.

- McCarthy, John and Alan Prince (1994): The Emergence of the Unmarked: Optimality in Prosodic Morphology. Proceedings of NELS.

- McCarthy, John J. & Alan Prince. (1995). Faithfulness and reduplicative identity. In J. Beckman, L. W. Dickey, & S. Urbanczyk (Eds.), University of Massachusetts occasional papers in linguistics (Vol. 18, pp. 249–384). Amherst, Massachusetts: GLSA Publications.

- Merchant, Nazarre & Jason Riggle. (2016) OT grammars, beyond partial orders: ERC sets and antimatroids. Nat Lang Linguist Theory, 34: 241. doi:10.1007/s11049-015-9297-5

- Moreton, Elliott (2004): Non-computable Functions in Optimality Theory. Ms. from 1999, published 2004 in John J. McCarthy (ed.), Optimality Theory in Phonology.

- Pater, Joe. (2009). Weighted Constraints in Generative Linguistics. Cognitive Science 33, 999–1035.

- Prince, Alan (2007). The Pursuit of Theory. In Paul de Lacy, ed., Cambridge Handbook of Phonology.

- Prince, Alan (2002a). Entailed Ranking Arguments. ROA-500.

- Prince, Alan (2002b). Arguing Optimality. In Coetzee, Andries, Angela Carpenter and Paul de Lacy (eds). Papers in Optimality Theory II. GLSA, UMass. Amherst. ROA-536.

- Prince, Alan and Paul Smolensky. (1993/2002/2004): Optimality Theory: Constraint Interaction in Generative Grammar. Blackwell Publishers (2004). Technical Report, Rutgers University Center for Cognitive Science and Computer Science Department, University of Colorado at Boulder (1993).

- Tesar, Bruce and Paul Smolensky (1998). Learnability in Optimality Theory. Linguistic Inquiry 29(2): 229–268.

- Trommer, Jochen. (2001). Distributed Optimality. PhD dissertation, Universität Potsdam.

- Wolf, Matthew. (2008). Optimal Interleaving: Serial Phonology-Morphology Interaction in a Constraint-Based Model. PhD dissertation, University of Massachusetts. ROA-996.