Help:Creating a bot

Robots, or bots, are automatic processes that interact with Wikipedia as though they were human editors. This article explains how to develop a bot for use on Wikipedia.

Why would I need to create a bot?

Bots can automate tasks and perform them much faster than humans. If you have a simple task that you need to perform many times (for example, adding a template to all pages in a category with 1000 pages), then the task is better suited to a bot than to a human.

Do I have to create it myself?

This help page is mainly geared towards those who have some prior programming experience but are unsure of how to apply this knowledge to creating a Wikipedia bot.

If you lack any previous programming experience, it might be better to have others develop a bot for you; if so, please make a post to the bot requests page instead. Alternatively, you could become an additional operator for one of the existing semi-bots (note that the page listing them is currently unmaintained). If you want to have a stab at making your own bot anyway, feel free to read the rest of this article.

If you have no prior programming experience whatsoever, you should be warned that learning a programming language is a non-trivial task. However, it is not black magic and anyone can learn how to program with sufficient time and effort.

How does a Wikipedia bot work?


Overview of operation

Just like a human editor, a Wikipedia bot can read Wikipedia content, make decisions based on what it has read, and then edit Wikipedia content. However, computers are better than humans at some tasks and worse at others. They can read and write several pages per second, but they are much worse than humans at making value judgements based on page contents.

Like a human editor, a typical bot operates by making one or more GET requests to Wikipedia over HTTP (just as a human editor does with a browser), making calculations on the data returned by the server, and then making further requests based on the results of those calculations.

For example, if a bot wants to get the list of pages that link to a specific page, it asks the server for Special:Whatlinkshere. The server responds with generated HTML, and the bot then acts depending on what that HTML contains.
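The read, calculate, write cycle described above can be sketched in Python. This is a minimal illustration under stated assumptions: `fetch` and `save` are hypothetical callables standing in for your bot's real HTTP code. The decision step prepends a hypothetical `{{Example}}` template when it is missing, echoing the category example earlier, and is only a placeholder for your own logic.

```python
from typing import Callable, Iterable, Optional

def decide_edit(wikitext: str, template: str) -> Optional[str]:
    """Return the new page text, or None if no edit is needed."""
    if template in wikitext:
        return None  # already tagged: skip the (expensive) write request
    return template + "\n" + wikitext

def run(titles: Iterable[str],
        fetch: Callable[[str], str],
        save: Callable[[str, str], None],
        template: str) -> int:
    """Read each page, decide locally, and write only when needed.

    Returns the number of edits made.
    """
    edits = 0
    for title in titles:
        text = fetch(title)                     # read request
        new_text = decide_edit(text, template)  # local calculation
        if new_text is not None:
            save(title, new_text)               # write request
            edits += 1
    return edits

# Usage with in-memory stand-ins instead of real HTTP calls
pages = {"A": "Some text", "B": "{{Example}}\nOther text"}
saved = {}
edits = run(pages, pages.__getitem__, saved.__setitem__, "{{Example}}")
```

Keeping the decision in a pure function makes it easy to test without touching the servers.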

APIs for bots

An API (Application Programming Interface) describes how a bot should interact with Wikipedia.

The most common approach is probably screen scraping. Screen scraping involves requesting a Wikipedia page, looking at the raw HTML (just as a human could by clicking View->Source in most browsers), and then extracting certain values from that HTML. It is not recommended, because the interface may change at any moment and it puts a heavy load on the servers. However, it is currently the only way to get an HTML-formatted wiki page. Scraping the edit page and making an HTTP POST submission based on the data from the edit form is also currently the only way to perform edits.
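The following sketch shows screen scraping with Python's standard `html.parser`. It pulls the `title` attribute out of every link in a page's HTML. This assumes the links you care about carry a `title` attribute. That is typical of MediaWiki's HTML output, but it is exactly the kind of detail that may change without notice. `scrape_link_titles` is a hypothetical helper name.

```python
from html.parser import HTMLParser

class LinkTitleScraper(HTMLParser):
    """Collect the title="..." attribute of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            attributes = dict(attrs)  # attrs is a list of (name, value) pairs
            if attributes.get("title"):
                self.titles.append(attributes["title"])

def scrape_link_titles(html: str) -> list:
    """Return the link titles found in an HTML document, in page order."""
    parser = LinkTitleScraper()
    parser.feed(html)
    parser.close()
    return parser.titles
```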

You can pass action=render to index.php to reduce the amount of data transferred and the dependence on the user interface. Other parameters of index.php may also be useful: Manual:Parameters to index.php is a partial list of these parameters and their possible values.

Other APIs that may be of use:

- MediaWiki API extension - the next-generation API, which may one day be expanded to include data posting as well as various data requests. A number of features have already been implemented, such as revisions with content, log events and watchlists. Data is available in several formats (JSON, XML, YAML, ...). Features are being ported from the older Query API interface.

- Status: Partially complete engine feature, available on all Wikimedia projects.

- Query API -- a multi-format API to query data directly from the wiki servers

- Status: Production. This is an extension enabled on all Wikimedia servers.

- Special:Export feature (bulk export of XML-formatted data); see Parameters_to_Special:Export for arguments.

- Status: Production. Built-in engine feature, available on all Wikimedia servers.

- Raw page: passing action=raw or action=raw&templates=expand to index.php gives direct access to the source of pages.
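The index.php `action` values mentioned above (`render`, `raw`, and `raw` with `templates=expand`) are ordinary query-string parameters. A bot can therefore build these URLs with `urllib.parse.urlencode`. This is a minimal sketch. The base URL is the English Wikipedia one used elsewhere on this page, and `index_url` is a hypothetical helper name. `urlencode` percent-encodes the colon in the title as `%3A`, and the server decodes it again.

```python
from urllib.parse import urlencode

INDEX_PHP = "http://en.wikipedia.org/w/index.php"

def index_url(title: str, action: str, **extra: str) -> str:
    """Build an index.php URL such as ...?title=X&action=raw."""
    params = {"title": title, "action": action}
    params.update(extra)  # e.g. templates="expand"
    return INDEX_PHP + "?" + urlencode(params)

raw_url = index_url("Wikipedia:Creating_a_bot", "raw")
expanded_url = index_url("Wikipedia:Creating_a_bot", "raw", templates="expand")
render_url = index_url("Wikipedia:Creating_a_bot", "render")
```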

The Wikipedia web servers are configured to grant requests for compressed (gzip) content. To use this, include the line "Accept-Encoding: gzip" in the HTTP request header. If the HTTP reply header contains "Content-Encoding: gzip", the document is gzip-compressed; otherwise it is in the regular uncompressed form. Not checking the returned header may lead to incorrect results. Note that this is a feature of the web server, not of the MediaWiki software, so other sites running MediaWiki may not have it.
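The header check described above can be sketched with Python's standard library. Note that `urllib.request` does not decompress responses by itself, so the bot must look at `Content-Encoding` and decompress only when it says `gzip`. `decode_body` and `fetch` are hypothetical helper names.

```python
import gzip
import urllib.request

def decode_body(body: bytes, content_encoding) -> bytes:
    """Decompress the body only if the server says it sent gzip."""
    if content_encoding and content_encoding.strip().lower() == "gzip":
        return gzip.decompress(body)
    return body  # plain, uncompressed response

def fetch(url: str) -> bytes:
    """GET a URL, advertising gzip support, and return the decoded body."""
    request = urllib.request.Request(url, headers={"Accept-Encoding": "gzip"})
    with urllib.request.urlopen(request) as response:
        return decode_body(response.read(),
                           response.headers.get("Content-Encoding"))
```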

Logging in as your bot when making edits

An approved bot must be logged in under its own user account before making edits. A bot is free to make read requests without logging in, but ideally it should log in for all activities. One way to log in is to send a POST request to a URL like http://en.wikipedia.org/w/index.php?title=Special:Userlogin&action=submitlogin&type=login

passing the POST data wpName=BOTUSERNAME&wpPassword=BOTPASSWORD&wpRemember=1&wpLoginattempt=Log+in

Logging in is also possible through the API, using a URL like http://en.wikipedia.org/w/api.php?action=login&lgname=BOTUSERNAME&lgpassword=BOTPASSWORD. For security reasons, it is safer to POST lgname=BOTUSERNAME&lgpassword=BOTPASSWORD to a URL like http://en.wikipedia.org/w/api.php?action=login, so that the password does not appear in the URL.
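The safer form of the API login described above can be built with `urllib.request`. The credentials travel in the POST body, and only `action=login` appears in the URL. This is a sketch of the text's description only, and `login_request` is a hypothetical helper name. Newer MediaWiki versions also require a login token obtained in a prior request, so do not expect this single request to log in to a current wiki.

```python
import urllib.request
from urllib.parse import urlencode

# URL from the text; the credentials go in the POST body, not the query string.
LOGIN_URL = "http://en.wikipedia.org/w/api.php?action=login"

def login_request(name: str, password: str) -> urllib.request.Request:
    """Build (but do not send) the login POST request."""
    body = urlencode({"lgname": name, "lgpassword": password}).encode("utf-8")
    return urllib.request.Request(
        LOGIN_URL,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```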

Once logged in, the bot needs to save the Wikipedia cookies it receives and pass them back when making edit requests. When editing on the English Wikipedia, these three cookies should be used: enwikiUserID, enwikiToken, and enwikiUserName. The enwiki_session cookie is also required to actually make an edit (or commit some other change); without it, the server rejects the edit with an error message.
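In Python, the cookie handling described above can be delegated to a cookie jar. The opener stores every cookie the server sets and sends those cookies back automatically on later requests. This is a minimal sketch. The cookie names are the English Wikipedia ones listed in the text, and `make_opener` and `missing_cookies` are hypothetical helper names. Use the same opener for the login request and for all later edit requests.

```python
import http.cookiejar
import urllib.request

# Cookie names from the text (English Wikipedia).
REQUIRED = ("enwikiUserID", "enwikiToken", "enwikiUserName", "enwiki_session")

def make_opener():
    """An opener that stores cookies from responses and sends them back."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar

def missing_cookies(jar) -> list:
    """List the required cookies that the jar does not (yet) contain."""
    names = {cookie.name for cookie in jar}
    return [name for name in REQUIRED if name not in names]
```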

See Hypertext Transfer Protocol and HTTP cookie for more details.

Edit tokens

Wikipedia uses a system of edit tokens for making edits to Wikipedia pages and performing some other operations.

What this means is that it is not possible for your bot to pass a single POST request via HTTP to make a page edit. Just like a human editor, it is necessary for the bot to go through a number of stages:

- Request the page using a URL such as http://en.wikipedia.org/w/index.php?title=Wikipedia:Creating_a_bot&action=edit. The bot is returned an edit page with a text field. It is also returned (and this is important) an edit token, as a hidden input field in the HTML form. The edit token is of the approximate form b66655fjr7fd5drr3411ss23456s65eg. The edit token is associated with the current PHP session (identified by the enwiki_session token); as long as the session lasts (typically some hours), the same token can be used for editing other pages.

- The bot must then make a "write my edit to the page" request, passing back the edit token it has been issued.
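The first step above requires pulling the edit token out of the edit form. The sketch below does this with Python's `html.parser` and collects every hidden input field. Returning all of them is handy because the form also carries fields such as wpEdittime and wpStarttime, which must be sent back unchanged. It assumes the token field is named `wpEditToken`, as in MediaWiki's edit form. `edit_form_fields` is a hypothetical helper name.

```python
from html.parser import HTMLParser

class HiddenFieldParser(HTMLParser):
    """Collect name -> value for every <input type="hidden"> in a page."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            a = dict(attrs)
            if (a.get("type") or "").lower() == "hidden" and a.get("name"):
                self.fields[a["name"]] = a.get("value") or ""

def edit_form_fields(html: str) -> dict:
    """Return the hidden form fields (including wpEditToken) of an edit page."""
    parser = HiddenFieldParser()
    parser.feed(html)
    parser.close()
    return parser.fields
```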

Why am I being returned an empty or near-empty edit token?

Your bot may be returned an empty edit token or the edit token "+\". This indicates that the bot is not logged in; this may be due to a failure in its authentication with the server, or a failure in storing and returning the correct cookies.
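Because an empty or bare "+\" token means the bot is not logged in, it is worth refusing to submit an edit with such a token. The sketch below does that. `NotLoggedInError` and `require_valid_token` are hypothetical names.

```python
class NotLoggedInError(RuntimeError):
    """Raised when the server hands out an anonymous (empty) edit token."""

def require_valid_token(token):
    """Return the token, or raise if it shows the bot is not logged in."""
    if not token or token == "+\\":  # "+\" on its own is the anonymous token
        raise NotLoggedInError("empty edit token: check login and cookie handling")
    return token
```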

Edit conflicts

There is a good chance that your bot will get caught in an edit conflict, in which another user makes an edit between your bot requesting the edit page (and getting its edit token) and actually submitting its edit. Another possible conflict is an edit/delete conflict, where the page is deleted between the time the edit form is downloaded and the time it is submitted.

An edit conflict error may incorrectly result if the value of the wpEdittime variable in the data sent to the server is wrong. In a similar way, a wrong value of wpStarttime may result in an edit/delete conflict error.

Unfortunately, you have to detect edit conflicts by inspecting the server's response to your submission. Generally, if the server returns a "200 OK" HTTP status when the form is submitted, the edit has not been made. The cause may be a conflict, a lost session token, page protection, or a database lock. On a successful edit, the server returns "302 Moved Temporarily", with the page URL in the Location header.
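By default, `urllib.request` silently follows redirects, which would hide the 302 that signals success. The sketch below installs a redirect handler that declines to follow. The 302 then surfaces as an `HTTPError` whose status code the bot can inspect. The mapping from status to outcome follows the text above. `NoRedirect`, `edit_outcome` and `submit_edit` are hypothetical names. A 200 still needs the HTML checked to tell a conflict from the other failure causes.

```python
import urllib.error
import urllib.request

class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Decline to follow redirects so the 302 itself is visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # urllib then raises HTTPError with the 3xx code

def make_no_redirect_opener():
    return urllib.request.build_opener(NoRedirect)

def edit_outcome(status: int) -> str:
    if status == 302:
        return "saved"        # success: Location points at the page
    if status == 200:
        return "not saved"    # conflict, lost session, protection or lock
    return "error"

def submit_edit(opener, request) -> str:
    """Send the edit form and classify the result by HTTP status."""
    try:
        with opener.open(request) as response:
            return edit_outcome(response.status)
    except urllib.error.HTTPError as err:  # the unfollowed 302 arrives here
        return edit_outcome(err.code)
```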

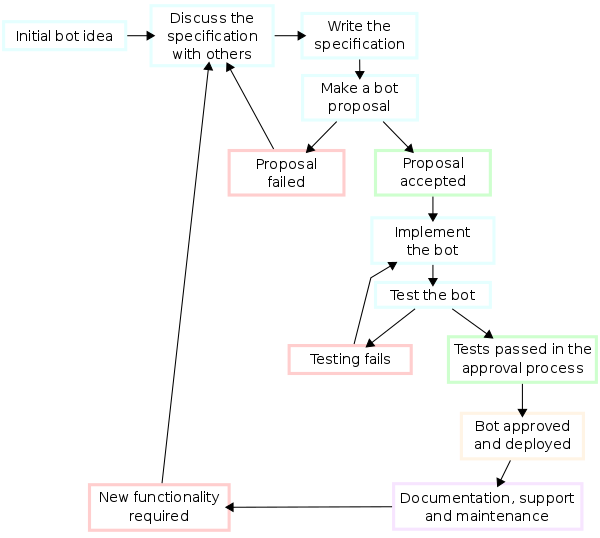

Overview of the process of developing a bot

Actually coding or writing a bot is only one part of developing a bot. You should generally follow the development cycle below. Failure to comply with this development cycle, particularly the sections on Wikipedia bot policy, may lead to your bot failing to be approved or being blocked from editing Wikipedia.

Software Elements Analysis:

- The first task in creating a Wikipedia bot is extracting the requirements or coming up with an idea. If you don't have an idea of what to write a bot for, you could pick up ideas at requests for work to be done by a bot.

- Make sure an existing bot isn't already doing what you think your bot should do. To see what tasks are already being performed by a bot, see the list of currently operating bots.

Specification:

- Specification is the task of precisely describing the software to be written, possibly in a rigorous way. You should come up with a detailed proposal of what you want it to do. Try to discuss this proposal with some editors and refine it based on feedback. Even a great idea can be made better by incorporating ideas from other editors.

- In the most basic form, your specified bot must meet the following criteria:

- The bot is harmless (it must not make edits that could be considered vandalism)

- The bot is useful (it provides a useful service more effectively than a human editor could), and

- The bot does not waste server resources.

- Make sure your proposal meets the criteria of the Wikipedia bot policy.

Software architecture:

- Think about how you might create it and which programming language and tools you would use. Architecture is concerned with making sure the software system will meet the requirements of the product as well as ensuring that future requirements can be addressed. There are different types of bots and the main body of the article below will cover this technical side.

Implementation:

Implementation (or coding) involves reducing design to code. It may be the most obvious part of the software engineering job but it is not necessarily the largest portion. In the implementation stage you should:

- Create a user page for your bot. Your bot's edits must not be made under your own account. Your bot will need its own account with its own username and password.

- Add these details to your proposal and post it to requests for bot approval

- Add the same information to the user page of the bot. You should also add a link to the approval page (whether approved or not) for each function. People will comment on your proposal and it will be either accepted or rejected.

- Code your bot in your chosen programming language.

Testing:

If your proposal is accepted, the bot will probably be given a trial period during which it may be run to fine-tune it and iron out any bugs. You should test your bot widely and ensure that it works correctly. At the end of the trial period, it will hopefully be approved.

Documentation:

An important (and often overlooked) task is documenting the internal design of your bot for the purpose of future maintenance and enhancement. This is especially important if you are going to allow clones of your bot. Ideally, if you want others to be able to run clones of it, you should publish the source code of your bot on its user page. This code should be well documented for ease of use.

Software Training and Support:

You should be ready to field queries or objections to your bot on your user talk page.

Maintenance:

Maintaining and enhancing your bot to cope with newly discovered problems or new requirements can take far more time than the initial development of the software. Not only may it be necessary to add code that does not fit the original design but just determining how software works at some point after it is completed may require significant effort.

- If you want to make a major functionality change to your bot in the future, you should request this as above using the requests for bot approval.

Considerations about type of bot to be developed

- Is it to run server-side or client-side?

- Is it to be manually assisted or fully automated?

- Will its requests be logged?

- Will it be reporting its actions to a human?

General guidelines for running a bot

In addition to the official bot policy, which covers the main points to consider when developing your bot, there are a number of more general advisory points to keep in mind.

Bot best practice

- Try not to make more than 10 requests (read and write added together) per minute.

- Try to run the bot only at low server load times, or throttle the read/write request rate down at busy server times.

- Server lag can be checked with the maxlag parameter.

- Edit/write requests are more expensive in server time than read requests. Be edit-light.

- Do not make multi-threaded requests. Wait for one server request to complete before beginning another.

- Back off on receiving errors from the server. Errors are often an indication of heavy server load, so respond by backing off rather than repeatedly hammering the server with replacement requests.

- Try to consolidate edits. One single large edit is better than 10 smaller ones, so try to write your code with this in mind.
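Two of the practices above, checking server lag with maxlag and backing off on errors, can be sketched in Python. `with_maxlag` adds the maxlag parameter to a request's parameters. The value 5 seconds is an example only. How the server signals a maxlag refusal depends on the entry point, so check the maxlag documentation. `get_with_backoff` retries a failed read with exponentially growing pauses instead of hammering the server. The delay schedule is an illustrative assumption, and all function names are hypothetical.

```python
import time
import urllib.error
import urllib.request

def with_maxlag(params: dict, maxlag: int = 5) -> dict:
    """Ask the server to refuse the request if replication lag exceeds maxlag s."""
    return {**params, "maxlag": str(maxlag)}

def backoff_delays(base=5.0, factor=2.0, limit=300.0, tries=6):
    """Pauses between successive attempts: 5, 10, 20, ... capped at limit."""
    return [min(base * factor ** i, limit) for i in range(tries)]

def get_with_backoff(url, opener=None, sleep=time.sleep):
    """GET url, sleeping longer after each error; give up after len(delays) tries."""
    opener = opener or urllib.request.build_opener()
    for delay in backoff_delays():
        try:
            with opener.open(url) as response:
                return response.read()
        except urllib.error.URLError:  # includes HTTPError
            sleep(delay)               # back off instead of retrying at once
    raise RuntimeError("giving up after repeated server errors")
```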

Common bot features you should consider implementing

Timers

You don't want your bot to edit too fast. Timers let a bot control how fast it edits. A common approach is to make the bot "sleep" for a certain time after performing an action before doing anything else. In Perl, this is done with the simple command sleep(10);, where 10 is the number of seconds to sleep. In C# you can use Thread.Sleep(10000);, which makes the bot sleep for 10 seconds (the argument is in milliseconds). Make sure to add using System.Threading; at the top of your file first.
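Python's `time.sleep` plays the same role as Perl's `sleep`. Rather than sleeping a fixed time after every action, the sketch below enforces a minimum gap between requests. The 6-second gap corresponds to the "no more than 10 requests per minute" guideline above. The clock and sleep functions are injectable so that the logic can be tested without waiting. `Throttle` is a hypothetical class name.

```python
import time

class Throttle:
    """Enforce a minimum gap between requests (6 s = at most 10 per minute)."""

    def __init__(self, min_interval=6.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self.last = None  # time of the previous request, if any

    def wait(self):
        """Call before every request; sleeps only as long as necessary."""
        now = self.clock()
        if self.last is not None:
            remaining = self.min_interval - (now - self.last)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self.last = now
```

Calling `throttle.wait()` before each read or write keeps the bot under the limit even when its own processing time varies.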

Manual assistance

If your bot is doing anything that requires judgement or evaluation of context (e.g., correcting spelling) then you should consider making your bot manually-assisted. That is, not making edits without human confirmation.

Disabling the bot

It is good bot policy to have a feature that disables the bot's operation if requested. You should probably have the bot refuse to run if a message has been left on its talk page, on the assumption that the message may be a complaint about its activities. This can be checked by looking for the "You have new messages..." banner in the HTML of the edit form. You can also set up a page that turns the bot off when its content is changed from "True"; the bot fetches and checks this page before each edit. Remember that if your bot goes bad, it is your responsibility to clean up after it!
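The two shut-off checks described above can be sketched in Python. The sketch assumes the bot has already fetched the text of its shut-off page (a hypothetical page such as `User:ExampleBot/Run`) and the HTML of an edit form. The banner text is the one quoted in the paragraph, and `may_run` and `has_new_messages_banner` are hypothetical helper names.

```python
def has_new_messages_banner(html: str) -> bool:
    """True if the edit-form HTML shows the new-messages banner."""
    return "You have new messages" in html

def may_run(shutoff_text: str, new_messages: bool) -> bool:
    """Run only if the shut-off page still reads 'True' and no message is waiting."""
    return shutoff_text.strip() == "True" and not new_messages
```

Checking both conditions before every edit means a human can stop the bot within one edit, either by editing the shut-off page or by leaving a talk-page message.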

Signature

Just like a human, if your bot makes edits to a talk page on Wikipedia, it should sign its posts with four tildes (~~~~). It should not sign any edits to text in the main namespace.
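The signing rule above can be applied with a small Python sketch. The namespace test is a simplifying assumption based on English Wikipedia naming (`Talk:`, `User talk:`, `Wikipedia talk:` and so on). It can misfire on main-namespace titles that happen to end their first segment with "talk", so a real bot should check against the wiki's actual namespace list. `is_talk_page` and `sign_if_talk` are hypothetical names.

```python
def is_talk_page(title: str) -> bool:
    """Heuristic: 'Talk:' or '<Namespace> talk:' prefixes (English naming)."""
    prefix, sep, _ = title.partition(":")
    if not sep:
        return False  # no namespace prefix: main namespace
    prefix = prefix.replace("_", " ").strip().lower()
    return prefix == "talk" or prefix.endswith(" talk")

def sign_if_talk(title: str, text: str) -> str:
    """Append the four-tilde signature only on talk pages."""
    return text + " ~~~~" if is_talk_page(title) else text
```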

What technique and language should I use?

Semi-Bots or auxiliary software

In addition to true bots, there are a number of semi-bots, known as auxiliary software, available to anyone. Most of these take the form of enhanced web browsers with Wikipedia-specific functionality. The most popular of these is AWB (see the list of editing tools for a full list of these and other tools).

Bot clones

There are already a number of bots running on Wikipedia. Many of these bots publish their source code and allow you to subscribe to them, download and operate a copy (clone) of them in order to perform useful tasks whilst browsing Wikipedia.

Developing a new bot

Bots are essentially small computer applications or applets written in one or more programming languages. There are generally two types of bots: client-side and server-side. Client-side bots run on your local machine and can only be run by you. Server-side bots are hosted on your own machine or on a remote web server, and (if you choose) can be started by others.

An overview is given below of various languages that can be used for writing a Wikipedia bot. For each, a list is given of external articles advising how to get started programming in that language, as well as a list of existing libraries (sub-programs) you can use with your bot to avoid having to "reinvent the wheel" for basic functions.

Perl

Perl has a run-time compiler. This means that it is not necessary to compile builds of your code yourself as it is with other programming languages. Instead, you simply create your program using a text editor such as gvim. You then run the code by passing it to an interpreter. This can be located either on your own computer or on a remote computer (webserver). If located on a webserver, you can start your program running and interface with your program while it is running via the Common Gateway Interface from your browser. Perl is available for most operating systems, including Microsoft Windows (which most human editors use) and UNIX (which many webservers use). If your internet service provider provides you with webspace, the chances are good that you have access to a perl build on the webserver from which you can run your Perl programs.

An example of some Perl code:

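```perl
# Fragment: $filepageid, $start_date, $today, $yesterday, the 30-day bounds
# and the *_sth DBI statement handles are assumed to be set up elsewhere.
open(my $input, '<', $filepageid) or file_open_error($filepageid);

while (my $page_id = <$input>) {
    chomp($page_id);
    push @page_ids, $page_id;

    # Total hits up to the start date
    $hits_upto_sth->execute($page_id, $start_date);
    $hits{upto}{$page_id} = $hits_upto_sth->fetchrow_array();

    # Hits today and yesterday (same prepared statement, different dates)
    $hits_daily_sth->execute($page_id, $today);
    $hits{today}{$page_id} = $hits_daily_sth->fetchrow_array();

    $hits_daily_sth->execute($page_id, $yesterday);
    $hits{yesterday}{$page_id} = $hits_daily_sth->fetchrow_array();

    # Hits over the last 30 days
    $hits_range_sth->execute($page_id, $start_of_30_days, $end_of_30_days);
    $hits{monthly}{$page_id} = $hits_range_sth->fetchrow_array();
}
close($input);
```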

Guides to getting started with Perl programming:

- A Beginner's Introduction to Perl

- CGI Programming 101: Learn CGI Today!

- Perl lessons

- Get started learning Perl

Libraries:

- Anura -- Perl interface to MediaWiki using libwww-perl. Not recommended, as the current version does not check for edit conflicts.

- WWW::Mediawiki::Client -- perl module and command line client

- WWW::Wikipedia -- perl module for interfacing wikipedia

- Perl Wikipedia ToolKit -- perl modules, parsing wikitext and extracting data

- perlwikipedia -- A fairly complete Wikipedia bot framework written in Perl.

PHP

PHP can also be used for programming bots. PHP is an especially good choice if you wish to provide a webform-based interface to your bot. For example, suppose you wanted to create a bot for renaming categories. You could create an HTML form into which you will type the current and desired names of a category. When the form is submitted, your bot could read these inputs, then edit all the articles in the current category and move them to the desired category. (Obviously, any bot with a form interface would need to be secured somehow from random web surfers.)

To log in your bot, you will need to know how to use PHP to send and receive cookies; to edit with your bot, you will need to know how to send form variables. Libraries like Snoopy simplify such actions.

Libraries:

- BasicBot: A basic framework with sample bot scripts. Based on Snoopy.

- SxWiki: A very simple bot framework

Python

Introduction to use

An example of some Python code:

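```python
# Fragment ported to Python 3. getNodeName() is assumed to be defined
# elsewhere and to return a unique Graphviz (DOT) node id.
try:
    import symbol                      # grammar symbol names (Python < 3.10 only)
    SYM_NAMES = symbol.sym_name
except ImportError:
    SYM_NAMES = {}                     # fall back to the raw symbol numbers

def addt5(x):
    return x + 5

def dotwrite(ast):
    """Print a parse tree (nested tuples) as DOT nodes and edges."""
    nodename = getNodeName()
    label = SYM_NAMES.get(int(ast[0]), ast[0])
    print(' %s [label="%s' % (nodename, label), end=' ')
    if isinstance(ast[1], str):
        # Leaf (token) node: include its text, if any, in the label
        if ast[1].strip():
            print('= %s "];' % ast[1])
        else:
            print('"]')
    else:
        print('"]')
        # Interior node: recurse into the children, then draw the edges
        children = []
        for child in ast[1:]:
            children.append(dotwrite(child))
        print(' %s -> {' % nodename, end=' ')
        for name in children:
            print('%s' % name, end=' ')
        print('}')
    return nodename                    # callers collect child node ids
```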

Getting started with Python:

Libraries:

- PyWikipediaBot -- Python Wikipedia Robot Framework (Home Page, SF Project Page)

Microsoft .NET

Languages include C#, Managed C++, Visual Basic .NET, J#, JScript .NET, IronPython, and Windows PowerShell.

The free Microsoft Visual Studio .NET development environment is often used.

Example of C# bot code, based on DotNetWikiBot Framework:

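```csharp
using System;
using DotNetWikiBot;

class MyBot : Bot
{
    public static void Main()
    {
        // Log in to the English Wikipedia (replace with the bot's own credentials)
        Site enWP = new Site("http://en.wikipedia.org", "myLogin", "myPassword");

        // Load the "Art" article, add a category link and save it with an edit summary
        Page p = new Page(enWP, "Art");
        p.Load();
        p.AddToCategory("Visual arts");
        p.Save("comment: category link added", true);

        // Collect revisions from the history of "Science" and save them as an XML dump
        PageList pl = new PageList(enWP);
        pl.FillFromPageHistory("Science", 30);
        pl.LoadEx();
        pl.SaveXMLDumpToFile("Dumps\\ScienceArticleHistory.xml");
    }
}
```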

Getting started:


Libraries:

- DotNetWikiBot Framework - a clean, full-featured C# API, compiled as a DLL library, that makes it easy to build programs and web robots that manage information on MediaWiki-powered sites. Detailed documentation is available.

- WikiFunctions .NET library - bundled with AWB, a library of functionality useful for bots, such as generating lists, loading and editing articles, and connecting to the recent changes IRC channel.

- WikiAccess library

- MediaWikiEngine, used by Commonplace upload tool

- Tyng.MediaWiki class library, a MediaWiki API written in C# used by NrhpBot

Java

Java bots are generally developed with Eclipse.

Example of code:

```java
public static void main(String[] args) throws Exception {
    MediaWikiBot bot = new MediaWikiBot("http://en.wikipedia.org/w/");
    bot.login("user", "pw");

    SimpleArticle a;
    try {
        a = new SimpleArticle(bot.readContent("Main Page"));
    } catch (Exception e) {
        System.out.println("The bot could not find the main page");
        return;  // without this, a would be null below
    }

    modifyContent(a);  // assumed to be your own method that changes the text
    bot.writeContent(a);
}
```

Getting started:

Libraries:

Ruby

RWikiBot is a Ruby framework for writing bots. Currently, it is under development and looking for contributors. It uses MediaWiki's official API, and as such is limited in certain capabilities.

A simple example:

```ruby
require "rubygems"
require "rwikibot"

# Create a bot
bot = RWikiBot.new "TestBot", "http://site.tld/wiki/api.php"

# Login
bot.login

# Get content of article "Horse"
content = bot.content "horse"
```

Libraries: