Additive synthesis: Difference between revisions

Conundrumer (talk | contribs) →Inharmonic form: more logical wording |


Revision as of 14:42, 26 June 2013

Additive synthesis is a sound synthesis technique that creates timbre by adding sine waves together.[1][2]

The timbre of musical instruments can be considered in the light of Fourier theory to consist of multiple harmonic or inharmonic partials or overtones. Each partial is a sine wave of different frequency and amplitude that swells and decays over time.

Additive synthesis generates sound by adding the output of multiple sine wave generators. It may also be implemented using pre-computed wavetables or inverse Fast Fourier transforms.

Definitions

Harmonic additive synthesis is closely related to the concept of a Fourier series, which is a way of expressing a periodic function as the sum of sinusoidal functions with frequencies equal to integer multiples of a common fundamental frequency. These sinusoids are called harmonics, overtones, or generally, partials. In general, a Fourier series contains an infinite number of sinusoidal components, with no upper limit to the frequency of the sinusoidal functions, and includes a DC component (one with a frequency of 0 Hz). Frequencies outside of the human audible range can be omitted in additive synthesis. As a result, only a finite number of sinusoidal terms with frequencies that lie within the audible range are modeled in additive synthesis.

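A waveform or function $y(t)$ is said to be periodic if

$$y(t) = y(t + P)$$

for all $t$ and for some period $P$.

The Fourier series of a periodic function is mathematically expressed as:

$$\begin{aligned} y(t) &= \frac{a_0}{2} + \sum_{k=1}^{\infty} \left[ a_k \cos(2 \pi k f_0 t) - b_k \sin(2 \pi k f_0 t) \right] \\ &= \frac{a_0}{2} + \sum_{k=1}^{\infty} r_k \cos\left(2 \pi k f_0 t + \phi_k \right) \end{aligned}$$

where

- $f_0 = 1/P$ is the fundamental frequency of the waveform and is equal to the reciprocal of the period,

- $r_k = \sqrt{a_k^2 + b_k^2}$ is the amplitude of the $k$th harmonic,

- $\phi_k = \operatorname{atan2}(b_k, a_k)$ is the phase offset of the $k$th harmonic, where atan2( ) is the four-quadrant arctangent function.

Being inaudible, the DC component, $a_0/2$, and all components with frequencies higher than some finite limit, $K f_0$, are omitted in the following expressions of additive synthesis.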
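The relationship between the two forms of the series, $a_k \cos(x) - b_k \sin(x) = r_k \cos(x + \phi_k)$ with $r_k = \sqrt{a_k^2 + b_k^2}$ and $\phi_k = \operatorname{atan2}(b_k, a_k)$, can be checked numerically. The following is a minimal sketch; the function names and the coefficient values are assumptions chosen only for illustration.

```python
import math

def to_polar(a_k, b_k):
    """Convert one pair of Fourier coefficients to amplitude and phase offset."""
    r_k = math.hypot(a_k, b_k)      # sqrt(a_k**2 + b_k**2)
    phi_k = math.atan2(b_k, a_k)    # four-quadrant arctangent
    return r_k, phi_k

def max_identity_error(a_k, b_k, angles):
    """Largest gap between the rectangular and polar forms over the given angles."""
    r_k, phi_k = to_polar(a_k, b_k)
    return max(
        abs((a_k * math.cos(x) - b_k * math.sin(x)) - r_k * math.cos(x + phi_k))
        for x in angles
    )

# Hypothetical coefficients, not taken from any measured waveform.
a_k, b_k = 3.0, 4.0
r_k, phi_k = to_polar(a_k, b_k)
error = max_identity_error(a_k, b_k, [0.0, 0.7, 2.5, 4.0])
```

Using `atan2` rather than a plain arctangent of `b_k / a_k` keeps the phase in the correct quadrant when `a_k` is negative or zero.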

Harmonic form

The simplest harmonic additive synthesis can be mathematically expressed as:

$$y(t) = \sum_{k=1}^{K} r_k \cos\left(2 \pi k f_0 t + \phi_k \right), \qquad (1)$$

where $y(t)$ is the synthesis output, $r_k$, $k f_0$, and $\phi_k$ are the amplitude, frequency, and phase offset of the $k$th harmonic partial of a total of $K$ harmonic partials, and $f_0$ is the fundamental frequency of the waveform and the frequency of the musical note.
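The harmonic form, a sum of $K$ cosines at integer multiples $k f_0$ of the fundamental with fixed amplitudes $r_k$ and phase offsets $\phi_k$, can be sketched directly by evaluating the sum at sample times. The function name, the sample rate, and the choice of $r_k = 1/k$ below are assumptions made for illustration, not part of the definition.

```python
import math

def harmonic_synth(f0, amplitudes, phases, duration, sample_rate):
    """Evaluate the harmonic sum at t = n / sample_rate.

    amplitudes[k - 1] and phases[k - 1] belong to the k-th harmonic.
    """
    n_samples = round(duration * sample_rate)
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        y = 0.0
        for k, (r_k, phi_k) in enumerate(zip(amplitudes, phases), start=1):
            y += r_k * math.cos(2.0 * math.pi * k * f0 * t + phi_k)
        out.append(y)
    return out

# Hypothetical sawtooth-like spectrum: r_k = 1/k, every partial shifted by -pi/2
# so that each cosine becomes a sine.
K = 8
amps = [1.0 / k for k in range(1, K + 1)]
phases = [-math.pi / 2.0] * K
signal = harmonic_synth(440.0, amps, phases, duration=0.01, sample_rate=44000)
```

With these numbers the highest partial is 3520 Hz, well below half the sample rate, and one period of the 440 Hz tone spans exactly 100 samples.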

Time-dependent amplitudes

Example of harmonic additive synthesis in which each harmonic has a time-dependent amplitude. The fundamental frequency is 440 Hz.

More generally, the amplitude of each harmonic can be prescribed as a function of time, $r_k(t)$, in which case the synthesis output is

$$y(t) = \sum_{k=1}^{K} r_k(t) \cos\left(2 \pi k f_0 t + \phi_k \right). \qquad (2)$$

Each envelope $r_k(t)$ should vary slowly relative to the frequency spacing between adjacent sinusoids. The bandwidth of $r_k(t)$ should be significantly less than $f_0$.
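A minimal sketch of harmonic synthesis with time-dependent amplitudes follows. The exponential envelope, its decay rate, and the function names are assumptions for illustration; the decay is kept far slower than the 440 Hz fundamental so that each envelope varies slowly compared with the spacing between partials.

```python
import math

def decaying_envelope(k, t, decay_rate=3.0):
    """Hypothetical r_k(t): starts at 1/k, higher harmonics fade faster."""
    return math.exp(-decay_rate * k * t) / k

def synth_time_varying(f0, n_harmonics, duration, sample_rate,
                       envelope=decaying_envelope):
    """Sum harmonics k*f0, each scaled by its own envelope(k, t); phases are zero."""
    n_samples = round(duration * sample_rate)
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        out.append(sum(
            envelope(k, t) * math.cos(2.0 * math.pi * k * f0 * t)
            for k in range(1, n_harmonics + 1)
        ))
    return out

tone = synth_time_varying(440.0, n_harmonics=4, duration=0.5, sample_rate=8000)
```

Because the upper harmonics decay faster than the fundamental, the tone starts bright and becomes duller as it fades, which a single static spectrum cannot express.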

Inharmonic form

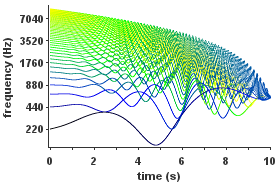

Additive synthesis can also produce inharmonic sounds (which are aperiodic waveforms) in which the individual overtones need not have frequencies that are integer multiples of some common fundamental frequency.[3][4] While many conventional musical instruments have harmonic partials (e.g. an oboe), some have inharmonic partials (e.g. bells). Inharmonic additive synthesis can be described as

$$y(t) = \sum_{k=1}^{K} r_k(t) \cos\left(2 \pi f_k t + \phi_k \right),$$

where $f_k$ is the constant frequency of the $k$th partial.
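The inharmonic case differs from the harmonic one only in that each partial carries its own constant frequency $f_k$ instead of $k f_0$. The sketch below assumes a small table of partials whose frequencies, amplitudes, and decay rates are invented for illustration and are not measured from any instrument.

```python
import math

# Hypothetical partials: (frequency in Hz, peak amplitude, decay rate in 1/s).
# The frequencies are deliberately not integer multiples of a common fundamental.
PARTIALS = [
    (220.0, 1.00, 1.5),
    (607.2, 0.60, 2.5),
    (1188.0, 0.35, 4.0),
    (1964.6, 0.20, 6.0),
]

def inharmonic_synth(partials, duration, sample_rate):
    """Sum partials with constant frequencies f_k and decaying amplitudes r_k(t)."""
    n_samples = round(duration * sample_rate)
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        y = 0.0
        for f_k, peak, decay in partials:
            r_k = peak * math.exp(-decay * t)   # time-dependent amplitude
            y += r_k * math.cos(2.0 * math.pi * f_k * t)
        out.append(y)
    return out

bell_like = inharmonic_synth(PARTIALS, duration=0.25, sample_rate=8000)
```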

Example of inharmonic additive synthesis in which both the amplitude and frequency of each partial are time-dependent.

Time-dependent frequencies

In the general case, the instantaneous frequency of a sinusoid is the derivative (with respect to time) of the argument of the sine or cosine function. If this frequency is represented in Hz, rather than in angular frequency form, then this derivative is divided by $2\pi$. This is the case whether the partial is harmonic or inharmonic and whether its frequency is constant or time-varying.

In the most general form, the frequency of each non-harmonic partial is a non-negative function of time, $f_k(t)$, yielding

$$y(t) = \sum_{k=1}^{K} r_k(t) \cos\left(2 \pi \int_0^t f_k(u)\, du + \phi_k \right). \qquad (3)$$
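As a worked example of why the phase is $2\pi$ times the integral of the frequency rather than the frequency multiplied by $t$, consider a partial that glides linearly from $f_a$ to $f_b$ over $D$ seconds. The symbols $f_a$, $f_b$, and $D$ are assumptions introduced here for illustration.

$$f_k(t) = f_a + (f_b - f_a)\,\frac{t}{D}, \qquad 0 \le t \le D$$

$$2 \pi \int_0^t f_k(u)\, du = 2 \pi \left( f_a t + \frac{(f_b - f_a)\, t^2}{2 D} \right)$$

Differentiating this argument with respect to $t$ and dividing by $2\pi$ recovers $f_k(t)$, as required. By contrast, writing the partial as $\cos\left(2 \pi f_k(t)\, t\right)$ gives an instantaneous frequency of $f_a + 2 (f_b - f_a)\, t / D$, so the glide would run twice as fast as intended.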

Broader definitions

Additive synthesis has been used as an umbrella term for the class of sound synthesis techniques that sum simple elements to create more complex timbres, even when the elements are not sine waves.[5][6] For example, F. Richard Moore listed additive synthesis as one of the "four basic categories" of sound synthesis alongside subtractive synthesis, nonlinear synthesis, and physical modelling.[6] In this broad sense, pipe organs, which also have pipes producing non-sinusoidal waveforms, can be considered as additive synthesizers. Summation of principal components and Walsh functions have also been classified as additive synthesis.[7]

Additive analysis/resynthesis

It is possible to analyze the frequency components of a recorded sound giving a "sum of sinusoids" representation. This representation can be re-synthesized using additive synthesis. One method of decomposing a sound into time varying sinusoidal partials is Fourier Transform-based McAulay-Quatieri Analysis.[8][9]
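The analysis and resynthesis round trip can be illustrated with a much simpler method than McAulay-Quatieri analysis. The sketch below assumes a strictly harmonic, stationary signal with a known fundamental that spans a whole number of periods, and estimates each harmonic's amplitude and phase by correlating the signal with a cosine and a sine at that harmonic's frequency. All names and values are assumptions for illustration.

```python
import math

def analyze_harmonics(signal, f0, n_harmonics, sample_rate):
    """Estimate (r_k, phi_k) for harmonics 1..n_harmonics of a periodic signal.

    Assumes len(signal) covers an integer number of periods of f0.
    """
    n = len(signal)
    estimates = []
    for k in range(1, n_harmonics + 1):
        w = 2.0 * math.pi * k * f0 / sample_rate
        c = sum(x * math.cos(w * i) for i, x in enumerate(signal))
        s = sum(x * math.sin(w * i) for i, x in enumerate(signal))
        a_k = 2.0 * c / n
        b_k = -2.0 * s / n
        estimates.append((math.hypot(a_k, b_k), math.atan2(b_k, a_k)))
    return estimates

def resynthesize(partials, f0, n_samples, sample_rate):
    """Rebuild a signal from a list of (r_k, phi_k), one entry per harmonic."""
    return [
        sum(r * math.cos(2.0 * math.pi * k * f0 * i / sample_rate + phi)
            for k, (r, phi) in enumerate(partials, start=1))
        for i in range(n_samples)
    ]

SAMPLE_RATE = 8000
F0 = 100.0                                   # one period = 80 samples
original = [(1.0, 0.3), (0.5, -1.0), (0.25, 2.0)]
recorded = resynthesize(original, F0, 800, SAMPLE_RATE)   # stands in for a recording
estimated = analyze_harmonics(recorded, F0, 3, SAMPLE_RATE)
rebuilt = resynthesize(estimated, F0, 800, SAMPLE_RATE)

# A timbral alteration made on the representation before resynthesis:
# attenuate the k-th harmonic by a further factor of k.
darker = [(r / k, phi) for k, (r, phi) in enumerate(estimated, start=1)]
```

Real recordings are neither exactly periodic nor stationary, which is why practical systems track partials frame by frame instead of using a single correlation over the whole signal.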

By modifying the sum of sinusoids representation, timbral alterations can be made prior to resynthesis. For example, a harmonic sound could be restructured to sound inharmonic, and vice versa. Sound hybridisation or "morphing" has been implemented by additive resynthesis.[10]

Additive analysis/resynthesis has been employed in a number of techniques including Sinusoidal Modelling,[11] Spectral Modelling Synthesis (SMS),[10] and the Reassigned Bandwidth-Enhanced Additive Sound Model.[12] Software that implements additive analysis/resynthesis includes: SPEAR,[13] LEMUR, LORIS,[14] SMSTools,[15] ARSS.[16]

Applications

Musical instruments

Additive synthesis is used in electronic musical instruments.

Speech synthesis

In linguistics research, harmonic additive synthesis was used in the 1950s to play back modified and synthetic speech spectrograms.[17] Later, in the early 1980s, listening tests were carried out on synthetic speech stripped of acoustic cues to assess their significance. Time-varying formant frequencies and amplitudes derived by linear predictive coding were synthesized additively as pure tone whistles. This method is called sinewave synthesis.[18][19]

Implementation methods

Modern-day implementations of additive synthesis are mainly digital. (See the section Discrete-time equations for the underlying discrete-time theory.)

Oscillator bank synthesis

Additive synthesis can be implemented using a bank of sinusoidal oscillators, one for each partial.[1]
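One possible way to build such a bank in software is sketched below. It assumes each oscillator is a second-order recursive resonator, which produces a fixed-frequency sinusoid from its two previous outputs using one multiplication per sample. This oscillator design is a swapped-in illustration rather than anything prescribed by the cited source, and the class name, function name, and partial values are hypothetical.

```python
import math

class ResonatorOscillator:
    """Fixed-frequency sinusoid via y[n] = 2*cos(w)*y[n-1] - y[n-2]."""

    def __init__(self, frequency, amplitude, phase, sample_rate):
        w = 2.0 * math.pi * frequency / sample_rate
        self.coeff = 2.0 * math.cos(w)
        # Seed with the two samples that precede n = 0.
        self.prev1 = amplitude * math.cos(phase - w)         # y[-1]
        self.prev2 = amplitude * math.cos(phase - 2.0 * w)   # y[-2]

    def next_sample(self):
        y = self.coeff * self.prev1 - self.prev2
        self.prev2, self.prev1 = self.prev1, y
        return y

def render_bank(oscillators, n_samples):
    """Sum the outputs of all oscillators, one sample at a time."""
    return [sum(osc.next_sample() for osc in oscillators)
            for _ in range(n_samples)]

SAMPLE_RATE = 44100
# Hypothetical partials: (frequency in Hz, amplitude, phase offset in radians).
partials = [(440.0, 1.0, 0.0), (880.0, 0.5, 0.4), (1320.0, 0.25, 1.1)]
bank = [ResonatorOscillator(f, r, phi, SAMPLE_RATE) for f, r, phi in partials]
block = render_bank(bank, 1000)
```

The recurrence accumulates rounding error over long runs and cannot change frequency smoothly, so a phase-accumulating oscillator is usually a better fit when partial frequencies vary over time.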

Wavetable synthesis

In the case of harmonic, quasi-periodic musical tones, wavetable synthesis can be as general as time-varying additive synthesis, but requires less computation during synthesis.[20] As a result, an efficient implementation of time-varying additive synthesis of harmonic tones can be accomplished by use of wavetable synthesis.

Group additive synthesis[21][22][23] is a method to group partials into harmonic groups (of differing fundamental frequencies) and synthesize each group separately with wavetable synthesis before mixing the results.
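The computational saving of the wavetable approach for a harmonic tone can be sketched as follows: the sum over harmonics is evaluated once for a single period, and playback then costs one table lookup per sample regardless of the number of partials. The table size, the linear interpolation, and all names and values here are assumptions for illustration.

```python
import math

def build_wavetable(amplitudes, phases, table_size=2048):
    """Precompute one period of a harmonic sum; entry i is phase 2*pi*i/table_size."""
    table = []
    for i in range(table_size):
        x = 2.0 * math.pi * i / table_size
        table.append(sum(
            r * math.cos(k * x + phi)
            for k, (r, phi) in enumerate(zip(amplitudes, phases), start=1)
        ))
    return table

def play_wavetable(table, f0, n_samples, sample_rate):
    """Read the table at f0 using a fractional index and linear interpolation."""
    size = len(table)
    increment = f0 * size / sample_rate     # table entries advanced per sample
    position = 0.0
    out = []
    for _ in range(n_samples):
        i = int(position)
        frac = position - i
        following = table[(i + 1) % size]
        out.append(table[i] + frac * (following - table[i]))
        position = (position + increment) % size
    return out

# Hypothetical spectrum of four harmonics.
amps = [1.0, 0.5, 1.0 / 3.0, 0.25]
phases = [0.0, 0.5, 1.0, 1.5]
table = build_wavetable(amps, phases)
tone = play_wavetable(table, 440.0, 500, 44100)
```

A single table fixes the relative amplitudes of the harmonics, so a time-varying timbre needs several tables that are cross-faded or otherwise mixed over time.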

Inverse FFT synthesis

An inverse Fast Fourier Transform can be used to efficiently synthesize frequencies that evenly divide the transform period. By careful consideration of the DFT frequency domain representation it is also possible to efficiently synthesize sinusoids of arbitrary frequencies using a series of overlapping inverse Fast Fourier Transforms.[24]
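The first case, partials whose frequencies evenly divide the transform period, can be sketched with a small recursive radix-2 inverse FFT written in plain Python. Each partial is placed in a frequency bin together with its complex conjugate in the mirrored bin so that the output is real. The function names and partial values are assumptions for illustration, and the overlapping-frame technique for arbitrary frequencies is not shown.

```python
import cmath
import math

def ifft(spectrum):
    """Unnormalised inverse FFT; len(spectrum) must be a power of two."""
    n = len(spectrum)
    if n == 1:
        return list(spectrum)
    even = ifft(spectrum[0::2])
    odd = ifft(spectrum[1::2])
    out = [0j] * n
    for m in range(n // 2):
        twiddle = cmath.exp(2j * math.pi * m / n) * odd[m]
        out[m] = even[m] + twiddle
        out[m + n // 2] = even[m] - twiddle
    return out

def ifft_synth(partials, n):
    """Return n real samples; partials maps bin k -> (r_k, phi_k), 0 < k < n/2.

    Bin k corresponds to k cycles per frame of n samples.
    """
    spectrum = [0j] * n
    for k, (r, phi) in partials.items():
        if not 0 < k < n // 2:
            raise ValueError("bin index must lie strictly between 0 and n/2")
        spectrum[k] = 0.5 * r * cmath.exp(1j * phi)
        spectrum[n - k] = spectrum[k].conjugate()
    return [value.real for value in ifft(spectrum)]

# Hypothetical frame: two partials at 3 and 5 cycles per 64-sample frame.
frame = ifft_synth({3: (1.0, 0.0), 5: (0.5, 1.0)}, 64)
```

The cost of the transform depends on the frame length rather than on the number of partials, which is what makes the approach attractive when many partials are present.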

History

Harmonic analysis was discovered by Joseph Fourier,[25] who published an extensive treatise of his research in the context of heat transfer in 1822.[26] The theory found an early application in prediction of tides. Around 1876,[27] Lord Kelvin constructed a mechanical tide predictor. It consisted of a harmonic analyzer and a harmonic synthesizer, as they were called already in the 19th century.[28][29] The analysis of tide measurements was done using James Thomson's integrating machine. The resulting Fourier coefficients were input into the synthesizer, which then used a system of cords and pulleys to generate and sum harmonic sinusoidal partials for prediction of future tides. In 1910, a similar machine was built for the analysis of periodic waveforms of sound.[30] The synthesizer drew a graph of the combination waveform, which was used chiefly for visual validation of the analysis.[30]

Georg Ohm applied Fourier's theory to sound in 1843. The line of work was greatly advanced by Hermann von Helmholtz, who published eight years' worth of research in 1863.[31] Helmholtz believed that the psychological perception of tone color is subject to learning, while hearing in the sensory sense is purely physiological.[32] He supported the idea that perception of sound derives from signals from nerve cells of the basilar membrane and that the elastic appendages of these cells are sympathetically vibrated by pure sinusoidal tones of appropriate frequencies.[30] Helmholtz agreed with the finding of Ernst Chladni from 1787 that certain sound sources have inharmonic vibration modes.[32]

In Helmholtz's time, electronic amplification was unavailable. For synthesis of tones with harmonic partials, Helmholtz built an electrically excited array of tuning forks and acoustic resonance chambers that allowed adjustment of the amplitudes of the partials.[33] Built at least as early as 1862,[33] these were in turn refined by Rudolph Koenig, who demonstrated his own setup in 1872.[33] For harmonic synthesis, Koenig also built a large apparatus based on his wave siren. It was pneumatic and utilized cut-out tonewheels, and was criticized for the low purity of its partial tones.[27] The tibia pipes of pipe organs also have nearly sinusoidal waveforms and can be combined in the manner of additive synthesis.[27]

In 1938, with significant new supporting evidence,[34] it was reported on the pages of Popular Science Monthly that the human vocal cords function like a fire siren to produce a harmonic-rich tone, which is then filtered by the vocal tract to produce different vowel tones.[35] By that time, the additive Hammond organ was already on the market. Most early electronic organ makers thought it too expensive to manufacture the plurality of oscillators required by additive organs, and began instead to build subtractive ones.[36] In a 1940 Institute of Radio Engineers meeting, the head field engineer of Hammond elaborated on the company's new Novachord as having a “subtractive system” in contrast to the original Hammond organ in which “the final tones were built up by combining sound waves”.[37] Alan Douglas used the qualifiers additive and subtractive to describe different types of electronic organs in a 1948 paper presented to the Royal Musical Association.[38] The contemporary wording additive synthesis and subtractive synthesis can be found in his 1957 book The electrical production of music, in which he categorically lists three methods of forming musical tone-colours, in sections titled Additive synthesis, Subtractive synthesis, and Other forms of combinations.[39]

A typical modern additive synthesizer produces its output as an electrical, analog signal, or as digital audio, such as in the case of software synthesizers, which became popular around the year 2000.[40]

Timeline

The following is a timeline of historically and technologically notable analog and digital synthesizers and devices implementing additive synthesis.

| Research implementation or publication | Commercially available | Company or institution | Synthesizer or synthesis device | Description | Audio samples |
|---|---|---|---|---|---|
| 1900[41] | 1906[41] | New England Electric Music Company | Telharmonium | The first polyphonic, touch-sensitive music synthesizer.[42] Implemented sinusoidal additive synthesis using tonewheels and alternators. Invented by Thaddeus Cahill. | no known recordings[41] |
| 1933[43] | 1935[43] | Hammond Organ Company | Hammond Organ | An electronic additive synthesizer that was commercially more successful than Telharmonium.[42] Implemented sinusoidal additive synthesis using tonewheels and magnetic pickups. Invented by Laurens Hammond. | |
| 1950 or earlier[17] | | Haskins Laboratories | Pattern Playback | A speech synthesis system that controlled amplitudes of harmonic partials by a spectrogram that was either hand-drawn or an analysis result. The partials were generated by a multi-track optical tonewheel.[17] | samples |
| 1958[44] | | | ANS | An additive synthesizer[45] that played microtonal spectrogram-like scores using multiple multi-track optical tonewheels. Invented by Evgeny Murzin. A similar instrument that utilized electronic oscillators, the Oscillator Bank, and its input device Spectrogram were realized by Hugh Le Caine in 1959.[46][47] | |
| 1963[48] | | MIT | | An off-line system for digital spectral analysis and resynthesis of the attack and steady-state portions of musical instrument timbres by David Luce.[48] | |
| 1964[49] | | University of Illinois | Harmonic Tone Generator | An electronic, harmonic additive synthesis system invented by James Beauchamp.[49][50] | samples (info) |
| 1974 or earlier[51][52] | 1974[51][52] | RMI | Harmonic Synthesizer | The first synthesizer product that implemented additive[53] synthesis using digital oscillators.[51][52] The synthesizer also had a time-varying analog filter.[51] RMI was a subsidiary of Allen Organ Company, which had released the first commercial digital church organ, the Allen Computer Organ, in 1971, using digital technology developed by North American Rockwell.[54] | 1 2 3 4 |
| 1974[55] | | EMS (London) | Digital Oscillator Bank | A bank of digital oscillators with arbitrary waveforms, individual frequency and amplitude controls,[56] intended for use in analysis-resynthesis with the digital Analysing Filter Bank (AFB) also constructed at EMS.[55][56] Also known as: DOB. | in The New Sound of Music[57] |
| 1976[58] | 1976[59] | Fairlight | Qasar M8 | An all-digital synthesizer that used the Fast Fourier Transform[60] to create samples from interactively drawn amplitude envelopes of harmonics.[61] | samples |
| 1977[62] | | Bell Labs | Digital Synthesizer | A real-time, digital additive synthesizer[62] that has been called the first true digital synthesizer.[63] Also known as: Alles Machine, Alice. | sample (info) |
| 1979[63] | 1979[63] | New England Digital | Synclavier II | A commercial digital synthesizer that enabled development of timbre over time by smooth cross-fades between waveforms generated by additive synthesis. | |

Discrete-time equations

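In digital implementations of additive synthesis, discrete-time equations are used in place of the continuous-time synthesis equations. A notational convention for discrete-time signals uses brackets, i.e. $y[n]$, and the argument $n$ can only take integer values. If the continuous-time synthesis output $y(t)$ is expected to be sufficiently bandlimited, i.e. to lie below half the sampling rate, $f_\mathrm{s}/2$, it suffices to directly sample the continuous-time expression to get the discrete synthesis equation. The continuous synthesis output can later be reconstructed from the samples using a digital-to-analog converter. The sampling period is $T = 1/f_\mathrm{s}$.

Beginning with (3),

$$y(t) = \sum_{k=1}^{K} r_k(t) \cos\left(2 \pi \int_0^t f_k(u)\, du + \phi_k \right)$$

and sampling at discrete times $t = nT = n/f_\mathrm{s}$ results in

$$\begin{aligned} y[n] &= y(nT) = \sum_{k=1}^{K} r_k(nT) \cos\left(2 \pi \int_0^{nT} f_k(u)\, du + \phi_k \right) \\ &= \sum_{k=1}^{K} r_k(nT) \cos\left(2 \pi \sum_{i=1}^{n} \int_{(i-1)T}^{iT} f_k(u)\, du + \phi_k \right) \\ &= \sum_{k=1}^{K} r_k(nT) \cos\left(2 \pi \sum_{i=1}^{n} \left(T f_k[i]\right) + \phi_k \right) \\ &= \sum_{k=1}^{K} r_k[n] \cos\left(\frac{2 \pi}{f_\mathrm{s}} \sum_{i=1}^{n} f_k[i] + \phi_k \right) \end{aligned}$$

where

- $r_k[n] = r_k(nT)$ is the discrete-time varying amplitude envelope

- $f_k[n] = \frac{1}{T} \int_{(n-1)T}^{nT} f_k(t)\, dt$ is the discrete-time backward difference instantaneous frequency.

This is equivalent to

$$y[n] = \sum_{k=1}^{K} r_k[n] \cos\left(\theta_k[n]\right)$$

where

$$\begin{aligned} \theta_k[n] &= \frac{2 \pi}{f_\mathrm{s}} \sum_{i=1}^{n} f_k[i] + \phi_k \\ &= \theta_k[n-1] + \frac{2 \pi}{f_\mathrm{s}} f_k[n] \end{aligned}$$

for all $n > 0$[24]

and

$$\theta_k[0] = \phi_k.$$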
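The recursive form of the phase, $\theta_k[n] = \theta_k[n-1] + \frac{2\pi}{f_\mathrm{s}} f_k[n]$ with $\theta_k[0] = \phi_k$, maps directly onto a phase accumulator per partial. The sketch below assumes the per-sample tracks $r_k[n]$ and $f_k[n]$ are supplied as plain lists; the function name and the example tracks, including the linear glide, are hypothetical. Wrapping the phase modulo $2\pi$ is an implementation choice that keeps the accumulated value small without changing the cosine.

```python
import math

TWO_PI = 2.0 * math.pi

def synthesize(amp_tracks, freq_tracks, phases, sample_rate):
    """y[n] = sum_k r_k[n] * cos(theta_k[n]) with an accumulated phase per partial.

    amp_tracks[k][n] is r_k[n], freq_tracks[k][n] is f_k[n] in Hz, and
    phases[k] is phi_k. freq_tracks[k][0] is never read because theta_k[0] = phi_k.
    """
    n_samples = len(amp_tracks[0])
    theta = list(phases)                      # theta_k[0] = phi_k
    out = []
    for n in range(n_samples):
        if n > 0:
            for k in range(len(theta)):
                step = TWO_PI * freq_tracks[k][n] / sample_rate
                theta[k] = (theta[k] + step) % TWO_PI
        out.append(sum(amp_tracks[k][n] * math.cos(theta[k])
                       for k in range(len(theta))))
    return out

SAMPLE_RATE = 8000
N = 800
# Partial 1: constant 440 Hz. Partial 2: hypothetical glide from 600 Hz towards 700 Hz.
amp_tracks = [[1.0] * N, [0.5] * N]
freq_tracks = [[440.0] * N, [600.0 + 100.0 * n / N for n in range(N)]]
phases = [0.3, 0.0]
y = synthesize(amp_tracks, freq_tracks, phases, SAMPLE_RATE)
```

For a partial whose frequency track is constant, the accumulator reproduces $\cos(2\pi f n / f_\mathrm{s} + \phi)$ up to rounding error, which gives a simple check on an implementation.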


References

1. Julius O. Smith III. "Additive Synthesis (Early Sinusoidal Modeling)". Retrieved 14 January 2012. Quote: "The term "additive synthesis" refers to sound being formed by adding together many sinusoidal components"
2. Gordon Reid. "Synth Secrets, Part 14: An Introduction To Additive Synthesis". Sound On Sound. Retrieved 14 January 2012.
3. Smith III, Julius O.; Serra, Xavier (2005), "Additive Synthesis", PARSHL: An Analysis/Synthesis Program for Non-Harmonic Sounds Based on a Sinusoidal Representation, CCRMA, Department of Music, Stanford University, retrieved 9 January 2012 (online reprint)
4. Smith III, Julius O. (2011), "Additive Synthesis (Early Sinusoidal Modeling)", Spectral Audio Signal Processing, CCRMA, Department of Music, Stanford University, ISBN 978-0-9745607-3-1, retrieved 9 January 2012
5. Roads, Curtis (1995). The Computer Music Tutorial. MIT Press. p. 134. ISBN 0-262-68082-3.
6. Moore, F. Richard (1995). Foundations of Computer Music. Prentice Hall. p. 16. ISBN 0-262-68082-3.
7. Roads, Curtis (1995). The Computer Music Tutorial. MIT Press. pp. 150–153. ISBN 0-262-68082-3.
8. R. J. McAulay and T. F. Quatieri (Aug 1986), "Speech analysis/synthesis based on a sinusoidal representation", IEEE Transactions on Acoustics, Speech, Signal Processing ASSP-34: 744-754
9. McAulay-Quatieri Method
10. Serra, Xavier (1989). A System for Sound Analysis/Transformation/Synthesis based on a Deterministic plus Stochastic Decomposition (Ph.D. thesis). Stanford University. Retrieved 13 January 2012.
11. Julius O. Smith III, Xavier Serra. "PARSHL: An Analysis/Synthesis Program for Non-Harmonic Sounds Based on a Sinusoidal Representation". Retrieved 9 January 2012.
12. Fitz, Kelly (1999). The Reassigned Bandwidth-Enhanced Method of Additive Synthesis (Ph.D. thesis). Dept. of Electrical and Computer Engineering, University of Illinois at Urbana-Champaign. CiteSeerx: 10.1.1.10.1130.
13. SPEAR Sinusoidal Partial Editing Analysis and Resynthesis for MacOS X, MacOS 9 and Windows
14. Loris Software for Sound Modeling, Morphing, and Manipulation
15. SMSTools application for Windows
16. ARSS: The Analysis & Resynthesis Sound Spectrograph
17. "The interconversion of audible and visible patterns as a basis for research in the perception of speech". Proc. Natl. Acad. Sci. U.S.A. 37 (5): 318–25. 1951. doi:10.1073/pnas.37.5.318. PMC 1063363. PMID 14834156.
18. Remez, R.E. (1981). "Speech perception without traditional speech cues". Science (212): 947–950.
19. Rubin, P.E. (1980). "Sinewave Synthesis Instruction Manual (VAX)" (PDF). Internal memorandum. Haskins Laboratories, New Haven, CT.
20. Robert Bristow-Johnson (November 1996). "Wavetable Synthesis 101, A Fundamental Perspective" (PDF).
21. Julius O. Smith III. "Group Additive Synthesis". CCRMA, Stanford University. Archived from the original on 6 June 2011. Retrieved 12 May 2011.
22. P. Kleczkowski (1989). "Group additive synthesis". Computer Music Journal. 13 (1): 12–20.
23. B. Eaglestone and S. Oates (1990). "Proceedings of the 1990 International Computer Music Conference, Glasgow". Computer Music Association.
24. Rodet, X.; Depalle, P. (1992). "Spectral Envelopes and Inverse FFT Synthesis". Proceedings of the 93rd Audio Engineering Society Convention. CiteSeerx: 10.1.1.43.4818.
25. Prestini, Elena (2004) [Rev. ed of: Applicazioni dell'analisi armonica. Milan: Ulrico Hoepli, 1996]. The Evolution of Applied Harmonic Analysis: Models of the Real World. trans. New York, USA: Birkhäuser Boston. pp. 114–115. ISBN 0-8176-4125-4. Retrieved 6 February 2012.
26. Fourier, Jean Baptiste Joseph (1822). Théorie analytique de la chaleur (in French). Paris, France: Chez Firmin Didot, père et fils.
27. Miller, Dayton Clarence (1926) [First published 1916]. The Science Of Musical Sounds. New York: The Macmillan Company. pp. 110, 244–248.
28. The London, Edinburgh and Dublin philosophical magazine and journal of science. 49. Taylor & Francis: 490. 1875.
29. Thomson, Sir W. (1878). "Harmonic analyzer". Proceedings of the Royal Society of London. 27. Taylor and Francis: 371–373. JSTOR 113690.
30. Cahan, David (1993). Cahan, David (ed.). Hermann von Helmholtz and the foundations of nineteenth-century science. Berkeley and Los Angeles, USA: University of California Press. pp. 110–114, 285–286. ISBN 978-0-520-08334-9.
31. Helmholtz, von, Hermann (1863). Die Lehre von den Tonempfindungen als physiologische Grundlage für die Theorie der Musik (in German) (1st ed.). Leipzig: Leopold Voss. pp. v.
32. Christensen, Thomas Street (2002). The Cambridge History of Western Music. Cambridge, United Kingdom: Cambridge University Press. pp. 251, 258. ISBN 0-521-62371-5.
33. von Helmholtz, Hermann (1875). On the sensations of tone as a physiological basis for the theory of music. London, United Kingdom: Longmans, Green, and co. pp. xii, 175–179.
34. Russell, George Oscar (1936). Year book - Carnegie Institution of Washington (1936). Carnegie Institution of Washington: Year Book. Vol. 35. Washington: Carnegie Institution of Washington. pp. 359–363.
35. Lodge, John E. (April 1938). Brown, Raymond J. (ed.). "Odd Laboratory Tests Show Us How We Speak: Using X Rays, Fast Movie Cameras, and Cathode-Ray Tubes, Scientists Are Learning New Facts About the Human Voice and Developing Teaching Methods To Make Us Better Talkers". Popular Science Monthly. 132 (4). New York, USA: Popular Science Publishing: 32–33.
36. JSTOR 3680869.
37. doi:10.1109/JRPROC.1940.228904.
38. JSTOR 765906.
39. Douglas, Alan Lockhart Monteith (1957). The Electrical Production of Music. London, UK: Macdonald. pp. 140, 142.
40. Pejrolo, Andrea; DeRosa, Rich (2007). Acoustic and MIDI orchestration for the contemporary composer. Oxford, UK: Elsevier. pp. 53–54.
41. Weidenaar, Reynold (1995). Magic Music from the Telharmonium. Lanham, MD: Scarecrow Press. ISBN 0-8108-2692-5.
42. Moog, Robert A. (October/November 1977). "Electronic Music". Journal of the Audio Engineering Society (JAES). 25 (10/11): 856.
43. Olsen, Harvey (14 December 2011). Brown, Darren T. (ed.). "Leslie Speakers and Hammond organs: Rumors, Myths, Facts, and Lore". The Hammond Zone. Hammond Organ in the U.K. Retrieved 20 January 2012.
44. Derek Holzer (22 February 2010). "A brief history of optical synthesis". Retrieved 13 January 2012.
45. Vail, Mark (1 November 2002), Eugeniy Murzin's ANS — Additive Russian synthesizer, Keyboard Magazine, p. 120
46. Gayle Young, Oscillator Bank (1959)
47. Gayle Young, Spectrogram (1959)
48. Luce, David Alan (1963). Physical correlates of nonpercussive musical instrument tones. Cambridge, Massachusetts, U.S.A.: Massachusetts Institute of Technology. hdl:1721.1/27450.
49. Beauchamp, James (17 November 2009). "The Harmonic Tone Generator: One of the First Analog Voltage-Controlled Synthesizers". Prof. James W. Beauchamp Home Page.
50. Beauchamp, James W. (1966). "Additive Synthesis of Harmonic Musical Tones". Journal of the Audio Engineering Society. 14 (4): 332–342.
51. "RMI Harmonic Synthesizer". Synthmuseum.com. Archived from the original on 9 June 2011. Retrieved 12 May 2011.
52. Reid, Gordon (December 2011). "PROG SPAWN! The Rise And Fall Of Rocky Mount Instruments (Retro)". Sound On Sound. Retrieved 22 January 2012.
53. Flint, Tom (February 2008). "Jean Michel Jarre: 30 Years Of Oxygene". Sound On Sound. Retrieved 22 January 2012.
54. "Allen Organ Company", fundinguniverse.com
55. Cosimi, Enrico (20 May 2009). "EMS Story - Prima Parte". Audio Accordo.it (in Italian). Retrieved 21 January 2012.
56. Hinton, Graham (2002). "EMS: The Inside Story". Electronic Music Studios (Cornwall).
57. The New Sound of Music (TV). UK: BBC. 1979. Includes a demonstration of DOB and AFB.
58. Leete, Norm (April 1999). "Fairlight Computer – Musical Instrument (Retro)". Sound On Sound. Retrieved 29 January 2012.
59. Twyman, John (1 November 2004). (inter)facing the music: The history of the Fairlight Computer Musical Instrument (pdf) (Bachelor of Science (Honours) thesis). Unit for the History and Philosophy of Science, University of Sydney. Retrieved 29 January 2012.
60. Street, Rita (8 November 2000). "Fairlight: A 25-year long fairytale". Audio Media magazine. IMAS Publishing UK. Archived from the original on 8 October 2003. Retrieved 29 January 2012.
61. "Computer Music Journal" (JPG). 1978. Retrieved 29 January 2012.
62. Leider, Colby (2004). "The Development of the Modern DAW". Digital Audio Workstation. McGraw-Hill. p. 58.
63. Joel Chadabe (1997). Electric Sound. Upper Saddle River, N.J., U.S.A.: Prentice Hall. pp. 177–178, 186. ISBN 978-0-13-303231-4.
