K-d tree: Difference between revisions

m →Complexity: Journal cites, Added 1 doi to a journal cite using AWB (10870) |

Le Creusot (talk | contribs) m Added a sentence. |

||

| Line 109: | Line 109: | ||

This algorithm creates the [[invariant (computer science)|invariant]] that for any node, all the nodes in the left [[subtree]] are on one side of a splitting [[plane (mathematics)|plane]], and all the nodes in the right subtree are on the other side. Points that lie on the splitting plane may appear on either side. The splitting plane of a node goes through the point associated with that node (referred to in the code as ''node.location''). |

This algorithm creates the [[invariant (computer science)|invariant]] that for any node, all the nodes in the left [[subtree]] are on one side of a splitting [[plane (mathematics)|plane]], and all the nodes in the right subtree are on the other side. Points that lie on the splitting plane may appear on either side. The splitting plane of a node goes through the point associated with that node (referred to in the code as ''node.location''). |

||

Alternative tree-building algorithms build a balanced {{nobr|''k''-d tree}} for the purpose of three-dimensional graphics ray tracing. These algorithms build |

Alternative tree-building algorithms build a balanced {{nobr|''k''-d tree}} for the purpose of three-dimensional graphics ray tracing. These algorithms build a {{nobr|''k''-d tree}} in expected, but not proven, [[Big O notation|O]](''n'' log ''n'') time by presorting ''n'' triangles prior to building the tree, thereby eliminating the costly step of finding the median at each level of subdivision.<ref>{{cite journal | author = Wald I, Havran V | title = On building fast kd-trees for ray tracing, and on doing that in O(N log N) | journal = In: Proceedings of the 2006 IEEE Symposium on Interactive Ray Tracing | pages = 61–69 | date = September 2006 | url = http://dcgi.felk.cvut.cz/home/havran/ARTICLES/ingo06rtKdtree.pdf | doi = 10.1109/RT.2006.280216}}</ref><ref>{{cite journal | author = Havran V, Bittner J | title = On improving k-d trees for ray shooting | journal = In: Proceedings of the WSCG | pages = 209–216 | date = 2002 | url = http://dcgi.felk.cvut.cz/home/bittner/publications/wscg02.pdf}}</ref> A {{nobr|''k''-d}} tree-building algorithm that sorts points presorts ''n'' points in each of ''k'' dimensions using an [[Big O notation|O]](''n'' log ''n'') sort such as [[Heapsort]] or [[Mergesort]] prior to building the tree and has worst-case complexity of [[Big O notation|O]](''kn'' log ''n'').<ref name="knlogn">{{cite journal | author = Brown R.A. | title = Building a balanced {{nobr|''k''-d tree}} in {{math|O(''kn'' log ''n'')}} time | journal = Journal of Computer Graphics Techniques | pages = 50-68 | volume = 4 | issue = 1 | date = 2015 | url = http://jcgt.org/published/0004/01/03/}}</ref> |

||

=== Adding elements === |

=== Adding elements === |

||

| Line 178: | Line 178: | ||

* Building a static ''k''-d tree from ''n'' points takes: |

* Building a static ''k''-d tree from ''n'' points takes: |

||

** [[Big O notation|O]](''n'' log<sup>2</sup> ''n'') time if an O(''n'' log ''n'') sort such as [[Heapsort]] is used to compute the median at each level; |

** [[Big O notation|O]](''n'' log<sup>2</sup> ''n'') time if an [[Big O notation|O]](''n'' log ''n'') sort such as [[Heapsort]] is used to compute the median at each level; |

||

** O(''n'' log ''n'') time if [[Quickselect]]<ref>{{cite journal | author = Blum M, Floyd RW, Pratt V, Rivest RL, Tarjan RE | title = Time bounds for selection | journal = Journal of Computer and System Sciences | volume = 7 | pages = 448–461 | date = 1973 | doi=10.1016/s0022-0000(73)80033-9}}</ref><ref>{{Introduction to Algorithms}} Chapter 10.</ref> is used; |

** [[Big O notation|O]](''n'' log ''n'') time if [[Quickselect]]<ref>{{cite journal | author = Blum M, Floyd RW, Pratt V, Rivest RL, Tarjan RE | title = Time bounds for selection | journal = Journal of Computer and System Sciences | volume = 7 | pages = 448–461 | date = 1973 | doi=10.1016/s0022-0000(73)80033-9}}</ref><ref>{{Introduction to Algorithms}} Chapter 10.</ref> is used; |

||

** O(''kn'' log ''n'') time if ''n'' points are |

** [[Big O notation|O]](''kn'' log ''n'') time if ''n'' points are presorted in each of ''k'' dimensions using an [[Big O notation|O]](''n'' log ''n'') sort such as [[Heapsort]] or [[Mergesort]] prior to building the {{nobr|''k''-d tree}}.<ref name = "knlogn"/> |

||

* Inserting a new point into a balanced ''k''-d tree takes O(log ''n'') time. |

* Inserting a new point into a balanced ''k''-d tree takes O(log ''n'') time. |

||

Revision as of 14:25, 9 April 2015

| k-d tree | ||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Type | Multidimensional BST | |||||||||||||||||||||||

| Invented | 1975 | |||||||||||||||||||||||

| Invented by | Jon Louis Bentley | |||||||||||||||||||||||

| ||||||||||||||||||||||||

In computer science, a k-d tree (short for k-dimensional tree) is a space-partitioning data structure for organizing points in a k-dimensional space. k-d trees are a useful data structure for several applications, such as searches involving a multidimensional search key (e.g. range searches and nearest neighbor searches). k-d trees are a special case of binary space partitioning trees.

Informal description

The k-d tree is a binary tree in which every node is a dimensional point. Every non-leaf node can be thought of as implicitly generating a splitting hyperplane that divides the space into two parts, known as half-spaces. Points to the left of this hyperplane are represented by the left subtree of that node and points right of the hyperplane are represented by the right subtree. The hyperplane direction is chosen in the following way: every node in the tree is associated with one of the k-dimensions, with the hyperplane perpendicular to that dimension's axis. So, for example, if for a particular split the "x" axis is chosen, all points in the subtree with a smaller "x" value than the node will appear in the left subtree and all points with larger "x" value will be in the right subtree. In such a case, the hyperplane would be set by the x-value of the point, and its normal would be the unit x-axis.[1]

Operations on k-d trees

Construction

Since there are many possible ways to choose axis-aligned splitting planes, there are many different ways to construct k-d trees. The canonical method of k-d tree construction has the following constraints:[2]

- As one moves down the tree, one cycles through the axes used to select the splitting planes. (For example, in a 3-dimensional tree, the root would have an x-aligned plane, the root's children would both have y-aligned planes, the root's grandchildren would all have z-aligned planes, the root's great-grandchildren would all have x-aligned planes, the root's great-great-grandchildren would all have y-aligned planes, and so on.)

- Points are inserted by selecting the median of the points being put into the subtree, with respect to their coordinates in the axis being used to create the splitting plane. (Note the assumption that we feed the entire set of n points into the algorithm up-front.)

This method leads to a balanced k-d tree, in which each leaf node is about the same distance from the root. However, balanced trees are not necessarily optimal for all applications.

Note also that it is not required to select the median point. In that case, the result is simply that there is no guarantee that the tree will be balanced. A simple heuristic to avoid coding a complex linear-time median-finding algorithm, or using an O(n log n) sort of all n points, is to use sort to find the median of a fixed number of randomly selected points to serve as the splitting plane. In practice, this technique often results in nicely balanced trees.

Given a list of n points, the following algorithm uses a median-finding sort to construct a balanced k-d tree containing those points.

function kdtree (list of points pointList, int depth)

{

// Select axis based on depth so that axis cycles through all valid values

var int axis := depth mod k;

// Sort point list and choose median as pivot element

select median by axis from pointList;

// Create node and construct subtrees

var tree_node node;

node.location := median;

node.leftChild := kdtree(points in pointList before median, depth+1);

node.rightChild := kdtree(points in pointList after median, depth+1);

return node;

}

It is common that points "after" the median include only the ones that are strictly greater than the median. For points that lie on the median, it is possible to define a "superkey" function that compares the points in all dimensions. In some cases, it is acceptable to let points equal to the median lie on one side of the median, for example, by splitting the points into a "lesser than" subset and a "greater than or equal to" subset.

|

The above algorithm implemented in the Python programming language is as follows: from collections import namedtuple

from operator import itemgetter

from pprint import pformat

class Node(namedtuple('Node', 'location left_child right_child')):

def __repr__(self):

return pformat(tuple(self))

def kdtree(point_list, depth=0):

try:

k = len(point_list[0]) # assumes all points have the same dimension

except IndexError as e: # if not point_list:

return None

# Select axis based on depth so that axis cycles through all valid values

axis = depth % k

# Sort point list and choose median as pivot element

point_list.sort(key=itemgetter(axis))

median = len(point_list) // 2 # choose median

# Create node and construct subtrees

return Node(

location=point_list[median],

left_child=kdtree(point_list[:median], depth + 1),

right_child=kdtree(point_list[median + 1:], depth + 1)

)

def main():

"""Example usage"""

point_list = [(2,3), (5,4), (9,6), (4,7), (8,1), (7,2)]

tree = kdtree(point_list)

print(tree)

if __name__ == '__main__':

main()

Output would be: ((7, 2),

((5, 4), ((2, 3), None, None), ((4, 7), None, None)),

((9, 6), ((8, 1), None, None), None))

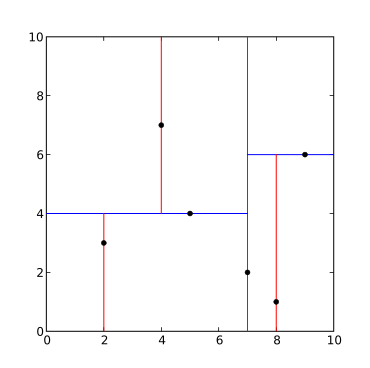

The generated tree is shown below.  (2,3), (5,4), (9,6), (4,7), (8,1), (7,2). |

This algorithm creates the invariant that for any node, all the nodes in the left subtree are on one side of a splitting plane, and all the nodes in the right subtree are on the other side. Points that lie on the splitting plane may appear on either side. The splitting plane of a node goes through the point associated with that node (referred to in the code as node.location).

Alternative tree-building algorithms build a balanced k-d tree for the purpose of three-dimensional graphics ray tracing. These algorithms build a k-d tree in expected, but not proven, O(n log n) time by presorting n triangles prior to building the tree, thereby eliminating the costly step of finding the median at each level of subdivision.[3][4] A k-d tree-building algorithm that sorts points presorts n points in each of k dimensions using an O(n log n) sort such as Heapsort or Mergesort prior to building the tree and has worst-case complexity of O(kn log n).[5]

Adding elements

This section needs expansion. You can help by adding to it. (November 2008) |

One adds a new point to a k-d tree in the same way as one adds an element to any other search tree. First, traverse the tree, starting from the root and moving to either the left or the right child depending on whether the point to be inserted is on the "left" or "right" side of the splitting plane. Once you get to the node under which the child should be located, add the new point as either the left or right child of the leaf node, again depending on which side of the node's splitting plane contains the new node.

Adding points in this manner can cause the tree to become unbalanced, leading to decreased tree performance. The rate of tree performance degradation is dependent upon the spatial distribution of tree points being added, and the number of points added in relation to the tree size. If a tree becomes too unbalanced, it may need to be re-balanced to restore the performance of queries that rely on the tree balancing, such as nearest neighbour searching.

Removing elements

This section needs expansion. You can help by adding to it. (February 2011) |

To remove a point from an existing k-d tree, without breaking the invariant, the easiest way is to form the set of all nodes and leaves from the children of the target node, and recreate that part of the tree.

Another approach is to find a replacement for the point removed.[6] First, find the node R that contains the point to be removed. For the base case where R is a leaf node, no replacement is required. For the general case, find a replacement point, say p, from the subtree rooted at R. Replace the point stored at R with p. Then, recursively remove p.

For finding a replacement point, if R discriminates on x (say) and R has a right child, find the point with the minimum x value from the subtree rooted at the right child. Otherwise, find the point with the maximum x value from the subtree rooted at the left child.

Balancing

Balancing a k-d tree requires care because k-d trees are sorted in multiple dimensions so the tree rotation technique cannot be used to balance them as this may break the invariant.

Several variants of balanced k-d trees exist. They include divided k-d tree, pseudo k-d tree, k-d B-tree, hB-tree and Bkd-tree. Many of these variants are adaptive k-d trees.

Nearest neighbour search

The nearest neighbour search (NN) algorithm aims to find the point in the tree that is nearest to a given input point. This search can be done efficiently by using the tree properties to quickly eliminate large portions of the search space.

Searching for a nearest neighbour in a k-d tree proceeds as follows:

- Starting with the root node, the algorithm moves down the tree recursively, in the same way that it would if the search point were being inserted (i.e. it goes left or right depending on whether the point is lesser than or greater than the current node in the split dimension).

- Once the algorithm reaches a leaf node, it saves that node point as the "current best"

- The algorithm unwinds the recursion of the tree, performing the following steps at each node:

- If the current node is closer than the current best, then it becomes the current best.

- The algorithm checks whether there could be any points on the other side of the splitting plane that are closer to the search point than the current best. In concept, this is done by intersecting the splitting hyperplane with a hypersphere around the search point that has a radius equal to the current nearest distance. Since the hyperplanes are all axis-aligned this is implemented as a simple comparison to see whether the difference between the splitting coordinate of the search point and current node is lesser than the distance (overall coordinates) from the search point to the current best.

- If the hypersphere crosses the plane, there could be nearer points on the other side of the plane, so the algorithm must move down the other branch of the tree from the current node looking for closer points, following the same recursive process as the entire search.

- If the hypersphere doesn't intersect the splitting plane, then the algorithm continues walking up the tree, and the entire branch on the other side of that node is eliminated.

- When the algorithm finishes this process for the root node, then the search is complete.

Generally the algorithm uses squared distances for comparison to avoid computing square roots. Additionally, it can save computation by holding the squared current best distance in a variable for comparison.

Finding the nearest point is an O(log N) operation in the case of randomly distributed points, although analysis in general is tricky. However an algorithm has been given that claims guaranteed O(log N) complexity.[7]

In high-dimensional spaces, the curse of dimensionality causes the algorithm to need to visit many more branches than in lower-dimensional spaces. In particular, when the number of points is only slightly higher than the number of dimensions, the algorithm is only slightly better than a linear search of all of the points.

The algorithm can be extended in several ways by simple modifications. It can provide the k nearest neighbours to a point by maintaining k current bests instead of just one. A branch is only eliminated when k points have been found and the branch cannot have points closer than any of the k current bests.

It can also be converted to an approximation algorithm to run faster. For example, approximate nearest neighbour searching can be achieved by simply setting an upper bound on the number points to examine in the tree, or by interrupting the search process based upon a real time clock (which may be more appropriate in hardware implementations). Nearest neighbour for points that are in the tree already can be achieved by not updating the refinement for nodes that give zero distance as the result, this has the downside of discarding points that are not unique, but are co-located with the original search point.

Approximate nearest neighbour is useful in real-time applications such as robotics due to the significant speed increase gained by not searching for the best point exhaustively. One of its implementations is best-bin-first search.

Range search

A range search searches for ranges of parameters. For example, if a tree is storing values corresponding to income and age, then a range search might be something like looking for all members of the tree which have an age between 20 and 50 years and an income between 50,000 and 80,000. Since k-d trees divide the range of a domain in half at each level of the tree, they are useful for performing range searches.

Analyses of binary search trees has found that the worst case time for range search in a k-dimensional KD tree containing N nodes is given by the following equation.[8]

High-dimensional data

k-d trees are not suitable for efficiently finding the nearest neighbour in high-dimensional spaces. As a general rule, if the dimensionality is k, the number of points in the data, N, should be N >> 2k. Otherwise, when k-d trees are used with high-dimensional data, most of the points in the tree will be evaluated and the efficiency is no better than exhaustive search,[9] and approximate nearest-neighbour methods should be used instead.

Complexity

- Building a static k-d tree from n points takes:

- O(n log2 n) time if an O(n log n) sort such as Heapsort is used to compute the median at each level;

- O(n log n) time if Quickselect[10][11] is used;

- O(kn log n) time if n points are presorted in each of k dimensions using an O(n log n) sort such as Heapsort or Mergesort prior to building the k-d tree.[5]

- Inserting a new point into a balanced k-d tree takes O(log n) time.

- Removing a point from a balanced k-d tree takes O(log n) time.

- Querying an axis-parallel range in a balanced k-d tree takes O(n1-1/k +m) time, where m is the number of the reported points, and k the dimension of the k-d tree.

- Finding 1 nearest neighbour in a balanced k-d tree with randomly distributed points takes O(log n) time on average.

Variations

Volumetric objects

Instead of points, a k-d tree can also contain rectangles or hyperrectangles.[12][13] Thus range search becomes the problem of returning all rectangles intersecting the search rectangle. The tree is constructed the usual way with all the rectangles at the leaves. In an orthogonal range search, the opposite coordinate is used when comparing against the median. For example, if the current level is split along xhigh, we check the xlow coordinate of the search rectangle. If the median is less than the xlow coordinate of the search rectangle, then no rectangle in the left branch can ever intersect with the search rectangle and so can be pruned. Otherwise both branches should be traversed. See also interval tree, which is a 1-dimensional special case.

Points only in leaves

It is also possible to define a k-d tree with points stored solely in leaves.[2] This form of k-d tree allows a variety of split mechanics other than the standard median split. The midpoint splitting rule[14] selects on the middle of the longest axis of the space being searched, regardless of the distribution of points. This guarantees that the aspect ratio will be at most 2:1, but the depth is dependent on the distribution of points. A variation, called sliding-midpoint, only splits on the middle if there are points on both sides of the split. Otherwise, it splits on point nearest to the middle. Maneewongvatana and Mount show that this offers "good enough" performance on common data sets. Using sliding-midpoint, an approximate nearest neighbour query can be answered in . Approximate range counting can be answered in with this method.

See also

- implicit k-d tree, a k-d tree defined by an implicit splitting function rather than an explicitly-stored set of splits

- min/max k-d tree, a k-d tree that associates a minimum and maximum value with each of its nodes

- Ntropy, computer library for the rapid development of algorithms that uses a kd-tree for running on a parallel computer

- Octree, a higher-dimensional generalization of a quadtree

- Quadtree, a space-partitioning structure that splits at the geometric midpoint rather than the median coordinate

- R-tree and bounding interval hierarchy, structure for partitioning objects rather than points, with overlapping regions

- Recursive partitioning, a technique for constructing statistical decision trees that are similar to k-d trees

- Klee's measure problem, a problem of computing the area of a union of rectangles, solvable using k-d trees

- Guillotine problem, a problem of finding a k-d tree whose cells are large enough to contain a given set of rectangles

References

- ^ Attention: This template ({{cite doi}}) is deprecated. To cite the publication identified by doi:10.1145/361002.361007, please use {{cite journal}} (if it was published in a bona fide academic journal, otherwise {{cite report}} with

|doi=10.1145/361002.361007instead. - ^ a b Attention: This template ({{cite doi}}) is deprecated. To cite the publication identified by doi:10.1007/978-3-540-77974-2_5, please use {{cite journal}} (if it was published in a bona fide academic journal, otherwise {{cite report}} with

|doi=10.1007/978-3-540-77974-2_5instead. - ^ Wald I, Havran V (September 2006). "On building fast kd-trees for ray tracing, and on doing that in O(N log N)" (PDF). In: Proceedings of the 2006 IEEE Symposium on Interactive Ray Tracing: 61–69. doi:10.1109/RT.2006.280216.

- ^ Havran V, Bittner J (2002). "On improving k-d trees for ray shooting" (PDF). In: Proceedings of the WSCG: 209–216.

- ^ a b Brown R.A. (2015). "Building a balanced k-d tree in O(kn log n) time". Journal of Computer Graphics Techniques. 4 (1): 50–68.

- ^ Chandran, Sharat. Introduction to kd-trees. University of Maryland Department of Computer Science.

- ^ Attention: This template ({{cite doi}}) is deprecated. To cite the publication identified by doi:10.1145/355744.355745, please use {{cite journal}} (if it was published in a bona fide academic journal, otherwise {{cite report}} with

|doi=10.1145/355744.355745instead. - ^ Attention: This template ({{cite doi}}) is deprecated. To cite the publication identified by doi:10.1007/BF00263763, please use {{cite journal}} (if it was published in a bona fide academic journal, otherwise {{cite report}} with

|doi=10.1007/BF00263763instead. - ^ Jacob E. Goodman, Joseph O'Rourke and Piotr Indyk (Ed.) (2004). "Chapter 39 : Nearest neighbours in high-dimensional spaces". Handbook of Discrete and Computational Geometry (2nd ed.). CRC Press.

- ^ Blum M, Floyd RW, Pratt V, Rivest RL, Tarjan RE (1973). "Time bounds for selection". Journal of Computer and System Sciences. 7: 448–461. doi:10.1016/s0022-0000(73)80033-9.

{{cite journal}}: CS1 maint: multiple names: authors list (link) - ^ Cormen, Thomas H.; Leiserson, Charles E.; Rivest, Ronald L. Introduction to Algorithms. MIT Press and McGraw-Hill. Chapter 10.

- ^ Attention: This template ({{cite doi}}) is deprecated. To cite the publication identified by doi:10.1109/TCAD.1985.1270098, please use {{cite journal}} (if it was published in a bona fide academic journal, otherwise {{cite report}} with

|doi=10.1109/TCAD.1985.1270098instead. - ^ Attention: This template ({{cite doi}}) is deprecated. To cite the publication identified by doi:10.1007/BF01952830, please use {{cite journal}} (if it was published in a bona fide academic journal, otherwise {{cite report}} with

|doi=10.1007/BF01952830instead. - ^ S. Maneewongvatana and D. M. Mount. It's okay to be skinny, if your friends are fat. 4th Annual CGC Workshop on Computational Geometry, 1999.

External links

- libkdtree++, an open-source STL-like implementation of k-d trees in C++.

- A tutorial on KD Trees

- FLANN and its fork nanoflann, efficient C++ implementations of k-d tree algorithms.

- Spatial C++ Library, a generic implementation of k-d tree as multi-dimensional containers, algorithms, in C++.

- kdtree A simple C library for working with KD-Trees

- K-D Tree Demo, Java applet

- libANN Approximate Nearest Neighbour Library includes a k-d tree implementation

- Caltech Large Scale Image Search Toolbox: a Matlab toolbox implementing randomized k-d tree for fast approximate nearest neighbour search, in addition to LSH, Hierarchical K-Means, and Inverted File search algorithms.

- Heuristic Ray Shooting Algorithms, pp. 11 and after

- Into contains open source implementations of exact and approximate (k)NN search methods using k-d trees in C++.

- Math::Vector::Real::kdTree Perl implementation of k-d trees.