Aviation accident analysis: Difference between revisions

Yiningou22 (talk | contribs) heading change |

Yiningou22 (talk | contribs) reference |


Revision as of 18:09, 2 December 2015

Aviation accident analysis is performed to determine the cause of errors once an accident has happened. In the modern aviation industry, it is also used to analyze accident databases in order to prevent accidents from happening. Many models have been used not only for accident investigation but also for educational purposes.[1]

Human factors

In the aviation industry, human error is the major cause of accidents. About 38% of 329 major airline crashes, 74% of 1627 commuter/air taxi crashes, and 85% of 27935 general aviation crashes were related to pilot error.[2] The Swiss cheese model is an accident causation model that analyzes accidents primarily from the human factors perspective.[3][4]
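As a rough illustration of what those percentages mean in absolute terms, the sketch below multiplies each share by its category total. The names `CRASH_STATS` and `estimated_pilot_error_crashes` are illustrative, and the rounded counts are approximations derived from the figures quoted above, not numbers reported by the cited source.

```python
# Crash totals and pilot-error shares as quoted in the paragraph above.
CRASH_STATS = {
    "major airline": (329, 0.38),
    "commuter/air taxi": (1627, 0.74),
    "general aviation": (27935, 0.85),
}


def estimated_pilot_error_crashes(stats):
    """Return the approximate number of pilot-error crashes per category."""
    return {name: round(total * share) for name, (total, share) in stats.items()}


estimates = estimated_pilot_error_crashes(CRASH_STATS)
for name, count in estimates.items():
    print(f"{name}: about {count} crashes related to pilot error")
```

Because the published percentages are themselves rounded, the resulting counts should be read as estimates only.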

Reason's model

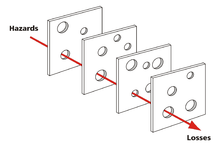

Reason's model, commonly referred to as the Swiss cheese model, was based on Reason's approach that all organizations should work together to ensure a safe and efficient operation.[1] From the pilot's perspective, in order to maintain a safe flight operation, all human and mechanical elements must co-operate effectively in the system. In Reason's model, the holes represent weaknesses or failures. These holes will not lead directly to an accident, because of the existence of defense layers. However, once all the holes line up, an accident will occur.[5]

There are four layers in this model: organizational influences, unsafe supervision, preconditions and unsafe acts.

- Organizational influences: This layer concerns resource management, organizational climate and organizational process. For example, a crew underestimating the cost of maintenance will leave the airplane and equipment in bad condition.

- Unsafe supervision: This layer includes inadequate supervision, inappropriate operations, failure to correct a problem and supervisory violation. For example, if emergency procedure training is not provided to a new employee, it will increase the potential risk of a fatal accident.

- Unsafe action: An unsafe action is not the direct cause of an accident. Certain preconditions lead to unsafe actions; an unstable mental state, for example, is one cause of bad decisions.

- Error and violation: These are part of unsafe action. An error occurs when an individual fails to perform the correct action to achieve an outcome. A violation is the breaking of a rule or regulation. Together, these four layers form the basic components of the Swiss cheese model, and accident analysis can be performed by tracing all of these factors.[1][6][7]
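The idea that an accident occurs only when the holes in every layer line up can be sketched in a few lines of code. This is a minimal illustration, not part of Reason's model itself: the `Layer` class, the hazard labels, and the function names are all assumptions introduced here, and each layer's "holes" are represented as the set of hazards that the layer fails to stop.

```python
from dataclasses import dataclass, field

# Layer names follow the four layers listed above.
LAYERS = (
    "organizational influences",
    "unsafe supervision",
    "preconditions",
    "unsafe acts",
)


@dataclass
class Layer:
    name: str
    # Hypothetical representation: hazard labels this layer fails to stop.
    holes: set = field(default_factory=set)


def unblocked_hazards(layers):
    """Return the hazards that pass through a hole in every layer."""
    if not layers:
        return set()
    return set.intersection(*(layer.holes for layer in layers))


def accident_occurs(layers):
    """An accident occurs only when at least one hazard passes every layer."""
    return bool(unblocked_hazards(layers))


# Illustrative hazard labels; they are not taken from any accident report.
layers = [
    Layer(LAYERS[0], {"fatigue", "stall"}),
    Layer(LAYERS[1], {"stall"}),
    Layer(LAYERS[2], {"fatigue", "stall"}),
    Layer(LAYERS[3], {"fatigue"}),
]
before = accident_occurs(layers)  # the last layer still stops "stall"
layers[3].holes.add("stall")      # a hole for "stall" opens in the last layer
after = accident_occurs(layers)   # "stall" now passes through all four layers
print(before, after)
```

Using set intersection keeps the sketch close to the picture of aligned holes: a hazard gets through only if no layer blocks it.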

Investigation using Reason's model

Based on Reason's model, accident investigators analyze the accident from all four layers to determine the cause of the accident. There are two main types of failure investigators will focus on: active failure and latent failure.

- Active failure is an unsafe act by an individual that leads directly to an accident. Investigators identify pilot error first. Mishandling an emergency procedure, misunderstanding instructions, failing to set the proper flaps for landing, and ignoring an in-flight warning system are a few examples of active failure. Active failure differs from latent failure in that its effects show up immediately.

- Latent failure usually originates with high-level management. Investigators may overlook this kind of failure because it can remain undetected for a long time.[8] When investigating latent failure, investigators assess three levels. The first is the factor that directly affects the operator's behavior: preconditions such as fatigue and illness. On February 12, 2009, a Colgan Air Bombardier DHC-8-400 was on approach to Buffalo-Niagara International Airport. The pilots were fatigued, and their inattentiveness cost the lives of everyone on board and one person on the ground when the aircraft crashed near the airport.[9] The investigation suggested that both pilots were tired and that their conversation was unrelated to the flight operation, which indirectly caused the accident.[10][11] At the second level, investigators track precursors of accidents related to latent threats. On June 1, 2009, Air France 447 crashed into the Atlantic Ocean, killing all 228 people on board. Analysis of the black box indicated that the airplane was being flown by an inexperienced co-pilot who raised the nose too high and induced a stall.[12] Letting an inexperienced pilot fly the airplane by himself is a case of unsafe supervision. The last area that a latent failure investigation assesses is organizational failure. For example, if an airline decides to cut spending on pilot training, the resulting lack of training leads directly to inexperienced pilots.[13]

To fully understand the cause of an accident, all of these steps need to be performed. Because an investigation proceeds in the opposite direction from the way the accident developed, investigators need to work backward through Reason's model.
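The backward direction of an investigation can be sketched as follows, assuming each finding has already been assigned to one of the four layers. The function names and the sample findings are hypothetical and only loosely modelled on the examples in this section. Findings in the unsafe-acts layer are treated as active failures and everything further back as latent failures.

```python
# Layers ordered from the outermost defence to the final unsafe act.
LAYER_ORDER = [
    "organizational influences",
    "unsafe supervision",
    "preconditions",
    "unsafe acts",
]


def failure_type(layer):
    """Classify a finding as active (unsafe acts) or latent (any other layer)."""
    if layer not in LAYER_ORDER:
        raise ValueError(f"unknown layer: {layer}")
    return "active" if layer == "unsafe acts" else "latent"


def trace_backward(findings):
    """Sort (layer, description) findings from unsafe acts back to the organization."""
    return sorted(findings, key=lambda f: LAYER_ORDER.index(f[0]), reverse=True)


# Hypothetical findings used only to demonstrate the ordering.
findings = [
    ("organizational influences", "pilot training budget reduced"),
    ("unsafe acts", "nose raised too high, inducing a stall"),
    ("preconditions", "crew fatigue"),
    ("unsafe supervision", "inexperienced co-pilot left in control"),
]

ordered = trace_backward(findings)
for layer, description in ordered:
    print(f"{failure_type(layer):6} | {layer}: {description}")
```

The ordering starts with the immediately visible active failure and ends with the organizational decision that made it possible.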

Related reading

References

- ^ a b c Wiegmann, Douglas A (2003). "A Human Error Approach to Aviation Accident Analysis: The Human Factors Analysis and Classification System" (PDF). Ashgate Publishing Limited. Retrieved Oct 26, 2015.

- ^ Li, Guohua (Feb 2001). "Factors associated with pilot error in aviation crashes" (PDF). Research Gate. Retrieved Oct 26, 2015.

- ^ Salmon, P. M. (2012). "Systems-based analysis methods: a comparison of AcciMap, HFACS, and STAMP". Safety Science.

- ^ Underwood, Peter; Waterson, Patrick (2014-07-01). "Systems thinking, the Swiss Cheese Model and accident analysis: A comparative systemic analysis of the Grayrigg train derailment using the ATSB, AcciMap and STAMP models". Accident Analysis & Prevention. Systems thinking in workplace safety and health. 68: 75–94. doi:10.1016/j.aap.2013.07.027.

- ^ Roelen, A. L. C.; Lin, P. H.; Hale, A. R. (2011-01-01). "Accident models and organisational factors in air transport: The need for multi-method models". Safety Science. The gift of failure: New approaches to analyzing and learning from events and near-misses – Honoring the contributions of Bernhard Wilpert. 49 (1): 5–10. doi:10.1016/j.ssci.2010.01.022.

- ^ Qureshi, Zahid H (Jan 2008). "A Review of Accident Modelling Approaches for Complex Critical Sociotechnical Systems". Defense Science and Technology Organisation.

- ^ Mearns, Kathryn J.; Flin, Rhona (March 1999). "Assessing the state of organizational safety—culture or climate?". Current Psychology. 18 (1): 5–17. doi:10.1007/s12144-999-1013-3.

- ^ Reason, James (2000-03-18). "Human error: models and management". BMJ : British Medical Journal. 320 (7237): 768–770. ISSN 0959-8138. PMC 1117770. PMID 10720363.

- ^ "Aviation Accident Report AAR-10-01". www.ntsb.gov. Retrieved 2015-10-26.

- ^ "A double tragedy: Colgan Air Flight 3407 – Air Facts Journal". Air Facts Journal. Retrieved 2015-10-26.

- ^ Caldwell, John A. (2012-04-01). "Crew Schedules, Sleep Deprivation, and Aviation Performance". Current Directions in Psychological Science. 21 (2): 85–89. doi:10.1177/0963721411435842. ISSN 0963-7214.

- ^ Landrigan, L.C.; Wade, J.P.; Milewski, A.; Reagor, B. (2013-11-01). "Lessons from the past: Inaccurate credibility assessments made during crisis situations". 2013 IEEE International Conference on Technologies for Homeland Security (HST): 754–759. doi:10.1109/THS.2013.6699098.

- ^ Haddad, Ziad S.; Park, Kyung-Won (23 June 2010). "Vertical profiling of tropical precipitation using passive microwave observations and its implications regarding the crash of Air France 447". Journal of Geophysical Research. 115 (D12). doi:10.1029/2009JD013380.