Timsort

| Class | Sorting algorithm |

|---|---|

| Data structure | Array |

| Worst-case performance | [1] |

| Best-case performance | [2] |

| Average performance | |

| Worst-case space complexity | |

| Optimal | Yes |

Timsort is a hybrid stable sorting algorithm, derived from merge sort and insertion sort, designed to perform well on many kinds of real-world data. It uses techniques from Peter McIlroy's "Optimistic Sorting and Information Theoretic Complexity", in Proceedings of the Fourth Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 467–474, January 1993. It was implemented by Tim Peters in 2002 for use in the Python programming language. The algorithm finds subsequences of the data that are already ordered, and uses that knowledge to sort the remainder more efficiently. This is done by merging an identified subsequence, called a run, with existing runs until certain criteria are fulfilled. Timsort has been Python's standard sorting algorithm since version 2.3. It is also used to sort arrays of non-primitive type in Java SE 7,[3] on the Android platform,[4] and in GNU Octave.[5]

Operation

Timsort was designed to take advantage of partial orderings that already exist in most real-world data. Timsort operates by finding runs, subsequences of at least two elements that are either non-descending (each element is equal to or greater than its predecessor) or strictly descending (each element is lower than its predecessor). If it is descending, it must be strictly descending, since descending runs are later reversed by a simple swap of elements from both ends converging in the middle. After obtaining such a run in the given array, Timsort processes it, and then searches for the next run.

Minrun

A natural run is a subsequence that is already ordered. Natural runs in real-world data may be of varied lengths. Timsort chooses a sorting technique depending on the length of the run. For example, if the run length is smaller than a certain value, insertion sort is used. Thus Timsort is an adaptive sort.[6]

The size of the run is checked against the minimum run size. The minimum run size (minrun) depends on the size of the data being sorted, with a minimum of 64. So, for fewer than 64 elements, Timsort reduces to an insertion sort. For larger arrays, minrun is chosen from the range 32 to 64 inclusive, such that the size of the array, divided by minrun, is equal to, or slightly smaller than, a power of two. The final algorithm takes the six most significant bits of the size of the array, adds one if any of the remaining bits are set, and uses that result as the minrun. This algorithm works for all arrays, including those smaller than 64.[6]

Insertion sort

When an array is random, natural runs most likely contain fewer than minrun elements. In this case, an appropriate number of succeeding elements is selected, and an insertion sort increases the size of the run to minrun size. Thus, most runs in a random array are, or become, minrun in length. This results in efficient, balanced merges. It also results in a reasonable number of function calls in the implementation of the sort.[7]

Merge memory

Once run lengths are optimized, the runs are merged. When a run is found, the algorithm pushes its base address and length on a stack. A function determines whether the run should be merged with previous runs. Timsort does not merge non-consecutive runs, because doing this would cause the element common to all three runs to become out of order with respect to the middle run.

Thus, merging is always done on consecutive runs. For this, the three top-most runs in the stack which are unsorted are considered. If, say, X, Y, Z represent the lengths of the three uppermost runs in the stack, the algorithm merges the runs so that ultimately the following two rules are satisfied:

- X > Y + Z

- Y > Z[6]

For example, if the first of the two rules is not satisfied by the current run status, that is, if X ≤ Y + Z, then, Y is merged with the smaller of X and Z. The merging continues until both rules are satisfied. Then the algorithm determines the next run.[7]

The rules above aim at maintaining run lengths as close to each other as possible to balance the merges. Only a small number of runs are remembered, as the stack is of a specific size. The algorithm exploits the fresh occurrence of the runs to be merged, in cache memory. Thus a compromise is attained between delaying merging, and exploiting fresh occurrence in cache.

Merging procedure

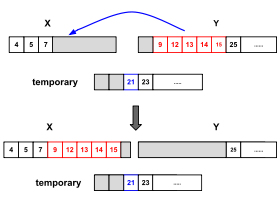

Merging adjacent runs is done with the help of temporary memory. The temporary memory is of the size of the lesser of the two runs. The algorithm copies the smaller of the two runs into this temporary memory and then uses the original memory (of the smaller run) and the memory of the other run to store sorted output.

A simple merge algorithm runs left to right or right to left depending on which run is smaller, on the temporary memory and original memory of the larger run. The final sorted run is stored in the original memory of the two initial runs. Timsort searches for appropriate positions for the starting element of one array in the other using an adaptation of binary search.

Say, for example, two runs A and B are to be merged, with A as the smaller run. In this case a binary search examines A to find the first position larger than the first element of B (a'). Note that A and B are already sorted individually. When a' is found, the algorithm can ignore elements before that position while inserting B. Similarly, the algorithm also looks for the smallest element in B (b') greater than the largest element in A (a"). The elements after b' can also be ignored for the merging. This preliminary searching is not efficient for highly random data, but is efficient in other situations and is hence included.

Galloping mode

Most of the merge occurs in what is called "one pair at a time" mode, where respective elements of both runs are compared. When the algorithm merges left-to-right, the smaller of the two is brought to a merge area. A count of the number of times the final element appears in a given run is recorded. When this value reaches a certain threshold, MIN_GALLOP, the merge switches to "galloping mode". In this mode, we use the previously mentioned adaptation of binary search to identify where the first element of the smaller array must be placed in the larger array (and vice versa). All elements in the larger array that occur before this location can be moved to the merge area as a group (and vice versa). The functions merge-lo and merge-hi increment the value of min-gallop (initialized to MIN_GALLOP), if galloping is not efficient, and decrement it, if it is. If too many consecutive elements come from different runs, galloping mode is exited.[6]

In galloping mode, the algorithm searches for the first element of one array in the other. This is done by comparing that first element (initial element) with the zeroth element of the other array, then the first, the third and so on, that is (2k − 1)th element, so as to get a range of elements between which the initial element will lie. This shortens the range for binary searching, thus increasing efficiency. Galloping proves to be more efficient except in cases with especially long runs, but random data usually has shorter runs. Also, in cases where galloping is found to be less efficient than binary search, galloping mode is exited.

Galloping is not always efficient. One reason is due to excessive function calls. Function calls are expensive and thus when frequent, they affect program efficiency. In some cases galloping mode requires more comparisons than a simple linear search (one at a time search). While for the first few cases both modes may require the same number of comparisons, over time galloping mode requires 33% more comparisons than linear search to arrive at the same results. Moreover, all comparisons in galloping mode are done by function calls.

Galloping is beneficial only when the initial element of one run is not one of the first seven elements of the other run. This implies a MIN_GALLOP of 7. To avoid the drawbacks of galloping mode, the merging functions adjust the value of min-gallop. If the element is from the array currently that has been returning elements, min-gallop is reduced by one. Otherwise, the value is incremented by one, thus discouraging a return to galloping mode. When this is done, in the case of random data, the value of min-gallop becomes so large that galloping mode never recurs.

In the case where merge-hi is used (that is, merging is done right-to-left), galloping starts from the right end of the data, that is, the last element. Galloping from the beginning also gives the required results, but makes more comparisons. Thus, the galloping algorithm uses a variable that gives the index at which galloping should begin. Timsort can enter galloping mode at any index and continue checking at the next index which is offset by 1, 3, 7, ..., (2k − 1)... and so on from the current index. In the case of merge-hi, the offsets to the index will be −1, −3, −7, ....[6]

Analysis

In the worst case, Timsort takes comparisons to sort an array of n elements. In the best case, which occurs when the input is already sorted, it runs in linear time, meaning that it is an adaptive sorting algorithm.[2]

Debugging with formal methods

Researchers discovered using formal verification (KeY) that the three runs mentioned above are not sufficient to hold the invariant for any arbitrary array.[8] The bug was not deemed critical because no current machine could hold a sufficient number of elements, approximately 249 or 562 trillion, to trigger the error. The bug was patched in Python a day later.[9]

References

- ^ Peters, Tim. "[Python-Dev] Sorting". Python Developers Mailinglist. Retrieved 24 February 2011.

[Timsort] also has good aspects: It's stable (items that compare equal retain their relative order, so, e.g., if you sort first on zip code, and a second time on name, people with the same name still appear in order of increasing zip code; this is important in apps that, e.g., refine the results of queries based on user input). ... It has no bad cases (O(N log N) is worst case; N−1 compares is best).

- ^ a b Chandramouli, Badrish; Goldstein, Jonathan (2014). Patience is a Virtue: Revisiting Merge and Sort on Modern Processors. SIGMOD/PODS.

- ^ "[#JDK-6804124] (coll) Replace "modified mergesort" in java.util.Arrays.sort with timsort". JDK Bug System. Retrieved 11 June 2014.

- ^ "Class: java.util.TimSort<T>". Android Gingerbread Documentation. Retrieved 24 February 2011.[dead link]

- ^

"liboctave/util/oct-sort.cc". Mercurial repository of Octave source code. Lines 23-25 of the initial comment block. Retrieved 18 February 2013.

Code stolen in large part from Python's, listobject.c, which itself had no license header. However, thanks to Tim Peters for the parts of the code I ripped-off.

- ^ a b c d e timsort, python. "python_timsort".

- ^ a b MacIver, David R. (11 January 2010). "Understanding timsort, Part 1: Adaptive Mergesort". Retrieved 5 December 2015.

- ^ Proving that Android’s, Java’s and Python’s sorting algorithm is broken (and showing how to fix it)

- ^ Python Issue Tracker – Issue 23515: Bad logic in timsort's merge_collapse

Further reading

- Auger, Nicolas; Nicaud, Cyril; Pivoteau, Carine (2015). "Merge Strategies: from Merge Sort to TimSort". hal-01212839.

External links

- timsort.txt – original explanation by Tim Peters revised branch version

- listobject.c:1910@7b5057b89a56 – Python's Tree implementation

- Visualising Timsort – the source for the image on this page

- Python's listobject.c – the C implementation of Timsort used in CPython

- OpenJDK's TimSort.java – the Java implementation of Timsort

- GNU Octave's oct-sort.cc – the C++ implementation of Timsort used in GNU Octave

- Sort Comparison – a pure Python and Cython implementation of Timsort, among other sorts