Binary heap


Revision as of 16:52, 5 March 2012

A binary heap is a heap data structure created using a binary tree. It can be seen as a binary tree with two additional constraints:

- The shape property: the tree is a complete binary tree; that is, all levels of the tree, except possibly the last one (deepest) are fully filled, and, if the last level of the tree is not complete, the nodes of that level are filled from left to right.

- The heap property: each node is greater than or equal to each of its children according to a comparison predicate defined for the data structure.

Heaps with a mathematical "greater than or equal to" comparison function are called max-heaps; those with a mathematical "less than or equal to" comparison function are called min-heaps. Min-heaps are often used to implement priority queues.[1][2]
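
As a small illustration of a min-heap used as a priority queue, the following sketch uses Python's standard `heapq` module. The task names and priority values are invented for the example, and a lower number is assumed to mean a more urgent task.

```python
import heapq

# Hypothetical (priority, task) pairs; a lower number means higher priority.
tasks = [(3, "write report"), (1, "fix outage"), (2, "review patch")]

queue = []
for item in tasks:
    heapq.heappush(queue, item)   # O(log n) insertion into a min-heap

# Popping repeatedly yields the items in ascending priority order.
served = [heapq.heappop(queue) for _ in range(len(queue))]
print(served)
```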

Since the ordering of siblings in a heap is not specified by the heap property, a single node's two children can be freely interchanged unless doing so violates the shape property (compare with treap).

The binary heap is a special case of the d-ary heap in which d = 2.

Heap operations

Both the insert and remove operations modify the heap to conform to the shape property first, by adding or removing from the end of the heap. Then the heap property is restored by traversing up or down the heap. Both operations take O(log n) time.

Insert

To add an element to a heap we must perform an up-heap operation (also known as bubble-up, percolate-up, sift-up, trickle up, heapify-up, or cascade-up), by following this algorithm:

- Add the element to the bottom level of the heap.

- Compare the added element with its parent; if they are in the correct order, stop.

- If not, swap the element with its parent and return to the previous step.

The worst case is when the new element needs to become the root, requiring one iteration for each level in the tree, which is O(log n). However, since approximately 50% of the elements are leaves and 75% are in the bottom two levels, it is likely that the new element to be inserted will only move a few levels upwards to maintain the heap. Thus, binary heaps support insertion in average constant time, O(1).

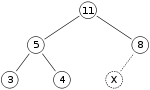

As an example, say we have a max-heap

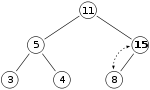

and we want to add the number 15 to the heap. We first place the 15 in the position marked by the X. However, the heap property is violated since 15 is greater than 8, so we need to swap the 15 and the 8. So, we have the heap looking as follows after the first swap:

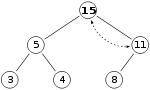

However the heap property is still violated since 15 is greater than 11, so we need to swap again:

which is a valid max-heap. There is no need to check the children after this. Before we placed 15 on X, the heap was valid, meaning 11 is greater than 5. If 15 is greater than 11, and 11 is greater than 5, then 15 must be greater than 5, because of the transitive relation.
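
The up-heap steps can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: the function name `heap_insert` is made up here, the heap is assumed to be a 0-indexed list, and the starting array `[11, 5, 8, 3, 4]` is an assumed layout of the example heap that is consistent with the swaps described above.

```python
def heap_insert(heap, value):
    """Insert value into a max-heap stored in a 0-indexed list (in place)."""
    heap.append(value)                 # step 1: add at the bottom level
    i = len(heap) - 1
    while i > 0:
        parent = (i - 1) // 2
        if heap[parent] >= heap[i]:    # step 2: correct order, stop
            break
        heap[i], heap[parent] = heap[parent], heap[i]   # step 3: swap, repeat
        i = parent

# Assumed array layout of the example heap (root 11 with children 5 and 8).
heap = [11, 5, 8, 3, 4]
heap_insert(heap, 15)
print(heap)   # [15, 5, 11, 3, 4, 8]
```

The loop never inspects the sibling or the children of the moved element, which mirrors the transitivity argument given in the example.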

Remove

The procedure for deleting the root from the heap (effectively extracting the maximum element in a max-heap or the minimum element in a min-heap) and restoring the properties is called down-heap (also known as bubble-down, percolate-down, sift-down, trickle down, heapify-down, or cascade-down).

- Replace the root of the heap with the last element on the last level.

- Compare the new root with its children; if they are in the correct order, stop.

- If not, swap the element with one of its children and return to the previous step. (Swap with its smaller child in a min-heap and its larger child in a max-heap.)

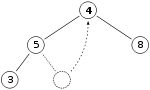

So, if we have the same max-heap as before, we remove the 11 and replace it with the 4.

Now the heap property is violated since 8 is greater than 4. In this case, swapping the two elements, 4 and 8, is enough to restore the heap property and we need not swap elements further:

The downward-moving node is swapped with the larger of its children in a max-heap (in a min-heap it would be swapped with its smaller child), until it satisfies the heap property in its new position. This functionality is achieved by the Max-Heapify function as defined below in pseudocode for an array-backed heap A. Note that "A" is indexed starting at 1, not 0 as is common in many programming languages.

For the following algorithm to correctly re-heapify the array, the subtrees rooted at the children of the node at index i must already be max-heaps, so that only the node at index i can violate the heap property with respect to its children. If it does not violate it, the algorithm falls through with no change to the array.

    Max-Heapify[3] (A, i):
        left ← 2i
        right ← 2i + 1
        largest ← i
        if left ≤ heap_length[A] and A[left] > A[largest] then:
            largest ← left
        if right ≤ heap_length[A] and A[right] > A[largest] then:
            largest ← right
        if largest ≠ i then:
            swap A[i] ↔ A[largest]
            Max-Heapify(A, largest)

The down-heap operation (without the preceding swap) can also be used to modify the value of the root, even when an element is not being deleted.
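
For comparison with the 1-indexed pseudocode, here is a hedged Python sketch of root removal on a 0-indexed list. The names `max_heapify` and `extract_max` are chosen for this example, the sift-down is written as a loop rather than a recursion, and the starting array is again an assumed layout of the example heap.

```python
def max_heapify(a, i, size):
    """Sift a[i] down within a[0:size]; 0-indexed, iterative variant."""
    while True:
        left, right = 2 * i + 1, 2 * i + 2
        largest = i
        if left < size and a[left] > a[largest]:
            largest = left
        if right < size and a[right] > a[largest]:
            largest = right
        if largest == i:
            return                     # heap property holds at i
        a[i], a[largest] = a[largest], a[i]
        i = largest

def extract_max(heap):
    """Remove and return the root of a non-empty max-heap list."""
    top = heap[0]
    last = heap.pop()                  # last element on the last level
    if heap:
        heap[0] = last                 # move it to the root ...
        max_heapify(heap, 0, len(heap))   # ... and sift it down
    return top

heap = [11, 5, 8, 3, 4]                # assumed layout of the example heap
print(extract_max(heap), heap)         # 11 [8, 5, 4, 3]
```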

Building a heap

A heap could be built by successive insertions. This approach requires O(n log n) time because each insertion takes O(log n) time and there are n elements. However, this is not the optimal method. The optimal method starts by arbitrarily putting the elements on a binary tree, respecting the shape property (the tree could be represented by an array, see below). Then, starting from the lowest level and moving upwards, shift the root of each subtree downward as in the deletion algorithm until the heap property is restored. More specifically, if all the subtrees starting at some height h (measured from the bottom) have already been "heapified", the trees at height h + 1 can be heapified by sending their root down along the path of maximum-valued children when building a max-heap, or minimum-valued children when building a min-heap. This process takes O(h) operations (swaps) per node. In this method most of the heapification takes place in the lower levels. The number of nodes at height h is at most $\lceil n / 2^{h+1} \rceil$. Therefore, the cost of heapifying all subtrees is:

$$\sum_{h=0}^{\lceil \log_2 n \rceil} \frac{n}{2^{h+1}} O(h) = O\left(n \sum_{h=0}^{\lceil \log_2 n \rceil} \frac{h}{2^{h+1}}\right) \le O\left(n \sum_{h=0}^{\infty} \frac{h}{2^{h}}\right) = O(n)$$

This uses the fact that the infinite series $\sum_{h=0}^{\infty} h / 2^{h}$ converges to 2.
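
One standard way to see why the series sums to 2 is to differentiate the geometric series term by term. The short derivation below is supplied as a supporting sketch; the variable x is introduced only for this argument.

```latex
\sum_{h=0}^{\infty} x^{h} = \frac{1}{1-x} \quad (|x| < 1)
\;\Longrightarrow\;
\sum_{h=0}^{\infty} h\,x^{h} = x \frac{d}{dx}\,\frac{1}{1-x} = \frac{x}{(1-x)^{2}},
\qquad
\left.\frac{x}{(1-x)^{2}}\right|_{x = 1/2} = \frac{1/2}{1/4} = 2 .
```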

The Build-Max-Heap function that follows converts an array A, which stores a complete binary tree with n nodes, into a max-heap by repeatedly using Max-Heapify in a bottom-up manner. It is based on the observation that the array elements indexed by floor(n/2) + 1, floor(n/2) + 2, ..., n are all leaves of the tree, so each is a one-element heap. Build-Max-Heap runs Max-Heapify on each of the remaining tree nodes.

    Build-Max-Heap[3] (A):
        heap_length[A] ← length[A]
        for i ← floor(length[A]/2) downto 1 do
            Max-Heapify(A, i)
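
A possible Python rendering of the bottom-up construction is sketched below. It assumes a 0-indexed list (so the last internal node is at index n // 2 - 1 rather than floor(n/2)), inlines the sift-down loop, and returns the number of swaps purely as an illustration of the linear bound; the function name and the sample data are made up for this example.

```python
def build_max_heap(a):
    """Rearrange list a into a max-heap in place (0-indexed); return swap count."""
    n = len(a)
    swaps = 0
    # Indices n // 2 .. n - 1 are leaves, so start at the last internal node.
    for start in range(n // 2 - 1, -1, -1):
        i = start
        while True:
            largest = i
            for child in (2 * i + 1, 2 * i + 2):
                if child < n and a[child] > a[largest]:
                    largest = child
            if largest == i:
                break                  # subtree rooted at start is now a heap
            a[i], a[largest] = a[largest], a[i]
            swaps += 1
            i = largest
    return swaps

data = [3, 9, 2, 1, 4, 5]
swaps = build_max_heap(data)
print(data, swaps)   # [9, 4, 5, 1, 3, 2] 3
```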

Heap implementation

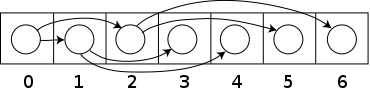

Heaps are commonly implemented with an array. Any binary tree can be stored in an array, but because a heap is always an almost complete binary tree, it can be stored compactly. No space is required for pointers; instead, the parent and children of each node can be found by arithmetic on array indices. These properties make this heap implementation a simple example of an implicit data structure or Ahnentafel list. Details depend on the root position, which in turn may depend on constraints of a programming language used for implementation, or programmer preference. Specifically, sometimes the root is placed at index 1, wasting space in order to simplify arithmetic.

Let n be the number of elements in the heap and i be an arbitrary valid index of the array storing the heap. If the tree root is at index 0, with valid indices 0 through n-1, then each element a[i] has

- children a[2i+1] and a[2i+2]

- parent a[floor((i−1)/2)]

Alternatively, if the tree root is at index 1, with valid indices 1 through n, then each element a[i] has

- children a[2i] and a[2i+1]

- parent a[floor(i/2)].
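
The two indexing schemes can be written as small helper functions. The function names below are invented for this sketch; the checks at the end confirm that the parent formula inverts the child formulas and that the 1-based scheme is the 0-based scheme shifted by one.

```python
def children0(i):
    """Children of node i when the root is at index 0."""
    return 2 * i + 1, 2 * i + 2

def parent0(i):
    """Parent of node i (i >= 1) when the root is at index 0."""
    return (i - 1) // 2

def children1(i):
    """Children of node i when the root is at index 1."""
    return 2 * i, 2 * i + 1

def parent1(i):
    """Parent of node i (i >= 2) when the root is at index 1."""
    return i // 2

for i in range(1000):
    # The parent of each child is the node itself (0-based scheme).
    assert all(parent0(c) == i for c in children0(i))
    # Shifting a 0-based index by one gives the 1-based formulas.
    assert children1(i + 1) == tuple(c + 1 for c in children0(i))
    assert all(parent1(c) == i + 1 for c in children1(i + 1))
```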

This implementation is used in the heapsort algorithm, where it allows the space in the input array to be reused to store the heap (i.e. the algorithm is done in-place). The implementation is also useful as a priority queue, where the use of a dynamic array allows insertion of an unbounded number of items.

The upheap/downheap operations can then be stated in terms of an array as follows: suppose that the heap property holds for the indices b, b+1, ..., e. The sift-down function extends the heap property to b−1, b, b+1, ..., e. Only index i = b−1 can violate the heap property. Let j be the index of the largest child of a[i] (for a max-heap, or the smallest child for a min-heap) within the range b, ..., e. (If no such index exists because 2i > e then the heap property holds for the newly extended range and nothing needs to be done.) By swapping the values a[i] and a[j] the heap property for position i is established. At this point, the only problem is that the heap property might not hold for index j. The sift-down function is applied tail-recursively to index j until the heap property is established for all elements.

The sift-down function is fast. In each step it only needs two comparisons and one swap. The index value where it is working doubles in each iteration, so that at most log₂ e steps are required.
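
To make the range-based sift-down concrete, the sketch below uses it for an in-place heapsort. It is a minimal illustration under stated assumptions: indices are 0-based (so the children of i are 2i+1 and 2i+2), `end` is an inclusive upper bound, the loop replaces the tail recursion, and the function names are chosen for this example.

```python
def sift_down(a, i, end):
    """Restore the max-heap property for a[i..end] (inclusive, 0-indexed),
    assuming it already holds for a[i+1..end]."""
    while 2 * i + 1 <= end:
        j = 2 * i + 1                        # left child
        if j + 1 <= end and a[j + 1] > a[j]:
            j += 1                           # right child is the larger one
        if a[i] >= a[j]:
            return                           # nothing left to fix
        a[i], a[j] = a[j], a[i]
        i = j                                # continue from the child

def heapsort(a):
    """Sort list a in ascending order, in place."""
    n = len(a)
    for b in range(n // 2 - 1, -1, -1):      # extend the heap range down to index 0
        sift_down(a, b, n - 1)
    for end in range(n - 1, 0, -1):
        a[0], a[end] = a[end], a[0]          # move the current maximum behind the heap
        sift_down(a, 0, end - 1)

values = [5, 1, 9, 3, 7, 2]
heapsort(values)
print(values)   # [1, 2, 3, 5, 7, 9]
```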

For big heaps and using virtual memory, storing elements in an array according to the above scheme is inefficient: (almost) every level is in a different page. B-heaps are binary heaps that keep subtrees in a single page, reducing the number of pages accessed by up to a factor of ten.[4]

Merging two binary heaps takes Θ(n) time for equal-sized heaps. With an array implementation, the best that can be done is to concatenate the two heap arrays and build a heap from the result.[5] When merging is a common task, a different heap implementation is recommended, such as binomial heaps, which can be merged in O(log n) time.
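
The concatenate-and-rebuild approach can be sketched with Python's standard `heapq` module, whose `heapify` function builds a min-heap in linear time. The helper name `merge_heaps` and the sample lists are assumptions made for this example; note that `heapq` works with min-heaps rather than the max-heaps used in the earlier examples.

```python
import heapq

def merge_heaps(h1, h2):
    """Return a new min-heap list containing all elements of h1 and h2."""
    merged = h1 + h2          # concatenate the two arrays: O(n)
    heapq.heapify(merged)     # bottom-up heap construction: O(n)
    return merged

a = [1, 4, 7]                 # both inputs are valid min-heaps
b = [2, 3, 9]
m = merge_heaps(a, b)
print([heapq.heappop(m) for _ in range(len(m))])   # [1, 2, 3, 4, 7, 9]
```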

Additionally, a binary heap can be implemented with a traditional binary tree data structure, but there is an issue with finding the adjacent element on the last level on the binary heap when adding an element. This element can be determined algorithmically or by adding extra data to the nodes, called "threading" the tree—instead of merely storing references to the children, we store the inorder successor of the node as well.
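
One common way to locate the insertion point algorithmically (not necessarily the method the text has in mind) uses the binary representation of the node's position: if nodes are counted from 1 in level order, the bits of k after the leading 1 spell out the left/right turns from the root to node k. The sketch below handles only the shape property; the `Node` class and function names are hypothetical, and sifting the new value up is left out.

```python
class Node:
    def __init__(self, value):
        self.value = value
        self.left = None
        self.right = None

def path_to(k):
    """Turns (0 = left, 1 = right) from the root to the k-th node,
    counting nodes from 1 in level order."""
    return [int(bit) for bit in bin(k)[3:]]   # drop '0b' and the leading 1

def attach_last(root, size, value):
    """Attach a new node at position size + 1 of a complete tree that
    currently has `size` nodes, and return the (possibly new) root."""
    node = Node(value)
    if size == 0:
        return node
    turns = path_to(size + 1)
    parent = root
    for turn in turns[:-1]:                   # walk down to the parent
        parent = parent.right if turn else parent.left
    if turns[-1]:
        parent.right = node
    else:
        parent.left = node
    return root

root = None
for size, value in enumerate([11, 5, 8, 3, 4]):
    root = attach_last(root, size, value)
print(root.left.right.value)   # 4
```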

It is possible to modify the heap structure to allow extraction of both the smallest and largest element in O(log n) time.[6] To do this, the rows alternate between min heap and max heap. The algorithms are roughly the same, but, in each step, one must consider the alternating rows with alternating comparisons. The performance is roughly the same as a normal single direction heap. This idea can be generalised to a min-max-median heap.

Derivation of children's index in an array implementation

This derivation will show how, for any given node $i$ (with indices starting from zero), its children would be found at $2i + 1$ and $2i + 2$.

Mathematical proof

From the figure in "Heap Implementation" section, it can be seen that any node can store its children only after its right siblings and its left siblings' children have been stored. This fact will be used for derivation.

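Total number of elements from root to any given level $l$ = $2^{l+1} - 1$, where $l$ starts at zero.

Suppose the node $i$ is at level $L$.

So, the total number of nodes from root to previous level would be = $2^{L} - 1$

Total number of nodes stored in the array till the index $i$ = $i + 1$ (counting $i$ too)

So, total number of siblings on the left of $i$ is

$$(i + 1) - 1 - (2^{L} - 1) = i - 2^{L} + 1$$

Hence, total number of children of these siblings = $2(i - 2^{L} + 1)$

Number of elements at any given level $l$ = $2^{l}$

So, total siblings to right of $i$ is:

$$2^{L} - 1 - (i - 2^{L} + 1) = 2^{L+1} - i - 2$$

So, index of 1st child of node $i$ would be:

$$i + (2^{L+1} - i - 2) + 2(i - 2^{L} + 1) + 1 = 2i + 1$$

[Proved]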
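
The counting argument can also be checked numerically. The sketch below recomputes each quantity of the proof for a 0-indexed node i (its level, the nodes on earlier levels, its left and right siblings, and the children of its left siblings) and confirms that they add up to 2i + 1. The function name is made up for this example.

```python
def first_child_by_counting(i):
    """Index of the first child of node i, from the counts used in the proof."""
    level = (i + 1).bit_length() - 1            # level L of node i (root is level 0)
    before_level = 2 ** level - 1               # nodes on levels 0 .. L-1
    left_siblings = (i + 1) - 1 - before_level  # nodes on level L to the left of i
    right_siblings = 2 ** level - 1 - left_siblings
    children_of_left = 2 * left_siblings
    # Slots after i: right siblings, then children of left siblings, then the child.
    return i + right_siblings + children_of_left + 1

assert all(first_child_by_counting(i) == 2 * i + 1 for i in range(10_000))
```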

Intuitive proof

Although the mathematical approach proves this without doubt, the simplicity of the resulting equation suggests that there should be a simpler way to arrive at this conclusion.

For this, two facts should be noted.

- The children of node $i$ will be found at the very first empty slot.

- All nodes previous to node $i$, right up to the root, will have exactly two children. This is necessary to maintain the shape of the heap.

Now, since all of these nodes have two children (as per the second fact), the memory slots taken by the children number $2((i + 1) - 1) = 2i$. We add one since $i$ starts at zero. Then we subtract one since node $i$ doesn't yet have any children.

This means all filled memory slots have been accounted for except one: the root node. The root is child to none. So finally, the count of all filled memory slots is $2i + 1$.

So, by fact one and since our indexing starts at zero, $2i + 1$ itself gives the index of the first child of $i$.


References

- ^ "heapq – Heap queue algorithm". Python Standard Library.

- ^ "Class PriorityQueue". Java™ Platform Standard Ed. 6.

- ^ a b Cormen, T. H.; et al. (2001), Introduction to Algorithms (2nd ed.), Cambridge, Massachusetts: The MIT Press, ISBN 0070131511

- ^ Poul-Henning Kamp. "You're Doing It Wrong". ACM Queue. June 11, 2010.

- ^ Chris L. Kuszmaul. "binary heap". Dictionary of Algorithms and Data Structures, Paul E. Black, ed., U.S. National Institute of Standards and Technology. 16 November 2009.

- ^ Atkinson, M.D., J.-R. Sack, N. Santoro, and T. Strothotte (1 October 1986). "Min-max heaps and generalized priority queues" (PDF). Programming techniques and Data structures. Comm. ACM, 29(10): 996–1000.
