Earthquake prediction


Revision as of 15:59, 11 January 2013

This article's tone or style may not reflect the encyclopedic tone used on Wikipedia. (October 2012)

This article contains too many or overly lengthy quotations. (October 2012)


Earthquake prediction "is usually defined as the specification of the time, location, and magnitude of a future earthquake within stated limits",[1] and particularly of "the next strong earthquake to occur in a region."[2] This can be distinguished from earthquake forecasting, which is the probabilistic assessment of general earthquake hazard, including the frequency and magnitude of damaging earthquakes, in a given area over periods of years or decades.[3] Prediction can be further distinguished from real-time earthquake warning systems, which, upon detection of a severe earthquake, can give neighboring regions a few seconds' warning of potentially significant shaking.

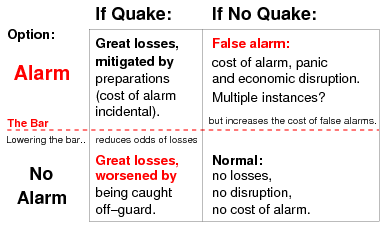

To be useful, an earthquake prediction must be precise enough to warrant the cost of increased preparations, including disruption of ordinary activities and commerce, and timely enough that preparations can be made. Predictions must also be reliable, as false alarms and cancelled alarms are not only economically costly[4] but also seriously undermine confidence in, and thereby the effectiveness of, any kind of warning.[5]

With over 7,000 earthquakes of magnitude 4.0 or greater around the world each year, trivial success in earthquake prediction is easily obtained using sufficiently broad parameters of time, location, or magnitude.[6] However, such trivial "successful predictions" are not useful. Major earthquakes are often followed by reports that they were predicted, or at least anticipated, but no claim of a successful prediction of a major earthquake has survived close inquiry.[7]

In the 1970s there was intense optimism amongst scientists that some method of predicting earthquakes might be found, but by the 1990s continuing failure led many scientists to question whether it was even possible.[8] While many scientists still hold that, given enough resources, prediction might be possible, many others maintain that earthquake prediction is inherently impossible.[9]

The nature of earthquakes makes it difficult to meet the criteria for a successful prediction. Various approaches have been considered, and there have been several notable predictions or claimed predictions. "An earthquake is like an assassin that returns to the scene of a crime after centuries," notes physicist Claudio Eva, "but you can never tell when."[10]

"Only fools and charlatans predict earthquakes."

—Charles Richter[11]

The problem of earthquake prediction

Definition and validity

The prediction of earthquakes is plagued from the outset by two problems: the definition of "prediction", and the definition of "earthquake". This might seem trivial, especially the latter: it would seem that the ground either shakes or it doesn't. But in seismically active areas the ground frequently shakes, just not hard enough for most people to notice.

| Magnitude | Class | Approx. number per year |
|---|---|---|
| M ≥ 8 | Great | 1 |
| M ≥ 7 | Major | 15 |
| M ≥ 6 | Large | 134 |
| M ≥ 5 | Moderate | 1319 |
| M ≥ 4 | Small | ~13,000 |

Notable shaking of the earth's crust typically includes one earthquake of magnitude 8 or greater (M ≥ 8) on the Richter magnitude scale somewhere in the world each year (the four M ≥ 8 quakes in 2007 being exceptional), and another 15 or so "major" M ≥ 7 quakes (though there were 23 in 2010).[13] The USGS reckons another 134 "large" quakes above M 6, and about 1300 quakes in the "moderate" range, from M 5 to M 5.9 ("felt by all, many frightened"[14]). In the "small" range, M 4 to M 4.9, there are an estimated 13,000 quakes annually. Quakes below M 4, noticeable to only a few people and possibly not recognized as earthquakes, number over a million each year, or roughly 150 per hour.

With such a constant drumbeat of earthquakes, various kinds of chicanery can be used to deceptively claim "predictions" that appear more successful than they truly are.[6] For example, predictions can be made that leave one or more parameters of location, time, and magnitude unspecified; these are subsequently adjusted to include whatever earthquakes do occur. Such claims would more properly be called "postdictions". Alternatively, "pandictions" can be made, with parameters so broad that they will most likely match some earthquake, somewhere, sometime. These are indeed predictions, but trivial ones: meaningless for any purpose of foretelling, and useless for making timely preparations for "the next big one". Or multiple predictions ("multidictions") can be made, each of which, alone, seems statistically unlikely to succeed. "Success" then derives from revealing, after the event, only those that prove successful.

To be more meaningful than a parlor trick, an earthquake prediction must be properly qualified. This includes unambiguous specification of time, location, and magnitude.[15] These should be stated either as ranges ("windows", error bounds), with a weighting function, or with some definitive inclusion rule, so that there is no question whether any particular event is or is not included in the prediction. A prediction then cannot be retrospectively expanded to include an earthquake it would otherwise have missed, or contracted to appear more significant than it really was. To show that an earthquake was truly pre-dicted, not post-dicted, the prediction must be made before the event. And to expose scatter-shot multi-dictions, predictions should be published in a manner that reveals all attempts at prediction, failures as well as successes.[16]
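As a minimal sketch of what a "definitive inclusion rule" can look like, the Python below encodes a prediction as explicit windows: an issue time, a time window, a circle of given radius around a point, and a magnitude range. The class and field names, the circular region, and the great-circle distance on a spherical Earth of radius 6371 km are illustrative assumptions, not a standard format. The point is only that, once the windows are fixed in advance, any event is unambiguously in or out.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0  # mean radius; a spherical Earth is assumed


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


@dataclass(frozen=True)
class Event:
    time: datetime
    lat: float
    lon: float
    magnitude: float


@dataclass(frozen=True)
class Prediction:
    issued: datetime   # when the prediction was published
    start: datetime    # time window, inclusive
    end: datetime
    lat: float         # centre of the location window
    lon: float
    radius_km: float   # location window: circle around the centre
    mag_min: float     # magnitude window, inclusive
    mag_max: float

    def includes(self, ev: Event) -> bool:
        """True only if the event falls inside every stated window."""
        if ev.time <= self.issued:
            return False  # an event before publication could only be "postdicted"
        in_time = self.start <= ev.time <= self.end
        in_place = great_circle_km(self.lat, self.lon, ev.lat, ev.lon) <= self.radius_km
        in_size = self.mag_min <= ev.magnitude <= self.mag_max
        return in_time and in_place and in_size


# Hypothetical prediction: M 5.0-6.0 within 100 km of (0, 0) during 2-9 January 2000.
pred = Prediction(datetime(2000, 1, 1), datetime(2000, 1, 2), datetime(2000, 1, 9),
                  0.0, 0.0, 100.0, 5.0, 6.0)
near = Event(datetime(2000, 1, 5), 0.0, 0.5, 5.4)  # about 56 km away
far = Event(datetime(2000, 1, 5), 0.0, 1.0, 5.4)   # about 111 km away
print(pred.includes(near), pred.includes(far))      # True False
```

Because the rule is written down before any event occurs, the 111 km event cannot later be claimed as a near-hit, and an event of M 6.5 inside the circle is simply a miss for this prediction.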

To be deemed "scientific" a prediction should be based on some kind of natural process, and derived in a manner such that any other researcher using the same method would obtain the same result.[17] Scientists are also expected to state their confidence in the prediction, and their estimate of an earthquake happening in the prediction window by chance (discussed below).[18]

A prediction ("alarm") can be made, or not, and an earthquake may occur, or not; these basic possibilities are shown in the contingency table at right. Once the various outcomes are tabulated, various performance measures can be calculated.[19] For example, the success rate is the proportion of all predictions that were successful [SR = a/(a+b)], while the hit rate (or alarm rate) is the proportion of all events that were successfully predicted [H = a/(a+c)]. The false alarm ratio is the proportion of predictions that are false [FAR = b/(a+b)]. This is not to be confused[20] with the false alarm rate, which is the proportion of all non-events incorrectly "alarmed" [F = b/(b+d)].
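The measures above follow directly from the four cell counts. In the sketch below (Python, with illustrative names), a counts alarms followed by an earthquake, b alarms with no earthquake, c earthquakes with no alarm, and d cases with neither, which is the labelling the formulas imply. The counts in the example are invented purely to show that the false alarm ratio and the false alarm rate can differ greatly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contingency:
    a: int  # alarm issued, earthquake occurred (hit)
    b: int  # alarm issued, no earthquake (false alarm)
    c: int  # no alarm, earthquake occurred (miss)
    d: int  # no alarm, no earthquake (correct non-alarm)

    def success_rate(self) -> float:
        return self.a / (self.a + self.b)  # SR = a/(a+b)

    def hit_rate(self) -> float:
        return self.a / (self.a + self.c)  # H = a/(a+c)

    def false_alarm_ratio(self) -> float:
        return self.b / (self.a + self.b)  # FAR = b/(a+b)

    def false_alarm_rate(self) -> float:
        return self.b / (self.b + self.d)  # F = b/(b+d)


# Invented counts: 20 alarms, 5 earthquakes, 1000 cases in total.
t = Contingency(a=2, b=18, c=3, d=977)
print(t.success_rate(), t.hit_rate(), t.false_alarm_ratio(), round(t.false_alarm_rate(), 4))
```

Here 90% of the alarms are false (FAR = 0.9), yet fewer than 2% of the non-earthquake cases were alarmed (F ≈ 0.018), which is why the two terms must not be confused.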

Significance

"All predictions of the future can be to some extent successful by chance."

While the actual occurrence (or non-occurrence) of a specified earthquake might seem sufficient for evaluating a prediction, scientists understand there is always a chance, however small, of getting lucky. A prediction is significant only to the extent it is successful beyond chance.[21] Scientists therefore use statistical methods to determine the probability that an earthquake such as the one predicted would happen anyway (the null hypothesis). They then evaluate whether the prediction, or a series of predictions produced by some method, correlates with actual earthquakes better than the null hypothesis.[22]

A null hypothesis must be chosen carefully. For example, many studies have naively assumed that earthquakes occur randomly. But earthquakes do not occur randomly: they often cluster.[23] In particular, it has been shown that about 5% of earthquakes are "soon" followed by another earthquake of equal or greater magnitude in the "same" area. Simply predicting a subsequent event after each earthquake will therefore be successful about 5% of the time: perhaps not as successful as a stopped clock, but still better, however slightly, than pure chance.[24] Many studies that have reported some success for a precursor or prediction method have been shown to be flawed in this or a similar way.[25]
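A minimal way to ask whether a record of hits beats chance, assuming (for illustration only) that each alarm independently has the same probability p of being "hit" by an earthquake that would have happened anyway, is a binomial tail probability: the chance of scoring at least as many hits as observed. The Python below is such a sketch. Choosing p is exactly the null-hypothesis problem described above; ignoring clustering makes p too small and the method look better than it is.

```python
from math import comb


def p_value_at_least(hits: int, n_alarms: int, p_chance: float) -> float:
    """P(X >= hits) for X ~ Binomial(n_alarms, p_chance).

    Under the assumed null hypothesis each alarm is hit by chance with
    probability p_chance, independently of the others.
    """
    return sum(
        comb(n_alarms, k) * p_chance**k * (1 - p_chance) ** (n_alarms - k)
        for k in range(hits, n_alarms + 1)
    )


# Hypothetical record: 20 alarms, 2 hits, 5% chance of a hit per alarm.
print(round(p_value_at_least(2, 20, 0.05), 3))
```

Under these assumptions, two hits in twenty alarms would happen by luck about a quarter of the time, so the record is not significant. The same function with `hits=1` gives about 0.64: issue twenty unrelated long-shot "multidictions" and at least one will probably come true.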

Consequences

Predictions of major earthquakes by those claiming psychic premonitions are commonplace, not credible, and create little disturbance. Predictions by those with scientific or even pseudo-scientific qualifications often cause serious social and economic disruption, and pose a great quandary for both scientists and public officials.

Some possibly predictive precursors, such as a sudden increase in seismicity, may give only a few hours of warning, allowing little deliberation and consultation with others. As the purpose of short-term prediction is to enable emergency measures to reduce death and destruction, failure to warn of a major earthquake that does occur, or at least to give an adequate evaluation of the hazard, can result in legal liability[26] or even political purging.[27] But giving a warning (crying "wolf!") of an earthquake that does not occur also incurs a cost: not just of the emergency measures themselves, but of major civil and economic disruption. Geller[28] describes the arrangements made in Japan:

... if ‘anomalous data’ are recorded, an ‘Earthquake Assessment Committee’ (EAC) will be convened within two hours. Within 30 min the EAC must make a black (alarm) or white (no alarm) recommendation. The former would cause the Prime Minister to issue the alarm, which would shut down all expressways, bullet trains, schools, factories, etc., in an area covering seven prefectures. Tokyo would also be effectively shut down.

The cost of such measures has been estimated at US$7 billion per day. False alarms (including alarms that are cancelled) also undermine the credibility, and thereby the effectiveness, of future warnings.[5]

The quandary is that even when increased seismicity suggests that an earthquake is imminent in a given area, there is no way of getting definite knowledge of whether there will be a larger quake of any given magnitude, or when.[29] If scientists and the civil authorities knew that (for instance) in some area there was an 80% chance of a large (M > 6) earthquake in a matter of a day or two, they would see a clear benefit in issuing an alarm. But is it worth the cost of civil and economic disruption and possible panic, and the corrosive effect a false alarm has on future alarms, if the chance is only 5%?

Some of the trade-offs can be seen in the chart at right. By lowering "The Bar" — the threshold at which an alarm is issued — the chances of being caught off-guard are reduced, and the potential loss of life and property may be mitigated. But this also increases the number, and cost, of false alarms. If the threshold is set for an estimated one chance in ten of a quake, the other nine chances will be false alarms.[30] Such a high rate of false alarms is a public policy issue itself, which has not yet been resolved. To avoid the all-or-nothing ("black/white") kind of response the California Earthquake Prediction Evaluation Council (CEPEC) has used a notification protocol where short-term advisories of possible major earthquakes (M ≥ 7) can be provided at four levels of probability.[31]
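The arithmetic behind "the other nine chances will be false alarms" can be written down directly: if alarms are issued whenever the estimated probability reaches p, then on average each true alarm is accompanied by (1 - p)/p false ones. The sketch below adds a deliberately simple expected-value rule: alarm only if p times the avoidable loss exceeds the cost of the alarm. That rule, and the loss figure in the example, are illustrative assumptions rather than any agency's protocol; only the US$7 billion per day figure comes from the text.

```python
def false_alarms_per_true_alarm(p: float) -> float:
    """Expected false alarms for each correct alarm at alarm probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    return (1.0 - p) / p


def alarm_pays_off(p: float, avoidable_loss: float, alarm_cost: float) -> bool:
    """Naive expected-value rule: alarm if expected savings exceed the cost.

    It ignores the hardest part of the real problem: the damage each false
    alarm does to the credibility of later warnings.
    """
    return p * avoidable_loss > alarm_cost


ALARM_COST = 7e9        # US$, one day of the shutdown cost quoted above
AVOIDABLE_LOSS = 100e9  # hypothetical US$ saved by a correct alarm

print(false_alarms_per_true_alarm(0.1))  # about 9
print(alarm_pays_off(0.80, AVOIDABLE_LOSS, ALARM_COST))
print(alarm_pays_off(0.05, AVOIDABLE_LOSS, ALARM_COST))
```

With these invented numbers the 80% case clearly justifies an alarm and the 5% case does not, but the break-even probability moves with every assumption about losses and costs, which is one reason the threshold question remains a policy issue.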

Prediction methods

Earthquake prediction, as a branch of seismology, is an immature science in the sense that it cannot predict from first principles the location, date, and magnitude of an earthquake.[32] Research in this area therefore seeks a reliable basis for predictions in either empirically derived precursors, or some kind of trend or pattern.

Precursors

"... there is growing empirical evidence that precursors exist."

— Frank Evison, 1999[33]

"The search for diagnostic precursors has thus far been unsuccessful."

— ICEF, 2011[34]

An earthquake precursor could be any anomalous phenomenon that can give effective warning of the imminence or severity of an impending earthquake in a given area. Phenomena anecdotally reported as possible precursors number in the thousands,[35] some dating back to antiquity,[36] and after every major earthquake there are reports of strange events. In the scientific literature there have been reports of hundreds of precursors,[37] running the gamut "from aeronomy to zoology".[38] But none has been shown to be successful beyond what might be expected by chance.[39] When the IASPEI solicited nominations for a "Preliminary List of Significant Precursors" in the early 1990s, only 40 nominations were made, and only five were selected as possible significant precursors, two of those based on a single observation.[40]

After a critical review of the scientific literature the International Commission on Earthquake Forecasting for Civil Protection (ICEF) concluded in 2011 there was "considerable room for methodological improvements in this type of research."[41] Particularly:

In many cases of purported precursory behavior, the reported observational data are contradictory and unsuitable for a rigorous statistical evaluation. One related problem is a bias towards publishing positive rather than negative results, so that the rate of false negatives (earthquake but no precursory signal) cannot be ascertained. A second is the frequent lack of baseline studies that establish noise levels in the observational time series.[42]

Although none of the following precursors is convincingly successful, they do illustrate both the kinds of phenomena that have been examined and the optimism that generally attaches to any report of a possible precursor.

Animal behavior

... the first quake prediction

oft mistaken for fiction,

was the cow jumping over the moon.

— Ralph Turner[43]

There are many accounts of unusual phenomena prior to an earthquake, especially reports of anomalous animal behavior. One of the earliest is from the Roman writer Claudius Aelianus concerning the destruction of the Greek city of Helike by earthquake and tsunami in 373 BC:

For five days before Helike disappeared, all the mice and martens and snakes and centipedes and beetles and every other creature of that kind in the city left in a body by the road that leads to Keryneia. ... But after these creatures had departed, an earthquake occurred in the night; the city subsided; an immense wave flooded and Helike disappeared....[44]

Aelianus wrote this in the second century AD, some 500 years after the event, so his somewhat fantastical account necessarily reflects the myth that developed rather than an eyewitness account.[45]

Scientific observation of such phenomena is limited because of the difficulty of performing an experiment, let alone repeating one. Yet there was a fortuitous case in 1992: some biologists just happened to be studying the behavior of an ant colony when the Landers earthquake struck just 100 km (60 mi) away. Despite severe ground shaking, the ants seemed oblivious to the quake itself, as well as to any precursors.[46]

In an earlier study, researchers monitored rodent colonies at two seismically active locations in California. In the course of the study there were several moderate quakes, and there was anomalous behavior. However, the latter was coincident with other factors; no connection with an earthquake could be shown.[47]

Given these results, one might wonder about the many reports of precursory anomalous animal behavior following major earthquakes. Such reports are often given wide exposure by the major media, and are almost universally cast in the form of animals predicting the subsequent earthquake, often with the suggestion of some "sixth sense" or other unknown power.[48] However, it is extremely important to note the time element: how much warning? Earthquakes radiate multiple kinds of seismic waves. The P (primary) waves travel through the earth's crust about twice as fast as the S (secondary) waves, so they arrive first, and the greater the distance, the greater the delay between them. For an earthquake strong enough to be felt over several hundred kilometers (approximately M > 5) this can amount to a difference of some tens of seconds. The P waves are also weaker, and often unnoticed by people. Thus the signs of alarm reported in the animals at the National Zoo in Washington, D.C., some five to ten seconds prior to the shaking from the M 5.8 2011 Virginia earthquake, were undoubtedly prompted by the P waves. This was not so much a prediction as a warning of shaking from an earthquake that had already happened.[49]
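How the warning time grows with distance follows from the two travel times: a wave covering d kilometres at v km/s arrives after d/v seconds, so the S wave lags the P wave by d/Vs - d/Vp. The Python sketch below uses round, assumed crustal speeds of 6 km/s for P and 3 km/s for S, chosen to match the "about twice as fast" figure above. Real speeds vary with the rock and depth, and the straight-line path is a simplification.

```python
def s_minus_p_seconds(distance_km: float, vp_km_s: float = 6.0, vs_km_s: float = 3.0) -> float:
    """Delay between P-wave and S-wave arrival over a straight path.

    The default speeds are illustrative assumptions, not measured values.
    """
    if vs_km_s >= vp_km_s:
        raise ValueError("S waves must be slower than P waves")
    return distance_km / vs_km_s - distance_km / vp_km_s


for d in (30, 100, 300):
    print(f"{d} km: {s_minus_p_seconds(d):.1f} s")
```

Under these assumptions an animal 100 km away gets roughly 17 seconds between the weak P-wave jolt and the strong S-wave shaking; at several hundred kilometres the lag reaches tens of seconds.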

As to reports of longer-term anticipations, these are rarely amenable to any kind of study.[50] There was an intriguing story in the 1980s that spikes in lost pet advertisements in the San Jose Mercury News portended an increased chance of an earthquake within 70 miles of downtown San Jose. This was a very testable hypothesis, being based on quantifiable, objective, publicly available data, and it was tested by Schaal (1988) — who found no correlation.

After reviewing the scientific literature the ICEF concluded in 2011 that

there is no credible scientific evidence that animals display behaviors indicative of earthquake-related environmental disturbances that are unobservable by the physical and chemical sensor systems available to earthquake scientists.[51]

Changes in Vp/Vs

Vp is the symbol for the velocity of a seismic "P" (primary or pressure) wave passing through rock, while Vs is the symbol for the velocity of the "S" (secondary or shear) wave. Small-scale laboratory experiments have shown that the ratio of these two velocities — represented as Vp/Vs — changes when rock is near the point of fracturing. In the 1970s it was considered a significant success and likely breakthrough when Russian seismologists reported observing such changes in the region of a subsequent earthquake.[52] This effect, as well as other possible precursors, has been attributed to dilatancy, where rock stressed to near its breaking point expands (dilates) slightly.[53]

Study of this phenomenon near Blue Mountain Lake (New York) led to a successful prediction in 1973.[54] However, additional successes there have not followed, and it has been suggested that the prediction was only a lucky fluke.[55] A Vp/Vs anomaly was the basis of Whitcomb's 1976 prediction of a M 5.5 to 6.5 earthquake near Los Angeles, which failed to occur.[56] Other studies relying on quarry blasts (more precise, and repeatable) found no such variations; Geller (1997) noted that reports of significant velocity changes have ceased since about 1980.
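Quarry blasts help because their location and origin time are known, so the travel times of both waves over the same path can be measured directly. For a path of length d, Vp = d/t_p and Vs = d/t_s, so the distance cancels and Vp/Vs = t_s/t_p. The Python sketch below computes the ratio and its percentage change relative to a baseline; the function names and the travel times in the example are invented for illustration.

```python
def vp_vs_from_travel_times(t_p_s: float, t_s_s: float) -> float:
    """Vp/Vs for one source-receiver path: (d/t_p) / (d/t_s) = t_s / t_p."""
    if t_p_s <= 0 or t_s_s <= 0:
        raise ValueError("travel times must be positive")
    return t_s_s / t_p_s


def percent_change(value: float, baseline: float) -> float:
    """Relative change of value with respect to baseline, in percent."""
    return 100.0 * (value - baseline) / baseline


# Invented travel times (seconds) for repeated blasts along the same path.
baseline = vp_vs_from_travel_times(10.0, 17.5)
later = vp_vs_from_travel_times(10.0, 16.8)
print(f"{baseline:.2f} -> {later:.2f} ({percent_change(later, baseline):.1f}%)")
```

With a natural earthquake as the source, the origin time and location must themselves be estimated, which adds uncertainty to exactly these small differences.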

Radon emissions

Most rock contains small amounts of gases that can be isotopically distinguished from the normal atmospheric gases. There are reports of spikes in the concentrations of such gases prior to a major earthquake; this has been attributed to release due to pre-seismic stress or fracturing of the rock. One of these gases is radon, an element produced by radioactive decay of the trace amounts of uranium present in most rock.[57]

Radon is attractive as a potential earthquake predictor because it is radioactive and thus easily detected,[58] and its short half-life (3.8 days) makes it sensitive to short-term fluctuations. A 2009 review[59] found 125 reports of changes in radon emissions prior to 86 earthquakes since 1966. But as the ICEF found in its review, the earthquakes with which these changes are supposedly linked were up to a thousand kilometers away, months later, and at all magnitudes. In some cases the anomalies were observed at a distant site, but not at closer sites. The ICEF found "no significant correlation".[60] Another review concluded that in some cases changes in radon levels preceded an earthquake, but a correlation is not yet firmly established.[61]
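Why a short half-life makes radon a sensitive short-term indicator can be seen from the decay law: the fraction of an initial amount left after t days is (1/2)^(t/T), with T = 3.8 days (the figure quoted above, for radon-222). The Python sketch below evaluates it; it models decay alone, not the transport of the gas through rock or soil.

```python
RADON_HALF_LIFE_DAYS = 3.8  # radon-222, as quoted above


def fraction_remaining(days: float, half_life_days: float = RADON_HALF_LIFE_DAYS) -> float:
    """Fraction of an initial quantity of radon left after `days` of decay."""
    return 0.5 ** (days / half_life_days)


for days in (2, 3.8, 7.6, 30):
    print(f"after {days} days: {fraction_remaining(days):.1%} left")
```

Radon released a month earlier has almost entirely decayed (well under 1% remains), so a measured excess mostly reflects gas emitted within the past week or two.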

Geoelectric

In a 1981 paper[62] professors P. Varotsos, K. Alexopoulos and K. Nomicos ("VAN") of the National and Capodistrian University of Athens claimed that by measuring geoelectric voltages, which they called "seismic electric signals" (SES), they could predict earthquakes of magnitude larger than 2.8 within all of Greece up to 7 hours beforehand. Later the claim changed to being able to predict earthquakes larger than magnitude 5, within 100 km of the epicentral location, within 0.7 units of magnitude, and in a 2-hour to 11-day time window.[63] Subsequent papers claimed a series of successful predictions.[64]

Objections have been raised that the physics of the claimed process is not possible. None of the earthquakes that VAN claimed were preceded by SESs generated an SES themselves, as would be expected, since the main shock disturbance in the Earth is much larger than any precursory disturbance. An analysis of the wave propagation properties of SESs in the Earth's crust showed that it is impossible that signals with the amplitude reported by VAN could have been generated by small earthquakes and transmitted over the several hundred kilometers from the epicenter to the monitoring station.[65]

Several authors have pointed out that VAN's publications characteristically fail to address the problem of eliminating the many strong sources of change in the magneto-electric field they measure, such as currents generated near their recording station in suburban Athens, and especially lack statistical testing of the validity of their hypothesis.[66] In particular, it was found that of the 22 claims of successful prediction by VAN,[67] 74% were false, 9% correlated at random, and for 14% the correlation was uncertain.[68]

Trends

Instead of watching for anomalous phenomena that might be precursory signs of an impending earthquake, other approaches to predicting earthquakes look for trends or patterns that lead to an earthquake. As these trends may be complex and involve many variables, advanced statistical techniques are often needed to understand them, which is why these are sometimes called statistical methods. These approaches also tend to be more probabilistic and to cover longer time periods, and so verge into earthquake forecasting.

Elastic rebound

Even the stiffest of rock is not perfectly rigid. Given a large enough force (such as between two immense tectonic plates moving past each other) the earth's crust will bend or deform. What happens next is described by the elastic rebound theory of Reid (1910): eventually the deformation (strain) becomes great enough that something breaks, usually at an existing fault. Slippage along the break (an earthquake) allows the rock on each side to return to its undeformed shape (or nearly so), releasing the stress (energy) that had deformed the rock. The cycle of tectonic force being accumulated in elastic deformation and released in a sudden rebound is then repeated. As a single earthquake typically slips only a meter or two, the demonstrated existence of large strike-slip offsets of hundreds of miles shows the existence of a long-running earthquake cycle.[69]
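The cycle Reid described can be mimicked with a deliberately crude toy model: strain grows by a fixed amount each year and, when it reaches a failure threshold, the fault "rebounds" to a residual level. The function and its parameters in the Python below are hypothetical and in arbitrary units; it is a sketch of the idea, not a physical fault model.

```python
def rebound_years(loading_per_year: float, failure_strain: float,
                  residual_strain: float, n_years: int) -> list[int]:
    """Return the years in which the toy fault ruptures.

    Strain accumulates at a constant rate; on reaching failure_strain the
    fault slips and strain drops back to residual_strain.
    """
    strain = residual_strain
    ruptures = []
    for year in range(1, n_years + 1):
        strain += loading_per_year
        if strain >= failure_strain:
            ruptures.append(year)
            strain = residual_strain  # the rebound releases the stored strain
    return ruptures


events = rebound_years(loading_per_year=1.0, failure_strain=20.0,
                       residual_strain=0.0, n_years=100)
print(events)                                        # [20, 40, 60, 80, 100]
print([b - a for a, b in zip(events, events[1:])])   # [20, 20, 20, 20]
```

With a constant loading rate and a fixed threshold, ruptures recur at perfectly regular intervals. That regularity is the idealisation behind recurrence-interval reasoning; real faults are much less orderly.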

Characteristic earthquakes

The most studied earthquake faults (such as the Wasatch fault and San Andreas fault) appear to have distinct segments. The characteristic earthquake model postulates that earthquakes are generally constrained within these segments. As the lengths and other characteristics[70] of the segments are fixed, earthquakes that rupture an entire segment should have similar characteristics. These include the maximum magnitude (which is limited by the length of the rupture), and the amount of accumulated strain needed to rupture the fault segment. Since the strain accumulates steadily, it seems a fair inference that seismic activity on a given segment should be dominated by earthquakes of similar characteristics that recur at somewhat regular intervals.[71] For a given fault segment, identifying these characteristic earthquakes and timing their recurrence rate should therefore indicate when to expect the next rupture; this is the approach generally used in forecasting seismic hazard.[72]

This is essentially the basis of the Parkfield prediction: fairly similar earthquakes in 1857, 1881, 1901, 1922, 1934, and 1966 suggested a pattern of breaks every 21.9 years, with a standard deviation of ±3.1 years.[73] Extrapolation from the 1966 event led to a prediction of an earthquake around 1988, or before 1993 at the latest (at the 95% confidence interval).[74] The appeal of such a method is in being derived entirely from the trend, which supposedly accounts for the unknown and possibly unknowable earthquake physics and fault parameters. However, in the Parkfield case the predicted earthquake did not occur until 2004, a decade late. This demonstrated that earthquakes at Parkfield are not quasi-periodic, and differ sufficiently in other respects to question whether they have distinct characteristics.[75]
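The Parkfield window can be reproduced from the figures quoted above if one assumes (as an illustration, not necessarily the original authors' exact procedure) that the interval to the next event is normally distributed with mean 21.9 years and standard deviation 3.1 years. The Python sketch below uses the standard library's `statistics.NormalDist`.

```python
from statistics import NormalDist

LAST_EVENT_YEAR = 1966
MEAN_INTERVAL = 21.9  # years, from the Parkfield sequence
SD_INTERVAL = 3.1     # years

# Assumed model: year of the next event ~ Normal(1966 + 21.9, 3.1).
next_event = NormalDist(mu=LAST_EVENT_YEAR + MEAN_INTERVAL, sigma=SD_INTERVAL)

expected_year = next_event.mean             # about 1987.9
latest_95 = next_event.inv_cdf(0.95)        # one-sided 95% bound
p_2004_or_later = 1 - next_event.cdf(2004)  # how surprising the actual date was

print(f"expected {expected_year:.1f}, 95% bound {latest_95:.1f}, "
      f"P(>= 2004) = {p_2004_or_later:.1e}")
```

The one-sided 95% bound comes out at about 1993, matching the stated prediction. Under the same assumed model, an event as late as 2004 has a probability on the order of one in ten million, which is another way of saying the quasi-periodic model failed.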

The failure of the Parkfield prediction has raised doubt as to the validity of the characteristic earthquake model itself.[76] Some studies have questioned the various assumptions, including the key one that earthquakes are constrained within segments, and suggested that the "characteristic earthquakes" may be an artifact of selection bias and the shortness of seismological records (relative to earthquake cycles).[77] Other studies have examined whether further factors, such as the age of the fault, need to be taken into account.[78] Whether earthquake ruptures are more generally constrained within a segment (as is often seen), or break past segment boundaries (also seen), has a direct bearing on the degree of earthquake hazard: earthquakes are larger where multiple segments break, but in relieving more strain they will happen less often.[79]

Seismic gaps

At the contact where two tectonic plates slip past each other, every section must eventually slip, as (in the long term) none gets left behind. But they do not all slip at the same time; different sections will be at different stages in the cycle of strain (deformation) accumulation and sudden rebound. In the seismic gap model the "next big quake" should be expected not in the segments where recent seismicity has relieved the strain, but in the intervening gaps where the unrelieved strain is the greatest.[80] This model has an intuitive appeal; it is used in long-term forecasting, and was the basis of a series of circum-Pacific (Pacific Rim) forecasts in 1979 and 1989–1991.[81]
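Under the seismic gap model's own assumptions, each segment's unrelieved strain can be approximated by its slip deficit: the long-term slip rate multiplied by the time since it last ruptured. The Python sketch below ranks segments that way. The segment names, dates, and the single shared slip rate are hypothetical, and the ranking is exactly the inference that the statistical tests discussed next call into question.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    name: str
    last_rupture_year: int
    slip_rate_mm_per_year: float  # long-term rate at which the plates move past each other


def slip_deficit_m(seg: Segment, year: int) -> float:
    """Slip accumulated but not yet released since the segment last broke, in metres."""
    return (year - seg.last_rupture_year) * seg.slip_rate_mm_per_year / 1000.0


def rank_gaps(segments: list[Segment], year: int) -> list[Segment]:
    """Order segments from largest to smallest slip deficit (most 'overdue' first)."""
    return sorted(segments, key=lambda s: slip_deficit_m(s, year), reverse=True)


# Hypothetical plate boundary with three segments.
segments = [
    Segment("A", 1990, 30.0),
    Segment("B", 1900, 30.0),
    Segment("C", 1950, 30.0),
]
for seg in rank_gaps(segments, 2010):
    print(seg.name, round(slip_deficit_m(seg, 2010), 1), "m")
```

Segment B, quiet the longest, tops the list; the model's prediction is simply that it is next, with nothing in the calculation about when or how large.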

It has been asked: "How could such an obvious, intuitive model not be true?"[82] Possibly because some of the underlying assumptions are not correct. A close examination suggests that "there may be no information in seismic gaps about the time of occurrence or the magnitude of the next large event in the region";[83] statistical tests of the circum-Pacific forecasts show that the seismic gap model "did not forecast large earthquakes well".[84] Another study concluded: "The hypothesis of increased earthquake potential after a long quiet period can be rejected with a large confidence."[85]

Seismicity patterns (M8, AMR)

Various heuristically derived algorithms have been developed for predicting earthquakes. Probably the most widely known is the M8 family of algorithms (including the RTP method) developed under the leadership of Vladimir Keilis-Borok. M8 issues "Time of Increased Probability" (TIP) alarms for a large earthquake of a specified magnitude upon observing certain patterns of smaller earthquakes. TIPs generally cover large areas (up to a thousand kilometers across) for up to five years.[86] Such large parameters have made M8 controversial, as it is hard to determine whether any hits were skillfully predicted or merely the result of chance.

M8 gained considerable attention when the 2003 San Simeon and Hokkaido earthquakes occurred within a TIP.[87] But a widely publicized TIP for an M 6.4 quake in Southern California in 2004 was not fulfilled, nor were two other lesser-known TIPs.[88] A detailed study of the RTP method in 2008 found that out of some twenty alarms only two could be considered hits (and one of those had a 60% chance of happening anyway).[89] It concluded that "RTP is not significantly different from a naïve method of guessing based on the historical rates of seismicity."[90]

Accelerating moment release (AMR, "moment" being a measurement of seismic energy), also known as time-to-failure analysis, or accelerating seismic moment release (ASMR), is based on observations that foreshock activity prior to a major earthquake not only increased, but increased at an exponential rate.[91] That is: a plot of the cumulative number of foreshocks gets steeper just before the main shock.

Following formulation by Bowman et al. (1998) into a testable hypothesis[92] and a number of positive reports, AMR seemed to have a promising future.[93] This despite several problems, including not being detected for all locations and events, and the difficulty of projecting an accurate occurrence time when the tail end of the curve gets steep.[94] But more rigorous testing has shown that apparent AMR trends likely result from how data fitting is done[95] and failing to account for spatiotemporal clustering of earthquakes,[96] and are statistically insignificant. Interest in AMR (as judged by the number of peer-reviewed papers) is reported to have fallen off since 2004.[97]

Notable predictions

These are predictions, or claims of predictions, that are notable either scientifically or because of public notoriety, and that claim a scientific or quasi-scientific basis or invoke the authority of a scientist. To be judged successful, a prediction must be a proper prediction, published before the predicted event, and the event must occur exactly within the specified time, location, and magnitude parameters. Question marks indicate predictions that are questioned, or are so broad (e.g., covering most of the state of California) as to lose any value as a prediction. As many predictions are held confidentially, or published in obscure locations, and become notable only when they are claimed, there may be some selection bias in that hits get more attention than misses.

Earthquake prediction ... appears to be on the verge of practical reality....

1973: Blue Mountain Lake, USA

A team studying earthquake activity at Blue Mountain Lake (BML), New York, made a prediction on August 1, 1973, that "an earthquake of magnitude 2.5–3 would occur in a few days." And: "At 2310 UT on August 3, 1973, a magnitude 2.6 earthquake occurred at BML".[98] According to the authors, this was the first time the approximate time, place, and size of an earthquake were successfully predicted in the United States.[99]

It has been suggested that the pattern they observed may have been a statistical fluke that happened to precede a chance earthquake.[100] It seems significant that there has never been a second prediction from Blue Mountain Lake; this prediction now appears to be largely discounted.[101]

1975: Haicheng, China

The M 7.3 Haicheng (China) earthquake of 4 February 1975 is the most widely cited "success" of earthquake prediction,[102] and is cited as such in several textbooks.[103] The putative story is that study of seismic activity in the region led the Chinese authorities to issue both a medium-term prediction in June 1974 and, following a number of foreshocks up to M 4.7 the previous day, a short-term prediction on February 4 that a large earthquake might occur within one to two days. The political authorities (so the story goes) therefore ordered various measures taken, including enforced evacuation of homes, construction of "simple outdoor structures", and showing of movies out of doors. Although the quake, striking at 19:36, was powerful enough to destroy or badly damage about half of the homes, the "effective preventative measures taken" were credited with keeping the death toll under 300. This was in a population of about 1.6 million, where otherwise tens of thousands of fatalities might have been expected.[104]

However, there has been some skepticism about this. This was during the Cultural Revolution, when "belief in earthquake prediction was made an element of ideological orthodoxy that distinguished the true party liners from right wing deviationists"[105] and record keeping was disordered, making it difficult to verify details of the claim, even as to whether there was an ordered evacuation. The methodology used for either the medium-term or short-term predictions (other than "Chairman Mao's revolutionary line"[106]) has not been specified.[107] It has been suggested that the evacuation was spontaneous, following the strong (M 4.7) foreshock that occurred the day before.[108]

Many of the missing details have been filled in by a 2006 report that had access to an extensive range of records.[109] This study found that the predictions were flawed. "In particular, there was no official short-term prediction, although such a prediction was made by individual scientists."[110] Also: "it was the foreshocks alone that triggered the final decisions of warning and evacuation". The relatively light loss of life (which they set at 2,041) is attributed to a number of fortuitous circumstances, including earthquake education in the previous months (prompted by elevated seismic activity), local initiative, timing (the quake struck when people were neither working nor asleep), and the local style of construction. The authors conclude that, while unsatisfactory as a prediction, "it was an attempt to predict a major earthquake that for the first time did not end up with practical failure."

"... routine announcement of reliable predictions may be possible within 10 years...."

— NAS Panel on Earthquake Prediction, 1976[111]

1976: Southern California, USA (Whitcomb)

On April 15, 1976, Dr. James Whitcomb presented a scientific paper[112] that found, based on changes in Vp/Vs (seismic wave velocities), an area northeast of Los Angeles along the San Andreas fault was "a candidate for intensified geophysical monitoring". He presented this not as a prediction that an earthquake would happen, but as a test of whether one would happen, as might be predicted on the basis of Vp/Vs. This distinction was generally lost; he was, and has since been, held to have predicted an earthquake of magnitude 5.5 to 6.5 within 12 months.

The area identified by Whitcomb was quite large, and overlapped the area of the Palmdale Bulge,[113] an apparent uplift (later discounted[114]) which was causing some concern as a possible precursor of a large earthquake on the San Andreas fault. Both the uplift and the changes in seismic velocities were predicted by the then-current dilatancy theory, although Whitcomb emphasized that his "hypothesis test" was based solely on the seismic velocities, and that he regarded the theory as unproven.[56]
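
Vp/Vs is the ratio of P-wave to S-wave velocity. One standard way to estimate it for a single earthquake is a Wadati diagram. For each station, the S-minus-P delay (ts − tp) is plotted against the P arrival time tp. Because ts − tp = (tp − t0)(Vp/Vs − 1), where t0 is the origin time, the slope of the best-fit line equals Vp/Vs − 1. The sketch below applies this to synthetic, noise-free arrival times. It illustrates the quantity being monitored and does not reconstruct Whitcomb's own procedure. The velocities of 6.0 and 3.5 km/s and the station distances are assumed values.

```python
def fit_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def vp_vs_from_arrivals(p_times, s_times):
    """Wadati estimate: Vp/Vs = 1 + slope of (ts - tp) plotted against tp."""
    sp_delays = [ts - tp for tp, ts in zip(p_times, s_times)]
    return 1.0 + fit_slope(p_times, sp_delays)

# Synthetic event: origin time 0 s, assumed Vp = 6.0 km/s and Vs = 3.5 km/s.
origin, vp, vs = 0.0, 6.0, 3.5
distances_km = [20.0, 45.0, 80.0, 120.0]
p_times = [origin + d / vp for d in distances_km]
s_times = [origin + d / vs for d in distances_km]
print(f"estimated Vp/Vs = {vp_vs_from_arrivals(p_times, s_times):.3f}")  # prints 1.714
```

Under the dilatancy hypothesis, the precursor sought was a temporary decrease in this ratio followed by a return to normal. In practice the estimate would be repeated for many events over time, and real arrival-time picks carry errors that this noise-free example ignores.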

Dr. Whitcomb subsequently withdrew the prediction, as continuing measurements no longer supported it. No earthquake of the specified magnitude occurred within the specified area or time.[115]

1976–1978: South Carolina, USA

Towards the end of 1975 a number of minor earthquakes in an area of South Carolina (USA) not known for seismic activity were linked to the filling of a new reservoir (Lake Jocassee). Changes in the Vp/Vs ratios of the seismic waves were observed with these quakes. Based on additional Vp/Vs changes observed between 30 December 1975 and 7 January 1976 an earthquake prediction was made on 12 January. A magnitude 2.5 event occurred on 14 January 1976.[116]

In the course of a three-year study a second prediction was claimed successful for an M 2.2 earthquake on November 25, 1977. However, this was only "two-thirds" successful (in respect of time and magnitude) as it occurred 7 km outside of the prediction location.[117]

This study also evaluated other methods and precursors: the M8 algorithm, which "predicted" neither of these two events, and changes in radon emissions, which showed possible correlation with other events, but not with these two.

"... at least 10 years, perhaps more, before widespread, reliable prediction of major earthquakes is achieved."

— Richard Kerr, 1978[118]

1978: Izu-Oshima-Kinkai, Japan

On 14 January 1978 a swarm of intense microearthquakes prompted the Japan Meteorological Agency (JMA) to issue a statement suggesting that precautions for the prevention of damage might be considered. This was not a prediction, but coincidentally it was made just 90 minutes before the M 7.0 Izu-Oshima-Kinkai earthquake.[119] It was subsequently, but incorrectly,[120] claimed as a successful prediction by Hamada (1991), and again by Roeloffs & Langbein (1994).

1978: Oaxaca, Mexico

Ohtake, Matumoto & Latham (1981) claimed:

The rupture zone and type of the 1978 Oaxaca: southern Mexico earthquake (Ms = 7.7) were successfully predicted based on the premonitory quiescence of seismic activity and the spatial and temporal relationships of recent large earthquakes.[121]

However, the 1977 paper on which the claim is based[122] said only that the "most probable" area "may [emphasis added] be the site of a future large earthquake"; a "firm prediction of the occurrence time is not attempted." This prediction is therefore incomplete, making its evaluation difficult.

After re-analysis of the region's seismicity, Garza & Lomnitz (1979) concluded that though there was a slight decrease in seismicity, it was within the range of normal random variation, and did not amount to a seismic gap (the basis of the prediction). The validity of the prediction is further undermined by a report that the apparent lack of seismicity was due to a cataloging omission.[123]

This prediction had an unusual twist in that its announcement by the University of Texas (UT), for a destructive earthquake[124] in Oaxaca at an undetermined date, came just ten days before the date given by another prediction for a destructive earthquake in Oaxaca. This other prediction had been made by a pair of Las Vegas gamblers; the local authorities had deemed it not credible, and decided to ignore it. The announcement from a respectable institution unfortunately confirmed (for many people) the more specific prediction; this appears to have caused some panic.[125] On the day of the amateur prediction there was an earthquake, but only a distinctly non-destructive M 4.2, which, as one mayor said, they get all the time.

" ... no general and definite way to successful earthquake prediction is clear."

— Ziro Suzuki, 1982 [126]

1981: Lima, Peru (Brady)

In 1976 Dr. Brian Brady, a physicist then at the U.S. Bureau of Mines, where he had studied how rocks fracture, "concluded a series of four articles on the theory of earthquakes with the deduction that strain building in the subduction zone [off-shore of Peru] might result in an earthquake of large magnitude within a period of seven to fourteen years from mid November 1974."[127] In an internal memo written in June 1978 he narrowed the time window to "October to November, 1981", with a main shock in the range of 9.2±0.2.[128] In a 1980 memo he was reported as specifying "mid-September 1980".[129] This was discussed at a scientific seminar in San Juan, Argentina, in October 1980, where Brady's colleague, Dr. W. Spence, presented a paper. Brady and Spence then met with government officials from the U.S. and Peru on 29 October, and "forecast a series of large magnitude earthquakes in the second half of 1981."[127] This prediction became widely known in Peru, following what the U.S. embassy described as "sensational first page headlines carried in most Lima dailies" on January 26, 1981.[130]

On 27 January 1981, after reviewing the Brady-Spence prediction, the U.S. National Earthquake Prediction Evaluation Council (NEPEC) announced it was "unconvinced of the scientific validity" of the prediction, and had been "shown nothing in the observed seismicity data, or in the theory insofar as presented, that lends substance to the predicted times, locations, and magnitudes of the earthquakes." It went on to say that while there was a probability of major earthquakes at the predicted times, that probability was low, and recommended that "the prediction not be given serious consideration."[131]

Unfazed,[132] Brady subsequently revised his forecast, stating there would be at least three earthquakes on or about July 6, August 18 and September 24, 1981,[133] leading one USGS official to complain: "If he is allowed to continue to play this game ... he will eventually get a hit and his theories will be considered valid by many."[134]

On June 28 (the date most widely taken as the date of the first predicted earthquake), it was reported that: "the population of Lima passed a quiet Sunday".[135] The headline on one Peruvian newspaper: "NO PASO NADA" ("Nothing happened").[136]

In July Brady formally withdrew his prediction on the grounds that prerequisite seismic activity had not occurred.[137] Economic losses due to reduced tourism during this episode have been roughly estimated at one hundred million dollars.[138]

"Recent advances ... make the routine prediction of earthquakes seem practicable."

— Stuart Crampin, 1987[139]

1985–1993: Parkfield, USA (Bakun-Lindh)

The "Parkfield earthquake prediction experiment" was the most heralded scientific earthquake prediction ever.[140] It was based on an observation that the Parkfield segment of the San Andreas Fault[141] breaks regularly with a moderate earthquake of about M 6 every several decades: 1857, 1881, 1901, 1922, 1934, and 1966.[142] More particularly, Bakun & Lindh (1985) pointed out that, if the 1934 quake is excluded, these occur every 22 years, ±4.3 years. Counting from 1966, they predicted a 95% chance that the next earthquake would hit around 1988, or 1993 at the latest. The National Earthquake Prediction Evaluation Council (NEPEC) evaluated this, and concurred.[143] The U.S. Geological Survey and the State of California therefore established one of the "most sophisticated and densest nets of monitoring instruments in the world",[144] in part to identify any precursors when the quake came. Confidence was high enough that detailed plans were made for alerting emergency authorities if there were signs an earthquake was imminent.[145] In the words of the Economist: "never has an ambush been more carefully laid for such an event."[146]
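
The arithmetic behind the "22 years" figure can be approximated by regressing event year on event number. The sketch below makes a simplifying assumption: the 1934 shock keeps its place in the sequence (event 4, counting 1857 as event 0) but is left out of the fit, so the 1966 quake is event 5 and the next expected quake is event 6. This mirrors the exclusion described above. It is not claimed to reproduce Bakun & Lindh's exact calculation or their uncertainty estimate.

```python
# Event index -> year. 1857 is event 0; 1934 would be event 4 but is excluded.
EVENTS = {0: 1857, 1: 1881, 2: 1901, 3: 1922, 5: 1966}

def fit_line(xs, ys):
    """Ordinary least-squares fit y = intercept + slope * x; returns (slope, intercept)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

xs, ys = list(EVENTS), list(EVENTS.values())
slope, intercept = fit_line(xs, ys)
next_year = intercept + slope * 6  # event 6 follows the 1966 event
print(f"recurrence ~ {slope:.1f} years; next event expected ~ {next_year:.1f}")
```

This simple fit gives a recurrence of roughly 21.6 years and an expected date in the late 1980s, close to the published 1988. The eventual M 6.0 shock came in 2004.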

1993 came, and passed, without fulfillment. Eventually there was an M 6.0 earthquake, on 28 September 2004, but without forewarning or obvious precursors.[147] While the experiment in catching an earthquake is considered by many scientists to have been successful,[148] the prediction was unsuccessful in that the eventual event was a decade late.[149]

1987–1995: Greece (VAN)

Professors P. Varotsos, K. Alexopoulos and K. Nomicos — "VAN" — claimed in a 1981 paper[150] an ability to predict M ≥ 2.6 earthquakes within 80 km of their observatory (in Greece) approximately seven hours beforehand, by measurements of 'seismic electric signals'. In 1996 Varotsos and other colleagues claimed to have predicted impending earthquakes within windows of several weeks, 100–120 km, and ±0.7 of the magnitude.

The VAN predictions have been severely criticised on various grounds, including being geophysically implausible,[151] "vague and ambiguous",[152] failing to satisfy prediction criteria,[153] and retroactive adjustment of parameters.[154] A critical review of 14 cases where VAN claimed 10 successes showed only one case where an earthquake occurred within the prediction parameters,[155] more likely a lucky coincidence than a "success". In the end the VAN predictions not only fail to do better than chance, but show "a much better association with the events which occurred before them."[156]

1989: Loma Prieta, USA

On October 17, 1989, the Mw 6.9 (Ms 7.1[157]) Loma Prieta ("World Series") earthquake (epicenter in the Santa Cruz Mountains northwest of San Juan Bautista, California) caused significant damage in the San Francisco Bay area of California.[158] The U.S. Geological Survey (USGS) reportedly claimed, twelve hours after the event, that it had "forecast" this earthquake in a report the previous year.[159] USGS staff subsequently claimed this quake had been "anticipated";[160] various other claims of prediction have also been made.[161]

Harris (1998) reviewed 18 papers (with 26 forecasts) dating from 1910 "that variously offer or relate to scientific forecasts of the 1989 Loma Prieta earthquake." (Forecast is often limited to a probabilistic estimate of an earthquake happening over some time period, distinguished from a more specific prediction.[162] However, in this case this distinction is not made.) None of these forecasts can be rigorously tested due to lack of specificity,[163] and where a forecast does bracket time and location it is because of such a broad window (e.g., covering the greater part of California for five years) as to lose any value as a prediction. Predictions that came close (but were given a probability of only 30%) had ten- or twenty-year windows.[164]
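
A simple conversion shows why a long window with a modest probability carries little predictive value. The sketch assumes, as a simplification, that earthquakes follow a time-independent Poisson process. The forecasts Harris reviewed did not necessarily use this model. Under that assumption, a probability P over a window of T years implies an annual rate λ = −ln(1 − P)/T, and the probability for any shorter window Δt is 1 − exp(−λΔt). The 30% figure is taken from the text; the function names are illustrative.

```python
from math import exp, log

def annual_rate(prob: float, years: float) -> float:
    """Poisson rate implied by probability `prob` of at least one event in `years`."""
    return -log(1.0 - prob) / years

def window_prob(rate: float, years: float) -> float:
    """Probability of at least one event in a window of `years` at a constant `rate`."""
    return 1.0 - exp(-rate * years)

for window in (10.0, 20.0):
    lam = annual_rate(0.30, window)
    print(f"30% in {window:.0f} yr -> rate {lam:.4f}/yr, "
          f"{window_prob(lam, 1.0):.1%} in any single year")
```

Spread evenly, a 30% chance over ten or twenty years amounts to only about 3.5% or 1.8% in any given year. That is far too low to justify specific action at a specific time.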

Of the several prediction methods used, perhaps the most debated was the M8 algorithm used by Keilis-Borok and associates in four forecasts.[165] The first of these forecasts missed both magnitude (M 7.5) and time (a five-year window from Jan. 1, 1984, through Dec. 31, 1988). They did get the location, by including most of California and half of Nevada.[166] A subsequent revision, presented to the NEPEC, extended the time window to July 1, 1992, and reduced the location to only central California; the magnitude remained the same. A figure they presented had two more revisions, for M ≥ 7.0 quakes in central California. The five-year time window for one ended in July 1989, and so missed the Loma Prieta event; the second revision extended to 1990, and so included Loma Prieta.[167]

Harris describes two differing views about whether the Loma Prieta earthquake was predicted. One view argues it did not occur on the San Andreas fault (the focus of most of the forecasts), and involved dip-slip (vertical) movement rather than strike-slip (horizontal) movement, and so was not predicted.[168] The other view argues that it did occur in the San Andreas fault zone, and released much of the strain accumulated since the 1906 San Francisco earthquake; therefore several of the forecasts were correct.[169] Hough states that "most seismologists" do not believe this quake was predicted "per se".[170] In a strict sense there were no predictions, only forecasts, which were only partially successful.

Iben Browning claimed to have predicted the Loma Prieta event, but (as will be seen in the next section) this claim has been rejected.

1990: New Madrid, USA (Browning)

Dr. Iben Browning (a scientist by virtue of a Ph.D. degree in zoology and training as a biophysicist, but no training or experience in geology, geophysics, or seismology) was an "independent business consultant" who forecast long-term climate trends for businesses, including publication of a newsletter.[171] He seems to have been enamored of the idea (scientifically unproven) that volcanoes and earthquakes are more likely to be triggered when the tidal force of the sun and the moon coincide to exert maximum stress on the earth's crust.[172] Having calculated when these tidal forces maximize, Browning then "projected"[173] what areas he thought might be ripe for a large earthquake. An area he mentioned frequently was the New Madrid Seismic Zone at the southeast corner of the state of Missouri, the site of three very large earthquakes in 1811–1812, which he coupled with the date of December 3, 1990.

Browning's reputation and perceived credibility were boosted when he claimed in various promotional flyers and advertisements to have predicted (among various other events[174]) the Loma Prieta earthquake of October 17, 1989.[175] The National Earthquake Prediction Evaluation Council (NEPEC) eventually formed an Ad Hoc Working Group (AHWG) to evaluate Browning's prediction. Its report (issued October 18, 1990) specifically rejected the claim of a successful prediction of the Loma Prieta earthquake;[176] examination of a transcript of his talk in San Francisco on October 10 showed he had said only: "there will probably be several earthquakes around the world, Richter 6+, and there may be a volcano or two" — which, on a global scale, is about average for a week — with no mention of any earthquake anywhere in California.[177]
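
A Poisson estimate can check the remark that several M6+ earthquakes worldwide is "about average for a week". The sketch assumes a long-term global rate of roughly 140 earthquakes of magnitude 6 or greater per year. That round figure is an assumption of the order given in USGS earthquake statistics. The sketch also treats occurrences as independent, ignoring aftershock clustering.

```python
from math import exp, factorial

ANNUAL_M6_PLUS = 140.0  # assumed global rate of M >= 6 earthquakes per year (approximate)
weekly_mean = ANNUAL_M6_PLUS / (365.25 / 7)  # expected number in one week

def poisson_at_least(k: int, mean: float) -> float:
    """P(N >= k) for a Poisson-distributed count N with the given mean."""
    return 1.0 - sum(exp(-mean) * mean**i / factorial(i) for i in range(k))

print(f"expected M6+ quakes per week: {weekly_mean:.1f}")
for k in (2, 3):
    print(f"P(at least {k} in a week) = {poisson_at_least(k, weekly_mean):.0%}")
```

Under these assumptions, a week with two or more such earthquakes somewhere in the world is the norm, and three or more occur about half the time. A statement of that kind is therefore very likely to come true whether or not it has any predictive content.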

Though the AHWG report thoroughly demolished Browning's claims of prior success and the basis of his "projection", it made little impact, coming after a year of continued claims of a successful prediction, the endorsement and support of geophysicist David Stewart,[178] and the tacit endorsement of many public authorities in their preparations for a major disaster, all of which was amplified by massive exposure in all major news media.[179] The result was predictable. According to Tierney:

... no forecast associated with an earthquake (or for that matter with any other hazard in the U. S.) has ever generated the degree of concern and public involvement that was observed with the Browning prediction.[180]

On December 3, despite tidal forces and the presence of some 30 TV and radio crews, nothing happened.[181]

1998: Iceland (Crampin)

Crampin, Volti & Stefánsson (1999) claimed a successful prediction — what they called a stress forecast — of an M 5 earthquake in Iceland on 13 November 1998 through observations of what is called shear wave splitting. This claim has been disputed;[182] a rigorous statistical analysis found that the result was as likely due to chance as not.[183]

"The 2004 Parkfield earthquake, with its lack of obvious precursors, demonstrates that reliable short-term earthquake prediction still is not achievable."

2004 & 2005: Southern California, USA (Keilis-Borok)

The M8 algorithm (developed under the leadership of Dr. Vladimir Keilis-Borok at UCLA) gained considerable respect through the apparently successful predictions of the 2003 San Simeon and Hokkaido earthquakes.[184] Great interest was therefore generated by the announcement in early 2004 of a predicted M ≥ 6.4 earthquake to occur somewhere within an area of southern California of approximately 12,000 sq. miles, on or before 5 September 2004.[185] In evaluating this prediction the California Earthquake Prediction Evaluation Council (CEPEC) noted that this method had not yet made enough predictions for statistical validation, and was sensitive to input assumptions. It therefore concluded that no "special public policy actions" were warranted, though it reminded all Californians "of the significant seismic hazards throughout the state."[186] The predicted earthquake did not occur.

A very similar prediction was made for an earthquake on or before August 14, 2005, in approximately the same area of southern California. The CEPEC's evaluation and recommendation were essentially the same, this time noting that the previous prediction and two others had not been fulfilled.[187] This prediction also failed.

"Despite over a century of scientific effort, the understanding of earthquake predictability remains immature."

— ICEF, 2011[188]

2009: L'Aquila, Italy (Giuliani)

At 3:32 in the morning of 6 April 2009, the Abruzzo region of central Italy was rocked by an M 6.3 earthquake.[189] In the city of L'Aquila and the surrounding area some sixty thousand buildings, including many homes, collapsed or were seriously damaged, resulting in 308 deaths and leaving 67,500 people homeless.[190] Hard upon the news of the earthquake came news that one Giampaolo Giuliani had predicted this earthquake, had tried to warn the public, but had been muzzled by the Italian government.[191]

Closer examination shows a more subtle story. Giampaolo Giuliani is a laboratory technician at the Laboratori Nazionali del Gran Sasso. As a hobby he has for some years been monitoring radon (a short-lived radioactive gas that has been implicated as an earthquake precursor), using instruments he has designed and built. Prior to the L'Aquila earthquake he was unknown to the scientific community, and had not published any kind of scientific work.[192] Giuliani's rise to fame may be dated to when he was interviewed on March 24, 2009, by an Italian-language blog, Donne Democratiche, about a swarm of low-level earthquakes in the Abruzzo region that had started the previous December. He reportedly said that this swarm was normal, and would diminish by the end of March. On March 30 L'Aquila was struck by a magnitude 4.0 temblor, the largest to date.[193]

One source says that on the 27th Giuliani warned the mayor of L'Aquila there could be an earthquake within 24 hours. There was indeed an earthquake, but none larger than about M 2.3.[194]

On March 29 he made a second prediction.[195] The details are hazy, but apparently he telephoned the mayor of the town of Sulmona, about 55 kilometers southeast of L'Aquila, to expect a "damaging" (or even "catastrophic") earthquake within 6 to 24 hours. This is the incident with the loudspeaker vans warning the inhabitants of Sulmona (not L'Aquila) to evacuate, with consequential panic. Nothing ensued, except that Giuliani was cited for procurato allarme (inciting public alarm) and enjoined from making public predictions.[196]

After the L'Aquila event Giuliani claimed that he had found alarming rises in radon levels just hours before.[197] Although he reportedly claimed to have "phoned urgent warnings to relatives, friends and colleagues" on the evening before the earthquake hit,[198] the International Commission on Earthquake Forecasting for Civil Protection, after interviewing Giuliani, found that there had been no valid prediction of the mainshock before its occurrence.[199]

At a public meeting, a group of seven experts downplayed Giuliani's predictions and stated that a large earthquake was probably not coming. Although they had stated that earthquakes cannot be predicted, and despite Giuliani's previous failed predictions, all seven were convicted of involuntary manslaughter in October 2012. The charges turned less on a failure to predict the quake than on the undue reassurance the group was said to have given the public. The conviction was widely criticized by the scientific community; Malcolm Sperrin, a British scientist, said:

If the scientific community is to be penalised for making predictions that turn out to be incorrect, or for not accurately predicting an event that subsequently occurs, then scientific endeavour will be restricted to certainties only, and the benefits that are associated with findings, from medicine to physics, will be stalled.[200]

Is earthquake prediction impossible?

As the preceding examples show, the record of earthquake prediction has been disappointing.[201] Even where earthquakes have unambiguously occurred within the parameters of a prediction, statistical analysis has generally shown these to be no better than lucky guesses. The optimism of the 1970s that routine prediction of earthquakes would be "soon", perhaps within ten years,[202] was coming up disappointingly short by the 1990s,[203] and many scientists began wondering why. By 1997 it was being positively stated that earthquakes cannot be predicted,[204] which led to a notable debate in 1999 on whether prediction of individual earthquakes is a realistic scientific goal.[205] For many the question is whether the prediction of individual earthquakes is merely hard, or intrinsically impossible.

Has earthquake prediction failed only because it is "fiendishly difficult"[206] and still beyond the current competency of science? Despite the confident announcement four decades ago that seismology was "on the verge" of making reliable predictions,[207] there may yet be an underestimation of the difficulties. As early as 1978 it was reported that earthquake rupture might be complicated by "heterogeneous distribution of mechanical properties along the fault",[208] and in 1986 that geometrical irregularities in the fault surface "appear to exert major controls on the starting and stopping of ruptures".[209] Another study attributed significant differences in fault behavior to the maturity of the fault.[210] These kinds of complexities are not reflected in current prediction methods.[211]

Seismology may even yet lack an adequate grasp of its most central concept, elastic rebound theory. A simulation that explored assumptions regarding the distribution of slip found results "not in agreement with the classical view of the elastic rebound theory". (This was attributed to details of fault heterogeneity not accounted for in the theory.[212])

Or is earthquake prediction intrinsically impossible? It has been argued that the Earth is in a state of self-organized criticality "where any small earthquake has some probability of cascading into a large event".[213] It has also been argued on decision-theoretic grounds that "prediction of major earthquakes is, in any practical sense, impossible."[214]
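
Self-organized criticality is usually illustrated with the Bak–Tang–Wiesenfeld sandpile. This is a toy cellular automaton, not a model of any real fault, and it is offered here only to make the idea concrete. Grains are dropped one at a time on random cells of a grid. Any cell holding four or more grains topples, passing one grain to each of its four neighbours, and grains pushed off the edge are lost. Most added grains do nothing, but once the pile has organized itself into a critical state, an occasional grain sets off a cascade of topplings across much of the grid. This is the sense of "any small earthquake has some probability of cascading into a large event". The grid size, number of drops, and random seed are arbitrary choices.

```python
import random
from collections import Counter

def drop_grain(grid, n, r, c):
    """Add one grain at (r, c), relax the pile, and return the number of topplings."""
    grid[r][c] += 1
    stack = [(r, c)]
    topplings = 0
    while stack:
        i, j = stack.pop()
        if grid[i][j] < 4:
            continue  # already stable (cells may be pushed more than once)
        grid[i][j] -= 4
        topplings += 1
        if grid[i][j] >= 4:
            stack.append((i, j))
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            a, b = i + di, j + dj
            if 0 <= a < n and 0 <= b < n:  # grains leaving the grid are lost
                grid[a][b] += 1
                if grid[a][b] >= 4:
                    stack.append((a, b))
    return topplings

def simulate(n=20, drops=20000, seed=1):
    """Drop grains at random cells; return the final grid and each avalanche size."""
    rng = random.Random(seed)
    grid = [[0] * n for _ in range(n)]
    sizes = [drop_grain(grid, n, rng.randrange(n), rng.randrange(n))
             for _ in range(drops)]
    return grid, sizes

grid, sizes = simulate()
counts = Counter(sizes)
print("drops causing no toppling:", counts[0])
print("largest avalanche (topplings):", max(sizes))
```

In this picture, the triggering grain is indistinguishable from the many that cause nothing. That is the intuition behind the argument that large events may be intrinsically unpredictable.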

That earthquake prediction might be intrinsically impossible has been strongly disputed.[215] But the best disproof of impossibility, effective earthquake prediction, has yet to be demonstrated.[216]

"... predicting earthquakes is challenging and maybe possible in the future ..."

See also

- Forecasting

- Earthquake engineering

- Earthquake storm

- Earthquake weather

- Pacific Ring of Fire

- Quakesat

- Supermoon

- National Earthquake Prediction Evaluation Council

- Coordinating Committee for Earthquake Prediction, Japan

Notes

- ^ Geller et al. 1997, p. 1616.

- ^ Kagan 1997b, p. 507.

- ^ Kanamori 2003, p. 1205. See also ICEF 2011, p. 327. Not all scientists distinguish "prediction" and "forecast", but it is useful, and will be observed in this article.

- ^ Thomas 1983.

- ^ a b Atwood & Major 1998.

- ^ a b Mabey 2001.

- ^ Geller et al. 1997.

- ^ Geller 1997, §2.3, p. 427; Console 2001, p. 261.

- ^ Kagan 1997b; Geller 1997. See also Nature Debates.

- ^ Kington 2012

- ^ Quoted by Hough 2007, p. 253. Scholz 1997 quotes a variant: "Bah, no one but fools and charlatans try to predict earthquakes!"

- ^ From USGS: Earthquake statistics and Earthquakes and Seismicity.

- ^ USGS: Earthquake Facts and Statistics

- ^ USGS: Modified Mercalli Intensity Scale, level VI.

- ^ See Jackson 1996a, p. 3772, for an example.

- ^ Allen 1976, p. 2070; PEP 1976, p. 6.

- ^ Geller 1997, §4.7, p. 437.

- ^ Jackson 2004 examines this in depth.

- ^ See Jolliffe & Stephenson 2003, §3.2.2, Nurmi 2003, §4.1, and Zechar 2008, Table 2.6, for details.

- ^ This is a point which many scientific papers get wrong. See Barnes et al. 2009.

- ^ Mulargia & Gasperini 1992, p. 32; Luen & Stark 2008, p. 302.

- ^ Luen & Stark 2008; Console 2001.

- ^ Jackson 1996a, p. 3775.

- ^ Luen & Stark 2008, p. 302; Kafka & Ebel 2011.

- ^ Hough 2010b relates how several claims of successful predictions are statistically flawed. For a deeper view of the pitfalls of the null hypothesis see Stark 1997 and Luen & Stark 2008.

- ^ The manslaughter charges against the seven scientists and technicians in Italy are not so much for failing to predict the L'Aquila earthquake (where some 300 people died) as for giving undue assurance to the populace (one victim called it "anaesthesizing") that there would be no serious earthquake, and therefore no need to take precautions. Hall 2011; Cartlidge 2011. Additional details in Cartlidge 2012.

- ^ It has been reported that members of the Chinese Academy of Sciences were purged for "having ignored scientific predictions of the disastrous Tangshan earthquake of summer 1976." Wade 1977.

- ^ Geller 1997, §5.2, p. 437.

- ^ The L'Aquila earthquake came after three months of tremors, but many devastating earthquakes hit with no warning at all.

- ^ One study (Zechar 2008, p. 18, table 2.5) calculated (for the method and data studied) a result distribution of 2 correct predictions, 2 misses, and 19 false alarms.

- ^ Details at Southern SAF Working Group 1991, pp. 1–2. See Jordan & Jones 2010 for examples.

- ^ Kagan 1999, p. 234, and quoting Ben-Menahem (1995) on p. 235; ICEF 2011, p. 360.

- ^ Evison 1999, p. 769.

- ^ ICEF 2011, p. 338.

- ^ Geller 1997, p. 429, §3.

- ^ E.g., Claudius Aelianus, in De natura animalium, book 11, commenting on the destruction of Helike in 373 BC, but writing five centuries later.

- ^ Rikitake 1979, p. 294. Cicerone, Ebel & Britton 2009 has a more recent compilation.

- ^ Jackson 2004, p. 335.

- ^ Geller (1997, p. 425): "Extensive searches have failed to find reliable precursors." Jackson (2004, p. 348): "The search for precursors has a checkered history, with no convincing successes." Zechar & Jordan (2008, p. 723): "The consistent failure to find reliable earthquake precursors...". ICEF (2009): "... no convincing evidence of diagnostic precursors."

- ^ Wyss & Booth 1997, p. 424.

- ^ ICEF 2011, p. 338.

- ^ ICEF 2011, p. 361.

- ^ Turner (visiting China after the Haicheng earthquake) was commenting on being "told repeatedly of the importance of animal behavior". Turner 1993, p. 456.

- ^ From De natura animalium, book 11, quoted by Roger Pearse at A myth-take about Helice, the earthquake, and Diodorus Siculu. See also http://www.helike.org/.

- ^ As an illustration of how myths develop: the destruction of Helike is thought by some to be the origin of the story of Atlantis.

- ^ Lighton & Duncan 2005.

- ^ Lindberg, Skiles & Hayden 1981.

- ^ ABC News reported: "Sixth Sense? Zoo Animals Sensed Quake Early". Miller, Patrick & Capatides 2011

- ^ According to a press release from the Zoo (National Zoo Animals React to the Earthquake, August 23, 2011; see also Miller, Patrick & Capatides 2011 [ABC News]) most of the activity was co-seismic. It was also reported that the red lemurs "called out 15 minutes before the quake", which would be well before the arrival of the P waves. Lacking any other details it is impossible to say whether the lemur activity was in any way connected with the quake, or was merely a chance activity that was given significance for happening just before the quake, a failing typical of such reports.

- ^ Geller (1997, p. 432) calls such reports "doubly dubious": they fail to distinguish precursory behavior from ordinary behavior, and also depend on human observers who have just undergone a traumatic experience.

- ^ ICEF 2011, p. 336.

- ^ Hammond 1973. Additional references in Geller 1997, §2.4.

- ^ Scholz, Sykes & Aggarwal 1973; Smith 1975a.

- ^ Aggarwal et al. 1975.

- ^ Hough 2010b, p. 110.

- ^ a b Allen 1983.

- ^ ICEF 2011, p. 333. For a fuller account of radon as an earthquake precursor see Immè & Morelli 2012.

- ^ Giampaolo Giuliani's claimed prediction of the L'Aquila earthquake was based on monitoring of radon levels.

- ^ Cicerone, Ebel & Britton 2009, p. 382.

- ^ ICEF 2011, p. 334. See also Hough 2010b, pp. 93–95.

- ^ Immè & Morelli 2012, p. 158.

- ^ Varotsos, Alexopoulos & Nomicos 1981, described by Mulargia & Gasperini 1992, p. 32, and Kagan 1997b, §3.3.1, p. 512.

- ^ Varotsos et al. 1986.

- ^ Varotsos et al. 1986; Varotsos & Lazaridou 1991.

- ^ Bernard 1992; Bernard & LeMouel 1996.

- ^ Mulargia & Gasperini 1992; Mulargia & Gasperini 1996; Wyss 1996; Kagan 1997b.

- ^ Varotsos & Lazaridou 1991.

- ^ Wyss & Allmann 1996.

- ^ Zoback 2006 provides a clear explanation.

- ^ These include the type of rock and fault geometry.

- ^ Schwartz & Coppersmith 1984; Tiampo & Shcherbakov 2012, p. 93, §2.2.

- ^ UCERF 2008.

- ^ Bakun & Lindh 1985, p. 619. Of course these were not the only earthquakes in this period. The attentive reader will recall that, in seismically active areas, earthquakes of some magnitude happen fairly constantly. The "Parkfield earthquakes" are either the ones noted in the historical record, or were selected from the instrumental record on the basis of location and magnitude. Jackson & Kagan (2006, p. S399) and Kagan (1997a, pp. 211–212, 213) argue that the selection parameters can bias the statistics, and that sequences of four or six quakes, with different recurrence intervals, are also plausible.

- ^ Bakun & Lindh 1985, p. 621.

- ^ Jackson & Kagan 2006, p. S408.

- ^ Jackson & Kagan 2006.

- ^ Kagan & Jackson 1991, p. 21,420; Stein, Friedrich & Newman 2005; Jackson & Kagan 2006; Tiampo & Shcherbakov 2012, §2.2, and references there; Kagan, Jackson & Geller 2012. See also the Nature debates.

- ^ Young faults are expected to have complex, irregular surfaces, which impedes slippage. In time these rough spots are ground off, changing the mechanical characteristics of the fault. Cowan, Nicol & Tonkin 1996; Stein & Newman 2004, p. 185.

- ^ Stein & Newman 2004.

- ^ Scholz 2002, p. 284, §5.3.3; Kagan & Jackson 1991, p. 21,419; Jackson & Kagan 2006, p. S404.

- ^ Kagan & Jackson 1991, p. 21,419; McCann et al. 1979; Rong, Jackson & Kagan 2003.

- ^ Jackson & Kagan 2006, p. S404.

- ^ Lomnitz & Nava 1983.

- ^ Rong, Jackson & Kagan 2003, p. 23.

- ^ Kagan & Jackson 1991, Summary.

- ^ See details in Tiampo & Shcherbakov 2012, §2.4.

- ^ CEPEC 2004a. The CEPEC said these two quakes were properly predicted, but this is questionable in the absence of documentation of pre-event publication. The point is important because M8 tends to generate many alarms, and without irrevocable pre-event publication of all alarms it is difficult to determine whether there is a bias towards publishing only the successful results. Lack of reliable documentation regarding these predictions is also why they are not included in the list below.

- ^ Hough 2010b, pp. 142–149.

- ^ Zechar 2008; Hough 2010b, p. 145.

- ^ Zechar 2008, p. 7. See also p. 26.

- ^ Tiampo & Shcherbakov 2012, §2.1. Hough 2010b, chapter 12, provides a good description.

- ^ Hardebeck, Felzer & Michael 2008, par. 6.

- ^ Hough 2010b, pp. 154–155.

- ^ Tiampo & Shcherbakov 2012, §2.1, p. 93.

- ^ Hardebeck, Felzer & Michael (2008, §4) show how a suitable selection of parameters can instead yield DMR: Decelerating Moment Release.

- ^ Hardebeck, Felzer & Michael 2008, par. 1, 73.

- ^ Mignan 2011, Abstract.

- ^ Aggarwal et al. 1975, p. 718. See also Smith 1975 and Scholz, Sykes & Aggarwal 1973.

- ^ Aggarwal et al. 1975, p. 719. The statement is ambiguous as to whether this was the first such success by any method, or the first by the method they used.

- ^ Hough 2010b, p. 110.

- ^ Suzuki (1982, p. 244) cites several studies that did not find the phenomena reported by Aggarwal et al. See also Turcotte (1991), who says (p. 266): "The general consensus today is that the early observations were an optimistic interpretation of a noisy signal."

- ^ E.g.: Davies 1975; Whitham et al. 1976, p. 265; Hammond 1976; Ward 1978; Kerr 1979, p. 543; Allen 1982, p. S332; Rikitake 1982; Zoback 1983; Ludwin 2001; Jackson 2004, p. 335; ICEF 2011, pp. 328, 351.

- ^ Jackson 2004, p. 344.

- ^ Whitham et al. 1976, p. 266.

- ^ Raleigh et al. (1977), quoted in Geller 1997, p. 434. Geller has a whole section (§4.1) of discussion and many sources. See also Kanamori 2003, pp. 1210–11.

- ^ Quoted in Geller 1997, p. 434. Lomnitz (1994, Ch. 2) describes some of the circumstances attending the practice of seismology at that time; Turner 1993, pp. 456–458 has additional observations.

- ^ Measurement of an uplift has been claimed, but that was 185 km away, and likely surveyed by inexperienced amateurs. Jackson 2004, p. 345.

- ^ Kanamori 2003, p. 1211. According to Wang et al. 2006 foreshocks were widely understood to precede a large earthquake, "which may explain why various [local authorities] made their own evacuation decisions" (p. 762).

- ^ Wang et al. 2006.

- ^ Wang et al. 2006, p. 785.

- ^ "... in well instrumented areas." PEP 1976, p. 2. Further on (p. 31) the Panel states: "A program for routine announcement of reliable predictions may be 10 or more years away, although there will be, of course, many announcements of predictions (as, indeed, there already have been) long before such a systematic program is set up." According to Allen (1982, p. S331) "a certain euphoria of imminent victory pervaded the earthquake-prediction community...." See Geller 1997 §2.3 for additional quotes.

- ^ "Time-dependent Vp and Vp/Vs in an area of the Transverse Ranges of southern California", presented to the American Geophysical Union annual meeting. Allen 1983.

- ^ Shapley 1976.

- ^ Kerr 1981c.

- ^ Whitcomb 1976; Allen 1983, p. 79.

- ^ Stevenson, Talwani & Amick 1976.

- ^ Talwani 1981, pp. 385, 386. These results are somewhat problematic: in the study, ten Vs/Vp anomalies and ten M ≥ 2.0 events occurred, but only six coincided (Talwani 1981, pp. 381, 386, and see table), and there is no explanation of why only two were selected as predictions.

- ^ Kerr 1978.

- ^ Geller 1997, §4.3, p. 435.

- ^ Geller 1991.

- ^ Ohtake, Matumoto & Latham 1981, p. 53.

- ^ Ohtake, Matumoto & Latham 1977, p. 375. See also pp. 381–383.

- ^ Whiteside & Haberman, 1989, quoted in Geller 1997, §4.2. Lomnitz (1994, p. 122) says: "We had neglected to report our data in time for inclusion".

- ^ The UT administrative spokesman reportedly suggested a disaster on the order of the M 6.2 1972 Managua earthquake, in which over 5,000 people died. Lomnitz 1983, p. 30.

- ^ Lomnitz (1983), McNally (1979, p. 30), and Lomnitz (1994, pp. 122–127) provide details.

- ^ Suzuki 1982, p. 235.

- ^ a b Roberts 1983, §4, p. 151.

- ^ Hough 2010, p. 114.

- ^ Gersony 1982b, p. 231.

- ^ Gersony 1982b, document 85, p. 247.

- ^ Quoted by Roberts 1983, p. 151. Copy of statement in Gersony 1982b, document 86, p. 248.

- ^ The chairman of the NEPEC later complained to the Agency for International Development that one of its staff members had been instrumental in encouraging Brady and promulgating his prediction long after it had been scientifically discredited. See Gersony (1982b), document 146 (p. 201) and following.

- ^ Gersony 1982b, document 116, p. 343; Roberts 1983, p. 152.

- ^ John Filson, deputy chief of the USGS Office of Earthquake Studies, quoted by Hough 2010, p. 116.

- ^ Gersony 1982b, document 147, p. 422, U.S. State Dept. cablegram.

- ^ Hough 2010, p. 117.

- ^ Gersony 1982b, p. 416; Kerr 1981.

- ^ Giesecke 1983, p. 68.

- ^ Crampin 1987. The "recent advances" Crampin refers to are his work on shear-wave splitting (SWS).

- ^ Geller (1997, §6) describes some of the coverage. The most anticipated prediction ever is likely Iben Browning's 1990 New Madrid prediction (discussed below), but it lacked any scientific basis.

- ^ Near the small town of Parkfield, California, roughly halfway between San Francisco and Los Angeles.

- ^ Bakun & McEvilly 1979; Bakun & Lindh 1985; Kerr 1984.

- ^ Bakun et al. 1987.

- ^ Kerr 1984, "How to Catch an Earthquake". See also Roeloffs & Langbein 1994.

- ^ Roeloffs & Langbein 1994, p. 316.

- ^ Quoted by Geller 1997, p. 440.

- ^ Kerr 2004; Bakun et al. 2005, Harris & Arrowsmith 2006, p. S5.

- ^ Hough 2010b, p. 52.

- ^ It has also been argued that the actual quake differed from the kind expected (Jackson & Kagan 2006), and that the prediction was no more significant than a simpler null hypothesis (Kagan 1997a).

- ^ Varotsos, Alexopoulos & Nomicos 1981, described by Kagan 1997b, §3.3.1, p. 512, and Mulargia & Gasperini 1992, p. 32.

- ^ Jackson 1996b, p. 1365; Mulargia & Gasperini 1996, p. 1324.

- ^ Geller 1997, §4.5, p. 436: "VAN’s ‘predictions’ never specify the windows, and never state an unambiguous expiration date. Thus VAN are not making earthquake predictions in the first place."

- ^ Jackson 1996b, p. 1363. Also: Rhoades & Evison (1996), p. 1373: No one "can confidently state, except in the most general terms, what the VAN hypothesis is, because the authors of it have nowhere presented a thorough formulation of it."

- ^ Kagan & Jackson 1996, p. 1434.

- ^ Geller 1997, Table 1, p. 436.

- ^ Mulargia & Gasperini 1992, p. 37. They continue: "In particular, there is little doubt that the occurrence of a ‘large event’ (Ms ≥ 5.8) has been followed by a VAN prediction with essentially identical epicentre and magnitude with a probability too large to be ascribed to chance."

- ^ Ms, the surface wave magnitude, is a measure of the intensity of surface shaking.

- ^ Harris 1998, p. B18.

- ^ Garwin 1989.

- ^ USGS staff 1990, p. 247.

- ^ Kerr 1989; Harris 1998.

- ^ E.g., ICEF 2011, p. 327.

- ^ Harris 1998, p. B22.

- ^ Harris 1990, Table 1, p. B5.

- ^ Harris 1998, pp. B10–B11.

- ^ Harris 1990, p. B10, and figure 4, p. B12.

- ^ Harris 1990, p. B11, figure 5.

- ^ Geller (1997, §4.4) cites several authors to say "it seems unreasonable to cite the 1989 Loma Prieta earthquake as having fulfilled forecasts of a right-lateral strike-slip earthquake on the San Andreas Fault."

- ^ Harris 1990, pp. B21–B22.

- ^ Hough 2010b, p. 143.

- ^ Spence et al. 1993 (USGS Circular 1083) is the most comprehensive and most thorough study of the Browning prediction, and appears to be the main source of most other reports. In the following notes, where an item is found in this document the PDF pagination is shown in brackets.

- ^ A report on Browning's prediction cited over a dozen studies of possible tidal triggering of earthquakes, but concluded that "conclusive evidence of such a correlation has not been found". AHWG 1990, p. 10 [62]. It also found Browning's identification of a particular high tide as triggering a particular earthquake "difficult to justify".

- ^ According to a note in Spence et al. (p. 4): "Browning preferred the term projection, which he defined as determining the time of a future event based on calculation. He considered 'prediction' to be akin to tea-leaf reading or other forms of psychic foretelling." See also Browning's own comment on p. 36 [44].

- ^ Including "a 50/50 probability that the federal government of the U.S. will fall in 1992." Spence et al. 1993, p. 39 [47].

- ^ Spence et al. 1993, pp. 9–11 [17–19 (pdf)], and see various documents in Appendix A, including The Browning Newsletter for November 21, 1989 (p. 26 [34]).

- ^ AHWG 1990, p. iii [55]. Included in Spence et al. 1993 as part of Appendix B, pp. 45–66 [53–75].

- ^ AHWG 1990, p. 30 [72].

- ^ Previously involved in a psychic prediction of an earthquake for North Carolina in 1975 (Spence et al. 1993, p. 13 [21]), Stewart sent a 13-page memo to a number of colleagues extolling Browning's supposed accomplishments, including predicting Loma Prieta. Spence et al. 1993, p. 29 [37].

- ^ See Spence et al. 1993 throughout.

- ^ Tierney 1993, p. 11.

- ^ A subsequent brochure for a Browning video tape stated: "the media got it wrong." Spence et al. 1993, p. 40 [48]. Browning died of a heart attack seven months later (p. 4 [12]).

- ^ Jordan & Jones 2011.

- ^ Seher & Main 2004.

- ^ CEPEC 2004a; Hough 2010b, pp. 145–146.

- ^ CEPEC 2004a.

- ^ CEPEC 2004a.

- ^ CEPEC 2004b.

- ^ ICEF 2011, p. 360.

- ^ ICEF 2011, p. 320.

- ^ Alexander 2010, p. 326.