Vapnik–Chervonenkis dimension

In statistical learning theory and computational learning theory, the VC dimension (for Vapnik–Chervonenkis dimension) is a measure of the capacity (complexity, expressive power, richness, or flexibility) of a space of functions that can be learned by a statistical classification algorithm. It is defined as the cardinality of the largest set of points that the algorithm can shatter. It is a core concept in Vapnik–Chervonenkis theory, and was originally defined by Vladimir Vapnik and Alexey Chervonenkis.

Informally, the capacity of a classification model is related to how complicated it can be. For example, consider the thresholding of a high-degree polynomial: if the polynomial evaluates above zero, that point is classified as positive, otherwise as negative. A high-degree polynomial can be wiggly, so it can fit a given set of training points well. But one can expect that the classifier will make errors on other points, because it is too wiggly. Such a polynomial has a high capacity. A much simpler alternative is to threshold a linear function. This function may not fit the training set well, because it has a low capacity. This notion of capacity is made rigorous below.

Shattering

A classification model with some parameter vector is said to shatter a set of data points if, for all assignments of labels to those points, there exists a such that the model makes no errors when evaluating that set of data points.

The VC dimension of a model is the maximum number of points that can be arranged so that shatters them. More formally, it is the maximum integer such that some data point set of cardinality can be shattered by .

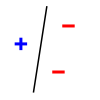

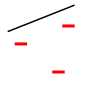

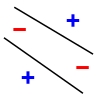

For example, consider a straight line as the classification model: the model used by a perceptron. The line should separate positive data points from negative data points. There exist sets of 3 points that can indeed be shattered using this model (any 3 points that are not collinear can be shattered). However, not all set of 4 points can be shattered: by Radon's theorem, any four points can be partitioned into two subsets with intersecting convex hulls, so it is not possible to separate one of these two subsets from the other. Thus, the VC dimension of this particular classifier is 3. It is important to remember that while one can choose any arrangement of points, the arrangement of those points cannot change when attempting to shatter for some label assignment. Note, only 3 of the 23 = 8 possible label assignments are shown for the three points.

|

|

|

|

| 3 points shattered | 4 points impossible | ||

Uses

In statistical learning theory

The VC dimension can predict a probabilistic upper bound on the test error of a classification model. Vapnik[1] proved that the probability of the test error distancing from an upper bound (on data that is drawn i.i.d. from the same distribution as the training set) is given by:

where is the VC dimension of the classification model, , and is the size of the training set (restriction: this formula is valid when . When is larger, the test-error may be much higher than the training-error. This is due to overfitting).

The VC dimension also appears in sample-complexity bounds. A space of binary functions with VC dimension can be learned with:

samples, where is the learning error and is the failure probability. Thus, the sample-complexity is a linear function of the VC dimension of the hypothesis space.

The VC dimension is one of the critical parameters in the size of ε-nets, which determines the complexity of approximation algorithms based on them; range sets without finite VC dimension may not have finite ε-nets at all.

Generalizations

The VC dimension is defined for spaces of binary functions (functions to {0,1}). Several generalizations have been suggested for spaces of non-binary functions.

- For multi-valued functions (functions to {0,...,n}), the Natarajan dimension[2] can be used. Ben David et al[3] present a generalization of this concept.

- For real-valued functions (e.g. functions to a real interval, [0,1]), Pollard's pseudo-dimension[4][5][6] can be used.

- The Rademacher complexity provides similar bounds to the VC, and can sometimes provide more insight than VC dimension calculations into such statistical methods such as those using kernels.

See also

- Sauer–Shelah lemma, a bound on the number of sets in a set system in terms of the VC dimension.

- Karpinski-Macintyre theorem,[7] a bound on the VC dimension of general Pfaffian formulas.

Footnotes

References

- Moore, Andrew. "VC dimension tutorial".

- Vapnik, Vladimir (2000). The nature of statistical learning theory. Springer.

{{cite book}}: Invalid|ref=harv(help) - Vapnik, V.; Chervonenkis, A. (1971). "On the uniform convergence of relative frequencies of events to their probabilities". Theory of Probability and its Applications. 16 (2): 264–280.

- Blumer, A.; Ehrenfeucht, A.; Haussler, D.; Warmuth, M. K. (1989). "Learnability and the Vapnik–Chervonenkis dimension" (PDF). Journal of the ACM. 36 (4): 929–865.

- Burges, Christopher. "Tutorial on SVMs for Pattern Recognition" (PDF). (containing information also for VC dimension)

- Chazelle, Bernard. "The Discrepancy Method".

- Natarajan, B.K. (1989). "On Learning sets and functions". Machine Learning. 4: 67–97.

{{cite journal}}: Invalid|ref=harv(help) - Ben-David, Shai; Cesa-Bianchi, Nicolò; Long, Philip M. (1992). "Characterizations of learnability for classes of {O, …, n}-valued functions". Proceedings of the fifth annual workshop on Computational learning theory - COLT '92. p. 333. doi:10.1145/130385.130423. ISBN 089791497X.

{{cite conference}}: Invalid|ref=harv(help) - Pollard, D. (1984). Convergence of Stochastic Processes. Springer. ISBN 9781461252542.

{{cite book}}: Invalid|ref=harv(help) - Anthony, Martin; Bartlett, Peter L. (2009). Neural Network Learning: Theoretical Foundations. ISBN 9780521118620.

{{cite book}}: Invalid|ref=harv(help) - Morgenstern, Jamie H.; Roughgarden, Tim (2015). On the Pseudo-Dimension of Nearly Optimal Auctions. NIPS. arXiv:1506.03684.

{{cite conference}}: Invalid|ref=harv(help) - Karpinski, Marek; Macintyre, Angus (February 1997). "Polynomial Bounds for VC Dimension of Sigmoidal and General Pfaffian Neural Networks". Journal of Computer and System Sciences. 54 (1): 169–176. doi:10.1006/jcss.1997.1477.

{{cite journal}}: Invalid|ref=harv(help)CS1 maint: date and year (link)

![{\displaystyle \Pr \left({\text{test error}}\leqslant {\text{training error}}+{\sqrt {{\frac {1}{N}}\left[D\left(\log \left({\tfrac {2N}{D}}\right)+1\right)-\log \left({\tfrac {\eta }{4}}\right)\right]}}\right)=1-\eta ,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/fedf2a28deab07459cf7701c2c5adbd6ff7d6289)