Depth map: Difference between revisions

m Bot: link syntax and minor changes

FavoritoHJS (talk | contribs) Fixed the "Uses" list and a citation that User:S Muhammad Hossein Mousavi had trouble with.

Revision as of 16:18, 5 April 2021


In 3D computer graphics and computer vision, a depth map is an image or image channel that contains information relating to the distance of the surfaces of scene objects from a viewpoint. The term is related to and may be analogous to depth buffer, Z-buffer, Z-buffering and Z-depth.[1] The "Z" in these latter terms relates to a convention that the central axis of view of a camera is in the direction of the camera's Z axis, and not to the absolute Z axis of a scene.

Examples

- Cubic Structure

- Depth Map: Nearer is darker

- Depth Map: Nearer the Focal Plane is darker

Two different depth maps can be seen here, together with the original model from which they are derived. The first depth map shows luminance in proportion to the distance from the camera: nearer surfaces are darker and further surfaces are lighter. The second depth map shows luminance in relation to the distance from a nominal focal plane: surfaces closer to the focal plane are darker and surfaces further from it are lighter, whether they lie nearer to or further from the viewpoint.[citation needed]
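The two encodings described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the linear mapping, the clamping range (`near`, `far`, `max_offset`) and the 0 to 255 grey scale are all hypothetical choices, not something the example images are known to use.

```python
def depth_to_luminance(depth, near, far):
    """Map camera distances in [near, far] to grey levels 0..255.

    Nearer surfaces come out darker, further surfaces lighter.
    The linear mapping and the clamping are assumptions of this sketch.
    """
    span = far - near
    image = []
    for row in depth:
        out_row = []
        for d in row:
            t = min(max((d - near) / span, 0.0), 1.0)  # clamp to [0, 1]
            out_row.append(round(t * 255))
        image.append(out_row)
    return image


def focal_distance_to_luminance(depth, focal, max_offset):
    """Map the distance from a nominal focal plane to grey levels 0..255.

    Surfaces on the focal plane are darkest; surfaces in front of it or
    behind it both become lighter as they move away from it.
    """
    image = []
    for row in depth:
        out_row = []
        for d in row:
            t = min(abs(d - focal) / max_offset, 1.0)
            out_row.append(round(t * 255))
        image.append(out_row)
    return image


# A one-row "scene" with surfaces at distances 0, 1 and 5 units.
scene = [[0.0, 1.0, 5.0]]
camera_map = depth_to_luminance(scene, near=0.0, far=5.0)
focal_map = focal_distance_to_luminance(scene, focal=1.0, max_offset=4.0)
```

In the second map the surface at distance 1 lies on the focal plane and is therefore the darkest pixel, while the surfaces at 0 and 5 are both lighter even though one is nearer to the camera and the other further away.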

Uses

Depth maps have a number of uses, including:

- Simulating the effect of uniformly dense semi-transparent media within a scene - such as fog, smoke or large volumes of water.

- Simulating shallow depths of field - where some parts of a scene appear to be out of focus. Depth maps can be used to selectively blur an image to varying degrees. A shallow depth of field can be a characteristic of macro photography and so the technique may form a part of the process of miniature faking.

- Z-buffering and z-culling, techniques which can be used to make the rendering of 3D scenes more efficient. They can be used to identify objects that are hidden from view and may therefore be ignored for some rendering purposes. This is particularly important in real-time applications such as computer games, where a fast succession of completed renders must be available in time to be displayed at a regular and fixed rate.

- Shadow mapping - part of one process used to create shadows cast by illumination in 3D computer graphics. In this use, the depth maps are calculated from the perspective of the lights, not the viewer.[2]

- To provide the distance information needed to generate autostereograms, and in other related applications intended to create the illusion of 3D viewing through stereoscopy.

- Subsurface scattering - can be used as part of a process for adding realism by simulating the semi-transparent properties of translucent materials such as human skin.

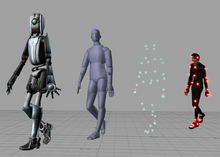

- In computer vision, single-view or multi-view depth maps, or other types of images, are used to model or reconstruct 3D shapes.[3] Depth maps can be generated by 3D scanners[4] or reconstructed from multiple images.[5]

- In machine vision and computer vision, to allow 3D images to be processed by 2D image tools.

- Making depth image datasets.[6]
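Two of the uses listed above, fog simulation and Z-buffering, can be sketched with the Python standard library. The sketch rests on assumptions: the function names are hypothetical, the fog uses an exponential falloff with a single `density` parameter (one common model for a uniformly dense medium, not one specified here), and the Z-buffer handles individual fragments rather than rasterized triangles.

```python
import math


def apply_fog(color, depth, fog_color, density):
    """Blend one RGB pixel toward fog_color according to its depth.

    Assumes exponential attenuation: the fraction of the original colour
    that survives is exp(-density * depth).
    """
    transmittance = math.exp(-density * depth)
    return tuple(
        transmittance * c + (1.0 - transmittance) * f
        for c, f in zip(color, fog_color)
    )


def zbuffer_composite(fragments, width, height):
    """Keep, for every pixel, the fragment nearest to the viewer.

    fragments is an iterable of (x, y, depth, color) tuples.
    Returns (color_buffer, depth_buffer); the depth buffer that results
    is itself a depth map of the visible surfaces.
    """
    depth_buf = [[math.inf] * width for _ in range(height)]
    color_buf = [[None] * width for _ in range(height)]
    for x, y, d, color in fragments:
        if d < depth_buf[y][x]:  # depth test: nearer fragment wins
            depth_buf[y][x] = d
            color_buf[y][x] = color
    return color_buf, depth_buf


fragments = [
    (0, 0, 5.0, "red"),
    (0, 0, 2.0, "blue"),   # nearer than red, so it replaces it
    (1, 0, 3.0, "green"),
    (0, 0, 4.0, "grey"),   # further than blue, so it is discarded
]
colors, depths = zbuffer_composite(fragments, width=2, height=1)
```

The depth test is what lets a renderer skip hidden surfaces: the grey fragment fails the comparison against the stored depth of 2.0 and never needs to be shaded.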

Limitations

- Single channel depth maps record the first surface seen, and so cannot display information about those surfaces seen or refracted through transparent objects, or reflected in mirrors. This can limit their use in accurately simulating depth of field or fog effects.

- Single channel depth maps cannot convey multiple distances where they occur within the view of a single pixel. This may occur where more than one object occupies the location of that pixel. This could be the case - for example - with models featuring hair, fur or grass. More generally, edges of objects may be ambiguously described where they partially cover a pixel.

- Depending on the intended use of a depth map, it may be useful or necessary to encode the map at a higher bit depth. For example, an 8-bit depth map can represent at most 256 distinct distances.

- Depending on how they are generated, depth maps may represent the perpendicular distance between an object and the plane of the scene camera. For example, a scene camera pointing directly at - and perpendicular to - a flat surface may record a uniform distance for the whole surface. In this case, geometrically, the actual distances from the camera to the areas of the plane surface seen in the corners of the image are greater than the distances to the central area. For many applications, however, this discrepancy is not a significant issue.
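The last two limitations can be made concrete with a short Python sketch. It is illustrative only: the function names are hypothetical, the quantizer assumes a linear encoding between `near` and `far`, and the conversion assumes an ideal pinhole camera with focal lengths `fx`, `fy` and principal point `cx`, `cy` given in pixels.

```python
import math


def quantize_depth(d, near, far, bits=8):
    """Encode distance d linearly into an integer code of the given bit depth.

    Returns (code, decoded_distance) so the rounding error is visible.
    """
    levels = (1 << bits) - 1  # 255 for 8 bits, 65535 for 16 bits
    t = min(max((d - near) / (far - near), 0.0), 1.0)
    code = round(t * levels)
    return code, near + (code / levels) * (far - near)


def planar_to_radial(z, u, v, fx, fy, cx, cy):
    """Convert perpendicular (planar) depth z at pixel (u, v) into the
    straight-line distance from the camera centre, for a pinhole camera."""
    x = (u - cx) / fx  # normalized image coordinates
    y = (v - cy) / fy
    return z * math.sqrt(x * x + y * y + 1.0)


# Two surfaces 1 cm apart in a 10 m range share one 8-bit code...
code8_a, _ = quantize_depth(5.10, 0.0, 10.0, bits=8)
code8_b, _ = quantize_depth(5.11, 0.0, 10.0, bits=8)
# ...but get different codes at 16 bits.
code16_a, _ = quantize_depth(5.10, 0.0, 10.0, bits=16)
code16_b, _ = quantize_depth(5.11, 0.0, 10.0, bits=16)

# A flat wall at planar depth 2: the centre pixel and an off-centre pixel
# store the same value, but their true distances from the camera differ.
centre = planar_to_radial(2.0, u=320, v=240, fx=320.0, fy=320.0, cx=320.0, cy=240.0)
edge = planar_to_radial(2.0, u=640, v=240, fx=320.0, fy=320.0, cx=320.0, cy=240.0)
```

Here `centre` equals the stored planar depth, while `edge` is larger by a factor of the square root of 2 because that pixel's viewing ray is 45 degrees off the optical axis.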

References

- ^ Computer Arts / 3D World Glossary[permanent dead link], Document retrieved 26 January 2011.

- ^ Eisemann, Elmar; Schwarz, Michael; Assarsson, Ulf; Wimmer, Michael (19 April 2016). Real-Time Shadows. CRC Press. ISBN 978-1-4398-6769-3.

- ^ a b Soltani, A. A.; Huang, H.; Wu, J.; Kulkarni, T. D.; Tenenbaum, J. B. "Synthesizing 3D Shapes via Modeling Multi-View Depth Maps and Silhouettes With Deep Generative Networks". Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 1511-1519.

- ^ Schuon, Sebastian, et al. "Lidarboost: Depth superresolution for tof 3d shape scanning[permanent dead link]." Computer Vision and Pattern Recognition, 2009. CVPR 2009. IEEE Conference on. IEEE, 2009.

- ^ Malik, Aamir Saeed, ed. Depth Map and 3D Imaging Applications: Algorithms and Technologies[permanent dead link]. IGI Global, 2011.

- ^ Mousavi, Seyed Muhammad Hossein; Mirinezhad, S. Younes (January 2021). "Iranian kinect face database (IKFDB): a color-depth based face database collected by kinect v.2 sensor". SN Applied Sciences. 3 (1). doi:10.1007/s42452-020-03999-y. ISSN 2523-3963.