Wikipedia:STiki

| |

| Developer(s) | Andrew G. West (west.andrew.g) |

|---|---|

| Initial release | June 2010 |

| Stable release | 2.0 / January 17, 2012 |

| Written in | Java |

| Platform | Cross-platform |

| Available in | English |

| Type | Vandalism detection on Wikipedia |

| License | GNU General Public License |

| Website | http://www.cis.upenn.edu/~westand |

| Warning: You take full responsibility for any action you perform using STiki. You must read and understand Wikipedia policies and use this tool within these policies, or risk being blocked from editing. |

STiki is a utility for the detection and reversion of vandalism on Wikipedia. STiki uses state-of-the-art detection methods to determine which edits should be shown to end users. If a displayed edit is vandalism, STiki then streamlines the reversion and warning process. Critically, STiki is a collaborative approach to reverting vandalism, not a user-centric one; the list of edits to be inspected is consumed in a crowd-sourced fashion. STiki is not a Wikipedia bot; it is an intelligent routing tool that directs human users to potential vandalism for definitive classification.

To date, STiki has been used to revert 1,265,447 instances of vandalism on Wikipedia (see the "leader-board").

Multiple approaches (scoring systems) are used to determine which edits will be displayed: some authored by STiki's developers and others by third parties. An end user can choose which scoring system they pull edits from. Currently implemented scoring systems include:

| STiki (metadata) | ClueBot NG | WikiTrust | Anti-Spam |

|---|---|---|---|

| The "original" queue used by STiki, which applies a machine-learning algorithm to metadata features to arrive at vandalism predictions. More detail about this technique is available in the "Metadata scoring and origins" section below. | ClueBot NG uses an Artificial Neural Network (ANN) to score edits, and the worst-scoring edits are undone automatically. However, there are many edits that CBNG is quite confident are vandalism but cannot revert due to a low false-positive tolerance, the one-revert rule, or other constraints. These scores are consumed from an IRC feed. | The WikiTrust system of Adler et al. is built upon editor reputations calculated from content persistence. More details are available at their website. WikiTrust scores are consumed via their API. | Now active! Parses new external links from revisions and measures their external link spam potential. See the "Link spam scoring" section below for additional details. |

Download

- Front-end GUI, distributed as an executable *.JAR. After unzipping, double-click the *.JAR file to launch (Windows, OS X), or issue the terminal command "java -jar STiki_exec_[date].jar" (UNIX).

- STiki remains in active development, both the front-end GUI and back-end scoring systems. Check back frequently for updated versions. Note that due to a significant code change, versions dated 2010-11-28 and older are non-functional; an upgrade is required.

- Advanced users: STiki Source (1952 kB) --- Link Processing Component (114 kB)

Architecture

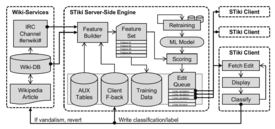

STiki uses a server/client architecture:

(1) Backend processing: The backend watches all recent changes to Wikipedia and calculates or fetches the probability that each is vandalism. This engine calculates scores for the Metadata Scoring System, and uses APIs/feeds to retrieve the scores calculated by third-party systems. Edits populate a series of inter-linked priority queues, in which the vandalism score serves as the insertion priority. Queue maintenance ensures that only the most recent edit to an article is eligible to be viewed. Backend work is done on STiki's servers (hosted at the University of Pennsylvania), relying heavily on a MySQL database.
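The queue behavior described above can be illustrated with a minimal sketch. This is not STiki's actual implementation (which is written in Java and backed by MySQL); the class name `EditQueue`, its methods, and the in-memory storage are all hypothetical, chosen only to show how score-ordered insertion and the "most recent edit per article" rule might interact.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class EditQueue:
    """Hypothetical in-memory stand-in for one backend scoring queue."""
    _heap: list = field(default_factory=list)    # entries: (-score, rev_id, article)
    _latest: dict = field(default_factory=dict)  # article -> newest queued rev_id

    def add(self, article: str, rev_id: int, score: float) -> None:
        # Ignore a revision older than one already queued for this article.
        if rev_id < self._latest.get(article, -1):
            return
        self._latest[article] = rev_id
        # heapq is a min-heap, so negate the score to pop the highest first.
        heapq.heappush(self._heap, (-score, rev_id, article))

    def pop(self):
        """Return (article, rev_id, score) for the top live edit, or None."""
        while self._heap:
            neg_score, rev_id, article = heapq.heappop(self._heap)
            # Lazily discard entries superseded by a newer edit to the article.
            if self._latest.get(article) == rev_id:
                del self._latest[article]
                return article, rev_id, -neg_score
        return None


queue = EditQueue()
queue.add("Foo", rev_id=1, score=0.9)
queue.add("Bar", rev_id=2, score=0.5)
queue.add("Foo", rev_id=3, score=0.2)  # supersedes revision 1 of "Foo"
first = queue.pop()
second = queue.pop()
```

In this sketch, superseded entries are left in the heap and skipped when popped, which avoids searching the heap on every new edit. Whether the real backend removes stale entries eagerly or lazily is not stated here.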

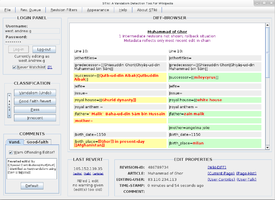

(2) Frontend GUI: The user-facing GUI is a Java desktop application. It displays diffs that likely contain vandalism (per the backend) to human users and asks for definitive classification. STiki streamlines the process of reverting poor edits and issuing warnings/AIV-notices to guilty editors. The interface is designed to enable quick review. Moreover, the classification process establishes a feedback loop to improve detection algorithms.

Metadata scoring and origins

Here we highlight a particular scoring system, based on machine-learning over metadata properties. This system was developed by the same authors as the STiki frontend GUI, was the only system shipped with the first versions, and shares a code-base/distribution with the STiki GUI. This system also gave the entire software package its name (derived from Spatio Temporal processing on Wikipedia), though this acronymic meaning is now downplayed.

The "metadata system" examines only four fields of an edit when scoring: (1) timestamp, (2) editor, (3) article, and (4) revision comment. These fields are used to calculate features pertaining to the editor's registration status, edit time-of-day, edit day-of-week, geographical origin, page history, category memberships, revision comment length, etc. These signals are given to an ADTree classifier to arrive at vandalism probabilities. The ML models are trained over classifications provided on the STiki frontend. A more rigorous discussion of the technique can be found in a EUROSEC 2010 publication.
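To make the idea of metadata features concrete, the following sketch derives a few of the simpler signals from the edit fields. The function name `metadata_features` and the feature keys are hypothetical, and several simplifications are assumed: time-of-day is taken in UTC, an editor name that parses as an IP address is treated as unregistered, and features that need external data (geographical origin, page history, category memberships) are omitted, so the article field is not used. The ADTree classifier itself is not shown.

```python
import ipaddress
from datetime import datetime, timezone


def metadata_features(timestamp: datetime, editor: str, comment: str) -> dict:
    """Derive a few illustrative metadata features (hypothetical names)."""
    # Assumption: unregistered editors are identified by their IP address.
    try:
        ipaddress.ip_address(editor)
        is_unregistered = True
    except ValueError:
        is_unregistered = False

    # Assumption: time features are computed in UTC. Pass a timezone-aware
    # datetime, since astimezone() treats a naive one as local time.
    ts = timestamp.astimezone(timezone.utc)
    return {
        "editor_is_unregistered": is_unregistered,
        "hour_of_day_utc": ts.hour,
        "day_of_week": ts.weekday(),  # Monday = 0
        "comment_length": len(comment),
    }


when = datetime(2012, 1, 17, 14, 30, tzinfo=timezone.utc)  # a Tuesday
anon = metadata_features(when, "192.0.2.7", "fixed typo")
registered = metadata_features(when, "West.andrew.g", "")
```

A dictionary of this kind would then be converted to a numeric feature vector before being passed to a classifier.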

An API has been developed to give other researchers/developers access to the raw metadata features and the resulting vandalism probabilities. A README describes API details.

The paper was an academic attempt to show that language properties were not necessary to detect Wikipedia vandalism. It succeeded in this regard, but since then the system has been relaxed for general-purpose use. For example, the engine now includes some simple language features. Moreover, other scoring systems have since been integrated into the GUI frontend.

Link spam scoring

As the core STiki engine processes revisions for vandalism, it also parses diffs for the addition of new external links. When one is found, it is passed to the link processor to have its spam potential analyzed. For each link, a feature vector of ~50 elements is constructed and given to a machine-learning classifier. Those features fall into one of three categories:

- Wikipedia metadata: In addition to re-using features from the anti-vandalism classifier, these also include link-specific signals such as the length of the URL, whether it is a citation, the TLD of the URL, and metrics capturing the addition history of the URL and its domain.

- Landing site analysis: For each site linked, the processor visits the page and obtains the source code (usually, HTML). In turn, this is analyzed to measure a site's commercial intention, offensive content, and the use of SEO tactics.

- Third-party data: First, Alexa data is used to learn about historical traffic patterns at the URL. This also provides interesting features pertaining to the age of the website, whether it contains adult content, and its quantity of backlinks. Second, the Google Safe Browsing project is queried. This allows URLs that distribute malware or engage in phishing to be identified.
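As a rough illustration of the first category, the sketch below computes three of the link-specific signals named above (URL length, TLD, and citation status). The function name `link_features` and the `inside_ref_tag` flag are hypothetical; how the real link processor decides that a link is a citation is not described here, so the caller is assumed to supply that. Landing-site analysis and third-party lookups are omitted because they require network access.

```python
from urllib.parse import urlsplit


def link_features(url: str, inside_ref_tag: bool) -> dict:
    """Compute a few illustrative link-specific features (hypothetical names)."""
    host = urlsplit(url).hostname or ""
    # Take the last dot-separated label of the host as the TLD.
    tld = host.rsplit(".", 1)[-1] if "." in host else ""
    return {
        "url_length": len(url),
        "tld": tld,
        "is_citation": inside_ref_tag,  # assumption: determined by the caller
    }


features = link_features("http://www.example.com/page", inside_ref_tag=True)
```

A full feature vector of roughly 50 elements would combine signals like these with the landing-site and third-party features.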

A more formal description of the technique can be found in a WikiSym'11 paper, motivated in part by vulnerabilities and observations from a CEAS'11 paper. A Wikimania 2011 presentation discussed the live implementation of that technique (i.e., the software described in this section). Just as with anti-vandalism, the feedback from GUI use will help in refining the future accuracy of this technique. Orthogonal to the spam-detection task, the processor also reports dead links it encounters to WP:STiki/Dead_links, where they can be patrolled by humans to help address the issue of link rot on Wikipedia.

Related works and cooperation

Comparison to other tools: There are many tools in both literature and active use on Wikipedia that aim to curb vandalism (e.g. Huggle, Twinkle, and ClueBot NG). STiki does not intend to compete against these alternative systems, but to complement them. In some cases, STiki has integrated these strategies by adding additional revision queues to its system. STiki's novelty lies in the fact that it is an intelligent routing tool, directing humans to those edits needing inspection. In some ways, STiki is similar to the vandalism reversion GUI Huggle. However, Huggle uses simple static rules to prioritize edits, whereas STiki uses dynamic machine-learning strategies. Further, STiki is written in Java and is platform independent.

Data availability: STiki's authors are committed to working towards collaborative solutions to vandalism. To this end, an API provides access to STiki's internally calculated scores. A live feed of scores is also published to channel "#arm-stiki-scores" on IRC server "armstrong.cis.upenn.edu". Moreover, all STiki code is open-sourced. In the course of our research, we have collected large amounts of data, both passively regarding Wikipedia, and through users' active use of the STiki tool. We are interested in sharing this data with other researchers. Finally, STiki distributions contain a program called the Offline Review Tool (ORT), which allows a user-provided set of edits to be quickly reviewed and annotated. We believe this tool will prove helpful to corpus-building researchers.

Credits and more information

STiki was written by Andrew G. West (west.andrew.g), a doctoral student in computer science at the University of Pennsylvania. The academic paper which shaped the STiki methodology was co-authored by Sampath Kannan and Insup Lee. The work was supported in part by ONR-MURI-N00014-07-1-0907.

In addition to the already discussed academic paper, there have been several STiki-specific write-ups/publications that may prove useful to anti-vandalism developers. The STiki software was presented in a WikiSym 2010 demonstration, and a WikiSym 2010 poster visualizes this content and provides some STiki-revert statistics. STiki was also presented at Wikimania 2010, with accompanying presentation slides. An additional writing (not peer reviewed) examines STiki and anti-vandalism techniques as they relate to the larger issue of trust in collaborative applications.

Beyond STiki in isolation, a CICLing 2011 paper examined STiki's metadata scoring technique relative to (and in combination with) NLP and content-persistence features (the top 2 finishers from the 2010 PAN Competition), setting new performance baselines in the process. A 2011 edition of the PAN-CLEF competition was also held and required multiple natural languages to be processed; our entry won at all tasks. Finally, a Wikimania 2011 presentation surveyed the rapid anti-vandalism progress (both academic and on-wiki) of the 2010-2011 time period.

Queries not addressed by these writings should be addressed to STiki's authors.

Userboxes and awards

For those who would like to show their support for STiki via a userbox, the following have been created/made-available:

- {{User:West.andrew.g/STiki UserBox 1}}

- {{User:West.andrew.g/STiki UserBox 2}}

| Award | Message |

|---|---|

| The da Vinci Barnstar | I used to be a staunch Huggle user for about a year. Then when I stumbled across STiki, I found it to be faster and much more enjoyable to use. Consider me converted. :) Orphan Wiki 15:21, 30 January 2011 (UTC) |

| The da Vinci Barnstar | In recognition of an outstanding technical achievement. :) Ϫ 23:00, 22 February 2011 (UTC) |