Mean: Difference between revisions

→Small sample sizes: {{ move portions|section=y }} |

|||

| Line 14: | Line 14: | ||

As well as statistics, means are often used in geometry and analysis; a wide range of means have been developed for these purposes, which are not much used in statistics. Examples of means are listed below. |

As well as statistics, means are often used in geometry and analysis; a wide range of means have been developed for these purposes, which are not much used in statistics. Examples of means are listed below. |

||

Mean: a person who is rude |

|||

==Examples of means== |

|||

Example: Nick Hoover |

|||

=== Arithmetic mean (AM) === |

=== Arithmetic mean (AM) === |

||

{{Main|Arithmetic |

{{Main|Arithmetic meanh}} |

||

The ''arithmetic mean'' is the "standard" average, often simply called the "mean". |

The ''arithmetic mean'' is the "standard" average, often simply called the "mean". |

||

Revision as of 05:37, 6 March 2013

In statistics, mean has three related meanings:[1]

- the arithmetic mean of a sample (distinguished from the geometric mean or harmonic mean).

- the expected value of a random variable.

- the mean of a probability distribution.

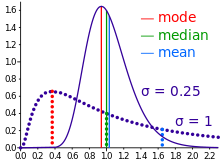

There are other statistical measures of central tendency that should not be confused with means - including the 'median' and 'mode'. Statistical analyses also commonly use measures of dispersion, such as the range, interquartile range, or standard deviation. Note that not every probability distribution has a defined mean; see the Cauchy distribution for an example.

For a data set, the arithmetic mean is equal to the sum of the values divided by the number of values. The arithmetic mean of a set of numbers $x_1, x_2, \ldots, x_n$ is typically denoted by $\bar{x}$, pronounced "x bar". If the data set is based on a series of observations obtained by sampling from a statistical population, the arithmetic mean is termed the "sample mean" ($\bar{x}$) to distinguish it from the "population mean" ($\mu$ or $\mu_x$).[2] For a finite population, the population mean of a property is equal to the arithmetic mean of that property taken over every member of the population. For example, the population mean height is equal to the sum of the heights of every individual divided by the total number of individuals.

The sample mean may differ from the population mean, especially for small samples. The law of large numbers dictates that the larger the size of the sample, the more likely it is that the sample mean will be close to the population mean.[3]

For a probability distribution, the mean is equal to the sum or integral over every possible value weighted by the probability of that value. In the case of a discrete probability distribution, the mean of a discrete random variable x is computed by taking the product of each possible value of x and its probability P(x), and then adding all these products together, giving $\mu = \sum x P(x)$.[4]
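The discrete case can be sketched in a few lines of Python. The function name `discrete_mean` and the fair-die distribution are illustrative assumptions, not part of the article; exact fractions are used so that the probabilities sum to exactly 1.

```python
from fractions import Fraction


def discrete_mean(distribution):
    """Return the sum of x * P(x) for a dict mapping value -> probability."""
    # With exact Fractions this comparison is safe; floats would need a tolerance.
    if sum(distribution.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in distribution.items())


# Hypothetical example: a fair six-sided die, each face with probability 1/6.
fair_die = {face: Fraction(1, 6) for face in range(1, 7)}
die_mean = discrete_mean(fair_die)
print(die_mean)  # 7/2
```

Here the mean, 7/2, is not itself a possible outcome of the die, which illustrates that the mean of a distribution need not be one of its values.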

As well as statistics, means are often used in geometry and analysis; a wide range of means have been developed for these purposes, which are not much used in statistics. Examples of means are listed below.

Examples of means

Arithmetic mean (AM)

The arithmetic mean is the "standard" average, often simply called the "mean".

For example, the arithmetic mean of the five values 4, 36, 45, 50, 75 is

$$\frac{4 + 36 + 45 + 50 + 75}{5} = \frac{210}{5} = 42.$$

The mean may often be confused with the median, mode or range. The mean is the arithmetic average of a set of values, or distribution; however, for skewed distributions, the mean is not necessarily the same as the middle value (median), or the most likely (mode). For example, mean income is skewed upwards by a small number of people with very large incomes, so that the majority have an income lower than the mean. By contrast, the median income is the level at which half the population is below and half is above. The mode income is the most likely income, and favors the larger number of people with lower incomes. The median or mode are often more intuitive measures of such data.

Nevertheless, many skewed distributions are best described by their mean – such as the exponential and Poisson distributions.

Geometric mean (GM)

The geometric mean is an average that is useful for sets of positive numbers that are interpreted according to their product and not their sum (as is the case with the arithmetic mean), e.g. rates of growth.

For example, the geometric mean of the five values 4, 36, 45, 50, 75 is

$$(4 \times 36 \times 45 \times 50 \times 75)^{1/5} = \sqrt[5]{24\,300\,000} = 30.$$

Harmonic mean (HM)

The harmonic mean is an average which is useful for sets of numbers which are defined in relation to some unit, for example speed (distance per unit of time).

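For example, the harmonic mean of the five values 4, 36, 45, 50, 75 is

$$\frac{5}{\frac{1}{4} + \frac{1}{36} + \frac{1}{45} + \frac{1}{50} + \frac{1}{75}} = \frac{5}{\frac{1}{3}} = 15.$$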

Relationship between AM, GM, and HM

For positive numbers, AM, GM, and HM satisfy these inequalities:

$$\mathrm{AM} \ge \mathrm{GM} \ge \mathrm{HM}$$

Equality holds only when all the elements of the given sample are equal.
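As a quick check of the three worked examples and of the inequality chain, the following sketch uses Python's standard `statistics` module (`geometric_mean` requires Python 3.8 or later). The variable names are illustrative only.

```python
import statistics

values = [4, 36, 45, 50, 75]

am = statistics.mean(values)            # arithmetic mean
gm = statistics.geometric_mean(values)  # geometric mean (floating point)
hm = statistics.harmonic_mean(values)   # harmonic mean

print(am, gm, hm)

# The chain AM >= GM >= HM holds for these positive values.
print(am >= gm >= hm)
```

The results are 42, approximately 30 and approximately 15; the geometric and harmonic means may differ from the exact values in the last decimal places because of floating-point rounding.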

Generalized means

Power mean

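The generalized mean, also known as the power mean or Hölder mean, is an abstraction of the quadratic, arithmetic, geometric and harmonic means. It is defined for a set of n positive numbers $x_i$ by

$$\bar{x}(m) = \left( \frac{1}{n} \sum_{i=1}^{n} x_i^m \right)^{1/m}.$$

By choosing different values for the parameter m, the following types of means are obtained:

- $m \to \infty$: maximum

- $m = 2$: quadratic mean

- $m = 1$: arithmetic mean

- $m \to 0$: geometric mean

- $m = -1$: harmonic mean

- $m \to -\infty$: minimum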
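A minimal sketch of the power mean follows, assuming the usual definition in which the n-th root of the mean of the m-th powers is taken and the cases m → 0 and m → ±∞ are handled as limits. The function name `power_mean` is an illustrative choice.

```python
import math


def power_mean(values, m):
    """Power (Hölder) mean of positive numbers with exponent m."""
    if not values or any(x <= 0 for x in values):
        raise ValueError("values must be a non-empty sequence of positive numbers")
    n = len(values)
    if m == 0:
        # Limit m -> 0 is the geometric mean, computed via logarithms.
        return math.exp(sum(math.log(x) for x in values) / n)
    if m == math.inf:
        return max(values)   # limit m -> +infinity
    if m == -math.inf:
        return min(values)   # limit m -> -infinity
    return (sum(x ** m for x in values) / n) ** (1 / m)


values = [4, 36, 45, 50, 75]
for m in (-math.inf, -1, 0, 1, 2, math.inf):
    print(m, power_mean(values, m))
```

For a fixed data set the printed values do not decrease as m increases, which is consistent with the ordering HM ≤ GM ≤ AM.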

ƒ-mean

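This can be generalized further as the generalized f-mean

$$\bar{x} = f^{-1}\left( \frac{1}{n} \sum_{i=1}^{n} f(x_i) \right)$$

and again a suitable choice of an invertible ƒ will give

| ƒ(x) | Resulting mean |
|---|---|
| $f(x) = x$ | arithmetic mean, |
| $f(x) = \frac{1}{x}$ | harmonic mean, |
| $f(x) = x^m$ | power mean, |
| $f(x) = \ln x$ | geometric mean. |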
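The generalized f-mean can be sketched as a higher-order function that takes ƒ and its inverse as arguments. The name `f_mean` and its signature are assumptions made for this illustration.

```python
import math


def f_mean(values, f, f_inverse):
    """Generalized f-mean: apply f, take the arithmetic mean, apply the inverse."""
    return f_inverse(sum(f(x) for x in values) / len(values))


values = [4, 36, 45, 50, 75]

arithmetic = f_mean(values, lambda x: x, lambda y: y)
harmonic = f_mean(values, lambda x: 1 / x, lambda y: 1 / y)
geometric = f_mean(values, math.log, math.exp)
quadratic = f_mean(values, lambda x: x ** 2, math.sqrt)  # power mean with m = 2

print(arithmetic, harmonic, geometric, quadratic)
```

Passing the inverse explicitly keeps the sketch simple; it is the caller's responsibility to supply a matching pair of functions.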

Weighted arithmetic mean

The weighted arithmetic mean (or weighted average) is used if one wants to combine average values from samples of the same population with different sample sizes:

$$\bar{x} = \frac{\sum_{i=1}^{n} w_i x_i}{\sum_{i=1}^{n} w_i}.$$

The weights $w_i$ represent the sizes of the partial samples. In other applications they represent a measure of the reliability of the respective values' influence on the mean.
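The following sketch combines the means of two samples of different sizes and compares the result with the mean of the pooled data. The sample values are hypothetical and the function name `weighted_mean` is an illustrative choice.

```python
def weighted_mean(values, weights):
    """Weighted arithmetic mean: sum(w_i * x_i) / sum(w_i)."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(w * x for x, w in zip(values, weights)) / total_weight


# Hypothetical data: two samples drawn from the same population.
sample_a = [2, 4, 6]
sample_b = [10]

sample_means = [sum(sample_a) / len(sample_a), sum(sample_b) / len(sample_b)]
sample_sizes = [len(sample_a), len(sample_b)]

combined = weighted_mean(sample_means, sample_sizes)
pooled = sum(sample_a + sample_b) / (len(sample_a) + len(sample_b))
print(combined, pooled)
```

Weighting each sample mean by its sample size reproduces the mean of the pooled observations, whereas the unweighted average of the two sample means (here 7) would not.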

Truncated mean

Sometimes a set of numbers might contain outliers, i.e., data values which are much lower or much higher than the others. Often, outliers are erroneous data caused by artifacts. In this case, one can use a truncated mean. It involves discarding given parts of the data at the top or the bottom end, typically an equal amount at each end, and then taking the arithmetic mean of the remaining data. The number of values removed is indicated as a percentage of the total number of values.
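A minimal sketch of a symmetric truncated mean is given below. It assumes that the same proportion is cut from each end and that a fractional count of values is rounded down; other rounding conventions exist. The data, including the outlier 1000, are hypothetical.

```python
def truncated_mean(values, proportion):
    """Arithmetic mean after discarding `proportion` of the values at each end."""
    if not 0 <= proportion < 0.5:
        raise ValueError("proportion must be in [0, 0.5)")
    ordered = sorted(values)
    cut = int(len(ordered) * proportion)  # values dropped at each end, rounded down
    kept = ordered[cut:len(ordered) - cut]
    return sum(kept) / len(kept)


# Hypothetical data in which 1000 is an outlier.
data = [4, 36, 45, 50, 75, 1000]

plain = sum(data) / len(data)
trimmed = truncated_mean(data, 0.2)  # drops one value at each end
print(plain, trimmed)
```

The untrimmed mean is pulled far above most of the data by the single outlier, while the 20% truncated mean stays close to the bulk of the values.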

Interquartile mean

The interquartile mean is a specific example of a truncated mean. It is simply the arithmetic mean after removing the lowest and the highest quarter of values:

$$\bar{x} = \frac{2}{n} \sum_{i = \frac{n}{4} + 1}^{\frac{3n}{4}} x_i$$

assuming the values have been ordered, so it is simply a specific example of a weighted mean for a specific set of weights.
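The interquartile mean can be sketched as follows for the simple case in which the number of values is a multiple of four, so that each quarter contains a whole number of values. That restriction, the function name and the data are assumptions of this illustration.

```python
def interquartile_mean(values):
    """Mean of the middle half of the ordered values (n must be a multiple of 4)."""
    ordered = sorted(values)
    n = len(ordered)
    if n == 0 or n % 4 != 0:
        raise ValueError("this sketch assumes a positive multiple of 4 values")
    quarter = n // 4
    middle = ordered[quarter:3 * quarter]  # drop lowest and highest quarter
    return 2 * sum(middle) / n


# Hypothetical data set of 12 values.
data = [5, 8, 4, 38, 8, 6, 9, 7, 7, 3, 1, 6]
iqm = interquartile_mean(data)
print(iqm)
```

For data sets whose size is not divisible by four, the values at the quartile boundaries are usually given fractional weights, which this sketch does not attempt.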

Mean of a function

In calculus, and especially multivariable calculus, the mean of a function is loosely defined as the average value of the function over its domain. In one variable, the mean of a function f(x) over the interval (a,b) is defined by

$$\bar{f} = \frac{1}{b-a} \int_a^b f(x)\,dx.$$

Recall that a defining property of the average value $\bar{y}$ of finitely many numbers $y_1, y_2, \dots, y_n$ is that $n\bar{y} = y_1 + y_2 + \cdots + y_n$. In other words, $\bar{y}$ is the constant value which when added to itself $n$ times equals the result of adding the $n$ terms $y_i$. By analogy, a defining property of the average value $\bar{f}$ of a function over the interval $[a,b]$ is that

$$\int_a^b \bar{f}\,dx = \int_a^b f(x)\,dx.$$

In other words, $\bar{f}$ is the constant value which when integrated over $[a,b]$ equals the result of integrating $f(x)$ over $[a,b]$. But by the second fundamental theorem of calculus, the integral of a constant $\bar{f}$ is just

$$\int_a^b \bar{f}\,dx = \bar{f}x \Big|_a^b = \bar{f}b - \bar{f}a = (b-a)\bar{f}.$$

See also the first mean value theorem for integration, which guarantees that if $f$ is continuous then there exists a point $c \in (a,b)$ such that

$$\int_a^b f(x)\,dx = f(c)(b-a).$$

The value $f(c)$ is called the mean value of $f(x)$ on $[a,b]$. So we write $\bar{f} = f(c)$ and rearrange the preceding equation to get the above definition.

In several variables, the mean over a relatively compact domain U in a Euclidean space is defined by

$$\bar{f} = \frac{1}{\operatorname{Vol}(U)} \int_U f.$$

This generalizes the arithmetic mean. On the other hand, it is also possible to generalize the geometric mean to functions by defining the geometric mean of f to be

$$\exp\left( \frac{1}{\operatorname{Vol}(U)} \int_U \log f \right).$$

More generally, in measure theory and probability theory, either sort of mean plays an important role. In this context, Jensen's inequality places sharp estimates on the relationship between these two different notions of the mean of a function.

There is also a harmonic average of functions and a quadratic average (or root mean square) of functions.
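The one-variable arithmetic and geometric means of a function can be approximated numerically. The sketch below uses a simple midpoint rule; the integration routine, the step count and the test functions are assumptions chosen for illustration, not a recommended numerical method.

```python
import math


def integrate_midpoint(f, a, b, steps=10_000):
    """Approximate the integral of f over [a, b] with the midpoint rule."""
    width = (b - a) / steps
    return width * sum(f(a + (i + 0.5) * width) for i in range(steps))


def function_mean(f, a, b):
    """Arithmetic mean of f on [a, b]: the integral divided by the length."""
    return integrate_midpoint(f, a, b) / (b - a)


def function_geometric_mean(f, a, b):
    """Geometric mean of a positive f on [a, b]: exp of the mean of log f."""
    return math.exp(function_mean(lambda x: math.log(f(x)), a, b))


def square(x):
    return x * x


def identity(x):
    return x


mean_of_square = function_mean(square, 0.0, 3.0)           # exact value: 3
am_identity = function_mean(identity, 1.0, math.e)          # exact value: (1 + e) / 2
gm_identity = function_geometric_mean(identity, 1.0, math.e)  # exact value: exp(1 / (e - 1))
print(mean_of_square, am_identity, gm_identity)
```

For the identity function on [1, e] the geometric mean comes out below the arithmetic mean, in line with the relationship that Jensen's inequality describes.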

Mean of a probability distribution

See expected value.

Mean of angles

Most of the usual means fail on circular quantities, such as angles, times of day, and fractional parts of real numbers. For those quantities a mean of circular quantities is needed.
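One common way to average angles, sketched here as an assumption rather than as the article's own definition, is to average the corresponding unit vectors and take the direction of the result. The function name and the sample angles are illustrative.

```python
import math


def circular_mean_degrees(angles):
    """Mean direction of angles in degrees, via the sum of unit vectors."""
    sin_sum = sum(math.sin(math.radians(a)) for a in angles)
    cos_sum = sum(math.cos(math.radians(a)) for a in angles)
    if math.hypot(sin_sum, cos_sum) < 1e-12:
        raise ValueError("the mean direction is undefined for these angles")
    return math.degrees(math.atan2(sin_sum, cos_sum)) % 360.0


# Hypothetical compass bearings clustered around north.
angles = [355.0, 5.0, 15.0]

naive = sum(angles) / len(angles)
circular = circular_mean_degrees(angles)
print(naive, circular)
```

The arithmetic mean of these bearings is 125 degrees, which points away from all three of them, while the circular mean is close to 5 degrees.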

Fréchet mean

The Fréchet mean provides a way of determining the "center" of a mass distribution on a surface or, more generally, a Riemannian manifold. Unlike many other means, the Fréchet mean is defined on a space whose elements cannot necessarily be added together or multiplied by scalars. It is sometimes also known as the Karcher mean (named after Hermann Karcher).

Other means

- Arithmetic-geometric mean

- Arithmetic-harmonic mean

- Cesàro mean

- Chisini mean

- Contraharmonic mean

- Distance-weighted estimator

- Elementary symmetric mean

- Geometric-harmonic mean

- Heinz mean

- Heronian mean

- Identric mean

- Lehmer mean

- Logarithmic mean

- Median

- Moving average

- Root mean square

- Rényi's entropy (a generalized f-mean)

- Stolarsky mean

- Weighted geometric mean

- Weighted harmonic mean

Properties

All means share some properties and additional properties are shared by the most common means. Some of these properties are collected here.

Weighted mean

A weighted mean M is a function which maps tuples of positive numbers to a positive number ($M : \mathbb{R}_{>0}^n \to \mathbb{R}_{>0}$)

such that the following properties hold:

- "Fixed point": M(1,1,...,1) = 1

- Homogeneity: M(λ x1, ..., λ xn) = λ M(x1, ..., xn) for all λ and xi. In vector notation: M(λ x) = λ Mx for all n-vectors x.

- Monotonicity: If xi ≤ yi for each i, then Mx ≤ My

It follows that:

- Boundedness: min x ≤ Mx ≤ max x

- Continuity: $\lim_{x \to y} Mx = My$

- There are means which are not differentiable. For instance, the maximum of a tuple is considered a mean (as an extreme case of the power mean, or as a special case of a median), but is not differentiable.

- All means listed above, with the exception of most of the generalized f-means, satisfy the presented properties.

- If f is bijective, then the generalized f-mean satisfies the fixed point property.

- If f is strictly monotonic, then the generalized f-mean also satisfies the monotonicity property.

- In general, a generalized f-mean will lack homogeneity.
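The last points can be checked numerically for one particular generalized f-mean. The sketch below takes ƒ = exp (so the inverse is the natural logarithm), which is an arbitrary choice made for illustration; the function name and the input values are likewise assumptions.

```python
import math


def exp_mean(values):
    """Generalized f-mean with f = exp and inverse log."""
    return math.log(sum(math.exp(x) for x in values) / len(values))


x = [1.0, 2.0]
scale = 2.0

fixed_point = exp_mean([1.0, 1.0, 1.0])            # should be (about) 1
mean_of_scaled = exp_mean([scale * v for v in x])  # M(lambda * x)
scaled_mean = scale * exp_mean(x)                  # lambda * M(x)

print(fixed_point, mean_of_scaled, scaled_mean)
print(min(x) <= exp_mean(x) <= max(x))             # boundedness still holds
```

The fixed point and boundedness properties hold for this mean, but the mean of the scaled inputs differs from the scaled mean, so homogeneity fails.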

The above properties imply techniques to construct more complex means:

If C, M1, ..., Mm are weighted means and p is a positive real number, then A and B defined by

$$Ax = C(M_1 x, \dots, M_m x)$$

$$Bx = \sqrt[p]{C(x_1^p, \dots, x_n^p)}$$

are also weighted means.

Unweighted mean

Intuitively, an unweighted mean is a weighted mean with equal weights. Since our definition of weighted mean above does not expose particular weights, equal weights must be asserted in a different way. Another way to view equal weighting is that the inputs can be swapped without altering the result.

Thus we define M to be an unweighted mean if it is a weighted mean and for each permutation π of inputs, the result is the same.

- Symmetry: Mx = M(πx) for all n-tuples x and permutations π on n-tuples.

Analogously to the weighted means, if C is a weighted mean and M1, ..., Mm are unweighted means and p is a positive real number, then A and B defined by

$$Ax = C(M_1 x, \dots, M_m x)$$

$$Bx = \sqrt[p]{M_1(x_1^p, \dots, x_n^p)}$$

are also unweighted means.

Convert unweighted mean to weighted mean

An unweighted mean can be turned into a weighted mean by repeating elements. This connection can also be used to state that a mean is the weighted version of an unweighted mean. Say you have the unweighted mean M and weight the numbers by natural numbers $a_1, \dots, a_n$. (If the weights are rational, then multiply them by the least common denominator.) Then the corresponding weighted mean A is obtained by

$$A(x_1, \dots, x_n) = M(\underbrace{x_1, \dots, x_1}_{a_1}, \dots, \underbrace{x_n, \dots, x_n}_{a_n}).$$
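The repetition construction can be sketched directly. The helper name `weighted_by_repetition` and the example values are assumptions made for illustration; the unweighted means come from Python's standard `statistics` module.

```python
import statistics


def weighted_by_repetition(unweighted_mean, values, counts):
    """Apply an unweighted mean to a list in which values[i] occurs counts[i] times."""
    if len(values) != len(counts):
        raise ValueError("values and counts must have the same length")
    if any(not isinstance(c, int) or c < 1 for c in counts):
        raise ValueError("counts must be positive integers")
    expanded = []
    for value, count in zip(values, counts):
        expanded.extend([value] * count)
    return unweighted_mean(expanded)


values = [4, 36]
counts = [3, 1]  # natural-number weights

weighted_am = weighted_by_repetition(statistics.mean, values, counts)
weighted_gm = weighted_by_repetition(statistics.geometric_mean, values, counts)
print(weighted_am, weighted_gm)
```

For the arithmetic mean the result agrees with the usual weighted formula, (3·4 + 1·36) / (3 + 1) = 12, and the same helper yields a weighted geometric mean without any further code.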

Means of tuples of different sizes

If a mean M is defined for tuples of several sizes, then one also expects that the mean of a tuple is bounded by the means of partitions. More precisely:

- Given an arbitrary tuple x, which is partitioned into $y_1, \dots, y_k$, then $Mx \in \operatorname{convexhull}(My_1, \dots, My_k)$. (See Convex hull.)

Population and sample means

The mean of a population is denoted μ, known as the population mean. The sample mean makes a good estimator of the population mean, as its expected value is equal to the population mean. The sample mean is a random variable, not a constant, and consequently it has its own distribution. For a random sample of n observations from a normally distributed population with variance $\sigma^2$, the sample mean distribution is

$$\bar{x} \sim N\left( \mu, \frac{\sigma^2}{n} \right).$$

Often, since the population variance is an unknown parameter, it is estimated by the mean sum of squares, which changes the distribution of the sample mean from a normal distribution to a Student's t distribution with n − 1 degrees of freedom.
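The statements about the expected value and the variance of the sample mean can be checked exactly on a small example. The sketch below enumerates every possible sample of size 2 drawn with replacement from a hypothetical four-element population. It does not assume normality and therefore illustrates only the mean μ and variance σ²/n of the sample mean, not the shape of its distribution.

```python
from fractions import Fraction
from itertools import product


def mean(xs):
    """Exact arithmetic mean as a Fraction."""
    return Fraction(sum(xs), len(xs))


def variance(xs):
    """Exact population variance (divides by the number of values)."""
    mu = mean(xs)
    return sum((x - mu) ** 2 for x in xs) / len(xs)


population = [2, 4, 4, 10]  # hypothetical finite population
n = 2                       # sample size

# Every equally likely ordered sample of size n, drawn with replacement.
sample_means = [mean(sample) for sample in product(population, repeat=n)]

print(mean(population), mean(sample_means))
print(variance(population), variance(sample_means))
```

The average of all sample means equals the population mean, and their variance equals the population variance divided by n.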

Small sample sizes


Small sample sizes occur in practice and present unusually difficult problems for parameter estimation.

It is a common but false belief[citation needed] that a single (n = 1) observation x yields no information about the variability in the population, and that consequently finite-length confidence intervals for the mean and/or variance are impossible even in principle. Where the shape of the population distribution is known, some estimates are possible:

For a normally distributed variate, the confidence interval for the (arithmetic) population mean at the 90% level has been shown to be x ± 5.84 |x|, where |x| is the absolute value of x.[5][6] The 95% interval for a normally distributed variate is x ± 9.68 |x| and the 99% confidence interval is x ± 48.39 |x|.[7]

The estimate derived from this method shows behavior that is atypical of more conventional methods. Because the interval always contains 0, a value of 0 for the population mean cannot be rejected with any level of confidence.[8] If x = 0, the confidence interval collapses to a length of 0.[9] Finally, the confidence interval is not stable under a linear transform x → ax + b, where a and b are constants.[10]
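The single-observation intervals quoted above are easy to tabulate. The sketch below hard-codes the three multipliers given in the text for a normally distributed variate; the function name and the lookup table are illustrative assumptions.

```python
# Multipliers quoted in the text, keyed by confidence level in percent.
MULTIPLIERS = {90: 5.84, 95: 9.68, 99: 48.39}


def single_observation_interval(x, level_percent=95):
    """Interval x ± k|x| for the population mean from one normal observation."""
    k = MULTIPLIERS[level_percent]
    half_width = k * abs(x)
    return (x - half_width, x + half_width)


low, high = single_observation_interval(2.0, 95)
print(low, high)

# The interval collapses to a single point when the observation is 0.
print(single_observation_interval(0.0, 95))
```

Since every multiplier exceeds 1, the interval always contains 0, and for x = 0 it has zero length, which matches the atypical behavior described above.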

Machol has shown that, given a known density symmetrical about 0 and a single sample value (x), the 90% confidence intervals of the population mean are[11]

where ν is the population median.

For a sample size of two (n = 2), the population mean is bounded by

$$\frac{x_1 + x_2}{2} - k \frac{|x_1 - x_2|}{2} \le \mu \le \frac{x_1 + x_2}{2} + k \frac{|x_1 - x_2|}{2}$$

where $x_1$, $x_2$ are the variate values, μ is the population mean and k is a constant that depends on the underlying distribution. For the normal distribution, $k = \cot(\pi\alpha/2)$; for α = 0.05, k = 12.71. For the rectangular distribution, $k = 1/\alpha - 1$; for α = 0.05, k = 19.[12]
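The two constants quoted for n = 2 can be reproduced numerically. The interval function in this sketch assumes the bound has the form midpoint ± k·|x1 − x2|/2, which is consistent with the quoted values of k but is stated here as an assumption; the function names and the observations are hypothetical.

```python
import math


def k_normal(alpha):
    """k = cot(pi * alpha / 2) for the normal distribution."""
    return 1.0 / math.tan(math.pi * alpha / 2.0)


def k_rectangular(alpha):
    """k = 1 / alpha - 1 for the rectangular (uniform) distribution."""
    return 1.0 / alpha - 1.0


def two_observation_interval(x1, x2, k):
    """Assumed form: midpoint of the two values ± k times half their distance."""
    centre = (x1 + x2) / 2.0
    half_width = k * abs(x1 - x2) / 2.0
    return (centre - half_width, centre + half_width)


alpha = 0.05
print(round(k_normal(alpha), 2), round(k_rectangular(alpha), 2))

# Hypothetical observations 9 and 11 from a normal population.
print(two_observation_interval(9.0, 11.0, k_normal(alpha)))
```

For the normal case the constant coincides with the two-sided 95% critical value of Student's t distribution with one degree of freedom.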

For a sample size of three ( n = 3 ), the confidence intervals for the population mean are

where m is the sample mean, s is the sample standard deviation and k is a constant that depends on the distribution. For the normal distribution, k is approximately 1 / √α - 3 √α / 4 + ... When α = 0.05, k = 4.30. For the rectangular distribution with α = 0.05, k = 5.74.[12]

For medium-size samples ( 4 ≤ n ≤ 20 ), the pivot statistics give better estimates of the population mean than the t test does.[13][14]

The pivot depth (j) is int( ( n + 1 ) / 2 ) / 2 or int( ( n + 1 ) / 2 + 1 ) / 2, whichever of the two is an integer. The lower pivot is $x_L = x_{(j)}$ and the upper pivot is $x_U = x_{(n+1-j)}$, where $x_{(i)}$ denotes the i-th smallest value. The pivot half sum (P) is

$$P = \frac{x_L + x_U}{2}$$

and the pivot range (R) is

$$R = x_U - x_L.$$

The confidence intervals for the population mean are then

$$P - tR \le \mu \le P + tR$$

where t is the value of the t test at 100( 1 - α / 2 )%.

The pivot statistic T = P / R has an approximately symmetrical distribution and its values for 4 ≤ n ≤ 20 for a number of values of 1 - α are given in Table 2 of Meloun et al.[15]
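The pivot depth, half sum and range can be sketched as below. The code assumes that the half sum is the average of the two pivots and that the range is their difference, as the names suggest; the tabulated critical values needed for the confidence interval are not reproduced. The function name and the data are hypothetical.

```python
def pivot_statistics(values):
    """Return (pivot depth j, pivot half sum P, pivot range R) for a sample."""
    ordered = sorted(values)
    n = len(ordered)
    if n < 4:
        raise ValueError("this sketch is intended for samples with n >= 4")
    half = (n + 1) // 2  # int((n + 1) / 2)
    # Take whichever of half / 2 and (half + 1) / 2 is an integer.
    depth = half // 2 if half % 2 == 0 else (half + 1) // 2
    lower = ordered[depth - 1]  # x_(j), converted from 1-based to 0-based
    upper = ordered[n - depth]  # x_(n + 1 - j), converted to 0-based
    half_sum = (lower + upper) / 2
    pivot_range = upper - lower
    return depth, half_sum, pivot_range


# Hypothetical sample of ten observations.
sample = [7, 1, 9, 3, 10, 2, 8, 4, 6, 5]
depth, half_sum, pivot_range = pivot_statistics(sample)
print(depth, half_sum, pivot_range)
```

For n = 10 the depth is 3, so the pivots are the third smallest and third largest values.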

See also

- Algorithms for calculating mean and variance

- Average, same as central tendency

- Descriptive statistics

- For independent, identically distributed real-valued observations, the mean of a sample is an unbiased estimator of the mean of the population.

- Kurtosis

- Law of averages

- Median

- Mode (statistics)

- Spherical mean

- Summary statistics

- Taylor's law

References

- ^ Feller, William (1950). Introduction to Probability Theory and its Applications, Vol I. Wiley. p. 221. ISBN 0471257087.

- ^ Underhill, L.G.; Bradfield, D. (1998) Introstat, Juta and Company Ltd. ISBN 0-7021-3838-X p. 181

- ^ Schaum's Outline of Theory and Problems of Probability by Seymour Lipschutz and Marc Lipson, p. 141

- ^ Elementary Statistics by Robert R. Johnson and Patricia J. Kuby, p. 279

- ^ Abbot JH, Rosenblatt J (1963) Two stage estimation with one observation on the first stage. Annals of the Institute of Statistical Mathematics 14: 229-235

- ^ Blachman NM, Machol R (1987) Confidence intervals based on one or more observations. IEEE Transactions on Information Theory 33(3): 373-382

- ^ Wall MM, Boen J, Tweedie R (2001) An effective confidence interval for the mean with samples of size one and two. The American Statistician http://www.biostat.umn.edu/ftp/pub/2000/rr2000-007.pdf

- ^ Vos P (2002) The Am Stat 56 (1) 80

- ^ Wittkowski KN (2002) The Am Stat 56 (1) 80

- ^ Wall ME (2002) The Am Stat 56 (1) 80-81

- ^ Machol R (1964) IEEE Trans Info Theor

- ^ a b Blachman NM, Machol RE (1987) IEEE Trans on inform theory 33:373

- ^ Horn PS, Pesce AJ, Copeland BE (1998) A robust approach to reference interval estimation and evaluation. Clin Chem 44:622–631

- ^ Horn J (1983) Some easy T-statistics. J Am Statist Assoc 78:930

- ^ Meloun M, Hill M, Militký J, Kupka K (2001) Analysis of large and small samples of biochemical and clinical data. Clin Chem Lab Med 39(1):53–61
