Trolley problem

The trolley problem is a series of thought experiments in ethics and psychology, involving stylized ethical dilemmas of whether to sacrifice one person to save a larger number. Opinions on the ethics of each scenario turn out to be sensitive to details of the story that may seem immaterial to the abstract dilemma. The question of formulating a general principle that can account for the differing moral intuitions in the different variants of the story was dubbed the "trolley problem" in a 1976 philosophy paper by Judith Jarvis Thomson.

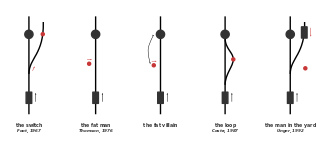

The most basic version of the dilemma, known as "Bystander at the Switch" or "Switch", goes:

There is a runaway trolley barreling down the railway tracks. Ahead, on the tracks, there are five people tied up and unable to move. The trolley is headed straight for them. You are standing some distance off in the train yard, next to a lever. If you pull this lever, the trolley will switch to a different set of tracks. However, you notice that there is one person on the side track. You have two options:

- Do nothing and allow the trolley to kill the five people on the main track.

- Pull the lever, diverting the trolley onto the side track where it will kill one person.

Which is the more ethical option? Or, more simply: What is the right thing to do?

Philippa Foot introduced this genre of decision problems in 1967 as part of an analysis of debates on abortion and the doctrine of double effect.[1] Philosophers Judith Thomson,[2][3] Frances Kamm,[4] and Peter Unger have also analysed the dilemma extensively.[5] Thomson's 1976 article initiated the literature on the trolley problem as a subject in its own right. Characteristic of this literature are colorful and increasingly absurd alternative scenarios in which the sacrificed man is instead pushed onto the tracks as a weight to stop the trolley, has his organs harvested to save transplant patients, or is killed in more indirect ways that complicate the chain of causation and responsibility.

Earlier forms of individual trolley scenarios antedated Foot's publication. Frank Chapman Sharp included a version in a moral questionnaire given to undergraduates at the University of Wisconsin in 1905. In this variation, the railway's switchman controlled the switch, and the lone individual to be sacrificed (or not) was the switchman's child.[6][7] German philosopher of law Karl Engisch discussed a similar dilemma in his habilitation thesis in 1930, as did German legal scholar Hans Welzel in a work from 1951.[8][9] In his commentary on the Talmud, published long before his death in 1953, Avrohom Yeshaya Karelitz considered the question of whether it is ethical to deflect a projectile from a larger crowd toward a smaller one.[10]

Since 2001, the trolley problem and its variants have been used extensively in empirical research on moral psychology. The problem has also been a topic of popular books.[11] Trolley-style scenarios also arise in discussing the ethics of autonomous vehicle design, which may require programming to choose whom or what to strike when a collision appears to be unavoidable.[12]

Original dilemma

Foot's version of the thought experiment, now known as "Trolley Driver", ran as follows:

Suppose that a judge or magistrate is faced with rioters demanding that a culprit be found for a certain crime and threatening otherwise to take their own bloody revenge on a particular section of the community. The real culprit being unknown, the judge sees himself as able to prevent the bloodshed only by framing some innocent person and having him executed. Beside this example is placed another in which a pilot whose airplane is about to crash is deciding whether to steer from a more to a less inhabited area. To make the parallel as close as possible, it may rather be supposed that he is the driver of a runaway tram, which he can only steer from one narrow track on to another; five men are working on one track and one man on the other; anyone on the track he enters is bound to be killed. In the case of the riots, the mob have five hostages, so that in both examples, the exchange is supposed to be one man's life for the lives of five.[1]

A utilitarian view asserts that it is obligatory to steer to the track with one man on it. According to classical utilitarianism, such a decision would be not only permissible, but, morally speaking, the better option (the other option being no action at all).[13] An alternative viewpoint is that since moral wrongs are already in place in the situation, moving to another track constitutes a participation in the moral wrong, making one partially responsible for the death when otherwise no one would be responsible. An opponent of action may also point to the incommensurability of human lives. Under some interpretations of moral obligation, simply being present in this situation and being able to influence its outcome constitutes an obligation to participate. If this is the case, then deciding to do nothing would be considered an immoral act if one values five lives more than one.

Empirical research

In 2001, Joshua Greene and colleagues published the results of the first significant empirical investigation of people's responses to trolley problems.[14] Using functional magnetic resonance imaging, they demonstrated that "personal" dilemmas (like pushing a man off a footbridge) preferentially engage brain regions associated with emotion, whereas "impersonal" dilemmas (like diverting the trolley by flipping a switch) preferentially engage regions associated with controlled reasoning. On these grounds, they advocated the dual-process account of moral decision-making. Since then, numerous other studies have employed trolley problems to study moral judgment, investigating the influence of stress,[15] emotional state,[16] impression management,[17] levels of anonymity,[18] different types of brain damage,[19] physiological arousal,[20] different neurotransmitters,[21] and genetic factors[22] on responses to trolley dilemmas.

Trolley problems have been used as a measure of utilitarianism, but their usefulness for such purposes has been widely criticized.[23][24][25]

In 2017, a group led by Michael Stevens performed the first realistic trolley-problem experiment, where subjects were placed alone in what they thought was a train-switching station, and shown footage that they thought was real (but was actually prerecorded) of a train going down a track, with five workers on the main track, and one on the secondary track; the participants had the option to pull the lever to divert the train toward the secondary track. Most of the participants did not pull the lever.[26]

Survey data

The trolley problem has been the subject of many surveys in which about 90% of respondents have chosen to kill the one and save the five.[27] If the scenario is modified so that the one sacrificed for the five is a relative or romantic partner, respondents are much less likely to be willing to sacrifice the one life.[28]

A 2009 survey by David Bourget and David Chalmers shows that 68% of professional philosophers would switch (sacrifice the one individual to save five lives) in the case of the trolley problem, 8% would not switch, and the remaining 24% had another view or could not answer.[29]

Criticism

In a 2014 paper published in the Social and Personality Psychology Compass,[23] researchers criticized the use of the trolley problem, arguing, among other things, that the scenario it presents is too extreme and unconnected to real-life moral situations to be useful or educational.[30]

Brianna Rennix and Nathan J. Robinson of Current Affairs go even further and assert that the thought experiment is not only useless, but also downright detrimental to human psychology. The authors opine that to make cold calculations about hypothetical situations in which every alternative will result in one or more gruesome deaths is to encourage a type of thinking that is devoid of human empathy and assumes a mandate to decide who lives or dies. They also question the premise of the scenario: "If I am forced against my will into a situation where people will die, and I have no ability to stop it, how is my choice a 'moral' choice between meaningfully different options, as opposed to a horror show I've just been thrust into, in which I have no meaningful agency at all?"[31]

In her 2017 paper published in Science, Technology, & Human Values, Nassim JafariNaimi[32] lays out the reductive nature of the trolley problem in framing ethical problems, a framing that serves to uphold an impoverished version of utilitarianism. She argues that the popular argument that the trolley problem can serve as a template for algorithmic morality is based on fundamentally flawed premises that serve the most powerful, with potentially dire consequences for the future of cities.[33]

In his 2017 book On Human Nature, Roger Scruton criticises the use of ethical dilemmas such as the trolley problem by philosophers such as Derek Parfit and Peter Singer as ways of illustrating their ethical views. Scruton writes, "These 'dilemmas' have the useful character of eliminating from the situation just about every morally relevant relationship and reducing the problem to one of arithmetic alone." Scruton argues that choosing to change the track so that the train hits the one person instead of the five does not necessarily make one a consequentialist. As a way of showing the flaws in consequentialist responses to ethical problems, Scruton points out paradoxical elements of belief in utilitarianism and similar beliefs. He believes that Nozick's experience machine thought experiment definitively disproves hedonism.[34]

In a 2018 article published in Psychological Review, researchers pointed out that, as measures of utilitarian decisions, sacrificial dilemmas such as the trolley problem measure only one facet of proto-utilitarian tendencies, namely permissive attitudes toward instrumental harm, while ignoring impartial concern for the greater good. As such, the authors argued that the trolley problem provides only a partial measure of utilitarianism.[24]

Related problems

Trolley problems highlight the difference between deontological and consequentialist ethical systems.[12] The central question that these dilemmas bring to light is whether it is right to actively inhibit the utility of one individual if doing so produces a greater utility for other individuals.

The basic Switch form of the trolley problem also supports comparison to other, related dilemmas:

The fat man

As before, a trolley is hurtling down a track towards five people. You are on a bridge under which it will pass, and you can stop it by putting something very heavy in front of it. As it happens, there is a very fat man next to you – your only way to stop the trolley is to push him over the bridge and onto the track, killing him to save five. Should you proceed?

Resistance to this course of action seems strong; when asked, a majority of people will approve of pulling the switch to save a net of four lives, but will disapprove of pushing the fat man to save a net of four lives.[35] This has led to attempts to find a relevant moral distinction between the two cases.

One clear distinction is that in the first case, one does not intend harm towards anyone – harming the one is just a side effect of switching the trolley away from the five. However, in the second case, harming the one is an integral part of the plan to save the five. This is an argument which Shelly Kagan considers (and ultimately rejects) in his first book The Limits of Morality.[36]

A claim can be made that the difference between the two cases is that in the second, the subject intends someone's death to save the five, and this is wrong, whereas, in the first, they have no such intention. This solution is essentially an application of the doctrine of double effect, which says that one may take action that has bad side effects, but deliberately intending harm (even for good causes) is wrong.

Another distinction is that the first case is similar to that of a pilot whose airplane has lost power and is about to crash into a heavily populated area. Even if the pilot knows for sure that innocent, "uninvolved" people will die if he redirects the plane to a less populated area, he will actively turn the plane without hesitation. It may well be considered noble to sacrifice one's own life to protect others, but saving five people may be insufficient moral or legal justification for the murder of one innocent person.

The fat villain

A further development of this example involves the case wherein the fat man is, in fact, the villain who put these five people in peril. In this instance, pushing the villain to his death, especially to save five innocent people, seems not only morally permissible, but perhaps even morally obligatory. This is related to another thought experiment, known as the ticking time bomb scenario, which forces one to choose between two morally questionable acts.

The loop variant

The claim that it is wrong to use the death of one to save five runs into a problem with variants like this:

As before, a trolley is hurtling down a track towards five people and you can divert it onto a secondary track. However, in this variant the secondary track later rejoins the main track, so diverting the trolley still leaves it on a track which leads to the five people. The person on the secondary track, though, is a fat person who, when he is killed by the trolley, will stop it from continuing on to the five people. Should you flip the switch?

The only physical difference here is the addition of an extra piece of track. This seems trivial since the trolley will never travel down it. The reason this might affect someone's decision is that in this case, the death of the one actually is part of the plan to save the five.

The rejoining variant may not be fatal to the "using a person as a means" argument. This has been suggested by Michael J. Costa in his 1987 article "Another Trip on the Trolley", where he points out that if we fail to act in this scenario, we will effectively be allowing the five to become a means to save the one.

Transplant

Here is an alternative case, as presented by Judith Jarvis Thomson,[3] containing similar numbers and results, but without a trolley:

A brilliant transplant surgeon has five patients, each in need of a different organ, each of whom will die without that organ. Unfortunately, no organs are available to perform any of these five transplant operations. A healthy young traveler, just passing through the city in which the doctor works, comes in for a routine checkup. In the course of doing the checkup, the doctor discovers that his organs are compatible with all five of his dying patients. Suppose further that if the young man were to disappear, no one would suspect the doctor. Would it be morally permissible for the doctor to kill the traveler and give his healthy organs to the five dying patients, saving their lives?

The man in the yard

Unger argues extensively against traditional non-utilitarian responses to trolley problems. This is one of his examples:

As before, a trolley is hurtling down a track towards five people. You can divert its path by colliding another trolley into it, but if you do, both will be derailed and go down a hill, and into a yard where a man is sleeping in a hammock. He would be killed. Should you proceed?

Responses to this are partly dependent on whether the reader has already encountered the standard trolley problem (since a desire exists to keep one's responses consistent), but Unger notes that people who have not encountered such problems before are quite likely to say that, in this case, the proposed action would be wrong.

Unger, therefore, argues that different responses to these sorts of problems are based more on psychology than ethics – in this new case, he says, the only important difference is that the man in the yard does not seem particularly "involved". Unger claims that people then believe the man is not "fair game", but says that this lack of involvement in the scenario cannot make a moral difference.

Unger also considers cases that are more complex than the original trolley problem, involving more than just two results. In one such case, it is possible to do something which will (a) save the five and kill four (passengers of one or more trolleys and/or the hammock-sleeper), (b) save the five and kill three, (c) save the five and kill two, (d) save the five and kill one, or (e) do nothing and let five die.
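Read purely as a count of deaths, the five options can be ordered mechanically. The following is a minimal sketch of that counting only, not a rendering of Unger's own argument; the labels and death counts are taken from options (a) to (e) above, while the names `options` and `rank_by_deaths` are illustrative assumptions.

```python
# Deaths resulting from each option, as listed in (a)-(e) above.
# The dictionary and function names are illustrative, not from Unger.
options = {
    "a": 4,  # save the five, kill four
    "b": 3,  # save the five, kill three
    "c": 2,  # save the five, kill two
    "d": 1,  # save the five, kill one
    "e": 5,  # do nothing, let five die
}


def rank_by_deaths(opts):
    """Return option labels ordered from fewest to most deaths."""
    return sorted(opts, key=lambda label: opts[label])


ranking = rank_by_deaths(options)
print(ranking)  # ['d', 'c', 'b', 'a', 'e']
```

On this count alone, inaction (e) ranks last. Whether a bare count of deaths is the right measure is exactly what the surrounding debate disputes.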

Implications for autonomous vehicles

Problems analogous to the trolley problem arise in the design of software to control autonomous cars.[12] Situations are anticipated where a potentially fatal collision appears to be unavoidable, but in which choices made by the car's software, such as into whom or what to crash, can affect the particulars of the deadly outcome. For example, should the software value the safety of the car's occupants more, or less, than that of potential victims outside the car?[37][38][39][40]
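To make the occupant-versus-outsider question concrete, the sketch below treats it as a single weighting parameter. It is a deliberately simplified illustration, not a description of how any real vehicle software works: the `Maneuver` type, the harm scores, and the `occupant_weight` parameter are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    """A candidate action with made-up expected-harm scores."""
    name: str
    occupant_harm: float  # expected harm to people inside the car
    outsider_harm: float  # expected harm to people outside the car


def weighted_harm(maneuver, occupant_weight):
    """Total harm, with occupant harm scaled by a hypothetical weight."""
    return occupant_weight * maneuver.occupant_harm + maneuver.outsider_harm


def choose(maneuvers, occupant_weight):
    """Pick the maneuver with the lowest weighted harm."""
    return min(maneuvers, key=lambda m: weighted_harm(m, occupant_weight))


maneuvers = [
    Maneuver("stay_in_lane", occupant_harm=0.0, outsider_harm=1.0),
    Maneuver("swerve_into_barrier", occupant_harm=0.8, outsider_harm=0.0),
]

# Equal weighting: 1.0 (stay) vs 0.8 (swerve), so the car swerves.
equal = choose(maneuvers, occupant_weight=1.0)
# Occupants weighted double: 1.0 (stay) vs 1.6 (swerve), so the car stays.
protective = choose(maneuvers, occupant_weight=2.0)
print(equal.name, protective.name)
```

With identical inputs, changing only the weight flips the chosen maneuver, which is why the choice of weight is an ethical question rather than a purely technical one.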

A platform called Moral Machine[41] was created by MIT Media Lab to allow the public to express their opinions on what decisions autonomous vehicles should make in scenarios that use the trolley problem paradigm. Analysis of the data collected through Moral Machine showed broad differences in relative preferences among different countries.[42] Other approaches make use of virtual reality to assess human behavior in experimental settings.[43][44][45][46] However, some argue that the investigation of trolley-type cases is not necessary to address the ethical problem of driverless cars, because the trolley cases have a serious practical limitation: to fit the current approaches to addressing emergencies in artificial intelligence, the solution would need to be a top-down plan.[47]

A question also remains of whether the law should dictate the ethical standards that all autonomous vehicles must use, or whether individual autonomous car owners or drivers should determine their car's ethical values, such as favoring safety of the owner or the owner's family over the safety of others.[12] Although most people would not be willing to use an automated car that might sacrifice them in a life-or-death dilemma, some believe the somewhat counterintuitive claim that mandatory ethics settings would nevertheless be in their best interest. According to Gogoll and Müller, "the reason is, simply put, that [personalized ethics settings] would most likely result in a prisoner’s dilemma."[48]
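The prisoner's dilemma structure that Gogoll and Müller refer to can be illustrated with a toy payoff table. The sketch below is not taken from their paper: the payoff numbers and the setting names `impartial` and `selfish` are assumptions chosen only to show the general shape of the argument.

```python
# Hypothetical payoffs (higher is better for the owner).
# PAYOFF[(mine, theirs)] is my payoff given my setting and the other owner's.
PAYOFF = {
    ("impartial", "impartial"): 3,
    ("impartial", "selfish"): 0,
    ("selfish", "impartial"): 4,
    ("selfish", "selfish"): 1,
}
SETTINGS = ("impartial", "selfish")


def best_response(theirs):
    """Setting that maximizes my payoff against the other owner's setting."""
    return max(SETTINGS, key=lambda mine: PAYOFF[(mine, theirs)])


def is_dominant(setting):
    """True if the setting is my best response whatever the other owner picks."""
    return all(best_response(theirs) == setting for theirs in SETTINGS)


selfish_dominant = is_dominant("selfish")
mutual_selfish_worse = (
    PAYOFF[("selfish", "selfish")] < PAYOFF[("impartial", "impartial")]
)
print(selfish_dominant, mutual_selfish_worse)  # True True
```

Under these assumed payoffs each owner does better by choosing the selfish setting regardless of what others do, yet everyone choosing it leaves all owners worse off than if an impartial setting were mandatory.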

In 2016, the German government appointed a commission to study the ethical implications of autonomous driving.[49][50] The commission adopted 20 rules to be implemented in the laws that will govern the ethical choices that autonomous vehicles will make.[50]: 10–13 Relevant to the trolley dilemma is this rule:

8. Genuine dilemmatic decisions, such as a decision between one human life and another, depend on the actual specific situation, incorporating “unpredictable” behaviour by parties affected. They can thus not be clearly standardized, nor can they be programmed such that they are ethically unquestionable. Technological systems must be designed to avoid accidents. However, they cannot be standardized to a complex or intuitive assessment of the impacts of an accident in such a way that they can replace or anticipate the decision of a responsible driver with the moral capacity to make correct judgements. It is true that a human driver would be acting unlawfully if he killed a person in an emergency to save the lives of one or more other persons, but he would not necessarily be acting culpably. Such legal judgements, made in retrospect and taking special circumstances into account, cannot readily be transformed into abstract/general ex ante appraisals and thus also not into corresponding programming activities. …[50]: 11

Real-life incident

An actual case approximating the trolley problem occurred on June 20, 2003, when a runaway string of 31 unmanned Union Pacific freight cars was barreling toward Los Angeles along mainline track 1. To prevent the runaway train from entering the Union Pacific yards in Los Angeles, where it would not only cause damage but where a Metrolink passenger train was also thought to be located, dispatchers ordered the shunting of the runaway cars to track 4, through an area with lower-density housing of mostly lower-income residents. The switch to track 4 was rated for 15-mph transits, and dispatch knew the cars were moving significantly faster, making a derailment likely.[51] The train, carrying over 3800 tons of mostly lumber and building materials, then derailed into the residential neighborhood in Commerce, California, crashing through several houses on Davie Street. The event resulted in 13 minor injuries, including a pregnant woman asleep in one of the houses who managed to escape through a window and avoided serious injury from the lumber and steel train wheels that fell around her.[52]

Example with COVID-19 vaccinations

A version of the trolley problem has been observed in the administration of the Oxford–AstraZeneca and Johnson & Johnson COVID-19 vaccines, which have possibly been linked to rare blood-clotting side effects, some of them fatal.[53] This has led to the ethical question of whether the vaccines should be administered, accepting the unlikely but possibly deadly side effect among a small number of people. The alternative would be halting administration of the vaccines because of the side effects, even though more people could die from the COVID-19 virus without widespread use of vaccination.[54]

In popular culture

In an urban legend that has existed since at least the mid-1960s, the decision is described as having been made in real life by a drawbridge keeper who was forced to choose between sacrificing a passenger train and his own four-year-old son.[55] A 2003 Czech short film titled Most (released in English as The Bridge) deals with a similar plot.[56] This version is often given as an illustration of the Christian belief that God sacrificed his son, Jesus Christ.[55]

Similar dilemmas have been proposed in science fiction stories, most notably "The Cold Equations" (Tom Godwin, 1954), in which a pilot must decide whether to retain a stowaway, which would cause his ship to run out of fuel, or complete his mission to deliver vital medicine for six settlers.

Film, stage, and television

In the penultimate episode of Fate/Zero, Kiritsugu Emiya's ideals are challenged by Angra Mainyu, who presents him with a variant of the trolley problem revolving around boats containing the survivors of an apocalyptic event.

A trolley problem experiment was conducted in season two, episode one of the YouTube Original series Mind Field, presented by Michael Stevens,[26] but no paper was published on the findings.

In the 2002 film Spider-Man, the title character faces off against the Green Goblin, who is holding Mary Jane Watson in one hand and a cable car full of passengers in the other. The Goblin challenges him to pick which to save. However, once the Goblin lets go of both, Spider-Man saves both, avoiding the trolley problem outcome.

The trolley problem forms the major plot premise of an episode from The Good Place, also named "The Trolley Problem".[57] It is later referenced and solved in the second season within the context of the universe of the show by Michael (Ted Danson), who states that self-sacrifice is the only solution.

In season three of The Walking Dead, Rick Grimes must choose between giving up Michonne to the Governor and risking war with the town of Woodbury. If Grimes chooses the former, Michonne would face certain torture and death. The latter would mean death for many, if not all, of the survivors in the prison.

An attempt to consider the trolley problem appears in season three, episode 15, "The Game", of the science-fiction television series Stargate Atlantis. Rodney (David Hewlett) poses a situation of 10 people on one track and one person (a baby) on the other. Teyla (Rachel Luttrell), Ronon (Jason Momoa), and John (Joe Flanigan) thwart his effort to conduct a hypothetical discussion by asking why the person who sees the train does not simply warn the people on the track, outrun the train and shove them off the track, or "better yet, go get the baby"?[58]

In episodes 11 and 12 of the 2017 Korean television series While You Were Sleeping, a young prosecutor has to decide whether to approve organ transplants from a brain-dead donor who is about to die, which would save as many as seven people, including the young son of one of his colleagues, or to require an autopsy to determine whether the prospective donor died as a result of foul play, as is suspected, or as a result of an accident. Initially, the autopsy is posited to render the organ transplants impossible, but the problem is resolved when the prosecutor determines that both performing the autopsy and transplanting the organs might be possible.

In 2019, The Trolley Problem, written by Bo Robinson, made its world premiere. The play lays out a scenario in which one indecisive girl must choose to kill either a family of five on one track or a complete stranger on the other. The show evaluates various ethical and legal dilemmas in the trolley problem and includes the "fat man" variation, showing the audience the possibilities and outcomes of different approaches to the trolley problem. The play won the Georgia Thespian Society Playworks Competition in 2018.

Internet memes

In 2016, a Facebook page under the name "Trolley Problem Memes" was recognized for its popularity on Facebook.[59] The group administration commonly shares comical variations of the trolley problem and often mixes in multiple types of philosophical dilemmas.[60] A common joke among the users regards "multitrack drifting", in which the lever is pulled after the first set of wheels passes the track, thereby creating a third, often morbidly humorous, solution, where all six people tied to the tracks are run over by the trolley, or are spared if the trolley derails.[61] The meme is in reference to the doujin manga and video game series Densha de D which crossed over Densha de Go! and Initial D.[62]

References

- ^ a b Philippa Foot, "The Problem of Abortion and the Doctrine of the Double Effect" in Virtues and Vices (Oxford: Basil Blackwell, 1978) (originally appeared in the Oxford Review, Number 5, 1967.)

- ^ Judith Jarvis Thomson, Killing, Letting Die, and the Trolley Problem, 59 The Monist 204-17 (1976)

- ^ a b Jarvis Thomson, Judith (1985). "The Trolley Problem" (PDF). Yale Law Journal. 94 (6): 1395–1415. doi:10.2307/796133. JSTOR 796133.

- ^ Kamm, Frances Myrna (1989). "Harming Some to Save Others". Philosophical Studies. 57 (3): 227–60. doi:10.1007/bf00372696. S2CID 171045532.

- ^ Peter Unger, Living High and Letting Die (Oxford: Oxford University Press, 1996)

- ^ Frank Chapman Sharp, A Study of the Influence of Custom on the Moral Judgment Bulletin of the University of Wisconsin no.236 (Madison, June 1908), 138.

- ^ Frank Chapman Sharp, Ethics (New York: The Century Co, 1928), 42-44, 122.

- ^ Engisch, Karl (1930). Untersuchungen über Vorsatz und Fahrlässigkeit im Strafrecht. Berlin: O. Liebermann. p. 288.

- ^ Hans Welzel, ZStW Zeitschrift für die gesamte Strafrechtswissenschaft 63 [1951], 47ff.

- ^ Hazon Ish, HM, Sanhedrin #25, s.v. "veyesh leayen". Available online, http://hebrewbooks.org/14332, page 404

- ^ Bakewell, Sarah (2013-11-22). "Clang Went the Trolley". The New York Times.

- ^ a b c d Lim, Hazel Si Min; Taeihagh, Araz (2019). "Algorithmic Decision-Making in AVs: Understanding Ethical and Technical Concerns for Smart Cities". Sustainability. 11 (20): 5791. doi:10.3390/su11205791.

- ^ Barcalow, Emmett, Moral Philosophy: Theories and Issues. Belmont, CA: Wadsworth, 2007. Print.

- ^ Greene, Joshua D.; Sommerville, R. Brian; Nystrom, Leigh E.; Darley, John M.; Cohen, Jonathan D. (2001-09-14). "An fMRI Investigation of Emotional Engagement in Moral Judgment". Science. 293 (5537): 2105–2108. Bibcode:2001Sci...293.2105G. doi:10.1126/science.1062872. ISSN 0036-8075. PMID 11557895. S2CID 1437941.

- ^ Youssef, Farid F.; Dookeeram, Karine; Basdeo, Vasant; Francis, Emmanuel; Doman, Mekaeel; Mamed, Danielle; Maloo, Stefan; Degannes, Joel; Dobo, Linda (2012). "Stress alters personal moral decision making". Psychoneuroendocrinology. 37 (4): 491–498. doi:10.1016/j.psyneuen.2011.07.017. PMID 21899956. S2CID 30489504.

- ^ Valdesolo, Piercarlo; DeSteno, David (2006-06-01). "Manipulations of Emotional Context Shape Moral Judgment". Psychological Science. 17 (6): 476–477. doi:10.1111/j.1467-9280.2006.01731.x. ISSN 0956-7976. PMID 16771796. S2CID 13511311.

- ^ Rom, Sarah C.; Conway, Paul (2017-08-30). "The strategic moral self: self-presentation shapes moral dilemma judgments". Journal of Experimental Social Psychology. 74: 24–37. doi:10.1016/j.jesp.2017.08.003. ISSN 0022-1031.

- ^ Lee, Minwoo; Sul, Sunhae; Kim, Hackjin (2018-06-18). "Social observation increases deontological judgments in moral dilemmas". Evolution and Human Behavior. 39 (6): 611–621. doi:10.1016/j.evolhumbehav.2018.06.004. ISSN 1090-5138.

- ^ Ciaramelli, Elisa; Muccioli, Michela; Làdavas, Elisabetta; Pellegrino, Giuseppe di (2007-06-01). "Selective deficit in personal moral judgment following damage to ventromedial prefrontal cortex". Social Cognitive and Affective Neuroscience. 2 (2): 84–92. doi:10.1093/scan/nsm001. ISSN 1749-5024. PMC 2555449. PMID 18985127.

- ^ Navarrete, C. David; McDonald, Melissa M.; Mott, Michael L.; Asher, Benjamin (2012-04-01). "Virtual morality: Emotion and action in a simulated three-dimensional "trolley problem"". Emotion. 12 (2): 364–370. doi:10.1037/a0025561. ISSN 1931-1516. PMID 22103331.

- ^ Crockett, Molly J.; Clark, Luke; Hauser, Marc D.; Robbins, Trevor W. (2010-10-05). "Serotonin selectively influences moral judgment and behavior through effects on harm aversion". Proceedings of the National Academy of Sciences. 107 (40): 17433–17438. Bibcode:2010PNAS..10717433C. doi:10.1073/pnas.1009396107. ISSN 0027-8424. PMC 2951447. PMID 20876101.

- ^ Bernhard, Regan M.; Chaponis, Jonathan; Siburian, Richie; Gallagher, Patience; Ransohoff, Katherine; Wikler, Daniel; Perlis, Roy H.; Greene, Joshua D. (2016-12-01). "Variation in the oxytocin receptor gene (OXTR) is associated with differences in moral judgment". Social Cognitive and Affective Neuroscience. 11 (12): 1872–1881. doi:10.1093/scan/nsw103. ISSN 1749-5016. PMC 5141955. PMID 27497314.

- ^ a b Bauman, Christopher W.; McGraw, A. Peter; Bartels, Daniel M.; Warren, Caleb (September 4, 2014). "Revisiting External Validity: Concerns about Trolley Problems and Other Sacrificial Dilemmas in Moral Psychology". Social and Personality Psychology Compass. 8 (9): 536–554. doi:10.1111/spc3.12131.

- ^ a b Kahane, Guy; Everett, Jim A. C.; Earp, Brian D.; Caviola, Lucius; Faber, Nadira S.; Crockett, Molly J.; Savulescu, Julian (March 2018). "Beyond sacrificial harm: A two-dimensional model of utilitarian psychology". Psychological Review. 125 (2): 131–164. doi:10.1037/rev0000093. PMC 5900580. PMID 29265854.

- ^ Kahane, Guy (20 March 2015). "Sidetracked by trolleys: Why sacrificial moral dilemmas tell us little (or nothing) about utilitarian judgment". Social Neuroscience. 10 (5): 551–560. doi:10.1080/17470919.2015.1023400. PMC 4642180. PMID 25791902.

- ^ a b Stevens, Michael (6 December 2017). "The Greater Good". Mind Field. Season 2. Episode 1. Retrieved 23 December 2018.

- ^ "'Trolley Problem': Virtual-Reality Test for Moral Dilemma – TIME.com". TIME.com.

- ^ Journal of Social, Evolutionary, and Cultural Psychology Archived 2012-04-11 at the Wayback Machine – ISSN 1933-5377 – volume 4(3). 2010

- ^ Bourget, David; Chalmers, David J. (2013). "What do Philosophers believe?". Retrieved 11 May 2013.

- ^ Khazan, Olga (July 24, 2014). "Is One of the Most Popular Psychology Experiments Worthless?". The Atlantic.

- ^ Rennix, Brianna; Robinson, Nathan J. (November 3, 2017). "The Trolley Problem Will Tell You Nothing Useful About Morality". Current Affairs.

- ^ JafariNaimi, Nassim (2018). "Our Bodies in the Trolley's Path, or Why Self-driving Cars Must *Not* Be Programmed to Kill". Science, Technology, & Human Values. 43 (2): 302–323. doi:10.1177/0162243917718942. S2CID 148793137.

- ^ "Why Self-Driving Cars Must Be Programmed to Kill". MIT Technology Review. October 22, 2015.

- ^ Scruton, Roger (2017). On Human Nature (1st ed.). Princeton. pp. 79–112. ISBN 978-0-691-18303-9.

- ^ Peter Singer, "Ethics and Intuitions", The Journal of Ethics (2005). http://www.utilitarian.net/singer/by/200510--.pdf

- ^ Shelly Kagan, The Limits of Morality (Oxford: Oxford University Press, 1989)

- ^ Patrick Lin (October 8, 2013). "The Ethics of Autonomous Cars". The Atlantic.

- ^ Jean-François Bonnefon; Azim Shariff; Iyad Rahwan (October 13, 2015). "Autonomous Vehicles Need Experimental Ethics: Are We Ready for Utilitarian Cars?". Science. 352 (6293): 1573–1576. arXiv:1510.03346. Bibcode:2016Sci...352.1573B. doi:10.1126/science.aaf2654. PMID 27339987. S2CID 35400794.

- ^ Emerging Technology From the arXiv (October 22, 2015). "Why Self-Driving Cars Must Be Programmed to Kill". MIT Technology Review.

- ^ Bonnefon, Jean-François; Shariff, Azim; Rahwan, Iyad (2016). "The social dilemma of autonomous vehicles". Science. 352 (6293): 1573–1576. arXiv:1510.03346. Bibcode:2016Sci...352.1573B. doi:10.1126/science.aaf2654. PMID 27339987. S2CID 35400794.

- ^ "Moral Machine". Moral Machine. Retrieved 2019-01-31.

- ^ Awad, Edmond; Dsouza, Sohan; Kim, Richard; Schulz, Jonathan; Henrich, Joseph; Shariff, Azim; Bonnefon, Jean-François; Rahwan, Iyad (October 24, 2018). "The Moral Machine experiment". Nature. 563 (7729): 59–64. Bibcode:2018Natur.563...59A. doi:10.1038/s41586-018-0637-6. hdl:10871/39187. PMID 30356211. S2CID 53029241.

- ^ Sütfeld, Leon R.; Gast, Richard; König, Peter; Pipa, Gordon (2017). "Using Virtual Reality to Assess Ethical Decisions in Road Traffic Scenarios: Applicability of Value-of-Life-Based Models and Influences of Time Pressure". Frontiers in Behavioral Neuroscience. 11: 122. doi:10.3389/fnbeh.2017.00122. PMC 5496958. PMID 28725188.

- ^ Skulmowski, Alexander; Bunge, Andreas; Kaspar, Kai; Pipa, Gordon (December 16, 2014). "Forced-choice decision-making in modified trolley dilemma situations: a virtual reality and eye tracking study". Frontiers in Behavioral Neuroscience. 8: 426. doi:10.3389/fnbeh.2014.00426. PMC 4267265. PMID 25565997.

- ^ Francis, Kathryn B.; Howard, Charles; Howard, Ian S.; Gummerum, Michaela; Ganis, Giorgio; Anderson, Grace; Terbeck, Sylvia (October 10, 2016). "Virtual Morality: Transitioning from Moral Judgment to Moral Action?". PLOS ONE. 11 (10): e0164374. Bibcode:2016PLoSO..1164374F. doi:10.1371/journal.pone.0164374. ISSN 1932-6203. PMC 5056714. PMID 27723826.

- ^ Patil, Indrajeet; Cogoni, Carlotta; Zangrando, Nicola; Chittaro, Luca; Silani, Giorgia (January 2, 2014). "Affective basis of judgment-behavior discrepancy in virtual experiences of moral dilemmas". Social Neuroscience. 9 (1): 94–107. doi:10.1080/17470919.2013.870091. ISSN 1747-0919. PMID 24359489. S2CID 706534.

- ^ Himmelreich, Johannes (June 1, 2018). "Never Mind the Trolley: The Ethics of Autonomous Vehicles in Mundane Situations". Ethical Theory and Moral Practice. 21 (3): 669–684. doi:10.1007/s10677-018-9896-4. ISSN 1572-8447. S2CID 150184601.

- ^ Gogoll, Jan; Müller, Julian F. (June 1, 2017). "Autonomous Cars: In Favor of a Mandatory Ethics Setting". Science and Engineering Ethics. 23 (3): 681–700. doi:10.1007/s11948-016-9806-x. ISSN 1471-5546. PMID 27417644. S2CID 3632738.

- ^ BMVI Commission (June 20, 2016). "Bericht der Ethik-Kommission Automatisiertes und vernetztes Fahren". Federal Ministry of Transport and Digital Infrastructure (German: Bundesministerium für Verkehr und digitale Infrastruktur). Archived from the original on November 15, 2017.

- ^ a b c BMVI Commission (28 August 2017). "Ethics Commission's complete report on automated and connected driving". Federal Ministry of Transport and Digital Infrastructure (German: Bundesministerium für Verkehr und digitale Infrastruktur). Archived from the original on 15 September 2017. Retrieved January 20, 2021.

- ^ "Update on Union Pacific Rail Accident in Commerce, California". NTSB News. National Transportation Safety Board. 2003-06-26. Retrieved 2021-03-27.

- ^ "Runaway freight train derails near Los Angeles". Commerce, California: CNN. 2003-06-21. Retrieved 2021-03-27.

- ^ "AstraZeneca's COVID-19 vaccine: EMA finds possible link to very rare cases of unusual blood clots with low blood platelets".

- ^ "AstaZeneca's Vaccine Ethical Problem | Bioethics.net". www.bioethics.net. Retrieved 2021-04-12.

- ^ a b Barbara Mikkelson (27 February 2010). "The Drawbridge Keeper". Snopes.com. Retrieved 20 April 2016.

- ^ lewis-8 (25 January 2003). "Most (2003)". IMDb.

- ^ Perkins, Dennis (October 19, 2017). "Chidi wrestles with "The Trolley Problem" on a brilliantly funny The Good Place". avclub.com. The Onion. Retrieved March 28, 2018.

- ^ http://www.stargate-sg1-solutions.com/wiki/SGA_3.15_%22The_Game%22_Transcript

- ^ Feldman, Brian (9 August 2016). "The Trolley Problem Is the Internet's Most Philosophical Meme". 2017, New York Media LLC. Retrieved 25 May 2017.

- ^ Raicu, Irina (8 June 2016). "Modern variations on the 'Trolley Problem' meme". Vox Media, Inc. Retrieved 25 May 2017.

- ^ Zhang, Linch (1 June 2016). "Behind the Absurd Popularity of Trolley Problem Memes". TheHuffingtonPost.com, Inc. Retrieved 25 May 2017.

- ^ "Trolley Problem Memes Present New Dilemma With Multi-Track Drifting". The Daily Dot. 13 February 2017. Retrieved 6 April 2020.

External links

- Should You Kill the Fat Man?

- Forced-choice decision-making in modified trolley dilemma situations: a virtual reality and eye tracking study

- Can Bad Men Make Good Brains Do Bad Things?

- The Trolley Problem as a retro video game

- Trolley Problem – Killing and Letting Die

- The Trolley Problem is Fundamentally Flawed

- Trolley Problem Memes Facebook page

- Lesser-Known Trolley Problem Variations

- Dr Iain Law's undergraduate lecture on The Trolley Problem