User:AradiaSilverWheel/sandbox: Difference between revisions

No edit summary |

No edit summary |

||

| Line 1: | Line 1: | ||

{{User sandbox}} |

{{User sandbox}} |

||

<!-- EDIT BELOW THIS LINE --> |

<!-- EDIT BELOW THIS LINE --> |

||

{{Infobox government agency |

|||

==Supercomputing History== |

|||

|agency_name = NASA Advanced Supercomputing Division |

|||

|picture = NASA_Advanced_Supercomputing_Facility.jpg |

|||

|picture_width = 250px |

|||

|picture_caption = |

|||

|formed = 1982 |

|||

|preceding1 = Numerical Aerodynamic Simulation Division (1982) |

|||

|preceding2 = Numerical Aerospace Simulation Division (1995) |

|||

|dissolved = |

|||

|superseding = |

|||

|jurisdiction = |

|||

|headquarters = [[NASA Ames Research Center]], [[Moffett Federal Airfield|Moffett Field]], [[California]] |

|||

|latd=|latm=|lats=|latNS= |

|||

|longd=|longm=|longs=|longEW= |

|||

|region_code = |

|||

|coordinates = |

|||

|motto = |

|||

|employees = |

|||

|budget = |

|||

|minister1_name = |

|||

|minister1_pfo = |

|||

|minister2_name = |

|||

|minister2_pfo = |

|||

<!-- (etc.) --> |

|||

|deputyminister1_name = |

|||

|deputyminister1_pfo = |

|||

|deputyminister2_name = |

|||

|deputyminister2_pfo = |

|||

<!-- (etc.) --> |

|||

|chief1_name = |

|||

|chief1_position = |

|||

|chief2_name = |

|||

|chief2_position = |

|||

<!-- (etc.) --> |

|||

|agency_type = |

|||

|parent_department = |

|||

|parent_agency = [[NASA]] |

|||

|child1_agency = |

|||

|child2_agency = |

|||

<!-- (etc.) --> |

|||

|keydocument1 = |

|||

<!-- (etc.) --> |

|||

|website = {{url|http://www.nas.nasa.gov}} |

|||

|footnotes = |

|||

|map = |

|||

|map_width = |

|||

|map_caption = |

|||

}} |

|||

{| class=infobox width=280px |

|||

|colspan=2 style="background:#DDDDDD" align=center|'''Current Supercomputing Systems''' |

|||

|- |

|||

|[[Pleiades (supercomputer)|'''Pleiades''']] |

|||

|SGI Altix ICE supercluster |

|||

|- |

|||

|[[Endeavour (supercomputer)|'''Endeavour''']] |

|||

|SGI UV shared-memory system |

|||

|} |

|||

The '''NASA Advanced Supercomputing (NAS) Division''' is located at [[NASA Ames Research Center]], [[Moffett Federal Airfield|Moffett Field]] in the heart of [[Silicon Valley]] in [[Mountain View, California|Mountain View]], [[California]]. It has been the major supercomputing resource for NASA missions in aerodynamics, space exploration, studies in weather patterns and ocean currents, and space shuttle and aircraft research and development for over thirty years. |

|||

It currently houses the [[petascale]] [[Pleiades (supercomputer)|Pleiades]] and [[terascale]] [[Endeavour (supercomputer)|Endeavour]] [[Supercomputer|supercomputers]] based on [[Silicon Graphics|SGI]] architecture and [[Intel]] processors, as well as disk and archival tape storage systems with a capacity of over 100 petabytes of data, the hyperwall-2 visualization system, and the largest [[InfiniBand]] network fabric in the world. The NAS Division is part of NASA's Exploration Technology Directorate and operates NASA's High-End Computing Capability Project.<ref>{{cite web|url=http://www.nas.nasa.gov/about/about.html|title=NAS Homepage - About the NAS Division|publisher=[http://www.nas.nasa.gov NAS]}}</ref> |

|||

===1984-1991=== |

|||

[[File:Cray 2 Supercomputer - GPN-2000-001633.jpg|left|thumb|250px|The Cray-2 supercomputer at the NAS Facility.]] |

|||

Originally built in 1984, the '''[[Cray X-MP|Cray X-MP-12]]''' was the first supercomputer built for NASA at the NASA Ames Central Computing Facility. It had a peak performance of 210.5 megaflops, or 210,500,000 floating point operations per second, and was the first customer installation of a UNIX-based [[Cray]] supercomputer and the first to provide a client-server interface with UNIX workstations.<ref name="25th" /> |

|||

==History== |

|||

The first 4-processor Cray-2 supercomputer, '''Navier''', was installed at the NASA Ames Central Computing Facility in 1985 and was the most powerful supercomputer at the time, with a peak performance of 1.95 gigaflops and a [[LINPACK benchmarks|sustained performance]] of 250 megaflops.<ref name="25th" /> In 1988, a second Cray-2, '''Stokes''', joined Navier at the new Numerical Aerodynamic Simulation facility and doubled NASA's computing capabilities. The two computers were named for the physicists who developed the Navier-Stokes equations used in computational fluid dynamics, which NASA solved on the two supercomputers to analyze problems concerning the design and development of space launch vehicles and aircraft. |

|||

===Founding=== |

|||

In the mid-1970s, a group of aerospace engineers at Ames Research Center began to look into transferring [[aerospace]] research and development from costly and time-consuming wind tunnel testing to simulation-based design and engineering using [[computational fluid dynamics]] (CFD) models on supercomputers more powerful than those commercially available at the time. This endeavor was later named the Numerical Aerodynamic Simulator (NAS) Project and the first computer was installed at the Central Computing Facility at Ames Research Center in 1984. |

|||

Groundbreaking on a state-of-the-art supercomputing facility took place on March 14, 1985 in order to construct a building where CFD experts, computer scientists, visualization specialists, and network and storage engineers could be under one roof in a collaborative environment. In 1986, NAS transitioned into a full-fledged NASA division and in 1987, NAS staff and equipment, including a second supercomputer, a [[Cray-2]] named Navier, were relocated to the new facility, which was dedicated on March 9, 1987.<ref name="25th">{{cite web|url=http://www.nas.nasa.gov/assets/pdf/NAS_25th_brochure.pdf|title=NASA Advanced Supercomputing Division 25th Anniversary Brochure (PDF)|publisher=[http://www.nas.nasa.gov NAS] 2008}}</ref> |

|||

Installed later in 1988, '''Reynolds''' was an 8-processor [[Cray Y-MP]] system that replaced the Navier Cray-2. It had a peak performance of 2.54 gigaflops and allowed the agency to meet its goal of a sustained one gigaflops performance rate for CFD applications.<ref name="25th" /> Like many of the supercomputers at NAS, Reynolds was named after an important figure in supercomputing and aerodynamics, in this case, scientist and mathematician [[Osborne Reynolds]], who was a prominent innovator in understanding CFD equations. |

|||

In 1995, NAS changed its name to the Numerical Aerospace Simulation Division, and in 2001 to the name it has today. |

|||

A 16,000-processor [[Connection Machine]] model CM2 named '''Pierre''' was installed at NAS in 1988 with 14.34 gigaflops of peak processing power. It was part of the computer science research being done at NAS in the area of massively [[parallel computing]]. An additional 32,000 [[CPU]]s were added in 1990. |

|||

===Industry Leading Innovations=== |

|||

Installed in 1991, '''Lagrange''' was an [[Intel iPSC/860]] system with 128 processors and a peak of 7.68 gigaflops. To test its effectiveness, NAS successfully ran incompressible Navier-Stokes simulations of [[Turbulence|isotropic turbulence]], the largest with a mesh size of about 16 million grid points.<ref name="25th" /> It was named after [[Joseph Louis Lagrange]], the 18th century mathematician who discovered the [[Lagrangian point|Lagrangian points]] and made a number of other important astronomical discoveries concerning the moon, the elliptical nature of planetary orbits, and the nature of [[satellites]]. |

|||

NAS has been one of the leading innovators in the supercomputing world, developing many tools and processes that became widely used in commercial supercomputing. Some of these firsts include:<ref>{{cite web|url=http://www.nas.nasa.gov/about/history.html|title=NAS homepage: Division History|publisher=[http://www.nas.nasa.gov NAS]}}</ref> |

|||

* [[UNIX]]-based supercomputers |

|||

* A client/server interface by linking the supercomputers and workstations together to distribute computation and visualization |

|||

* A high-speed [[wide-area network]] (WAN) connecting supercomputing resources to remote users (AEROnet) |

|||

* Dynamic distribution of production loads across supercomputing resources in geographically distant locations (NASA Metacenter) |

|||

* Implementation of [[TCP/IP]] networking in a supercomputing environment |

|||

* A [[batch-queuing system]] for supercomputers (NQS) |

|||

* A UNIX-based hierarchical mass storage system (NAStore) |

|||

* [[IRIX]] single-system image 256-, 512-, and 1,024-processor supercomputers |

|||

* [[Linux]]-based single-system image 512- and 1,024-processor supercomputers |

|||

* A 2,048-processor [[Shared memory architecture|shared memory]] environment |

|||

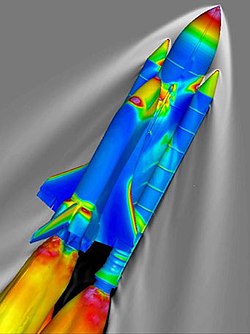

[[File:SSLV ascent.jpg|thumb|250px|An image of the flowfield around the Space Shuttle Launch Vehicle traveling at Mach 2.46 and at an altitude of {{convert|66000|ft}}. The surface of the vehicle is colored by the pressure coefficient, and the gray contours represent the density of the surrounding air, as calculated using the OVERFLOW codes.]] |

|||

===Software Development=== |

|||

The 4-processor [[Convex Computer|Convex]] 3240 system named '''von Karman''' was also brought to the NAS facility in 1991 and added 200 megaflops of processing power to the facility's capabilities. |

|||

NAS develops and adapts software in order to "complement and enhance the work performed on its supercomputers, including software for systems support, monitoring systems, security, and scientific visualization," and often provides this software to its users through the NASA Open Source Agreement (NOSA).<ref>{{cite web|url=http://www.nas.nasa.gov/publications/software_datasets.html|title=NAS Software and Datasets|publisher=[http://www.nas.nasa.gov NAS]}}</ref> |

|||

A few of the important software developments from NAS include: |

|||

* '''[[NAS_Parallel_Benchmarks|NAS Parallel Benchmarks (NPB/NGB)]]''' were developed for the evaluation of highly parallel supercomputers and mimic the characteristics of large-scale CFD applications. |

|||

* '''[[Portable Batch System]] (PBS)''' was the first batch queuing software for parallel and distributed systems. It was released commercially in 1998 and is still widely used in the industry. |

|||

* '''[[PLOT3D file format|PLOT3D]]''' was created by NASA in 1982 and is a computer graphics program still used today to visualize the grid and solutions of structured CFD datasets. The PLOT3D team was awarded the fourth largest prize ever given by the NASA Space Act Program for the development of their software, which revolutionized scientific visualization and analysis of 3D CFD solutions.<ref name="25th" /> |

|||

* '''FAST (Flow Analysis Software Toolkit)''' is a software environment based on PLOT3D and used to analyze data from numerical simulations. Though tailored to CFD visualization, it can be used to visualize almost any [[Scalar (computing)|scalar]] and [[Vector graphics|vector]] data. It was awarded the NASA Software of the Year Award in 1995. |

|||

* '''INS2D''' and '''INS3D''' are codes developed by NAS engineers to solve incompressible [[Navier-Stokes equations]] in two- and three-dimensional generalized coordinates, respectively, for steady-state and time-varying flow. In 1994, the INS3D code won the NASA Software of the Year Award. |

|||

* '''Cart3D''' is a high-fidelity analysis package for aerodynamic design which allows users to perform automated CFD simulations on complex forms. It is still used at NASA and other government agencies to test conceptual and preliminary air- and spacecraft designs.<ref>{{cite web|url=http://people.nas.nasa.gov/~aftosmis/cart3d/|title=NASA Cart3D Homepage}}</ref> The Cart3D team won the NASA Software of the Year award in 2002. |

|||

* '''[[Overflow_(software)|OVERFLOW]]''' (Overset grid flow solver) is a software package developed to simulate fluid flow around solid bodies using the Reynolds-averaged Navier-Stokes equations. It was the first general-purpose NASA CFD code for overset (Chimera) grid systems and was released outside of NASA in 1992. |

|||

* '''Chimera Grid Tools (CGT)''' is a software package containing a variety of tools for the Chimera overset grid approach to solving CFD problems, covering surface and volume grid generation as well as grid manipulation, smoothing, and projection. |

|||

==Supercomputing History== |

|||

The facility at the NASA Advanced Supercomputing Division has housed and operated some of the most powerful supercomputers in the world since its construction in 1987. Many of these computers were [[testbed]] systems built to test new architecture, hardware, or networking set-ups which might be utilized on a larger scale.<ref>{{cite journal|title=NAS High-Performance Computer History|journal=Gridpoints|date=Spring 2002|year=2002|pages=1A-12A}}</ref><ref name="25th" /> Peak performance is shown in [[FLOPS|Floating Point Operations Per Second (FLOPS)]]. |
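Because the peak-performance figures in this history mix megaflops, gigaflops, teraflops, and petaflops, comparing two systems requires converting to a common unit first. The following is a minimal sketch of that arithmetic; the names `UNIT_FACTORS`, `to_flops`, and `speedup` are hypothetical helpers introduced for illustration and are not part of any NAS software.

```python
# Illustrative helper (hypothetical, not NAS tooling): convert a peak-performance
# string such as "1.95 gigaflops" into floating point operations per second.
UNIT_FACTORS = {
    "megaflops": 10**6,
    "gigaflops": 10**9,
    "teraflops": 10**12,
    "petaflops": 10**15,
}


def to_flops(text):
    """Parse '<number> <unit>' and return the value in FLOPS."""
    value, unit = text.split()
    return float(value) * UNIT_FACTORS[unit.lower()]


def speedup(newer, older):
    """Return how many times faster the newer peak figure is than the older one."""
    return to_flops(newer) / to_flops(older)


if __name__ == "__main__":
    # Compare the 2013 Pleiades peak with the 1984 Cray X-MP peak listed on this page.
    print(round(speedup("1.54 petaflops", "210.53 megaflops")))
```

Peak figures are theoretical maxima, so a ratio computed this way compares rated capacity rather than sustained application performance.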

|||

{| class="wikitable" |

|||

|- |

|||

! Computer Name !! Architecture !! Peak Performance !! Number of CPUs !! Installation Date |

|||

|- |

|||

| || [[Cray]] [[Cray X-MP|XMP-12]] || 210.53 megaflops || 1 || 1984 |

|||

|- |

|||

| Navier || [[Cray-2|Cray 2]] || 1.95 gigaflops || 4 || 1985 |

|||

|- |

|||

| Chuck || [[Convex Computer|Convex 3820]] || 1.9 gigaflops || 8 || 1987 |

|||

|- |

|||

| rowspan="2"| Pierre ||rowspan="2" | [[Thinking Machines Corporation|Thinking Machines]] [[Connection Machine|CM2]] || 14.34 gigaflops || 16,000 || 1987 |

|||

|- |

|||

|| 43 gigaflops || 48,000 || 1991 |

|||

|- |

|||

| Stokes || Cray 2 || 1.95 gigaflops || 4 || 1988 |

|||

|- |

|||

| Piper || CDC/ETA-10Q || 840 megaflops || 4 || 1988 |

|||

|- |

|||

| rowspan="2" | Reynolds || rowspan="2" | [[Cray Y-MP]] || 2.54 gigaflops || 8 || 1988 |

|||

|- |

|||

|| 2.67 gigaflops || 8 || 1990 |

|||

|- |

|||

| Lagrange || [[Intel]] [[Intel iPSC|iPSC/860]] || 7.68 gigaflops || 128 || 1990 |

|||

|- |

|||

| Gamma|| Intel iPSC/860 || 7.68 gigaflops|| 128|| 1990 |

|||

|- |

|||

| von Karman || Convex 3240 || 200 megaflops|| 4|| 1991 |

|||

|- |

|||

| Boltzman || Thinking Machines CM5 || 16.38 gigaflops || 128 || 1993 |

|||

|- |

|||

| Sigma || [[Intel Paragon]] || 15.60 gigaflops || 208 || 1993 |

|||

|- |

|||

| von Neumann || [[Cray C90]] || 15.36 gigaflops || 16 || 1993 |

|||

|- |

|||

| Eagle || Cray C90 || 7.68 gigaflops|| 8 || 1993 |

|||

|- |

|||

| Grace || Intel Paragon || 15.6 gigaflops|| 209 || 1993 |

|||

|- |

|||

| rowspan="2" | Babbage || rowspan="2" | [[IBM Scalable POWERparallel|IBM SP-2]] || 34.05 gigaflops || 128 || 1994 |

|||

|- |

|||

|| 42.56 gigaflops || 160 || 1994 |

|||

|- |

|||

| rowspan="2" | da Vinci || [[Silicon Graphics|SGI]] [[SGI Challenge#POWER Challenge|Power Challenge]] || || 16 || 1994 |

|||

|- |

|||

|| SGI Power Challenge XL || 11.52 gigaflops || 32 || 1995 |

|||

|- |

|||

| Newton|| [[Cray J90]] || 7.2 gigaflops || 36 || 1996 |

|||

|- |

|||

| Piglet || [[SGI Origin 2000|SGI Origin 2000/250 MHz]] || 4 gigaflops || 8 || 1997 |

|||

|- |

|||

| rowspan="2" | Turing || rowspan="2" | SGI Origin 2000/195 MHz || 9.36 gigaflops || 24 || 1997 |

|||

|- |

|||

|| 25 gigaflops || 64 || 1997 |

|||

|- |

|||

| Fermi || SGI Origin 2000/195 MHz || 3.12 gigaflops || 8 || 1997 |

|||

|- |

|||

| Hopper || SGI Origin 2000/250 MHz || 32 gigaflops || 64 || 1997 |

|||

|- |

|||

| Evelyn || SGI Origin 2000/250 MHz || 4 gigaflops || 8 || 1997 |

|||

|- |

|||

| rowspan="2" | Steger || rowspan="2" | SGI Origin 2000/250 MHz || 64 gigaflops|| 128 || 1997 |

|||

|- |

|||

|| 128 gigaflops || 256 || 1998 |

|||

|- |

|||

| rowspan="2" | Lomax || rowspan="2" | SGI Origin 2800/300 MHz || 307.2 gigaflops || 512 || 1999 |

|||

|- |

|||

|| 409.6 gigaflops || 512 || 2000 |

|||

|- |

|||

| Lou || SGI Origin 2000/250 MHz || 4.68 gigaflops || 12 || 1999 |

|||

|- |

|||

| Ariel || SGI Origin 2000/250 MHz || 4 gigaflops || 8 || 2000 |

|||

|- |

|||

| Sebastian || SGI Origin 2000/250 MHz || 4 gigaflops || 8 || 2000 |

|||

|- |

|||

| SN1-512 || [[SGI Origin 3000 and Onyx 3000#Origin 3000|SGI Origin 3000/400 MHz]] || 409.6 gigaflops || 512 || 2001 |

|||

|- |

|||

| Bright || [[Cray SV1|Cray SVe1/500 MHz]] || 64 gigaflops || 32 || 2001 |

|||

|- |

|||

| rowspan="2" | Chapman || rowspan="2" | SGI Origin 3800/400 MHz || 819.2 gigaflops || 1,024 || 2001 |

|||

|- |

|||

|| 1.23 teraflops || 1,024 || 2002 |

|||

|- |

|||

| Lomax II || SGI Origin 3800/400 MHz || 409.6 gigaflops|| 512 || 2002 |

|||

|- |

|||

| [[Kalpana (supercomputer)|Kalpana]] || [[Altix#Altix 3000|SGI Altix 3000]] || 2.66 teraflops|| 512 || 2003 |

|||

|- |

|||

| rowspan="3" | [[Columbia (supercomputer)|Columbia]] || SGI Altix 3000 || 63 teraflops || 10,240 || 2004 |

|||

|- |

|||

| rowspan="2" | [[Altix#Altix 4000|SGI Altix 4700]] || || 10,296 || 2006 |

|||

|- |

|||

|| 85.8 teraflops || 13,824 || 2007 |

|||

|- |

|||

| Schirra || [[IBM POWER microprocessors#POWER5|IBM POWER5+]] || 4.8 teraflops || 640 || 2007 |

|||

|- |

|||

| RT Jones || [[Altix#Altix ICE|SGI ICE 8200]], [[Xeon#5400-series "Harpertown"|Intel Xeon "Harpertown" Processors]] || 43.5 teraflops || 4,096 || 2007 |

|||

|- |

|||

| rowspan="6" | [[Pleiades (supercomputer)|Pleiades]] || rowspan="2" | SGI ICE 8200, Intel Xeon "Harpertown" Processors || 487 teraflops || 51,200 || 2008 |

|||

|- |

|||

|| 544 teraflops || 56,320 || 2009 |

|||

|- |

|||

|| SGI ICE 8200, [[Xeon#5500-series "Gainestown"|Intel Xeon "Nehalem" Processors]]|| 773 teraflops || 81,920 || 2010 |

|||

|- |

|||

|| SGI ICE 8200/8400, Intel Xeon "Harpertown"/"Nehalem"/[[Xeon#3600/5600-series "Gulftown" & "Westmere-EP"|"Westmere"]] Processors || 1.09 petaflops || 111,104|| 2011 |

|||

|- |

|||

|| SGI ICE 8200/8400/X, Intel Xeon "Harpertown"/"Nehalem"/"Westmere"/[[Xeon#Sandy-Bridge-based Xeon|"Sandy Bridge"]] Processors || 1.24 petaflops || 125,980 || 2012 |

|||

|- |

|||

|| SGI ICE 8200/8400/X, Intel Xeon "Nehalem"/"Westmere"/[[Xeon#Sandy-Bridge-based Xeon|"Sandy Bridge"]]/[[Ivy_Bridge_(microarchitecture)|"Ivy Bridge"]] Processors <ref>{{cite web|url=http://www.nas.nasa.gov/hecc/resources/pleiades.html|title=Pleiades Supercomputer Resource homepage|publisher=[http://www.nas.nasa.gov NAS]}}</ref>|| 1.54 petaflops || 162,496 || 2013 |

|||

|- |

|||

| [[Endeavour (supercomputer)|Endeavour]] || [[Altix#Altix UV|SGI UV 2000]], Intel Xeon "Sandy Bridge" Processors <ref>{{cite web|url=http://www.nas.nasa.gov/hecc/resources/endeavour.html|title=Endeavour Supercomputer Resource homepage|publisher=[http://www.nas.nasa.gov NAS]}}</ref>|| 32 teraflops || 1,536 || 2013 |

|||

|} |

|||

===Quantum Artificial Intelligence Laboratory=== |

|||

===1993-2001=== |

|||

One of several systems installed in 1993, '''Boltzman''' was a Connection Machine CM5 built by [[Thinking Machines Corporation|Thinking Machines]] with 128 CPUs and a peak performance of 16.38 gigaflops. A 208-processor [[Intel Paragon]] system named '''Grace''' was also installed that year and had a peak performance of 15.60 gigaflops. |

|||

In 1993, a new 16-processor [[Cray C90]] replaced the Stokes supercomputer, the last of the two Cray-2s originally installed at the NAS facility in 1988. With a peak of 15.36 gigaflops, it allowed NAS to run more multitasking jobs around the clock, some as large as 7,200 megabytes (MB).<ref name="25th" /> The new system, '''von Neumann''', was named after mathematician [[John von Neumann]], a founding figure in computer science. A second Cray C90 named '''Eagle''', with only half of '''von Neumann''''s CPUs (8 processors) and a peak performance of 7.68 gigaflops, was also installed in 1993 to augment the massively parallel supercomputing environment at the NAS facility. |

|||

==Storage Resources== |

|||

'''Babbage''' was a 160-processor [[IBM]] [[IBM Scalable POWERparallel|SP2]] system configuration that was installed in 1994 and named for the [[Computer_pioneer|"father of the computer"]], [[Charles Babbage]].<ref>{{cite book | author=Halacy, Daniel Stephen | title = Charles Babbage, Father of the Computer | year = 1970 | publisher=Crowell-Collier Press | isbn = 0-02-741370-5 }}</ref> It had a peak of 42.56 gigaflops and started off with a user base of approximately 300. In 1996, it was part of an endeavor with NASA Langley to create the NASA Metacenter, the agency's first successful attempt to "dynamically distribute real-user production workloads across supercomputing resources at geographically distant locations."<ref name="25th" /> The project included two IBM SP2 systems, Babbage at Ames Research Center in Mountain View, California and the other at [[Langley Research Center]] in [[Hampton, Virginia|Hampton]], [[Virginia]]. An additional 160 CPUs were added to Babbage in 1995 and doubled its processing power. |

|||

===Disk Storage=== |

|||

In 1987, NAS partnered with the [[Defense Advanced Research Projects Agency]] (DARPA) and the [[University of California, Berkeley]] in the [[Redundant Array of Inexpensive Disks]] (RAID) project, which sought to create a storage technology that combined multiple disk drive components into one logical unit. Completed in 1992, the resulting RAID storage technology is still used today.<ref name="25th" /> |
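As a rough illustration of how several disks can behave as one fault-tolerant logical unit, the sketch below shows XOR parity striping, a generic technique used by some RAID levels. It is an assumption chosen for illustration, not a description of the specific designs produced by the NAS, DARPA, and Berkeley project, and the function names `xor_blocks`, `make_stripe`, and `rebuild` are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks plus one parity block (one block per 'disk')."""
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, lost_index):
    """Reconstruct the block on a single failed disk from the surviving blocks."""
    survivors = [block for i, block in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


if __name__ == "__main__":
    stripe = make_stripe([b"NAS1", b"NAS2", b"NAS3"])
    # Pretend the second disk failed and recover its contents from the others.
    print(rebuild(stripe, 1))
```

Because XOR is its own inverse, any single missing block, data or parity, equals the XOR of all the others, which is why one extra disk is enough to survive one failure in this scheme.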

|||

NAS currently houses disk mass storage on an SGI parallel DMF cluster with high-availability software consisting of four 32-processor front-end systems, which are connected to the supercomputers and the archival tape storage system. The system has 64 GB of memory per front-end and 9 petabytes (PB) of RAID disk capacity.<ref name="nasstorage">{{cite web|url=http://www.nas.nasa.gov/hecc/resources/storage_systems.html|title=HECC Archival Storage System Resource homepage|publisher=[http://www.nas.nasa.gov NAS]}}</ref> Data stored on disk is regularly migrated to the tape archival storage systems at the facility to free up space for other user projects being run on the supercomputers. |

|||

[[File:NASA Lomax Supercomputer.jpg|thumb|right|250px|The Lomax SGI Origin 2000-series supercomputer, 1999.]] |

|||

A 16-processor [[Cray J90]] system named '''Newton''' was installed in 1996 with a peak performance of 7.20 gigaflops. It was supplemented in 1997 with an additional 36 CPUs. |

|||

===Archive and Storage Systems=== |

|||

Installed in 1997, '''Turing''' was a 64-processor SGI Origin 2000 [[testbed]] system -- the first of its kind to be run as a single system -- with a peak performance of 25 gigaflops and clocked at 195 [[Hertz|MHz]]. It was used in research being done at NAS into the potential of [[nanotubes]] for use with tiny electromechanical sensors and semiconductors.<ref name="25th" /> |

|||

In 1987, NAS developed the first UNIX-based hierarchical mass storage system, named NAStore. It contained two [[StorageTek]] 4400 cartridge tape robots, each with a storage capacity of approximately 1.1 terabytes, cutting tape-archived data retrieval from four minutes to fifteen seconds.<ref name="25th" /> |

|||

With the installation of the Pleiades supercomputer in 2008, the StorageTek systems that NAS had been using for twenty years were unable to meet the needs of the greater number of users and increasing file sizes of each project's [[Dataset|datasets]].<ref>{{cite web|url=http://www.nas.nasa.gov/SC09/PDF/Datasheets/Powers_NASSilo.pdf|title=NAS Silo, Tape Drive, and Storage Upgrades - SC09|publisher=[http://www.nas.nasa.gov NAS] November 2009}}</ref> In 2009, NAS brought in [[Spectra Logic]] robotic tape systems, which increased the maximum capacity at the facility to 115 petabytes of space available for users to archive the extremely large datasets produced on the supercomputers. SGI's Data Migration Facility (DMF) and OpenVault manage disk-to-tape data migration and tape-to-disk de-migration for the NAS facility.<ref name="nasstorage" /> |

|||

'''Steger''' was an SGI Origin 2800 system with 128 processors installed in 1998, with an additional 256 processors added later in the same year. It had a peak performance of 128 gigaflops, over double the processing power of Turing, and was clocked at 300 MHz. '''Lomax''', built in 1999, was also an Origin 2800 system with 512 processors and a peak performance of 409.6 gigaflops. |

|||

As of January 2014, there is over 30 petabytes of unique data stored in the NAS archival storage system.<ref name="nasstorage" /> |

|||

===2001-2007=== |

|||

'''Bright''', one of the two supercomputers installed in 2001, was a 32-processor [[Cray SV1|Cray SV1ex]] system with a peak of 64 gigaflops and clocked at 500 MHz. The SGI Origin 3800 supercomputer '''Chapman''' was the first NASA system to achieve a performance of over one teraflop, or one trillion floating point operations per second. Named for the former Ames Director of Astronautics (1974-1980), Dean Chapman, who established the first research group dedicated to combining CFD and high-speed computers, the supercomputer consisted of 1,024 processors for a peak performance of 1.23 teraflops (sustained [[LINPACK benchmarks|LINPACK]] performance 819.2 gigaflops) and was clocked at 600 MHz. |

|||

==Data Visualization Systems== |

|||

A single SGI [[Altix#Altix_3000|Altix 3000]] system with 512 Intel Itanium 2 processors named '''[[Kalpana (supercomputer)|Kalpana]]''', the world's first single-system image (SSI) supercomputer, was built in 2003 and dedicated on May 12, 2004 to the late [[Kalpana Chawla]], an astronaut and scientist killed in the [[Space Shuttle Columbia disaster]].<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2004/05-10-04.html|title=NASA to Name Supercomputer After Columbia Astronaut|publisher=[http://www.nas.nasa.gov NAS] May 2005}}</ref> It was originally built in a joint effort by the [[NASA Jet Propulsion Laboratory]], Ames Research Center, and Goddard Space Flight Center to perform high-resolution ocean analysis with the ECCO (Estimating the Circulation and Climate of the Ocean) Consortium model.<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2003/11-17-03.html|title=NASA Ames Installs World's First Altix 512-Processor Supercomputer|publisher=[http://www.nas.nasa.gov NAS] November 2003}}</ref> In July 2004, it was officially integrated into the Columbia Project as the first [[Node (computer science)|node]] of the original 20-node system. |

|||

In 1984, NAS purchased 25 SGI IRIS 1000 graphics terminals, the beginning of their long partnership with the Silicon Valley-based company, which made a significant impact on post-processing and visualization of CFD results run on the supercomputers at the facility.<ref name="25th" /> Visualization became a key process in the analysis of simulation data run on the supercomputers, allowing engineers and scientists to view their results spatially and in ways that allowed for a greater understanding of the CFD forces at work in their designs. |

|||

{{multiple image|direction=vertical|width=250|footer=The hyperwall-2 visualization system at the NAS facility allows researchers to view multiple simulations run on the supercomputers, or a single large image or animation.|image1=NASA_Hyperwall_2.jpg|alt1=Hyperwall-2 displaying multiple images|image2 = Hyperwall-2.jpg|alt2=Hyperwall-2 displaying one single image}} |

|||

A '''[[Cray X1|Cray X1E]]''' system with 16 multi-stream processors (MSPs) and a peak performance of 204.8 gigaflops was installed in April 2004.<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2004/04-27-04.html|title=New Cray X1 System Arrives at NAS|publisher=[http://www.nas.nasa.gov NAS] April 2004}}</ref> It was later used as part of the Army High Performance Computing Research Center (AHPCRC) -- a consortium of NASA Ames Research Center, [[Stanford University]], High Performance Technologies, Inc., among other university partners -- located at the NAS facility, along with a '''[[Cray XT3]]''' installed in 2007 with 51.2 gigaflops of processing power and clocked at 800 MHz.<ref name="25th" /> |

|||

===The hyperwall=== |

|||

In 2002, NAS visualization experts developed a visualization system called the '''hyperwall''' which included 49 linked [[Liquid crystal display|LCD]] panels that allowed scientists to view complex [[Dataset|datasets]] on a large, dynamic seven-by-seven screen array. Each screen has its own processing power, allowing each one to display, process, and share datasets so that a single image can be displayed across all screens or configured so that data can be displayed in "cells" like a giant visual spreadsheet.<ref name="marsviz">{{cite web|url=http://www.nas.nasa.gov/publications/news/2003/09-16-03.html|title=Mars Flyer Debuts on Hyperwall|publisher=[http://www.nas.nasa.gov NAS] September 2003}}</ref> |

|||

===The hyperwall-2=== |

|||

[[Image:Columbia_Supercomputer_-_NASA_Advanced_Supercomputing_Facility.jpg|right|thumb|250px|The Columbia Supercomputer at the NASA Advanced Supercomputing Division.]] |

|||

The second-generation '''hyperwall-2''' was developed in 2008 by NAS in partnership with Colfax International and is made up of 128 LCD screens arranged in an 8x16 grid 23 feet wide by 10 feet tall. It is capable of rendering one quarter billion [[pixels]], making it the highest resolution scientific visualization system in the world.<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2008/06-25-08.html|title=NASA Develops World's Highest Resolution Visualization System|publisher=[http://www.nas.nasa.gov NAS] June 2008}}</ref> It contains 128 nodes, each with two quad-core [[AMD]] [[Opteron]] ([[Opteron#Opteron_.2865_nm_SOI.29|Barcelona]]) processors and an [[Nvidia]] [[GeForce 400 Series|GeForce 480 GTX]] [[graphics processing unit]] (GPU) for a dedicated peak processing power of 128 teraflops across the entire system, 100 times more powerful than the original hyperwall.<ref>{{cite web|url=http://www.nas.nasa.gov/hecc/resources/viz_systems.html|title=NAS Visualization Systems Overview|publisher=[http://www.nas.nasa.gov NAS]}}</ref> The hyperwall-2 is directly connected to the Pleiades supercomputer's filesystem over an InfiniBand network, which allows the system to read data directly from the filesystem without needing to copy files onto the hyperwall-2's memory. |

|||

Using the same architecture as the Kalpana SSI supercomputer that NASA built with partners SGI and Intel, [[Columbia_(supercomputer)|'''Columbia''']] originally consisted of twenty SGI Altix 3000 512-processor systems containing Intel Itanium 2 processors, with Kalpana acting as the first node.<ref>{{cite web|url=http://www.nasa.gov/home/hqnews/2004/oct/HQ_04353_columbia.html|title=NASA Unveils Its Newest, Most Powerful Supercomputer|publisher=[http://www.nasa.gov NASA] October 2004}}</ref> Named to honor the crew lost in the Space Shuttle Columbia disaster, it was built in 2004 in a record 120 days<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2005/11-30-05.html|title=Project Columbia Wins GCN Agency Award for Innovation|publisher=[http://www.nas.nasa.gov NAS] November 2005}}</ref> and debuted in second place on the TOP500 list in November of that year, making it the first NASA supercomputer to rank in the top ten on the international supercomputing list.<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2004/11-08-04.html|title=NASA's Newest Supercomputing Ranked Among World's Fastest|publisher=[http://www.nasa.gov NASA] November 2004}}</ref><ref>{{cite web|url=http://i.top500.org/site/48408|title=TOP500 Site Rank History for the NASA Advanced Supercomputing Facility|publisher=[http://www.top500.org TOP500]}}</ref> In 2006, SGI and NASA replaced one of the original nodes with an [[Altix#Altix_4000|Altix 4700]] system which contained 256 dual-core Itanium 2 processors.<ref>{{cite web|url=http://www.sgi.com/company_info/newsroom/press_releases/2006/november/nasa.html|title=NASA Ensures Columbia Supercomputer Will Continue to Fuel New Science with Latest SGI Technology Infusion|publisher=[http://www.sgi.com SGI] November 2006}}</ref> Three additional nodes were added in 2007. One was a 2,048-core SGI Altix 4700 system installed along with Schirra at the NAS facility as a testbed for future supercomputing upgrades; when it was integrated into Columbia, it added 13 teraflops of processing power. Two 1,024-core Altix 4700s with Montvale Itanium 2 processors were also added under the Exploration Systems Mission Directorate (ESMD), which brought Columbia to its highest peak performance of 88.9 teraflops.<ref name="25th" /> As the petascale Pleiades supercomputer expanded, Columbia was slowly phased out and consisted of only the four Altix 4700 systems until it was decommissioned in March 2013. |

|||

===Concurrent Visualization=== |

|||

'''Schirra''', a 640-core IBM [[POWER5+]] system with a peak of 4.8 teraflops, was installed in 2007 along with an SGI Altix 4700 system that was later integrated into Columbia. NAS staff used it in a study to determine its potential to help meet NASA's future computing needs and ultimately replace the Columbia supercomputer, which was the agency's main supercomputing resource at the time.<ref name="25th" /><ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2007/06-06-07.html|title=NASA Selects IBM for Next-Generation Supercomputing Applications|publisher=[http://www.nasa.gov NASA] June 2007}}</ref> NAS tested Schirra using a broad spectrum of codes and in late 2007 migrated some users onto the system to test its compatibility with the established [[Linux]] client interface.<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2007/08-05-07.html|title=NAS Completes IBM System Acceptance Tests|publisher=[http://www.nas.nasa.gov NAS] August 2007}}</ref> The supercomputer was named for NASA astronaut [[Schirra|Walter Marty Schirra, Jr.]], the only person to fly in all of the first three US space programs ([[Project Mercury|Mercury]], [[Gemini program|Gemini]], and [[Apollo program|Apollo]]). |

|||

An important feature of the hyperwall technology developed at NAS is that it allows for "concurrent visualization" of data, which enables scientists and engineers to analyze and interpret data while the calculations are running on the supercomputers. Not only does this show the current state of the calculation for runtime monitoring, steering, and termination, but it also "allows higher temporal resolution visualization compared to post-processing because I/O and storage space requirements are largely obviated... [and] may show features in a simulation that would otherwise not be visible."<ref>{{cite journal|last=Ellsworth|first=David|coauthors=Bryan Green, Chris Henze, Patrick Moran, and Timothy Sandstrom|title=Concurrent Visualization in a Production Supercomputing Environment|journal=IEEE Transactions on Visualization and Computer Graphics|volume=12|issue=5|date=September/October 2006|url=http://www.nas.nasa.gov/assets/pdf/techreports/2007/nas-07-002.pdf}}</ref> |

|||

The NAS visualization team developed a configurable concurrent [[Pipeline (computing)|pipeline]] for use with a massively parallel forecast model run on Columbia in 2005 to help predict the Atlantic hurricane season for the [[National Hurricane Center]]. Because of the deadlines to submit each of the forecasts, it was important that the visualization process would not significantly impede the simulation or cause it to fail. |

|||

Along with the 22nd Columbia node and Schirra, '''RT Jones''' was a testbed system built in 2007 with SGI [[Altix#Altix_ICE|Altix ICE]] racks containing 4,096 cores of Intel [[Intel Xeon|Xeon]] quad-core processors as part of NASA's Aeronautics Research Mission Directorate. Named in honor of Robert Thomas "RT" Jones, a famed NASA aerodynamicist who died in 1999,<ref>{{cite web|url=http://www.nasa.gov/centers/ames/news/releases/1999/99_50AR.html|title=Famed NASA aerodynamicist R.T. Jones Dies After Lengthy Illness|publisher=[http://www.nasa.gov NASA] 1999}}</ref> the system had a peak performance of 43.5 teraflops and ultimately became the architectural basis for the Pleiades supercomputer. |

|||

==References== |

|||

===Current Supercomputers=== |

|||

{{reflist|2}} |

|||

[[Image:Pleiades_two_row.jpg|left|thumb|250px|Two rows of the 182 SGI Altix ICE racks that make up the Pleiades supercomputer at the NAS facility.]] |

|||

'''Pleiades''', named for the [[Pleiades|Pleiades open star cluster]] and built in 2008, is currently the main supercomputing resource at the NAS facility. It originally contained 100 SGI Altix ICE 8200EX racks with 12,800 Intel Xeon quad-core [[Xeon#5400-series_.22Harpertown.22|Harpertown]] processors, debuting in the third spot on the TOP500 list in November 2008.<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2008/11-18-08.html|title=NASA Supercomputer Ranks Among World’s Fastest – November 2008|publisher=[http://www.nasa.gov NASA] November 2008}}</ref> With the addition of ten more racks of quad-core [[Xeon#5500-series_.22Gainestown.22|Nehalem]] processors in 2009, a "live integration" of another ICE 8200 rack in January 2010,<ref>{{cite web|url= http://www.nas.nasa.gov/publications/news/2010/02-08-10.html|title=’Live’ Integration of Pleiades Rack Saves 2 Million Hours|publisher=[http://www.nas.nasa.gov NAS] February 2010}}</ref> and another expansion in 2010 that added 32 new SGI Altix ICE 8400 racks with Intel Xeon six-core [[Xeon#3600.2F5600-series_.22Gulftown.22|Westmere]] processors, Pleiades achieved a peak of 973 teraflops and a sustained performance of 773 teraflops with 18,432 processors (81,920 cores in 144 racks).<ref>{{cite web|url=http://www.nas.nasa.gov/publications/news/2010/06-02-10.html|title=NASA Supercomputer Doubles Capacity, Increases Efficiency|publisher=[http://www.nasa.gov NASA] June 2010}}</ref> After another 14 Altix ICE 8400 racks containing Westmere processors were added in 2011, Pleiades ranked seventh on both the June and November 2011 TOP500 lists with a sustained performance of 1.09 petaflops, or 1.09 quadrillion floating point operations per second.<ref>{{cite web|url=http://www.nasa.gov/home/hqnews/2011/jun/HQ-11-194_Supercomputer_Ranks.html|title=NASA's Pleiades Supercomputer Ranks Among World's Fastest|publisher=[http://www.nasa.gov NASA] June 2011}}</ref> Pleiades also has the world's largest InfiniBand interconnect network fabric, made up of over 65 miles of double data rate (DDR) and quad data rate (QDR) InfiniBand cabling. |

|||

==External links== |

|||

In June 2012, NAS expanded Pleiades once again, replacing 27 of the original Altix ICE 8200 racks containing quad-core Harpertown processors with 24 new Altix ICE X racks containing eight-core Intel Xeon Sandy Bridge processors and increasing the supercomputer's capabilities by 40 percent.<ref>{{cite web|url=http://www.nasa.gov/home/hqnews/2012/jun/HQ_12_206_Pleiades_Supercomputer.html|title=Pleiades Supercomputer Gets a Little More Oomph|publisher=[http://www.nasa.gov NASA] June 2012}}</ref> The processors are connected to the rest of the system by fourteen data rate (FDR) InfiniBand fiber cable, enabling each of the two-processor nodes to transfer data at 56 gigabits (about 7 gigabytes) per second.<ref>{{cite web|url=http://www.nas.nasa.gov/hecc/support/kb/Sandy-Bridge-Processors_301.html|title=HECC Project Hardware Overview: Sandy Bridge Processors|publisher=[http://www.nas.nasa.gov NAS]}}</ref> Because the new ICE X racks are taller and thinner than the ICE 8200/8400 racks, the staff at NAS developed a harness method that raised the InfiniBand lines, which run in channels across the tops of the racks, away from the section being replaced, so that the other 90 percent of the supercomputer remained operable and available to users during the transition.<ref>{{cite web|url=http://www.flickr.com/photos/69612157@N06/6857971296/|title=NASA Advanced Supercomputing Flickr page|publisher=[http://www.nas.nasa.gov NAS]}}</ref> As of November 2012, Pleiades has a theoretical peak performance of 1.79 petaflops (sustained performance of 1.24 petaflops) and ranks fourteenth on the TOP500 list. |

|||

====NASA Advanced Supercomputing Resources==== |

|||

* [http://www.nas.nasa.gov/ NASA Advanced Supercomputing (NAS) Division homepage] |

|||

* [http://www.nas.nasa.gov/hecc/resources/columbia.html NAS Columbia Supercomputer homepage] |

|||

* [http://www.nas.nasa.gov/hecc/resources/pleiades.html NAS Pleiades Supercomputer homepage] |

|||

* [http://www.nas.nasa.gov/hecc/resources/storage_systems.html NAS Archive and Storage Systems homepage] |

|||

* [http://www.nas.nasa.gov/hecc/resources/viz_systems.html NAS hyperwall-2 homepage] |

|||

====Other Online Resources==== |

|||

The newest system at the NAS facility is '''Endeavour''', an SGI [[Altix#Altix_UV|UV]] 2000 shared-memory system that was installed in early 2013 when the remaining Columbia nodes were taken offline. Named after the [[Space Shuttle Endeavour]], it comprises two nodes using Intel [[Sandy Bridge-E|Xeon E5-4650L]] "Sandy Bridge" processors running Linux and provides a theoretical 32 teraflops of processing power across three physical racks. That is more processing power than Columbia had when it was decommissioned, in about 10% of the physical space.<ref>{{cite web|url=http://www.nas.nasa.gov/hecc/resources/endeavour.html|title=NAS Endeavour Resource Page}}</ref> As with Columbia, the shared-memory environment of Endeavour allows a single process to access the memory of other processors in order to run large, data-intensive jobs that would not be possible on other NASA systems. |

|||

* [http://www.nasa.gov NASA Official Website] |

|||

* [http://www.hec.nasa.gov/index.html NASA's High-End Computing Program homepage] |

|||

* [http://www.nas.nasa.gov/hecc NASA's High-End Computing Capability Project homepage] |

|||

* [http://www.top500.org TOP500 official website] |

|||

Revision as of 21:10, 8 January 2014

| Agency overview | |
|---|---|
| Formed | 1982 |
| Preceding agencies | Numerical Aerodynamic Simulation Division (1982); Numerical Aerospace Simulation Division (1995) |
| Headquarters | NASA Ames Research Center, Moffett Field, California |
| Parent agency | NASA |
| Website | www.nas.nasa.gov |

| Current Supercomputing Systems | |
|---|---|
| Pleiades | SGI Altix ICE supercluster |
| Endeavour | SGI UV shared-memory system |

The NASA Advanced Supercomputing (NAS) Division is located at NASA Ames Research Center, Moffett Field in the heart of Silicon Valley in Mountain View, California. It has been the major supercomputing resource for NASA missions in aerodynamics, space exploration, studies in weather patterns and ocean currents, and space shuttle and aircraft research and development for over thirty years.

It currently houses the petascale Pleiades and terascale Endeavour supercomputers based on SGI architecture and Intel processors, as well as disk and archival tape storage systems with a capacity of over 100 petabytes of data, the hyperwall-2 visualization system, and the largest InfiniBand network fabric in the world. The NAS Division is part of NASA's Exploration Technology Directorate and operates NASA's High-End Computing Capability Project.[1]

History

Founding

In the mid-1970s, a group of aerospace engineers at Ames Research Center began to look into transferring aerospace research and development from costly and time-consuming wind tunnel testing to simulation-based design and engineering using computational fluid dynamics (CFD) models on supercomputers more powerful than those commercially available at the time. This endeavor was later named the Numerical Aerodynamic Simulator (NAS) Project, and the first computer was installed at the Central Computing Facility at Ames Research Center in 1984.

Ground was broken on March 14, 1985 for a state-of-the-art supercomputing facility, a building where CFD experts, computer scientists, visualization specialists, and network and storage engineers could work under one roof in a collaborative environment. In 1986, NAS transitioned into a full-fledged NASA division, and in 1987 NAS staff and equipment, including a second supercomputer, a Cray-2 named Navier, were relocated to the new facility, which was dedicated on March 9, 1987.[2]

In 1995, NAS changed its name to the Numerical Aerospace Simulation Division, and in 2001 to the name it has today.

Industry Leading Innovations

NAS has been one of the leading innovators in the supercomputing world, developing many tools and processes that became widely used in commercial supercomputing. Some of these firsts include:[3]

- UNIX-based supercomputers

- A client/server interface linking supercomputers and workstations to distribute computation and visualization

- A high-speed wide-area network (WAN) connecting supercomputing resources to remote users (AEROnet)

- Dynamic distribution of production loads across supercomputing resources in geographically distant locations (NASA Metacenter)

- TCP/IP networking in a supercomputing environment

- A batch-queuing system for supercomputers (NQS)

- A UNIX-based hierarchical mass storage system (NAStore)

- IRIX single-system image 256-, 512-, and 1,024-processor supercomputers

- Linux-based single-system image 512- and 1,024-processor supercomputers

- A 2,048-processor shared memory environment

Software Development

NAS develops and adapts software in order to "compliment and enhance the work performed on its supercomputers, including software for systems support, monitoring systems, security, and scientific visualization," and often provides this software to its users through the NASA Open Source Agreement (NOSA).[4]

A few of the important software developments from NAS include:

- NAS Parallel Benchmarks (NPB/NGB) were developed for the evaluation of highly parallel supercomputers and mimic the characteristics of large-scale CFD applications.

- Portable Batch System (PBS) was the first batch queuing software for parallel and distributed systems. It was released commercially in 1998 and is still widely used in the industry.

- PLOT3D was created by NASA in 1982 and is a computer graphics program still used today to visualize the grid and solutions of structured CFD datasets. The PLOT3D team was awarded the fourth largest prize ever given by the NASA Space Act Program for the development of their software, which revolutionized scientific visualization and analysis of 3D CFD solutions.[2]

- FAST (Flow Analysis Software Toolkit) is a software environment based on PLOT3D and used to analyze data from numerical simulations. Though tailored to CFD visualization, it can be used to visualize almost any scalar and vector data. It was awarded the NASA Software of the Year Award in 1995.

- INS2D and INS3D are codes developed by NAS engineers to solve the incompressible Navier-Stokes equations in two- and three-dimensional generalized coordinates, respectively, for steady-state and time-varying flow. In 1994, the INS3D code won the NASA Software of the Year Award.

- Cart3D is a high-fidelity analysis package for aerodynamic design which allows users to perform automated CFD simulations on complex forms. It is still used at NASA and other government agencies to test conceptual and preliminary air- and spacecraft designs.[5] The Cart3D team won the NASA Software of the Year award in 2002.

- OVERFLOW (Overset grid flow solver) is a software package developed to simulate fluid flow around solid bodies using the Reynolds-averaged Navier-Stokes CFD equations. It was the first general-purpose NASA CFD code for overset (Chimera) grid systems and was released outside of NASA in 1992.

- Chimera Grid Tools (CGT) is a software package containing a variety of tools for the Chimera overset grid approach to solving CFD problems, covering surface and volume grid generation as well as grid manipulation, smoothing, and projection.

Supercomputing History

The facility at the NASA Advanced Supercomputing Division has housed and operated some of the most powerful supercomputers in the world since its construction in 1987. Many of these computers were testbed systems built to test new architectures, hardware, or networking set-ups that might be utilized on a larger scale.[6][2] Peak performance is shown in floating point operations per second (FLOPS).

| Computer Name | Architecture | Peak Performance | Number of CPUs | Installation Date |
|---|---|---|---|---|
| | Cray XMP-12 | 210.53 megaflops | 1 | 1984 |
| Navier | Cray 2 | 1.95 gigaflops | 4 | 1985 |
| Chuck | Convex 3820 | 1.9 gigaflops | 8 | 1987 |
| Pierre | Thinking Machines CM2 | 14.34 gigaflops | 16,000 | 1987 |
| Pierre | Thinking Machines CM2 | 43 gigaflops | 48,000 | 1991 |
| Stokes | Cray 2 | 1.95 gigaflops | 4 | 1988 |
| Piper | CDC/ETA-10Q | 840 megaflops | 4 | 1988 |
| Reynolds | Cray Y-MP | 2.54 gigaflops | 8 | 1988 |
| Reynolds | Cray Y-MP | 2.67 gigaflops | 8 | 1990 |
| Lagrange | Intel iPSC/860 | 7.68 gigaflops | 128 | 1990 |
| Gamma | Intel iPSC/860 | 7.68 gigaflops | 128 | 1990 |
| von Karman | Convex 3240 | 200 megaflops | 4 | 1991 |
| Boltzman | Thinking Machines CM5 | 16.38 gigaflops | 128 | 1993 |
| Sigma | Intel Paragon | 15.60 gigaflops | 208 | 1993 |
| von Neumann | Cray C90 | 15.36 gigaflops | 16 | 1993 |
| Eagle | Cray C90 | 7.68 gigaflops | 8 | 1993 |
| Grace | Intel Paragon | 15.6 gigaflops | 209 | 1993 |
| Babbage | IBM SP-2 | 34.05 gigaflops | 128 | 1994 |
| Babbage | IBM SP-2 | 42.56 gigaflops | 160 | 1994 |
| da Vinci | SGI Power Challenge | | 16 | 1994 |
| da Vinci | SGI Power Challenge XL | 11.52 gigaflops | 32 | 1995 |
| Newton | Cray J90 | 7.2 gigaflops | 36 | 1996 |
| Piglet | SGI Origin 2000/250 MHz | 4 gigaflops | 8 | 1997 |
| Turing | SGI Origin 2000/195 MHz | 9.36 gigaflops | 24 | 1997 |
| Turing | SGI Origin 2000/195 MHz | 25 gigaflops | 64 | 1997 |
| Fermi | SGI Origin 2000/195 MHz | 3.12 gigaflops | 8 | 1997 |
| Hopper | SGI Origin 2000/250 MHz | 32 gigaflops | 64 | 1997 |
| Evelyn | SGI Origin 2000/250 MHz | 4 gigaflops | 8 | 1997 |
| Steger | SGI Origin 2000/250 MHz | 64 gigaflops | 128 | 1997 |
| Steger | SGI Origin 2000/250 MHz | 128 gigaflops | 256 | 1998 |
| Lomax | SGI Origin 2800/300 MHz | 307.2 gigaflops | 512 | 1999 |
| Lomax | SGI Origin 2800/300 MHz | 409.6 gigaflops | 512 | 2000 |
| Lou | SGI Origin 2000/250 MHz | 4.68 gigaflops | 12 | 1999 |
| Ariel | SGI Origin 2000/250 MHz | 4 gigaflops | 8 | 2000 |
| Sebastian | SGI Origin 2000/250 MHz | 4 gigaflops | 8 | 2000 |
| SN1-512 | SGI Origin 3000/400 MHz | 409.6 gigaflops | 512 | 2001 |
| Bright | Cray SVe1/500 MHz | 64 gigaflops | 32 | 2001 |
| Chapman | SGI Origin 3800/400 MHz | 819.2 gigaflops | 1,024 | 2001 |
| Chapman | SGI Origin 3800/400 MHz | 1.23 teraflops | 1,024 | 2002 |
| Lomax II | SGI Origin 3800/400 MHz | 409.6 gigaflops | 512 | 2002 |
| Kalpana | SGI Altix 3000 | 2.66 teraflops | 512 | 2003 |
| Columbia | SGI Altix 3000 | 63 teraflops | 10,240 | 2004 |
| Columbia | SGI Altix 4700 | | 10,296 | 2006 |
| Columbia | SGI Altix 4700 | 85.8 teraflops | 13,824 | 2007 |
| Schirra | IBM POWER5+ | 4.8 teraflops | 640 | 2007 |
| RT Jones | SGI ICE 8200, Intel Xeon "Harpertown" Processors | 43.5 teraflops | 4,096 | 2007 |
| Pleiades | SGI ICE 8200, Intel Xeon "Harpertown" Processors | 487 teraflops | 51,200 | 2008 |
| Pleiades | SGI ICE 8200, Intel Xeon "Harpertown" Processors | 544 teraflops | 56,320 | 2009 |
| Pleiades | SGI ICE 8200, Intel Xeon "Nehalem" Processors | 773 teraflops | 81,920 | 2010 |
| Pleiades | SGI ICE 8200/8400, Intel Xeon "Harpertown"/"Nehalem"/"Westmere" Processors | 1.09 petaflops | 111,104 | 2011 |
| Pleiades | SGI ICE 8200/8400/X, Intel Xeon "Harpertown"/"Nehalem"/"Westmere"/"Sandy Bridge" Processors | 1.24 petaflops | 125,980 | 2012 |
| Pleiades | SGI ICE 8200/8400/X, Intel Xeon "Nehalem"/"Westmere"/"Sandy Bridge"/"Ivy Bridge" Processors [7] | 1.54 petaflops | 162,496 | 2013 |
| Endeavour | SGI UV 2000, Intel Xeon "Sandy Bridge" Processors [8] | 32 teraflops | 1,536 | 2013 |

Quantum Artificial Intelligence Laboratory

Storage Resources

Disk Storage

In 1987, NAS partnered with the Defense Advanced Research Projects Agency (DARPA) and the University of California, Berkeley in the Redundant Array of Inexpensive Disks (RAID) project, which sought to create a storage technology that combined multiple disk drive components into one logical unit. Completed in 1992, the resulting RAID storage technology is still used today.[2]

NAS currently houses disk mass storage on an SGI parallel DMF cluster with high-availability software consisting of four 32-processor front-end systems, which are connected to the supercomputers and the archival tape storage system. The system has 64 GB of memory per front-end and 9 petabytes (PB) of RAID disk capacity.[9] Data stored on disk is regularly migrated to the tape archival storage systems at the facility to free up space for other user projects being run on the supercomputers.

Archive and Storage Systems

In 1987, NAS developed the first UNIX-based hierarchical mass storage system, named NAStore. It contained two StorageTek 4400 cartridge tape robots, each with a storage capacity of approximately 1.1 terabytes, cutting retrieval time for tape-archived data from four minutes to fifteen seconds.[2]

With the installation of the Pleiades supercomputer in 2008, the StorageTek systems that NAS had been using for twenty years were unable to meet the needs of the greater number of users and the increasing file sizes of each project's datasets.[10] In 2009, NAS brought in Spectra Logic robotic tape systems, which increased the maximum capacity at the facility to 115 petabytes of space available for users to archive the extremely large datasets produced on the supercomputers. SGI's Data Migration Facility (DMF) and OpenVault manage disk-to-tape data migration and tape-to-disk de-migration for the NAS facility.[9]

As of January 2014, more than 30 petabytes of unique data are stored in the NAS archival storage system.[9]

Data Visualization Systems

In 1984, NAS purchased 25 SGI IRIS 1000 graphics terminals, the beginning of its long partnership with the Silicon Valley-based company, which made a significant impact on post-processing and visualization of CFD results run on the supercomputers at the facility.[2] Visualization became a key process in the analysis of simulation data run on the supercomputers, allowing engineers and scientists to view their results spatially and in ways that allowed for a greater understanding of the CFD forces at work in their designs.

The hyperwall

In 2002, NAS visualization experts developed a visualization system called the hyperwall which included 49 linked LCD panels that allowed scientists to view complex datasets on a large, dynamic seven-by-seven screen array. Each screen has its own processing power, allowing each one to display, process, and share datasets so that a single image can be displayed across all screens or configured so that data can be displayed in "cells" like a giant visual spreadsheet.[11]

The hyperwall-2

The second-generation hyperwall-2 was developed in 2008 by NAS in partnership with Colfax International and is made up of 128 LCD screens arranged in an 8x16 grid 23 feet wide by 10 feet tall. It is capable of rendering one quarter billion pixels, making it the highest-resolution scientific visualization system in the world.[12] It contains 128 nodes, each with two quad-core AMD Opteron (Barcelona) processors and an Nvidia GeForce 480 GTX graphics processing unit (GPU), for a dedicated peak processing power of 128 teraflops across the entire system, 100 times more powerful than the original hyperwall.[13] The hyperwall-2 is directly connected to the Pleiades supercomputer's filesystem over an InfiniBand network, which allows the system to read data directly from the filesystem without needing to copy files onto the hyperwall-2's memory.

Concurrent Visualization

An important feature of the hyperwall technology developed at NAS is that it allows for "concurrent visualization" of data, which enables scientists and engineers to analyze and interpret data while the calculations are running on the supercomputers. Not only does this show the current state of the calculation for runtime monitoring, steering, and termination, but it also "allows higher temporal resolution visualization compared to post-processing because I/O and storage space requirements are largely obviated... [and] may show features in a simulation that would otherwise not be visible."[14]

The NAS visualization team developed a configurable concurrent pipeline for use with a massively parallel forecast model run on Columbia in 2005 to help predict the Atlantic hurricane season for the National Hurricane Center. Because of the deadlines to submit each of the forecasts, it was important that the visualization process would not significantly impede the simulation or cause it to fail.
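
The requirement that visualization must not impede the simulation or cause it to fail can be illustrated with a minimal Python sketch. It is not the NAS pipeline: the names `offer_frame`, `run_simulation`, and `drain` are hypothetical, and the bounded in-memory queue is an assumed stand-in for whatever transport the real system used. The solver hands each timestep to the queue without blocking and simply drops the frame when the visualization side has fallen behind.

```python
import queue


def offer_frame(frames, frame):
    """Hand a frame to the visualization side without ever blocking the solver."""
    try:
        frames.put_nowait(frame)
        return True
    except queue.Full:
        # Visualization is behind: drop this frame and keep computing.
        return False


def run_simulation(steps, frames):
    """Toy solver loop: advance the state, then offer each timestep for rendering."""
    state = 0.0
    dropped = 0
    for step in range(steps):
        state += 1.0  # stand-in for one timestep of the real forecast model
        if not offer_frame(frames, (step, state)):
            dropped += 1
    return state, dropped


def drain(frames):
    """Collect every frame currently waiting, as a renderer would."""
    out = []
    while True:
        try:
            out.append(frames.get_nowait())
        except queue.Empty:
            return out
```

With a queue of capacity two and no renderer running, a five-step run still completes and reports three dropped frames. In this sketch the simulation result never depends on whether anything was visualized.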

References

- [1] "NAS Homepage - About the NAS Division". NAS.

- [2] "NASA Advanced Supercomputing Division 25th Anniversary Brochure (PDF)" (PDF). NAS 2008.

- [3] "NAS homepage: Division History". NAS.

- [4] "NAS Software and Datasets". NAS.

- [5] "NASA Cart3D Homepage".

- [6] "NAS High-Performance Computer History". Gridpoints: 1A–12A. Spring 2002.

- [7] "Pleiades Supercomputer Resource homepage". NAS.

- [8] "Endeavour Supercomputer Resource homepage". NAS.

- [9] "HECC Archival Storage System Resource homepage". NAS.

- [10] "NAS Silo, Tape Drive, and Storage Upgrades - SC09" (PDF). NAS November 2009.

- [11] "Mars Flyer Debuts on Hyperwall". NAS September 2003.

- [12] "NASA Develops World's Highest Resolution Visualization System". NAS June 2008.

- [13] "NAS Visualization Systems Overview". NAS.

- [14] Ellsworth, David; Bryan Green, Chris Henze, Patrick Moran, and Timothy Sandstrom (September/October 2006). "Concurrent Visualization in a Production Supercomputing Environment" (PDF). IEEE Transactions on Visualization and Computer Graphics. 12 (5).

External links

NASA Advanced Supercomputing Resources

- NASA Advanced Supercomputing (NAS) Division homepage

- NAS Columbia Supercomputer homepage

- NAS Pleiades Supercomputer homepage

- NAS Archive and Storage Systems homepage

- NAS hyperwall-2 homepage