Sensitivity and specificity


Sensitivity and specificity are statistical measures of the performance of a binary classification test, also known in statistics as a classification function, that are widely used in medicine:

- Sensitivity (also called the true positive rate, the epidemiological/clinical sensitivity, the recall, or probability of detection[1] in some fields) measures the proportion of actual positives that are correctly identified as such (e.g., the percentage of sick people who are correctly identified as having the condition). It is often confused with the detection limit,[2][3] but the detection limit is calculated from the analytical sensitivity, not from the epidemiological sensitivity.

- Specificity (also called the true negative rate) measures the proportion of actual negatives that are correctly identified as such (e.g., the percentage of healthy people who are correctly identified as not having the condition).

Note that the terms "positive" and "negative" do not refer to the value of the condition of interest, but to its presence or absence; the condition itself could be a disease, so that "positive" might mean "diseased", while "negative" might mean "healthy".

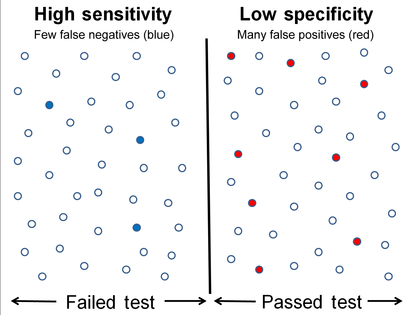

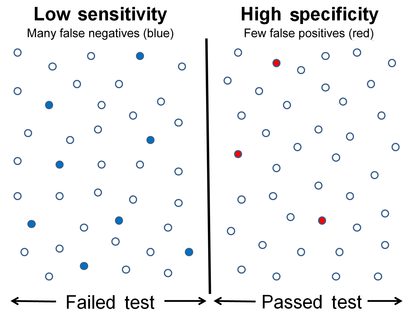

In many tests, including diagnostic medical tests, sensitivity is the extent to which actual positives are not overlooked (so false negatives are few), and specificity is the extent to which actual negatives are classified as such (so false positives are few). Thus, a highly sensitive test rarely overlooks an actual positive (for example, showing "nothing bad" despite something bad existing); a highly specific test rarely registers a positive classification for anything that is not the target of testing (for example, finding one bacterial species and mistaking it for another closely related one that is the true target); and a test that is highly sensitive and highly specific does both, so it "rarely overlooks a thing that it is looking for" and it "rarely mistakes anything else for that thing."

Sensitivity, therefore, quantifies the avoidance of false negatives and specificity does the same for false positives. For any test, there is usually a trade-off between the measures – for instance, in airport security, since testing of passengers is for potential threats to safety, scanners may be set to trigger alarms on low-risk items like belt buckles and keys (low specificity) in order to increase the probability of identifying dangerous objects and minimize the risk of missing objects that do pose a threat (high sensitivity). This trade-off can be represented graphically using a receiver operating characteristic curve. A perfect predictor would be described as 100% sensitive, meaning all sick individuals are correctly identified as sick, and 100% specific, meaning no healthy individuals are incorrectly identified as sick. In reality, however, any non-deterministic predictor will possess a minimum error bound known as the Bayes error rate. The values of sensitivity and specificity are agnostic to the percent of positive cases in the population of interest (as opposed to, for example, precision).
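As a minimal sketch of this trade-off, the example below lowers an alarm threshold and reports the resulting sensitivity and specificity. The scores, labels, and thresholds are invented for illustration and do not describe any real scanner.

```python
# Hypothetical scanner scores: higher means "more suspicious".
# Labels: True = real threat, False = harmless item. All values are invented.
scores = [0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.70, 0.80, 0.90, 0.95]
labels = [False, False, False, True, False, False, True, True, False, True]


def sensitivity_specificity(scores, labels, threshold):
    """Treat score >= threshold as a positive call; return (sensitivity, specificity)."""
    tp = sum(1 for s, y in zip(scores, labels) if y and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if not y and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if not y and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# A lower threshold catches more threats but also raises more false alarms.
for threshold in (0.30, 0.50, 0.75):
    sens, spec = sensitivity_specificity(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  sensitivity={sens:.2f}  specificity={spec:.2f}")
```

Plotting the (1 − specificity, sensitivity) pairs obtained over all thresholds would trace the receiver operating characteristic curve mentioned above.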

The terms "sensitivity" and "specificity" were introduced by the American biostatistician Jacob Yerushalmy in 1947.[4]

Definitions

In the terminology true/false positive/negative, true or false refers to the assigned classification being correct or incorrect, while positive or negative refers to assignment to the positive or the negative category.

Sources: [5][6][7][8][9][10][11][12]

Columns give the predicted condition; rows give the actual condition.

| Total population = P + N | Predicted positive (PP) | Predicted negative (PN) | Informedness, bookmaker informedness (BM) = TPR + TNR − 1 | Prevalence threshold (PT) = (√(TPR × FPR) − FPR) / (TPR − FPR) |
|---|---|---|---|---|
| Actual positive (P) [a] | True positive (TP), hit [b] | False negative (FN), miss, underestimation | True positive rate (TPR), recall, sensitivity (SEN), probability of detection, hit rate, power = TP / P = 1 − FNR | False negative rate (FNR), miss rate, type II error [c] = FN / P = 1 − TPR |
| Actual negative (N) [d] | False positive (FP), false alarm, overestimation | True negative (TN), correct rejection [e] | False positive rate (FPR), probability of false alarm, fall-out, type I error [f] = FP / N = 1 − TNR | True negative rate (TNR), specificity (SPC), selectivity = TN / N = 1 − FPR |
| Prevalence = P / (P + N) | Positive predictive value (PPV), precision = TP / PP = 1 − FDR | False omission rate (FOR) = FN / PN = 1 − NPV | Positive likelihood ratio (LR+) = TPR / FPR | Negative likelihood ratio (LR−) = FNR / TNR |
| Accuracy (ACC) = (TP + TN) / (P + N) | False discovery rate (FDR) = FP / PP = 1 − PPV | Negative predictive value (NPV) = TN / PN = 1 − FOR | Markedness (MK), deltaP (Δp) = PPV + NPV − 1 | Diagnostic odds ratio (DOR) = LR+ / LR− |
| Balanced accuracy (BA) = (TPR + TNR) / 2 | F1 score = 2 PPV × TPR / (PPV + TPR) = 2 TP / (2 TP + FP + FN) | Fowlkes–Mallows index (FM) = √(PPV × TPR) | Matthews correlation coefficient (MCC) = √(TPR × TNR × PPV × NPV) − √(FNR × FPR × FOR × FDR) | Threat score (TS), critical success index (CSI), Jaccard index = TP / (TP + FN + FP) |

- [a] The number of real positive cases in the data

- [b] A test result that correctly indicates the presence of a condition or characteristic

- [c] Type II error: a test result that wrongly indicates that a particular condition or attribute is absent

- [d] The number of real negative cases in the data

- [e] A test result that correctly indicates the absence of a condition or characteristic

- [f] Type I error: a test result that wrongly indicates that a particular condition or attribute is present
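The ratios defined in the table can be computed directly from the four counts. The sketch below is illustrative only: `ConfusionCounts` is a hypothetical helper rather than a library class, the counts are invented, and no guard against empty denominators is included.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from a 2x2 table (hypothetical helper, not a library class)."""
    tp: int  # true positives
    fn: int  # false negatives
    fp: int  # false positives
    tn: int  # true negatives

    @property
    def sensitivity(self) -> float:  # TPR = TP / P
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:  # TNR = TN / N
        return self.tn / (self.tn + self.fp)

    @property
    def ppv(self) -> float:  # precision = TP / PP
        return self.tp / (self.tp + self.fp)

    @property
    def npv(self) -> float:  # NPV = TN / PN
        return self.tn / (self.tn + self.fn)

    @property
    def accuracy(self) -> float:  # (TP + TN) / (P + N)
        return (self.tp + self.tn) / (self.tp + self.fn + self.fp + self.tn)

    @property
    def lr_plus(self) -> float:  # LR+ = TPR / FPR
        return self.sensitivity / (1 - self.specificity)

    @property
    def lr_minus(self) -> float:  # LR- = FNR / TNR
        return (1 - self.sensitivity) / self.specificity

    @property
    def informedness(self) -> float:  # TPR + TNR - 1
        return self.sensitivity + self.specificity - 1


counts = ConfusionCounts(tp=90, fn=10, fp=30, tn=870)  # invented numbers
print(f"sensitivity={counts.sensitivity:.3f}  specificity={counts.specificity:.3f}")
print(f"PPV={counts.ppv:.3f}  NPV={counts.npv:.3f}  LR+={counts.lr_plus:.1f}")
```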

Application to screening study

Imagine a study evaluating a new test that screens people for a disease. Each person taking the test either has or does not have the disease. The test outcome can be positive (classifying the person as having the disease) or negative (classifying the person as not having the disease). The test results for each subject may or may not match the subject's actual status. In that setting:

- True positive: Sick people correctly identified as sick

- False positive: Healthy people incorrectly identified as sick

- True negative: Healthy people correctly identified as healthy

- False negative: Sick people incorrectly identified as healthy

In general, positive = identified and negative = rejected. Therefore:

- True positive = correctly identified

- False positive = incorrectly identified

- True negative = correctly rejected

- False negative = incorrectly rejected
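The four outcomes above can be tallied mechanically from pairs of (actual status, test result). The following is a small sketch with invented subjects; `classify_outcome` is a hypothetical helper name.

```python
from collections import Counter


def classify_outcome(has_condition: bool, test_positive: bool) -> str:
    """Map one subject's true status and test result to TP, FP, TN, or FN."""
    if test_positive:
        return "TP" if has_condition else "FP"
    return "FN" if has_condition else "TN"


# (has_condition, test_positive) for six invented subjects
subjects = [
    (True, True),    # sick, identified as sick
    (True, False),   # sick, identified as healthy
    (False, False),  # healthy, identified as healthy
    (False, True),   # healthy, identified as sick
    (False, False),
    (True, True),
]

tally = Counter(classify_outcome(actual, result) for actual, result in subjects)
print(dict(tally))
```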

Confusion matrix

Consider a group with P positive instances and N negative instances of some condition. The four outcomes can be formulated in a 2×2 contingency table or confusion matrix, as follows:


Columns give the true condition; rows give the predicted condition.

| Total population | Condition positive | Condition negative | Prevalence = Σ Condition positive / Σ Total population | Accuracy (ACC) = (Σ True positive + Σ True negative) / Σ Total population |
|---|---|---|---|---|
| Predicted condition positive | True positive | False positive, Type I error | Positive predictive value (PPV), Precision = Σ True positive / Σ Predicted condition positive | False discovery rate (FDR) = Σ False positive / Σ Predicted condition positive |
| Predicted condition negative | False negative, Type II error | True negative | False omission rate (FOR) = Σ False negative / Σ Predicted condition negative | Negative predictive value (NPV) = Σ True negative / Σ Predicted condition negative |
| | True positive rate (TPR), Recall, Sensitivity (SEN), probability of detection, Power = Σ True positive / Σ Condition positive | False positive rate (FPR), Fall-out, probability of false alarm = Σ False positive / Σ Condition negative | Positive likelihood ratio (LR+) = TPR / FPR | Diagnostic odds ratio (DOR) = LR+ / LR− |
| | False negative rate (FNR), Miss rate = Σ False negative / Σ Condition positive | Specificity (SPC), Selectivity, True negative rate (TNR) = Σ True negative / Σ Condition negative | Negative likelihood ratio (LR−) = FNR / TNR | F1 score = 2 · PPV · TPR / (PPV + TPR) = 2 · Precision · Recall / (Precision + Recall) |
| | | | | Matthews correlation coefficient (MCC) = √(TPR · TNR · PPV · NPV) − √(FNR · FPR · FOR · FDR) |

Sensitivity

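Consider the example of a medical test for diagnosing a disease. Sensitivity refers to the test's ability to correctly detect patients who have the condition.[13] The sensitivity of the test (sometimes also called the detection rate in a clinical setting) is the proportion of people who test positive for the disease among those who have the disease. Mathematically, this can be expressed as:

$$
\begin{aligned}
\text{sensitivity} &= \frac{\text{number of true positives}}{\text{number of true positives} + \text{number of false negatives}} \\
&= \frac{\text{number of true positives}}{\text{total number of sick individuals in population}} \\
&= \text{probability of a positive test given that the patient has the disease}
\end{aligned}
$$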

A negative result in a test with high sensitivity is useful for ruling out disease.[13] A high sensitivity test is reliable when its result is negative, since it rarely misdiagnoses those who have the disease. A test with 100% sensitivity will recognize all patients with the disease by testing positive. A negative test result would definitively rule out presence of the disease in a patient.

A positive result in a test with high sensitivity is not necessarily useful for ruling in disease. Suppose a 'bogus' test kit is designed to always give a positive reading. When used on diseased patients, all patients test positive, giving the test 100% sensitivity. However, sensitivity by definition does not take into account false positives. The bogus test also returns positive on all healthy patients, giving it a false positive rate of 100%, rendering it useless for detecting or "ruling in" the disease.
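The 'bogus' test kit can be written out in a few lines. This is only an illustration of the argument above; the patient identifiers are placeholders.

```python
def bogus_test(_patient) -> bool:
    """A useless test kit that reports 'positive' for everyone."""
    return True


diseased = ["d1", "d2", "d3", "d4"]      # placeholder patients with the disease
healthy = ["h1", "h2", "h3", "h4", "h5"]  # placeholder patients without it

# Sensitivity only looks at the diseased group, so it comes out perfect.
sensitivity = sum(bogus_test(p) for p in diseased) / len(diseased)

# The healthy group reveals the problem: every one of them tests positive.
false_positive_rate = sum(bogus_test(p) for p in healthy) / len(healthy)
specificity = 1 - false_positive_rate

print(f"sensitivity={sensitivity:.0%}  specificity={specificity:.0%}")
```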

Sensitivity is not the same as the precision or positive predictive value (ratio of true positives to combined true and false positives), which is as much a statement about the proportion of actual positives in the population being tested as it is about the test.

The calculation of sensitivity does not take into account indeterminate test results. If a test cannot be repeated, indeterminate samples either should be excluded from the analysis (the number of exclusions should be stated when quoting sensitivity) or can be treated as false negatives (which gives the worst-case value for sensitivity and may therefore underestimate it).
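The two ways of handling indeterminate results can be compared side by side. The sketch below uses invented counts; `sensitivity_bounds` is a hypothetical helper name.

```python
def sensitivity_bounds(tp: int, fn: int, indeterminate: int):
    """Return (sensitivity with indeterminates excluded, worst-case sensitivity).

    `indeterminate` counts inconclusive results among people who have the condition.
    """
    excluded = tp / (tp + fn)                    # indeterminate samples dropped
    worst_case = tp / (tp + fn + indeterminate)  # indeterminates counted as false negatives
    return excluded, worst_case


# Invented example: 45 detected, 5 missed, 10 inconclusive
excluded, worst_case = sensitivity_bounds(tp=45, fn=5, indeterminate=10)
print(f"excluding indeterminates: {excluded:.0%} (10 exclusions)")
print(f"worst case:               {worst_case:.0%}")
```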

Specificity

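Consider the example of a medical test for diagnosing a disease. Specificity relates to the test's ability to correctly reject healthy patients who do not have the condition. The specificity of a test is the proportion of people known not to have the disease who test negative for it. Mathematically, this can also be written as:

$$
\begin{aligned}
\text{specificity} &= \frac{\text{number of true negatives}}{\text{number of true negatives} + \text{number of false positives}} \\
&= \frac{\text{number of true negatives}}{\text{total number of well individuals in population}} \\
&= \text{probability of a negative test given that the patient is well}
\end{aligned}
$$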

A positive result in a test with high specificity is useful for ruling in disease. The test rarely gives positive results in healthy patients. A positive result signifies a high probability of the presence of disease.[14]

A test with a higher specificity has a lower type I error rate.

Graphical illustration

- High sensitivity and low specificity

- Low sensitivity and high specificity

Medical examples

In medical diagnosis, test sensitivity is the ability of a test to correctly identify those with the disease (true positive rate), whereas test specificity is the ability of the test to correctly identify those without the disease (true negative rate). If 100 patients known to have a disease were tested, and 43 test positive, then the test has 43% sensitivity. If 100 people with no disease are tested and 96 return a completely negative result, then the test has 96% specificity. Sensitivity and specificity are prevalence-independent test characteristics, as their values are intrinsic to the test and do not depend on the disease prevalence in the population of interest.[15] Positive and negative predictive values, but not sensitivity or specificity, are influenced by the prevalence of disease in the population being tested. These concepts are illustrated graphically in the Bayesian clinical diagnostic model applet, which shows the positive and negative predictive values as a function of the prevalence, the sensitivity, and the specificity.
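The dependence of the predictive values on prevalence follows from Bayes' theorem. As a rough sketch, the function below reuses the 43% sensitivity and 96% specificity from the paragraph above; the three prevalence values are assumptions chosen only for illustration.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """Return (PPV, NPV) for a test applied at the given prevalence (Bayes' theorem)."""
    tp = sensitivity * prevalence              # expected fraction of true positives
    fn = (1 - sensitivity) * prevalence        # expected fraction of false negatives
    fp = (1 - specificity) * (1 - prevalence)  # expected fraction of false positives
    tn = specificity * (1 - prevalence)        # expected fraction of true negatives
    return tp / (tp + fp), tn / (tn + fn)


# Same test, three assumed prevalences: sensitivity and specificity stay fixed,
# but PPV and NPV shift.
for prevalence in (0.01, 0.10, 0.50):
    ppv, npv = predictive_values(0.43, 0.96, prevalence)
    print(f"prevalence={prevalence:.0%}  PPV={ppv:.1%}  NPV={npv:.1%}")
```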

Prevalence Threshold

The relationship between a screening test's positive predictive value and its target prevalence is proportional, though not linear in all but a special case. In consequence, there is a point of local extrema and maximum curvature, defined only as a function of the sensitivity and specificity, beyond which the rate of change of a test's positive predictive value drops at a differential pace relative to the disease prevalence. Using differential equations, this point was first defined by Balayla et al.[16] and is termed the prevalence threshold ($\phi_e$). The equation for the prevalence threshold is given by the following formula, where a = sensitivity and b = specificity:

$$
\phi_e = \frac{\sqrt{a(1-b)} + b - 1}{a + b - 1}
$$

Where this point lies in the screening curve has critical implications for clinicians and the interpretation of positive screening tests in real time.

Misconceptions

It is often claimed that a highly specific test is effective at ruling in a disease when positive, while a highly sensitive test is deemed effective at ruling out a disease when negative.[17][18] This has led to the widely used mnemonics SPPIN and SNNOUT, according to which a highly specific test, when positive, rules in disease (SP-P-IN), and a highly 'sensitive' test, when negative rules out disease (SN-N-OUT). Both rules of thumb are, however, inferentially misleading, as the diagnostic power of any test is determined by both its sensitivity and its specificity.[19][20][21]

The trade-off between specificity and sensitivity is explored in ROC analysis as a trade-off between TPR and FPR (that is, recall and fall-out).[22] Giving them equal weight optimizes informedness = specificity + sensitivity − 1 = TPR − FPR, the magnitude of which gives the probability of an informed decision between the two classes (> 0 represents appropriate use of information, 0 represents chance-level performance, < 0 represents perverse use of information).[23]
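A short numerical sketch shows why specificity alone does not determine how well a positive result rules in disease. The two tests below are invented; the positive likelihood ratio (LR+ = TPR / FPR) and informedness follow the definitions given earlier.

```python
def positive_likelihood_ratio(sensitivity: float, specificity: float) -> float:
    """LR+ = TPR / FPR = sensitivity / (1 - specificity)."""
    return sensitivity / (1 - specificity)


def informedness(sensitivity: float, specificity: float) -> float:
    """Informedness = sensitivity + specificity - 1 = TPR - FPR."""
    return sensitivity + specificity - 1


# Invented tests: (sensitivity, specificity)
tests = {
    "more specific, insensitive": (0.10, 0.95),
    "less specific, sensitive": (0.90, 0.90),
}

for name, (sens, spec) in tests.items():
    print(f"{name}: LR+={positive_likelihood_ratio(sens, spec):.1f}  "
          f"informedness={informedness(sens, spec):.2f}")
```

In this invented comparison the more specific test has the smaller LR+, so its positive result shifts the odds of disease less than a positive result from the less specific test.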

Sensitivity index

The sensitivity index or d' (pronounced 'dee-prime') is a statistic used in signal detection theory. It provides the separation between the means of the signal and the noise distributions, compared against the standard deviation of the noise distribution. For normally distributed signal and noise with means and standard deviations $\mu_S$ and $\sigma_S$, and $\mu_N$ and $\sigma_N$, respectively, d' is defined as:

$$
d' = \frac{\mu_S - \mu_N}{\sigma_N}
$$

An estimate of d' can also be found from measurements of the hit rate and false-alarm rate. It is calculated as:

- d' = Z(hit rate) – Z(false alarm rate),[25]

where function Z(p), p ∈ [0,1], is the inverse of the cumulative Gaussian distribution.

d' is a dimensionless statistic. A higher d' indicates that the signal can be more readily detected.
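The estimate d' = Z(hit rate) − Z(false alarm rate) can be computed with the Python standard library, whose `statistics.NormalDist().inv_cdf` is the inverse cumulative Gaussian. This is a minimal sketch; the rates in the usage line are invented, and rates of exactly 0 or 1 are not handled (`inv_cdf` raises an error for them).

```python
from statistics import NormalDist


def d_prime(hit_rate: float, false_alarm_rate: float) -> float:
    """Estimate d' as Z(hit rate) - Z(false alarm rate).

    Both rates must lie strictly between 0 and 1.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)


print(f"{d_prime(0.84, 0.16):.2f}")  # well-separated signal and noise
print(f"{d_prime(0.50, 0.50):.2f}")  # hits no more likely than false alarms
```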

Worked example

A diagnostic test with sensitivity 67% and specificity 91% is applied to 2030 people to look for a disorder with a population prevalence of 1.48%.

Columns give the fecal occult blood screen test outcome; rows give the patients' bowel cancer status (as confirmed on endoscopy).

| Total population (pop.) = 2030 | Test outcome positive | Test outcome negative | Accuracy (ACC) = (TP + TN) / pop. = (20 + 1820) / 2030 ≈ 90.64% | F1 score = 2 × precision × recall / (precision + recall) ≈ 0.174 |
|---|---|---|---|---|
| Actual condition positive (AP) = 30 (2030 × 1.48%) | True positive (TP) = 20 (2030 × 1.48% × 67%) | False negative (FN) = 10 (2030 × 1.48% × (100% − 67%)) | True positive rate (TPR), recall, sensitivity = TP / AP = 20 / 30 ≈ 66.7% | False negative rate (FNR), miss rate = FN / AP = 10 / 30 ≈ 33.3% |
| Actual condition negative (AN) = 2000 (2030 × (100% − 1.48%)) | False positive (FP) = 180 (2030 × (100% − 1.48%) × (100% − 91%)) | True negative (TN) = 1820 (2030 × (100% − 1.48%) × 91%) | False positive rate (FPR), fall-out, probability of false alarm = FP / AN = 180 / 2000 = 9.0% | Specificity, selectivity, true negative rate (TNR) = TN / AN = 1820 / 2000 = 91% |
| Prevalence = AP / pop. = 30 / 2030 ≈ 1.48% | Positive predictive value (PPV), precision = TP / (TP + FP) = 20 / (20 + 180) = 10% | False omission rate (FOR) = FN / (FN + TN) = 10 / (10 + 1820) ≈ 0.55% | Positive likelihood ratio (LR+) = TPR / FPR = (20 / 30) / (180 / 2000) ≈ 7.41 | Negative likelihood ratio (LR−) = FNR / TNR = (10 / 30) / (1820 / 2000) ≈ 0.366 |
| | False discovery rate (FDR) = FP / (TP + FP) = 180 / (20 + 180) = 90.0% | Negative predictive value (NPV) = TN / (FN + TN) = 1820 / (10 + 1820) ≈ 99.45% | Diagnostic odds ratio (DOR) = LR+ / LR− ≈ 20.2 | |

Related calculations

- False positive rate (α) = type I error = 1 − specificity = FP / (FP + TN) = 180 / (180 + 1820) = 9%

- False negative rate (β) = type II error = 1 − sensitivity = FN / (TP + FN) = 10 / (20 + 10) ≈ 33%

- Power = sensitivity = 1 − β

- Positive likelihood ratio = sensitivity / (1 − specificity) ≈ 0.67 / (1 − 0.91) ≈ 7.4

- Negative likelihood ratio = (1 − sensitivity) / specificity ≈ (1 − 0.67) / 0.91 ≈ 0.37

- Prevalence threshold = (√(sensitivity × (1 − specificity)) − (1 − specificity)) / (sensitivity − (1 − specificity)) ≈ 0.2686 ≈ 26.9%

This hypothetical screening test (fecal occult blood test) correctly identified two-thirds (66.7%) of patients with colorectal cancer.[a] Unfortunately, factoring in prevalence rates reveals that most positive results from this hypothetical test are false positives (FDR = 90%), so it does not reliably identify colorectal cancer in the overall population of asymptomatic people (PPV = 10%).

On the other hand, this hypothetical test demonstrates very accurate detection of cancer-free individuals (NPV ≈ 99.5%). Therefore, when used for routine colorectal cancer screening with asymptomatic adults, a negative result supplies important data for the patient and doctor, such as ruling out cancer as the cause of gastrointestinal symptoms or reassuring patients worried about developing colorectal cancer.
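The counts in the worked example can be rebuilt from the four stated inputs. The sketch below rounds expected counts to whole people, which is an assumption about how the example's figures were obtained.

```python
population = 2030
prevalence = 0.0148
sensitivity = 0.67
specificity = 0.91

# Split the population by true status, rounding to whole people.
actual_positive = round(population * prevalence)  # 30.044 rounds to 30
actual_negative = population - actual_positive

# Split each group by test outcome.
tp = round(actual_positive * sensitivity)  # 20.1 rounds to 20
fn = actual_positive - tp
tn = round(actual_negative * specificity)
fp = actual_negative - tn

ppv = tp / (tp + fp)  # share of positive results that are true
npv = tn / (tn + fn)  # share of negative results that are true

print(f"TP={tp} FN={fn} FP={fp} TN={tn}")
print(f"PPV={ppv:.1%}  NPV={npv:.2%}")
```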

Estimation of errors in quoted sensitivity or specificity

Sensitivity and specificity values alone may be highly misleading. The 'worst-case' sensitivity or specificity must be calculated in order to avoid reliance on experiments with few results. For example, a particular test may easily show 100% sensitivity if tested against the gold standard four times, but a single additional test against the gold standard that gave a poor result would imply a sensitivity of only 80%. A common way to express this uncertainty is to state the binomial proportion confidence interval, often calculated using a Wilson score interval.

Confidence intervals for sensitivity and specificity can be calculated, giving the range of values within which the correct value lies at a given confidence level (e.g., 95%).[28]
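As an illustration, the Wilson score interval for a proportion such as sensitivity can be computed as follows. This is a sketch using the standard Wilson formula with z = 1.96 for an approximate 95% level; `wilson_interval` is a hypothetical function name.

```python
from math import sqrt


def wilson_interval(successes: int, trials: int, z: float = 1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 gives roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z * z / trials
    centre = (p + z * z / (2 * trials)) / denom
    half_width = z * sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    return centre - half_width, centre + half_width


# Four true positives out of four diseased subjects: observed sensitivity is 100%,
# but the interval shows how little four results can establish.
low, high = wilson_interval(4, 4)
print(f"observed 4/4, approximate 95% interval: {low:.2f} to {high:.2f}")
```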

Terminology in information retrieval

In information retrieval, the positive predictive value is called precision, and sensitivity is called recall. Unlike the specificity vs. sensitivity trade-off, these measures are both independent of the number of true negatives, which is generally unknown and much larger than the actual numbers of relevant and retrieved documents. This assumption of very large numbers of true negatives versus positives is rare in other applications.[23]
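The independence from true negatives is easy to check numerically. In the sketch below (all counts invented), the number of true negatives grows by several orders of magnitude while precision and recall stay fixed; only specificity moves.

```python
def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    return tn / (tn + fp)


# Invented retrieval result: 40 relevant retrieved, 10 irrelevant retrieved,
# 20 relevant missed. Only the count of irrelevant, unretrieved documents varies.
tp, fp, fn = 40, 10, 20
for tn in (100, 1_000_000):
    print(f"TN={tn:>9,}  precision={precision(tp, fp):.2f}  "
          f"recall={recall(tp, fn):.2f}  specificity={specificity(tn, fp):.5f}")
```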

The F-score can be used as a single measure of performance of the test for the positive class. The F-score is the harmonic mean of precision and recall:

$$
F = 2 \times \frac{\text{precision} \times \text{recall}}{\text{precision} + \text{recall}}
$$

In the traditional language of statistical hypothesis testing, the sensitivity of a test is called the statistical power of the test, although the word power in that context has a more general usage that is not applicable in the present context. A sensitive test will have fewer Type II errors.


References

- ^ "Detector Performance Analysis Using ROC Curves – MATLAB & Simulink Example". www.mathworks.com. Retrieved 11 August 2016.

- ^ Lozano, Diego. "Difference Between Analytical Sensitivity and Detection Limit". American Journal of Clinical Pathology.

- ^ Armbruster, David (2008). "Limit of Blank, Limit of Detection and Limit of Quantitation". Clinical Biochemical Reviews. 29 (1): 49–52. PMC 2556583. PMID 18852857.

- ^ Yerushalmy J (1947). "Statistical problems in assessing methods of medical diagnosis with special reference to x-ray techniques". Public Health Reports. 62 (2): 1432–39. doi:10.2307/4586294. JSTOR 4586294. PMID 20340527.

- ^ Fawcett, Tom (2006). "An Introduction to ROC Analysis" (PDF). Pattern Recognition Letters. 27 (8): 861–874. doi:10.1016/j.patrec.2005.10.010. S2CID 2027090.

- ^ Provost, Foster; Tom Fawcett (2013-08-01). "Data Science for Business: What You Need to Know about Data Mining and Data-Analytic Thinking". O'Reilly Media, Inc.

- ^ Powers, David M. W. (2011). "Evaluation: From Precision, Recall and F-Measure to ROC, Informedness, Markedness & Correlation". Journal of Machine Learning Technologies. 2 (1): 37–63.

- ^ Ting, Kai Ming (2011). Sammut, Claude; Webb, Geoffrey I. (eds.). Encyclopedia of machine learning. Springer. doi:10.1007/978-0-387-30164-8. ISBN 978-0-387-30164-8.

- ^ Brooks, Harold; Brown, Barb; Ebert, Beth; Ferro, Chris; Jolliffe, Ian; Koh, Tieh-Yong; Roebber, Paul; Stephenson, David (2015-01-26). "WWRP/WGNE Joint Working Group on Forecast Verification Research". Collaboration for Australian Weather and Climate Research. World Meteorological Organisation. Retrieved 2019-07-17.

- ^ Chicco D, Jurman G (January 2020). "The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation". BMC Genomics. 21 (1): 6-1–6-13. doi:10.1186/s12864-019-6413-7. PMC 6941312. PMID 31898477.

- ^ Chicco D, Toetsch N, Jurman G (February 2021). "The Matthews correlation coefficient (MCC) is more reliable than balanced accuracy, bookmaker informedness, and markedness in two-class confusion matrix evaluation". BioData Mining. 14 (13): 13. doi:10.1186/s13040-021-00244-z. PMC 7863449. PMID 33541410.

- ^ Tharwat A. (August 2018). "Classification assessment methods". Applied Computing and Informatics. 17: 168–192. doi:10.1016/j.aci.2018.08.003.

- ^ a b Altman DG, Bland JM (June 1994). "Diagnostic tests. 1: Sensitivity and specificity". BMJ. 308 (6943): 1552. doi:10.1136/bmj.308.6943.1552. PMC 2540489. PMID 8019315.

- ^ "SpPins and SnNouts". Centre for Evidence Based Medicine (CEBM). Retrieved 26 December 2013.

- ^ Mangrulkar, Rajesh. "Diagnostic Reasoning I and II". Retrieved 24 January 2012.

- ^ Balayla, Jacques. "Prevalence Threshold and the Geometry of Screening Curves." arXiv preprint arXiv:2006.00398 (2020).

- ^ "Evidence-Based Diagnosis". Michigan State University. Archived from the original on 2013-07-06. Retrieved 2013-08-23.

- ^ "Sensitivity and Specificity". Emory University Medical School Evidence Based Medicine course.

- ^ Baron JA (Apr–Jun 1994). "Too bad it isn't true". Medical Decision Making. 14 (2): 107. doi:10.1177/0272989X9401400202. PMID 8028462.

- ^ Boyko EJ (Apr–Jun 1994). "Ruling out or ruling in disease with the most sensitive or specific diagnostic test: short cut or wrong turn?". Medical Decision Making. 14 (2): 175–9. doi:10.1177/0272989X9401400210. PMID 8028470.

- ^ Pewsner D, Battaglia M, Minder C, Marx A, Bucher HC, Egger M (July 2004). "Ruling a diagnosis in or out with "SpPIn" and "SnNOut": a note of caution". BMJ. 329 (7459): 209–13. doi:10.1136/bmj.329.7459.209. PMC 487735. PMID 15271832.

- ^ Fawcett, Tom (2006). "An Introduction to ROC Analysis" (PDF). Pattern Recognition Letters. 27 (8): 861–874. doi:10.1016/j.patrec.2005.10.010. S2CID 2027090.

- ^ a b Powers, David M. W. (2011). "Evaluation: From Precision, Recall and F-Measure to ROC, Informedness, Markedness & Correlation". Journal of Machine Learning Technologies. 2 (1): 37–63.

- ^ Gale SD, Perkel DJ (January 2010). "A basal ganglia pathway drives selective auditory responses in songbird dopaminergic neurons via disinhibition". The Journal of Neuroscience. 30 (3): 1027–37. doi:10.1523/JNEUROSCI.3585-09.2010. PMC 2824341. PMID 20089911.

- ^ Macmillan, Neil A.; Creelman, C. Douglas (15 September 2004). Detection Theory: A User's Guide. Psychology Press. p. 7. ISBN 978-1-4106-1114-7.

- ^ Lin, Jennifer S.; Piper, Margaret A.; Perdue, Leslie A.; Rutter, Carolyn M.; Webber, Elizabeth M.; O'Connor, Elizabeth; Smith, Ning; Whitlock, Evelyn P. (21 June 2016). "Screening for Colorectal Cancer". JAMA. 315 (23): 2576–2594. doi:10.1001/jama.2016.3332. ISSN 0098-7484. PMID 27305422.

- ^ Bénard, Florence; Barkun, Alan N.; Martel, Myriam; Renteln, Daniel von (7 January 2018). "Systematic review of colorectal cancer screening guidelines for average-risk adults: Summarizing the current global recommendations". World Journal of Gastroenterology. 24 (1): 124–138. doi:10.3748/wjg.v24.i1.124. PMC 5757117. PMID 29358889.

- ^ "Diagnostic test online calculator calculates sensitivity, specificity, likelihood ratios and predictive values from a 2x2 table – calculator of confidence intervals for predictive parameters". medcalc.org.

Further reading

- Altman DG, Bland JM (June 1994). "Diagnostic tests. 1: Sensitivity and specificity". BMJ. 308 (6943): 1552. doi:10.1136/bmj.308.6943.1552. PMC 2540489. PMID 8019315.

- Loong TW (September 2003). "Understanding sensitivity and specificity with the right side of the brain". BMJ. 327 (7417): 716–9. doi:10.1136/bmj.327.7417.716. PMC 200804. PMID 14512479.

External links

- UIC Calculator

- Vassar College's Sensitivity/Specificity Calculator

- MedCalc Free Online Calculator

- Bayesian clinical diagnostic model applet


![{\displaystyle {\begin{aligned}{\text{sensitivity}}&={\frac {\text{number of true positives}}{{\text{number of true positives}}+{\text{number of false negatives}}}}\\[8pt]&={\frac {\text{number of true positives}}{\text{total number of sick individuals in population}}}\\[8pt]&={\text{probability of a positive test given that the patient has the disease}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/12ec58e26222c7c528150ce69c86e2aa91ddc4c2)

![{\displaystyle {\begin{aligned}{\text{specificity}}&={\frac {\text{number of true negatives}}{{\text{number of true negatives}}+{\text{number of false positives}}}}\\[8pt]&={\frac {\text{number of true negatives}}{\text{total number of well individuals in population}}}\\[8pt]&={\text{probability of a negative test given that the patient is well}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d48cee2cea0745bcc29d228f8c2783e4cb34547c)