Dynamic array

In computer science, a dynamic array, growable array, resizable array, dynamic table, mutable array, or array list is a random access, variable-size list data structure that allows elements to be added or removed. It is supplied with standard libraries in many modern mainstream programming languages.

A dynamic array is not the same thing as a dynamically allocated array, which is an array whose size is fixed when the array is allocated, although a dynamic array may use such a fixed-size array as a back end.[1]

Bounded-size dynamic arrays and capacity

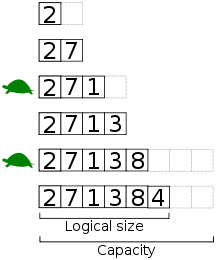

The simplest dynamic array is constructed by allocating a fixed-size array and then dividing it into two parts: the first stores the elements of the dynamic array and the second is reserved, or unused. We can then add or remove elements at the end of the dynamic array in constant time by using the reserved space, until this space is completely consumed. The number of elements used by the dynamic array contents is its logical size or size, while the size of the underlying array is called the dynamic array's capacity or physical size, which is the maximum possible size without relocating data.[2]

In applications where the logical size is bounded, the fixed-size data structure suffices. This may be short-sighted, as more space may be needed later. A philosophical programmer may prefer to write the code to make every array capable of resizing from the outset, then return to using fixed-size arrays during program optimization. Resizing the underlying array is an expensive task, typically involving copying the entire contents of the array.

Geometric expansion and amortized cost

To avoid incurring the cost of resizing many times, dynamic arrays resize by a large amount, such as doubling in size, and use the reserved space for future expansion. The operation of adding an element to the end might work as follows:

function insertEnd(dynarray a, element e)

if (a.size = a.capacity)

// resize a to twice its current capacity:

a.capacity ← a.capacity * 2

// (copy the contents to the new memory location here)

a[a.size] ← e

a.size ← a.size + 1

As n elements are inserted, the capacities form a geometric progression. Expanding the array by any constant proportion a ensures that inserting n elements takes O(n) time overall, meaning that each insertion takes amortized constant time. Many dynamic arrays also deallocate some of the underlying storage if its size drops below a certain threshold, such as 30% of the capacity. This threshold must be strictly smaller than 1/a in order to provide hysteresis (provide a stable band to avoiding repeatedly growing and shrinking) and support mixed sequences of insertions and removals with amortized constant cost.

Dynamic arrays are a common example when teaching amortized analysis.[3][4]

Growth factor

The growth factor for the dynamic array depends on several factors including a space-time trade-off and algorithms used in the memory allocator itself. For growth factor a, the average time per insertion operation is about a/(a−1), while the number of wasted cells is bounded above by (a−1)n. If memory allocator uses a first-fit allocation algorithm, then growth factor values such as a=2 can cause dynamic array expansion to run out of memory even though a significant amount of memory may still be available.[5] There have been various discussions on ideal growth factor values, including proposals for the Golden Ratio as well as the value 1.5.[6] Many textbooks, however, use a = 2 for simplicity and analysis purposes.[3][4]

Below are growth factors used by several popular implementations:

| Implementation | Growth factor (a) |

|---|---|

| Java ArrayList[1] | 1.5 (3/2) |

| Python PyListObject[7] | 1.125 (9/8) |

| Microsoft Visual C++ 2013[8] | 1.5 (3/2) |

| G++ 5.2.0[5] | 2 |

| Clang 3.6[5] | 2 |

| Facebook folly/FBVector[9] | 1.5 (3/2) |

Performance

| Peek (index) |

Mutate (insert or delete) at … | Excess space, average | |||

|---|---|---|---|---|---|

| Beginning | End | Middle | |||

| Linked list | Θ(n) | Θ(1) | Θ(1), known end element; Θ(n), unknown end element |

Θ(n) | Θ(n) |

| Array | Θ(1) | — | — | — | 0 |

| Dynamic array | Θ(1) | Θ(n) | Θ(1) amortized | Θ(n) | Θ(n)[10] |

| Balanced tree | Θ(log n) | Θ(log n) | Θ(log n) | Θ(log n) | Θ(n) |

| Random-access list | Θ(log n)[11] | Θ(1) | —[11] | —[11] | Θ(n) |

| Hashed array tree | Θ(1) | Θ(n) | Θ(1) amortized | Θ(n) | Θ(√n) |

The dynamic array has performance similar to an array, with the addition of new operations to add and remove elements:

- Getting or setting the value at a particular index (constant time)

- Iterating over the elements in order (linear time, good cache performance)

- Inserting or deleting an element in the middle of the array (linear time)

- Inserting or deleting an element at the end of the array (constant amortized time)

Dynamic arrays benefit from many of the advantages of arrays, including good locality of reference and data cache utilization, compactness (low memory use), and random access. They usually have only a small fixed additional overhead for storing information about the size and capacity. This makes dynamic arrays an attractive tool for building cache-friendly data structures. However, in languages like Python or Java that enforce reference semantics, the dynamic array generally will not store the actual data, but rather it will store references to the data that resides in other areas of memory. In this case, accessing items in the array sequentially will actually involve accessing multiple non-contiguous areas of memory, so the many advantages of the cache-friendliness of this data structure are lost.

Compared to linked lists, dynamic arrays have faster indexing (constant time versus linear time) and typically faster iteration due to improved locality of reference; however, dynamic arrays require linear time to insert or delete at an arbitrary location, since all following elements must be moved, while linked lists can do this in constant time. This disadvantage is mitigated by the gap buffer and tiered vector variants discussed under Variants below. Also, in a highly fragmented memory region, it may be expensive or impossible to find contiguous space for a large dynamic array, whereas linked lists do not require the whole data structure to be stored contiguously.

A balanced tree can store a list while providing all operations of both dynamic arrays and linked lists reasonably efficiently, but both insertion at the end and iteration over the list are slower than for a dynamic array, in theory and in practice, due to non-contiguous storage and tree traversal/manipulation overhead.

Variants

Gap buffers are similar to dynamic arrays but allow efficient insertion and deletion operations clustered near the same arbitrary location. Some deque implementations use array deques, which allow amortized constant time insertion/removal at both ends, instead of just one end.

Goodrich[12] presented a dynamic array algorithm called Tiered Vectors that provided O(n1/2) performance for order preserving insertions or deletions from the middle of the array.

Hashed Array Tree (HAT) is a dynamic array algorithm published by Sitarski in 1996.[13] Hashed Array Tree wastes order n1/2 amount of storage space, where n is the number of elements in the array. The algorithm has O(1) amortized performance when appending a series of objects to the end of a Hashed Array Tree.

In a 1999 paper,[14] Brodnik et al. describe a tiered dynamic array data structure, which wastes only n1/2 space for n elements at any point in time, and they prove a lower bound showing that any dynamic array must waste this much space if the operations are to remain amortized constant time. Additionally, they present a variant where growing and shrinking the buffer has not only amortized but worst-case constant time.

Bagwell (2002)[15] presented the VList algorithm, which can be adapted to implement a dynamic array.

Language support

C++'s std::vector is an implementation of dynamic arrays, as are the ArrayList[16] class supplied with the Java API and the .NET Framework.[17] The generic List<> class supplied with version 2.0 of the .NET Framework is also implemented with dynamic arrays. Smalltalk's OrderedCollection is a dynamic array with dynamic start and end-index, making the removal of the first element also O(1). Python's list datatype implementation is a dynamic array. Delphi and D implement dynamic arrays at the language's core. Ada's Ada.Containers.Vectors generic package provides dynamic array implementation for a given subtype. Many scripting languages such as Perl and Ruby offer dynamic arrays as a built-in primitive data type. Several cross-platform frameworks provide dynamic array implementations for C, including CFArray and CFMutableArray in Core Foundation, and GArray and GPtrArray in GLib.

References

- ^ a b See, for example, the source code of java.util.ArrayList class from OpenJDK 6.

- ^ Lambert, Kenneth Alfred (2009), "Physical size and logical size", Fundamentals of Python: From First Programs Through Data Structures, Cengage Learning, p. 510, ISBN 1423902181

- ^ a b Goodrich, Michael T.; Tamassia, Roberto (2002), "1.5.2 Analyzing an Extendable Array Implementation", Algorithm Design: Foundations, Analysis and Internet Examples, Wiley, pp. 39–41.

- ^ a b Cormen, Thomas H.; Leiserson, Charles E.; Rivest, Ronald L.; Stein, Clifford (2001) [1990]. "17.4 Dynamic tables". Introduction to Algorithms (2nd ed.). MIT Press and McGraw-Hill. pp. 416–424. ISBN 0-262-03293-7.

- ^ a b c "C++ STL vector: definition, growth factor, member functions". Retrieved 2015-08-05.

- ^ "vector growth factor of 1.5". comp.lang.c++.moderated. Google Groups.

- ^ List object implementation from python.org, retrieved 2011-09-27.

- ^ Brais, Hadi. "Dissecting the C++ STL Vector: Part 3 - Capacity & Size". Micromysteries. Retrieved 2015-08-05.

- ^ "facebook/folly". GitHub. Retrieved 2015-08-05.

- ^ Brodnik, Andrej; Carlsson, Svante; Sedgewick, Robert; Munro, JI; Demaine, ED (1999), Resizable Arrays in Optimal Time and Space (Technical Report CS-99-09) (PDF), Department of Computer Science, University of Waterloo

- ^ a b c Chris Okasaki (1995). "Purely Functional Random-Access Lists". Proceedings of the Seventh International Conference on Functional Programming Languages and Computer Architecture: 86–95. doi:10.1145/224164.224187.

- ^ Goodrich, Michael T.; Kloss II, John G. (1999), "Tiered Vectors: Efficient Dynamic Arrays for Rank-Based Sequences", Workshop on Algorithms and Data Structures, Lecture Notes in Computer Science, 1663: 205–216, doi:10.1007/3-540-48447-7_21, ISBN 978-3-540-66279-2

- ^ Sitarski, Edward (September 1996), "HATs: Hashed array trees", Algorithm Alley, Dr. Dobb's Journal, 21 (11)

- ^ Cite error: The named reference

brodnikwas invoked but never defined (see the help page). - ^ Bagwell, Phil (2002), Fast Functional Lists, Hash-Lists, Deques and Variable Length Arrays, EPFL

- ^ Javadoc on

ArrayList - ^ ArrayList Class

External links

- NIST Dictionary of Algorithms and Data Structures: Dynamic array

- VPOOL - C language implementation of dynamic array.

- CollectionSpy — A Java profiler with explicit support for debugging ArrayList- and Vector-related issues.

- Open Data Structures - Chapter 2 - Array-Based Lists