Information technology


Information technology (IT) is the use of computers to create, process, store, retrieve, and exchange all kinds of data[1] and information. IT forms part of information and communications technology (ICT).[2] An information technology system (IT system) is generally an information system, a communications system, or, more specifically speaking, a computer system — including all hardware, software, and peripheral equipment — operated by a limited group of IT users.

Although humans have been storing, retrieving, manipulating, and communicating information since the earliest writing systems were developed,[3] the term information technology in its modern sense first appeared in a 1958 article published in the Harvard Business Review; authors Harold J. Leavitt and Thomas L. Whisler commented that "the new technology does not yet have a single established name. We shall call it information technology (IT)."[4] Their definition consists of three categories: techniques for processing, the application of statistical and mathematical methods to decision-making, and the simulation of higher-order thinking through computer programs.[4]

The term is commonly used as a synonym for computers and computer networks, but it also encompasses other information distribution technologies such as television and telephones. Several products or services within an economy are associated with information technology, including computer hardware, software, electronics, semiconductors, internet, telecom equipment, and e-commerce.[5][a]

Based on the storage and processing technologies employed, it is possible to distinguish four distinct phases of IT development: pre-mechanical (3000 BC — 1450 AD), mechanical (1450—1840), electromechanical (1840—1940), and electronic (1940 to present).[3]

Information technology is also a branch of computer science, which can be defined as the overall study of procedure, structure, and the processing of various types of data. As the field has evolved worldwide, its priority and importance have grown, which has led to the introduction of computer science-related courses in K-12 education.

History of Computer Technology

Ideas of computer science were first discussed before the 1950s at the Massachusetts Institute of Technology (MIT) and Harvard University, where researchers began thinking about computer circuits and numerical calculations. As time went on, the field of information technology and computer science became more complex and was able to handle the processing of more data. Scholarly articles began to be published by different organizations.[7]

In early computing, Alan Turing, J. Presper Eckert, and John Mauchly are considered some of the major pioneers of computer technology in the mid-1900s. Most of their efforts were focused on designing the first digital computer. Topics such as artificial intelligence also began to be raised, as Turing began to question the technology of the period.[8]

Devices have been used to aid computation for thousands of years, probably initially in the form of a tally stick.[9] The Antikythera mechanism, dating from about the beginning of the first century BC, is generally considered to be the earliest known mechanical analog computer, and the earliest known geared mechanism.[10] Comparable geared devices did not emerge in Europe until the 16th century, and it was not until 1645 that the first mechanical calculator capable of performing the four basic arithmetical operations was developed.[11]

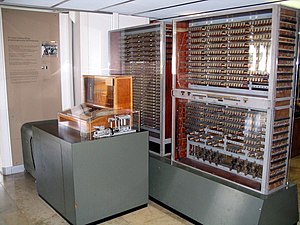

Electronic computers, using either relays or valves, began to appear in the early 1940s. The electromechanical Zuse Z3, completed in 1941, was the world's first programmable computer, and by modern standards one of the first machines that could be considered a complete computing machine. Colossus, developed during the Second World War to decrypt German messages, was the first electronic digital computer. Although it was programmable, it was not general-purpose, being designed to perform only a single task. It also lacked the ability to store its program in memory; programming was carried out using plugs and switches to alter the internal wiring.[12] The first recognizably modern electronic digital stored-program computer was the Manchester Baby, which ran its first program on 21 June 1948.[13]

The development of transistors in the late 1940s at Bell Laboratories allowed a new generation of computers to be designed with greatly reduced power consumption. The first commercially available stored-program computer, the Ferranti Mark 1, contained 4050 valves and had a power consumption of 25 kilowatts. By comparison, the first transistorized computer, developed at the University of Manchester and operational by November 1953, consumed only 150 watts in its final version.[14]

Several other breakthroughs in semiconductor technology include the integrated circuit (IC) invented by Jack Kilby at Texas Instruments and Robert Noyce at Fairchild Semiconductor in 1959, the metal-oxide-semiconductor field-effect transistor (MOSFET) invented by Mohamed Atalla and Dawon Kahng at Bell Laboratories in 1959, and the microprocessor invented by Ted Hoff, Federico Faggin, Masatoshi Shima, and Stanley Mazor at Intel in 1971. These important inventions led to the development of the personal computer (PC) in the 1970s, and the emergence of information and communications technology (ICT).[15]

By 1984, according to the National Westminster Bank Quarterly Review, the term "information technology" had been redefined: "The development of cable television was made possible by the convergence of telecommunications and computing technology (…generally known in Britain as information technology)." The term then appeared in 1990 in documents of the International Organization for Standardization (ISO).[16]

By the start of the twenty-first century, innovations in technology had already revolutionized the world, as people were able to access a range of online services. The workforce changed drastically: thirty percent of U.S. workers were already in careers in this field, and 136.9 million people were personally connected to the Internet, equivalent to 51 million households.[17] Along with the Internet, new types of technology were being introduced across the globe, improving efficiency and making everyday tasks easier.

As technology revolutionized society, millions of processes could be done in seconds. Innovations in communication were also crucial, as people began to rely on computers to communicate through telephone lines and cable. The introduction of email was a major development, as "companies in one part of the world could communicate by e-mail with suppliers and buyers in another part of the world..."[18]

Beyond personal use, computers and technology have also revolutionized the marketing industry, bringing sellers more buyers for their products. In 2002, Americans bought more than $28 billion in goods over the Internet alone, and a decade later e-commerce accounted for $289 billion in sales.[18] As computers rapidly become more sophisticated, people in the twenty-first century use and rely on them ever more.

Electronic Data Processing

Data Storage

Early electronic computers such as Colossus made use of punched tape, a long strip of paper on which data was represented by a series of holes, a technology now obsolete.[19] Electronic data storage, which is used in modern computers, dates from World War II, when a form of delay-line memory was developed to remove the clutter from radar signals, the first practical application of which was the mercury delay line.[20] The first random-access digital storage device was the Williams tube, which was based on a standard cathode ray tube.[21] However, the information stored in it and in delay-line memory was volatile: it had to be continuously refreshed, and was lost once power was removed. The earliest form of non-volatile computer storage was the magnetic drum, invented in 1932[22] and used in the Ferranti Mark 1, the world's first commercially available general-purpose electronic computer.[23]

IBM introduced the first hard disk drive in 1956, as a component of their 305 RAMAC computer system.[24]: 6 Most digital data today is still stored magnetically on hard disks, or optically on media such as CD-ROMs.[25]: 4–5 Until 2002 most information was stored on analog devices, but that year digital storage capacity exceeded analog for the first time. As of 2007, almost 94% of the data stored worldwide was held digitally:[26] 52% on hard disks, 28% on optical devices, and 11% on digital magnetic tape. It has been estimated that the worldwide capacity to store information on electronic devices grew from less than 3 exabytes in 1986 to 295 exabytes in 2007,[27] doubling roughly every 3 years.[28]
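The "doubling roughly every 3 years" figure can be checked from the two endpoint estimates. The following is a minimal sketch, assuming steady exponential growth and taking 3 exabytes as the 1986 value (the text says "less than 3", so the result is an upper bound on the doubling time); the function name is illustrative only.

```python
import math

def doubling_time(start, end, years):
    """Years needed to double, assuming steady exponential growth."""
    return years * math.log(2) / math.log(end / start)

# Assumed inputs: 3 EB in 1986 (upper bound) and 295 EB in 2007.
t = doubling_time(3, 295, 2007 - 1986)
print(f"{t:.1f} years")  # prints "3.2 years"
```

A smaller 1986 starting value would shorten the computed doubling time slightly, which is consistent with the "roughly every 3 years" wording.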

Databases

Database Management Systems (DMS) emerged in the 1960s to address the problem of storing and retrieving large amounts of data accurately and quickly. An early such system was IBM's Information Management System (IMS),[29] which is still widely deployed more than 50 years later.[30] IMS stores data hierarchically,[29] but in the 1970s Ted Codd proposed an alternative relational storage model based on set theory and predicate logic and the familiar concepts of tables, rows, and columns. In 1981, the first commercially available relational database management system (RDBMS) was released by Oracle.[31]

All DMS consist of components that allow the data they store to be accessed simultaneously by many users while maintaining its integrity.[32] All databases have one point in common: the structure of the data they contain is defined and stored separately from the data itself, in a database schema.[29]
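The separation between a schema and the data it describes can be seen in a small example. This sketch uses SQLite through Python's standard `sqlite3` module purely as a convenient illustration (SQLite is not discussed in the sources above), and the table name `machine` is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.execute("CREATE TABLE machine (name TEXT, year INTEGER)")
conn.executemany(
    "INSERT INTO machine VALUES (?, ?)",
    [("Zuse Z3", 1941), ("Manchester Baby", 1948)],
)

# The structure is recorded in SQLite's catalog table, sqlite_master ...
schema = conn.execute(
    "SELECT sql FROM sqlite_master WHERE name = 'machine'"
).fetchone()[0]

# ... while the rows themselves are stored in the table.
rows = conn.execute("SELECT name, year FROM machine ORDER BY year").fetchall()

print(schema)
print(rows)
```

Here the table definition can be read back without touching any row, and the rows can be read without restating the definition.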

In recent years, the extensible markup language (XML) has become a popular format for data representation. Although XML data can be stored in normal file systems, it is commonly held in relational databases to take advantage of their "robust implementation verified by years of both theoretical and practical effort."[33] As an evolution of the Standard Generalized Markup Language (SGML), XML's text-based structure offers the advantage of being both machine and human-readable.[34]
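As a rough illustration of the "both machine and human-readable" point, the short document below can be read by eye and also parsed by a program. The element and attribute names are invented for this sketch; the parser is Python's standard `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET

# A small, hypothetical XML document; the tags are illustrative only.
doc = """<computers>
  <computer year="1941">Zuse Z3</computer>
  <computer year="1948">Manchester Baby</computer>
</computers>"""

root = ET.fromstring(doc)

# Extract (name, year) pairs from the parsed tree.
machines = [(c.text, int(c.get("year"))) for c in root.findall("computer")]
print(machines)
```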

Data Retrieval

The relational database model introduced a programming-language independent Structured Query Language (SQL), based on relational algebra.
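A query in SQL states what is wanted rather than how to fetch it: in the sketch below, the `WHERE` clause corresponds to a relational-algebra selection and the column list to a projection. The example again assumes SQLite via Python's `sqlite3` module, and the `storage` table and its columns are hypothetical, filled with two dates taken from the storage section above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE storage (device TEXT, introduced INTEGER)")
conn.executemany(
    "INSERT INTO storage VALUES (?, ?)",
    [("magnetic drum", 1932), ("hard disk drive", 1956)],
)

# Selection (WHERE) and projection (SELECT device), with a bound parameter.
query = "SELECT device FROM storage WHERE introduced < ? ORDER BY introduced"
early = [row[0] for row in conn.execute(query, (1950,))]
print(early)  # prints ['magnetic drum']
```

The same statement could be issued from any host language with a database driver, which is the sense in which SQL is programming-language independent.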

The terms "data" and "information" are not synonymous. Anything stored is data, but it only becomes information when it is organized and presented meaningfully.[35]: 1–9 Most of the world's digital data is unstructured, and stored in a variety of different physical formats[36][b] even within a single organization. Data warehouses began to be developed in the 1980s to integrate these disparate stores. They typically contain data extracted from various sources, including external sources such as the Internet, organized in such a way as to facilitate decision support systems (DSS).[37]: 4–6

Data Transmission

Data transmission has three aspects: transmission, propagation, and reception.[38] It can be broadly categorized as broadcasting, in which information is transmitted unidirectionally downstream, or telecommunications, with bidirectional upstream and downstream channels.[27]

XML has been increasingly employed as a means of data interchange since the early 2000s,[39] particularly for machine-oriented interactions such as those involved in web-oriented protocols such as SOAP,[34] describing "data-in-transit rather than... data-at-rest".[39]

Data Manipulation

Hilbert and Lopez identify the exponential pace of technological change (a kind of Moore's law): machines' application-specific capacity to compute information per capita roughly doubled every 14 months between 1986 and 2007; the per capita capacity of the world's general-purpose computers doubled every 18 months during the same two decades; the global telecommunication capacity per capita doubled every 34 months; the world's storage capacity per capita required roughly 40 months to double (every 3 years); and per capita broadcast information has doubled every 12.3 years.[27]
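The doubling times above can be put on a common footing by converting each to an equivalent annual growth rate. This is a minimal sketch, assuming steady exponential growth over the period; the function name and the dictionary labels are illustrative.

```python
def annual_growth(doubling_months):
    """Annual growth rate implied by a doubling time given in months."""
    return 2 ** (12 / doubling_months) - 1

# Doubling times quoted in the text (12.3 years = 147.6 months).
doubling_times = {
    "application-specific computing": 14,
    "general-purpose computing": 18,
    "telecommunication": 34,
    "storage": 40,
    "broadcasting": 12.3 * 12,
}

for name, months in doubling_times.items():
    print(f"{name}: {annual_growth(months):.0%} per year")
```

A quantity that doubles every 12 months grows by 100% per year, so shorter doubling times map to rates above that and longer ones to rates below it.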

Massive amounts of data are stored worldwide every day, but unless it can be analyzed and presented effectively it essentially resides in what have been called data tombs: "data archives that are seldom visited".[40] To address that issue, the field of data mining — "the process of discovering interesting patterns and knowledge from large amounts of data"[41] — emerged in the late 1980s.[42]

Database Problems

As technology becomes more sophisticated, security problems increase, because more information than ever is stored on computers. Businesses and organizations have become so dependent on data and databases that these are considered their "backbone", which has led to the development of dedicated technology departments and personnel, such as IT departments.[43]

Alongside IT departments and personnel, various agencies work to "strengthen" the workforce. The Department of Homeland Security (DHS) is one example: it helps ensure that organizations have what they need to add the infrastructure and security that will protect them from the challenges that may lie ahead. Stemming from the DHS, many programs are also in place to build cybersecurity awareness across an organization or workforce.

- Identify and quantify your cybersecurity workforce

- Understand workforce needs and skills gaps

- Hire the right people for clearly defined roles

- Enhance employee skills with training and professional development

- Create programs and experiences to retain top talent[44]

Services

Email is the technology, and the set of services built on it, for sending and receiving electronic messages (called "letters" or "electronic letters") over a distributed (including global) computer network. In the composition of its elements and its principle of operation, electronic mail closely mirrors regular (paper) mail, borrowing both its terms (mail, letter, envelope, attachment, box, delivery, and others) and its characteristic features: ease of use, message transmission delays, and sufficient reliability with, at the same time, no guarantee of delivery. The advantages of e-mail are: addresses of the form user_name@domain_name (for example, somebody@example.com) that are easy for a person to read and remember; the ability to transfer both plain and formatted text, as well as arbitrary files; independence of servers (in the general case, they address each other directly); sufficiently high reliability of message delivery; and ease of use by both humans and programs.

The disadvantages of e-mail are: spam (mass advertising and viral mailings); the theoretical impossibility of guaranteeing delivery of a particular letter; possible delays in message delivery (up to several days); and limits on the size of a single message and on the total size of messages in a mailbox (set per user).
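The address form and the mix of plain text and arbitrary files described above can be sketched with Python's standard `email` package. The recipient address, subject, body, and attachment contents below are made up for illustration, and the message is only built in memory, not sent.

```python
from email.message import EmailMessage

sender = "somebody@example.com"
user_name, domain_name = sender.split("@")  # user_name@domain_name

msg = EmailMessage()
msg["From"] = sender
msg["To"] = "someone.else@example.org"  # hypothetical recipient
msg["Subject"] = "Quarterly figures"
msg.set_content("Plain-text body of the letter.")

# Attach arbitrary bytes as a file alongside the text body.
msg.add_attachment(
    b"year,sales\n2002,28\n",
    maintype="application",
    subtype="octet-stream",
    filename="sales.csv",
)

attachments = [part.get_filename() for part in msg.iter_attachments()]
print(user_name, domain_name, attachments)
```

Actually delivering the message would require handing it to a mail server, which is where the delays and lack of delivery guarantee mentioned above come in.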

Search System

A search system is a software and hardware complex with a web interface that provides the ability to search for information on the Internet. The term "search engine" usually refers to the site that hosts the interface (front end) of the system. The software part of a search system is the search engine proper: a set of programs that provides the system's functionality and is usually a trade secret of the company that develops it. Most search engines look for information on World Wide Web sites, but there are also systems that can look for files on FTP servers, items in online stores, and information on Usenet newsgroups. Improving search is one of the priorities of the modern Internet (see the Deep Web article about the main problems in the work of search engines).

According to Statista, in October 2021, search engine usage was distributed as follows:

- Google — 86.64%;

- Bing — 7%;

- Yahoo! — 2.75%.[45]

According to Statcounter Global Stats for August 2021, the Chinese search engine Baidu took almost 3% of the market in Asia. In the same region, Yandex overtook Yahoo, with a share of almost 2% and third place in the ranking.[46]

Perspectives

Academic Perspective


In an academic context, the Association for Computing Machinery defines Information Technology as "undergraduate degree programs that prepare students to meet the computer technology needs of business, government, healthcare, schools, and other kinds of organizations IT specialists assume responsibility for selecting hardware and software products appropriate for an organization, integrating those products with organizational needs and infrastructure, and installing, customizing, and maintaining those applications for the organization’s computer users."[47]

Undergraduate degrees in IT (B.S., A.S.) are similar to other computer science degrees, and they often share the same foundational courses. Computer science (CS) programs tend to focus more on theory and design, whereas Information Technology programs are structured to equip the graduate with expertise in the practical application of technology solutions to support modern business and user needs.

However, this is not true in all cases. For example, in India an engineering degree in Information Technology (B.Tech IT) is a 4-year professional course and is considered equivalent to a degree in Computer Science and Engineering, since the two share strikingly similar syllabi across many universities in India.[48][49][50][51]

The B.Tech IT degree focuses heavily on the mathematical foundations of computer science: students are taught calculus, linear algebra, graph theory, and discrete mathematics in the first two years. The program also contains core computer science courses such as Data Structures, Algorithm Analysis and Design, Compiler Design, Automata Theory, Computer Architecture, Operating Systems, and Computer Networks.[52][53] The graduate-level entrance examination required for a master's in engineering in India, GATE, is common to both CS and IT undergraduates.[54]

Concerns have been raised that most schools lack advanced-placement courses in this field.[55]

Commercial and Employment Perspective

Companies in the information technology field are often discussed as a group as the "tech sector" or the "tech industry."[56][57][58] These titles can be misleading at times and should not be mistaken for "tech companies," which are generally large-scale, for-profit corporations that sell consumer technology and software. From a business perspective, Information Technology departments are a "cost center" the majority of the time. A cost center is a department or staff that incurs expenses, or "costs," within a company rather than generating profits or revenue streams. Modern businesses rely heavily on technology for their day-to-day operations, so the expenses allocated to technology that makes business more efficient are usually seen as "just the cost of doing business." IT departments are allocated funds by senior leadership and must attempt to achieve the desired deliverables while staying within that budget. Government and the private sector might have different funding mechanisms, but the principles are more or less the same. This constant pressure to do more with less is an often overlooked reason for the rapid growth of interest in automation and artificial intelligence, and it is opening the door for automation to take control of at least some minor operations in large companies.

Many companies now have IT departments for managing the computers, networks, and other technical areas of their businesses. Companies have also sought to integrate IT with business outcomes and decision-making through a BizOps or business operations department.[59]

In a business context, the Information Technology Association of America has defined information technology as "the study, design, development, application, implementation, support, or management of computer-based information systems".[60][page needed] The responsibilities of those working in the field include network administration, software development and installation, and the planning and management of an organization's technology life cycle, by which hardware and software are maintained, upgraded, and replaced.

Information services

Information services is a term somewhat loosely applied to a variety of IT-related services offered by commercial companies,[61][62][63] as well as data brokers.

- U.S. Employment distribution of computer systems design and related services, 2011[64]

- U.S. Employment in the computer systems and design related services industry, in thousands, 1990-2011[64]

- U.S. Occupational growth and wages in computer systems design and related services, 2010-2020[64]

- U.S. projected percent change in employment in selected occupations in computer systems design and related services, 2010-2020[64]

- U.S. projected average annual percent change in output and employment in selected industries, 2010-2020[64]

Ethical Perspectives

The field of information ethics was established by mathematician Norbert Wiener in the 1940s.[65]: 9 Some of the ethical issues associated with the use of information technology include:[66]: 20–21

- Breaches of copyright by those downloading files stored without the permission of the copyright holders

- Employers monitoring their employees' emails and other Internet usage

- Unsolicited emails

- Hackers accessing online databases

- Web sites installing cookies or spyware to monitor a user's online activities, which may be used by data brokers

The Need for Computer Security

Computer security is commonly associated with computers being hacked or taken over by cybercriminals. However, it covers not only protecting the internal components of a computer but also protecting computers from natural disasters such as tornadoes, floods, and fires. In short, computer security means "protecting the computer from harm", whether from natural disasters, cybercriminals, or anything else that can damage the computer internally or externally.[43]

Cybersecurity is a major topic within computer security; it deals with protecting technology from cybercriminals and trolls. It is critical to the overall health of a business's or organization's structure. As technology becomes more sophisticated, the rate of cyberattacks and security breaches is also increasing, so maintaining proper cybersecurity awareness in the workforce is very important.[44] Maintaining cybersecurity across the workplace requires people with a special skill set who can "protect" the business or organization. This work can be broken down into several categories, from networking and databases to information systems.[44]

Computer security therefore requires professionals: people with educational experience in the relevant fields who can help protect networks, databases, and computer systems from internal and external threats. As technology becomes more sophisticated, the threat of internal harm to computers grows, including ransomware, malware, spyware, and phishing attacks. These are only some of the issues such professionals deal with, as there is a wide variety of attacks across the globe. The profession also includes numerous specialties, spanning computer science, computer engineering, software engineering, information systems, and computer systems.[67] As the world continues to advance in technology, there is a pressing need for people in these professions to help "make" and "execute" those upgrades, making technology and software more secure and reliable worldwide.

See also

- Center for Minorities and People with Disabilities in Information Technology

- Computing

- Computer science

- Cybernetics

- Data processing

- Health information technology

- Information and communications technology (ICT)

- Information management

- Journal of Cases on Information Technology

- Knowledge society

- List of largest technology companies by revenue

- Operational technology

- Outline of information technology

- World Information Technology and Services Alliance

References

Notes

- ^ On the later more broad application of the term IT, Keary comments: "In its original application 'information technology' was appropriate to describe the convergence of technologies with application in the vast field of data storage, retrieval, processing, and dissemination. This useful conceptual term has since been converted to what purports to be of great use, but without the reinforcement of definition ... the term IT lacks substance when applied to the name of any function, discipline, or position."[6]

- ^ "Format" refers to the physical characteristics of the stored data such as its encoding scheme; "structure" describes the organisation of that data.

Citations

- ^ Daintith, John, ed. (2009), "IT", A Dictionary of Physics, Oxford University Press, ISBN 9780199233991, retrieved 1 August 2012 (subscription required).

- ^ "Computer Technology Definition". Law Insider. Retrieved 11 July 2022.

- ^ a b Butler, Jeremy G., A History of Information Technology and Systems, University of Arizona, archived from the original on 5 August 2012, retrieved 2 August 2012

- ^ a b Leavitt, Harold J.; Whisler, Thomas L. (1958), "Management in the 1980s", Harvard Business Review, 11.

- ^ Chandler, Daniel; Munday, Rod (10 February 2011), "Information technology", A Dictionary of Media and Communication (first ed.), Oxford University Press, ISBN 978-0199568758, retrieved 1 August 2012, "Commonly a synonym for computers and computer networks but more broadly designating any technology that is used to generate, store, process, and/or distribute information electronically, including television and telephone."

- ^ Ralston, Hemmendinger & Reilly (2000), p. 869.

- ^ Slotten, Hugh Richard (1 January 2014). The Oxford Encyclopedia of the History of American Science, Medicine, and Technology. Oxford University Press. doi:10.1093/acref/9780199766666.001.0001. ISBN 978-0-19-976666-6.

- ^ Henderson, H. (2017). computer science. In H. Henderson, Facts on File science library: Encyclopedia of computer science and technology. (3rd ed.). [Online]. New York: Facts On File.

- ^ Schmandt-Besserat, Denise (1981), "Decipherment of the earliest tablets", Science, 211 (4479): 283–285, Bibcode:1981Sci...211..283S, doi:10.1126/science.211.4479.283, PMID 17748027.

- ^ Wright (2012), p. 279.

- ^ Chaudhuri (2004), p. 3.

- ^ Lavington (1980), p. 11.

- ^ Enticknap, Nicholas (Summer 1998), "Computing's Golden Jubilee", Resurrection (20), ISSN 0958-7403, archived from the original on 9 January 2012, retrieved 19 April 2008.

- ^ Cooke-Yarborough, E. H. (June 1998), "Some early transistor applications in the UK", Engineering Science & Education Journal, 7 (3): 100–106, doi:10.1049/esej:19980301, ISSN 0963-7346.

- ^ "Advanced information on the Nobel Prize in Physics 2000" (PDF). Nobel Prize. June 2018. Retrieved 17 December 2019.

- ^ Information technology. (2003). In E.D. Reilly, A. Ralston & D. Hemmendinger (Eds.), Encyclopedia of computer science. (4th ed.).

- ^ Stewart, C.M. (2018). Computers. In S. Bronner (Ed.), Encyclopedia of American studies. [Online]. Johns Hopkins University Press.

- ^ a b Northrup, C.C. (2013). Computers. In C. Clark Northrup (Ed.), Encyclopedia of world trade: from ancient times to the present. [Online]. London: Routledge.

- ^ Alavudeen & Venkateshwaran (2010), p. 178.

- ^ Lavington (1998), p. 1.

- ^ "Early computers at Manchester University", Resurrection, 1 (4), Summer 1992, ISSN 0958-7403, archived from the original on 28 August 2017, retrieved 19 April 2008.

- ^ Universität Klagenfurt (ed.), "Magnetic drum", Virtual Exhibitions in Informatics, retrieved 21 August 2011.

- ^ The Manchester Mark 1, University of Manchester, archived from the original on 21 November 2008, retrieved 24 January 2009.

- ^ Khurshudov, Andrei (2001), The Essential Guide to Computer Data Storage: From Floppy to DVD, Prentice Hall, ISBN 978-0-130-92739-2.

- ^ Wang, Shan X.; Taratorin, Aleksandr Markovich (1999), Magnetic Information Storage Technology, Academic Press, ISBN 978-0-12-734570-3.

- ^ Wu, Suzanne, "How Much Information Is There in the World?", USC News, University of Southern California, retrieved 10 September 2013.

- ^ a b c Hilbert, Martin; López, Priscila (1 April 2011), "The World's Technological Capacity to Store, Communicate, and Compute Information", Science, 332 (6025): 60–65, Bibcode:2011Sci...332...60H, doi:10.1126/science.1200970, PMID 21310967, S2CID 206531385, retrieved 10 September 2013.

- ^ "Americas events – Video animation on The World's Technological Capacity to Store, Communicate, and Compute Information from 1986 to 2010". The Economist. Archived from the original on 18 January 2012.

- ^ a b c Ward & Dafoulas (2006), p. 2.

- ^ Olofson, Carl W. (October 2009), A Platform for Enterprise Data Services (PDF), IDC, retrieved 7 August 2012.

- ^ Ward & Dafoulas (2006), p. 3.

- ^ Silberschatz, Abraham (2010). Database System Concepts. McGraw-Hill Higher Education. ISBN 978-0-07-741800-7.

- ^ Pardede (2009), p. 2.

- ^ a b Pardede (2009), p. 4.

- ^ Kedar, Rahul (2009). Database Management System. Technical Publications. ISBN 9788184316049.

- ^ van der Aalst (2011), p. 2.

- ^ Dyché, Jill (2000), Turning Data Into Information With Data Warehousing, Addison Wesley, ISBN 978-0-201-65780-7.

- ^ Weik (2000), p. 361.

- ^ a b Pardede (2009), p. xiii.

- ^ Han, Kamber & Pei (2011), p. 5.

- ^ Han, Kamber & Pei (2011), p. 8.

- ^ Han, Kamber & Pei (2011), p. xxiii.

- ^ a b Computer technology and information security issues. (2012). In R. Fischer, E. Halibozek & D. Walters, Introduction to security. (9th ed.). [Online]. Oxford: Elsevier Science & Technology.

- ^ a b c National Cybersecurity workforce framework. (2019). In I. Gonzales, K. Joaquin Jay & Roger L. (Eds.), Cybersecurity: current writings on threats and protection. [Online]. Jefferson: McFarland.

- ^ "Worldwide desktop market share of leading search engines from January 2010 to September 2021". Statista. October 2021.

- ^ "Где пользователи предпочитают искать информацию" [Where users prefer to search for information]. rspectr.com. 3 September 2021. Retrieved 9 November 2021.

- ^ The Joint Task Force for Computing Curricula 2005. Computing Curricula 2005: The Overview Report. Archived 21 October 2014 at the Wayback Machine.

- ^ "Anna University(India)-IT syllabus" (PDF).

- ^ "Anna University(India)-Computer Science-Syllabus" (PDF).

- ^ "Delhi Technical University-IT-Syllabus" (PDF).

- ^ "Delhi Technical University-Computer Science Syllabus" (PDF).

- ^ "Delhi Technical University-IT-Syllabus" (PDF).

- ^ "Anna University(India)-B.Tech IT Syllabus" (PDF).

- ^ "Gate 2022-Official Web Site".

- ^ Henderson, H. (2017). Computer science. In H. Henderson, Facts on File science library: Encyclopedia of computer science and technology. (3rd ed.).

- ^ "Technology Sector Snapshot". The New York Times. Archived from the original on 13 January 2017. Retrieved 12 January 2017.

- ^ "Our programmes, campaigns and partnerships". TechUK. Retrieved 12 January 2017.

- ^ "Cyberstates 2016". CompTIA. Retrieved 12 January 2017.

- ^ "Manifesto Hatched to Close Gap Between Business and IT". TechNewsWorld. 22 October 2020. Retrieved 22 March 2021.

- ^ Proctor, K. Scott (2011), Optimizing and Assessing Information Technology: Improving Business Project Execution, John Wiley & Sons, ISBN 978-1-118-10263-3.

- ^ "Top Information Services companies". VentureRadar. Retrieved 8 March 2021.

- ^ "Follow Information Services on Index.co". Index.co. Retrieved 8 March 2021.

- ^ Value Line Publishing. "Industry Overview: Information Services". Value Line. Retrieved 8 March 2021.

- ^ a b c d e Lauren Csorny (9 April 2013). "U.S. Careers in the growing field of information technology services". U.S. Bureau of Labor Statistics.

- ^ Bynum, Terrell Ward (2008), "Norbert Wiener and the Rise of Information Ethics", in van den Hoven, Jeroen; Weckert, John (eds.), Information Technology and Moral Philosophy, Cambridge University Press, ISBN 978-0-521-85549-5.

- ^ Reynolds, George (2009), Ethics in Information Technology, Cengage Learning, ISBN 978-0-538-74622-9.

- ^ Computer science. (2003). In E.D. Reilly, A. Ralston & D. Hemmendinger (Eds.), Encyclopedia of computer science. (4th ed.). [Online]. Hoboken: Wiley.

Bibliography

- Alavudeen, A.; Venkateshwaran, N. (2010), Computer Integrated Manufacturing, PHI Learning, ISBN 978-81-203-3345-1

- Chaudhuri, P. Pal (2004), Computer Organization and Design, PHI Learning, ISBN 978-81-203-1254-8

- Han, Jiawei; Kamber, Micheline; Pei, Jian (2011), Data Mining: Concepts and Techniques (3rd ed.), Morgan Kaufmann, ISBN 978-0-12-381479-1

- Lavington, Simon (1980), Early British Computers, Manchester University Press, ISBN 978-0-7190-0810-8

- Lavington, Simon (1998), A History of Manchester Computers (2nd ed.), The British Computer Society, ISBN 978-1-902505-01-5

- Pardede, Eric (2009), Open and Novel Issues in XML Database Applications, Information Science Reference, ISBN 978-1-60566-308-1

- Ralston, Anthony; Hemmendinger, David; Reilly, Edwin D., eds. (2000), Encyclopedia of Computer Science (4th ed.), Nature Publishing Group, ISBN 978-1-56159-248-7

- van der Aalst, Wil M. P. (2011), Process Mining: Discovery, Conformance and Enhancement of Business Processes, Springer, ISBN 978-3-642-19344-6

- Ward, Patricia; Dafoulas, George S. (2006), Database Management Systems, Cengage Learning EMEA, ISBN 978-1-84480-452-8

- Weik, Martin (2000), Computer Science and Communications Dictionary, vol. 2, Springer, ISBN 978-0-7923-8425-0

- Wright, Michael T. (2012), "The Front Dial of the Antikythera Mechanism", in Koetsier, Teun; Ceccarelli, Marco (eds.), Explorations in the History of Machines and Mechanisms: Proceedings of HMM2012, Springer, pp. 279–292, ISBN 978-94-007-4131-7

Further reading

- Allen, T.; Scott Morton, M. S., eds. (1994), Information Technology and the Corporation of the 1990s, Oxford University Press.

- Gitta, Cosmas and South, David (2011). Southern Innovator Magazine Issue 1: Mobile Phones and Information Technology. United Nations Office for South-South Cooperation. ISSN 2222-9280.

- Gleick, James (2011). The Information: A History, a Theory, a Flood. New York: Pantheon Books.

- Price, Wilson T. (1981), Introduction to Computer Data Processing, Holt-Saunders International Editions, ISBN 978-4-8337-0012-2.

- Shelly, Gary, Cashman, Thomas, Vermaat, Misty, and Walker, Tim. (1999). Discovering Computers 2000: Concepts for a Connected World. Cambridge, Massachusetts: Course Technology.

- Webster, Frank, and Robins, Kevin. (1986). Information Technology: A Luddite Analysis. Norwood, NJ: Ablex.

External links

Learning materials related to Information technology at Wikiversity

Media related to Information technology at Wikimedia Commons

Quotations related to Information technology at Wikiquote

![U.S. Employment distribution of computer systems design and related services, 2011[64]](http://upload.wikimedia.org/wikipedia/commons/thumb/e/e6/ComputerSystemsEmployment_distribution_.png/120px-ComputerSystemsEmployment_distribution_.png)

![U.S. Employment in the computer systems and design related services industry, in thousands, 1990-2011[64]](http://upload.wikimedia.org/wikipedia/commons/thumb/7/7a/EmploymentComputerSystems.png/120px-EmploymentComputerSystems.png)

![U.S. projected percent change in employment in selected occupations in computer systems design and related services, 2010-2020[64]](http://upload.wikimedia.org/wikipedia/commons/thumb/9/9a/ProjectedEmploymentChangeComputerSystems.png/120px-ProjectedEmploymentChangeComputerSystems.png)

![U.S. projected average annual percent change in output and employment in selected industries, 2010-2020[64]](http://upload.wikimedia.org/wikipedia/commons/thumb/3/35/ProjectedAverageAnnualEmploymentChangeSelectedIndustries.png/120px-ProjectedAverageAnnualEmploymentChangeSelectedIndustries.png)