g factor (psychometrics)

The g factor[a] is a construct developed in psychometric investigations of cognitive abilities and human intelligence. It is a variable that summarizes positive correlations among different cognitive tasks, reflecting the fact that an individual's performance on one type of cognitive task tends to be comparable to that person's performance on other kinds of cognitive tasks.[citation needed] The g factor typically accounts for 40 to 50 percent of the between-individual performance differences on a given cognitive test, and composite scores ("IQ scores") based on many tests are frequently regarded as estimates of individuals' standing on the g factor.[1] The terms IQ, general intelligence, general cognitive ability, general mental ability, and simply intelligence are often used interchangeably to refer to this common core shared by cognitive tests.[2] However, the g factor itself is a mathematical construct indicating the level of observed correlation between cognitive tasks.[3] The measured value of this construct depends on the cognitive tasks that are used, and little is known about the underlying causes of the observed correlations.

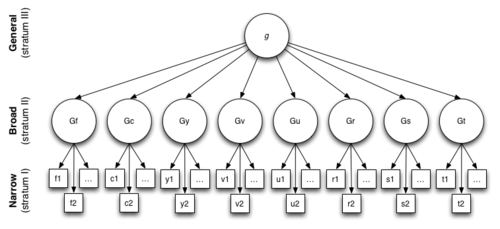

The existence of the g factor was originally proposed by the English psychologist Charles Spearman in the early years of the 20th century. He observed that children's performance ratings, across seemingly unrelated school subjects, were positively correlated, and reasoned that these correlations reflected the influence of an underlying general mental ability that entered into performance on all kinds of mental tests. Spearman suggested that all mental performance could be conceptualized in terms of a single general ability factor, which he labeled g, and many narrow task-specific ability factors. Soon after Spearman proposed the existence of g, it was challenged by Godfrey Thomson, who presented evidence that such intercorrelations among test results could arise even if no g-factor existed.[4] Today's factor models of intelligence typically represent cognitive abilities as a three-level hierarchy, where there are many narrow factors at the bottom of the hierarchy, a handful of broad, more general factors at the intermediate level, and at the apex a single factor, referred to as the g factor, which represents the variance common to all cognitive tasks.

Traditionally, research on g has concentrated on psychometric investigations of test data, with a special emphasis on factor analytic approaches. However, empirical research on the nature of g has also drawn upon experimental cognitive psychology and mental chronometry, brain anatomy and physiology, quantitative and molecular genetics, and primate evolution.[5] Research in the field of behavioral genetics has shown that the construct of g is highly heritable in measured populations. It has a number of other biological correlates, including brain size. It is also a significant predictor of individual differences in many social outcomes, particularly in education and employment.

Critics have contended that an emphasis on g is misplaced and entails a devaluation of other important abilities. Some scientists, including Stephen J. Gould, have argued that the concept of g is merely a reified construct rather than a valid measure of human intelligence.

Cognitive ability testing

Spearman's correlations among six measures of school performance. All correlations are positive; the bottom row gives each measure's g loading.

| | Classics | French | English | Math | Pitch | Music |
|---|---|---|---|---|---|---|
| Classics | – | | | | | |
| French | .83 | – | | | | |
| English | .78 | .67 | – | | | |
| Math | .70 | .67 | .64 | – | | |
| Pitch discrimination | .66 | .65 | .54 | .45 | – | |
| Music | .63 | .57 | .51 | .51 | .40 | – |
| g | .958 | .882 | .803 | .750 | .673 | .646 |

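Intercorrelations among eleven Wechsler subtests: V = Vocabulary, S = Similarities, I = Information, C = Comprehension, PA = Picture Arrangement, BD = Block Design, A = Arithmetic, PC = Picture Completion, DSp = Digit Span, OA = Object Assembly, DS = Digit Symbol. The bottom row gives each subtest's g loading.

| | V | S | I | C | PA | BD | A | PC | DSp | OA | DS |
|---|---|---|---|---|---|---|---|---|---|---|---|
| V | – | | | | | | | | | | |
| S | .67 | – | | | | | | | | | |
| I | .72 | .59 | – | | | | | | | | |
| C | .70 | .58 | .59 | – | | | | | | | |
| PA | .51 | .53 | .50 | .42 | – | | | | | | |
| BD | .45 | .46 | .45 | .39 | .43 | – | | | | | |
| A | .48 | .43 | .55 | .45 | .41 | .44 | – | | | | |
| PC | .49 | .52 | .52 | .46 | .48 | .45 | .30 | – | | | |
| DSp | .46 | .40 | .36 | .36 | .31 | .32 | .47 | .23 | – | | |
| OA | .32 | .40 | .32 | .29 | .36 | .58 | .33 | .41 | .14 | – | |
| DS | .32 | .33 | .26 | .30 | .28 | .36 | .28 | .26 | .27 | .25 | – |
| g | .83 | .80 | .80 | .75 | .70 | .70 | .68 | .68 | .56 | .56 | .48 |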

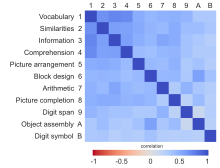

Cognitive ability tests are designed to measure different aspects of cognition. Specific domains assessed by tests include mathematical skill, verbal fluency, spatial visualization, and memory, among others. However, individuals who excel at one type of test tend to excel at other kinds of tests, too, while those who do poorly on one test tend to do so on all tests, regardless of the tests' contents.[8] The English psychologist Charles Spearman was the first to describe this phenomenon.[9] In a famous research paper published in 1904,[10] he observed that children's performance measures across seemingly unrelated school subjects were positively correlated. This finding has since been replicated numerous times. The consistent finding of universally positive correlation matrices of mental test results (or the "positive manifold"), despite large differences in tests' contents, has been described as "arguably the most replicated result in all psychology".[11] Zero or negative correlations between tests suggest the presence of sampling error or restriction of the range of ability in the sample studied.[12]

Using factor analysis or related statistical methods, it is possible to identify a single common factor that can be regarded as a summary variable characterizing the correlations between all the different tests in a test battery. Spearman referred to this common factor as the general factor, or simply g. (By convention, g is always printed as a lower case italic.) Mathematically, the g factor is a source of variance among individuals, which means that one cannot meaningfully speak of any one individual's mental abilities consisting of g or other factors to any specified degree. One can only speak of an individual's standing on g (or other factors) compared to other individuals in a relevant population.[12][13][14]
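As an illustration of how a single common factor can be extracted, the sketch below applies iterated principal axis factoring with one factor to the six school-subject correlations tabulated above. Principal axis factoring is only one of several extraction methods, and the function name `principal_axis_one_factor`, the starting communalities, and the convergence settings are illustrative choices, not Spearman's own procedure. For this matrix the resulting loadings should land close to the table's g row.

```python
import numpy as np

# Correlations among six school-performance measures (table above).
R = np.array([
    [1.00, 0.83, 0.78, 0.70, 0.66, 0.63],  # Classics
    [0.83, 1.00, 0.67, 0.67, 0.65, 0.57],  # French
    [0.78, 0.67, 1.00, 0.64, 0.54, 0.51],  # English
    [0.70, 0.67, 0.64, 1.00, 0.45, 0.51],  # Math
    [0.66, 0.65, 0.54, 0.45, 1.00, 0.40],  # Pitch discrimination
    [0.63, 0.57, 0.51, 0.51, 0.40, 1.00],  # Music
])

def principal_axis_one_factor(R, max_iter=5000, tol=1e-10):
    """Iterated principal axis factoring with a single factor (illustrative)."""
    R = np.asarray(R, dtype=float)
    off_diag = R - np.diag(np.diag(R))
    # Starting communalities: each test's largest absolute correlation with another test.
    h2 = np.abs(off_diag).max(axis=1)
    for _ in range(max_iter):
        reduced = off_diag + np.diag(h2)            # communalities on the diagonal
        eigvals, eigvecs = np.linalg.eigh(reduced)  # eigenvalues in ascending order
        loadings = np.sqrt(eigvals[-1]) * eigvecs[:, -1]
        loadings *= np.sign(loadings.sum())         # eigenvector sign is arbitrary
        new_h2 = loadings**2
        if np.max(np.abs(new_h2 - h2)) < tol:
            break
        h2 = new_h2
    return loadings

g = principal_axis_one_factor(R)
print(np.round(g, 3))
print("share of total variance:", round(float((g**2).mean()), 3))
```

For these six measures g accounts for roughly 63 percent of the total variance, above the typical 40 to 50 percent figure, which is unsurprising for a small set of highly intercorrelated school measures.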

Different tests in a test battery may correlate with (or "load onto") the g factor of the battery to different degrees. These correlations are known as g loadings. An individual test taker's g factor score, representing their relative standing on the g factor in the total group of individuals, can be estimated using the g loadings. Full-scale IQ scores from a test battery will usually be highly correlated with g factor scores, and they are often regarded as estimates of g. For example, the correlations between g factor scores and full-scale IQ scores from David Wechsler's tests have been found to be greater than .95.[1][12][15] The terms IQ, general intelligence, general cognitive ability, general mental ability, or simply intelligence are frequently used interchangeably to refer to the common core shared by cognitive tests.[2]
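One standard way to turn g loadings into an individual's g factor score is Bartlett's weighted least-squares method, which weights each standardized test by its loading divided by its unique variance. The sketch below is an illustration only: it assumes a one-factor model, takes the loadings from the g row of the school-subject table above, treats 1 − loading² as each test's unique variance, and scores a hypothetical student. Other scoring methods, such as the regression method, give somewhat different scores.

```python
import numpy as np

# g loadings from the bottom row of the school-subject table (Classics ... Music).
G_LOADINGS = np.array([0.958, 0.882, 0.803, 0.750, 0.673, 0.646])

def bartlett_g_score(z, loadings=G_LOADINGS):
    """Bartlett factor score for one person, assuming a single-factor model.

    z: the person's standardized scores, in the same order as `loadings`.
    """
    z = np.asarray(z, dtype=float)
    unique_var = 1.0 - loadings**2      # variance not accounted for by g
    weights = loadings / unique_var     # Psi^{-1} * lambda
    return float(weights @ z / (weights @ loadings))

# Hypothetical student: above average in languages, below average in music.
student = [1.2, 1.0, 0.8, 0.5, -0.2, -0.4]
print(round(bartlett_g_score(student), 2))
```

Because Classics has by far the smallest unique variance in this table, it dominates the weights, which shows how a score from a small battery can hinge on a single test.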

The g loadings of mental tests are always positive and usually range between .10 and .90, with a mean of about .60 and a standard deviation of about .15. Raven's Progressive Matrices is among the tests with the highest g loadings, around .80. Tests of vocabulary and general information are also typically found to have high g loadings.[16][17] However, the g loading of the same test may vary somewhat depending on the composition of the test battery.[18]

The complexity of tests and the demands they place on mental manipulation are related to the tests' g loadings. For example, in the forward digit span test the subject is asked to repeat a sequence of digits in the order of their presentation after hearing them once at a rate of one digit per second. The backward digit span test is otherwise the same except that the subject is asked to repeat the digits in the reverse order to that in which they were presented. The backward digit span test is more complex than the forward digit span test, and it has a significantly higher g loading. Similarly, the g loadings of arithmetic computation, spelling, and word reading tests are lower than those of arithmetic problem solving, text composition, and reading comprehension tests, respectively.[12][19]

Test difficulty and g loadings are distinct concepts that may or may not be empirically related in any specific situation. Tests that have the same difficulty level, as indexed by the proportion of test items that are failed by test takers, may exhibit a wide range of g loadings. For example, tests of rote memory have been shown to have the same level of difficulty but considerably lower g loadings than many tests that involve reasoning.[19][20]

Theories

While the existence of g as a statistical regularity is well-established and uncontroversial among experts, there is no consensus as to what causes the positive intercorrelations. Several explanations have been proposed.[21]

Mental energy or efficiency

Charles Spearman reasoned that correlations between tests reflected the influence of a common causal factor, a general mental ability that enters into performance on all kinds of mental tasks. However, he thought that the best indicators of g were those tests that reflected what he called the eduction of relations and correlates, which included abilities such as deduction, induction, problem solving, grasping relationships, inferring rules, and spotting differences and similarities. Spearman hypothesized that g was equivalent to "mental energy". However, this was more of a metaphorical explanation, and he remained agnostic about the physical basis of this energy, expecting that future research would uncover the exact physiological nature of g.[22]

Following Spearman, Arthur Jensen maintained that all mental tasks tap into g to some degree. According to Jensen, the g factor represents a "distillate" of scores on different tests rather than a summation or an average of such scores, with factor analysis acting as the distillation procedure.[17] He argued that g cannot be described in terms of the item characteristics or information content of tests, pointing out that very dissimilar mental tasks may have nearly equal g loadings. Wechsler similarly contended that g is not an ability at all but rather some general property of the brain. Jensen hypothesized that g corresponds to individual differences in the speed or efficiency of the neural processes associated with mental abilities.[23] He also suggested that given the associations between g and elementary cognitive tasks, it should be possible to construct a ratio scale test of g that uses time as the unit of measurement.[24]

Sampling theory

The so-called sampling theory of g, originally developed by Edward Thorndike and Godfrey Thomson, proposes that the existence of the positive manifold can be explained without reference to a unitary underlying capacity. According to this theory, there are a number of uncorrelated mental processes, and all tests draw upon different samples of these processes. The intercorrelations between tests are caused by an overlap between processes tapped by the tests.[25][26] Thus, the positive manifold arises due to a measurement problem, an inability to measure more fine-grained, presumably uncorrelated mental processes.[14]
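The sampling idea can be illustrated with a small simulation. Under the assumptions below (all numbers hypothetical), each simulated person has many mutually uncorrelated elementary processes, and each test score is simply the sum of a random subset of them. No general ability is built in, yet every pair of tests ends up positively correlated because the subsets overlap.

```python
import numpy as np

def simulate_sampling_model(n_people=5000, n_elements=200, n_tests=6,
                            elements_per_test=60, seed=0):
    """Correlations among tests that each sum a random sample of independent processes."""
    rng = np.random.default_rng(seed)
    # Hypothetical elementary processes: independent standard normal scores per person.
    elements = rng.standard_normal((n_people, n_elements))
    scores = np.empty((n_people, n_tests))
    for t in range(n_tests):
        # Each test samples its own subset of processes, without replacement.
        sampled = rng.choice(n_elements, size=elements_per_test, replace=False)
        scores[:, t] = elements[:, sampled].sum(axis=1)
    return np.corrcoef(scores, rowvar=False)

corr = simulate_sampling_model()
off_diagonal = corr[~np.eye(corr.shape[0], dtype=bool)]
print("smallest test intercorrelation:", round(float(off_diagonal.min()), 2))
```

In this setup the expected correlation between two tests is roughly the fraction of processes they share, about 60/200 = 0.3 on average.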

It has been shown that it is not possible to distinguish statistically between Spearman's model of g and the sampling model; both are equally able to account for intercorrelations among tests.[27] The sampling theory is also consistent with the observation that more complex mental tasks have higher g loadings, because more complex tasks are expected to involve a larger sampling of neural elements and therefore have more of them in common with other tasks.[28]

Some researchers have argued that the sampling model invalidates g as a psychological concept, because the model suggests that g factors derived from different test batteries simply reflect the shared elements of the particular tests contained in each battery rather than a g that is common to all tests. Similarly, high correlations between different batteries could be due to them measuring the same set of abilities rather than the same ability.[29]

Critics have argued that the sampling theory is incongruent with certain empirical findings. Based on the sampling theory, one might expect related cognitive tests to share many elements and thus to be highly correlated. However, some closely related tests, such as forward and backward digit span, are only modestly correlated, while some seemingly completely dissimilar tests, such as vocabulary tests and Raven's matrices, are consistently highly correlated. Another problematic finding is that brain damage frequently leads to specific cognitive impairments rather than the general impairment one might expect based on the sampling theory.[14][30]

Mutualism

The "mutualism" model of g proposes that cognitive processes are initially uncorrelated, but that the positive manifold arises during individual development due to mutually beneficial relations between cognitive processes. Thus there is no single process or capacity underlying the positive correlations between tests. During the course of development, the theory holds, any one particularly efficient process will benefit other processes, with the result that the processes will end up being correlated with one another. Thus similarly high IQs in different persons may stem from quite different initial advantages that they had.[14][31] Critics have argued that the observed correlations between the g loadings and the heritability coefficients of subtests are problematic for the mutualism theory.[32]
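Mutualism models are often written as coupled growth equations in which each process grows toward a person-specific limit and is boosted by the others. The sketch below assumes such a system settles at an equilibrium satisfying x = K + Mx, where K holds a person's independent limits and M holds hypothetical, uniform, positive coupling strengths. It skips the dynamics and solves directly for the equilibrium, showing that uncorrelated limits turn into positively correlated abilities.

```python
import numpy as np

def simulate_mutualism(n_people=5000, n_processes=6, coupling=0.1, seed=0):
    """Correlations of limits K and equilibrium abilities x solving x = K + M x."""
    rng = np.random.default_rng(seed)
    # Hypothetical person-specific limits, drawn independently for each process.
    K = rng.normal(loc=10.0, scale=2.0, size=(n_people, n_processes))
    # Uniform positive coupling between distinct processes (no self-coupling).
    M = coupling * (np.ones((n_processes, n_processes)) - np.eye(n_processes))
    # Equilibrium: (I - M) x = K for every person; needs the spectral radius of M < 1.
    X = np.linalg.solve(np.eye(n_processes) - M, K.T).T
    return np.corrcoef(K, rowvar=False), np.corrcoef(X, rowvar=False)

corr_K, corr_X = simulate_mutualism()
mask = ~np.eye(6, dtype=bool)
print("mean correlation of limits:   ", round(float(corr_K[mask].mean()), 2))
print("mean correlation of abilities:", round(float(corr_X[mask].mean()), 2))
```

With six processes and a coupling of 0.1, the equilibrium abilities should correlate at roughly .4 even though the limits are essentially uncorrelated.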

Factor structure of cognitive abilities


Factor analysis is a family of mathematical techniques that can be used to represent correlations between intelligence tests in terms of a smaller number of variables known as factors. The purpose is to simplify the correlation matrix by using hypothetical underlying factors to explain the patterns in it. When all correlations in a matrix are positive, as they are in the case of IQ, factor analysis will yield a general factor common to all tests. The general factor of IQ tests is referred to as the g factor, and it typically accounts for 40 to 50 percent of the variance in IQ test batteries.[33] The presence of correlations between many widely varying cognitive tests has often been taken as evidence for the existence of g, but McFarland (2012) showed that such correlations do not provide any more or less support for the existence of g than for the existence of multiple factors of intelligence.[34]
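For standardized tests, the share of total variance attributable to g is the sum of the squared g loadings divided by the number of tests. Applying this arithmetic to the g row of the eleven-subtest Wechsler table above gives a value inside the 40 to 50 percent range; the function name is illustrative.

```python
# g loadings from the bottom row of the Wechsler subtest table (V ... DS).
wechsler_g = [0.83, 0.80, 0.80, 0.75, 0.70, 0.70, 0.68, 0.68, 0.56, 0.56, 0.48]

def g_variance_share(loadings):
    """Proportion of total standardized variance accounted for by g."""
    return sum(x * x for x in loadings) / len(loadings)

print(round(g_variance_share(wechsler_g), 3))  # 0.481
```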

Charles Spearman developed factor analysis in order to study correlations between tests. Initially, he developed a model of intelligence in which variations in all intelligence test scores are explained by only two kinds of variables: first, factors that are specific to each test (denoted s); and second, a g factor that accounts for the positive correlations across tests. This is known as Spearman's two-factor theory. Later research based on more diverse test batteries than those used by Spearman demonstrated that g alone could not account for all correlations between tests. Specifically, it was found that even after controlling for g, some tests were still correlated with each other. This led to the postulation of group factors that represent variance that groups of tests with similar task demands (e.g., verbal, spatial, or numerical) have in common in addition to the shared g variance.[35]
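Spearman's two-factor model has a testable consequence: if each correlation is the product of the two tests' g loadings (r_ij = g_i g_j), then every tetrad difference such as r_ij r_kl − r_ik r_jl is zero in the population. The sketch below computes all tetrad differences for the six school-subject correlations tabulated above; small values are consistent with a single common factor, though sampling error means they are never exactly zero in real data. The function name is illustrative.

```python
from itertools import combinations

import numpy as np

# Correlations among six school-performance measures (table above).
R = np.array([
    [1.00, 0.83, 0.78, 0.70, 0.66, 0.63],  # Classics
    [0.83, 1.00, 0.67, 0.67, 0.65, 0.57],  # French
    [0.78, 0.67, 1.00, 0.64, 0.54, 0.51],  # English
    [0.70, 0.67, 0.64, 1.00, 0.45, 0.51],  # Math
    [0.66, 0.65, 0.54, 0.45, 1.00, 0.40],  # Pitch discrimination
    [0.63, 0.57, 0.51, 0.51, 0.40, 1.00],  # Music
])

def tetrad_differences(R):
    """The three tetrad differences for every set of four tests."""
    result = {}
    for i, j, k, l in combinations(range(R.shape[0]), 4):
        result[(i, j, k, l)] = (
            R[i, j] * R[k, l] - R[i, k] * R[j, l],
            R[i, j] * R[k, l] - R[i, l] * R[j, k],
            R[i, k] * R[j, l] - R[i, l] * R[j, k],
        )
    return result

tetrads = tetrad_differences(R)
largest = max(abs(t) for triple in tetrads.values() for t in triple)
print("Classics/French/English/Math:", np.round(tetrads[(0, 1, 2, 3)], 4))
print("largest absolute tetrad difference:", round(largest, 3))
```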

Through factor rotation, it is, in principle, possible to produce an infinite number of different factor solutions that are mathematically equivalent in their ability to account for the intercorrelations among cognitive tests. These include solutions that do not contain a g factor. Thus factor analysis alone cannot establish what the underlying structure of intelligence is. In choosing between different factor solutions, researchers have to examine the results of factor analysis together with other information about the structure of cognitive abilities.[36]
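The rotational indeterminacy can be demonstrated with a hypothetical two-factor loading matrix in which one factor loads .7 on all six tests (a general factor) and the other is bipolar. Rotating the axes by 45 degrees yields two group-like factors and no general factor, yet both solutions reproduce exactly the same correlations, since the reproduced matrix is the loading matrix times its transpose. The loadings and the `rotate` helper are illustrative.

```python
import numpy as np

# Hypothetical loadings: a general factor (.7 everywhere) plus a bipolar factor.
L = np.array([[0.7, 0.3]] * 3 + [[0.7, -0.3]] * 3)

def rotate(loadings, degrees):
    """Orthogonal rotation of a two-column loading matrix."""
    theta = np.deg2rad(degrees)
    T = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta),  np.cos(theta)]])
    return loadings @ T

L_rot = rotate(L, 45)
# Same reproduced correlations (communalities on the diagonal) for both solutions.
print(np.allclose(L @ L.T, L_rot @ L_rot.T))  # True
print(np.round(L_rot, 2))
```

After rotation the first factor loads about .71 on the first three tests and only about .28 on the last three, with the pattern reversed for the second factor, so neither factor is general.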

There are many psychologically relevant reasons for preferring factor solutions that contain a g factor. These include the existence of the positive manifold, the fact that certain kinds of tests (generally the more complex ones) have consistently larger g loadings, the substantial invariance of g factors across different test batteries, the impossibility of constructing test batteries that do not yield a g factor, and the widespread practical validity of g as a predictor of individual outcomes. The g factor, together with group factors, best represents the empirically established fact that, on average, overall ability differences between individuals are greater than differences among abilities within individuals, while a factor solution with orthogonal factors without g obscures this fact. Moreover, g appears to be the most heritable component of intelligence.[37] Research utilizing the techniques of confirmatory factor analysis has also provided support for the existence of g.[36]

A g factor can be computed from a correlation matrix of test results using several different methods. These include exploratory factor analysis, principal components analysis (PCA), and confirmatory factor analysis. Different factor-extraction methods produce highly consistent results, although PCA has sometimes been found to produce inflated estimates of the influence of g on test scores.[18][38]
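The tendency of PCA to inflate g can be seen by comparing the first principal component with a one-factor principal axis solution on the school-subject correlations tabulated above. PCA keeps 1s on the diagonal, so each test's unique variance is folded into the component, whereas principal axis factoring replaces the diagonal with estimated communalities. Both helper functions are illustrative sketches, not a standard library API.

```python
import numpy as np

R = np.array([
    [1.00, 0.83, 0.78, 0.70, 0.66, 0.63],  # Classics
    [0.83, 1.00, 0.67, 0.67, 0.65, 0.57],  # French
    [0.78, 0.67, 1.00, 0.64, 0.54, 0.51],  # English
    [0.70, 0.67, 0.64, 1.00, 0.45, 0.51],  # Math
    [0.66, 0.65, 0.54, 0.45, 1.00, 0.40],  # Pitch discrimination
    [0.63, 0.57, 0.51, 0.51, 0.40, 1.00],  # Music
])

def first_principal_component(R):
    """Loadings on the first principal component (1s left on the diagonal)."""
    eigvals, eigvecs = np.linalg.eigh(R)
    loadings = np.sqrt(eigvals[-1]) * eigvecs[:, -1]
    return loadings * np.sign(loadings.sum())

def principal_axis_one_factor(R, max_iter=5000, tol=1e-10):
    """One-factor principal axis factoring with iterated communalities."""
    off_diag = R - np.diag(np.diag(R))
    h2 = np.abs(off_diag).max(axis=1)
    for _ in range(max_iter):
        eigvals, eigvecs = np.linalg.eigh(off_diag + np.diag(h2))
        loadings = np.sqrt(eigvals[-1]) * eigvecs[:, -1]
        loadings *= np.sign(loadings.sum())
        new_h2 = loadings**2
        if np.max(np.abs(new_h2 - h2)) < tol:
            break
        h2 = new_h2
    return loadings

pca = first_principal_component(R)
paf = principal_axis_one_factor(R)
print("PCA:", np.round(pca, 3), "share:", round(float((pca**2).mean()), 3))
print("PAF:", np.round(paf, 3), "share:", round(float((paf**2).mean()), 3))
```

The PCA share is necessarily larger here: the correlation matrix equals the reduced matrix plus a nonnegative diagonal of unique variances, so its largest eigenvalue cannot be smaller.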

There is a broad contemporary consensus that cognitive variance between people can be conceptualized at three hierarchical levels, distinguished by their degree of generality. At the lowest, least general level there are many narrow first-order factors; at a higher level, there are a relatively small number – somewhere between five and ten – of broad (i.e., more general) second-order factors (or group factors); and at the apex, there is a single third-order factor, g, the general factor common to all tests.[39][40][41] The g factor usually accounts for the majority of the total common factor variance of IQ test batteries.[42] Contemporary hierarchical models of intelligence include the three stratum theory and the Cattell–Horn–Carroll theory.[43]

"Indifference of the indicator"

Spearman proposed the principle of the indifference of the indicator, according to which the precise content of intelligence tests is unimportant for the purposes of identifying g, because g enters into performance on all kinds of tests. Any test can therefore be used as an indicator of g.[44] Following Spearman, Arthur Jensen more recently argued that a g factor extracted from one test battery will always be the same, within the limits of measurement error, as that extracted from another battery, provided that the batteries are large and diverse.[45] According to this view, every mental test, no matter how distinctive, calls on g to some extent. Thus a composite score of a number of different tests will load onto g more strongly than any of the individual test scores, because the g components cumulate into the composite score, while the uncorrelated non-g components will cancel each other out. Theoretically, the composite score of an infinitely large, diverse test battery would, then, be a perfect measure of g.[46]
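The cumulation argument can be made concrete under a simplifying assumption: k standardized tests each have the same g loading, and their non-g parts are uncorrelated with one another. The correlation of their unweighted sum with g then rises toward 1 as k grows. Real batteries also contain group factors, whose shared variance does not cancel in this way, so the sketch overstates the gain; the function name is illustrative.

```python
import math

def composite_g_loading(loading: float, n_tests: int) -> float:
    """g loading of an unweighted sum of n_tests standardized tests, assuming
    equal g loadings and mutually uncorrelated non-g parts."""
    # Cov(composite, g) = n * loading
    # Var(composite)    = n^2 * loading^2 + n * (1 - loading^2)
    return n_tests * loading / math.sqrt(n_tests**2 * loading**2 + n_tests * (1 - loading**2))

for k in (1, 3, 10, 30, 100):
    print(k, round(composite_g_loading(0.6, k), 3))
```

With a typical single-test loading of .60, a composite of ten such tests would load about .92 on g under these assumptions.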

In contrast, L. L. Thurstone argued that a g factor extracted from a test battery reflects the average of all the abilities called for by the particular battery, and that g therefore varies from one battery to another and "has no fundamental psychological significance."[47] Along similar lines, John Horn argued that g factors are meaningless because they are not invariant across test batteries, maintaining that correlations between different ability measures arise because it is difficult to define a human action that depends on just one ability.[48][49]

To show that different batteries reflect the same g, one must administer several test batteries to the same individuals, extract g factors from each battery, and show that the factors are highly correlated. This can be done within a confirmatory factor analysis framework.[21] Wendy Johnson and colleagues have published two such studies.[50][51] The first found that the correlations between g factors extracted from three different batteries were .99, .99, and 1.00, supporting the hypothesis that g factors from different batteries are the same and that the identification of g is not dependent on the specific abilities assessed. The second study found that g factors derived from four of five test batteries correlated between .95 and 1.00, while the correlations ranged from .79 to .96 for the fifth battery, the Cattell Culture Fair Intelligence Test (CFIT). They attributed the somewhat lower correlations with the CFIT battery to its lack of content diversity, since it contains only matrix-type items, and interpreted the findings as supporting the contention that g factors derived from different test batteries are the same provided that the batteries are diverse enough. The results suggest that the same g can be consistently identified from different test batteries.[39][52] This approach has been criticized by psychologist Lazar Stankov in the Handbook of Understanding and Measuring Intelligence, who concluded: "Correlations between the g factors from different test batteries are not unity."[53]

A study by Scott Barry Kaufman and colleagues showed that the general factor extracted from the Woodcock-Johnson cognitive abilities tests and the general factor extracted from the achievement test batteries are highly correlated, but not isomorphic.[54]

Population distribution

The form of the population distribution of g is unknown, because g cannot be measured on a ratio scale[clarification needed]. (The distributions of scores on typical IQ tests are roughly normal, but this is achieved by construction, i.e., by normalizing the raw scores.) It has been argued[who?] that there are nevertheless good reasons for supposing that g is normally distributed in the general population, at least within a range of ±2 standard deviations from the mean. In particular, g can be thought of as a composite variable that reflects the additive effects of many independent genetic and environmental influences, and such a variable should, according to the central limit theorem, follow a normal distribution.[55]
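The central-limit argument can be illustrated by simulation, under the assumption (which is the very point at issue) that g is a sum of many small, independent, additive influences. Each hypothetical influence below is a strongly skewed 0/1 variable, yet their total is close to symmetric. All numbers are illustrative.

```python
import numpy as np

def skewness(values):
    """Sample skewness: third central moment over the 1.5 power of the second."""
    d = np.asarray(values, dtype=float)
    d = d - d.mean()
    return float((d**3).mean() / (d**2).mean() ** 1.5)

rng = np.random.default_rng(0)
n_people, n_influences, p = 20_000, 200, 0.2
# Each hypothetical influence is present (1) with probability p, otherwise absent (0).
influences = rng.random((n_people, n_influences)) < p
single = influences[:, 0]
total = influences.sum(axis=1)   # additive composite of all influences
print("skewness of one influence:", round(skewness(single), 2))
print("skewness of the sum:      ", round(skewness(total), 2))
```

A 0/1 variable with p = 0.2 has skewness 1.5; the sum of 200 independent copies has skewness 1.5/√200, or about 0.1. The simulation says nothing about whether the additivity and independence assumptions hold for real influences on g.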

Spearman's law of diminishing returns

A number of researchers have suggested that the proportion of variation accounted for by g may not be uniform across all subgroups within a population. Spearman's law of diminishing returns (SLODR), also termed the cognitive ability differentiation hypothesis, predicts that the positive correlations among different cognitive abilities are weaker among more intelligent subgroups of individuals. More specifically, SLODR predicts that the g factor will account for a smaller proportion of individual differences in cognitive test scores at higher scores on the g factor.

SLODR was originally proposed in 1927 by Charles Spearman,[56] who reported that the average correlation between 12 cognitive ability tests was .466 in 78 normal children and .782 in 22 "defective" children. Detterman and Daniel rediscovered this phenomenon in 1989.[57] They reported that for subtests of both the WAIS and the WISC, subtest intercorrelations decreased monotonically with ability group, with the average intercorrelation ranging from approximately .7 among individuals with IQs less than 78 to .4 among individuals with IQs greater than 122.[58]

SLODR has been replicated in a variety of child and adult samples who have been measured using broad arrays of cognitive tests. The most common approach has been to divide individuals into multiple ability groups using an observable proxy for their general intellectual ability, and then either to compare the average intercorrelation among the subtests across groups or to compare the proportion of variation accounted for by a single common factor in each group.[59] However, as both Deary et al. (1996)[59] and Tucker-Drob (2009)[60] have pointed out, dividing the continuous distribution of intelligence into an arbitrary number of discrete ability groups is less than ideal for examining SLODR. Tucker-Drob (2009)[60] extensively reviewed the literature on SLODR and the various methods by which it had previously been tested, and proposed that SLODR could be most appropriately captured by fitting a common factor model that allows the relations between the factor and its indicators to be nonlinear. He applied such a factor model to nationally representative data on children and adults in the United States and found consistent evidence for SLODR. For example, Tucker-Drob (2009) found that a general factor accounted for approximately 75% of the variation in seven different cognitive abilities among very low IQ adults, but only approximately 30% of the variation among very high IQ adults.

A recent meta-analytic study by Blum and Holling[61] also provided support for the differentiation hypothesis. Unlike most research on the topic, this work made it possible to study ability and age as continuous predictors of g saturation, rather than only comparing lower- vs. higher-skilled or younger vs. older groups of test takers. The results show that the mean correlation and g loadings of cognitive ability tests decrease with increasing ability, yet increase with respondent age. SLODR, as described by Charles Spearman, was confirmed by a decrease in g saturation as a function of IQ as well as an increase in g saturation from middle age to senescence. Specifically, for samples with a mean intelligence two standard deviations (i.e., 30 IQ points) higher, the expected mean correlation is lower by approximately .15. The question remains whether a difference of this magnitude could result in greater apparent factorial complexity when cognitive data are factored for the higher-ability sample than for the lower-ability sample. Greater factor dimensionality seems likely to be observed at higher ability, but the magnitude of this effect (i.e., how much more likely and how many more factors) remains uncertain.

Practical validity

The extent of the practical validity of g as a predictor of educational, economic, and social outcomes is the subject of ongoing debate.[62] Some researchers have argued that it is more far-ranging and universal than any other known psychological variable,[63] and that the validity of g increases as the complexity of the measured task increases.[64][65] Others have argued that tests of specific abilities outperform the g factor in analyses fitted to certain real-world situations.[66][67][68]

A test's practical validity is measured by its correlation with performance on some criterion external to the test, such as college grade-point average, or a rating of job performance. The correlation between test scores and a measure of some criterion is called the validity coefficient. One way to interpret a validity coefficient is to square it to obtain the variance accounted for by the test. For example, a validity coefficient of .30 corresponds to 9 percent of variance explained. This approach has, however, been criticized as misleading and uninformative, and several alternatives have been proposed. One arguably more interpretable approach is to look at the percentage of test takers in each test score quintile who meet some agreed-upon standard of success. For example, if the correlation between test scores and performance is .30, the expectation is that 67 percent of those in the top quintile will be above-average performers, compared to 33 percent of those in the bottom quintile.[69][70]
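Both interpretations can be checked numerically under the assumption that test scores and the criterion are bivariate normal. The sketch squares a validity coefficient and then simulates a large hypothetical population to count how many people in the top and bottom test-score quintiles fall above the criterion median; with r = .30 the rates should come out near 67 and 33 percent. The function names are illustrative.

```python
import numpy as np

def variance_explained(validity: float) -> float:
    """Squared validity coefficient: proportion of criterion variance 'explained'."""
    return validity ** 2

def quintile_success_rates(validity, n=1_000_000, seed=0):
    """Share of top- and bottom-quintile scorers above the criterion median,
    assuming bivariate normal test and criterion scores."""
    rng = np.random.default_rng(seed)
    test = rng.standard_normal(n)
    criterion = validity * test + np.sqrt(1 - validity**2) * rng.standard_normal(n)
    low_cut, high_cut = np.quantile(test, [0.2, 0.8])
    above_average = criterion > np.median(criterion)
    top = above_average[test >= high_cut].mean()
    bottom = above_average[test <= low_cut].mean()
    return float(top), float(bottom)

print(round(variance_explained(0.30), 2))  # 0.09
top, bottom = quintile_success_rates(0.30)
print(f"top quintile: {top:.0%}, bottom quintile: {bottom:.0%}")
```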

Academic achievement

The predictive validity of g is most conspicuous in the domain of scholastic performance. This is apparently because g is closely linked to the ability to learn novel material and understand concepts and meanings.[64]

In elementary school, the correlation between IQ and grades and achievement scores is between .60 and .70. At more advanced educational levels, more students from the lower end of the IQ distribution drop out, which restricts the range of IQs and results in lower validity coefficients. In high school, college, and graduate school the validity coefficients are .50–.60, .40–.50, and .30–.40, respectively. The g loadings of IQ scores are high, but it is possible that some of the validity of IQ in predicting scholastic achievement is attributable to factors measured by IQ independent of g. According to research by Robert L. Thorndike, 80 to 90 percent of the predictable variance in scholastic performance is due to g, with the rest attributed to non-g factors measured by IQ and other tests.[71]

Achievement test scores are more highly correlated with IQ than school grades. This may be because grades are more influenced by the teacher's idiosyncratic perceptions of the student.[72] In a longitudinal English study, g scores measured at age 11 correlated with all 25 subject tests of the national GCSE examination taken at age 16. The correlations ranged from .77 for the mathematics test to .42 for the art test. The correlation between g and a general educational factor computed from the GCSE tests was .81.[73]

Research suggests that the SAT, widely used in college admissions, is primarily a measure of g. A correlation of .82 has been found between g scores computed from an IQ test battery and SAT scores. In a study of 165,000 students at 41 U.S. colleges, SAT scores were found to be correlated at .47 with first-year college grade-point average after correcting for range restriction in SAT scores (the correlation rises to .55 when course difficulty is held constant, i.e., if all students attended the same set of classes).[69][74]
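How selection lowers validity coefficients, and how a correction can undo it, can be sketched by simulation. The exact correction used in the SAT study is not described here; as an assumption, the sketch uses Thorndike's Case II formula for direct selection on the predictor. It simulates a population with a hypothetical true correlation of .50, keeps only people above a hypothetical cutoff on the test, and shows the observed correlation dropping among those selected and the correction approximately recovering the population value.

```python
import numpy as np

def correct_for_range_restriction(r_restricted, sd_unrestricted, sd_restricted):
    """Thorndike's Case II correction for direct selection on the predictor."""
    u = sd_unrestricted / sd_restricted
    return r_restricted * u / np.sqrt(1 - r_restricted**2 + (r_restricted * u) ** 2)

rng = np.random.default_rng(0)
n, true_r = 200_000, 0.5
test = rng.standard_normal(n)
grades = true_r * test + np.sqrt(1 - true_r**2) * rng.standard_normal(n)

admitted = test > 0.5   # hypothetical admission cutoff on the test
r_observed = np.corrcoef(test[admitted], grades[admitted])[0, 1]
r_corrected = correct_for_range_restriction(r_observed, test.std(), test[admitted].std())
print(f"observed among admitted: {r_observed:.2f}, corrected: {r_corrected:.2f}")
```

The formula assumes a linear, homoscedastic relation and selection made directly on the test; real admissions also select on grades and other factors, which this sketch ignores.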

Job attainment

There is a high correlation of .90 to .95 between the prestige rankings of occupations, as rated by the general population, and the average general intelligence scores of people employed in each occupation. At the level of individual employees, the association between job prestige and g is lower – one large U.S. study reported a correlation of .65 (.72 corrected for attenuation). Mean level of g thus increases with perceived job prestige. It has also been found that the dispersion of general intelligence scores is smaller in more prestigious occupations than in lower level occupations, suggesting that higher level occupations have minimum g requirements.[75][76]
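A correction for attenuation divides an observed correlation by the square root of the product of the two measures' reliabilities. The reliabilities behind the .65 to .72 correction are not given here; the sketch only back-calculates the reliability product that would produce that change, so the value it prints is inferred, not reported.

```python
import math

def disattenuate(r_observed: float, reliability_x: float, reliability_y: float) -> float:
    """Spearman's correction for attenuation."""
    return r_observed / math.sqrt(reliability_x * reliability_y)

# Product of reliabilities that would turn .65 into .72 (back-calculated, not reported).
implied_product = (0.65 / 0.72) ** 2
print(round(implied_product, 3))                           # 0.815
print(round(disattenuate(0.65, implied_product, 1.0), 2))  # 0.72
```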

Job performance

Research indicates that tests of g are the best single predictors of job performance, with an average validity coefficient of .55 across several meta-analyses of studies based on supervisor ratings and job samples. The average meta-analytic validity coefficient for performance in job training is .63.[77] The validity of g in the highest complexity jobs (professional, scientific, and upper management jobs) has been found to be greater than in the lowest complexity jobs, but g has predictive validity even for the simplest jobs. Research also shows that specific aptitude tests tailored for each job provide little or no increase in predictive validity over tests of general intelligence. It is believed that g affects job performance mainly by facilitating the acquisition of job-related knowledge. The predictive validity of g is greater than that of work experience, and increased experience on the job does not decrease the validity of g.[64][75]

In a 2011 meta-analysis, researchers found that general cognitive ability (GCA) predicted job performance better than personality (the five-factor model) and three streams of emotional intelligence. They examined the relative importance of these constructs in predicting job performance and found that cognitive ability explained most of the variance in job performance.[78] Other studies have suggested that GCA and emotional intelligence make linear, independent, and complementary contributions to job performance. Côté and Miners (2015)[79] found that the two constructs interact in predicting two aspects of job performance: organisational citizenship behaviour (OCB) and task performance. Emotional intelligence is a better predictor of task performance and OCB when GCA is low, and vice versa. For instance, an employee with low GCA may compensate in task performance and OCB if their emotional intelligence is high.

Although these compensatory effects favour emotional intelligence, GCA remains the best predictor of job performance. Several researchers have studied the correlation between GCA and job performance across different job positions. For instance, Ghiselli (1973)[80] found that the correlation was higher for salespersons than for sales clerks. For salespersons, the correlations were 0.61 for GCA, 0.40 for perceptual ability, and 0.29 for psychomotor abilities, whereas for sales clerks they were 0.27 for GCA, 0.22 for perceptual ability, and 0.17 for psychomotor abilities.[81] Other studies compared the GCA-job performance correlation across jobs of different complexity. Hunter and Hunter (1984)[82] conducted a meta-analysis of over 400 studies and found that this correlation was highest for jobs of high complexity (0.57), followed by jobs of medium complexity (0.51) and low complexity (0.38).

Job performance can be measured with objective performance measures or with subjective ratings. Although objective measures are considered better than subjective ratings, most studies of job performance and GCA have been based on supervisor performance ratings. This criterion is considered problematic and unreliable, mainly because it is difficult to define what counts as good or bad performance. Supervisors' ratings tend to be subjective and inconsistent across employees.[83] Additionally, supervisor ratings of job performance are influenced by various factors, such as the halo effect,[84] facial attractiveness,[85] racial or ethnic bias, and the height of employees.[86] However, in their study of sales employees, Vinchur, Schippmann, Switzer and Roth (1998)[81] found that objective sales performance had a correlation of only 0.04 with GCA, while supervisor performance ratings had a correlation of 0.40. These findings were surprising, given that objective sales figures would be expected to be the main criterion for assessing these employees.

In trying to understand how GCA is associated with job performance, several researchers have concluded that GCA affects the acquisition of job knowledge, which in turn improves job performance. In other words, people high in GCA are able to learn faster and to acquire job knowledge more easily, which allows them to perform better. Conversely, an inability to acquire job knowledge, stemming from low GCA, directly impairs job performance. GCA also has a direct effect on job performance: on a daily basis, employees constantly face challenges and problem-solving tasks whose success depends on their GCA. These findings are discouraging for government agencies charged with protecting workers' rights.[87] Because GCA correlates highly with job performance, companies hire employees based on GCA test scores. This practice inevitably denies employment opportunities to many people with low GCA.[88] Researchers have also found significant differences in GCA between racial and ethnic groups. For instance, there is debate over whether studies were biased against African Americans, who scored significantly lower than white Americans on GCA tests.[89]

However, findings on the GCA-job performance correlation must be interpreted with caution. Some researchers have warned of statistical artifacts related to measures of job performance and GCA test scores. For example, Viswesvaran, Ones and Schmidt (1996)[90] argued that it is virtually impossible to obtain perfect measures of job performance free of methodological error. Moreover, studies of GCA and job performance are always susceptible to range restriction, because data are gathered mostly from current employees and exclude applicants who were not hired. The sample therefore consists of employees who successfully passed a hiring process that included measures of GCA.[91]
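Range restriction lowers observed correlations because selection removes the low end of the predictor's distribution. One frequently used correction, Thorndike's Case II formula, is sketched below. It is only one of several available corrections. It assumes that selection was made directly on the GCA scores and that the relationship is linear and homoscedastic. The standard deviations are hypothetical.

```python
import math


def correct_range_restriction(r_restricted: float, sd_unrestricted: float, sd_restricted: float) -> float:
    """Thorndike's Case II correction for direct range restriction on the
    predictor: estimate the correlation in the full applicant pool from the
    correlation observed among selected employees."""
    u = sd_unrestricted / sd_restricted  # > 1 when selection narrowed the range
    return r_restricted * u / math.sqrt(1 + r_restricted**2 * (u**2 - 1))


# Hypothetical values: r = .30 among hires, whose GCA standard deviation is
# two-thirds of the applicant pool's (10 vs. 15 IQ points).
print(round(correct_range_restriction(0.30, 15.0, 10.0), 2))  # 0.43
```

When the two standard deviations are equal (u = 1), the formula returns the observed correlation unchanged. The stronger the selection, the larger the upward adjustment.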

Income

The correlation between income and g, as measured by IQ scores, averages about .40 across studies. The correlation is higher at higher levels of education and it increases with age, stabilizing when people reach their highest career potential in middle age. Even when education, occupation and socioeconomic background are held constant, the correlation does not vanish.[92]

Other correlates

The g factor is reflected in many social outcomes. Many social behavior problems, such as dropping out of school, chronic welfare dependency, accident proneness, and crime, are negatively correlated with g independent of social class of origin.[93] Health and mortality outcomes are also linked to g, with higher childhood test scores predicting better health and mortality outcomes in adulthood (see Cognitive epidemiology).[94]

In 2004, psychologist Satoshi Kanazawa argued that g was a domain-specific, species-typical, information-processing psychological adaptation.[95] In 2010, Kanazawa argued that g correlated only with performance on evolutionarily unfamiliar rather than evolutionarily familiar problems, proposing what he termed the "Savanna-IQ interaction hypothesis".[96][97] In 2006, Psychological Review published a comment on Kanazawa's 2004 article by psychologists Denny Borsboom and Conor Dolan. They argued that Kanazawa's conception of g was empirically unsupported and purely hypothetical, and that an evolutionary account of g must address it as a source of individual differences.[98] In response to Kanazawa's 2010 article, psychologists Scott Barry Kaufman, Colin G. DeYoung, Deirdre Reis, and Jeremy R. Gray published a 2011 study in Intelligence. In it, 112 subjects took a 70-item computer version of the Wason selection task (a logic puzzle) set in a social relations context, as proposed by evolutionary psychologists Leda Cosmides and John Tooby in The Adapted Mind.[99] The authors found instead that "performance on non-arbitrary, evolutionarily familiar problems is more strongly related to general intelligence than performance on arbitrary, evolutionarily novel problems".[100][101]

Genetic and environmental determinants

Heritability is the proportion of phenotypic variance in a trait in a population that can be attributed to genetic factors. The heritability of g has been estimated to fall between 40 and 80 percent using twin, adoption, and other family study designs as well as molecular genetic methods. Estimates based on the totality of evidence place the heritability of g at about 50%.[102] It has been found to increase linearly with age. For example, a large study involving more than 11,000 pairs of twins from four countries reported the heritability of g to be 41 percent at age nine, 55 percent at age twelve, and 66 percent at age seventeen. Other studies have estimated that the heritability is as high as 80 percent in adulthood, although it may decline in old age. Most of the research on the heritability of g has been conducted in the United States and Western Europe, but studies in Russia (Moscow), the former East Germany, Japan, and rural India have yielded similar estimates of heritability as Western studies.[39][103][104][105]
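Twin designs infer heritability from how much more alike identical twins are than fraternal twins. The simplest version of this reasoning is Falconer's formula, sketched below with hypothetical twin correlations. The studies cited above generally fit more elaborate structural equation models. This is therefore an illustration of the underlying logic, not a reproduction of their method.

```python
def falconer_ace(r_mz: float, r_dz: float) -> dict[str, float]:
    """Rough ACE variance decomposition from identical (MZ) and fraternal (DZ)
    twin correlations, using Falconer's formulas. Assumes purely additive
    genetic effects, equal environments for MZ and DZ pairs, and random mating."""
    a2 = 2 * (r_mz - r_dz)  # A: additive genetic share, i.e. heritability
    c2 = 2 * r_dz - r_mz    # C: shared (between-family) environment
    e2 = 1 - r_mz           # E: non-shared environment plus measurement error
    return {"A": a2, "C": c2, "E": e2}


# Hypothetical twin correlations for a g composite.
estimates = falconer_ace(r_mz=0.80, r_dz=0.50)
print({k: round(v, 2) for k, v in estimates.items()})  # {'A': 0.6, 'C': 0.2, 'E': 0.2}
```

By construction, the three shares sum to 1. A negative C estimate can arise from sampling error or non-additive genetic effects, which is a known limitation of this shortcut.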

As with heritability in general, the heritability of g must be understood in reference to a specific population at a specific place and time. Findings for one population do not necessarily apply to a different population that is exposed to different environmental factors.[106] A population that is exposed to strong environmental factors can be expected to have a lower level of heritability than a population that is exposed to only weak environmental factors. For example, one twin study found that genotype differences almost completely explain the variance in IQ scores within affluent families, but make close to zero contribution towards explaining IQ score differences in impoverished families.[107] Notably, heritability findings refer only to variation within a population and do not support a genetic explanation for differences between groups.[108] It is theoretically possible for the difference between the average g of two groups to be 100% due to environmental factors even if the variance within each group is 100% heritable.
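The last point can be made concrete with a toy model. Each person's score is a genetic value plus an environmental effect that is identical for everyone in the same group. All names and numbers below are hypothetical. The model is deliberately extreme to show the logic, not to describe any real population.

```python
from statistics import mean, pvariance

# Hypothetical genetic values: the SAME distribution in both groups.
genotypes = [90.0, 95.0, 100.0, 105.0, 110.0]


def phenotypes(genetic_values: list[float], environment_effect: float) -> list[float]:
    """Every member of a group receives the same environmental effect,
    so all variation within the group comes from genetic values."""
    return [g + environment_effect for g in genetic_values]


def within_group_heritability(genetic_values: list[float], scores: list[float]) -> float:
    """Share of within-group score variance attributable to genetic values."""
    return pvariance(genetic_values) / pvariance(scores)


group_a = phenotypes(genotypes, environment_effect=0.0)
group_b = phenotypes(genotypes, environment_effect=-10.0)  # a uniformly poorer environment

print(within_group_heritability(genotypes, group_a))  # 1.0
print(within_group_heritability(genotypes, group_b))  # 1.0
print(mean(group_a) - mean(group_b))                  # 10.0, all of it environmental
```

Both groups show 100 percent within-group heritability. However, the 10-point gap between them comes entirely from the environmental offset, since their genetic distributions are identical.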

Behavioral genetic research has also established that the shared (or between-family) environmental effects on g are strong in childhood, but decline thereafter and are negligible in adulthood. This indicates that the environmental effects that are important to the development of g are unique and not shared between members of the same family.[104]

The genetic correlation is a statistic that indicates the extent to which the same genetic effects influence two different traits. If the genetic correlation between two traits is zero, the genetic effects on them are independent, whereas a correlation of 1.0 means that the same set of genes explains the heritability of both traits (regardless of how high or low the heritability of each is). Genetic correlations between specific mental abilities (such as verbal ability and spatial ability) have been consistently found to be very high, close to 1.0. This indicates that genetic variation in cognitive abilities is almost entirely due to genetic variation in whatever g is. It also suggests that what is common among cognitive abilities is largely caused by genes, and that independence among abilities is largely due to environmental effects. Thus it has been argued that when genes for intelligence are identified, they will be "generalist genes", each affecting many different cognitive abilities.[104][109][110]
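The definition above can be written as r_g = cov_A(x, y) / sqrt(V_A(x) V_A(y)). Here V_A is the additive genetic variance of each trait and cov_A is their additive genetic covariance. In practice, these components are estimated from twin or molecular data. Here they are supplied as hypothetical numbers to show that r_g is separate from how large each heritability is.

```python
import math


def genetic_correlation(cov_a_xy: float, var_a_x: float, var_a_y: float) -> float:
    """Genetic correlation: additive genetic covariance between traits x and y,
    scaled by the square roots of their additive genetic variances."""
    if var_a_x <= 0 or var_a_y <= 0:
        raise ValueError("genetic variances must be positive")
    return cov_a_xy / math.sqrt(var_a_x * var_a_y)


# Hypothetical variance components on a standardized phenotypic scale.
# Trait x is only weakly heritable (.10) and trait y strongly (.40), yet the
# same genetic effects drive both, so r_g comes out at 1.
print(round(genetic_correlation(cov_a_xy=0.20, var_a_x=0.10, var_a_y=0.40), 3))  # 1.0
```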

Much research points to g being a highly polygenic trait influenced by many common genetic variants, each having only small effects. Another possibility is that heritable differences in g are due to individuals having different "loads" of rare, deleterious mutations, with genetic variation among individuals persisting due to mutation–selection balance.[110][111]

A number of candidate genes have been reported to be associated with intelligence differences, but the effect sizes have been small and almost none of the findings have been replicated. No individual genetic variants have been conclusively linked to intelligence in the normal range so far. Many researchers believe that very large samples will be needed to reliably detect individual genetic polymorphisms associated with g.[39][111] However, while genes influencing variation in g in the normal range have proven difficult to find, many single-gene disorders with intellectual disability among their symptoms have been discovered.[112]

It has been suggested that the g loadings of mental tests correlate with their heritability,[32] but both the empirical data and the statistical methodology bearing on this question are matters of active controversy.[113][114][115] Several studies suggest that tests with larger g loadings are more strongly affected by inbreeding depression, which lowers test scores.[citation needed] There is also evidence that tests with larger g loadings are associated with larger positive heterotic effects on test scores, which has been suggested to indicate the presence of genetic dominance effects for g.[116]

Neuroscientific findings

g has a number of correlates in the brain. Studies using magnetic resonance imaging (MRI) have established that g and total brain volume are moderately correlated (r~.3–.4). External head size has a correlation of ~.2 with g. MRI research on brain regions indicates that the volumes of frontal, parietal and temporal cortices, and the hippocampus are also correlated with g, generally at .25 or more, while the correlations, averaged over many studies, with overall grey matter and overall white matter have been found to be .31 and .27, respectively. Some but not all studies have also found positive correlations between g and cortical thickness. However, the underlying reasons for these associations between the quantity of brain tissue and differences in cognitive abilities remain largely unknown.[2]

Most researchers believe that intelligence cannot be localized to a single brain region, such as the frontal lobe. Brain lesion studies have found small but consistent associations indicating that people with more white matter lesions tend to have lower cognitive ability. Research utilizing NMR spectroscopy has discovered somewhat inconsistent but generally positive correlations between intelligence and white matter integrity, supporting the notion that white matter is important for intelligence.[2]

Some research suggests that, aside from the integrity of white matter, its organizational efficiency is also related to intelligence. The hypothesis that brain efficiency has a role in intelligence is supported by functional MRI research showing that more intelligent people generally process information more efficiently, i.e., they use fewer brain resources for the same task than less intelligent people.[2]

Brain activity, as measured by EEG recordings or event-related potentials, and nerve conduction velocity also show small but relatively consistent associations with intelligence test scores.[117][118]

g in non-humans

Evidence of a general factor of intelligence has also been observed in non-human animals. Studies have shown that g accounts for 47% of the variance at the species level in primates[119] and around 55% of the individual variance observed in mice.[120][121] A review and meta-analysis of general intelligence, however, found that the average correlation among cognitive abilities was 0.18 and suggested that overall support for g is weak in non-human animals.[122]
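The two kinds of figures in this paragraph are linked: a share of variance attributed to g, and an average correlation among abilities. If k tasks all intercorrelate at r, the first principal component of their correlation matrix carries (1 + (k - 1)r)/k of the total variance. The sketch below uses principal components as a simple stand-in for the factor-analytic methods the cited studies may have used. A principal component's share is typically somewhat larger than a common factor's. The choice of six tasks is hypothetical.

```python
def first_component_share(corr: list[list[float]], iterations: int = 500) -> float:
    """Proportion of total variance carried by the first principal component of
    a correlation matrix, using power iteration. The trace of a k x k
    correlation matrix is k, so the share is eigenvalue / k."""
    k = len(corr)
    v = [1.0] * k
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    # Rayleigh quotient v^T R v gives the leading eigenvalue (v has unit length).
    eigenvalue = sum(v[i] * sum(corr[i][j] * v[j] for j in range(k)) for i in range(k))
    return eigenvalue / k


def equicorrelated(k: int, r: float) -> list[list[float]]:
    """k tasks that all intercorrelate at r (a simplified positive manifold)."""
    return [[1.0 if i == j else r for j in range(k)] for i in range(k)]


# Six hypothetical tasks with the average intercorrelation of .18 quoted above.
# Analytically, the share is (1 + (k - 1) r) / k = 1.9 / 6.
print(round(first_component_share(equicorrelated(6, 0.18)), 3))  # 0.317
```

The share depends on the number of tasks as well as on r, and it approaches r as k grows. Variance-explained figures from batteries of different sizes are therefore not directly comparable.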

Although cognitive ability in non-human animals cannot be assessed with the same intelligence measures used in humans, it can be measured with a variety of interactive and observational tools focusing on innovation, habit reversal, social learning, and responses to novelty. Non-human models of g, such as mice, are used to study genetic influences on intelligence and in neurodevelopmental research into the mechanisms and biological correlates of g.[123]

g (or c) in human groups

Similar to g for individuals, a newer line of research aims to extract a general collective intelligence factor c for groups, reflecting a group's general ability to perform a wide range of tasks.[124] The definition, operationalization, and statistical approach for this c factor are derived from, and similar to, those for g. Its causes, its predictive validity, and further parallels to g are being investigated.[125]

Other biological associations

Height is correlated with intelligence (r~.2), but this correlation has not generally been found within families (i.e., among siblings), suggesting that it results from cross-assortative mating for height and intelligence, or from another factor that correlates with both (e.g. nutrition). Myopia is known to be associated with intelligence, with a correlation of around .2 to .25, and this association has been found within families, too.[126]

Group similarities and differences

Cross-cultural studies indicate that the g factor can be observed whenever a battery of diverse, complex cognitive tests is administered to a human sample. The factor structure of IQ tests has also been found to be consistent across sexes and ethnic groups in the U.S. and elsewhere.[118] The g factor has been found to be the most invariant of all factors in cross-cultural comparisons. For example, when the g factors computed from an American standardization sample of Wechsler's IQ battery and from large samples who completed the Japanese translation of the same battery were compared, the congruence coefficient was .99, indicating virtual identity. Similarly, the congruence coefficient between the g factors obtained from white and black standardization samples of the WISC battery in the U.S. was .995, and the variance in test scores accounted for by g was highly similar for both groups.[127]
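The congruence coefficient used in such comparisons is usually Tucker's coefficient. It is the sum of products of the two samples' loadings on the same subtests, divided by the square root of the product of their sums of squares. The sketch assumes that definition. The loadings below are invented for illustration and are not taken from the Wechsler or WISC studies. Values of roughly .95 and above are conventionally read as indicating that two factors are essentially the same.

```python
import math


def congruence_coefficient(loadings_x: list[float], loadings_y: list[float]) -> float:
    """Tucker's congruence coefficient between two vectors of loadings on the
    same tests, listed in the same order. The loadings are not mean-centred, so
    the index is unchanged when one vector is multiplied by a positive constant
    but, unlike Pearson's r, not when a constant is added."""
    if len(loadings_x) != len(loadings_y):
        raise ValueError("loading vectors must cover the same tests")
    numerator = sum(x * y for x, y in zip(loadings_x, loadings_y))
    denominator = math.sqrt(sum(x * x for x in loadings_x) * sum(y * y for y in loadings_y))
    return numerator / denominator


# Hypothetical g loadings of five subtests in two samples.
sample_1 = [0.80, 0.70, 0.65, 0.75, 0.60]
sample_2 = [0.78, 0.72, 0.60, 0.77, 0.58]
print(round(congruence_coefficient(sample_1, sample_2), 3))
```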

Most studies suggest that there are negligible differences in the mean level of g between the sexes, but that sex differences in cognitive abilities are to be found in more narrow domains. For example, males generally outperform females in spatial tasks, while females generally outperform males in verbal tasks.[128] Another difference that has been found in many studies is that males show more variability in both general and specific abilities than females, with proportionately more males at both the low end and the high end of the test score distribution.[129]

Differences in g between racial and ethnic groups have been found, particularly in the U.S. between black- and white-identifying test takers, though these differences appear to have diminished significantly over time,[114] and to be attributable to environmental (rather than genetic) causes.[114][130] Some researchers have suggested that the magnitude of the black-white gap in cognitive test results is dependent on the magnitude of the test's g loading, with tests showing higher g loading producing larger gaps (see Spearman's hypothesis),[131] while others have criticized this view as methodologically unfounded.[132][133] Still others have noted that despite the increasing g loading of IQ test batteries over time, the performance gap between racial groups continues to diminish.[114] Comparative analysis has shown that while a gap of approximately 1.1 standard deviations in mean IQ (around 16 points) between white and black Americans existed in the late 1960s, between 1972 and 2002 black Americans gained between 4 and 7 IQ points relative to non-Hispanic Whites, and that "the g gap between Blacks and Whites declined virtually in tandem with the IQ gap."[114] In contrast, Americans of East Asian descent generally score slightly higher than white Americans.[134] It has been claimed that racial and ethnic differences similar to those found in the U.S. can be observed globally,[135] but the significance, methodological grounding, and truth of such claims have all been disputed.[136][137][138][139][140][141]

Relation to other psychological constructs

Elementary cognitive tasks

Elementary cognitive tasks (ECTs) also correlate strongly with g. ECTs are, as the name suggests, simple tasks that apparently require very little intelligence, but still correlate strongly with more exhaustive intelligence tests. Determining whether a light is red or blue and determining whether there are four or five squares drawn on a computer screen are two examples of ECTs. The answers to such questions are usually provided by quickly pressing buttons. Often, in addition to the buttons for the two options, a third (home) button is held down from the start of the test. When the stimulus is presented, the subject moves their hand from the home button to the button for the correct answer. This allows the examiner to determine how much time was spent thinking about the answer (reaction time, usually measured in small fractions of a second) and how much time was spent on the physical hand movement to the correct button (movement time). Reaction time correlates strongly with g, while movement time correlates less strongly.[142] ECT testing has allowed quantitative examination of hypotheses concerning test bias, subject motivation, and group differences. By virtue of their simplicity, ECTs provide a link between classical IQ testing and biological inquiries such as fMRI studies.
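The home-button arrangement splits each response into two intervals computed from three timestamps. The sketch below assumes a simple record per trial. The class, the field names, and the use of medians (which limit the influence of occasional slow trials) are illustrative choices, not a description of any particular apparatus or scoring protocol. The timestamps are invented.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Trial:
    """Timestamps (milliseconds) for one trial of a choice-reaction ECT."""
    stimulus_onset: float   # light or squares appear on screen
    home_release: float     # finger leaves the home button
    response_press: float   # finger presses the chosen answer button

    @property
    def reaction_time(self) -> float:
        """Decision component: stimulus onset to release of the home button."""
        return self.home_release - self.stimulus_onset

    @property
    def movement_time(self) -> float:
        """Motor component: release of the home button to the answer press."""
        return self.response_press - self.home_release


def summarize(trials: list[Trial]) -> tuple[float, float]:
    """Median reaction time and median movement time across trials."""
    return (median(t.reaction_time for t in trials),
            median(t.movement_time for t in trials))


trials = [
    Trial(0.0, 310.0, 520.0),
    Trial(1000.0, 1290.0, 1480.0),
    Trial(2000.0, 2350.0, 2570.0),
]
print(summarize(trials))  # (310.0, 210.0)
```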

Working memory

One theory holds that g is identical or nearly identical to working memory capacity. Among other evidence for this view, some studies have found factors representing g and working memory to be perfectly correlated. However, in a meta-analysis the correlation was found to be considerably lower.[143] One criticism that has been made of studies that identify g with working memory is that "we do not advance understanding by showing that one mysterious concept is linked to another."[144]

Piagetian tasks

Psychometric theories of intelligence aim at quantifying intellectual growth and identifying ability differences between individuals and groups. In contrast, Jean Piaget's theory of cognitive development seeks to understand qualitative changes in children's intellectual development. Piaget designed a number of tasks to verify hypotheses arising from his theory. The tasks were not intended to measure individual differences, and they have no equivalent in psychometric intelligence tests.[145][146] For example, in one of the best-known Piagetian conservation tasks a child is asked if the amount of water in two identical glasses is the same. After the child agrees that the amount is the same, the investigator pours the water from one of the glasses into a glass of different shape so that the amount appears different although it remains the same. The child is then asked if the amount of water in the two glasses is the same or different.

Notwithstanding the different research traditions in which psychometric tests and Piagetian tasks were developed, the correlations between the two types of measures have been found to be consistently positive and generally moderate in magnitude. A common general factor underlies them. It has been shown that it is possible to construct a battery consisting of Piagetian tasks that is as good a measure of g as standard IQ tests.[145][147]

Personality

The traditional view in psychology is that there is no meaningful relationship between personality and intelligence, and that the two should be studied separately. Intelligence can be understood in terms of what an individual can do, or what his or her maximal performance is, while personality can be thought of in terms of what an individual will typically do, or what his or her general tendencies of behavior are. Large-scale meta-analyses, however, have found hundreds of connections greater than .20 in magnitude between cognitive abilities and personality traits across the Big Five. This is despite the fact that correlations with the global Big Five factors themselves are small, except for Openness (.26).[148] More interesting relations emerge at other levels (e.g., .23 for the activity facet of extraversion with general mental ability, -.29 for the uneven-tempered facet of neuroticism, .32 for the industriousness aspect of conscientiousness, .26 for the compassion aspect of agreeableness).[149]

The associations between intelligence and personality have generally been interpreted in two main ways. The first perspective is that personality traits influence performance on intelligence tests. For example, a person may fail to perform at a maximal level on an IQ test due to his or her anxiety and stress-proneness. The second perspective considers intelligence and personality to be conceptually related, with personality traits determining how people apply and invest their cognitive abilities, leading to knowledge expansion and greater cognitive differentiation.[150][151] Other theories (e.g., Cybernetic Trait Complexes Theory) view personality and cognitive ability as intertwined parameters of individuals that co-evolved and are also co-influenced during development (e.g., by early life starvation).[152]

Creativity

Some researchers believe that there is a threshold level of g below which socially significant creativity is rare, but that otherwise there is no relationship between the two. It has been suggested that this threshold is at least one standard deviation above the population mean. Above the threshold, personality differences are believed to be important determinants of individual variation in creativity.[153][154]

Others have challenged the threshold theory. While not disputing that opportunity and personal attributes other than intelligence, such as energy and commitment, are important for creativity, they argue that g is positively associated with creativity even at the high end of the ability distribution. The longitudinal Study of Mathematically Precocious Youth has provided evidence for this contention. It has shown that individuals identified by standardized tests as intellectually gifted in early adolescence accomplish creative achievements (for example, securing patents or publishing literary or scientific works) at several times the rate of the general population, and that even within the top 1 percent of cognitive ability, those with higher ability are more likely to make outstanding achievements. The study has also suggested that the level of g acts as a predictor of the level of achievement, while specific cognitive ability patterns predict the realm of achievement.[155][156]

Criticism

Connection with eugenics and racialism

Research on the g factor, as well as on other psychometric constructs, has been widely criticized for not properly taking into account the eugenicist background of its research practices.[157] The reductionism of the g factor has been attributed to its having evolved from "pseudoscientific theories" about race and intelligence.[158] Spearman's g and the concept of inherited, immutable intelligence were a boon for eugenicists and pseudoscientists alike.[159]

Joseph Graves Jr. and Amanda Johnson have argued that g "...is to the psychometricians what Huygens' ether was to early physicists: a nonentity taken as an article of faith instead of one in need of verification by real data."[160]

Some especially harsh critics have referred to the g factor, and psychometrics, as a form of pseudoscience.[161]

Gf-Gc theory

Raymond Cattell, a student of Charles Spearman's, modified the unitary g factor model and divided g into two broad, relatively independent domains: fluid intelligence (Gf) and crystallized intelligence (Gc). Gf is conceptualized as a capacity to figure out novel problems, and it is best assessed with tests with little cultural or scholastic content, such as Raven's matrices. Gc can be thought of as consolidated knowledge, reflecting the skills and information that an individual acquires and retains throughout his or her life. Gc is dependent on education and other forms of acculturation, and it is best assessed with tests that emphasize scholastic and cultural knowledge.[2][43][162] Gf can be thought to primarily consist of current reasoning and problem solving capabilities, while Gc reflects the outcome of previously executed cognitive processes.[163]

The rationale for the separation of Gf and Gc was to explain individuals' cognitive development over time. While Gf and Gc have been found to be highly correlated, they differ in the way they change over a lifetime. Gf tends to peak at around age 20, slowly declining thereafter. In contrast, Gc is stable or increases across adulthood. A single general factor has been criticized as obscuring this bifurcated pattern of development. Cattell argued that Gf reflected individual differences in the efficiency of the central nervous system. Gc was, in Cattell's thinking, the result of a person "investing" his or her Gf in learning experiences throughout life.[2][29][43][164]

Cattell, together with John Horn, later expanded the Gf-Gc model to include a number of other broad abilities, such as Gq (quantitative reasoning) and Gv (visual-spatial reasoning). While all the broad ability factors in the extended Gf-Gc model are positively correlated and thus would enable the extraction of a higher order g factor, Cattell and Horn maintained that it would be erroneous to posit that a general factor underlies these broad abilities. They argued that g factors computed from different test batteries are not invariant and would give different values of g, and that the correlations among tests arise because it is difficult to test just one ability at a time.[2][48][165]

However, several researchers have suggested that the Gf-Gc model is compatible with a g-centered understanding of cognitive abilities. For example, John B. Carroll's three-stratum model of intelligence includes both Gf and Gc together with a higher-order g factor. Based on factor analyses of many data sets, some researchers have also argued that Gf and g are one and the same factor and that g factors from different test batteries are substantially invariant provided that the batteries are large and diverse.[43][166][167]

Theories of uncorrelated abilities

Several theorists have proposed that there are intellectual abilities that are uncorrelated with each other. Among the earliest was L.L. Thurstone who created a model of primary mental abilities representing supposedly independent domains of intelligence. However, Thurstone's tests of these abilities were found to produce a strong general factor. He argued that the lack of independence among his tests reflected the difficulty of constructing "factorially pure" tests that measured just one ability. Similarly, J.P. Guilford proposed a model of intelligence that comprised up to 180 distinct, uncorrelated abilities, and claimed to be able to test all of them. Later analyses have shown that the factorial procedures Guilford presented as evidence for his theory did not provide support for it, and that the test data that he claimed provided evidence against g did in fact exhibit the usual pattern of intercorrelations after correction for statistical artifacts.[168][169]

Gardner's theory of multiple intelligences

More recently, Howard Gardner has developed the theory of multiple intelligences. He posits the existence of nine different and independent domains of intelligence, such as mathematical, linguistic, spatial, musical, bodily-kinesthetic, meta-cognitive, and existential intelligences, and contends that individuals who fail in some of them may excel in others. According to Gardner, tests and schools traditionally emphasize only linguistic and logical abilities while neglecting other forms of intelligence.

While popular among educationalists, Gardner's theory has been much criticized by psychologists and psychometricians. One criticism is that the theory does violence to both scientific and everyday usages of the word "intelligence". Several researchers have argued that not all of Gardner's intelligences fall within the cognitive sphere. For example, Gardner contends that a successful career in professional sports or popular music reflects bodily-kinesthetic intelligence and musical intelligence, respectively, even though one might usually talk of athletic and musical skills, talents, or abilities instead.

Another criticism of Gardner's theory is that many of his purportedly independent domains of intelligence are in fact correlated with each other. Responding to empirical analyses showing correlations between the domains, Gardner has argued that the correlations exist because of the common format of tests and because all tests require linguistic and logical skills. His critics have in turn pointed out that not all IQ tests are administered in the paper-and-pencil format, that aside from linguistic and logical abilities, IQ test batteries also contain measures of, for example, spatial abilities, and that elementary cognitive tasks (for example, inspection time and reaction time) that do not involve linguistic or logical reasoning correlate with conventional IQ batteries, too.[73][170][171][172]

Sternberg's three classes of intelligence

Robert Sternberg, working with various colleagues, has also suggested that intelligence has dimensions independent of g. He argues that there are three classes of intelligence: analytic, practical, and creative. According to Sternberg, traditional psychometric tests measure only analytic intelligence, and should be augmented to test creative and practical intelligence as well. He has devised several tests to this effect. Sternberg equates analytic intelligence with academic intelligence, and contrasts it with practical intelligence, defined as an ability to deal with ill-defined real-life problems. Tacit knowledge is an important component of practical intelligence, consisting of knowledge that is not explicitly taught but is required in many real-life situations. Assessing creativity independent of intelligence tests has traditionally proved difficult, but Sternberg and colleagues have claimed to have created valid tests of creativity, too.

The validation of Sternberg's theory requires that the three abilities tested be substantially uncorrelated and have independent predictive validity. Sternberg has conducted many experiments that he claims confirm the validity of his theory, but several researchers have disputed this conclusion. For example, in his reanalysis of a validation study of the Sternberg Triarchic Abilities Test (STAT), Nathan Brody showed that the predictive validity of the STAT, a test of three allegedly independent abilities, was almost solely due to a single general factor underlying the tests, which Brody equated with the g factor.[173][174]

Flynn's model

James Flynn has argued that intelligence should be conceptualized at three different levels: brain physiology, cognitive differences between individuals, and social trends in intelligence over time. According to this model, the g factor is a useful concept with respect to individual differences but its explanatory power is limited when the focus of investigation is either brain physiology, or, especially, the effect of social trends on intelligence. Flynn has criticized the notion that cognitive gains over time, or the Flynn effect, are "hollow" if they cannot be shown to be increases in g. He argues that the Flynn effect reflects shifting social priorities and individuals' adaptation to them. To apply the individual differences concept of g to the Flynn effect is to confuse different levels of analysis. On the other hand, according to Flynn, it is also fallacious to deny, by referring to trends in intelligence over time, that some individuals have "better brains and minds" to cope with the cognitive demands of their particular time. At the level of brain physiology, Flynn has emphasized both that localized neural clusters can be affected differently by cognitive exercise, and that there are important factors that affect all neural clusters.[175]

The Mismeasure of Man

Paleontologist and biologist Stephen Jay Gould presented a critique in his 1981 book The Mismeasure of Man. He argued that psychometricians fallaciously reified the g factor into an ineluctable "thing" that provided a convenient explanation for human intelligence, grounded only in mathematical theory rather than the rigorous application of mathematical theory to biological knowledge.[176] An example is provided in the work of Cyril Burt, published posthumously in 1972: "The two main conclusions we have reached seem clear and beyond all question. The hypothesis of a general factor entering into every type of cognitive process, tentatively suggested by speculations derived from neurology and biology, is fully borne out by the statistical evidence; and the contention that differences in this general factor depend largely on the individual's genetic constitution appears incontestable. The concept of an innate, general cognitive ability, which follows from these two assumptions, though admittedly sheerly an abstraction, is thus wholly consistent with the empirical facts."[177]

Critique of Gould

Several researchers have criticized Gould's arguments. For example, they have rejected the accusation of reification, maintaining that the use of extracted factors such as g as potential causal variables whose reality can be supported or rejected by further investigations constitutes a normal scientific practice that in no way distinguishes psychometrics from other sciences. Critics have also suggested that Gould did not understand the purpose of factor analysis, and that he was ignorant of relevant methodological advances in the field. While different factor solutions may be mathematically equivalent in their ability to account for intercorrelations among tests, solutions that yield a g factor are psychologically preferable for several reasons extrinsic to factor analysis, including the phenomenon of the positive manifold, the fact that the same g can emerge from quite different test batteries, the widespread practical validity of g, and the linkage of g to many biological variables.[36][37][page needed]
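The point that different factor solutions can be mathematically equivalent can be made concrete with a small sketch. The loadings below are invented for illustration and come from no study: four hypothetical tests load on two factors, the first of which is a general factor on which every test loads equally. Applying any orthogonal rotation to the loading matrix L leaves the common-factor part of the implied correlation matrix, LLᵀ, unchanged, so the correlations alone cannot decide between the solution with a general factor and a rotated one in which the tests split into two clusters. Choosing between them requires arguments from outside the factor analysis, such as those listed above.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]


def rotation(theta):
    """2x2 orthogonal rotation matrix for an angle theta in radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def implied_common_variance(lmat):
    """Common-factor part of the correlation matrix implied by loadings: L L^T."""
    return matmul(lmat, transpose(lmat))


# Hypothetical loadings for four tests on two factors (invented numbers).
# Column 0 is a general factor: every test loads 0.7 on it.
loadings = [
    [0.7, 0.3],
    [0.7, 0.3],
    [0.7, -0.3],
    [0.7, -0.3],
]

# Rotate the whole solution by -45 degrees (an arbitrary choice of angle).
rotated = matmul(loadings, rotation(-math.pi / 4))

original_fit = implied_common_variance(loadings)
rotated_fit = implied_common_variance(rotated)

# Largest disagreement between the two implied matrices.
max_diff = max(
    abs(a - b)
    for row_a, row_b in zip(original_fit, rotated_fit)
    for a, b in zip(row_a, row_b)
)

print("rotated loadings:", [[round(x, 3) for x in row] for row in rotated])
print(f"largest difference in implied correlations: {max_diff:.1e}")
```

With this angle the equal general loadings of 0.7 turn into two clusters: tests 1 and 2 load about 0.71 on the second rotated factor and tests 3 and 4 about 0.71 on the first, while the two implied correlation matrices differ only by floating-point rounding.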

Other critiques of g

John Horn and John McArdle have argued that the modern g theory, as espoused by, for example, Arthur Jensen, is unfalsifiable, because the existence of a common factor like g follows tautologically from positive correlations among tests. They contrasted the modern hierarchical theory of g with Spearman's original two-factor theory which was readily falsifiable (and indeed was falsified).[29]
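Horn and McArdle's point can be illustrated with a short computation. In the sketch below the correlation matrix is hypothetical (invented numbers, every off-diagonal entry positive), and the first principal component is used as a simple stand-in for a fitted common factor, which is a simplification. Because every entry of the matrix is positive, the Perron–Frobenius theorem guarantees that its leading eigenvector can be chosen with all entries positive, so the first component necessarily loads positively on every test. Power iteration started from a vector of ones makes this visible: each step multiplies a positive vector by a positive matrix, so the iterates never change sign.

```python
import math

# Hypothetical correlations among four tests (invented numbers).
# Every off-diagonal entry is positive, i.e. a "positive manifold".
corr = [
    [1.00, 0.30, 0.25, 0.20],
    [0.30, 1.00, 0.35, 0.15],
    [0.25, 0.35, 1.00, 0.40],
    [0.20, 0.15, 0.40, 1.00],
]


def leading_eigenpair(m, iterations=500):
    """Power iteration: return (largest eigenvalue, unit eigenvector)
    for a symmetric matrix with positive entries."""
    n = len(m)
    v = [1.0] * n  # positive starting vector
    for _ in range(iterations):
        w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T M v gives the eigenvalue for the unit vector v.
    mv = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
    eigenvalue = sum(a * b for a, b in zip(v, mv))
    return eigenvalue, v


eigenvalue, vector = leading_eigenpair(corr)

# Loadings on the first principal component: eigenvector scaled by sqrt(eigenvalue).
pc_loadings = [x * math.sqrt(eigenvalue) for x in vector]

# Proportion of the total variance (the trace, here 4) carried by that component.
share = eigenvalue / len(corr)

print("first-component loadings:", [round(x, 2) for x in pc_loadings])
print(f"share of total variance: {share:.2f}")
```

Whatever positive correlations are entered, the loadings come out all positive; in this sense a general dimension is implied by the positive manifold itself rather than discovered, which is the crux of the unfalsifiability argument.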

See also

- Charles Spearman – English psychologist (1863–1945)

- Factor analysis in psychometrics – Statistical method

- Fluid and crystallized intelligence – Factors of general intelligence

- Flynn effect – 20th-century rise in intelligence test scores

- Intelligence – Ability to acquire, understand, and apply knowledge

- Intelligence quotient – Score from a test designed to assess intelligence

- Malleability of intelligence – Processes by which intelligence can change over time

- Spearman's hypothesis – Hypothesis in intelligence research

- Eugenics – Effort to improve purported human genetic quality

Notes

- ^ Also known as general intelligence, general mental ability or general intelligence factor.

References

- ^ a b Kamphaus et al. 2005

- ^ a b c d e f g h Deary et al. 2010

- ^ Schlinger, Henry D. (2003). "The myth of intelligence". The Psychological Record. 53 (1): 15–32.

- ^ Thomson, Godfrey H. (September 1916). "A Hierarchy Without a General Factor". British Journal of Psychology. 8 (3): 271–281. doi:10.1111/j.2044-8295.1916.tb00133.x. ISSN 0950-5652.

- ^ Jensen 1998, 545

- ^ Adapted from Jensen 1998, 24. The correlation matrix was originally published in Spearman 1904, and it is based on the school performance of a sample of English children. While this analysis is historically important and has been highly influential, it does not meet modern technical standards. See Mackintosh 2011, 44ff. and Horn & McArdle 2007 for discussion of Spearman's methods.

- ^ Adapted from Chabris 2007, Table 19.1.

- ^ Gottfredson 1998

- ^ Deary, I. J. (2001). Intelligence. A Very Short Introduction. Oxford University Press. p. 12. ISBN 9780192893215.

- ^ Spearman 1904

- ^ Deary 2000, 6

- ^ a b c d Jensen 1992

- ^ Jensen 1998, 28

- ^ a b c d van der Maas et al. 2006

- ^ Jensen 1998, 26, 36–39

- ^ Jensen 1998, 26, 36–39, 89–90

- ^ a b Jensen 2002

- ^ a b Floyd et al. 2009

- ^ a b Jensen 1980, 213

- ^ Jensen 1998, 94

- ^ a b Hunt 2011, 94

- ^ Jensen 1998, 18–19, 35–36, 38. The idea of a general, unitary mental ability was introduced to psychology by Herbert Spencer and Francis Galton in the latter half of the 19th century, but their work was largely speculative, with little empirical basis.

- ^ Jensen 1998, 91–92, 95

- ^ Jensen 2000

- ^ Mackintosh 2011, 157

- ^ Jensen 1998, 117

- ^ Bartholomew et al. 2009

- ^ Jensen 1998, 120

- ^ a b c Horn & McArdle 2007

- ^ Jensen 1998, 120–121

- ^ Mackintosh 2011, 157–158

- ^ a b Rushton & Jensen 2010

- ^ Mackintosh 2011, 44–45

- ^ McFarland, Dennis J. (2012). "A single g factor is not necessary to simulate positive correlations between cognitive tests". Journal of Clinical and Experimental Neuropsychology. 34 (4): 378–384. doi:10.1080/13803395.2011.645018. ISSN 1744-411X. PMID 22260190. S2CID 4694545.

The fact that diverse cognitive tests tend to be positively correlated has been taken as evidence for a single general ability or "g" factor...the presence of a positive manifold in the correlations between diverse cognitive tests does not provide differential support for either single factor or multiple factor models of general abilities.

- ^ Jensen 1998, 18, 31–32

- ^ a b c Carroll 1995

- ^ a b Jensen 1982

- ^ Jensen 1998, 73

- ^ a b c d Deary 2012

- ^ Mackintosh 2011, 57

- ^ Jensen 1998, 46

- ^ Carroll 1997. The total common factor variance consists of the variance due to the g factor and the group factors considered together. The variance not accounted for by the common factors, referred to as uniqueness, comprises subtest-specific variance and measurement error.

- ^ a b c d Davidson & Kemp 2011

- ^ Warne, Russell T.; Burningham, Cassidy (2019). "Spearman's g found in 31 non-Western nations: Strong evidence that g is a universal phenomenon". Psychological Bulletin. 145 (3): 237–272. doi:10.1037/bul0000184. PMID 30640496. S2CID 58625266.

- ^ Mackintosh 2011, 151

- ^ Jensen 1998, 31

- ^ Mackintosh 2011, 151–153

- ^ a b McGrew 2005

- ^ Kvist & Gustafsson 2008

- ^ Johnson et al. 2004

- ^ Johnson et al. 2008

- ^ Mackintosh 2011, 150–153. See also Keith et al. 2001 where the g factors from the CAS and WJ III test batteries were found to be statistically indistinguishable, and Stauffer et al. 1996 where similar results were found for the ASVAB battery and a battery of cognitive-components-based tests.

- ^ "G factor: Issue of design and interpretation".

- ^ Kaufman, Scott Barry; Reynolds, Matthew R.; Liu, Xin; Kaufman, Alan S.; McGrew, Kevin S. (2012). "Are cognitive g and academic achievement g one and the same g? An exploration on the Woodcock–Johnson and Kaufman tests". Intelligence. 40 (2): 123–138. doi:10.1016/j.intell.2012.01.009.

- ^ Jensen 1998, 88, 101–103

- ^ Spearman, C. (1927). The abilities of man. New York: MacMillan.

- ^ Detterman, D.K.; Daniel, M.H. (1989). "Correlations of mental tests with each other and with cognitive variables are highest for low IQ groups". Intelligence. 13 (4): 349–359. doi:10.1016/s0160-2896(89)80007-8.

- ^ Deary & Pagliari 1991

- ^ a b Deary et al. 1996

- ^ a b Tucker-Drob 2009

- ^ Blum, D.; Holling, H. (2017). "Spearman's Law of Diminishing Returns. A meta-analysis". Intelligence. 65: 60–66. doi:10.1016/j.intell.2017.07.004.

- ^ Kell, Harrison J.; Lang, Jonas W. B. (September 2018). "The Great Debate: General Ability and Specific Abilities in the Prediction of Important Outcomes". Journal of Intelligence. 6 (3): 39. doi:10.3390/jintelligence6030039. PMC 6480721. PMID 31162466.

- ^ Neubauer, Aljoscha C.; Opriessnig, Sylvia (January 2014). "The Development of Talent and Excellence - Do Not Dismiss Psychometric Intelligence, the (Potentially) Most Powerful Predictor". Talent Development & Excellence. 6 (2): 1–15.

- ^ a b c Jensen 1998, 270

- ^ Gottfredson 2002

- ^ Coyle, Thomas R. (September 2018). "Non-g Factors Predict Educational and Occupational Criteria: More than g". Journal of Intelligence. 6 (3): 43. doi:10.3390/jintelligence6030043. PMC 6480787. PMID 31162470.

- ^ Ziegler, Matthias; Peikert, Aaron (September 2018). "How Specific Abilities Might Throw 'g' a Curve: An Idea on How to Capitalize on the Predictive Validity of Specific Cognitive Abilities". Journal of Intelligence. 6 (3): 41. doi:10.3390/jintelligence6030041. PMC 6480727. PMID 31162468.

- ^ Kell, Harrison J.; Lang, Jonas W. B. (April 2017). "Specific Abilities in the Workplace: More Important Than g?". Journal of Intelligence. 5 (2): 13. doi:10.3390/jintelligence5020013. PMC 6526462. PMID 31162404.

- ^ a b Sackett et al. 2008

- ^ Jensen 1998, 272, 301

- ^ Jensen 1998, 279–280

- ^ Jensen 1998, 279

- ^ a b Brody 2006

- ^ Frey & Detterman 2004

- ^ a b Schmidt & Hunter 2004

- ^ Jensen 1998, 292–293

- ^ Schmidt & Hunter 2004. These validity coefficients have been corrected for measurement error in the dependent variable (i.e., job or training performance) and for range restriction but not for measurement error in the independent variable (i.e., measures of g).

- ^ O'Boyle Jr., E. H.; Humphrey, R. H.; Pollack, J. M.; Hawver, T. H.; Story, P. A. (2011). "The relation between emotional intelligence and job performance: A meta-analysis". Journal of Organizational Behavior. 32 (5): 788–818. doi:10.1002/job.714. S2CID 6010387.

- ^ Côté, Stéphane; Miners, Christopher (2006). "Emotional Intelligence, Cognitive Intelligence and Job Performance". Administrative Science Quarterly. 51: 1–28. doi:10.2189/asqu.51.1.1. S2CID 142971341.

- ^ Ghiselli, E. E. (1973). "The validity of aptitude tests in personnel selection". Personnel Psychology. 26 (4): 461–477. doi:10.1111/j.1744-6570.1973.tb01150.x.

- ^ a b Vinchur, Andrew J.; Schippmann, Jeffery S.; Switzer, Fred S., III; Roth, Philip L. (1998). "A meta-analytic review of predictors of job performance for salespeople". Journal of Applied Psychology. 83 (4): 586–597. doi:10.1037/0021-9010.83.4.586. S2CID 19093290.

- ^ Hunter, John E.; Hunter, Ronda F. (1984). "Validity and utility of alternative predictors of job performance". Psychological Bulletin. 96 (1): 72–98. doi:10.1037/0033-2909.96.1.72. S2CID 26858912.

- ^ Gottfredson, L. S. (1991). "The evaluation of alternative measures of job performance". Performance Assessment for the Workplace: 75–126.

- ^ Murphy, Kevin R.; Balzer, William K. (1986). "Systematic distortions in memory-based behavior ratings and performance evaluations: Consequences for rating accuracy". Journal of Applied Psychology. 71 (1): 39–44. doi:10.1037/0021-9010.71.1.39.

- ^ Hosoda, Megumi; Stone-Romero, Eugene F.; Coats, Gwen (1 June 2003). "The Effects of Physical Attractiveness on Job-Related Outcomes: A Meta-Analysis of Experimental Studies". Personnel Psychology. 56 (2): 431–462. doi:10.1111/j.1744-6570.2003.tb00157.x. ISSN 1744-6570.

- ^ Stauffer, Joseph M.; Buckley, M. Ronald (2005). "The Existence and Nature of Racial Bias in Supervisory Ratings". Journal of Applied Psychology. 90 (3): 586–591. doi:10.1037/0021-9010.90.3.586. PMID 15910152.

- ^ Schmidt, Frank L. (1 April 2002). "The Role of General Cognitive Ability and Job Performance: Why There Cannot Be a Debate". Human Performance. 15 (1–2): 187–210. doi:10.1080/08959285.2002.9668091. ISSN 0895-9285. S2CID 214650608.