Wikipedia:Wikipedia Signpost/2023-11-06/Recent research

How English Wikipedia drove out fringe editors over two decades

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

"How Wikipedia Became the Last Good Place on the Internet" – by reinterpreting NPOV and driving out pro-fringe editors

A paper[1] in the American Political Science Review (considered a flagship journal in political science), titled "Rule Ambiguity, Institutional Clashes, and Population Loss: How Wikipedia Became the Last Good Place on the Internet"

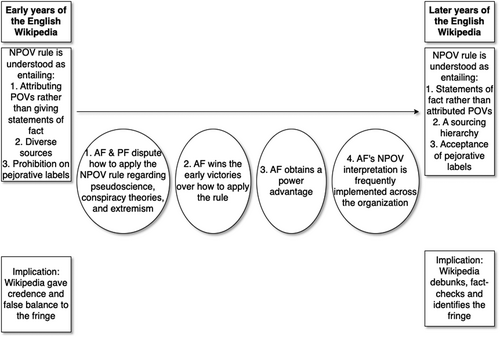

[...] shows that the English Wikipedia transformed its content over time through a gradual reinterpretation of its ambiguous Neutral Point of View (NPOV) guideline, the core rule regarding content on Wikipedia. This had meaningful consequences, turning an organization that used to lend credence and false balance to pseudoscience, conspiracy theories, and extremism into a proactive debunker, fact-checker and identifier of fringe discourse. There are several steps to the transformation. First, Wikipedians disputed how to apply the NPOV rule in specific instances in various corners of the encyclopedia. Second, the earliest contentious disputes were resolved against Wikipedians who were more supportive of or lenient toward conspiracy theories, pseudoscience, and conservatism, and in favor of Wikipedians whose understandings of the NPOV guideline were decisively anti-fringe. Third, the resolutions of these disputes enhanced the institutional power of the latter Wikipedians, whereas it led to the demobilization and exit of the pro-fringe Wikipedians. A power imbalance early on deepened over time due to disproportionate exits of demotivated, unsuccessful pro-fringe Wikipedia editors. Fourth, this meant that the remaining Wikipedia editor population, freed from pushback, increasingly interpreted and implemented the NPOV guideline in an anti-fringe manner. This endogenous process led to a gradual but highly consequential reinterpretation of the NPOV guideline throughout the encyclopedia.

The author provides ample empirical evidence supporting this description.

Content changes over time

First, "to document a transformation in Wikipedia's content," the study examined 63 articles "on topics that have been linked to pseudoscience, conspiracy theories, extremism, and fringe rhetoric in public discourse", across different areas ("health, climate, gender, sexuality, race, abortion, religion, politics, international relations, and history"). Their lead sections were coded according to a 5-category scheme "reflect[ing] varying degrees of neutrality":

- "Fringe normalization" ("The fringe position/entity is normalized and legitimized. There is an absence of criticism")

- "Teach the controversy"

- "False balance"

- "Identification of the fringe view"

- "Proactive fringe busting" ("Space is only given to the anti-fringe side whose position is stated as fact in Wikipedia’s own voice")

The results of these evaluations, tracking changes over time from 2001 to 2020, are detailed in a 110-page supplement, and summarized in the paper itself for nine of the articles (Table 1), all of which moved over time in the anti-fringe direction (see illustration above for the article homeopathy).

Editor population changes over time

To explain these changes in Wikipedia's content over the years, the author used a process tracing approach to show

[...] that early outcomes of disputes over rule interpretations in different corners of the encyclopedia demobilized certain types of editors (while mobilizing others) and strengthened certain understandings of Wikipedia’s ambiguous rules (while weakening others). Over time, Wikipedians who supported fringe content departed or were ousted.

Specifically, the author "classif[ied] editors into the Anti-Fringe camp (AF) and the Pro-Fringe camp (PF)". The AF camp is described as "editors who were anti-conspiracy theories, anti-pseudoscience, and liberal", whereas the PF camp consists of "Editors who were more supportive of conspiracy theories, pseudoscience, and conservatism."

To classify editors into these two camps, the paper uses a variety of data sources, including the talk pages of the 63 articles and related discussions on noticeboards such as those about the NPOV and BLP (biographies of living persons) policies, the administrators' noticeboard, and "lists of editors brought up in arbitration committee rulings." This article-specific data was augmented by "a sample of referenda where editors are implicitly asked whether they support a pro- or anti-fringe interpretation of the NPOV guideline."

(Unfortunately, the paper's replication data does not include this editor-level classification data, in contrast to the content classification data, which, as mentioned, is rather thoroughly documented in the supplementary material. One might suspect IRB concerns, but the author "affirms this research did not involve human subjects" and it was therefore presumably not subject to IRB review.)

Tracking the contribution histories of these editors, the paper finds:

The key piece of evidence is that PF members disappear over time in the wake of losses, both voluntarily and involuntarily. PF members are those who vote affirmatively for policies that normalize or lend credence to fringe viewpoints, who edit such content into articles, and who vote to defend fellow members of PF when there are debates as to whether they engaged in wrongdoing. Members of AF do the opposite.

The paper states that pro-fringe editors were left with three choices: "fight back", "withdraw" and "acquiesce" (similar to the Exit, Voice, and Loyalty framework).

The mechanism underlying these changes

The author hypothesizes:

The causal mechanism for the gradual disappearance is that early losses demotivated members from PF or led to their sanctioning, whereas members of AF were empowered by early victories. As exits of PF members mount across the encyclopedia, the community increasingly adopts AF’s viewpoints as the way that the NPOV guideline should be understood.

To support this interpretation, the paper presents a more qualitative analysis of the English Wikipedia's trajectory over time:

- "Step 1: Rule Ambiguity" of the NPOV policy, which initially "allowed for inclusion of lower-quality sources, so long as they were attributed"

- "Step 2: Clashes between Camps over Rule Interpretations"

- "Step 3: Formation of a Power Asymmetry"

- "Over the course of years, AF successfully shaped how to understand the practical application of Wikipedia’s NPOV guideline. These early victories gave AF an upper hand in editing disputes ..." Here, the author highlights the role of ArbCom: "Two particularly important early arbitration rulings in the early years were the arbitration committee cases on climate change (2005) and pseudoscience (2006), which largely reaffirmed some viewpoints held by AF in those specific disputes and led to sanctions that primarily targeted prolific PF editors [...]"

- "Step 4: Reinterpretation of Wikipedia’s Rules". Here, the author details various aspects, e.g.

- an increased ability of "experienced editors [...] to drive disruptive 'newcomers' away from Wikipedia and instill in newcomers’ certain understandings of how rules should be interpreted."

- Also, "the gradual development of a sourcing hierarchy—whereby some sources were deemed reliable, and others were deemed unreliable—created advantages for AF editors." The 2017 ban of the Daily Mail is highlighted as a milestone, followed by deprecations of various other sources (Table 2).

The author also discusses and rejects various alternative explanations for "why content on the English Wikipedia transformed drastically over time." For example, he argues that while "Donald Trump’s 2016 election, the 2016 Brexit referendum, and the emergence of 'fake news' websites" around that time may have impacted Wikipedia editors' stances, this would not explain the more gradual changes over two decades that the paper identifies.

Fringe and politics

As readers might have noticed above, the author includes political coordinates in his conception of "fringe" ("more supportive of conspiracy theories, pseudoscience, and conservatism") and "anti-fringe" ("anti-conspiracy theories, anti-pseudoscience, and liberal"). This is introduced rather casually in the paper without much justification. (It also puts the finding of a general move towards "anti-fringe" somewhat in contrast with some older research by Greenstein and Zhu. They had concluded back in 2012 that the bias of Wikipedia's articles about US politics was moving from left to right, although this effect was mainly driven by the creation of new articles with a pro-Republican bias. In a later paper, the same authors confirmed that on an individual level, "most articles change only mildly from their initial slant."[2])

Even if one takes into account that the present paper was published in the American Political Science Review, and accepts that in the current US political environment, support for conspiracy theories and pseudoscience might be much more prevalent on the right, that would still raise the question of how much these results generalize to other countries, time periods or language Wikipedias, or indeed to topics of English Wikipedia that are less prominent in American culture wars.

Indeed, in the "Conclusion" section where the author argues that his Wikipedia-based results "can plausibly help to explain institutional change in other contexts", he himself brings up several examples where the political coordinates are reversed and the "anti-fringe" side that gradually gains dominance is situated further to the right. These include the US Republican Party itself, where "Trump critics [i.e. the "pro-Fringe" side in this context] have opted to retire rather than use their position to steer the movement in a direction that they find more palatable", and "illiberal regimes within the European Union have gradually been strengthened as dissatisfied citizens migrate from authoritarian states to liberal states."

As these examples show, the consolidation mechanism described by the paper may not be an unambiguously good thing, regardless of one's political views. For Wikipedians, this raises the question of how to balance these dynamics with important values such as inclusivity and openness. But the paper also serves as a reminder that inclusivity and openness are not unambiguously beneficial either: it demonstrates how, by reducing them in some respects, the English Wikipedia succeeded in becoming the "last good place on the internet."

See also a short presentation about this paper at this year's Wikimania (where Benjamin Mako Hill called it a "great example of how political scientists are increasingly learning from us [Wikipedia]").

Briefly

- See the page of the monthly Wikimedia Research Showcase for videos and slides of past presentations.

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

"It’s not an encyclopedia, it’s a market of agendas: Decentralized agenda networks between Wikipedia and global news media from 2015 to 2020"

From the abstract:[3]

"[...] this study collected comprehensive global news coverage and Wikipedia coverage of top US political news events from 2015 to 2020. Time series analysis found that none of the media types (Wikipedia, elite media, and non-elite media) exhibited dominant agenda-setting power, while each of them can lead the agenda in certain circumstances. [...] This article proposed a multi-agent and multidirectional network architecture to describe agenda-setting relationships. We also highlighted four unique characteristics of Wikipedia that matter for digital journalism."

"Stigmergy in Open Collaboration: An Empirical Investigation Based on Wikipedia"

From the abstract:[4]

This study builds on the theory of stigmergy, wherein actions performed by a participant leave traces on a knowledge artifact and stimulate succeeding actions. We find that stigmergy involves two intertwined processes: collective modification and collective excitation. We propose a new measure of stigmergy based on the spatial and temporal clustering of contributions. By analyzing thousands of Wikipedia articles, we find that the degree of stigmergy is positively associated with community members’ participation and the quality of the knowledge produced.

"The Wikipedia imaginaire: a new media history beyond Wikipedia.org (2001–2022)"

From the abstract:[5]

"This paper presents a media biography of Wikipedia’s data that focuses on the interpretative flexibility of Wikipedia and digital knowledge between the years 2001 and 2022. [...] Through an eclectic corpus of project websites, new articles, press releases, and blogs, I demonstrate the unexpected ways the online encyclopedia has permeated throughout digital culture over the past twenty years through projects like the Citizendium, Everipedia, Google Search and AI software. As a result of this analysis, I explain how this array of meanings and materials constitutes the Wikipedia imaginaire: a collective activity of sociotechnical development that is fundamental to understanding the ideological and utopian meaning of knowledge with digital culture."

References

- ^ Steinsson, Sverrir (2023-03-09). "Rule Ambiguity, Institutional Clashes, and Population Loss: How Wikipedia Became the Last Good Place on the Internet". American Political Science Review: 1–17. doi:10.1017/S0003055423000138. ISSN 0003-0554.

- ^ Greenstein, Shane; Zhu, Feng (2016-08-09). "Open Content, Linus' Law, and Neutral Point of View". Information Systems Research. doi:10.1287/isre.2016.0643. ISSN 1047-7047.

Author's copy

- ^ Ren, Ruqin; Xu, Jian (2023-01-28). "It's not an encyclopedia, it's a market of agendas: Decentralized agenda networks between Wikipedia and global news media from 2015 to 2020". New Media & Society: 146144482211496. doi:10.1177/14614448221149641. ISSN 1461-4448.

- ^ Zheng, Lei (Nico); Mai, Feng; Yan, Bei; Nickerson, Jeffrey V. (2023-07-03). "Stigmergy in Open Collaboration: An Empirical Investigation Based on Wikipedia". Journal of Management Information Systems. 40 (3): 983–1008. doi:10.1080/07421222.2023.2229119. ISSN 0742-1222.

- ^ Jankowski, Steve (2023-08-12). "The Wikipedia imaginaire: a new media history beyond Wikipedia.org (2001–2022)". Internet Histories: 1–21. doi:10.1080/24701475.2023.2246261. ISSN 2470-1475.

Discuss this story

- The lead article is very interesting and certainly worth reading. It touches on but doesn't go into detail about the two biggest structural features which have led to Wikipedia's success at excluding the "fringe", namely: a) there is only one Wikipedia article on a given topic, and everyone shares it & b) there is no way for misinformation to "go viral". As a wiki-journalist once noted, there is no "marketplace of ideas" on Wikipedia, where people pick and choose what content/perspective to consume. POVFORKS are rooted out. And any problems can be swiftly fixed by one or more bold editors without having to ask permission from either the purveyor of the misinformation or some faceless, massive central authority. —Ganesha811 (talk) 04:25, 6 November 2023 (UTC)

- People usually do not change their beliefs and behavior because they read or heard something somewhere. Neither Wikipedia, nor the media, nor social networks are apples from the Tree of Knowledge or channels for transmitting “infection” so that after reading some articles/messages people suddenly change dramatically. Deeply held views are not like air-borne illnesses that spread in a few breaths. Rather, contagions of behavior and beliefs are complex, requiring reinforcement to catch on[1][2]. At the same time, Wikipedia is not the only channel for people to obtain information.

- Thanks for sharing. I had the privilege to attend Wikimania live in Singapore, and I remember attending a talk wherein this research was presented. Ad Huikeshoven (talk) 14:32, 6 November 2023 (UTC)

- Indeed, and the relevant part of that talk is linked at the end of the review. Thanks for coming to the presentation! Regards, HaeB (talk) 03:18, 7 November 2023 (UTC)

- Good, this is what should happen. Though Wikipedia isn't perfect, it is far more trustworthy than websites that spout misdirection and lies to further their untruthful agenda. • Sbmeirow • Talk • 22:01, 6 November 2023 (UTC)

- Hacker News thread: https://news.ycombinator.com/item?id=38169202 ―Justin (koavf)❤T☮C☺M☯ 22:28, 6 November 2023 (UTC)

- The last transition is a serious problem, in my opinion. Wikipedia's own voice is less credible than the scientific community's, and not stating sources lessens an article's effect. And for those who disregard the scientific consensus, Wikipedia's directness not only lowers the information's impact, but also lessens the status (and perceived usefulness) of Wikipedia itself, thus making usefully covering fringe material even more difficult. --Yair rand (talk) 08:53, 7 November 2023 (UTC)

- I wish they would have said "NPOV policy" instead of "NPOV guideline". I realize they aren't writing to an audience of Wikipedians, but using policy gets across the same meaning as guideline (and feels stronger, as it should be) and aligns with our own terminology. Photos of Japan (talk) 10:35, 7 November 2023 (UTC)

- And it does align with WP:NPOV as well, as it is a policy. Alfa-ketosav (talk) 20:51, 6 December 2023 (UTC)

- I'm glad to see this shift documented. This is perhaps the simplest possible example, but it always bugged me when I'd see reports of ghost sightings in Wikipedia articles. What could be more of a scientific fringe viewpoint (i.e. pure nonsense) than somebody reporting that the dead are alive and walking around (well, not actually "walking")? And there was no evidence such as measurements, or anything beyond an occasional blurry photo. It was actually quite difficult at times to remove ghost sightings. Local newspapers would occasionally print them - perhaps trying to drum up local tourism. Most scientists wouldn't want to waste their time debunking such an obvious fraud. Most wouldn't touch this stuff with a ten-foot pole (even if you could touch a ghost with a ten-foot pole). Well, I haven't seen a ghost sighting reported in Wikipedia for a long time now, and I hope we can keep it that way. Smallbones(smalltalk) 01:53, 8 November 2023 (UTC)

- I think that this study is focused on the English Wikipedia. It would be good to consider how the Croatian Wikipedia got hijacked by neo-Nazis for so long. Epa101 (talk) 22:44, 8 November 2023 (UTC)

- The Wikimedia Foundation commissioned a pretty informative report about this case, which I summarized last year in "Recent research" (aka the Wikimedia Research Newsletter).

- Also, a new paper just came out that posits some specific risk factors, see my tweet here - if you or other people reading along here happen to be interested in reading it and contributing a writeup to "Recent research", let me know!

- Regards, HaeB (talk) 05:01, 9 November 2023 (UTC)

- @HaeB Thanks for letting me know. I'll read the report and let you know. Epa101 (talk) 13:16, 12 November 2023 (UTC)

- Thanks! To clarify just in case, the WMF's report (while worth reading too) has already been covered; the new paper by Kharazian, Starbird and Hill is what we could use a writeup for. Regards, HaeB (talk) 21:29, 12 November 2023 (UTC)

- Yes, the study actually said that they only took the leads of English articles, not of Croatian or French or German ones. Alfa-ketosav (talk) 18:45, 24 November 2023 (UTC)

- I think they could also compare the entire articles with the leads to determine where the former fall on the same 5-category scheme. That way, it can also be determined how the leads reflect the information in the entire articles. They could also have compared the entire articles from different times to see how they changed over time on the scheme. Alfa-ketosav (talk) 18:43, 24 November 2023 (UTC)

- There is another danger that some will perceive excessive Proactive fringe busting as propaganda (especially if it becomes outdated), which may have poorly predictable consequences. Of course, due to polarization (Does Wikipedia community need to choose sides?) anything can be propaganda in one narrative or another, but in my opinion we should nonetheless avoid moral panics. --Proeksad (talk) 11:26, 6 November 2023 (UTC)