User:Jennyjiang95/sandbox

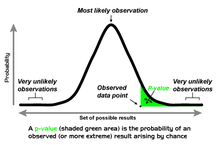

In statistical hypothesis testing,[1][2] a result has statistical significance when it is very unlikely to have occurred given the null hypothesis.[3] More precisely, the significance level defined for a study, α, is the probability of the study rejecting the null hypothesis, given that it were true;[4] and the p-value of a result, p, is the probability of obtaining a result at least as extreme, given that the null hypothesis were true. The result is statistically significant, by the standards of the study, when p < α.[5][6][7][8][9][10][11]

The significance level for a study is chosen before data collection, and typically set to 5%[12] or much lower, depending on the field of study.[13] In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone.[14][15] But if the p-value of an observed effect is less than the significance level, an investigator may conclude that the effect reflects the characteristics of the whole population,[1] thereby rejecting the null hypothesis.[16] This technique for testing the significance of results was developed in the early 20th century.

The term significance does not imply importance here, and the term statistical significance is not the same as research, theoretical, or practical significance.[1][2][17] For example, the term clinical significance refers to the practical importance of a treatment effect.

History

In 1925, Ronald Fisher advanced the idea of statistical hypothesis testing, which he called "tests of significance", in his publication Statistical Methods for Research Workers.[18][19][20] Fisher suggested a probability of one in twenty (0.05) as a convenient cutoff level to reject the null hypothesis.[21] In a 1933 paper, Jerzy Neyman and Egon Pearson called this cutoff the significance level, which they named α. They recommended that α be set ahead of time, prior to any data collection.[21][22]

Despite his initial suggestion of 0.05 as a significance level, Fisher did not intend this cutoff value to be fixed. In his 1956 publication Statistical Methods and Scientific Inference, he recommended that significance levels be set according to specific circumstances.[21]

Related concepts

The significance level α is the threshold for p below which the experimenter rejects the null hypothesis and concludes that an effect is present. This means α is also the probability of mistakenly rejecting the null hypothesis, if the null hypothesis is true.[23]

Sometimes researchers talk about the confidence level γ = (1 − α) instead. This is the probability of not rejecting the null hypothesis given that it is true.[24][25] Confidence levels and confidence intervals were introduced by Neyman in 1937.[26]

Role in statistical hypothesis testing

Statistical significance plays a pivotal role in statistical hypothesis testing. It is used to determine whether the null hypothesis should be rejected or retained. The null hypothesis is the default assumption that nothing happened or changed.[27] For the null hypothesis to be rejected, an observed result has to be statistically significant, i.e. the observed p-value is less than the pre-specified significance level.

To determine whether a result is statistically significant, a researcher calculates a p-value, which is the probability of observing an effect at least as extreme as the one observed, given that the null hypothesis is true.[11] The null hypothesis is rejected if the p-value is less than a predetermined level, α. This level is called the significance level, and is the probability of rejecting the null hypothesis given that it is true (a type I error). It is usually set at or below 5%.
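
As a rough illustration of this decision rule, the sketch below computes an exact one-sided binomial p-value and compares it with α. The scenario (16 heads in 20 tosses of a coin assumed fair under the null hypothesis) and the function name `binomial_p_value_upper` are hypothetical choices made for this example, not taken from the cited sources.

```python
from math import comb


def binomial_p_value_upper(successes: int, trials: int, p_null: float = 0.5) -> float:
    """One-sided p-value: P(X >= successes) when X ~ Binomial(trials, p_null)."""
    return sum(
        comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
        for k in range(successes, trials + 1)
    )


ALPHA = 0.05  # significance level, fixed before looking at the data

# Hypothetical data: 16 heads observed in 20 tosses.
p_value = binomial_p_value_upper(successes=16, trials=20)
is_significant = p_value < ALPHA

print(f"p = {p_value:.4f}; reject the null hypothesis at alpha = {ALPHA}: {is_significant}")
```

Here the p-value is the probability of 16 or more heads under the null hypothesis, so "at least as extreme" is read in the upper direction only; a two-sided reading would also count results of 4 or fewer heads.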

For example, when α is set to 5%, the conditional probability of a type I error, given that the null hypothesis is true, is 5%,[28] and a statistically significant result is one where the observed p-value is less than 5%.[29] When drawing data from a sample, this means that the rejection region comprises 5% of the sampling distribution.[30] These 5% can be allocated to one side of the sampling distribution, as in a one-tailed test, or partitioned to both sides of the distribution as in a two-tailed test, with each tail (or rejection region) containing 2.5% of the distribution.
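
The allocation of the 5% can be made concrete with a small sketch. It assumes, purely for illustration, that the sampling distribution of the test statistic is standard normal (a z-test); the variable names are hypothetical.

```python
from statistics import NormalDist

ALPHA = 0.05
standard_normal = NormalDist()  # assumed sampling distribution: mean 0, sd 1

# One-tailed (upper) test: the whole 5% rejection region is in the right tail.
z_one_tailed = standard_normal.inv_cdf(1 - ALPHA)

# Two-tailed test: the 5% is split into 2.5% in each tail.
z_two_tailed = standard_normal.inv_cdf(1 - ALPHA / 2)

print(f"one-tailed: reject when z > {z_one_tailed:.3f}")
print(f"two-tailed: reject when |z| > {z_two_tailed:.3f}")
```

Under this assumption the one-tailed cutoff is closer to zero than the two-tailed cutoff, because the entire rejection region sits on one side.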

Whether a one-tailed test is appropriate depends on whether the research question or alternative hypothesis specifies a direction, such as whether a group of objects is heavier or the performance of students on an assessment is better.[3] A two-tailed test may still be used, but it will be less powerful than a one-tailed test, because the rejection region for a one-tailed test is concentrated on one end of the null distribution and is twice the size (5% vs. 2.5%) of each rejection region for a two-tailed test. As a result, the null hypothesis can be rejected with a less extreme result if a one-tailed test is used.[31] The one-tailed test is more powerful than a two-tailed test only if the specified direction of the alternative hypothesis is correct. If it is wrong, the one-tailed test has virtually no power.
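
The power comparison can be sketched numerically. The example assumes a z-test with a normally distributed test statistic and a true effect of two standard errors; the functions `power_one_tailed` and `power_two_tailed` and the chosen shift are illustrative assumptions.

```python
from statistics import NormalDist

ALPHA = 0.05
Z = NormalDist()  # standard normal, assumed distribution of the test statistic


def power_one_tailed(shift: float, alpha: float = ALPHA) -> float:
    """Power of an upper-tailed z-test when the true mean is `shift` standard errors from the null."""
    critical = Z.inv_cdf(1 - alpha)
    return 1 - Z.cdf(critical - shift)


def power_two_tailed(shift: float, alpha: float = ALPHA) -> float:
    """Power of a two-tailed z-test for the same shift (rejects in either tail)."""
    critical = Z.inv_cdf(1 - alpha / 2)
    return (1 - Z.cdf(critical - shift)) + Z.cdf(-critical - shift)


# shift = +2: effect in the direction the one-tailed test expects.
# shift = -2: effect in the opposite direction.
for shift in (2.0, -2.0):
    print(
        f"shift {shift:+.1f}: one-tailed power = {power_one_tailed(shift):.4f}, "
        f"two-tailed power = {power_two_tailed(shift):.4f}"
    )
```

With these assumed numbers, the one-tailed test has the higher power when the direction is right, and a power close to zero when it is wrong, while the two-tailed test has the same power in both cases.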

Stringent significance thresholds in specific fields

In specific fields such as particle physics and manufacturing, statistical significance is often expressed in multiples of the standard deviation or sigma (σ) of a normal distribution, with significance thresholds set at a much stricter level (e.g. 5σ).[32][33] For instance, the certainty of the Higgs boson particle's existence was based on the 5σ criterion, which corresponds to a p-value of about 1 in 3.5 million.[33][34]
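
The correspondence between a sigma threshold and a p-value can be checked with a short calculation. This sketch assumes a one-sided tail of a standard normal distribution, which is the convention that yields the figure quoted above; the function name is hypothetical.

```python
from math import erfc, sqrt


def one_sided_p_from_sigma(n_sigma: float) -> float:
    """Upper-tail probability of a standard normal beyond n_sigma standard deviations."""
    # erfc keeps precision in the far tail, where 1 - cdf would lose digits.
    return 0.5 * erfc(n_sigma / sqrt(2))


p_five_sigma = one_sided_p_from_sigma(5.0)
print(f"5 sigma: p = {p_five_sigma:.3g} (about 1 in {1 / p_five_sigma:,.0f})")
```

A two-sided convention would double the tail probability, so the stated odds depend on which tail definition a field adopts.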

In other fields of scientific research, such as genome-wide association studies, significance levels as low as 5×10⁻⁸ are not uncommon,[35][36] because the number of tests performed is extremely large.
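
One common way to arrive at such a threshold is a Bonferroni correction, which divides the overall significance level by the number of tests. The sketch below assumes one million tests and a family-wise level of 5%; both numbers and the function name are illustrative assumptions rather than figures from the cited sources.

```python
def bonferroni_threshold(family_alpha: float, n_tests: int) -> float:
    """Per-test significance level that keeps the family-wise error rate at or below family_alpha."""
    if n_tests < 1:
        raise ValueError("n_tests must be a positive integer")
    return family_alpha / n_tests


# Assumed scenario: roughly one million tests in a single study.
per_test_alpha = bonferroni_threshold(family_alpha=0.05, n_tests=1_000_000)
print(f"per-test significance level: {per_test_alpha:.1e}")
```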

Limitations

Researchers focusing solely on whether their results are statistically significant might report findings that are not substantive[37] and not replicable.[38][39] There is also a difference between statistical significance and practical significance. A study that is found to be statistically significant may not necessarily be practically significant.[40]

Effect size

Effect size is a measure of a study's practical significance.[40] A statistically significant result may have a weak effect. To gauge the research significance of their result, researchers are encouraged to always report an effect size along with p-values. An effect size measure quantifies the strength of an effect, such as the distance between two means in units of standard deviation (cf. Cohen's d), the correlation between two variables or its square, and other measures.[41]
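
As an illustration of one such measure, the following sketch computes Cohen's d using the pooled standard deviation of two independent samples. The two samples are made-up numbers, and the pooled-variance form is one of several conventions for d.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(sample_a: list[float], sample_b: list[float]) -> float:
    """Difference between two sample means in units of their pooled standard deviation."""
    n_a, n_b = len(sample_a), len(sample_b)
    # statistics.variance is the sample variance (n - 1 in the denominator).
    pooled_variance = (
        (n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)
    ) / (n_a + n_b - 2)
    return (mean(sample_a) - mean(sample_b)) / sqrt(pooled_variance)


# Hypothetical measurements for a treatment group and a control group.
treatment = [5.1, 4.9, 5.6, 5.8, 6.0]
control = [4.2, 4.8, 5.0, 4.4, 4.6]

d = cohens_d(treatment, control)
print(f"Cohen's d = {d:.2f}")
```

Reporting d alongside the p-value shows how large the difference is, not only whether it passed the significance threshold.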

Disputes on the common threshold of statistical significance

By convention, any result with a p-value < 0.05 is considered statistically significant. However, given the reproducibility crisis of recent years (scientists around the world are failing to reproduce results reported by other teams) and the number of false positives (effects claimed to be real that are not) reported in the scientific literature, a group of researchers has proposed lowering this threshold to p < 0.005, an order of magnitude smaller, to make the standard for scientific evidence more stringent.

In a Slate article, Nick Thieme discusses p-values and thresholds: "P-value use varies by scientific field and by journal policies within those fields. Several journals in epidemiology, where the stakes of bad science are perhaps higher than in, say, psychology (if they mess up, people die), have discouraged the use of p-values for years. And even psychology journals are following suit: In 2015, Basic and Applied Social Psychology, a journal that has been accused of bad statistical (and experimental) practice, banned the use of p-values. Many other journals, including PLOS Medicine and Journal of Allergy and Clinical Immunology, actively discourage the use of p-values and significance testing already."

The group proposing the change, led by Daniel Benjamin, a behavioral economist at the University of Southern California, advocates lowering the “probability value” (p-value) threshold for statistical significance from the current standard of 0.05 to a much stricter 0.005.

Reproducibility

A statistically significant result may not be easy to reproduce.[39] In particular, some statistically significant results will in fact be false positives. Each failed attempt to reproduce a result increases the likelihood that the result was a false positive.[42]
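
One way to see why failed replications matter is a simple Bayesian update. The sketch below is only an illustration under strong assumptions: every study is independent, has the same power and significance level, and the prior probability that the effect is real is known. The function name and all numeric values are hypothetical.

```python
def probability_effect_is_real(
    prior: float, power: float, alpha: float, outcomes: list[bool]
) -> float:
    """Posterior probability that an effect is real after a series of independent studies.

    Each entry of `outcomes` is True for a statistically significant study
    and False for a non-significant one.
    """
    likelihood_real = 1.0  # probability of these outcomes if the effect is real
    likelihood_null = 1.0  # probability of these outcomes if the null hypothesis is true
    for significant in outcomes:
        likelihood_real *= power if significant else (1 - power)
        likelihood_null *= alpha if significant else (1 - alpha)
    numerator = prior * likelihood_real
    return numerator / (numerator + (1 - prior) * likelihood_null)


# Assumed values: 10% of tested effects are real, 80% power, alpha = 5%.
settings = dict(prior=0.10, power=0.80, alpha=0.05)

after_original = probability_effect_is_real(outcomes=[True], **settings)
after_one_failure = probability_effect_is_real(outcomes=[True, False], **settings)
after_two_failures = probability_effect_is_real(outcomes=[True, False, False], **settings)

print(f"after the original significant result: {after_original:.2f}")
print(f"after one failed replication:          {after_one_failure:.2f}")
print(f"after two failed replications:         {after_two_failures:.2f}")
```

Under these assumptions, each non-significant replication lowers the probability that the original finding reflects a real effect, which is the same as raising the probability that it was a false positive.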

Challenges

Overuse in some journals

Starting in the 2010s, some journals began questioning whether significance testing, and particularly using a threshold of α=5%, was being relied on too heavily as the primary measure of validity of a hypothesis.[43] Some journals encouraged authors to do more detailed analysis than just a statistical significance test. In social psychology, the journal Basic and Applied Social Psychology banned the use of significance testing altogether from papers it published,[44] requiring authors to use other measures to evaluate hypotheses and impact.[45][46]

Other editors commenting on this ban have noted: "Banning the reporting of p-values, as Basic and Applied Social Psychology recently did, is not going to solve the problem because it is merely treating a symptom of the problem. There is nothing wrong with hypothesis testing and p-values per se as long as authors, reviewers, and action editors use them correctly."[47] Using Bayesian statistics can improve confidence levels but also requires making additional assumptions,[48] and may not necessarily improve practice regarding statistical testing.[49]

Redefining significance

In 2016, the American Statistical Association (ASA) published a statement on p-values, saying that "the widespread use of 'statistical significance' (generally interpreted as 'p≤0.05') as a license for making a claim of a scientific finding (or implied truth) leads to considerable distortion of the scientific process".[50] In 2017, a group of 72 authors proposed to enhance reproducibility by changing the p-value threshold for statistical significance from 0.05 to 0.005.[51] Other researchers responded that imposing a more stringent significance threshold would aggravate problems such as data dredging; alternative propositions are thus to select and justify flexible p-value thresholds before collecting data,[52] or to interpret p-values as continuous indices, thereby discarding thresholds and statistical significance.[53]

Andrew Gelman discussed the definition of statistical significance in a 2009 paper with David Weakliem:

"Throughout, we use the term statistically significant in the conventional way, to mean that an estimate is at least two standard errors away from some “null hypothesis” or prespecified value that would indicate no effect present. An estimate is statistically insignificant if the observed value could reasonably be explained by simple chance variation, much in the way that a sequence of 20 coin tosses might happen to come up 8 heads and 12 tails; we would say that this result is not statistically significantly different from chance. More precisely, the observed proportion of heads is 40 percent but with a standard error of 11 percent—thus, the data are less than two standard errors away from the null hypothesis of 50 percent, and the outcome could clearly have occurred by chance. Standard error is a measure of the variation in an estimate and gets smaller as a sample size gets larger, converging on zero as the sample increases in size." This definition does not mention p-values; instead, it ties statistical significance directly to the standard error and the sample size.

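The coin-toss arithmetic in the quotation can be reproduced in a few lines. This sketch assumes the standard error is computed under the null hypothesis of a fair coin; the variable names are chosen for illustration.

```python
from math import sqrt

heads, tosses = 8, 20
p_null = 0.5  # null hypothesis: the coin is fair

observed_proportion = heads / tosses
# Standard error of a proportion, evaluated at the null value (an assumption of this sketch).
standard_error = sqrt(p_null * (1 - p_null) / tosses)
z_score = (observed_proportion - p_null) / standard_error
within_two_standard_errors = abs(z_score) < 2

print(f"observed proportion = {observed_proportion:.2f}")
print(f"standard error      = {standard_error:.3f}")
print(f"distance from null  = {z_score:.2f} standard errors")
print(f"within two standard errors of the null: {within_two_standard_errors}")

# The standard error shrinks as the number of tosses grows.
for n in (20, 200, 2000):
    print(f"n = {n:>4}: standard error = {sqrt(p_null * (1 - p_null) / n):.4f}")
```

The observed 40% lies less than one standard error from 50%, so by the two-standard-error convention the result is not statistically significant.
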
See also

- A/B testing, ABX test

- Fisher's method for combining independent tests of significance

- Look-elsewhere effect

- Multiple comparisons problem

- Sample size

- Texas sharpshooter fallacy (gives examples of tests where the significance level was set too high)

References

- ^ a b c Sirkin, R. Mark (2005). "Two-sample t tests". Statistics for the Social Sciences (3rd ed.). Thousand Oaks, CA: SAGE Publications, Inc. pp. 271–316. ISBN 1-412-90546-X.

- ^ a b Borror, Connie M. (2009). "Statistical decision making". The Certified Quality Engineer Handbook (3rd ed.). Milwaukee, WI: ASQ Quality Press. pp. 418–472. ISBN 0-873-89745-5.

- ^ a b Myers, Jerome L.; Well, Arnold D.; Lorch, Jr., Robert F. (2010). "Developing fundamentals of hypothesis testing using the binomial distribution". Research design and statistical analysis (3rd ed.). New York, NY: Routledge. pp. 65–90. ISBN 0-805-86431-8.

- ^ Schlotzhauer, Sandra (2007). Elementary Statistics Using JMP (SAS Press) (PAP/CDR ed.). Cary, NC: SAS Institute. pp. 166–169. ISBN 1-599-94375-1.

- ^ Johnson, Valen E. (October 9, 2013). "Revised standards for statistical evidence". Proceedings of the National Academy of Sciences. 110. National Academies of Science: 19313–19317. doi:10.1073/pnas.1313476110. Retrieved 3 July 2014.

- ^ Redmond, Carol; Colton, Theodore (2001). "Clinical significance versus statistical significance". Biostatistics in Clinical Trials. Wiley Reference Series in Biostatistics (3rd ed.). West Sussex, United Kingdom: John Wiley & Sons Ltd. pp. 35–36. ISBN 0-471-82211-6.

- ^ Cumming, Geoff (2012). Understanding The New Statistics: Effect Sizes, Confidence Intervals, and Meta-Analysis. New York, USA: Routledge. pp. 27–28.

- ^ Krzywinski, Martin; Altman, Naomi (30 October 2013). "Points of significance: Significance, P values and t-tests". Nature Methods. 10 (11). Nature Publishing Group: 1041–1042. doi:10.1038/nmeth.2698. Retrieved 3 July 2014.

- ^ Sham, Pak C.; Purcell, Shaun M (17 April 2014). "Statistical power and significance testing in large-scale genetic studies". Nature Reviews Genetics. 15 (5). Nature Publishing Group: 335–346. doi:10.1038/nrg3706. Retrieved 3 July 2014.

- ^ Altman, Douglas G. (1999). Practical Statistics for Medical Research. New York, USA: Chapman & Hall/CRC. p. 167. ISBN 978-0412276309.

- ^ a b Devore, Jay L. (2011). Probability and Statistics for Engineering and the Sciences (8th ed.). Boston, MA: Cengage Learning. pp. 300–344. ISBN 0-538-73352-7.

- ^ Craparo, Robert M. (2007). "Significance level". In Salkind, Neil J. (ed.). Encyclopedia of Measurement and Statistics. Vol. 3. Thousand Oaks, CA: SAGE Publications. pp. 889–891. ISBN 1-412-91611-9.

- ^ Sproull, Natalie L. (2002). "Hypothesis testing". Handbook of Research Methods: A Guide for Practitioners and Students in the Social Science (2nd ed.). Lanham, MD: Scarecrow Press, Inc. pp. 49–64. ISBN 0-810-84486-9.

- ^ Babbie, Earl R. (2013). "The logic of sampling". The Practice of Social Research (13th ed.). Belmont, CA: Cengage Learning. pp. 185–226. ISBN 1-133-04979-6.

- ^ Faherty, Vincent (2008). "Probability and statistical significance". Compassionate Statistics: Applied Quantitative Analysis for Social Services (With exercises and instructions in SPSS) (1st ed.). Thousand Oaks, CA: SAGE Publications, Inc. pp. 127–138. ISBN 1-412-93982-8.

- ^ McKillup, Steve (2006). "Probability helps you make a decision about your results". Statistics Explained: An Introductory Guide for Life Scientists (1st ed.). Cambridge, United Kingdom: Cambridge University Press. pp. 44–56. ISBN 0-521-54316-9.

- ^ Myers, Jerome L.; Well, Arnold D.; Lorch Jr, Robert F. (2010). "The t distribution and its applications". Research Design and Statistical Analysis: Third Edition (3rd ed.). New York, NY: Routledge. pp. 124–153. ISBN 0-805-86431-8.

- ^ Cumming, Geoff (2011). "From null hypothesis significance to testing effect sizes". Understanding The New Statistics: Effect Sizes, Confidence Intervals, and Meta-Analysis. Multivariate Applications Series. East Sussex, United Kingdom: Routledge. pp. 21–52. ISBN 0-415-87968-X.

- ^ Fisher, Ronald A. (1925). Statistical Methods for Research Workers. Edinburgh, UK: Oliver and Boyd. p. 43. ISBN 0-050-02170-2.

- ^ Poletiek, Fenna H. (2001). "Formal theories of testing". Hypothesis-testing Behaviour. Essays in Cognitive Psychology (1st ed.). East Sussex, United Kingdom: Psychology Press. pp. 29–48. ISBN 1-841-69159-3.

- ^ a b c Quinn, Geoffrey R.; Keough, Michael J. (2002). Experimental Design and Data Analysis for Biologists (1st ed.). Cambridge, UK: Cambridge University Press. pp. 46–69. ISBN 0-521-00976-6.

- ^ Neyman, J.; Pearson, E.S. (1933). "The testing of statistical hypotheses in relation to probabilities a priori". Mathematical Proceedings of the Cambridge Philosophical Society. 29: 492–510. doi:10.1017/S030500410001152X.

- ^ Schlotzhauer, Sandra (2007). Elementary Statistics Using JMP (SAS Press) (PAP/CDR ed.). Cary, NC: SAS Institute. pp. 166–169. ISBN 1-599-94375-1.

- ^ "Conclusions about statistical significance are possible with the help of the confidence interval. If the confidence interval does not include the value of zero effect, it can be assumed that there is a statistically significant result." "Confidence Interval or P-Value?". doi:10.3238/arztebl.2009.0335.

- ^ StatNews #73: Overlapping Confidence Intervals and Statistical Significance

- ^ Neyman, J. (1937). "Outline of a Theory of Statistical Estimation Based on the Classical Theory of Probability". Philosophical Transactions of the Royal Society A. 236: 333–380. doi:10.1098/rsta.1937.0005.

- ^ Meier, Kenneth J.; Brudney, Jeffrey L.; Bohte, John (2011). Applied Statistics for Public and Nonprofit Administration (3rd ed.). Boston, MA: Cengage Learning. pp. 189–209. ISBN 1-111-34280-6.

- ^ Healy, Joseph F. (2009). The Essentials of Statistics: A Tool for Social Research (2nd ed.). Belmont, CA: Cengage Learning. pp. 177–205. ISBN 0-495-60143-8.

- ^ McKillup, Steve (2006). Statistics Explained: An Introductory Guide for Life Scientists (1st ed.). Cambridge, UK: Cambridge University Press. pp. 32–38. ISBN 0-521-54316-9.

- ^ Heath, David (1995). An Introduction To Experimental Design And Statistics For Biology (1st ed.). Boston, MA: CRC press. pp. 123–154. ISBN 1-857-28132-2.

- ^ Hinton, Perry R. (2010). "Significance, error, and power". Statistics explained (3rd ed.). New York, NY: Routledge. pp. 79–90. ISBN 1-848-72312-1.

- ^ Vaughan, Simon (2013). Scientific Inference: Learning from Data (1st ed.). Cambridge, UK: Cambridge University Press. pp. 146–152. ISBN 1-107-02482-X.

- ^ a b Bracken, Michael B. (2013). Risk, Chance, and Causation: Investigating the Origins and Treatment of Disease (1st ed.). New Haven, CT: Yale University Press. pp. 260–276. ISBN 0-300-18884-6.

- ^ Franklin, Allan (2013). "Prologue: The rise of the sigmas". Shifting Standards: Experiments in Particle Physics in the Twentieth Century (1st ed.). Pittsburgh, PA: University of Pittsburgh Press. pp. Ii–Iii. ISBN 0-822-94430-8.

- ^ Clarke, GM; Anderson, CA; Pettersson, FH; Cardon, LR; Morris, AP; Zondervan, KT (February 6, 2011). "Basic statistical analysis in genetic case-control studies". Nature Protocols. 6 (2): 121–33. doi:10.1038/nprot.2010.182. PMC 3154648. PMID 21293453.

- ^ Barsh, GS; Copenhaver, GP; Gibson, G; Williams, SM (July 5, 2012). "Guidelines for Genome-Wide Association Studies". PLoS Genetics. 8 (7): e1002812. doi:10.1371/journal.pgen.1002812. PMC 3390399. PMID 22792080.

- ^ Carver, Ronald P. (1978). "The Case Against Statistical Significance Testing". Harvard Educational Review. 48: 378–399.

- ^ Ioannidis, John P. A. (2005). "Why most published research findings are false". PLoS Medicine. 2: e124. doi:10.1371/journal.pmed.0020124. PMC 1182327. PMID 16060722.

- ^ a b Amrhein, Valentin; Korner-Nievergelt, Fränzi; Roth, Tobias (2017). "The earth is flat (p > 0.05): significance thresholds and the crisis of unreplicable research". PeerJ. 5: e3544. doi:10.7717/peerj.3544.

- ^ a b Hojat, Mohammadreza; Xu, Gang (2004). "A Visitor's Guide to Effect Sizes". Advances in Health Sciences Education.

- ^ Pedhazur, Elazar J.; Schmelkin, Liora P. (1991). Measurement, Design, and Analysis: An Integrated Approach (Student ed.). New York, NY: Psychology Press. pp. 180–210. ISBN 0-805-81063-3.

- ^ Stahel, Werner (2016). "Statistical Issue in Reproducibility". Reproducibility: Principles, Problems, Practices, and Prospects: 87–114.

- ^ "CSSME Seminar Series: The argument over p-values and the Null Hypothesis Significance Testing (NHST) paradigm » School of Education » University of Leeds". www.education.leeds.ac.uk. Retrieved 2016-12-01.

- ^ Novella, Steven (February 25, 2015). "Psychology Journal Bans Significance Testing". Science-Based Medicine.

- ^ Woolston, Chris (2015-03-05). "Psychology journal bans P values". Nature. 519 (7541): 9. doi:10.1038/519009f.

- ^ Siegfried, Tom (2015-03-17). "P value ban: small step for a journal, giant leap for science". Science News. Retrieved 2016-12-01.

- ^ Antonakis, John (February 2017). "On doing better science: From thrill of discovery to policy implications". The Leadership Quarterly. 28 (1): 5–21. doi:10.1016/j.leaqua.2017.01.006.

- ^ Wasserstein, Ronald L.; Lazar, Nicole A. (2016-04-02). "The ASA's Statement on p-Values: Context, Process, and Purpose". The American Statistician. 70 (2): 129–133. doi:10.1080/00031305.2016.1154108. ISSN 0003-1305.

- ^ García-Pérez, Miguel A. (2016-10-05). "Thou Shalt Not Bear False Witness Against Null Hypothesis Significance Testing". Educational and Psychological Measurement: 0013164416668232. doi:10.1177/0013164416668232. ISSN 0013-1644.

- ^ Wasserstein, Ronald L.; Lazar, Nicole A. (2016-04-02). "The ASA's Statement on p-Values: Context, Process, and Purpose". The American Statistician. 70 (2): 129–133. doi:10.1080/00031305.2016.1154108. ISSN 0003-1305.

- ^ Benjamin, Daniel; et al. (2017). "Redefine statistical significance". Nature Human Behaviour. 1: 0189. doi:10.1038/s41562-017-0189-z.

- ^ Chawla, Dalmeet (2017). "'One-size-fits-all' threshold for P values under fire". Nature. doi:10.1038/nature.2017.22625.

- ^ Amrhein, Valentin; Greenland, Sander (2017). "Remove, rather than redefine, statistical significance". Nature Human Behaviour. 1: 0224. doi:10.1038/s41562-017-0224-0.

Further reading

- Ziliak, Stephen and Deirdre McCloskey (2008), The Cult of Statistical Significance: How the Standard Error Costs Us Jobs, Justice, and Lives. Ann Arbor, University of Michigan Press, 2009. ISBN 978-0-472-07007-7. Reviews and reception: (compiled by Ziliak)

- Thompson, Bruce (2004). "The "significance" crisis in psychology and education". Journal of Socio-Economics. 33: 607–613. doi:10.1016/j.socec.2004.09.034.

- Chow, Siu L., (1996). Statistical Significance: Rationale, Validity and Utility, Volume 1 of series Introducing Statistical Methods, Sage Publications Ltd, ISBN 978-0-7619-5205-3 – argues that statistical significance is useful in certain circumstances.

- Kline, Rex, (2004). Beyond Significance Testing: Reforming Data Analysis Methods in Behavioral Research Washington, DC: American Psychological Association.

- Nuzzo, Regina (2014). Scientific method: Statistical errors. Nature Vol. 506, p. 150-152 (open access). Highlights common misunderstandings about the p value.

- Cohen, Jacob (1994). The earth is round (p<.05). American Psychologist. Vol 49, p. 997-1003. Reviews problems with null hypothesis statistical testing.

External links

- The article "Earliest Known Uses of Some of the Words of Mathematics (S)" contains an entry on Significance that provides some historical information.

- "The Concept of Statistical Significance Testing" (February 1994): article by Bruce Thompson hosted by the ERIC Clearinghouse on Assessment and Evaluation, Washington, D.C.

- "What does it mean for a result to be "statistically significant"?" (no date): an article from the Statistical Assessment Service at George Mason University, Washington, D.C.