User:Timrb/Ray tracing

In computer graphics, ray tracing is a technique for generating an image by tracing the path of light through pixels in an image plane. The technique is capable of producing a very high degree of photorealism, usually higher than that of typical scanline rendering methods, but at a greater computational cost. This makes ray tracing best suited for applications where the image can be rendered slowly ahead of time, such as still images and film and television special effects, and poorly suited for real-time applications like computer games, where speed is critical. Ray tracing is capable of simulating a wide variety of optical effects, such as reflection and refraction, scattering, and chromatic aberration.

Algorithm


simple overview, screen door, closest object "blocking" pixel, etc.

Tracing

The ray tracing algorithm must first determine what is visible through each pixel. This is done by extending a ray from the camera, or eye position, through the pixel's position in an imaginary image plane. This ray is then tested for intersection with all objects in the potentially visible set. Among the objects the ray intersects, the intersection point nearest to the camera and its corresponding object are chosen. All other objects and their intersection points are then ignored, since they are occluded by the closer object. Objects that are "behind" the camera must be discarded as well; this check can easily be performed during the distance test, by rejecting intersections that lie at a negative distance along the ray.
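As a rough illustration of the ray-generation step, the Java sketch below computes the direction of the ray through the center of a pixel for a simple pinhole camera. The camera conventions (eye at the origin looking down the -z axis, image plane at distance 1, vertical field of view `fovY`) and the names `Vec3`, `PinholeCamera` and `primaryRayDirection` are assumptions made for this example, not part of any particular renderer.

```java
// Minimal vector type; a hypothetical helper for this sketch, not a library class.
record Vec3(double x, double y, double z) {
    Vec3 times(double s) { return new Vec3(x * s, y * s, z * s); }
    double dot(Vec3 o) { return x * o.x + y * o.y + z * o.z; }
    Vec3 normalized() { return times(1.0 / Math.sqrt(dot(this))); }
}

// Hypothetical pinhole camera: eye at the origin, looking down -z,
// image plane at distance 1 in front of the eye.
final class PinholeCamera {
    private final double fovY; // vertical field of view, in radians

    PinholeCamera(double fovY) { this.fovY = fovY; }

    // Unit direction of the ray from the eye through the center of pixel (px, py).
    // Row 0 is the top of the image.
    Vec3 primaryRayDirection(int px, int py, int width, int height) {
        double halfHeight = Math.tan(fovY / 2.0);        // half-height of the image plane
        double halfWidth = halfHeight * width / height;   // keeps pixels square
        // Map the pixel center to [-1, 1] on each axis.
        double sx = 2.0 * (px + 0.5) / width - 1.0;
        double sy = 1.0 - 2.0 * (py + 0.5) / height;      // flip so +y points up
        return new Vec3(sx * halfWidth, sy * halfHeight, -1.0).normalized();
    }
}
```

The ray for pixel (x, y) then starts at the eye and travels along `primaryRayDirection(x, y, width, height)`; a camera placed elsewhere in the scene would additionally rotate and translate this direction.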

There are many algorithms for testing ray-object intersection; most of them are for simple primitives such as triangles, cubes, spheres, planes, and quadric surfaces. More complicated objects may be broken down into many of these primitives, which are then tested against the ray individually.
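For a sphere, substituting the ray $\mathbf{o} + t\mathbf{d}$ into the sphere equation $\lVert\mathbf{q} - \mathbf{c}\rVert^2 = r^2$ gives a quadratic in $t$. The hypothetical `RaySphere.intersect` below is a sketch of this standard test; it returns the nearest positive $t$, so hits behind the ray origin are discarded as described in the tracing step. `Vec3` is a minimal helper invented for the example.

```java
// Minimal vector type; a hypothetical helper for this sketch.
record Vec3(double x, double y, double z) {
    Vec3 minus(Vec3 o) { return new Vec3(x - o.x, y - o.y, z - o.z); }
    double dot(Vec3 o) { return x * o.x + y * o.y + z * o.z; }
}

final class RaySphere {
    // Tolerance that keeps a ray from re-hitting the surface it starts on.
    static final double EPSILON = 1e-9;

    // Returns the smallest t > EPSILON with |origin + t*dir - center| = radius,
    // or Double.POSITIVE_INFINITY if the ray misses or the sphere lies behind the origin.
    static double intersect(Vec3 origin, Vec3 dir, Vec3 center, double radius) {
        Vec3 oc = origin.minus(center);
        // Quadratic a*t^2 + 2*halfB*t + c = 0 from substituting the ray into the sphere equation.
        double a = dir.dot(dir);
        double halfB = oc.dot(dir);
        double c = oc.dot(oc) - radius * radius;
        double discriminant = halfB * halfB - a * c;
        if (discriminant < 0) return Double.POSITIVE_INFINITY;  // no real roots: the ray misses
        double root = Math.sqrt(discriminant);
        double t = (-halfB - root) / a;                         // nearer intersection
        if (t > EPSILON) return t;
        t = (-halfB + root) / a;                                // farther one (origin inside the sphere)
        return t > EPSILON ? t : Double.POSITIVE_INFINITY;       // both behind the origin: discard
    }
}
```

Returning infinity for a miss lets a caller keep the closest hit with a plain `t < closest` comparison, as in the `trace` pseudocode.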

A ray tracer may improve efficiency by using spatial partitioning to reduce the number of objects in the potentially visible set, and hence the number of ray-object intersection tests that must be performed.
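Many acceleration structures, such as bounding volume hierarchies and k-d trees, test a ray against an axis-aligned box before testing the objects inside it; if the ray misses the box, every object within it can be skipped. Below is a sketch of the common "slab" test, assuming vectors stored as `double[3]` arrays; the class and method names are hypothetical.

```java
final class RayBox {
    // Slab test: true if the ray origin + t*dir, for some t >= 0, passes through
    // the axis-aligned box [min, max]. Arrays hold the x, y, z components.
    static boolean hits(double[] origin, double[] dir, double[] min, double[] max) {
        double tNear = 0.0;                        // only points in front of the origin count
        double tFar = Double.POSITIVE_INFINITY;
        for (int axis = 0; axis < 3; axis++) {
            if (dir[axis] == 0.0) {
                // Ray parallel to this pair of planes: it misses unless the origin lies between them.
                if (origin[axis] < min[axis] || origin[axis] > max[axis]) return false;
                continue;
            }
            double inv = 1.0 / dir[axis];
            double t0 = (min[axis] - origin[axis]) * inv;
            double t1 = (max[axis] - origin[axis]) * inv;
            if (t0 > t1) { double tmp = t0; t0 = t1; t1 = tmp; }
            tNear = Math.max(tNear, t0);           // latest entry over all slabs
            tFar = Math.min(tFar, t1);             // earliest exit over all slabs
            if (tNear > tFar) return false;        // the slab intervals do not overlap
        }
        return true;
    }
}
```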

Shading

Once the closest visible object and its point of intersection with the test ray have been identified, the pixel must be properly colored, or shaded. The goal of the shading step is to determine the appearance of the rendered object's surface. An artist or programmer can simulate a wide variety of textures and materials simply by varying the surface reflectance properties of an object, such as color, reflectivity, transparency, or surface normal direction.

Material appearance is usually highly dependent on lighting conditions. Thus, during the shading step, an approximation of the incident illumination must be obtained; often this is done by extending other rays into the scene (see the sections on lighting models and recursion). A description of the surface's light-scattering properties, called a bidirectional reflectance distribution function (BRDF), is then used to determine how much light is directed back toward the camera. The properties of the BRDF are what give the surface its overall appearance. The simplest BRDF assumes that light is scattered uniformly in all directions, which results in the Lambertian reflectance model. Transparency and translucency effects may be computed by extending the BRDF to cover the unit sphere and gathering light incident from "underneath" the surface, or by applying a more complicated bidirectional scattering distribution function (BSDF). Other terms, such as those for emissivity, may be applied at this stage as well to obtain the final brightness of the pixel.
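The Lambertian BRDF is the constant $f_r = \rho/\pi$, where the albedo $\rho$ is the fraction of incident light the surface reflects. The sketch below, with hypothetical names, numerically integrates $f_r\cos\theta$ over the hemisphere above the surface to show that the $1/\pi$ factor makes the total reflected fraction come out to $\rho$; the midpoint-rule integration is just a convenient choice for the demonstration.

```java
final class Lambertian {
    // Lambertian BRDF: the same value for every pair of incoming and outgoing directions.
    // albedo is the fraction of incident light the surface reflects (0..1).
    static double brdf(double albedo) {
        return albedo / Math.PI;
    }

    // Integrates f_r * cos(theta) over the hemisphere with the midpoint rule in theta.
    // The integrand does not depend on phi, so the phi integral is a factor of 2*pi;
    // sin(theta) is the solid-angle Jacobian of spherical coordinates.
    static double reflectedFraction(double albedo, int steps) {
        double dTheta = (Math.PI / 2) / steps;
        double sum = 0.0;
        for (int i = 0; i < steps; i++) {
            double theta = (i + 0.5) * dTheta;
            sum += brdf(albedo) * Math.cos(theta) * Math.sin(theta) * dTheta;
        }
        return 2 * Math.PI * sum;
    }
}
```

For example, `Lambertian.reflectedFraction(0.8, 1000)` returns a value very close to 0.8.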

Typically, the above steps are performed separately for each color channel to obtain a full-color image. However, a simple RGB color model may be insufficient to accurately reproduce more advanced effects like chromatic aberration. In these cases, a spectral technique may be used instead, in which light is sampled at multiple wavelengths.
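Per-channel shading can be sketched as follows, assuming Lambertian reflection; the `Rgb` record and `PerChannel.shade` are hypothetical names. Each channel of the incoming light is multiplied by the matching channel of the surface albedo, so, for example, a red surface under white light reflects no green or blue.

```java
// Hypothetical RGB triple; each channel is shaded independently.
record Rgb(double r, double g, double b) {
    // Component-wise product: each light channel is filtered by the matching surface channel.
    Rgb times(Rgb o) { return new Rgb(r * o.r, g * o.g, b * o.b); }
    Rgb scale(double s) { return new Rgb(r * s, g * s, b * s); }
}

final class PerChannel {
    // Lambertian shading evaluated once per channel: (albedo / pi) * light * max(0, cos(theta)).
    static Rgb shade(Rgb albedo, Rgb light, double cosTheta) {
        return albedo.times(light).scale(Math.max(0.0, cosTheta) / Math.PI);
    }
}
```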

Sub-programs that perform these coloring steps are called shaders.

Pseudocode

Pseudocode for the ray tracing algorithm, in Java syntax. The types `Light`, `SceneObject`, `Camera`, `Image`, `Ray`, `Vector3D` and `Color` are assumed to be defined elsewhere:

```java
static Light[] lights;
static SceneObject[] objects;

public void render(Camera cam, Image image) {
    for (int y = 0; y < image.height; y++) {
        for (int x = 0; x < image.width; x++) {
            // compute this pixel's location in the image plane
            Vector3D pixelLocation = cam.coordinatesOfPixel(x, y, image);

            // generate a ray passing from the camera through the current pixel
            Ray r = new Ray(cam.location, pixelLocation.minus(cam.location));

            // find the closest object intersecting r
            SceneObject hitObject = trace(r);

            Color pixelColor;
            if (hitObject != null) {
                // figure out the color of the object hit
                pixelColor = hitObject.shade(r, lights, objects);
            } else {
                // no object hit; use the background color
                pixelColor = Color.BLACK;
            }

            // store the result in the image
            image.setPixel(x, y, pixelColor);
        }
    }
}

public SceneObject trace(Ray r) {
    SceneObject bestHit = null;
    double closest = Double.POSITIVE_INFINITY;

    for (SceneObject o : objects) {
        if (o.intersectsWith(r)) {
            double rayDistance = o.distanceAlongRay(r);
            // skip hits behind the camera (distance <= 0); keep the nearest one
            if (rayDistance > 0 && rayDistance < closest) {
                bestHit = o;
                closest = rayDistance;
            }
        }
    }
    return bestHit;
}
```

Ray direction

Comparison with nature

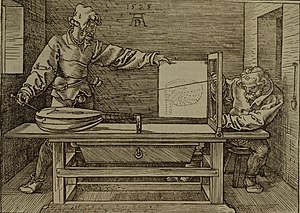

Let us take the perspective that a ray tracer generates an image by modeling the principles of a camera. In real life, light rays start at a light source, reflect off objects in the scene, and pass through the camera lens to form an image on the film. However, simulating this directly on a computer would be inefficient: the overwhelming majority of photons never enter the camera lens at all. We have no way of knowing ahead of time whether a photon leaving its source will eventually strike the film, and computing those unrecorded paths would be tremendously wasteful.

Instead, the ray tracing algorithm starts at the "film" (image plane) and sends rays outward into the scene, in order to determine where the light arriving there came from. This ensures that every traced light path intersects the image plane and is therefore recorded by the camera, greatly improving efficiency. The trick works because light paths are reversible: a path that light can follow from a source to the film is equally valid when traversed in the opposite direction.

Terminology

The process of shooting rays from the eye toward a light source is sometimes referred to as backwards ray tracing, since it is the opposite of the direction photons actually travel. However, this terminology is ambiguous: early ray tracing was always done from the eye, and researchers such as James Arvo used the term backwards ray tracing to refer to shooting rays away from light sources and gathering the results. It is therefore clearer to distinguish between eye-based and light-based ray tracing.

Light-based ray tracing

Computer simulations that generate images by casting rays away from the light source do exist; these techniques are often necessary to accurately reproduce optical effects such as caustics. A common light-based method is photon mapping, which combines a "forward" simulation of light paths with standard ray tracing techniques. In photon mapping, irradiance information is generated by propagating light rays away from a light source and storing photon "impacts" on the surfaces they strike. The resulting data is then read during the ray tracing pass.[1][2] Bidirectional path tracing is also popular: light paths are traced from both the camera and the scene's light sources, and are subsequently joined by a connecting ray after some length.[3][4] Other projects that stochastically simulate the complete path of a photon from source to film have been tried (see photon soup), but they are generally valuable only as curiosities due to their extreme inefficiency.

Lighting models

There are a number of methods for estimating incident light, which vary widely in complexity and efficiency. "Local" illumination models make only rough estimates of irradiance and do not consider indirect light contributions, such as light reflected from brightly lit nearby objects. These models are therefore much less computationally expensive to evaluate, achieving their efficiency at the expense of realism. Global illumination models attempt to solve the rendering equation more accurately, and produce much more realistic results at the expense of efficiency.

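A particular lighting model is an expression for the incident radiance (incoming light) $L_i(\mathbf{x}, \omega_i)$ in the rendering equation, as a function of position ($\mathbf{x}$) and incoming direction ($\omega_i$):

$$L_o(\mathbf{x},\omega_o) = L_e(\mathbf{x},\omega_o) + \int_{\Omega} f_r(\mathbf{x},\omega_i,\omega_o)\, L_i(\mathbf{x},\omega_i)\, (\omega_i\cdot\mathbf{n})\, d\omega_i$$

where $L_o$ is the light leaving $\mathbf{x}$ toward the camera, $L_e$ is light emitted by the surface, $f_r$ is the BRDF, $\mathbf{n}$ is the surface normal, and $\Omega$ is the hemisphere of directions above the surface.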

Local illumination models

Local illumination models derive their speed from the fact that incident illumination can be calculated without sampling the surrounding scene. For this to be possible, they make the simplifying assumption that if a surface faces a light, the light reaches that surface and is not blocked; no surface is ever in shadow.

For each of the following models, the brightness of a pixel may be written only in terms of the surface point $\mathbf{x}$, the surface normal $\mathbf{n}$, the surface reflectance properties (the BRDF $f_r$), the direction to the camera $\omega_o$, and the position of the light source $\mathbf{p}$.

- Ambient light sources are perhaps the simplest possible model. In this case, illumination is assumed to be of constant color and brightness in all directions. For an ambient light source of constant brightness $L_a$:

  $$L_i(\mathbf{x},\omega_i) = L_a$$

- Directional light sources are modeled as a point light source at infinite distance; incoming light rays are parallel and have constant brightness. For a directional light source with inward direction $\mathbf{d}$ and brightness $I$, the incident light is:

  $$L_i(\mathbf{x},\omega_i) = I\,\delta(\omega_i + \mathbf{d})$$

  where $\delta$ is the Dirac delta function, with its singularity at $\omega_i = -\mathbf{d}$. In this case, the rendering equation may be solved to give the total brightness of a pixel:

  $$L_o(\mathbf{x},\omega_o) = f_r(\mathbf{x},-\mathbf{d},\omega_o)\, I\,\max(0,\ -\mathbf{d}\cdot\mathbf{n})$$

  The $\max$ is taken to account for the case where $\mathbf{d}\cdot\mathbf{n} > 0$; for an opaque object there can be no illumination on a surface directed away from the light source.

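- Point light sources emit light uniformly in all directions; however, their brightness falls off according to the inverse square law. For a point light source at position $\mathbf{p}$, the incident light is:

  $$L_i(\mathbf{x},\omega_i) = \frac{I}{\lVert\mathbf{x}-\mathbf{p}\rVert^2}\,\delta(\omega_i + \mathbf{l})$$

  where $\mathbf{l} = \dfrac{\mathbf{x}-\mathbf{p}}{\lVert\mathbf{x}-\mathbf{p}\rVert}$ is the inward direction from the light source at $\mathbf{p}$ to $\mathbf{x}$, $I$ is the brightness of the light source at unit distance, and $\delta$ is the Dirac delta function, with its singularity at $\omega_i = -\mathbf{l}$. In this case, the total brightness works out to:

  $$L_o(\mathbf{x},\omega_o) = f_r(\mathbf{x},-\mathbf{l},\omega_o)\,\frac{I}{\lVert\mathbf{x}-\mathbf{p}\rVert^2}\,\max(0,\ -\mathbf{l}\cdot\mathbf{n})$$

  The $\max$ is taken to account for the case where $\mathbf{l}\cdot\mathbf{n} > 0$; for an opaque object there can be no illumination on a surface directed away from the light source.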

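- Spot light sources emit a cone of light pointed in a particular direction $\mathbf{s}$, with a spread angle $\theta$ measured from the cone's axis. The incident light from a simple spotlight at position $\mathbf{p}$ with brightness $I$ may be written as:

  $$L_i(\mathbf{x},\omega_i) = \begin{cases} I\,\delta(\omega_i + \mathbf{l}) & \text{if } \mathbf{l}\cdot\mathbf{s} \ge \cos\theta \\ 0 & \text{otherwise} \end{cases}$$

  where $\mathbf{l} = \dfrac{\mathbf{x}-\mathbf{p}}{\lVert\mathbf{x}-\mathbf{p}\rVert}$ is the inward direction from the light source at $\mathbf{p}$ to $\mathbf{x}$, and $\delta$ is the Dirac delta function, with its singularity at $\omega_i = -\mathbf{l}$. In this case, total brightness is:

  $$L_o(\mathbf{x},\omega_o) = \begin{cases} f_r(\mathbf{x},-\mathbf{l},\omega_o)\, I\,\max(0,\ -\mathbf{l}\cdot\mathbf{n}) & \text{if } \mathbf{l}\cdot\mathbf{s} \ge \cos\theta \\ 0 & \text{otherwise} \end{cases}$$

  Additionally, a spotlight may implement the inverse square law, or define a "hot spot" angle within which the brightness does not fall off.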

- Environment light sources define a map $E(\omega)$ of incoming light. This map may be thought of as an environment or "skybox" at infinite distance which contributes light to the scene. Typically $E$ is a two-dimensional image mapped to the sphere of incoming directions, in the form of a cube map or spherical map. In this case, incident light is:

  $$L_i(\mathbf{x},\omega_i) = E(\omega_i)$$

  The above may be plugged into the rendering equation and simplified into a local lighting model, provided the BRDF is constant with respect to both the viewing direction $\omega_o$ and the incoming direction $\omega_i$, as for a Lambertian surface. In this case, it is possible to factor $f_r$ out of the integral and precompute

  $$E'(\mathbf{n}) = \int_{S^2} E(\omega_i)\,\max(0,\ \omega_i\cdot\mathbf{n})\,d\omega_i$$

  as a function of $\mathbf{n}$, then store it in a new map $E'$. Thus we have a simplified rendering equation:

  $$L_o(\mathbf{x},\omega_o) = f_r\,E'(\mathbf{n}) + L_r(\mathbf{x},\omega_o)$$

  where $L_r$ accounts for other effects such as specular highlights, reflection, or additional light sources.
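The directional, point and spot light models, together with the precomputation of an environment map (the integral over all directions of the incoming light weighted by $\max(0, \omega_i\cdot\mathbf{n})$), can be sketched in Java as below. This is a minimal sketch under several assumptions: vectors are `double[3]` arrays, the BRDF value is passed in as a number already evaluated for the relevant directions, the environment map is supplied as a function of direction, and the environment term is estimated by Monte Carlo integration with uniformly distributed directions, which is only one of several possible ways to precompute it. The class and method names are hypothetical.

```java
import java.util.Random;
import java.util.function.ToDoubleFunction;

// Hypothetical helper class; vectors are double[3] arrays {x, y, z}.
final class LocalLights {
    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    // Directional light with unit inward direction d and brightness I:
    // L_o = f_r * I * max(0, -d . n).
    static double directional(double fr, double intensity, double[] d, double[] n) {
        return fr * intensity * Math.max(0.0, -dot(d, n));
    }

    // Point light at p with brightness I at unit distance:
    // L_o = f_r * I / |x - p|^2 * max(0, -l . n), where l = (x - p) / |x - p|.
    static double point(double fr, double intensity, double[] p, double[] x, double[] n) {
        double[] v = { x[0] - p[0], x[1] - p[1], x[2] - p[2] };
        double distSq = dot(v, v);
        double cos = -dot(v, n) / Math.sqrt(distSq); // equals -l . n
        return fr * intensity / distSq * Math.max(0.0, cos);
    }

    // Simple spotlight at p with unit axis s and spread angle theta measured from the
    // axis; like the simple model in the text, it has no inverse-square fall-off.
    static double spot(double fr, double intensity, double[] p, double[] s, double theta,
                       double[] x, double[] n) {
        double[] v = { x[0] - p[0], x[1] - p[1], x[2] - p[2] };
        double dist = Math.sqrt(dot(v, v));
        double[] l = { v[0] / dist, v[1] / dist, v[2] / dist };
        if (dot(l, s) < Math.cos(theta)) {
            return 0.0; // x lies outside the cone of light
        }
        return fr * intensity * Math.max(0.0, -dot(l, n));
    }

    // Monte Carlo estimate of the precomputed environment term:
    // integral over all directions w of E(w) * max(0, w . n),
    // using directions drawn uniformly from the unit sphere (pdf = 1 / (4 pi)).
    static double environmentIrradiance(ToDoubleFunction<double[]> env, double[] n,
                                        int samples, Random rng) {
        double sum = 0.0;
        for (int k = 0; k < samples; k++) {
            double z = 1.0 - 2.0 * rng.nextDouble();      // uniform in (-1, 1]
            double phi = 2.0 * Math.PI * rng.nextDouble();
            double r = Math.sqrt(Math.max(0.0, 1.0 - z * z));
            double[] w = { r * Math.cos(phi), r * Math.sin(phi), z };
            sum += env.applyAsDouble(w) * Math.max(0.0, dot(w, n));
        }
        return 4.0 * Math.PI * sum / samples;              // average of f / pdf
    }
}
```

With a constant environment of brightness 1, `environmentIrradiance` should return a value close to π, which is the exact value of that integral; uniform sampling is simple but noisy, and a renderer would typically precompute the map once per normal direction rather than per pixel.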

Global illumination models


Unlike local illumination models, global illumination models may account for complex environment-dependent effects like shadows, refraction, and diffuse interreflection. The most accurate global illumination models can produce near-photorealistic results. By definition, a global illumination model must sample the surrounding scene in order to estimate incident light. This involves recursively tracing additional rays into the scene, possibly a very large number of times, producing the computational complexity that makes global illumination models so much slower than local ones.

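- Shadows can be added to any of the above local illumination models by testing whether the light source at $\mathbf{p}$ is occluded by another object in the scene. This may be done by sending out a ray along the vector from $\mathbf{x}$ to $\mathbf{p}$ and testing for intersection with an intervening object $O$:

  $$L_o(\mathbf{x},\omega_o) = \begin{cases} 0 & \text{if } \mathbf{x} + t\,\mathbf{v} \in O \text{ for some object } O \text{ and some } 0 < t < 1 \\ L_{\text{local}}(\mathbf{x},\omega_o) & \text{otherwise} \end{cases}$$

  where $\mathbf{v} = \mathbf{p} - \mathbf{x}$ is the vector connecting $\mathbf{x}$ to $\mathbf{p}$, and $L_{\text{local}}$ is the brightness ordinarily given by the local illumination model.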

- Area light sources are modeled as luminous objects with finite dimensions. For an area light source defined by the luminous object $A$ with emitted radiance $L_A$:

  $$L_i(\mathbf{x},\omega_i) = \begin{cases} L_A & \text{if the first object hit by the ray from } \mathbf{x} \text{ in direction } \omega_i \text{ is } A \\ 0 & \text{otherwise} \end{cases}$$

  In a ray tracer implementation, it is generally advisable to have a method of quickly generating a ray that intersects $A$ in small or constant time, to avoid wasting computation on parts of the hemisphere where $L_i$ is 0. The generated ray can then be traced into the scene and tested for intersection with other objects to determine whether $A$ is occluded. The process is repeated with many such rays in order to estimate how much of $A$ is visible, giving an approximation of the necessary integral of $L_i$ over the solid angle subtended by $A$ (see Recursion).
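The shadow-ray and area-light ideas above can be sketched for a scene made only of spheres. Everything here is a hypothetical illustration: the `Sphere` record, `Shadows.occluded`, `Shadows.visibleFraction`, and the choice of a square area light lying in a plane of constant $z$, sampled at uniformly random points. `visibleFraction` only estimates what fraction of the light is unoccluded from $\mathbf{x}$; a full estimate of the lighting integral would also weight each sample by the light's emitted radiance and by the cosine and distance terms.

```java
import java.util.List;
import java.util.Random;

// Hypothetical scene primitive; vectors are double[3] arrays {x, y, z}.
record Sphere(double[] center, double radius) {}

final class Shadows {
    static final double EPSILON = 1e-6;

    static double dot(double[] a, double[] b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

    // Shadow-ray test: true if some sphere surface crosses the segment x + t*v,
    // EPSILON < t < 1 - EPSILON, where v = target - x.
    static boolean occluded(double[] x, double[] target, List<Sphere> scene) {
        double[] v = { target[0] - x[0], target[1] - x[1], target[2] - x[2] };
        double a = dot(v, v);
        for (Sphere s : scene) {
            double[] c = s.center();
            double[] oc = { x[0] - c[0], x[1] - c[1], x[2] - c[2] };
            double halfB = dot(oc, v);
            double k = dot(oc, oc) - s.radius() * s.radius();
            double disc = halfB * halfB - a * k;
            if (disc < 0) continue;                  // this sphere misses the line entirely
            double root = Math.sqrt(disc);
            double t1 = (-halfB - root) / a, t2 = (-halfB + root) / a;
            if ((t1 > EPSILON && t1 < 1 - EPSILON) || (t2 > EPSILON && t2 < 1 - EPSILON)) {
                return true;                         // hit lies between x and the light
            }
        }
        return false;
    }

    // Estimates the visible fraction of a square light (centered at 'center', side 'size',
    // in the plane z = center[2]) by shadow-testing rays to random points on it.
    static double visibleFraction(double[] x, double[] center, double size, List<Sphere> scene,
                                  int samples, Random rng) {
        int visible = 0;
        for (int i = 0; i < samples; i++) {
            double[] q = { center[0] + (rng.nextDouble() - 0.5) * size,
                           center[1] + (rng.nextDouble() - 0.5) * size,
                           center[2] };
            if (!occluded(x, q, scene)) visible++;
        }
        return (double) visible / samples;
    }
}
```

A partially covered light yields a fraction between 0 and 1, which is what produces soft shadow edges (penumbrae) when it scales the light's contribution.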

Shading models

There are a variety of shading models, ranging widely in complexity. All but the simplest require at least one surface normal, whose computation is specific to the primitive being rendered. This normal may additionally be perturbed by a bump map or a normal map to produce the effect of texture.

- normals

- example: taking the normal of a sphere

- shadow rays
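As an example of a primitive-specific normal, the normal of a sphere at a surface point $\mathbf{p}$ is simply $(\mathbf{p} - \mathbf{c})/r$ for center $\mathbf{c}$ and radius $r$. The hypothetical `SphereNormal.normalAt` below sketches this, and additionally flips the normal when the ray arrives from inside the sphere, a common convention (assumed here) so that shading always uses a normal facing the incoming ray.

```java
final class SphereNormal {
    // Outward unit normal of a sphere at surface point p: (p - center) / radius.
    // If the ray direction points the same way as the normal (the ray hit the
    // sphere from inside), the normal is flipped so it faces the incoming ray.
    static double[] normalAt(double[] p, double[] center, double radius, double[] rayDir) {
        double[] n = { (p[0] - center[0]) / radius,
                       (p[1] - center[1]) / radius,
                       (p[2] - center[2]) / radius };
        double facing = n[0] * rayDir[0] + n[1] * rayDir[1] + n[2] * rayDir[2];
        if (facing > 0) {
            n[0] = -n[0];
            n[1] = -n[1];
            n[2] = -n[2];
        }
        return n;
    }
}
```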

links:

Surface properties

These can all be thought of as ways of perturbing the BRDF:

- color

- texture maps

- bumps

- bump map

- normal maps and other normal perturbation

- specularity

- transparency

Recursion


- transparency

- reflectivity

- brdfs

- simplified with environment map
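The secondary rays spawned for reflectivity and transparency need new directions. The sketch below uses the standard mirror-reflection formula $\mathbf{r} = \mathbf{d} - 2(\mathbf{d}\cdot\mathbf{n})\mathbf{n}$ and a vector form of Snell's law for refraction; the class and method names are hypothetical, and the refraction routine assumes unit vectors with the normal facing against the incoming ray.

```java
final class Secondary {
    static double dot(double[] a, double[] b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

    // Mirror reflection of direction d about unit normal n: r = d - 2 (d . n) n.
    static double[] reflect(double[] d, double[] n) {
        double k = 2.0 * dot(d, n);
        return new double[] { d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2] };
    }

    // Refraction by Snell's law. d: unit incoming direction; n: unit normal facing
    // against d; eta = n1 / n2, the ratio of refractive indices.
    // Returns null on total internal reflection.
    static double[] refract(double[] d, double[] n, double eta) {
        double cosI = -dot(d, n);
        double k = 1.0 - eta * eta * (1.0 - cosI * cosI);  // cos^2 of the transmitted angle
        if (k < 0) return null;                            // total internal reflection
        double b = eta * cosI - Math.sqrt(k);
        return new double[] { eta * d[0] + b * n[0], eta * d[1] + b * n[1], eta * d[2] + b * n[2] };
    }
}
```

In a recursive tracer, each returned direction would become a new ray from the hit point, traced up to some maximum depth, and its result weighted by the surface's reflectivity or transparency.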

History


Ray casting

The first ray casting algorithm used for rendering was presented by Arthur Appel in 1968.[citation needed] One important advantage ray casting offered over previous scanline rendering algorithms was its ability to easily deal with non-planar surfaces and solids, such as cones and spheres. If a mathematical surface can be intersected by a ray, it can be rendered using ray casting. Scanline renderers, on the other hand, can only construct objects out of planar polygons. Thus, the new algorithm allowed the construction and rendering of elaborate objects using solid modeling techniques that were not possible before. Ray casting, however, is not recursive: no additional rays are sent out from a surface to compute shadows, reflection, or refraction. For this reason, the illumination models used are exclusively local, and there is an inherent limit to the realism possible with a ray caster.

Ray casting for producing computer graphics was first used by scientists at Mathematical Applications Group, Inc. (MAGI) of Elmsford, New York. The company was founded in 1966 to perform radiation exposure calculations for the Department of Defense.[citation needed] MAGI's software calculated not only how gamma rays bounced off surfaces (ray casting for radiation had been done since the 1940s), but also how they penetrated and refracted within them. These studies helped the government with military applications such as constructing military vehicles that would protect troops from radiation and designing re-entry vehicles for space exploration. Under the direction of Dr. Philip Mittelman, the scientists developed a method of generating images using the same basic software. In 1972, MAGI became a commercial animation studio. This studio used ray casting to generate 3-D computer animation for television commercials, educational films, and eventually feature films; they created much of the animation in the film Tron using ray casting exclusively. MAGI went out of business in 1985.

Ray tracing

The next important research breakthrough came from Turner Whitted in 1979.[citation needed] Previous algorithms cast rays from the eye into the scene, but traced them no further. Whitted continued the process by adding recursion. When a ray hit a surface, it could generate up to three new types of rays: reflection, refraction, and shadow. Each of these rays would then be traced back into the scene and rendered. Reflected rays could model shiny surfaces, refracted rays could model transparent refractive objects, and shadow rays could test whether a light source was obstructed, casting the surface in shadow.

In real-time

The first implementation of a "real-time" ray tracer was credited at the 2005 SIGGRAPH computer graphics conference as the REMRT/RT tools developed by Mike Muuss for the BRL-CAD solid modeling system. Initially implemented in 1986 and published in 1987 at USENIX, the BRL-CAD ray tracer is the first known implementation of a parallel network distributed ray tracing system that achieved several frames per second in rendering performance.[5] This performance was attained by leveraging the highly optimized yet platform-agnostic LIBRT ray-tracing engine in BRL-CAD and by using solid implicit CSG geometry on several shared-memory parallel machines over a commodity network. BRL-CAD's ray tracer, including the REMRT/RT tools, continues to be available and developed today as open-source software.[6]

Since then, there has been considerable effort and research toward implementing ray tracing at real-time speeds for a variety of purposes on stand-alone desktop configurations. These purposes include interactive 3D graphics applications such as demoscene productions, computer and video games, and image rendering. Some real-time software 3D engines based on ray tracing have been developed by hobbyist demo programmers since the late 1990s.[7]

The OpenRT project includes a highly optimized software core for ray tracing along with an OpenGL-like API, in order to offer an alternative to the current rasterization-based approach for interactive 3D graphics. Ray tracing hardware, such as the experimental Ray Processing Unit developed at Saarland University, has been designed to accelerate some of the computationally intensive operations of ray tracing. On March 16, 2007, Saarland University revealed an implementation of a high-performance ray tracing engine that allowed computer games to be rendered via ray tracing without intensive resource usage.[8]

On Thursday, June 12, 2008, Intel demonstrated Enemy Territory: Quake Wars running in basic HD (720p) resolution, the first time the company was able to render the game at a standard video resolution instead of 1024 x 1024 or 512 x 512 pixels. ETQW ran at 14-29 frames per second. The demonstration ran on a 16-core (4-socket, 4-core) Tigerton system running at 2.93 GHz.[9]

Work these in somehow:

- Phong illumination model

- phong shading

- Terms of rendering function can be added to each other

External links

- 3D Object Intersection - A large table of object intersection algorithms

- The Internet Ray Tracing Competition – Yearly image/animation contest

- PixelMachine - Article on building a ray tracer "over a weekend"

- What is ray tracing? - An ongoing tutorial of ray tracing techniques, from simple to advanced

- The Ray Tracing News – Short research articles, and links to resources

- Interactive Ray Tracing: The replacement of rasterization? – A 2006 thesis about the state of real time ray tracing

- Quake 4 Raytraced by Daniel Pohl – An implementation of real time ray tracing in an actual game

- Games using real time ray tracing

- A series of tutorials on implementing a raytracer using C++

Citations

- ^ "Global Illumination using Photon Maps". http://graphics.ucsd.edu/~henrik/papers/photon_map/global_illumination_using_photon_maps_egwr96.pdf

- ^ http://web.cs.wpi.edu/~emmanuel/courses/cs563/write_ups/zackw/photon_mapping/PhotonMapping.html

- ^ Eric P. Lafortune and Yves D. Willems (December 1993). "Bi-Directional Path Tracing". Proceedings of Compugraphics '93: 145–153.

- ^ Péter Dornbach. "Implementation of bidirectional ray tracing algorithm". Retrieved 2008-06-11.

- ^ See Proceedings of 4th Computer Graphics Workshop, Cambridge, MA, USA, October 1987. Usenix Association, 1987. pp 86–98.

- ^ "BRL-CAD Overview". Retrieved 2007-09-17.

- ^ Piero Foscari. "The Realtime Raytracing Realm". ACM Transactions on Graphics. Retrieved 2007-09-17.

- ^ Mark Ward (March 16, 2007). "Rays light up life-like graphics". BBC News. Retrieved 2007-09-17.

- ^ Theo Valich (June 12, 2008). "Intel converts ET: Quake Wars to ray-tracing". TG Daily. Retrieved 2008-06-16.

http://www.siggraph.org/education/materials/HyperGraph/illumin/reflect2.htm

- dirac delta

- lighting models

- rendering equation