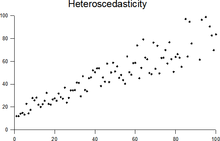

Heteroscedasticity


In statistics, a sequence of random variables is heteroscedastic, or heteroskedastic, if the random variables have different variances. The term means "differing variance" and comes from the Greek "hetero" ('different') and "skedasis" ('dispersion'). In contrast, a sequence of random variables is called homoscedastic if it has constant variance.

Suppose there is a sequence of random variables $\{Y_t\}_{t=1}^n$ and a sequence of vectors of random variables $\{X_t\}_{t=1}^n$. In dealing with conditional expectations of $Y_t$ given $X_t$, the sequence $\{Y_t\}_{t=1}^n$ is said to be heteroscedastic if the conditional variance of $Y_t$ given $X_t$ changes with $t$. Some authors refer to this as conditional heteroscedasticity to emphasize that it is the sequence of conditional variances that changes, not the unconditional variance. In fact, conditional heteroscedasticity can be observed even in a sequence of unconditionally homoscedastic random variables; however, the opposite does not hold.
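To make the distinction concrete, the Python sketch below simulates an ARCH(1) process, a standard example of a sequence that is conditionally heteroscedastic but unconditionally homoscedastic. It assumes, for illustration, that $X_t$ is simply the previous value $Y_{t-1}$, that the innovations are standard normal, and that the parameters are $a_0 = 1$ and $a_1 = 0.5$ (the names `a0`, `a1` and `simulate_arch1` are hypothetical). The conditional variance $\operatorname{Var}(Y_t \mid Y_{t-1}) = a_0 + a_1 Y_{t-1}^2$ changes from step to step, while for $0 \le a_1 < 1$ the stationary unconditional variance is the constant $a_0/(1-a_1)$.

```python
import random


def simulate_arch1(n, a0=1.0, a1=0.5, seed=0):
    """Simulate an ARCH(1) process with illustrative parameters.

    Y_t = sigma_t * e_t, where sigma_t^2 = a0 + a1 * Y_{t-1}^2 and the
    e_t are independent N(0, 1) draws. Returns the simulated values and
    the conditional variances Var(Y_t | Y_{t-1}) used at each step.
    """
    rng = random.Random(seed)
    ys, cond_vars = [], []
    y_prev = 0.0  # start at zero; over a long run the start value has negligible effect
    for _ in range(n):
        cond_var = a0 + a1 * y_prev ** 2  # varies with t: conditional heteroscedasticity
        y = cond_var ** 0.5 * rng.gauss(0.0, 1.0)
        ys.append(y)
        cond_vars.append(cond_var)
        y_prev = y
    return ys, cond_vars


if __name__ == "__main__":
    ys, cond_vars = simulate_arch1(200_000)
    mean = sum(ys) / len(ys)
    sample_var = sum((y - mean) ** 2 for y in ys) / len(ys)
    # The sample variance should sit near a0 / (1 - a1) = 2.0,
    # even though the conditional variance ranges widely.
    print(f"sample variance: {sample_var:.3f}")
    print(f"conditional variance range: {min(cond_vars):.3f} to {max(cond_vars):.1f}")
```

Running the script should show a sample variance close to 2 alongside conditional variances that sometimes reach many times their minimum value of 1.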

When using some statistical techniques, such as ordinary least squares (OLS), a number of assumptions are typically made. One of these is that the error term has a constant variance. This might not be true even if the error terms are assumed to be drawn from distributions of the same type (for example, all normal).

For example, the variance of the error term could change or increase with each observation, something that is often the case with cross-sectional or time series measurements. Heteroscedasticity is often studied as part of econometrics, which frequently deals with data exhibiting it. White's influential paper (White 1980) used "heteroskedasticity" rather than "heteroscedasticity", whereas later econometrics textbooks, such as Gujarati et al.'s Basic Econometrics (2009), use "heteroscedasticity".

With the advent of robust standard errors, which allow inference without specifying the conditional second moment of the error term, testing for conditional homoscedasticity is not as important as it was in the past.[citation needed]

The econometrician Robert Engle won the 2003 Nobel Memorial Prize in Economic Sciences for his studies of regression analysis in the presence of heteroscedasticity, which led to his formulation of the autoregressive conditional heteroskedasticity (ARCH) modeling technique.

Consequences

Heteroscedasticity does not cause ordinary least squares coefficient estimates to be biased, although it can cause the OLS estimates of the variance (and thus the standard errors) of the coefficients to be biased, possibly above or below the true population variance. Thus, regression analysis using heteroscedastic data still provides an unbiased estimate of the relationship between the predictor variable and the outcome, but the standard errors, and therefore the inferences obtained from the analysis, are suspect. Biased standard errors lead to biased inference, so the results of hypothesis tests may be wrong. For example, if heteroscedasticity is present and OLS underestimates the standard errors, a researcher may reject a null hypothesis at the chosen significance level, declaring a result statistically significant, when that null hypothesis is in fact true of the population (that is, make a type I error).
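A small Monte Carlo experiment illustrates both halves of this claim. The Python sketch below assumes a hypothetical design: a fixed regressor evenly spaced on $[-1, 1]$, true intercept 1 and slope 2, and normal errors whose standard deviation is $2|x|$. Averaged over many simulated samples, the OLS slope stays close to its true value, but in this particular design the classical OLS standard error, which assumes a single common error variance, is noticeably smaller than the actual spread of the slope estimates. In other designs the bias can go the other way; the function names here are illustrative only.

```python
import random
import statistics


def ols_slope_and_classical_se(x, y):
    """OLS slope of y on x and its classical standard error.

    The classical formula s^2 / Sxx assumes every error has the same variance.
    """
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    intercept = ybar - slope * xbar
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = rss / (n - 2)  # pooled error variance estimate
    return slope, (s2 / sxx) ** 0.5


def monte_carlo(reps=2000, n=50, beta0=1.0, beta1=2.0, seed=1):
    """Repeatedly simulate heteroscedastic data and fit OLS.

    Hypothetical design: x evenly spaced on [-1, 1], errors N(0, (2|x|)^2).
    Returns (mean of slope estimates, standard deviation of slope
    estimates, mean of the classical standard errors).
    """
    rng = random.Random(seed)
    x = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    slopes, ses = [], []
    for _ in range(reps):
        # Error standard deviation grows with |x|: heteroscedastic errors.
        y = [beta0 + beta1 * xi + rng.gauss(0.0, 2.0 * abs(xi)) for xi in x]
        slope, se = ols_slope_and_classical_se(x, y)
        slopes.append(slope)
        ses.append(se)
    return statistics.mean(slopes), statistics.stdev(slopes), statistics.mean(ses)


if __name__ == "__main__":
    mean_slope, sd_slope, mean_se = monte_carlo()
    print(f"average slope estimate: {mean_slope:.3f} (true value 2.0)")
    print(f"actual spread of the estimates: {sd_slope:.3f}")
    print(f"average classical OLS standard error: {mean_se:.3f}")
```

Because the reported standard error is too small here, t-statistics are too large, which is exactly the mechanism behind the inflated type I error rate described above.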

It is widely known that, under certain assumptions, the OLS estimator has a normal asymptotic distribution when properly normalized and centered (even when the data do not come from a normal distribution). This result is used to justify using a normal distribution, or a chi-squared distribution (depending on how the test statistic is calculated), when conducting a hypothesis test. This holds even under heteroscedasticity. More precisely, in the presence of heteroscedasticity the OLS estimator, when properly normalized and centered, is still asymptotically normal, but with a variance-covariance matrix that differs from the homoscedastic case. White (1980)[1] proposed a consistent estimator for the variance-covariance matrix of the asymptotic distribution of the OLS estimator. This justifies hypothesis testing based on OLS estimates combined with White's variance-covariance estimator under heteroscedasticity.
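For a regression with one predictor and an intercept, White's estimator (often labeled HC0) has a simple closed form: each squared residual $e_i^2$ stands in for its own unknown error variance, giving $\widehat{\operatorname{Var}}(\hat\beta_1) = \sum_i (x_i - \bar x)^2 e_i^2 / S_{xx}^2$ with $S_{xx} = \sum_i (x_i - \bar x)^2$. The Python sketch below, restricted to this one-predictor case, computes it next to the classical standard error; the function name is hypothetical. For general designs a library is the practical choice, for example statsmodels accepts `cov_type="HC0"` in `OLS(...).fit`, and small-sample refinements such as HC1 to HC3 also exist.

```python
def ols_with_white_se(x, y):
    """Simple regression y = a + b*x by OLS.

    Returns (intercept, slope, classical SE of slope, White HC0 SE of slope).
    For one regressor plus intercept, the HC0 sandwich estimator of Var(slope)
    reduces to sum((x_i - xbar)^2 * e_i^2) / Sxx^2, where e_i are residuals.
    """
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    intercept = ybar - slope * xbar
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]

    # Classical estimate: one pooled error variance s^2.
    s2 = sum(e * e for e in resid) / (n - 2)
    se_classical = (s2 / sxx) ** 0.5

    # White (HC0): each squared residual stands in for its own error variance.
    meat = sum((xi - xbar) ** 2 * e * e for xi, e in zip(x, resid))
    se_white = (meat / sxx ** 2) ** 0.5
    return intercept, slope, se_classical, se_white


if __name__ == "__main__":
    x = [1, 2, 3, 4, 5]
    y = [2, 4, 5, 4, 5]
    a, b, se_ols, se_hc0 = ols_with_white_se(x, y)
    print(a, b, se_ols, se_hc0)
```

On this toy data the slope is 0.6, the classical standard error is about 0.283 and the HC0 standard error is about 0.185, a reminder that the White estimate is not always the larger of the two. The coefficients themselves are identical under both methods.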

Heteroscedasticity is also a major practical issue encountered in ANOVA problems.[2] The F test can still be used in some circumstances.[3]
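In ANOVA settings a common check for unequal group variances is the Brown–Forsythe variant of Levene's test, which is simply a one-way ANOVA F statistic computed on the absolute deviations of each observation from its group median (Levene's original version uses group means instead). The Python sketch below computes only the statistic; the function name is hypothetical, and the p-value would come from an F distribution with $k-1$ and $N-k$ degrees of freedom, which the standard library does not provide.

```python
import statistics


def brown_forsythe_statistic(groups):
    """Brown-Forsythe statistic for equal variances across groups.

    groups: a list of groups, each a list of observations.
    Computes the one-way ANOVA F statistic on z_ij = |y_ij - median_j|.
    Under the null of equal variances it is compared with F(k - 1, N - k),
    where k is the number of groups and N the total number of observations.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    z = []
    for g in groups:
        med = statistics.median(g)
        z.append([abs(v - med) for v in g])  # absolute deviations from the group median
    group_means = [sum(zg) / len(zg) for zg in z]
    grand_mean = sum(sum(zg) for zg in z) / n_total
    between = sum(len(zg) * (m - grand_mean) ** 2 for zg, m in zip(z, group_means))
    within = sum((v - m) ** 2 for zg, m in zip(z, group_means) for v in zg)
    return (between / (k - 1)) / (within / (n_total - k))


if __name__ == "__main__":
    # The second group is twice as spread out as the first.
    print(brown_forsythe_statistic([[1, 2, 3], [2, 4, 6]]))
```

For the two example groups the statistic works out to 0.8; with only three observations per group, such a small value gives no evidence of unequal variances.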

However, as Gujarati et al. note (Gujarati et al. 2009, p. 400),[4] students of econometrics should not overreact to heteroscedasticity. John Fox, the author of Applied Regression Analysis, wrote that "unequal error variance is worth correcting only when the problem is severe" (Fox 1997, p. 306;[5] cited in Gujarati et al. 2009, p. 400). Mankiw adds another word of caution: "heteroscedasticity has never been a reason to throw out an otherwise good model" (Mankiw 1990, p. 1648;[6] cited in Gujarati et al. 2009, p. 400).

Detection

There are several methods to test for the presence of heteroscedasticity:

- Park test (1966)[7]

- Glejser test (1969)

- White test (1980)

- Breusch–Pagan test

- Goldfeld–Quandt test

- Cook–Weisberg test

- Harrison–McCabe test

- Spearman rank correlation coefficient

- Brown–Forsythe test

- Levene test

In general, these methods are hypothesis tests. Each test consists of a test statistic (a mathematical expression computed from the data), a null hypothesis to be tested, an alternative hypothesis, and a statement about the distribution of the statistic under the null hypothesis.
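As an example of these ingredients, the Python sketch below implements the studentized (Koenker) form of the Breusch–Pagan test for a single regressor. The null hypothesis is that the error variance does not depend on $x$; the statistic is $nR^2$ from regressing the squared OLS residuals on $x$; and the distributional statement is that, under the null, this statistic is asymptotically $\chi^2$ with one degree of freedom. The function names and the simulated data are hypothetical; for multiple regressors, statsmodels offers `statsmodels.stats.diagnostic.het_breuschpagan`.

```python
import math
import random


def simple_ols(x, y):
    """Return (intercept, slope) of the least squares line of y on x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    return ybar - slope * xbar, slope


def breusch_pagan_koenker(x, y):
    """Studentized (Koenker) Breusch-Pagan test with a single regressor.

    Null hypothesis: the error variance does not depend on x.
    Statistic: n * R^2 from regressing the squared OLS residuals on x;
    under the null it is asymptotically chi-squared with 1 degree of freedom.
    Returns (statistic, p_value).
    """
    n = len(x)
    a, b = simple_ols(x, y)
    e2 = [(yi - a - b * xi) ** 2 for xi, yi in zip(x, y)]

    # Auxiliary regression of squared residuals on x.
    c, d = simple_ols(x, e2)
    mean_e2 = sum(e2) / n
    tss = sum((v - mean_e2) ** 2 for v in e2)
    rss = sum((v - c - d * xi) ** 2 for xi, v in zip(x, e2))
    stat = max(0.0, n * (1.0 - rss / tss))  # guard against tiny negative rounding

    # Chi-squared(1) upper tail: P(Z^2 > s) = erfc(sqrt(s / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


if __name__ == "__main__":
    rng = random.Random(42)
    x = [10 * i / 500 for i in range(1, 501)]
    # Simulated data whose error standard deviation grows with x.
    y = [1 + 0.5 * xi + rng.gauss(0.0, 0.1 + xi) for xi in x]
    print(breusch_pagan_koenker(x, y))
```

Only the asymptotic $\chi^2$ approximation is used, so the sketch does not require normally distributed errors.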

Many introductory statistics and econometrics books, for pedagogical reasons, present these tests under the assumption that the data set at hand comes from a normal distribution. A common misconception is that this assumption is necessary; in most cases it can be relaxed by using asymptotic distribution theory (also called asymptotic theory).

Most of the methods of detecting heteroscedasticity presented here can be used even when the data do not come from a normal distribution, because the distribution of the test statistic can be approximated using asymptotic theory[citation needed].

Fixes

There are four common corrections for heteroscedasticity:

- Use logged data. Series that grow exponentially often appear, when left unlogged, to have increasing variability as they rise over time, although their variability in percentage terms may be rather stable.

- Use a different specification for the model (different X variables, or perhaps non-linear transformations of the X variables).

- Apply a weighted least squares estimation method, in which OLS is applied to transformed or weighted values of X and Y. The weights vary over observations according to the changing error variances, typically in inverse proportion to them, so that noisier observations count for less.

- Use heteroscedasticity-consistent standard errors (HCSE), which, while still biased in finite samples, improve upon the OLS standard error estimates (White 1980). Generally, HCSEs are greater than their OLS counterparts, resulting in lower t-scores and a reduced probability of statistically significant coefficients. The White method corrects the standard errors for heteroscedasticity without altering the values of the coefficients. It may be preferable to regular OLS standard errors: if heteroscedasticity is present, it corrects for it, and if it is not present, no error has been made, because the estimator remains consistent under homoscedasticity.
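The weighted least squares correction can be sketched in a few lines of Python for a straight-line fit. The function below minimizes $\sum_i w_i (y_i - a - b x_i)^2$, which is the same as running OLS on the transformed rows $\sqrt{w_i}\,(1, x_i, y_i)$ without an extra intercept. It assumes, for illustration only, that the analyst already knows or has modeled how the error variance changes, for instance $\operatorname{Var}(\varepsilon_i) \propto x_i^2$ so that $w_i = 1/x_i^2$; in practice such weights usually have to be estimated. The function name is hypothetical.

```python
def weighted_least_squares(x, y, w):
    """Fit y = a + b*x by minimizing sum(w_i * (y_i - a - b*x_i)^2).

    Equivalent to OLS on rows (1, x_i, y_i) each multiplied by sqrt(w_i).
    With w_i proportional to 1 / Var(error_i), observations with noisier
    errors get less influence. Returns (intercept, slope).
    """
    sw = sum(w)
    xw = sum(wi * xi for wi, xi in zip(w, x)) / sw  # weighted mean of x
    yw = sum(wi * yi for wi, yi in zip(w, y)) / sw  # weighted mean of y
    sxx = sum(wi * (xi - xw) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - xw) * (yi - yw) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return yw - slope * xw, slope


if __name__ == "__main__":
    x = [1, 2, 3, 4, 5]
    y = [2, 4, 5, 4, 5]
    # Equal weights reproduce ordinary least squares.
    print(weighted_least_squares(x, y, [1.0] * len(x)))
    # Hypothetical assumption: Var(error_i) proportional to x_i^2, so w_i = 1 / x_i^2.
    print(weighted_least_squares(x, y, [1.0 / xi ** 2 for xi in x]))
```

With equal weights the fit reduces to ordinary least squares (intercept 2.2, slope 0.6 on this toy data); unequal weights shift the line toward the observations assumed to be measured more precisely.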

Examples

Heteroscedasticity often occurs when there is a large difference among the sizes of the observations.

- A classic example of heteroscedasticity is that of income versus expenditure on meals. As one's income increases, the variability of food consumption will increase. A poorer person will spend a rather constant amount by always eating fast food; a wealthier person may occasionally buy fast food and other times eat an expensive meal. Those with higher incomes display a greater variability of food consumption.

- Imagine you are watching a rocket take off nearby and measuring the distance it has traveled once each second. In the first couple of seconds your measurements may be accurate to the nearest centimeter, say. However, 5 minutes later as the rocket recedes into space, the accuracy of your measurements may only be good to 100 m, because of the increased distance, atmospheric distortion and a variety of other factors. The data you collect would exhibit heteroscedasticity.

See also

- Kurtosis (peakedness)

- Breusch–Pagan test of heteroscedasticity of the residuals of a linear regression

- Regression analysis

- Homoscedasticity

- Autoregressive conditional heteroscedasticity (ARCH)

- White test

- Heteroscedasticity-consistent standard errors

References

- ^ White, Halbert (1980). "A heteroscedasticity-consistent covariance matrix estimator and a direct test for heteroscedasticity". Econometrica. 48 (4): 817–838. doi:10.2307/1912934.

- ^ Gamage, Jinadasa; Weerahandi, Sam (1998). "Size performance of some tests in one-way anova". Communications in Statistics - Simulation and Computation. 27: 625. doi:10.1080/03610919808813500.

- ^ Bathke, A (2004). "The ANOVA F test can still be used in some balanced designs with unequal variances and nonnormal data". Journal of Statistical Planning and Inference. 126: 413. doi:10.1016/j.jspi.2003.09.010.

- ^ Gujarati, D. N. & Porter, D. C. (2009) Basic Econometrics. Fifth Edition. McGraw-Hill.

- ^ Fox, J. (1997) Applied Regression Analysis, Linear Models, and Related Methods. California: Sage Publications.

- ^ Mankiw, N. G. (1990) A Quick Refresher Course in Macroeconomics. Journal of Economic Literature. Vol. XXVIII, December.

- ^ Park, R. E. (1966). "Estimation with Heteroscedastic Error Terms". Econometrica. 34 (4): 888. doi:10.2307/1910108.

Further reading

Most statistics textbooks will include at least some material on heteroscedasticity. Some examples are:

- Studenmund AH. Using Econometrics (2nd ed.). ISBN 0-673-52125-7. (devotes a chapter to heteroscedasticity)

- Verbeek, Marno (2004): A Guide to Modern Econometrics, 2. ed., Chichester: John Wiley & Sons, 2004, pages

- Greene, W.H. (1993), Econometric Analysis, Prentice–Hall, ISBN 0-13-013297-7, an introductory but thorough general text, considered the standard for a pre-doctorate university Econometrics course.

- Hamilton, J.D. (1994), Time Series Analysis, Princeton University Press, ISBN 0-691-04289-6, the standard reference text for time series analysis; it contains an introduction to ARCH models.

- Vinod, H.D. (2008): Hands On Intermediate Econometrics Using R: Templates for Extending Dozens of Practical Examples. World Scientific Publishers: Hackensack, NJ. ISBN 981-281-885-5 (Section 2.8 provides R snippets).

- White, Halbert (1980). "A heteroscedasticity-Consistent Covariance Matrix Estimator and a Direct Test for Heteroscedasticity". Econometrica. 48 (4): 817–838. doi:10.2307/1912934.

Special subjects

- Glejser test: Furno, Marilena (2005). "The Glejser Test and the Median Regression" (PDF). Sankhya – the Indian Journal of Statistics, Special Issue on Quantile Regression and Related Methods. 67 (2): 335–358. (work done at Università di Cassino, Italy, 2005)

- Heteroscedasticity in QSAR modeling