Talk:Second law of thermodynamics/Archive 2

This page is an archive of past discussions. Do not edit the contents of this page. If you wish to start a new discussion or revive an old one, please do so on the current talk page.

Cut sloppy definitions

I cut the following ("consumed" was a word used back in the 17th century):

- If thermodynamic work is to be done at a finite rate, free energy must be consumed [1]

- The entropy of a closed system will not decrease for any sustained period of time (see Maxwell's demon)

- A downside to this last description is that it requires an understanding of the concept of entropy. There are, however, consequences of the second law that are understandable without a full understanding of entropy. These are described in the first section below.

For such an important & confusing law in science, we need textbook definitions with sources.--Sadi Carnot 04:58, 3 March 2006 (UTC)

- I don't understand why these two were selected for removal and no textbook definitions with sources were added. One way to make things clear to the general reader is to limit the way the law is expressed to a very strict definition used in modern physics textbooks. A second way is to express the law multiple times so that maybe one or two of them will "stick" in the mind of a reader with a certain background. I think the second approach is better. The word "consumed" was used and referenced to an article from 2001 about biochemical thermodynamics by someone at a department of surgery. When the second law is applied to metabolism, the word "consumed" seems to me to be current and appropriate. Flying Jazz 12:49, 3 March 2006 (UTC)

I don't understand why the entropy definition was deleted. It's not wrong or confusing. -- infinity0 16:17, 3 March 2006 (UTC)

- Regarding the first statement, in 1824 Sadi Carnot, the originator of the 2nd Law mind you, states: “The production of motive power is then due in steam-engines not to an actual consumption of the caloric, but to its transportation from a warm body to a cold body, that is to its re-establishment of equilibrium.” Furthermore, for the main statements of the 2nd Law we should be referencing someone from a department of engineering, not someone from a department of surgery; certainly this reference is good for discussion, but not as the principal source for one of the grandest laws in the history of science. Regarding the second statement, to within an approximation, the Earth delineated at the Karman line can be modeled as a closed system, i.e. heat flows across the boundary daily in a significant manner, but matter, aside from the occasional asteroid input and negligible atmospheric mass loss, does not. Hence, entropy has decreased significantly over time. This model contradicts the above 2nd Law statement. Regarding the layperson viewpoint, certainly it is advisable to re-word things to make them stick, but when it comes to laws precise wording is a scientific imperative.--Sadi Carnot 18:37, 3 April 2006 (UTC)

New "definition"

User talk:24.93.101.70 keeps inserting this in:

- If thermodynamic work is to be done at a finite rate, free energy must be consumed. (This statement is based on the fact that free energy can be accurately defined as that portion of any First-Law energy that is available for doing thermodynamic work; i.e., work mediated by thermal energy. Since free energy is subject to irreversible loss in the course of thermodynamic work and First-Law energy is not, it is evident that free energy is an expendable, Second-Law kind of energy.)

This definition is neither succinct nor clear. Also, it's very confusing and very very long winded, and it makes no sense (to me, and therefore the reader too). "First-Law" energy? What's that?? -- infinity0 22:41, 21 March 2006 (UTC)

- Agree, replaced the word "consumed" accordingly; 1st Law = "Energy is Conserved" (not consumed).--Sadi Carnot 19:12, 3 April 2006 (UTC)

Mathematical definition

I tweaked the mathematical definition a little, which probably isn't the best way to do it. However I was thinking that a much more accurate mathematical description would be:

"As the time over which the average is taken goes to ∞:

$$\lim_{\tau \to \infty} \frac{1}{\tau} \int_0^{\tau} \frac{dS}{dt}\, dt \ge 0$$

where

- $\frac{dS}{dt}$ is the instantaneous change in entropy per time"

Comments? Fresheneesz 10:43, 4 April 2006 (UTC)

- I feel this is a worthwhile point; however, it should go in a separate "header" section (such as: Hypothetical violations of 2nd Law) and there should be reference to such articles as: 2nd Law Beads of Doubt. That is, the main section should be clear and strong. Esoteric deviations should be discussed afterwards. --Sadi Carnot 15:54, 4 April 2006 (UTC)

- If you correctly state the second law such that the entropy increases on average, then there are no violations of the 2nd law. (The terminology used in your reference is dodgy.) That the entropy can sometimes go down is no longer hypothetical, since transient entropy reductions have been observed. Really there should be links to the Fluctuation theorem and Jarzynski equality, since these are, essentially, new and improved, cutting edge, extensions of the second law. Nonsuch 19:18, 4 April 2006 (UTC)

- The idea that the entropy increases on the average (not all of the time) is very old, and should be included. It is not necessary to average over infinite time. On average, the entropy increases with time, for any time interval. The reason that this point is normally glossed over is that for a macroscopic system we can generally ignore the differences between average and instantaneous values of energy and entropy. But these days we can do experimental thermodynamics on microscopic systems. Nonsuch 19:18, 4 April 2006 (UTC)

See also "Treatise with Reasoning Proof of the Second Law of Energy Degradation"

"Treatise with Reasoning Proof of the Second Law of Energy Degradation: The Carnot Cycle Proof, and Entropy Transfer and Generation," by Milivoje M. Kostic, Northern Illinois University http://www.kostic.niu.edu/Kostic-2nd-Law-Proof.pdf and http://www.kostic.niu.edu/energy

“It is crystal-clear (to me) that all confusions related to the far-reaching fundamental Laws of Thermodynamics, and especially the Second Law, are due to the lack of their genuine and subtle comprehension.” (Kostic, 2006).

There are many statements of the Second Law which in essence describe the same natural phenomena about the spontaneous direction of all natural processes towards a stable equilibrium with randomized redistribution and equi-partition of energy within the elementary structure of all interacting systems (thus the universe). Therefore, the Second Law could be expressed in many forms reflecting impossibility of creating or increasing non-equilibrium and thus work potential between the systems within an isolated enclosure or the universe:

1. No heat transfer from low to high temperature of no-work process (like isochoric thermo-mechanical process).

2. No work transfer from low to high pressure of no-heat process (adiabatic thermo-mechanical process).

3. No work-producing from a single heat reservoir, i.e., no more efficient work-producing heat engine cycle than the Carnot cycle.

4. Etc, etc … No creation or increase of non-equilibrium and thus work potential, but only decrease of work potential and non-equilibrium towards a common equilibrium (equalization of all energy-potentials) accompanied with entropy generation due to loss of work potential at system absolute temperature, resulting in maximum equilibrium entropy.

All the Second Law statements are equivalent since they reflect equality of work potential between all system states reached by any and all reversible processes (reversibility is measure of equivalency) and impossibility of creating or increasing systems non-equilibrium and work potential.

About Carnot Cycle: For given heat reservoirs’ temperatures, no other heat engine could be more efficient than a (any) reversible cycle, since otherwise such reversible cycle could be reversed and coupled with that higher efficiency cycle to produce work permanently (create non-equilibrium) from a single low-temperature reservoir in equilibrium (with no net-heat-transfer to the high-temperature reservoir). This implies that all reversible cycles, regardless of the cycle medium, must have the same efficiency which is also the maximum possible, and that irreversible cycles may and do have smaller, down to zero (no net-work) or even negative efficiency (consuming work, thus no longer power cycle). Carnot reasoning opened the way to generalization of reversibility and energy process equivalency, definition of absolute thermodynamic temperature and a new thermodynamic material property “entropy,” as well as the Gibbs free energy, one of the most important thermodynamic functions for the characterization of electro-chemical systems and their equilibriums, thus resulting in formulation of the universal and far-reaching Second Law of Thermodynamics. It is reasoned and proven here that the net-cycle-work is due to the net-thermal expansion-compression, thus necessity for the thermal cooling and compression, since the net-mechanical expansion-compression is zero for any reversible cycle exposed to a single thermal reservoir only.

In conclusion, it is only possible to produce work during energy exchange between systems in non-equilibrium. Actually, the work potential (maximum possible work to be extracted in any reversible process from that systems' non-equilibrium to the common equilibrium) is measure of the systems’ non-equilibrium, thus the work potential could be conserved only in processes if the non-equilibrium is preserved (conserved, i.e. rearranged), and such ideal processes could be reversed. When the systems come to the equilibrium there is no potential for any process to produce (extract) work. Therefore, it is impossible to produce work from a single thermal reservoir in equilibrium (then non-equilibrium will be spontaneously created leading to a “black-hole-like energy singularity,” instead to the equilibrium with randomized equi-partition of energy). It is only possible to produce work from thermal energy in a process between two thermal reservoirs in non-equilibrium (with different temperatures). Maximum work for a given heat transfer from high to low temperature thermal reservoir will be produced during ideal, reversible cyclic process, in order to prevent any other impact to the surrounding, (like net-volume expansion, etc.; net-cyclic change is zero). All real natural processes between systems in non-equilibrium have tendency towards common equilibrium and thus loss of the original work potential, by converting other energy forms into the thermal energy accompanied with increase of entropy (randomized equi-partition of energy per absolute temperature level). Due to loss of work potential in a real process, the resulting reduced work cannot reverse back the process to the original non-equilibrium, as is possible with ideal reversible processes. 
Since non-equilibrium cannot be created or increased spontaneously (by itself and without interaction with the rest of the surroundings) then all reversible processes must be the most and equally efficient (will equally conserve work potential, otherwise will create non-equilibrium by coupling with differently efficient reversible processes). The irreversible processes will lose work potential to thermal energy with increase of entropy, thus will be less efficient than corresponding reversible processes … this will be further elaborated and generalized in other Sections of this “Treatise with Reasoning Proof of the Second Law of Energy Degradation,” -- the work is in final stage to be finished soon.

http://www.kostic.niu.edu and http://www.kostic.niu.edu/energy

- Kostic, what is the point of all this verbiage? Why is it at the top of the talk page? Why does it make no sense? Nonsuch 04:20, 12 April 2006 (UTC)

Miscellany - Cables in a box

This description of the 2nd Law was quoted in a slightly mocking letter in the UK The Guardian newspaper on Wednesday 3rd May 2006. It has been in the article unchanged since 12 September 2004 and at best needs editing if not deleting. To me it adds little in the way of clarity. Malcolma 11:50, 4 May 2006 (UTC)

- Deleted. MrArt 05:19, 14 November 2006 (UTC)

Why not a simple statement ?

I would propose to add the following statement of the 2nd law to the "general description" section, or even the intro : "Heat cannot of itself pass from a colder to a hotter body. " (Clausius, 1850). Unlike all others, this statement of the law is understandable by everybody: any reason why it's not in the article ? Pcarbonn 20:22, 6 June 2006 (UTC)

- Excellent idea! LeBofSportif 20:44, 6 June 2006 (UTC)

Order in open versus closed systems

Propose adding the following section:

Order in open versus closed systems

Granville Sewell has developed the equations for entropy change in open versus closed systems.[1] [2] He shows, e.g., St >= - integral of heat flux vector J through the boundary.

In summary:

- Order cannot increase in a closed system.

- In an open system, order cannot increase faster than it is imported through the boundary.

Sewell observes:

The thermal order in an open system can decrease in two ways -- it can be converted to disorder, or it can be exported through the boundary. It can increase in only one way: by importation through the boundary.[3]

DLH 03:41, 13 July 2006 (UTC)

Added link to Sewell's appendix D posted on his site. Sewell, Granville (2005) Can "ANYTHING" Happen in an Open System? And his key equation D.5 ( ie St >= - integral of heat flux vector J through the boundary.) This is a key formulation that is not shown so far in the Wiki article. I will add the explicit equations especially D.4 and D.5 DLH 14:05, 13 July 2006 (UTC)

- Notable? FeloniousMonk 05:54, 13 July 2006 (UTC)

- Not highly, I should say, but he most certainly has a clear bias: idthefuture.

- Besides, I'm not sure what the relevance of talking about a closed system is as it's inapplicable to Earth; and the open system quotation sounds like words in search of an idea.

- In any case, this is yet another example of a creationist trying to manipulate 2LOT to support his conclusion, generally by misrepresenting both 2LOT and evolution. To wit:

- "The evolutionist, therefore, cannot avoid the question of probability by saying that anything can happen in an open system, he is finally forced to argue that it only seems extremely improbable, but really isn’t, that atoms would rearrange themselves into spaceships and computers and TV sets."page 4

- •Jim62sch• 09:35, 13 July 2006 (UTC)

- Please address Sewell's derivation of the heat flux through the boundary, equations D.1-D.5, as I believe they still apply. See: Sewell, Granville (2005) Can "ANYTHING" Happen in an Open System? DLH 14:46, 13 July 2006 (UTC)

- A mainstream way of treating DLH's point about open systems (which perhaps should be reviewed in the article) is that

- in any process the entropy of the {system + its surroundings} must increase.

- Entropy of the system can decrease, but only if that amount of entropy or more is exported into the surroundings. For example, think of a lump of ice growing in freezing-cold water. The entropy of the water molecules freezing into the lump of ice falls; but this is more than offset by the energy released by the process (the latent heat), warming up the remaining liquid water. Such reactions, with a favourable ΔH, unfavourable ΔSsystem, but overall favourable ΔG, are very common in chemistry.

- I'm afraid Sewell's article is a bit bonkers. There's nothing in the second law against people doing useful work (or creating computers etc), "paid for" by a degradation of energy. Similarly there's nothing in the second law against cells doing useful work (building structures, or establishing ion concentration gradients etc), "paid for" by a degradation of energy.

- Of course, Sewell has a creationist axe to grind. Boiled down, his argument is not really about the Second Law, by which all the above processes are possible. Instead, what I think he's really trying to drive towards is the notion that we can distinguish the idea of 'a process taking advantage of the possibilities of such energy degradation in an organised way to perpetuate its own process' as a key indicator of "life"; and then ask the question, if we understand "life" in such terms, is a transition from "no life" to "life" a discontinuous qualitative step-change, which cannot be explained by a gradualist process of evolution?

- This is an interesting question to think about; but the appropriate place to analyse it is really under abiogenesis. It doesn't have a lot to do with the second law of thermodynamics. For myself, I think the creationists are wrong. I think we can imagine simple boundaries coming into being naturally, creating a separation between 'system' and 'surroundings' - for example, perhaps in semi-permeable clays, or across a simple lipid boundary; and then I think we can imagine there could be quite simple chemical positive feedback mechanisms that could make use of eg external temperature gradients to reinforce that boundary; and the whole process of gradually increasing complexity could pull itself up from its own bootstraps from there.

- But that's not really an argument for here. The only relevant thing as regards the 2nd law is that the 2nd law does not forbid increasing order or structure or complexity in part of a system, so long as it is associated with an increase in overall entropy. -- Jheald 10:05, 13 July 2006 (UTC).
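The ice example above can be made concrete with a short sketch. The numbers are approximate textbook values for water (enthalpy of fusion about 6.01 kJ/mol, entropy of fusion about 22.0 J/(mol·K)), treated as temperature-independent, which is an assumption; the function name `freezing_balance` is made up for illustration. It checks that the entropy of system plus surroundings rises when water freezes below the melting point, and that this is the same condition as ΔG < 0.

```python
# Approximate textbook values for water, assumed temperature-independent.
DELTA_H_FUS = 6010.0  # J/mol, enthalpy of fusion of ice
DELTA_S_FUS = 22.0    # J/(mol*K), entropy of fusion of ice


def freezing_balance(temperature_k):
    """Entropy and free-energy bookkeeping for freezing one mole of water.

    Returns (dS_system, dS_surroundings, dS_total, dG), with entropies in
    J/(mol*K) and dG in J/mol.
    """
    d_h = -DELTA_H_FUS                        # freezing releases the latent heat
    d_s_system = -DELTA_S_FUS                 # the water molecules become more ordered
    d_s_surroundings = -d_h / temperature_k   # released heat raises entropy outside
    d_s_total = d_s_system + d_s_surroundings
    d_g = d_h - temperature_k * d_s_system    # Gibbs free energy change of the system
    return d_s_system, d_s_surroundings, d_s_total, d_g


if __name__ == "__main__":
    for t in (263.0, 283.0):  # ten kelvin below and above the melting point
        ds_sys, ds_surr, ds_tot, dg = freezing_balance(t)
        print(f"T = {t} K: dS_total = {ds_tot:+.3f} J/(mol*K), dG = {dg:+.1f} J/mol")
```

Below the melting point the total entropy change comes out positive and ΔG negative, so freezing proceeds even though the entropy of the freezing water itself falls; above it the signs flip.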

- Putting aside whatever ad hominem by association arguments you have against Sewell, please address the proposed statement summarizing Sewell's formulation of the entropy vs flux through the boundary, and the links provided. DLH 14:05, 13 July 2006 (UTC) DLH 14:23, 13 July 2006 (UTC)

Scientifically Based

Removed religious comments in article, please let us keep these articles scientifically based, I have never opened a textbook and come across those lines in any section on the second law. Physical Chemist 19:26, 18 July 2006 (UTC)

References

- ^ Sewell, Granville (2005). The Numerical Solution of Ordinary and Partial Differential Equations, 2nd Edition. John Wiley & Sons. ISBN 0471735809. Appendix D.

- ^ Sewell, Granville (2005) Can "ANYTHING" Happen in an Open System?

- ^ Sewell, Granville (2005) A Second Look at the Second Law

maximize energy degradation ?

The article currently says: " [The system tends to evolve towards a structure ...] which very nearly maximise the rate of energy degradation (the rate of entropy production)". I've added a request for a source for this, and it would be interesting to expand this statement further (in this or other related articles; I could not find any description of this statement in Wikipedia). Pcarbonn 11:16, 25 July 2006 (UTC)

- Prigogine and co-workers first proposed the Principle of Maximum Entropy Production in the 1940s and 50s, for near-equilibrium steady-state dissipative systems. This may or may not extend cleanly to more general further-from-equilibrium systems. Cosma Shalizi quotes P.W. Anderson (and others) being quite negative about it [2]. On the other hand, the Lorenz and Dewar papers cited at the end of Maximum entropy thermodynamics seem much more positive, both about how the principle can be rigorously defined and derived, and about how it does appear to explain some real quantitative observations. Christian Maes has also done some good work in this area, which (ISTR) says it does pretty well work for the right definition of "entropy production", but there may be some quite subtle bodies buried.

- A proper response to you probably Needs An Expert; I'm afraid my knowledge is fairly narrow in this area, and not very deep. But hope the paragraph above gives a start, all the same. Jheald 12:23, 25 July 2006 (UTC).

- Note also, just to complicate things, that in effect there's a minimax going on, so Maximum Entropy Production with respect to one set of variables can also be Minimum Entropy Production (as discussed at the end of the Dewar 2005 paper, a bit mathematically). It all depends what variables you're imagining holding constant, and what ones can vary (and what Legendre transformations you've done). In fact it was always "Minimum" Entropy Production that Prigogine talked about; but re-casting that in MaxEnt terms is probably a more general way of thinking about what's going on. Jheald 13:09, 25 July 2006 (UTC).

Air conditioning?

I asked this question on the AC page, but didn't get an answer: how does air conditioning NOT violate the 2nd law? Isn't it taking heat from a warm environment (inside of a building), and transferring it to a hotter environment (outside the building)? Doesn't this process in effect reverse the effects of entropy? Inforazer 13:19, 13 September 2006 (UTC)

- The AC unit also plugs into the wall. Drawing electricity and converting it to heat increases entropy. Hope this helps. 192.75.48.150 14:44, 13 September 2006 (UTC)

- That's right - The entropy of a CLOSED SYSTEM always increases. A closed system is one in which no material or energy goes in or out. You have electricity flowing into your room and besides, the air conditioner is dumping heat to the outside. The entropy loss you experience in the cool room is more than offset by the entropy transferred to the outside and the entropy gain at the electrical power plant. PAR 15:51, 13 September 2006 (UTC)

- An AC unit does not violate the 2nd law. In fact, it is a net emitter of heat; for every 1 unit of heat taken from inside a room, roughly 3 units of heat are added to the outside air (depending on the energy efficiency). Internally, the AC unit always transfers heat from warm to cold, by playing with the pressure of the fluid inside the AC unit. Roughly summarised: assume the refrigerant, say Freon, starts at the hot outside temperature. The Freon is compressed, thus raising its temperature. The Freon is then cooled to the outside hot temperature. The Freon is then decompressed, which cools it well below the outside air temperature; the system is also designed to cool the Freon below the inside room temperature. This cool Freon is then used to absorb as much heat as possible from the inside room. And from there the cycle continues. Taken individually, the AC unit may seem like a violation of the 2nd law, but as a whole, a lot more energy was wasted on making that one room cooler. Frisettes 06:01, 8 November 2006 (UTC)
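The replies above can be checked with a rough entropy balance. This is only a sketch: it treats the room and the outdoors as large reservoirs at fixed temperatures, takes the "1 unit in, roughly 3 units out" figure from the comment above, and assumes illustrative temperatures of 295 K inside and 308 K outside. The function name `ac_entropy_change` is hypothetical.

```python
def ac_entropy_change(q_removed, q_rejected, t_inside_k, t_outside_k):
    """Net entropy change of room plus outdoors when heat is pumped outside.

    q_removed  : heat taken from the cooler room
    q_rejected : heat delivered to the hotter outdoors (q_removed plus work input)
    Temperatures are absolute (kelvin); both sides are treated as reservoirs.
    """
    d_s_inside = -q_removed / t_inside_k    # the room loses entropy
    d_s_outside = q_rejected / t_outside_k  # the outdoors gains entropy
    return d_s_inside + d_s_outside


if __name__ == "__main__":
    t_in, t_out = 295.0, 308.0  # assumed temperatures, not from the discussion
    # With work input: 1 unit removed, 3 units rejected (2 units of work).
    print("with work input:", ac_entropy_change(1.0, 3.0, t_in, t_out))
    # Without work input: the same 1 unit simply moved from cold to hot.
    print("no work input:  ", ac_entropy_change(1.0, 1.0, t_in, t_out))
```

With the work input included the net change is positive, so the second law is satisfied. The hypothetical case with no work input gives a negative total, which is exactly the process the Clausius statement rules out.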

A Tendency, Not a Law

I cut the following false part, being that it is a mathematical fact that when two systems of unequal temperature are adjoined, heat will flow between the two of them, then at thermodynamic equilibrium ΔS will be positive (see: entropy). Hence, the 2nd law is a mathematical consequence of the definition S = Q/T.--Sadi Carnot 14:55, 17 September 2006 (UTC):

- The second law states that there is a statistical tendency for entropy to increase. Expressions of the second law always include terms such as "on average" or "tends to". The second law is not an absolute rule. The entropy of a closed system has a certain amount of statistical variation, it is never a smoothly increasing quantity. Some of these statistical variations will actually cause a drop in entropy. The fact that the law is expressed probabilistically causes some people to question whether the law can be used as the basis for proofs or other conclusions. This concern is well placed for systems with a small number of particles but for everyday situations, it is not worth worrying about.

- I restored the above paragraph, not because I think it deserves to be in the article, but it certainly should not be deleted for being false. The above statements are not false. The second law is not absolutely, strictly true at all points in time. The explanation of the second law is given by statistical mechanics, and that means there will be statistical variations in all thermodynamic parameters. The pressure in a vessel at equilibrium is not constant. It is the result of particles randomly striking the walls of the vessel, and sometimes a few more will strike than the average, sometimes a few less. The same is true of every thermodynamic quantity. The variations are roughly of the order of 1/√N where N is the number of particles. For macroscopic situations, this is something like one part in ten billion - not worth worrying about, but for systems composed of, say, 100 particles it can be of the order of ten percent. PAR 15:15, 17 September 2006 (UTC)
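The 1/√N estimate quoted above is easy to tabulate. A minimal sketch follows; the choice N = 10^20 for a "macroscopic" system is an assumption picked to match the "one part in ten billion" figure, and `relative_fluctuation` is a made-up name.

```python
import math


def relative_fluctuation(n_particles):
    """Order-of-magnitude relative fluctuation, 1/sqrt(N), for N particles."""
    return 1.0 / math.sqrt(n_particles)


if __name__ == "__main__":
    for n in (100, 1e6, 1e20):
        print(f"N = {n:g}: relative fluctuation ~ {relative_fluctuation(n):.1e}")
```

For N = 100 this gives about ten percent, and for N = 10^20 about one part in ten billion, matching the two cases mentioned in the comment.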

Par, I assume you are referring to the following article: Second law of thermodynamics “broken”. In any case, the cut paragraph is not sourced and doesn’t sound anything like this article; the cut paragraph seems to contradict the entire Wiki article, essentially stating that the second law is not a law. If you feel it needs to stay in the article, I would think that it needs to be sourced at least twice and cleaned.

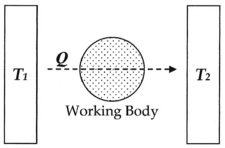

Furthermore, both the cut section and the article I linked to and the points you are noting are very arguable. The basis of the second law derives from the following set-up, as detailed by Carnot (1824), Clapeyron (1832), and Clausius (1854):

With this diagram, we can calculate the entropy change ΔS for the passage of the quantity of heat Q from the temperature T1, through the "working body" of fluid (see heat engine), which was typically a body of steam, to the temperature T2. Moreover, let us assume, for the sake of argument, that the working body contains only two molecules of water.

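Next, if we make the assignment:

$$S = \frac{Q}{T}$$

Then, the entropy change or "equivalence-value" for this transformation is:

$$\Delta S = S_{\text{final}} - S_{\text{initial}}$$

which equals:

$$\Delta S = \frac{Q}{T_2} - \frac{Q}{T_1}$$

and by factoring out Q, we have the following form, as was derived by Clausius:

$$\Delta S = Q\left(\frac{1}{T_2} - \frac{1}{T_1}\right)$$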

Thus, for example, if Q was 50 units, T1 was 100 degrees, and T2 was 1 degree, then the entropy change for this process would be 49.5. Hence, entropy increased for this process, it did not “tend” to increase. For this system configuration, subsequently, it is an "absolute rule". This rule is based on the fact that all natural processes are irreversible by virtue of the fact that molecules of a system, for example two molecules in a tank, will not only do external work (such as to push a piston), but will also do internal work on each other, in proportion to the heat used to do work (see: Mechanical equivalent of heat) during the process. Entropy accounts for the fact that internal inter-molecular friction exists.

Now, this law has been solid for 152 years and counting. Thus, if, in the article, you want to say “so and so scientist argues that the second law is invalid…” then do so and source it and all will be fine. But to put some random, supposedly invalid and un-sourced, argument in the article is not a good idea. It will give the novice reader the wrong opinion or possibly the wrong information. --Sadi Carnot 16:55, 17 September 2006 (UTC)
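The arithmetic in the example above (Q = 50 units, T1 = 100 degrees, T2 = 1 degree) can be checked with a few lines, taking the entropy change as ΔS = Q/T2 − Q/T1 = Q(1/T2 − 1/T1), consistent with the definition S = Q/T used in this thread. This is a sketch only; the function name `entropy_change` is hypothetical, and the temperatures are assumed to be on an absolute scale.

```python
def entropy_change(q, t1, t2):
    """Entropy change when heat q passes from temperature t1 to temperature t2.

    Uses dS = q / t2 - q / t1 = q * (1 / t2 - 1 / t1), with absolute temperatures.
    """
    return q * (1.0 / t2 - 1.0 / t1)


if __name__ == "__main__":
    print("hot to cold:", entropy_change(50.0, 100.0, 1.0))
    print("cold to hot:", entropy_change(50.0, 1.0, 100.0))
```

The hot-to-cold case reproduces the value of 49.5 quoted above; swapping the temperatures gives the same magnitude with a negative sign, the direction the classical statement forbids for heat flowing of itself.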

- I think that the second law of thermodynamics is definitely supposed to be a law, considering that it is the convergence to a final value that is of interest here, not intrinsic statistical variations. There are three possible cases. Convergence over time (for an isolated system etc.) to a state of (1) lower entropy, (2) constant entropy, (3) higher entropy. The second law of thermodynamics states that only (2) and (3) are possible. If it would have stated that all (1), (2) and (3) are possible, just that (2) and (3) are more probable than (1), it would have probably not been taken seriously by contemporary scientists. --Hyperkraft 18:43, 17 September 2006 (UTC)

First, let me say that I agree, the title of the section should not have been "A tendency, not a law". I don't think we should give the impression that the second law is seriously in question for macroscopic situations.

Regarding the article "Second Law of thermodynamics broken", I think that is just a flashy, misleading title for what is essentially a valid experiment. I had not seen that article, however, so thank you for the reference. Note at the end of the article, the statement that the results are in good agreement with the fluctuation theorem, which is just a way of saying that the degree of deviation from the second law is just what would be expected from statistical mechanics. The difference in this experiment is that entropy is being measured on a very fine time scale. This makes all the difference. Entropy variations are not being averaged out by the measuring process, and so the "violations" of the second law are more likely to be revealed, not because stat mech is wrong, but because the measurement process is so good. Check out the "Relation to thermodynamic variables" section in the Wikipedia article on the Partition function to see how statistical variations in some thermodynamic variables at equilibrium are calculated.

Regarding the example you gave, I am not sure how it relates to what we are talking about. The two bodies on either side of the working body are, I assume, macroscopic, containing very many particles, so the second law will of course hold very well for them, even if the working body only has two molecules. It's the working body which will have large variations in thermodynamic parameters, assuming that thermodynamic parameters can even be defined for such a small body. If you divide the working body into four quadrants, then having both particles in the same quadrant will be unlikely, and such a state will have a low entropy. Statistical mechanics says, yes, it will happen once every few minutes, and the entropy will drop, but on average it will be higher. When you say the second law is strict, you are effectively saying that both particles in the same quadrant will never happen, which is wrong. If the working body contained 10^20 particles, then statistical mechanics says they will all wind up in the same quadrant maybe once every 10^300 years and the entropy will drop, but on average it will be higher. When you say the second law is strict, you are effectively saying that it will never happen, and you are practically correct. PAR 15:24, 18 September 2006 (UTC)
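The quadrant argument above can be illustrated with a simple counting sketch. It assumes each particle sits in any of the four quadrants independently and with equal probability, so the chance that all N particles share one quadrant (any of the four) is 4 · (1/4)^N. The function name is made up, and the sketch says nothing about how often such a state recurs in time, which would also require a rate at which configurations rearrange.

```python
import math


def log10_prob_all_in_one_cell(n_particles, n_cells=4):
    """log10 of the probability that all particles share a single cell.

    Assumes independent, uniformly distributed particles, so that
    p = n_cells * (1 / n_cells) ** n_particles = n_cells ** (1 - n_particles).
    Working in log10 avoids underflow for very large particle numbers.
    """
    return (1 - n_particles) * math.log10(n_cells)


if __name__ == "__main__":
    # Two particles: the probability itself is easy to print.
    print("N = 2:     p =", 10 ** log10_prob_all_in_one_cell(2))
    # Macroscopic particle number: only the exponent is meaningful.
    print("N = 1e20:  log10(p) =", log10_prob_all_in_one_cell(1e20))
```

For two particles the probability is one in four, so the low-entropy configuration is common. For 10^20 particles the exponent is of order −10^19, which is why the macroscopic case can be treated as never happening in practice.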

- I gave the article a good cleaning, added five sources, and new historical material. Your points are valid. My point is that classical thermodynamics came before statistical thermodynamics and the second law is absolute in the classical sense, which makes no use of particle location. Talk later: --Sadi Carnot 15:45, 18 September 2006 (UTC)

Information entropy

I removed "information entropy" as an "improper application of the second law". I've never heard it said that all information entropy is maximized somehow. Just because physical entropy (as defined by statistical mechanics) is a particular application of information entropy to a physical system does not imply that the second law statements about that entropy should apply to all types of information entropy. PAR 06:38, 22 September 2006 (UTC)

- Thanks for removing the sentence "One such independent law is the law of information entropy." As you say, there's no such law. However this is a common misunderstanding of evolution and while I appreciate the reluctance to mention the creation-evolution controversy, the point needs to be clarified and I've added a couple of sentences to deal with it. ..dave souza, talk 10:30, 27 September 2006 (UTC)

Intro

While the whole article's looking much clearer, the intro jumped from heat to energy without any explanation, so I've cited Lambert's summary which introduces the more general statement without having to argue the equivalence of heat and energy. The preceding claim that "Heat cannot of itself pass from a colder to a hotter body" is the most common enunciation may well be true, but the statement itself may be incorrect, as is being discussed at Talk:Entropy#Utterly ridiculous nitpick. Perhaps this should be reconsidered. ..dave souza, talk 09:36, 27 September 2006 (UTC)

- The section header says it all. Let's not get carried away. We don't get this sort of thing with the first law because of general relativistic considerations, for example. 192.75.48.150 14:55, 27 September 2006 (UTC)

- I have reverted this, Lambert's energy dispersal theories are at Votes for Deletion; see also: Talk:Entropy. --Sadi Carnot 16:08, 7 October 2006 (UTC)

Applications Beyond Thermodynamics + Information entropy

Jim62sch, I may guess where you are coming from, but I'm unclear whether the direction you are heading in is a good idea. What is the meaning of "beyond thermodynamics" when most notable physicists think that, whatever else happens, thermodynamics will hold true?

Also, "defined in terms of heat" is a bit single-minded. It can just as well be defined in terms of information loss or neglect.

Obviously (to all but the contributors of The Creation Science Wiki, perhaps), none of this prohibits evolution or requires divine intervention.

Pjacobi 20:22, 7 October 2006 (UTC)

- "Beyond thermodynamics" is a place-holder -- it can be called whatever works best for the article (I didn't like "improper" though). Also, I don't think the section is perfect, it can clearly be improved, but it does raise a very valid point; see below.

- Information loss in thermodynamics? Neglect in thermodynamics? Nah, that's going somewhere else with 2LOT that one needn't go. Problem is, entropy (so far as thermodynamics) really isn't as complex as many people want to make it.

- I agree wholeheartedly with your last statement, and I recoil in disgust every time I see the alleged entropy/divine intervention nexus, but I'm not sure where you're going with the comment. Did I miss something? •Jim62sch• 20:46, 7 October 2006 (UTC)

- I recently fell in love with the lucent explanation given by Jaynes in this article. Otherwise - I hope at least - I'm firmly based on the classic treatises given by Boltzmann, Sommerfeld and others (Becker, "Theorie der Wärme", was a popular textbook when I did hear thermodynamics). --Pjacobi 21:09, 7 October 2006 (UTC)

- I removed a sentence from this section - the "prevention of increasing complexity or order in life without divine intervention" absolutely does not stem from the idea that the second law applies to information entropy. PAR 00:01, 8 October 2006 (UTC)

- Also - absolutely, that article by Jaynes is one of the most interesting thermodynamics articles I have ever read. PAR 23:02, 9 October 2006 (UTC)

I removed the following:

- The typical claim is that any closed system initially in a heterogeneous state tends to a more homogenous state. Although such extensions of the second law may be true, they are not, strictly speaking, proven by the second law of thermodynamics, and require independent proofs.

Last time I checked, the laws of science are true until proven otherwise, and they do not "claim"; they "state". This sentence is unsourced; I replaced it with two textbook sources. --Sadi Carnot 11:15, 9 October 2006 (UTC)

General remark

I've just compared an old version [3] and have to say that I wouldn't judge the article's evolution since then to be uniformly positive. What happened? --Pjacobi 13:13, 9 October 2006 (UTC)

- Crap editing is my guess. I would support your reversion to that version if you feel so inclined. KillerChihuahua?!? 13:16, 9 October 2006 (UTC)

- The last ten months of edits have been crap? I'm taking a wiki-break, I had enough of you and your derogatory comments. --Sadi Carnot 13:35, 9 October 2006 (UTC)

- Probably not all; however, if the article has degraded, yes, I'd say crap editing is the culprit. It happens to many articles. Reverting to a previous version and then incorporating the few edits which were improvements is one method of dealing with overall article degradation. Why on earth would my agreeing with Pjacobi and offering a suggested approach to dealing with article degradation be a "derogatory comment"? It is a positive approach to cleaning out a cumulative morass of largely poor edits. KillerChihuahua?!? 13:54, 9 October 2006 (UTC)

The Onion

Sadi recently added[4] The Onion as a source. While I agree the statement might need sourcing, The Onion is a parody site and as such does not meet WP:RS, and cannot be used here. I request an alternative ref be located which meets RS if one is deemed necessary for the statement. Alternatively, a brief statement in the article such as "This has been parodied on the humor site, The Onion" with the ref there would keep the pointer to the Onion article without using it as a source for a serious statement. KillerChihuahua?!? 14:19, 9 October 2006 (UTC)

- Yeah, I saw that one too. This quote is embarrassing... either this is a stunt, or the person who initially wrote this has no sense of humour at all and a very poor view of god-fearing folks. I am deleting the reference now. Frisettes 06:03, 8 November 2006 (UTC)

Entropy

- Until recently this statement was included:

- A common misunderstanding of evolution arises from the misconception that the second law applies to information entropy and so prevents increasing complexity or order in life without divine intervention. Information entropy does not support this claim which also fails to appreciate that the second law as a physical or scientific law is a scientific generalization based on empirical observations of physical behaviour, and not a "law" in the meaning of a set of rules which forbid, permit or mandate specified actions.

- PAR deleted it as stated above: I've not reinstated it as in many cases it may be a misunderstanding about "disorder", but as shown here the common argument is the opposite of the article statement of some "reasoning that thermodynamics does not apply to the process of life" which I've only seen in the Onion parody. The Complex systems section later addresses the point indirectly, but the Applications to living systems at present could give comfort to this creationist position as its reference to "complexity of life, or orderliness" links to a book without clarification of the point being made. ..dave souza, talk 15:41, 9 October 2006 (UTC)

- Good grief, I remember that - it took what, about six months of discussion with Wade et al to get that wording worked out? From what I see PAR's argument for removing it is that he hasn't ever heard of this misunderstanding - is that correct? KillerChihuahua?!? 18:49, 9 October 2006 (UTC)

- No, his argument is [5] and I'm inclined to agree. --Pjacobi 19:04, 9 October 2006 (UTC)

- 2LOT is used by creationists though, and it is via a misunderstanding. The big brouhaha was about 1) whether to include this in the 2LOT article at all, and 2) how to phrase it if included. Wade was the big protagonist for inclusion as I recall - and I dropped out of the arguments here for a while and wasn't part of any phrasing discussion. Oddly enough, on Talk:Second law of thermodynamics/creationism#Straw poll PAR was for inclusion (I was against) so I'm wondering why he didn't just edit it to make better sense rather than removing it. KillerChihuahua?!? 19:22, 9 October 2006 (UTC)

- I'm in favor of a reasoned discussion of the creationists point of view, but the statement I removed was simply false. I don't know how to make it make better sense. PAR 22:59, 9 October 2006 (UTC)

- Well I was not for including it in the first place, but the last straw poll showed opinion was fairly evenly split so I'm not going to argue this. Unfortunately, I cannot seem to figure out a way to write the position clearly. Can anyone write a simple statement which gives the Creationist use, and makes clear it is erroneous? KillerChihuahua?!? 23:05, 9 October 2006 (UTC)

- Suggestion:

- A common misunderstanding of evolution arises from the misconception that the second law requires increasing "disorder" and so prevents the evolution of complex life forms without divine intervention. This arises from a misunderstanding of "disorder" and also fails to appreciate that the second law as a physical or scientific law is a scientific generalization based on empirical observations of physical behaviour, and not a "law" in the meaning of a set of rules which forbid, permit or mandate specified actions.

- For an example of the reasoning that this deals with, see User:Sangil's comment of 22:04, 14 May 2006, at Talk:Evolution/Archive 016#Kinds. Any suggestions for clarification welcome. ..dave souza, talk 15:06, 11 October 2006 (UTC)

Mysterious edit comment:

Kenosis wrote "Removing "laws of thermodynamics" template until admins can fix the inadvertent insertion of Jane Duncan into the text"

Do you mean this: [6]

That is obviously plain minor vandalism and should be reverted by any user, no admin intervention needed.

Pjacobi 08:54, 11 October 2006 (UTC)

- Thank you for repairing it. I didn't know until now that we could access the templates without admin privileges. Appreciate it. ... Kenosis 13:11, 11 October 2006 (UTC)

Mathematical origin ?

I've cut the following addition by Enormousdude:

- Second law of thermodynamics mathematically follows from the definitions of heat and entropy in statistical mechanics of large ensemble of identical particles.

Apart from the quibble that ensembles are made of large numbers of identical systems not large numbers of identical particles, it seems to me the statement above is not true. The 2nd law does not follow mathematically from the definitions of heat and entropy in statistical mechanics. Mathematically it requires additional assumptions establishing how information is being thrown away from unusually low entropy initial conditions.

Even if the statement were correct, it adds little value to the article in the absence of any indication as to how the second law might "follow directly from the definitions of heat and entropy". Jheald 21:10, 2 November 2006 (UTC)

Axiom of Nature

Since when has nature had axioms? This seems very poorly worded. LeBofSportif 13:57, 14 November 2006 (UTC)

Please give a better statement of the second law

Please give a better statement. The statement of the second law as currently given (Nov 27, 2006) on the English Wikipedia is inaccurate. Unfortunately, this form seems to have become more and more fashionable. This has to change!

Proper statements could be:

"The entropy of a closed adiabatic system cannot decrease".

"For a closed adiabatic system, it is impossible to imagine a process that could result in a decrease of its entropy"

This is documented in a number of textbooks, such as Reif, Fundamentals of Statistical and Thermal Physics; Ira N. Levine, Physical Chemistry; and others.

Consider a system that exchanges heat with a single constant-temperature heat source. For a cyclic process of such a system, we have w >= 0 and q <= 0. (Kelvin; the equalities correspond to a reversible process, the inequalities to an irreversible process.)

Now we consider an irreversible change of a closed adiabatic system from state I to state F. Let wIF be the (algebraic) work done on the system during this process. Let us now imagine a reversible process that brings the system back from state F to state I, during which we allow the system to possibly exchange heat with a heat reservoir at temperature Ttherm.

- The first law allows us to write:

wIF + wFI + qFI = 0. If the system were adiabatic during this reversible process, then qFI would be zero and the total work done would be zero as well. This would imply that the cycle is reversible (Kelvin), and this cannot be the case since the process IF is irreversible. Hence qFI cannot be zero. It is therefore negative. The reversible FI process can only be a reversible adiabatic process to bring the temperature of the system to the temperature of the heat reservoir, followed by an isothermal process at the temperature of the heat reservoir, and finally another reversible adiabatic process to bring the system back to state I. The entropy change of the system during the reversible process is SI - SF = qFI/Ttherm < 0.

This demonstrates that SF - SI > 0 even if the closed adiabatic system exchanges energy with its surroundings in the form of work.

This shows the validity of the second law as stated just above. It is much more powerful than the "isolated" system form since the system can exchange work with its environment.

A simple example would be the compression (p outside larger than p inside) or the expansion (p inside larger than p outside) of a gas contained in an adiabatic cylinder closed by an adiabatic piston. With the "isolated system" formulation, you cannot predict the evolution of such a simple system!! Ppithermo 23:50, 27 November 2006 (UTC)
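The piston example above can be checked numerically. The following is a minimal sketch, not part of the original argument, and it rests on assumptions that the post does not state: the gas is ideal and monatomic (cv = 3R/2), the external pressure is constant during the process, and the function name is hypothetical. The final temperature comes from the first law with q = 0, and the entropy change is evaluated between the two equilibrium end states only.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def adiabatic_piston_entropy_change(p_initial, p_external, t_initial,
                                    n_moles=1.0, cv=1.5 * R):
    """Return (T_final, delta_S) for an ideal gas behind an adiabatic piston.

    The gas starts in equilibrium at (p_initial, t_initial), the piston is
    released against a constant external pressure, and the gas settles at
    p_final = p_external. No heat is exchanged; only work crosses the boundary.
    """
    cp = cv + R
    ratio = p_external / p_initial
    # First law with q = 0: n*cv*(T2 - T1) = -p_ext*(V2 - V1), with V = n*R*T/p.
    t_final = t_initial * (cv + R * ratio) / cp
    # Ideal-gas entropy change between the two equilibrium states.
    delta_s = n_moles * (cp * math.log(t_final / t_initial) - R * math.log(ratio))
    return t_final, delta_s


if __name__ == "__main__":
    for p_ext in (2.0e5, 1.0e5, 0.5e5):  # compression, no change, expansion
        t2, ds = adiabatic_piston_entropy_change(1.0e5, p_ext, 300.0)
        print(f"p_ext = {p_ext:.1e} Pa: T_final = {t2:.1f} K, delta_S = {ds:.3f} J/K")
```

Under these assumptions the entropy change is positive for both the compression and the expansion and vanishes only when the pressures already match, even though the system exchanges work with its surroundings throughout.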

- An isolated system cannot transfer energy as work.

- open system - transfers mass and energy

- closed system - transfers energy only, no mass. The transfer may be as heat or work or whatever.

- isolated system - transfers no mass, no energy.

- PAR 01:35, 28 November 2006 (UTC)

- I think that Ppithermo's point is that a more useful statement of the second law would involve the relationship between work and heat as types of energy transfer, and an isolated system involves neither. An isolated system represents only one special case (the work-free case) of a closed, thermally insulated system. In the more general sense, imagine a system that is the contents of a sealed, insulated box with a crank attached to get shaft work involved. No matter what is inside that box (generators, refrigerators, engines, fuels, gases, or anything else), I can turn the crank all I want to perform work on the system or I can let the system do all it can to turn the crank and perform work for me, and the second law says that neither case can possibly decrease the total entropy of the system inside that box. Entropy can decrease locally, but system entropy can't when the system is closed and thermally insulated, whether it's isolated or not. All my input of shaft work won't decrease entropy because it's easy for work to make things hotter but it's impossible for hot things to do work unless they exchange heat with cold things. If I want to decrease entropy in there, heat or mass must be transferred to the outside. Work won't do it.

- If the statement of the second law uses an isolated system, that information is lost. I wouldn't favor a statement that uses the phrase "it is impossible to imagine..." but I do like the idea of replacing "isolated system" with "closed system with an adiabatic boundary". Still, there always has to be a balance between making general, powerful statements and overly-specific, easily understood statements. Flying Jazz 04:33, 28 November 2006 (UTC)

- Well, I put that statement in because that's the statement I've always heard, and I knew it was true. However, I do think you have pointed out a lack of generality. I can't come up with a counter argument (right now :)). Why not change it to the "adiabatic closed" form, and meanwhile I will (or we could) research it some more?

- I looked at Callen (Thermodynamics and thermostatistics) which is what I use as the bottom line unless otherwise noted, and he does not deal with "the laws". He has a number of postulates concerning entropy, and the one that is closest to the second law states:

"There exists a function (called the entropy S) of the extensive parameters of any composite system, defined for all equilibrium states and having the following property: The values assumed by the extensive parameters in the absence of an internal constraint are those that maximize the entropy over the manifold of constrained equilibrium states."

- I've come to realize that my understanding of the role of constraints in thermodynamic processes is not what it should be. I don't fully get the above statement, so I will not put it into the article, but perhaps you can use it to modify the second law statement. PAR 14:42, 28 November 2006 (UTC)

- Well, this is the problem. So many books, especially the more recent ones, have it wrong. I have had a hard time with many people who do not agree with the closed adiabatic statement because they were taught the isolated form and never questioned it. They often think it's a minor detail. If PAR wrote the statement then I guess he should modify it. I find that this is not a difficult concept, and I believe closed adiabatic is as simple to comprehend as isolated. I think the small demonstration of the fact that SF - SI>0 should be included below the statement since it illustrates it well. Ppithermo 22:02, 28 November 2006 (UTC)

- I think it should be included but not in the introduction, better in the overview. The introduction is for people who want to get a quick idea of the article. PAR 01:12, 29 November 2006 (UTC)

Rigor about isolated vs closed adiabatic

I've found a source that makes me think Ppithermo may be too emphatic about some of this. First off, "isolated" is not wrong. It is true. It just seemed to be more specific than it needed to be. Second, the equation SF - SI>0 is not needed when dS/dt >= 0 is in the current version. I think PAR's post of Callen's statement is too dense for a Wikipedia article. When I googled and found http://jchemed.chem.wisc.edu/Journal/issues/2002/Jul/abs884.html yesterday, I thought of simply changing the words "isolated system" to "closed system with adiabatic walls" in the introduction, but there was a problem with that. I don't think a closed adiabatic system involved in a work interaction can reach equilibrium until the work interaction stops, so referring to a maximum entropy at equilibrium would be inappropriate unless the system is isolated. And the introduction should refer to equilibrium in my view. Another huge advantage of using the "isolated system" second law statement is that it's commonly used. But that still doesn't mean it's a good thing for Wikipedia.

So I was confused and looking for some rigor about this until I saw page 5 here: http://www.ma.utexas.edu/mp_arc/c/98/98-459.ps.gz. It says the following (I've simplified the state function algebra some and I'm not bothering with MathML here):

"Second law: For any system there exists a state function, the entropy S, which is extensive, and satisfies the following two conditions:

- a) if the system is adiabatically closed, dS/dt >= 0

- b) if the system is isolated, lim (t->infinity) S = max(S)

...Part (a) of the 2nd law, together with the 1st law, gives the time evolution. On the other hand, part (b), which defines the arrow of time, expresses the fact that the isolated system evolves towards a stable equilibrium in the distant future....Furthermore, part (b) of the second law, together with the 1st law, yields all the basic axioms of thermostatics, as well as the zeroth law of thermodynamics."

The article that contains this quote is about a problem of thermostatics, so that might be why part (b) is emphasized, but it looks to me like we don't have a general/specific issue here, but we have two sides of the same (or a similar) coin, and if one side is presented more often in other sources, I think Wikipedia should follow suit in the intro. Presenting both (a) and (b) in the Mathematical Description section will make things clear. For now, I've changed "isolated" to "closed" in the Mathematical Description section and I hope someone else can add the math. I think the current statement of the law in the introduction is fine. Flying Jazz 04:44, 29 November 2006 (UTC)
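For readers who prefer typeset notation, parts (a) and (b) of the quoted statement can be written as below. This is only a transcription of the plain-text version given above, not the source paper's exact notation, and the `align` environment assumes the `amsmath` package.

```latex
\begin{align}
  \text{(a) adiabatically closed system:} \quad & \frac{dS}{dt} \ge 0, \\
  \text{(b) isolated system:} \quad & \lim_{t \to \infty} S(t) = \max S .
\end{align}
```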

- The statement with "isolated" is correct and a common statement, so it's ok to keep it there. What bothers me is that I am under the impression that the laws of thermodynamics, properly stated, give all the "physics" needed for thermodynamics. If you have a rigorous description of the mathematics of the space of thermodynamic parameters, and a tabulation of properties, then that, in combination with "the laws" should enable you to solve any thermodynamics problem. I haven't gone through the argument above enough to be sure that the "isolated" statement is insufficient in this respect. If it is not sufficient, and there is an alternative, accepted statement of the second law which IS sufficient, that would be an improvement.

- I also agree that simply replacing "isolated" with "closed adiabatic" would be wrong, thanks for pointing that out. The system would never equilibrate if work was being done on it. PAR 07:21, 29 November 2006 (UTC)

- Sorry, I am not sure how to make this a new paragraph.

- I agree with everyone here that the isolated statement, as it is, is true; I never said it was not. My point is that it does not constitute a proper second law.

- I actually am not too happy with the presence of dS/dt. Remember, thermodynamics is supposed to be about equilibrium states. If there is an evolution of a system (in general irreversible), the thermodynamic variables may be undefined or ill-defined.

- The following sentence in the above comment is certainly not justified.

I also agree that simply replacing "isolated" with "closed adiabatic" would be wrong, thanks for pointing that out. The system would never equilibrate if work was being done on it.

- The argument that "the system would never equilibrate" is rather bizarre. The system evolves from a state of equilibrium to another state of equilibrium when a constraint is modified (for example external pressure). It may take a very long time for this evolution to take place, but we only consider the states that correspond to equilibrium. For example, if the external pressure is different from the internal pressure (mobile boundary), the (closed adiabatic) system evolves and tends to bring the internal pressure to the same value as the external pressure. When this is achieved, the work interaction will cease, and the system can reach equilibrium.

- How could you possibly deal with a closed adiabatic system with work exchange using the "isolated formulation"...Please explain if you can. My opinion is that you cannot.

- The other point is that the isolated statement is not equivalent to the Kelvin formulation (you cannot have w<0 and q>0 for a system in contact with a thermal reservoir for a cyclic process. Everyone seems to agree with the Kelvin formulation). The Kelvin formulation cannot be derived from the "isolated formulation". How would you bring about the work term ??

- If you feel that the derivation I outlined to show that SF - SI>0 is erroneous please indicate so and possibly why. (It is so straight forward...)

- I have various sources which have similar or equivalent derivations. (Please look at the Reif book, which is a classic for physicists; he writes that the entropy of a closed thermally insulated system...).

- The paper cited by PAR asserts the fact with no proof, and unfortunately, I could not find a derivation...

- Ppithermo 11:25, 29 November 2006 (UTC)

- I hope you don't mind that I formatted your post above. When you respond, just indent your response from the previous, using colons, until about four or five colons, then start back at the margin. Each new paragraph has to be indented.

- Regarding your response above:

- I don't understand your objection to the sentence "The system would never equilibrate if work was being done on it." Then you give an example which says that a closed, adiabatic system can come to equilibrium as long as no more work is being done on or by it, which is just what the sentence you object to says. A closed, adiabatic system which has no work done on or by it is effectively an isolated system.

- Please understand that I am not rejecting the point you are making, I just haven't had time to understand it completely. In fact, I think you may have a point. I cannot dispute your explanation right now, but I will look at it more carefully and get back to you.

- The reference that I cited is not a paper, it's a book by Herbert B. Callen. It is the most widely cited thermodynamics reference in the world, and it's the best thermodynamics book I've ever seen. (I will check out the Reif book and see how they compare). The statement I quoted was not a theorem, it was a postulate, much like the second law. There is no "proof" of the second law other than experience.

- PAR 14:39, 29 November 2006 (UTC)

- Thanks for the formatting. I was hoping one of you would help me with that.

- I guess confusion sets in because I am trying to reply to two persons and I do not say who I am responding to. I will now respond to PAR and the different points made in the last response.

- The statement I objected to seemed to imply that if work is done on a system, the system cannot reach equilibrium, which made no sense to me. Clearly when the system has reached its final state, it is in a state of equilibrium. No work is done on it anymore; in fact no change takes place anymore. But work may have been done between the initial and final state (the good old example of the cylinder and piston, both adiabatic, with different pressures inside and outside in the initial state prior to releasing the piston).

- I know Callen's book. I have the second edition.

- When the second law is stated for an "isolated system", you cannot derive from it the Kelvin form of the second law. When stated for a "closed adiabatic system", you can.

- In the demonstration I presented, the system spontaneously goes from an initial state of equilibrium I to a new equilibrium state F after a constraint is modified. If the entropy S had not attained its maximum the spontaneous process would carry on. The process thus stops because the entropy of the system has reached its maximum, given the constraints.

- Take time to reflect on the stuff. With this statement of the second law, it is a breeze to derive the Clausius inequality for a system that can come into contact with multiple thermal reservoirs.

- Also note that I favor writing equations for finite processes rather than differential forms since, as I mentioned earlier, thermodynamic variables are well defined for equilibrium states.

- Reif's book is also one of the best-known thermo/stat mech books. I do not have my copy here, but I remember that he gives a summary of the four laws and there, he clearly states that the maximum entropy principle holds for a thermally insulated system. Ppithermo 22:31, 29 November 2006 (UTC)

- It's my view that complete rigor in both thermodynamics and thermostatics (a "proper second law") cannot be attained without both part (a) and part (b) as stated above. Either work interactions are permitted (closed adiabatic) or they're not (isolated). Of course, Ppithermo is correct when he says that closed adiabatic systems are ABLE to reach equilibrium after the work interaction stops, but in a discussion about closed adiabatic vs isolated in a second-law statement, this is a little bit sneaky. Open systems with everything changing are able to reach equilibrium too--after the mass transfer and thermal contact and work interactions stop. Whether to express equations in differential form or not is a matter of taste and I think it depends on the specific equation. dS/dt >= 0 looks more elegant in my opinion than Sf - Si >= 0. In the Carnot cycle article, using differentials instead of Sa and Sb would be silly.

- I hope you both focus on parts a and b of the second law statement above. For a closed, adiabatic system, entropy will increase (part a), but, as long as the work interaction continues, this says nothing about whether a maximum will be achieved. If a work interaction could continue forever then entropy would increase forever. You need part b to discuss equilibrium.

- For an isolated system, entropy will reach a maximum with time after equilibrium is achieved (part b). But this says nothing about the futility of work interactions in creating a perpetual motion machine of the second kind. It says nothing about work interactions at all. You need part a to discuss that. Flying Jazz 23:55, 29 November 2006 (UTC)

- answer to Flying Jazz.

- Actually, I do not like to lean on the paper you refer to, which makes an assertion and does not contain a demo. You can consider it as an axiomatic approach, but the equivalence with other forms is far from demonstrated.

- dS/dt >= 0 may look more elegant but is not appropriate. If you place two objects at different temperatures in contact, there will be an irreversible heat exchange, and during the process the temperature of the objects will be inhomogeneous and thermodynamic variables will not be well defined. They become well defined when equilibrium is reached. This is why I object to the dt.

- Now let us get back to the work problem.

- 1) work done on a system or by a system is a frequent occurrence.

- 2) consider a cyclic process of a machine during which the machine receives a work w (w may be positive or negative at this stage)

- 3) Suppose that we allow the machine for part of the cycle to come in contact with a single thermal reservoir at temperature T and that during this contact the machine receives an amount of heat q. The thermal reservoir receives an amount of heat

- qtherm= - q

- All the thermodynamic variables of the machine are back to their original values at the end of the cycle. The change in internal energy of the machine is zero. Hence

- w+q=0 and (SF - SI)sys=0

- The entropy change of the thermal reservoir is

- (SF - SI)therm = -q/T

- The composite system machine + thermal reservoir constitutes a closed adiabatic system. We have

- (SF - SI)composite = -q/T

- Now using the closed adiabatic formulation of the second law, we must have

- (SF - SI)composite>0

- and therefore -q/T>0 thus q<0 and w>0

- we get back the Kelvin statement of the second law, which says we cannot have q>0 and w<0.

- With the isolated system formulation, you cannot get anywhere because you have no provision made to deal with work!!

- Hope this is convincing enough. Please read it carefully. Hope PAR reads this too and appreciates

- Ppithermo 14:22, 30 November 2006 (UTC)
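The steps of the cyclic argument above can be collected in one place as follows. This is a restatement in LaTeX using the same symbols and sign convention as the post (w and q are the work and heat received by the machine); the strict inequality corresponds to the irreversible case, with equality holding for a reversible cycle. The `align*` environment assumes the `amsmath` package.

```latex
\begin{align*}
  w + q &= 0, \qquad (S_F - S_I)_{\mathrm{sys}} = 0
      && \text{(machine, one full cycle)} \\
  (S_F - S_I)_{\mathrm{therm}} &= \frac{q_{\mathrm{therm}}}{T} = -\frac{q}{T}
      && \text{(reservoir at temperature } T\text{)} \\
  (S_F - S_I)_{\mathrm{composite}} &= -\frac{q}{T} > 0
      && \text{(closed adiabatic form of the second law)} \\
  \Rightarrow\quad q &< 0, \qquad w = -q > 0 .
\end{align*}
```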

- I think you are being a little sneaky again, Ppithermo. Two objects at different tempartures in thermal contact with each other is not a closed adiabatic system, so part (a) above would not apply. If you are against the differential statement, please consider a closed, adiabatic system with a work interaction. I agree with everything you write after that, including your last paragraph, but this does not get to the heart of the issue of what should be in the introduction or the bulk of the article.

- In engineering, the total entropy of systems that are out of equilibrium is found very often. Spatial inhomogeneities are accounted for by making certain assumptions and then you integrate over some spatial dimension, and, presto! You have a value for thermodynamic parameters for the contents of a cylinder of a steam engine at one non-equilibrium moment or another. The idea that it is a challenge to rigorously compute non-equilibrium thermo parameters, and that differentials should therefore not be used, is not compelling. Differentials are too useful, and the mathematical version of the plain-English statement "entropy increases" is both more concise and more general than "entropy's final value is greater than its initial value." Every result that uses the second statement can be derived from the first, so your cyclic example, while correct, is not an argument against using differentials. Also, a statement of the second law that only uses equilibrium values would permit a perpetual motion machine of the second kind if that machine never achieved equilibrium. Perpetual motion machines are impossible whether they achieve equilibrium or not, and a good second-law statement would reflect this.

- I came across the abstract for this recent article during a Google search: http://www.iop.org/EJ/abstract/1742-5468/2006/09/P09015 that shows that this topic is a subject of current theoretical study. These stat mech guys assumed something-or-other and concluded: "We also compute the rate of change of S, ∂S/∂t, showing that this is non-negative [see part a above] and having a global minimum at equilibrium. [see part b above]." I don't have access to the complete article and I probably wouldn't understand it anyway (I'm not a stat mech guy), but when I see the same things repeated often enough just as I learned it, and it makes sense to me, I don't mind seeing it in Wikipedia even if a rigorous demo with no assumptions doesn't exist at the moment. With my engineering background, these kinds of conversations sometimes make me smile. Why can't these physicists get around to rigorously proving what we engineers have already used to change the world? :) My old chemE thermo text used something called "the postulatory approach" which goes something like this: We postulate this is true. It seems to work and we've used it to make lots of stuff and lots of money. Hey, let's keep using it. Flying Jazz 02:04, 1 December 2006 (UTC)

-

- Answer to Flying Jazz

- first paragraph

- I am not trying to be sneaky. I was just giving an example where temperatures are inhomogeneous. I can have a closed adiabatic system: two gases at different temperatures in a closed adiabatic container, separated by an adiabatic wall. Remove the wall. Clearly temperatures will not be homogeneous...

- I agree of course with the fact that, with certain assumptions, one can calculate entropies and state functions for systems that are not exactly homogeneous. The trouble with that is that most people will not remember what the assumptions are!!!

- As a presentation, things should remain simple yet attempt to be accurate. The point I was trying to make in my last message is that from the closed adiabatic statement you can get the Kelvin formulation, and reciprocally (in a previous part) from the Kelvin formulation you get the closed adiabatic statement.

- Please note that the fact that people repeat things they heard somewhere else does not make them right.

- I do not understand your statement about the perpetual motion machine of the second kind. I precisely showed that with a single thermal reservoir you cannot get w<0 which is equivalent to no useful work can be produced...

- Consider a machine that undergoes cyclic changes and can exchange heat with 2 heat sources. You can have an engine that runs forever and does not break the second law at all. The global system, machine plus thermal reservoirs, constitutes a closed adiabatic system. The entropy of the machine is unchanged after each cycle. The entropy of the hot reservoir decreases and that of the cold reservoir increases. We have for the entropy change of the global system for one cycle

- -qh/Th - qc/Tc > 0

- The global entropy change is >0 and of course w<0: work is provided to the user. All of this is in perfect agreement with the closed adiabatic formulation. As long as your hot reservoir can supply heat and the cold one can receive heat, your machine can do work for you.

- No one can show this with the isolated system formulation. Hence the beginning of this discussion. If you know a book where this is shown, other than a 100 page paper with hundreds of assumptions, please tell me.

- Finally, since you have some engineering background, you might be willing to look at some phys chem books. If you can get your hands on Physical Chemistry, by Robert Alberty, seventh edition, look up page 82. Another book is by Ira N Levine, Physical Chemistry. I do not have it handy so I cannot give you the exact page number.

- Ppithermo 10:57, 1 December 2006 (UTC)
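The two-reservoir engine described above can be written out as a short worked derivation. This is a sketch under the comment's sign convention (qh, qc and w are all counted as received by the machine, so qh > 0 and qc < 0 for an engine); the efficiency symbol \eta and the non-strict inequality, which includes the reversible limit, are assumptions added here for illustration. The `align*` environment assumes the amsmath package.

```latex
\begin{align*}
w + q_h + q_c &= 0
  && \text{(first law over one cycle)} \\
\Delta S_{\text{global}} &= -\frac{q_h}{T_h} - \frac{q_c}{T_c} \ge 0
  && \text{(machine entropy unchanged over a cycle)} \\
\Rightarrow\quad -q_c &\ge q_h \,\frac{T_c}{T_h} \\
-w = q_h + q_c &\le q_h \left( 1 - \frac{T_c}{T_h} \right) \\
\eta \equiv \frac{-w}{q_h} &\le 1 - \frac{T_c}{T_h} .
\end{align*}
```

With T_h > T_c and q_h > 0 the right-hand side is positive, so w < 0 is allowed: the engine can deliver work without any decrease in the entropy of the closed adiabatic global system.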

- If you look at the paragraph "Available useful work", the starting point is the second law for adiabatic closed systems. If I can find some time I will try to fix that first statement so that it fits as nicely as possible with the rest of the article.

- Ppithermo 14:28, 2 December 2006 (UTC)

Well, I finally had time to go through the above discussion, and I still don't understand everything. When I think of the closed adiabatic system, I think of a system that could be incredibly complex, all different sorts of volumes, pressures, temperatures, mechanisms, whatever. When Ppithermo says "the closed adiabatic system is brought into contact with a constant temperature heat bath" I lose the train of thought. I can't generalize what happens to an arbitrarily complex system when it is brought into contact with a heat bath.

In trying to come up with an argument, using the isolated form of the second law, which shows that a closed adiabatic system cannot have a decrease in entropy, the best I can do so far is this: suppose we have a closed adiabatic system called system 1. It is mechanically connected to system 2, so that only mechanical (work) energy can be exchanged between the two. We can always define system 2 so that system 1 and system 2 together form an isolated system. It follows that with this definition, system 2 is also closed and adiabatic. So now we have two closed adiabatic systems connected mechanically to each other which together form an isolated system. The sum of the changes in their entropies must be greater than or equal to zero by the isolated form of the second law. But if I want to concentrate on the entropy change of system 1, that means I am free to choose system 2 in any way possible. If I am able to find a system 2 which has no change in entropy when a particular amount of mechanical energy is added/subtracted, then it follows that the entropy of system 1 cannot decrease for this case. But system 1 is independent of system 2, except for the mechanical connection, so if I can find such a system 2, then it shows that the entropy change of system 1 cannot be negative for ANY choice of system 2.

The bottom line is that, if you assume that there exists a closed adiabatic system which can have work done on/by it with zero change in entropy, then you can prove, using the isolated form of the second law, that no closed adiabatic system can have a decrease in entropy.

This is all well and good, but what about the statement of the second law in the article? I don't think the statement as it stands is adequate. My above argument would indicate that the isolated statement of the second law needs the proviso of a zero-entropy machine just to prove the closed adiabatic statement of the second law. I don't even know if it can prove Kelvin's statement. Another favorite book of mine is "Thermodynamics" by Enrico Fermi. In this book, Kelvin's statement is given as the second law (no work from a constant temperature source) and so is Clausius' statement (heat can't flow from a cold to a hot body). Fermi then goes on to prove that the two statements are equivalent, and then that the isolated form of the second law follows from these two equivalent statements.

I am starting to appreciate more the quantitative value of the Kelvin and Clausius statements, and I am starting to favor them as statements of the second law. I am not clear whether the closed adiabatic statement of the second law is equivalent to these two statements. Or the "amended isolated" form either, for that matter. PAR 17:17, 4 December 2006 (UTC)

- In reading Callen, I find that his statement of the second law (mentioned above) is actually about the same as the isolated statement except more precise, and he then uses "reversible work sources" (what I was calling a "zero entropy machine") to prove the "maximum work theorem". The fact that a system which is only mechanically connected to a work source (reversible or not) cannot have a decrease in entropy is then a special case of the maximum work theorem. Check it out. So the bottom line is that I am ok with the present statement. PAR 01:19, 10 December 2006 (UTC)

Sorry, I have been very busy and unable to come back here.

Your system 1 / system 2 argument does not add up for me. A zero entropy change process is a reversible adiabatic process. Note that here you are already making assumptions about a system that exchanges work with its environment. Note also that the process of system 1 can be irreversible. How could you possibly imagine some coupling where work is done reversibly on one side and irreversibly on the other?

Let's rather concentrate on what could be understandable to people at large. An axiomatic statement like that of Callen and many others does not appeal to anyone and can only be understood by a handful of people.

You say that the Kelvin statement is a good one and can be related to easily. I sort of agree with that. How can we make use of that to state the second law? The scheme is the following:

- Kelvin statement - A system that can exchange heat with a single constant temperature heat source cannot provide useful work: w>=0 and q<=0 (and of course w+q=0, the first law).

- Using this statement, one can show that it is equivalent to a more powerful form: The entropy of a closed adiabatic system cannot decrease.

For a reversible adiabatic process the entropy change is zero (for example, a reversible adiabatic process of an ideal gas), and thus any other adiabatic process (irreversible) will imply an increase of the entropy of the system.

Many conclusions can then be obtained very simply.

For a system that can get in contact with one thermal reservoir, the (algebraic) amount of heat that the system can receive during a reversible process is always at least as great as the amount it can receive during an irreversible process between the same states.

- qsys rev>= qirrev

One can show the Clausius inequality very easily for a system undergoing a cyclic process during which it can exchange heat with several thermal reservoirs (don't know how to type an equation)

- ∑ qi/Ti <=0

Another application. Consider an irreversible engine that operates in contact with two thermal reservoirs. After a number of cycles, the engine entropy is unchanged. The entropy of the hot thermal reservoir has decreased. The entropy of the cold thermal reservoir has increased. The total entropy of the closed adiabatic system made of the engine and the reservoirs has increased, as it should according to the second law for a closed adiabatic system. So clean and simple.

NONE OF THESE CONCLUSIONS CAN BE OBTAINED WITH THE ISOLATED STATEMENT (for systems where work is exchanged).

I hope you guys can see the light at the end of the tunnel, because this is probably all I can do to enlighten you. If you still like the statement as it is, then enjoy it. I can tell you that a few months back I read this second law article and there was a statement saying that "the closed adiabatic form would be the appropriate form". Clearly this has been removed and I do not know how to get to old versions to document that. If you cannot understand my arguments, then others will not either, and there is no need for me to change that statement since it will be destroyed immediately by individuals who "learned it that way....". Ppithermo 14:57, 15 December 2006 (UTC)
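The two single-line results quoted in the comment above (qsys rev >= qirrev and the Clausius inequality) can be sketched from the closed adiabatic statement as follows. The state labels A and B, the index i over reservoirs, and the \Delta S shorthand are assumptions introduced here for illustration; heat is counted as received by the system, as in the comment. The `align*` environment assumes the amsmath package.

```latex
% One reservoir at temperature T, system taken from state A to state B:
\begin{align*}
\Delta S_{\text{sys}} - \frac{q}{T} &\ge 0
  && \text{(system + reservoir is closed and adiabatic)} \\
q_{\text{rev}} &= T \, \Delta S_{\text{sys}}
  && \text{(equality holds for a reversible path)} \\
\Rightarrow\quad q_{\text{irrev}} &\le T \, \Delta S_{\text{sys}} = q_{\text{rev}} .
\end{align*}

% Cyclic process with several reservoirs at temperatures T_i:
\begin{align*}
\Delta S_{\text{sys}} &= 0
  && \text{(cycle)} \\
\Delta S_{\text{total}} &= -\sum_i \frac{q_i}{T_i} \ge 0
  \quad\Rightarrow\quad \sum_i \frac{q_i}{T_i} \le 0 .
\end{align*}
```

Both results use the fact that entropy is a state function, so \Delta S_{\text{sys}} between A and B is the same for the reversible and the irreversible path.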

- I think you have not understood my argument. Here is a diagram. System 1 is adiabatic and closed. It may or may not be reversible. Its change in entropy may be anything. Now we mechanically connect a reversible work source (system 2) to it. System 2 is reversible, so its change in entropy is zero. The combination of both systems constitutes an isolated system. By the isolated version of the second law,

- Since system 2 is reversible:

- Therefore

- Which proves, using the isolated form of the second law, that a closed adiabatic system (system 1) cannot have its entropy decreased. All of the proofs you showed above then follow. PAR 16:35, 15 December 2006 (UTC)
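The displayed equations and the diagram referred to in the comment above do not appear in this archive. A hedged reconstruction of the three steps, based only on the surrounding wording ("the sum of the changes in their entropies must be greater than or equal to zero"), might read as follows; the symbols \Delta S_1 and \Delta S_2 are assumed here and are not necessarily the original notation. The `align*` environment assumes the amsmath package.

```latex
\begin{align*}
\Delta S_1 + \Delta S_2 &\ge 0
  && \text{(isolated form, systems 1 and 2 together)} \\
\Delta S_2 &= 0
  && \text{(system 2 is a reversible work source)} \\
\Rightarrow\quad \Delta S_1 &\ge 0
  && \text{(closed adiabatic system 1)} .
\end{align*}
```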

I understood your argument. There is no such thing as a reversible work source that is isolated... In essence, reversible work is only possible if the net force acting on the moving part is zero (external force = internal force). Hence a reversible work source cannot be isolated...

I was away for more than 2 weeks and very busy afterward. Sorry for not answering sooner. Since you like the Callen book, try to get your hands on the first edition and look at appendix C. You will find there that Callen says, rightfully I believe, that the second law does not allow finding the equilibrium state of two coupled thermally insulated systems.

128.178.16.184 13:31, 26 January 2007 (UTC)

Hi - is this Ppithermo? I agree, a reversible work source is not isolated, but there is no isolated reversible work source in the above diagram. It is an adiabatic closed reversible work source. I am using the following definitions

- adiabatic means TdS=0

- closed means μdN = 0

- isolated means closed, adiabatic, and PdV=0