Software bug: Difference between revisions

m WP:CHECKWIKI error fixes using AWB (11099)


Revision as of 16:18, 19 June 2015


A software bug is an error, flaw, failure, or fault in a computer program or system that causes it to produce an incorrect or unexpected result, or to behave in unintended ways. Most bugs arise from mistakes and errors made by people in either a program's source code or its design, or in frameworks and operating systems used by such programs, and a few are caused by compilers producing incorrect code. A program that contains a large number of bugs, and/or bugs that seriously interfere with its functionality, is said to be buggy. Reports detailing bugs in a program are commonly known as bug reports, defect reports, fault reports, problem reports, trouble reports, change requests, and so forth.

Bugs trigger errors that can in turn have a wide variety of ripple effects, with varying levels of inconvenience to the user of the program. Some bugs have only a subtle effect on the program's functionality, and may thus lie undetected for a long time. More serious bugs may cause the program to crash or freeze. Others qualify as security bugs and might for example enable a malicious user to bypass access controls in order to obtain unauthorized privileges.

The results of bugs may be extremely serious. Bugs in the code controlling the Therac-25 radiation therapy machine were directly responsible for some patient deaths in the 1980s. In 1996, the European Space Agency's US$1 billion prototype Ariane 5 rocket had to be destroyed less than a minute after launch, due to a bug in the on-board guidance computer program. In June 1994, a Royal Air Force Chinook helicopter crashed into the Mull of Kintyre, killing 29. This was initially dismissed as pilot error, but an investigation by Computer Weekly uncovered sufficient evidence to convince a House of Lords inquiry that it may have been caused by a software bug in the aircraft's engine control computer.[1]

In 2002, a study commissioned by the US Department of Commerce's National Institute of Standards and Technology concluded that "software bugs, or errors, are so prevalent and so detrimental that they cost the US economy an estimated $59 billion annually, or about 0.6 percent of the gross domestic product".[2]

Etymology

Use of the term "bug" to describe inexplicable defects has been a part of engineering jargon for many decades and predates computers and computer software; it may have originally been used in hardware engineering to describe mechanical malfunctions. For instance, Thomas Edison wrote the following words in a letter to an associate in 1878:

It has been just so in all of my inventions. The first step is an intuition, and comes with a burst, then difficulties arise — this thing gives out and [it is] then that "Bugs" — as such little faults and difficulties are called — show themselves and months of intense watching, study and labor are requisite before commercial success or failure is certainly reached.[3]

Baffle Ball, the first mechanical pinball game, was advertised as being "free of bugs" in 1931.[4] Problems with military gear during World War II were referred to as bugs (or glitches).[5]

The term "bug" was used in an account by computer pioneer Grace Hopper, who publicized the cause of a malfunction in an early electromechanical computer.[6] A typical version of the story is given by this quote:[7]

In 1946, when Hopper was released from active duty, she joined the Harvard Faculty at the Computation Laboratory where she continued her work on the Mark II and Mark III. Operators traced an error in the Mark II to a moth trapped in a relay, coining the term bug. This bug was carefully removed and taped to the log book. Stemming from the first bug, today we call errors or glitches in a program a bug.

Hopper was not actually the one who found the insect, as she readily acknowledged. The date in the log book was September 9, 1947,[8][9] although sometimes erroneously reported as 1945.[10] The operators who did find it, including William "Bill" Burke, later of the Naval Weapons Laboratory, Dahlgren, Virginia,[11] were familiar with the engineering term and, amused, kept the insect with the notation "First actual case of bug being found." Hopper loved to recount the story.[12] This log book, complete with attached moth, is part of the collection of the Smithsonian National Museum of American History, though it is not currently on display.[9]

The related term "debug" also appears to predate its usage in computing: the Oxford English Dictionary's etymology of the word contains an attestation from 1945, in the context of aircraft engines.[13]

Prevalence

In software development projects, a "mistake" or "fault" can be introduced at any stage during development. Bugs are a consequence of the nature of human factors in the programming task. They arise from oversights or mutual misunderstandings made by a software team during specification, design, coding, data entry and documentation. For example, in creating a relatively simple program to sort a list of words into alphabetical order, one's design might fail to consider what should happen when a word contains a hyphen. Perhaps, when converting the abstract design into the chosen programming language, one might inadvertently create an off-by-one error and fail to sort the last word in the list. Finally, when typing the resulting program into the computer, one might accidentally type a "<" where a ">" was intended, perhaps resulting in the words being sorted into reverse alphabetical order.
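The coding mistakes described above can be reproduced in a few lines. The following is a minimal sketch, not taken from any real program: `insertion_sort` and its `upper` and `out_of_order` parameters are hypothetical names chosen so that the off-by-one loop bound and the mistyped comparison can be compared side by side with the correct behaviour.

```python
import operator


def insertion_sort(words, upper=None, out_of_order=operator.gt):
    """Sort words with insertion sort.

    upper        -- exclusive loop bound; the correct value is len(words)
    out_of_order -- comparison deciding whether two words must be swapped;
                    the correct choice for ascending order is '>'
    Both parameters exist only to inject the bugs for illustration.
    """
    result = list(words)
    if upper is None:
        upper = len(result)
    for i in range(1, upper):
        current = result[i]
        j = i - 1
        # Shift larger words one place to the right.
        while j >= 0 and out_of_order(result[j], current):
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result


words = ["pear", "fig", "kiwi", "apple"]

# Intended behaviour: ascending alphabetical order.
correct = insertion_sort(words)

# Off-by-one error: the loop stops one element early, so the last word
# is never moved into place.
off_by_one = insertion_sort(words, upper=len(words) - 1)

# "<" typed where ">" was intended: the list comes out in reverse order.
reversed_order = insertion_sort(words, out_of_order=operator.lt)

print(correct)         # ['apple', 'fig', 'kiwi', 'pear']
print(off_by_one)      # ['fig', 'kiwi', 'pear', 'apple']
print(reversed_order)  # ['pear', 'kiwi', 'fig', 'apple']
```

Neither buggy variant raises an error or a warning. Both simply return a wrong result, which is why such mistakes can survive until someone inspects the output.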

Another category of bug is the race condition, which can occur when a program has multiple components executing at the same time, either on the same system or across multiple systems interacting over a network. If the components interact in a different order than the developers intended, the logical flow of the program may break. These bugs can be difficult to detect or anticipate, since they may not occur during every execution of a program.
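Because a real race condition may not show up on every run, the sketch below replays the orderings by hand instead of using actual threads. It is an illustrative simulation only: the `run` function, the worker names and the schedules are all hypothetical, and the scenario (two workers each adding one to a shared counter) is an assumed example of the "different order than intended" problem.

```python
def run(schedule):
    """Replay a sequence of (worker, step) pairs against a shared counter.

    Each worker increments the counter in two steps: it first reads the
    current value into a private copy, then writes back that copy plus one.
    """
    counter = 0
    private = {}
    for worker, step in schedule:
        if step == "read":
            private[worker] = counter
        elif step == "write":
            counter = private[worker] + 1
    return counter


# The order the developers had in mind: A finishes before B starts.
intended = [("A", "read"), ("A", "write"), ("B", "read"), ("B", "write")]

# An order that is equally possible when A and B run concurrently:
# both read the old value before either writes.
interleaved = [("A", "read"), ("B", "read"), ("A", "write"), ("B", "write")]

print(run(intended))     # 2
print(run(interleaved))  # 1, one increment has been lost
```

With real threads the second ordering might occur only once in many runs, which matches the observation that such bugs do not appear during every execution.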

More complex bugs can arise from unintended interactions between different parts of a computer program. This frequently occurs because computer programs can be complex — millions of lines long in some cases — often having been programmed by many people over a great length of time, so that programmers are unable to mentally track every possible way in which parts can interact.

Mistake metamorphism

There is ongoing debate over the use of the term "bug" to describe software errors.[14] One argument is that the word "bug" is divorced from a sense that a human being caused the problem, and instead implies that the defect arose on its own, leading to a push to abandon the term "bug" in favor of terms such as "defect", with limited success.

In software engineering, mistake metamorphism (from Greek meta = "change", morph = "form") refers to the evolution of a defect in the final stage of software deployment.[clarification needed] The transformation of a "mistake" committed by an analyst in the early stages of the software development lifecycle into a "defect" in the final stage of the cycle has been called "mistake metamorphism".[15]

Different stages of a "mistake" in the entire cycle may be described by using the following terms:[15]

- Mistake

- Anomaly

- Fault

- Failure

- Error

- Exception

- Crash

- Bug

- Defect

- Incident

- Side Effect

Prevention

The software industry has put much effort into finding methods for preventing programmers from inadvertently introducing bugs while writing software.[16][17] These include:

- Programming style

- While typos in the program code are often caught by the compiler, a bug usually appears when the programmer makes a logic error. Various innovations in programming style and defensive programming are designed to make these bugs less likely, or easier to spot. In some programming languages, so-called typos, especially of symbols or logical/mathematical operators, actually represent logic errors, since the mistyped constructs are accepted by the compiler with a meaning other than that which the programmer intended.

- Programming techniques

- Bugs often create inconsistencies in the internal data of a running program. Programs can be written to check the consistency of their own internal data while running. If an inconsistency is encountered, the program can immediately halt, so that the bug can be located and fixed. Alternatively, the program can simply inform the user, attempt to correct the inconsistency, and continue running.

- Development methodologies

- There are several schemes for managing programmer activity, so that fewer bugs are produced. Many of these fall under the discipline of software engineering (which addresses software design issues as well). For example, formal program specifications are used to state the exact behavior of programs, so that design bugs can be eliminated. Unfortunately, formal specifications are impractical or impossible[citation needed] for anything but the shortest programs, because of problems of combinatorial explosion and indeterminacy.

- In modern times, popular approaches include automated unit testing and automated acceptance testing (sometimes going to the extreme of test-driven development), and agile software development (which is often combined with, or even in some cases mandates, automated testing). All of these approaches are supposed to catch bugs and poorly-specified requirements soon after they are introduced, which should make them easier and cheaper to fix, and to catch at least some of them before they enter into production use.

- Programming language support

- Programming languages often include features which help programmers prevent bugs, such as static type systems, restricted namespaces and modular programming, among others. For example, when a programmer writes (pseudocode) LET REAL_VALUE PI = "THREE AND A BIT", the statement may be syntactically correct, but the code fails a type check. Depending on the language and implementation, this may be caught by the compiler or at run-time. In addition, many recently invented languages have deliberately excluded features which can easily lead to bugs, at the expense of making code slower than it need be. The general principle is that, because of Moore's law, computers get faster and software engineers get slower, so it is almost always better to write simpler, slower code than "clever", inscrutable code, especially considering that maintenance cost is substantial. For example, the Java programming language does not support pointer arithmetic; implementations of some languages such as Pascal and scripting languages often have runtime bounds checking of arrays, at least in a debugging build.

- Code analysis

- Tools for code analysis help developers by inspecting the program text beyond the compiler's capabilities to spot potential problems. Although in general the problem of finding all programming errors given a specification is not solvable (see halting problem), these tools exploit the fact that human programmers tend to make the same kinds of mistakes when writing software.

- Instrumentation

- Tools to monitor the performance of the software as it is running, either specifically to find problems such as bottlenecks or to give assurance as to correct working, may be embedded in the code explicitly (perhaps as simple as a statement saying PRINT "I AM HERE"), or provided as tools. It is often a surprise to find where most of the time is taken by a piece of code, and this removal of assumptions might cause the code to be rewritten.
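The programming technique listed above, in which a program checks the consistency of its own internal data while running, might look like the following sketch. The `Ledger` class and its fields are hypothetical and exist only for illustration; the example takes the "halt immediately" option by raising an exception, whereas a real program could instead report the problem and attempt a repair.

```python
class Ledger:
    """Keeps a list of postings plus a cached running balance."""

    def __init__(self):
        self.entries = []
        self.balance = 0

    def post(self, amount):
        self.entries.append(amount)
        self.balance += amount
        self.check_consistency()

    def check_consistency(self):
        # Invariant: the cached balance always equals the sum of all entries.
        expected = sum(self.entries)
        if self.balance != expected:
            raise RuntimeError(
                f"inconsistent ledger: balance={self.balance}, "
                f"sum of entries={expected}"
            )


ledger = Ledger()
ledger.post(100)
ledger.post(-30)

# Simulate a bug elsewhere in the program corrupting the cached total.
ledger.balance += 5

try:
    ledger.check_consistency()
    detected = False
except RuntimeError as exc:
    detected = True
    print(exc)
```

An explicit exception is used here rather than an `assert` statement because Python removes assertions when run with the `-O` option, which would silently disable the check.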

Debugging

Finding and fixing bugs, or "debugging", has always been a major part of computer programming. Maurice Wilkes, an early computing pioneer, described his realization in the late 1940s that much of the rest of his life would be spent finding mistakes in his own programs.[18] As computer programs grow more complex, bugs become more common and difficult to fix. Often programmers spend more time and effort finding and fixing bugs than writing new code. Software testers are professionals whose primary task is to find bugs, or write code to support testing. On some projects, more resources can be spent on testing than in developing the program.

Usually, the most difficult part of debugging is finding the bug in the source code. Once it is found, correcting it is usually relatively easy. Programs known as debuggers exist to help programmers locate bugs by executing code line by line, watching variable values, and other features to observe program behavior. Without a debugger, code can be added so that messages or values can be written to a console (for example with printf in the C programming language) or to a window or log file to trace program execution or show values.

However, even with the aid of a debugger, locating bugs is something of an art. It is not uncommon for a bug in one section of a program to cause failures in a completely different, apparently unrelated section,[citation needed] which makes it especially difficult to track (for example, an error in a graphics rendering routine causing a file I/O routine to fail).

Sometimes, a bug is not an isolated flaw, but represents an error of thinking or planning on the part of the programmer. Such logic errors require a section of the program to be overhauled or rewritten. As part of code review, stepping through the code and modelling the execution process in one's head or on paper can often find these errors without ever needing to reproduce the bug as such, if it can be shown that there is some faulty logic in the implementation.

But more typically, the first step in locating a bug is to reproduce it reliably. Once the bug is reproduced, the programmer can use a debugger or some other tool to monitor the execution of the program in the faulty region, and find the point at which the program went astray.

It is not always easy to reproduce bugs. Some are triggered by inputs to the program which may be difficult for the programmer to re-create. One cause of the Therac-25 radiation machine deaths was a bug (specifically, a race condition) that occurred only when the machine operator very rapidly entered a treatment plan; it took days of practice to become able to do this, so the bug did not manifest in testing or when the manufacturer attempted to duplicate it. Other bugs may disappear when the program is run with a debugger; these are heisenbugs (humorously named after the Heisenberg uncertainty principle).

Debugging is still a tedious task requiring considerable effort. Since the 1990s, particularly following the Ariane 5 Flight 501 disaster, there has been a renewed interest in the development of effective automated aids to debugging. For instance, methods of static code analysis by abstract interpretation have already made significant achievements, while still remaining much of a work in progress.

As with any creative act, sometimes a flash of inspiration will show a solution, but this is rare and, by definition, cannot be relied on.

There are also classes of bugs that have nothing to do with the code itself. If, for example, one relies on faulty documentation or hardware, the code may follow the documentation exactly, yet the bug truly lies in the documentation or hardware, not the code. However, it is common to change the code instead of the other parts of the system, as the cost and time to change it is generally less. Embedded systems frequently have workarounds for hardware bugs, since making a new version of a ROM is much cheaper than remanufacturing the hardware, especially if the devices are commodity items.

Bug management


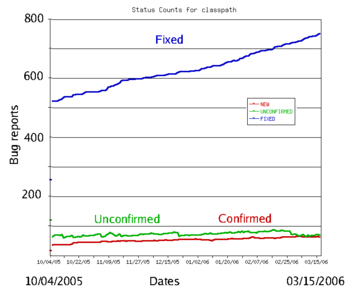

Bug management encompasses more than bug tracking, and there exists no industry-wide standard. Proposed changes to software – bugs as well as enhancement requests and even entire releases – are commonly tracked and managed using bug tracking systems. The items added may be called defects, tickets, issues, or, following the agile development paradigm, stories and epics. The systems allow or even require some type of categorization of each issue. Categories may be objective, subjective or a combination, such as version number, area of the software, severity and priority, as well as what type of issue it is, such as a feature request or a bug. Issue tracking systems may also be used, but are more commonly used for tracking external issues such as customer trouble reports.

Severity of a Bug

When a bug impairs a user scenario, its impact is usually easy to identify. The impact can be tangible, such as data loss or an immediate loss of money, or indirect, such as lost goodwill, lost man-hours and, eventually, lost business. This impact is called the severity of the bug: "the impact a bug causes when encountered by users". As a software metric, severity therefore has a precise meaning. Severity levels, however, are not standardised across the industry; each software producer decides its own levels, if it uses them at all. This is because impacts differ across the industry: a crash in a video game has a very different impact from a crash in a web browser or a real-time monitoring system. Even so, crashes are generally categorised as high severity in their respective fields. For example, bug severity levels might be "crash or hang", "no workaround" (meaning there is no way the customer can accomplish a given task), "has workaround" (meaning there is a way for the user to recover and accomplish the task), "UI" or "visual defect" (for example, a missing image or displaced button or form element), or "documentation error". Some software publishers use more qualified severities such as "critical", "high", "low", "blocker" or "trivial".[19] The severity of a bug may be a separate category from its priority for fixing, and the two may be quantified and managed separately.

Priority of a Bug

How quickly a bug needs to be fixed is expressed by the software metric priority. How priority is used is decided internally by each software producer. Priorities are sometimes numerical and sometimes words, such as "critical", "high", "low" or "deferred"; note that these can be similar or even identical to the severity ratings used by other software producers. For example, a software company may decide that priority 1 bugs are always to be fixed for the next release, whereas priority 5 could mean that the fix is put off, sometimes indefinitely.

Connection between Priority and Severity

If a flaw is found in an application which causes it to crash, yet the crash is so rare that it takes, say, ten extremely unusual or unlikely steps to produce, management may set its priority as "low" or even "will not fix". Priority can thus be seen as a function of the probability p that a bug occurs and the severity S (impact) of the bug. More specifically, priority increases with both the probability of occurrence and the severity. Given probability p = 1, the severity alone defines the priority. When p = 0, the bug in all likelihood need not be fixed at all, although a priority proportional to the severity may still be assigned. In the same way, when the severity S = 0, a priority proportional to the probability may be assigned.

One can axiomatise the priority function as any function with the above characteristics. For example, this very simplified function works: <math>P(p, S) = \lceil k p S \rceil</math>, where p is the probability, S is the severity, and k is a scaling constant. The ceiling function restricts the values of priority to integers. The function also assigns priority 0 to bugs that almost never occur (p = 0).
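The simplified priority function can be evaluated directly. The sketch below is one possible reading of it, with assumptions that the text does not state: the function name `priority`, a numeric non-negative severity scale, a positive scaling constant `k` defaulting to 1, and the input validation are all choices made for this example.

```python
import math


def priority(p, severity, k=1.0):
    """Return ceil(k * p * severity) as an integer priority.

    p        -- probability that the bug occurs, between 0 and 1
    severity -- non-negative number measuring impact (assumed scale)
    k        -- positive scaling constant (assumed default of 1)
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie between 0 and 1")
    if severity < 0 or k <= 0:
        raise ValueError("severity must be >= 0 and k must be > 0")
    return math.ceil(k * p * severity)


print(priority(1.0, 4))    # 4: with p = 1 the severity defines the priority
print(priority(0.25, 4))   # 1
print(priority(0.001, 4))  # 1: the ceiling rounds any positive product up
print(priority(0.0, 4))    # 0: a bug that never occurs
print(priority(0.5, 0))    # 0: a bug with no impact
```

Two properties of this particular formula are worth noting. A larger result means a more urgent bug, which is the opposite of numbering schemes in which priority 1 is the most urgent. Also, because of the ceiling, any bug with non-zero probability and non-zero severity receives at least priority 1, so only p = 0 or S = 0 yields 0.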

Software Releases

It is common practice for software to be released with known bugs that are considered "non-critical" as defined by the software producer(s). While software products may, by definition, contain any number of unknown bugs, measurements during testing can provide an estimate of the number of likely bugs remaining; this becomes more reliable the longer a product is tested and developed. Most big software projects maintain two lists of "known bugs"— those known to the software team, and those to be told to users.[citation needed] The second list informs users about bugs that are not fixed in the current release, or not fixed at all, and a workaround may be offered.

A software publisher may opt not to fix a particular bug for a number of reasons, including:

- A deadline must be met and priorities are such that all but those above a certain severity are fixed for the current software release.

- The bug is already fixed in an upcoming release, and it is not serious enough to warrant an immediate update or patch.

- The changes to the code required to fix the bug are too costly, will take too long for the current release, or affect too many other areas of the software.

- Users may be relying on the undocumented, buggy behavior; fixing it may introduce a breaking change.

- The problem is in an area which will be obsolete with an upcoming release; fixing it is unnecessary.

- It's "not a bug". A misunderstanding has arisen between expected and perceived behavior, when such misunderstanding is not due to confusion arising from design flaws, or faulty documentation.

The amount and type of damage a software bug can cause naturally affects decision-making, processes and policy regarding software quality. In applications such as manned space travel or automotive safety, since software flaws have the potential to cause human injury or even death, such software will have far more scrutiny and quality control than, for example, an online shopping website. In applications such as banking, where software flaws have the potential to cause serious financial damage to a bank or its customers, quality control is also more important than, say, a photo editing application. NASA's SATC managed to reduce the number of errors to fewer than 0.1 per 1000 lines of code (SLOC)[citation needed] but this was not felt to be feasible for projects in the business world.

A school of thought popularized by Eric S. Raymond as Linus's Law says that popular open-source software has more chance of having few or no bugs than other software, because "given enough eyeballs, all bugs are shallow".[20] This assertion has been disputed, however: computer security specialist Elias Levy wrote that "it is easy to hide vulnerabilities in complex, little understood and undocumented source code," because, "even if people are reviewing the code, that doesn't mean they're qualified to do so."[21]

Security vulnerabilities

Malicious software may attempt to exploit known vulnerabilities in a system–which may or may not be bugs. Viruses are not bugs in themselves–they are typically programs that are doing precisely what they were designed to do. However, viruses are occasionally referred to as such in the popular press.[citation needed] In addition, it is often a security bug in a computer program that allows viruses to work in the first place.

Common types of computer bugs

- Conceptual error (code is syntactically correct, but the programmer or designer intended it to do something else).

Arithmetic bugs

- Division by zero.

- Arithmetic overflow or underflow.

- Loss of arithmetic precision due to rounding or numerically unstable algorithms.
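Loss of precision due to rounding is easy to observe with binary floating-point numbers. The following sketch uses only standard Python and repeated addition of 0.1, a value that has no exact binary representation; the variable names are illustrative.

```python
import math

# Add 0.1 ten times using ordinary floating-point addition.
total = 0.0
for _ in range(10):
    total += 0.1

# Each addition rounds slightly, so the result is not exactly 1.0.
print(total)         # 0.9999999999999999
print(total == 1.0)  # False

# A naive equality test therefore fails; comparing within a tolerance works.
print(math.isclose(total, 1.0))  # True

# math.fsum tracks the intermediate rounding errors and returns 1.0 here.
print(math.fsum([0.1] * 10))     # 1.0
```

Whether a tolerance-based comparison, a compensated sum, or exact decimal or rational arithmetic is appropriate depends on the application.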

Logic bugs

- Infinite loops and infinite recursion.

- Off-by-one error, counting one too many or too few when looping.

Syntax bugs

- Use of the wrong operator, such as performing assignment instead of equality test. For example, in some languages x=5 will set the value of x to 5 while x==5 will check whether x is currently 5 or some other number. In simple cases often the compiler can generate a warning. In many languages, the language syntax is deliberately designed to guard against this error.
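Python is one language whose syntax guards against this mistake: a plain assignment is a statement, so writing `=` where a condition is expected is rejected before the program runs. The sketch below demonstrates this with the built-in `compile` function; the helper name `compiles` is hypothetical.

```python
def compiles(source):
    """Return True if the source text is syntactically valid Python."""
    try:
        compile(source, "<example>", "exec")
        return True
    except SyntaxError:
        return False


equality_test = "if x == 5:\n    pass\n"
accidental_assignment = "if x = 5:\n    pass\n"

print(compiles(equality_test))          # True
print(compiles(accidental_assignment))  # False: rejected as a syntax error
```

In C, by contrast, the equivalent of the second snippet is valid code that assigns 5 to x and then treats the result as true, which is why compilers for such languages often warn about it.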

Resource bugs

- Null pointer dereference.

- Using an uninitialized variable.

- Using an otherwise valid instruction on the wrong data type (see packed decimal/binary coded decimal).

- Access violations.

- Resource leaks, where a finite system resource (such as memory or file handles) becomes exhausted by repeated allocation without release.

- Buffer overflow, in which a program tries to store data past the end of allocated storage. This may or may not lead to an access violation or storage violation. These bugs can form a security vulnerability.

- Excessive recursion which — though logically valid — causes stack overflow.

- Use-after-free error, where a pointer is used after the system has freed the memory it references.

- Double free error.
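
A resource leak can be sketched without touching real system resources. In the Python example below, HandlePool is a hypothetical stand-in for a finite resource such as file handles; the leaky worker acquires a handle on every call and never releases it, so the pool is eventually exhausted.

```python
class HandlePool:
    """Hypothetical finite resource, such as a table of file handles."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.in_use = 0

    def acquire(self):
        if self.in_use >= self.capacity:
            raise RuntimeError("resource exhausted")
        self.in_use += 1

    def release(self):
        self.in_use -= 1


def leaky_worker(pool):
    pool.acquire()
    # bug: no matching release(), so every call permanently consumes a handle


def careful_worker(pool):
    pool.acquire()
    try:
        pass  # the resource would be used here
    finally:
        pool.release()  # always runs, even if the work above raises


def calls_until_exhausted(worker, pool, limit):
    """Return how many calls succeeded before exhaustion, or None if never."""
    for count in range(limit):
        try:
            worker(pool)
        except RuntimeError:
            return count
    return None
```

With a pool of capacity 3, the leaky worker fails on its fourth call, while the careful worker can be called indefinitely.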

Multi-threading programming bugs

- Deadlock, where task A can't continue until task B finishes, but at the same time, task B can't continue until task A finishes.

- Race condition, where the computer does not perform tasks in the order the programmer intended.

- Concurrency errors in critical sections, mutual exclusions and other features of concurrent processing. Time-of-check-to-time-of-use (TOCTOU) is a form of unprotected critical section.
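
A race condition on a shared counter can be sketched in Python as follows. The function name run_counter and its parameters are assumptions made for illustration. Without the lock, the read-modify-write in counter += 1 is an unprotected critical section and updates may be lost; whether this actually happens in a given run depends on the interpreter and on thread scheduling.

```python
import threading


def run_counter(increments_per_thread, thread_count, use_lock):
    """Increment a shared counter from several threads and return its value."""
    counter = 0
    lock = threading.Lock()

    def work():
        nonlocal counter
        for _ in range(increments_per_thread):
            if use_lock:
                with lock:  # only one thread at a time may update the counter
                    counter += 1
            else:
                counter += 1  # unprotected read-modify-write

    threads = [threading.Thread(target=work) for _ in range(thread_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter
```

With the lock, the result always equals the number of threads multiplied by the increments per thread. Without it, the result can be lower, and the shortfall may differ from run to run.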

Interfacing bugs

- Incorrect API usage.

- Incorrect protocol implementation.

- Incorrect hardware handling.

- Incorrect assumptions of a particular platform.

- Incompatible systems. A proposed "new API" or new communications protocol may seem to work when both computers use the old version or both use the new version, but upgrading only the receiver exposes backward compatibility problems; in other cases upgrading only the transmitter exposes forward compatibility problems. Often it is not feasible to upgrade every computer simultaneously, in particular in the telecommunication industry[22] or the internet.[23][24][25] Even when it is feasible, people sometimes forget to update every computer: the 2012 Knight Capital Group stock trading disruption involved one such incompatibility between the old API and a new API.

Performance bugs

- Use of an algorithm with excessively high computational complexity.

- Random disk or memory access.
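
As an illustration of needlessly high computational complexity, the Python sketch below solves the same problem, detecting duplicates in a list, in two ways. Both function names are hypothetical, and the faster version assumes the items are hashable.

```python
def has_duplicates_quadratic(items):
    """Compare every pair of items: about n * n / 2 comparisons."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    """Remember items already seen in a set: one pass over the input."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

Both functions return the same answers, so the bug is invisible in small tests and only appears as slowness on large inputs.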

Teamworking bugs

- Unpropagated updates; e.g. programmer changes "myAdd" but forgets to change "mySubtract", which uses the same algorithm. These errors are mitigated by the Don't Repeat Yourself philosophy.

- Comments out of date or incorrect: many programmers assume the comments accurately describe the code.

- Differences between documentation and the actual product.
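
The unpropagated-update problem with "myAdd" and "mySubtract" can be sketched in Python. The conversion rule below (amounts rounded to whole cents) is a hypothetical example of the shared algorithm; placing it in one helper means a later change reaches both functions at once.

```python
def _to_cents(amount):
    """Shared conversion rule (hypothetical): round an amount to whole cents."""
    return round(amount * 100)


def my_add(a, b):
    # Uses the shared helper, so a change to the rule applies here too.
    return (_to_cents(a) + _to_cents(b)) / 100


def my_subtract(a, b):
    # Same helper as my_add: the two functions cannot drift apart.
    return (_to_cents(a) - _to_cents(b)) / 100
```

If each function carried its own copy of the conversion, a programmer could update one copy and forget the other, which is the situation the Don't Repeat Yourself philosophy is meant to prevent.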

Well-known bugs

A number of software bugs have become well-known, usually due to their severity: examples include various space and military aircraft crashes. Possibly the most famous bug is the Year 2000 problem, also known as the Y2K bug, in which it was feared that worldwide economic collapse would happen at the start of the year 2000 as a result of computers thinking it was 1900. (In the end, no major problems occurred.)
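
The mechanism behind the Year 2000 problem can be shown in a short Python sketch. The function names are hypothetical; the second function shows a windowing approach to interpreting two-digit years, with the pivot value of 50 chosen arbitrarily for illustration.

```python
def expand_year_naive(two_digit_year):
    """Assume every two-digit year belongs to the 1900s."""
    return 1900 + two_digit_year


def expand_year_windowed(two_digit_year, pivot=50):
    """Windowing: values below the pivot (an assumed 50) are read as 20xx."""
    century = 2000 if two_digit_year < pivot else 1900
    return century + two_digit_year


# Elapsed years from "99" to "00" under each interpretation.
elapsed_naive = expand_year_naive(0) - expand_year_naive(99)
elapsed_windowed = expand_year_windowed(0) - expand_year_windowed(99)
```

Under the naive interpretation the year "00" is read as 1900, so the interval from "99" to "00" comes out as minus 99 years instead of 1 year.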

In popular culture

- In Robert A. Heinlein's 1966 novel The Moon Is a Harsh Mistress, computer technician Manuel Davis blames a real bug for a (non-existent) failure of supercomputer Mike, presenting a dead fly as evidence.

- In the 1968 novel 2001: A Space Odyssey (and the corresponding 1968 film), a spaceship's onboard computer, HAL 9000, attempts to kill all its crew members. In the follow-up 1982 novel, 2010: Odyssey Two, and the accompanying 1984 film, 2010, it is revealed that this action was caused by the computer having been programmed with two conflicting objectives: to fully disclose all its information, and to keep the true purpose of the flight secret from the crew; this conflict caused HAL to become paranoid and eventually homicidal. In addition, HAL's orders were to continue the mission even if the crew and the cold-slept specialists were unable to do so. HAL's solution was therefore within the parameters of its orders: by attempting to shut it off, the crew were jeopardizing the mission, since HAL could not be sure that they would turn it back on, and no HAL series computer had ever been shut off after activation.

- The 2004 novel The Bug, by Ellen Ullman, is about a programmer's attempt to find an elusive bug in a database application.

- The 2008 Canadian film Control Alt Delete is about a computer programmer at the end of 1999 struggling to fix bugs at his company related to the year 2000 problem.

See also

- Anti-pattern

- Bit rot

- Bug bounty program

- Bug tracking system

- Glitch removal

- ISO/IEC 9126, which classifies a bug as either a defect or a nonconformity

- One-line fix

- Orthogonal Defect Classification

- Racetrack problem

- RISKS Digest

- Software defect indicator

- Software regression

- Unusual software bugs (schroedinbug, heisenbug, Bohr bug, and mandelbug)

Notes

- ^ Prof. Simon Rogerson. "The Chinook Helicopter Disaster". Ccsr.cse.dmu.ac.uk. Retrieved September 24, 2012.

- ^ "Software bugs cost US economy dear". Web.archive.org. June 10, 2009. Retrieved September 24, 2012.

- ^ Edison to Puskas, 13 November 1878, Edison papers, Edison National Laboratory, U.S. National Park Service, West Orange, N.J., cited in Thomas P. Hughes, American Genesis: A History of the American Genius for Invention, Penguin Books, 1989, ISBN 0-14-009741-4, on page 75.

- ^ "Baffle Ball". Internet Pinball Database. (See image of advertisement in reference entry)

- ^ "Modern Aircraft Carriers are Result of 20 Years of Smart Experimentation". Life. June 29, 1942. p. 25. Retrieved November 17, 2011.

- ^ FCAT NRT Test, Harcourt, March 18, 2008

- ^ Danis, Sharron Ann. "Rear Admiral Grace Murray Hopper". ei.cs.vt.edu. February 16, 1997. Retrieved January 31, 2010.

- ^ "Bug", The Jargon File, ver. 4.4.7. Retrieved June 3, 2010.

- ^ a b "Log Book With Computer Bug", National Museum of American History, Smithsonian Institution.

- ^ "The First 'Computer Bug'", Naval Historical Center. But note the Harvard Mark II computer was not complete until the summer of 1947.

- ^ IEEE Annals of the History of Computing, Vol 22 Issue 1, 2000

- ^ James S. Huggins. "First Computer Bug". Jamesshuggins.com. Retrieved September 24, 2012.

- ^ Journal of the Royal Aeronautical Society. 49, 183/2, 1945 "It ranged ... through the stage of type test and flight test and ‘debugging’ ..."

- ^ Bugs or Defects?

- ^ a b Testing Experience. Germany: testingexperience: 42. March 2012. ISSN 1866-5705. (subscription required)

- ^ Huizinga, Dorota; Kolawa, Adam (2007). Automated Defect Prevention: Best Practices in Software Management. Wiley-IEEE Computer Society Press. p. 426. ISBN 0-470-04212-5.

- ^ McDonald, Marc (2007). The Practical Guide to Defect Prevention. Microsoft Press. p. 480. ISBN 0-7356-2253-1.

- ^ Maurice Wilkes Quotes

- ^ "Using Bugzilla: Anatomy of a Bug"

- ^ "Release Early, Release Often", Eric S. Raymond, The Cathedral and the Bazaar

- ^ "Wide Open Source", Elias Levy, SecurityFocus, April 17, 2000

- ^ K. Kimbler. "Feature Interactions in Telecommunications and Software Systems V" p. 8.

- ^ Mahbubur Rahman Syed. "Multimedia Networking: Technology, Management and Applications: Technology, Management and Applications". p. 398.

- ^ Chwan-Hwa (John) Wu, J. David Irwin. "Introduction to Computer Networks and Cybersecurity". p. 500.

- ^ RFC 1263: "TCP Extensions Considered Harmful" quote: "the time to distribute the new version of the protocol to all hosts can be quite long (forever in fact). ... If there is the slightest incompatibly between old and new versions, chaos can result."

Further reading

- Allen, Mitch, May/Jun 2002 "Bug Tracking Basics: A beginner’s guide to reporting and tracking defects" The Software Testing & Quality Engineering Magazine. Vol. 4, Issue 3, pp. 20–24.

External links

- Picture of the "first computer bug". The error of this term is elaborated above. (Naval Historical Center)

- The First Computer Bug! An email from 1981 about Adm. Hopper's bug