Publication bias

Publication bias is a type of bias concerning which academic research is likely to be published, among all the research that is available to be published. It is of interest because literature reviews of claims about support for a hypothesis, or of values for a parameter, will themselves be biased if the original literature is contaminated by publication bias.[1] While some preferences are desirable (for instance, a bias against publication of flawed studies), a tendency of researchers and journal editors to prefer some outcomes over others, e.g. results showing a significant finding, leads to a problematic bias in the published literature.[2]

Studies with significant results often do not appear to be superior to studies with a null result with respect to quality of design.[3] However, statistically significant results have been shown to be three times more likely to be published than null results.[4] Multiple factors contribute to publication bias.[1] For instance, once a result is well established, it may become newsworthy to publish papers affirming the null result.[5] The most common reason for non-publication has been found to be investigators declining to submit results for publication. Factors cited as underlying this effect include investigators assuming they must have made a mistake when they fail to reproduce a known finding, loss of interest in the topic, and anticipation that others will be uninterested in null results.[3]

Attempts to identify unpublished studies often prove difficult or unsatisfactory.[1] One effort to reduce this problem is the move by some journals to require that studies submitted for publication be pre-registered (that is, registered before data collection and analysis). Several such registries exist, for instance the Center for Open Science.

Strategies are being developed to detect and control for publication bias,[1] for instance down-weighting small and non-randomised studies because of their demonstrated high susceptibility to error and bias,[3] and p-curve analysis.[6]

Definition

Publication bias occurs when the publication of research results depends not just on the quality of the research but on the hypothesis tested, and the significance and direction of effects detected.[7] The term "publication bias" appears to have been first used in 1959 by statistician Theodore Sterling to refer to fields in which successful research is more likely to be published. As a result, "the literature of such a field consists in substantial part of false conclusions resulting from [type-I errors]".[8]

Publication bias is sometimes called the "file drawer effect", or "file drawer problem". The origin of this term is that results not supporting the hypotheses of researchers often go no further than the researchers' file drawers, leading to a bias in published research.[9] The term "file drawer problem" was coined by the psychologist Robert Rosenthal in 1979.[10]

Positive-results bias, a type of publication bias, occurs when authors are more likely to submit, or editors to accept, positive rather than negative or inconclusive results.[11] Outcome-reporting bias occurs when multiple outcomes are measured and analyzed, but the reporting of each outcome depends on the strength and direction of its result. A generic term coined to describe these post-hoc choices is HARKing ("Hypothesizing After the Results are Known").[12]

Evidence

The presence of publication bias in the literature has been most extensively studied in biomedical research. Investigators following clinical trials from the submission of their protocols to ethics committees or regulatory authorities until the publication of their results observed that those with positive results are more likely to be published.[14][15][16] In addition, studies often fail to report negative results when published, as demonstrated by research comparing study protocols with published articles.[17][18]

Publication bias has also been investigated in meta-analyses. The largest such study to date examined systematic reviews of medical treatments from the Cochrane Library.[19] It showed that positive statistically significant findings are 27% more likely to be included in meta-analyses of efficacy than other findings, and that results showing no evidence of adverse effects have a 78% greater probability of entering meta-analyses of safety than statistically significant results showing that adverse effects exist. Evidence of publication bias has also been found in meta-analyses published in prominent medical journals.[20]

Effects on meta-analyses

Where publication bias is present, published studies will not be representative of the valid studies undertaken. Unless controlled, this bias will distort the results of meta-analyses and systematic reviews. This is a severe problem because cumulative science, for example evidence-based medicine, is increasingly reliant on meta-analysis to assess evidence. The problem is particularly significant because research is often conducted by entities (people, research groups, government and corporate sponsors) having a financial or ideological interest in achieving favorable results.[citation needed]
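The distortion described above can be illustrated with a small simulation. The following is a minimal sketch, not a model of any real literature: the function name `simulate_literature`, the number of studies, the per-study sample size and the selection rule (publish only if the one-sided z statistic exceeds 1.645) are all assumptions made for illustration. The true effect is set to zero, so any non-zero average among the "published" estimates is produced by selection alone.

```python
import random
import statistics


def simulate_literature(n_studies=2000, true_effect=0.0, n_per_study=30, seed=0):
    """Simulate study estimates and apply a hypothetical publication filter.

    Each study's estimate is drawn from the sampling distribution of a mean
    of n_per_study unit-variance observations: Normal(true_effect, se).
    Assumed selection rule: a study is "published" only if its one-sided
    z statistic (estimate / se) exceeds 1.645.
    """
    rng = random.Random(seed)
    se = 1.0 / n_per_study ** 0.5  # standard error of each study's estimate
    all_estimates, published = [], []
    for _ in range(n_studies):
        estimate = rng.gauss(true_effect, se)
        all_estimates.append(estimate)
        if estimate / se > 1.645:  # keep only "significant, positive" results
            published.append(estimate)
    return all_estimates, published


all_estimates, published = simulate_literature()
mean_all = statistics.fmean(all_estimates)
mean_published = statistics.fmean(published)
print(f"studies run: {len(all_estimates)}, published: {len(published)}")
print(f"mean of all estimates:       {mean_all:+.3f}")
print(f"mean of published estimates: {mean_published:+.3f}")
```

Under these assumptions the average over all simulated studies stays close to the true effect of zero, while the average over the published subset is necessarily above the selection threshold. A meta-analysis restricted to the published subset would therefore report an effect that does not exist.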

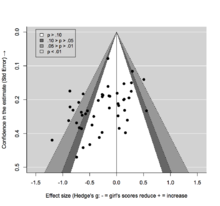

Those undertaking meta-analyses and systematic reviews need to take account of publication bias by performing a thorough search for unpublished studies. Additionally, a number of methods for detecting and adjusting for publication bias have been developed, including selection models[19][21][22] and methods based on the funnel plot, such as Begg's test,[23] Egger's test,[24] and the trim and fill method.[25] However, since all of these methods have relatively low power and rest on strong and unverifiable assumptions,[citation needed] their use does not guarantee the validity of conclusions from a meta-analysis.[26][27]
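As an illustration of a funnel-plot-based method, the sketch below implements the regression at the core of Egger's test as it is commonly described: each study's standardized effect (effect divided by its standard error) is regressed on its precision (one over the standard error), and an intercept far from zero suggests funnel-plot asymmetry. The function name `egger_regression` and the example data are hypothetical. The sketch uses only the standard library and stops short of a p-value, which would require comparing the intercept divided by its standard error against a t distribution with n - 2 degrees of freedom.

```python
import math


def egger_regression(effects, standard_errors):
    """Ordinary least squares of effect/SE on 1/SE (Egger-style regression).

    Returns (intercept, slope, se_intercept). The intercept measures
    funnel-plot asymmetry; the slope estimates the underlying effect.
    """
    if len(effects) != len(standard_errors) or len(effects) < 3:
        raise ValueError("need at least three studies with matching lengths")
    x = [1.0 / se for se in standard_errors]                  # precision
    y = [e / se for e, se in zip(effects, standard_errors)]   # standardized effect
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    # Standard error of the OLS intercept with n - 2 residual degrees of freedom.
    se_intercept = math.sqrt(residual_ss / (n - 2) * (1.0 / n + x_bar ** 2 / sxx))
    return intercept, slope, se_intercept


# Hypothetical data: smaller studies (larger SE) report larger effects.
ses = [0.05, 0.10, 0.15, 0.20, 0.30, 0.40]
effects = [0.2 + 1.0 * se for se in ses]
intercept, slope, se_intercept = egger_regression(effects, ses)
print(f"intercept = {intercept:.3f}, slope = {slope:.3f}")
```

The example data are constructed so that the effect grows linearly with the standard error, which yields an intercept of about 1 and a slope of about 0.2. If every study reported the same effect regardless of its size, the intercept would be about zero. The function fails with a division by zero if all standard errors are identical, since the precisions then have no spread to regress on.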

Examples

Two meta-analyses of the efficacy of Reboxetine as an antidepressant provide an example of attempts to detect publication bias in clinical trials. Based on positive trial data, Reboxetine was originally approved as a treatment for depression in many countries in Europe, including the UK in 2001 (though in practice it is rarely used for this indication). A 2010 meta-analysis concluded that Reboxetine was ineffective and that the preponderance of positive-outcome trials reflected publication bias, mostly due to trials published by the drug manufacturer Pfizer. A subsequent meta-analysis published in 2011, also based on the original data, found flaws in the 2010 analyses and suggested that the data indicated Reboxetine was effective in severe depression (see Reboxetine - Efficacy). Examples of publication bias are given by Ben Goldacre[28] and Peter Wilmshurst.[29]

In the social sciences, a study of published papers on the relationship between Corporate Social and Financial Performance found that

"In economics, finance, and accounting journals, the average correlations were only about half the magnitude of the findings published in Social Issues Management, Business Ethics, or Business and Society journals".[30]

One example cited as an instance of publication bias is the refusal by The Journal of Personality and Social Psychology, which had published Daryl Bem's paper claiming evidence for precognition, to accept attempted replications of that work for publication.[31]

A study[32] comparing studies of gene-disease associations originating in China to those originating outside China found that "Chinese studies in general reported a stronger gene-disease association and more frequently a statistically significant result".[33] One interpretation of this result is selective publication (publication bias).

Risks

John Ioannidis argues that "claimed research findings may often be simply accurate measures of the prevailing bias".[34] The factors he enumerates as making positive papers more likely to enter the literature, and negative papers more likely to be suppressed, are:

- the studies conducted in a field are smaller;

- effect sizes are smaller;

- there is a greater number and lesser preselection of tested relationships;

- there is greater flexibility in designs, definitions, outcomes, and analytical modes;

- there is greater financial and other interest and prejudice;

- more teams are involved in a scientific field in chase of statistical significance.

Other factors include experimenter bias and white hat bias.

Remedies

Ioannidis' remedies include:

- Better powered studies

- Low-bias meta-analysis

- Large studies where they can be expected to give very definitive results or test major, general concepts

- Enhanced research standards, including:

  - Pre-registration of protocols (as for randomized trials)

  - Registration or networking of data collections within fields (as in fields where researchers are expected to generate hypotheses after collecting data)

  - Adopting from randomized controlled trials the principles of developing and adhering to a protocol

- Considering, before running an experiment, what they believe the chances are that they are testing a true or non-true relationship.

- Properly assessing the false positive report probability based on the statistical power of the test[35]

- Reconfirming (whenever ethically acceptable) established findings of "classic" studies, using large studies designed with minimal bias
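The false positive report probability mentioned in the list above can be sketched numerically. A common formulation gives the probability that a statistically significant finding is a false positive as α(1 − π) / (α(1 − π) + (1 − β)π), where α is the significance threshold, 1 − β is the statistical power, and π is the prior probability that the tested relationship is real. The function name and the example values below are hypothetical, and the prior in particular is a judgment the researcher must supply.

```python
def false_positive_report_probability(alpha, power, prior):
    """Probability that a significant result is a false positive.

    alpha: significance threshold (type I error rate)
    power: probability of detecting a real effect (1 - beta)
    prior: assumed prior probability that the tested relationship is real
    """
    if not (0 < alpha <= 1 and 0 < power <= 1 and 0 < prior < 1):
        raise ValueError("alpha and power must be in (0, 1], prior in (0, 1)")
    false_positives = alpha * (1 - prior)  # null is true, yet test is significant
    true_positives = power * prior         # effect is real and is detected
    return false_positives / (false_positives + true_positives)


# Hypothetical scenario: 1 in 10 tested relationships is real, alpha = 0.05.
well_powered = false_positive_report_probability(alpha=0.05, power=0.8, prior=0.1)
under_powered = false_positive_report_probability(alpha=0.05, power=0.2, prior=0.1)
print(f"power 0.8: FPRP = {well_powered:.2f}")
print(f"power 0.2: FPRP = {under_powered:.2f}")
```

With these assumed inputs the well-powered study gives 0.045 / 0.125 = 0.36, while the under-powered study gives 0.045 / 0.065, roughly 0.69. This shows why low power makes a significant result less trustworthy even when the significance threshold is unchanged.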

Study registration

In September 2004, editors of several prominent medical journals (including the New England Journal of Medicine, The Lancet, Annals of Internal Medicine, and JAMA) announced that they would no longer publish results of drug research sponsored by pharmaceutical companies unless that research was registered in a public database from the start.[36] Furthermore, some journals, e.g. Trials, encourage the publication of study protocols.[37] The World Health Organization agreed that basic information about all clinical trials should be registered at inception, and that this information should be publicly accessible through the WHO International Clinical Trials Registry Platform. Additionally, public availability of full study protocols, alongside reports of trials, is becoming more common.[38]

See also

- Academic bias

- Bad Pharma (2012) by Ben Goldacre

- Adversarial collaboration

- AllTrials

- Confirmation bias

- Counternull

- Experimenter's bias

- Funding bias

- FUTON bias

- List of cognitive biases

- Parapsychology

- Peer review

- Proteus phenomenon

- Replication crisis

- Selection bias

- Scientific journals for null results

- White hat bias

- Woozle effect

References

- ^ a b c d H. Rothstein, A. J. Sutton and M. Borenstein. (2005). Publication bias in meta-analysis: prevention, assessment and adjustments. Wiley. Chichester, England ; Hoboken, NJ.

- ^ Song, F.; Parekh, S.; Hooper, L.; Loke, Y. K.; Ryder, J.; Sutton, A. J.; Hing, C.; Kwok, C. S.; Pang, C.; Harvey, I. (2010). "Dissemination and publication of research findings: An updated review of related biases". Health technology assessment (Winchester, England). 14 (8): iii, iix–xi, iix–193. doi:10.3310/hta14080. PMID 20181324.

- ^ a b c Easterbrook, P. J.; Berlin, J. A.; Gopalan, R.; Matthews, D. R. (1991). "Publication bias in clinical research". Lancet. 337 (8746): 867–872. doi:10.1016/0140-6736(91)90201-Y. PMID 1672966.

- ^ Dickersin, K.; Chan, S.; Chalmers, T. C.; et al. (1987). "Publication bias and clinical trials". Controlled Clinical Trials. 8 (4): 343–353. doi:10.1016/0197-2456(87)90155-3. PMID 3442991.

- ^ Luijendijk, HJ; Koolman, X (May 2012). "The incentive to publish negative studies: how beta-blockers and depression got stuck in the publication cycle". J Clin Epidemiol. 65 (5): 488–92. doi:10.1016/j.jclinepi.2011.06.022.

- ^ Simonsohn, U.; Nelson, L. D.; Simmons, J. P. (2014). "P-curve: A key to the file-drawer". Journal of Experimental Psychology: General. 143: 534–547. doi:10.1037/a0033242.

- ^ K. Dickersin (March 1990). "The existence of publication bias and risk factors for its occurrence". JAMA. 263 (10): 1385–1389. doi:10.1001/jama.263.10.1385. PMID 2406472.

- ^ Sterling, Theodore D. (March 1959). "Publication decisions and their possible effects on inferences drawn from tests of significance—or vice versa". Journal of the American Statistical Association. 54 (285): 30–34. doi:10.2307/2282137. Retrieved 10 April 2011.

- ^ Jeffrey D. Scargle (2000). "Publication bias: the "file-drawer problem" in scientific inference" (PDF). Journal of Scientific Exploration. 14 (2): 94–106.

- ^ Rosenthal R (1979). "File drawer problem and tolerance for null results". Psychol Bull. 86: 638–41. doi:10.1037/0033-2909.86.3.638.

- ^ D.L. Sackett (1979). "Bias in analytic research". J Chronic Dis. 32 (1–2): 51–63. doi:10.1016/0021-9681(79)90012-2. PMID 447779.

- ^ N.L. Kerr (1998). "HARKing: Hypothesizing After the Results are Known". Personality and Social Psychology Review. 2 (3): 196–217. doi:10.1207/s15327957pspr0203_4. PMID 15647155.

- ^ Flore P. C., Wicherts J. M. (2015). "Does stereotype threat influence performance of girls in stereotyped domains? A meta-analysis". J Sch Psychol. 53: 25–44. doi:10.1016/j.jsp.2014.10.002. PMID 25636259.

- ^ Dickersin, K.; Min, Y.I. (1993). "NIH clinical trials and publication bias". Online J Curr Clin Trials. Doc No 50: [4967 words, 53 paragraphs]. PMID 8306005.

- ^ Decullier E, Lheritier V, Chapuis F (2005). "Fate of biomedical research protocols and publication bias in France: retrospective cohort study". BMJ. 331: 19–22. doi:10.1136/bmj.38488.385995.8f.

- ^ Song F, Parekh-Bhurke S, Hooper L, Loke Y, Ryder J, Sutton A; et al. (2009). "Extent of publication bias in different categories of research cohorts: a meta-analysis of empirical studies". BMC Med Res Methodol. 9: 79. doi:10.1186/1471-2288-9-79.

- ^ Chan AW, Altman DG (2005). "Identifying outcome reporting bias in randomised trials on PubMed: review of publications and survey of authors". BMJ. 330: 753. doi:10.1136/bmj.38356.424606.8f.

- ^ Riveros C, Dechartres A, Perrodeau E, Haneef R, Boutron I, Ravaud P (2013). "Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals". PLoS Med. 10: e1001566. doi:10.1371/journal.pmed.1001566.

- ^ a b Kicinski, M; Springate, D. A.; Kontopantelis, E (2015). "Publication bias in meta-analyses from the Cochrane Database of Systematic Reviews". Statistics in Medicine. 34: n/a. doi:10.1002/sim.6525. PMID 25988604.

- ^ Kicinski M (2013). "Publication bias in recent meta-analyses". PLoS ONE. 8: e81823. doi:10.1371/journal.pone.0081823.

- ^ Silliman N (1997). "Hierarchical selection models with applications in meta-analysis". Journal of American Statistical Association. 92 (439): 926–936. doi:10.1080/01621459.1997.10474047.

- ^ Hedges L, Vevea J (1996). "Estimating effect size under publication bias: small sample properties and robustness of a random effects selection model". Journal of Educational and Behavioral Statistics. 21 (4): 299–332. doi:10.3102/10769986021004299.

- ^ Begg C, Mazumdar M (1994). "Operating characteristics of a rank correlation test for publication bias". Biometrics. 50 (4): 1088–1101. doi:10.2307/2533446.

- ^ Egger M, Smith G, Schneider M, Minder C (1997). "Bias in meta-analysis detected by a simple, graphical test". British Medical Journal. 315: 629–634. doi:10.1136/bmj.315.7109.629. PMC 2127453. PMID 9310563.

- ^ Duval S, Tweedie R (2000). "Trim and fill: A simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis". Biometrics. 56 (2): 455–463. doi:10.1111/j.0006-341X.2000.00455.x.

- ^ Sutton AJ, Song F, Gilbody SM, Abrams KR (2000). "Modelling publication bias in meta-analysis: a review". Stat Methods Med Res. 9: 421–445. doi:10.1191/096228000701555244.

- ^ Kicinski, M (2014). "How does under-reporting of negative and inconclusive results affect the false-positive rate in meta-analysis? A simulation study". BMJ Open. 4 (8): e004831. doi:10.1136/bmjopen-2014-004831. PMC 4156818. PMID 25168036.

- ^ Ben Goldacre What doctors don't know about the drugs they prescribe

- ^ Wilmshurst, Peter. "Dishonesty in Medical Research" (PDF).

- ^ Marc Orlitzky Institutional Logics in the Study of Organizations: The Social Construction of the Relationship between Corporate Social and Financial Performance

- ^ Ben Goldacre Backwards step on looking into the future The Guardian, 23 April 2011

- ^ Zhenglun Pan, Thomas A. Trikalinos, Fotini K. Kavvoura, Joseph Lau, John P.A. Ioannidis (2005). "Local literature bias in genetic epidemiology: An empirical evaluation of the Chinese literature". PLoS Medicine. 2 (12): e334. doi:10.1371/journal.pmed.0020334.

- ^ Ling Tang Jin (2005). "Selection Bias in Meta-Analyses of Gene-Disease Associations". PLoS Medicine. 2 (12): e409. doi:10.1371/journal.pmed.0020409.

- ^ Ioannidis J (2005). "Why most published research findings are false". PLoS Med. 2 (8): e124. doi:10.1371/journal.pmed.0020124. PMC 1182327. PMID 16060722.

- ^ Wacholder, S.; Chanock, S; Garcia-Closas, M; El Ghormli, L; Rothman, N (March 2004). "Assessing the Probability That a Positive Report is False: An Approach for Molecular Epidemiology Studies". JNCI. 96 (6): 434–42. doi:10.1093/jnci/djh075. PMID 15026468.

- ^ (The Washington Post) (2004-09-10). "Medical journal editors take hard line on drug research". smh.com.au. Retrieved 2008-02-03.

- ^ "Instructions for Trials authors — Study protocol". 2009-02-15.

- ^ Dickersin, K.; Chalmers, I. (2011). "Recognizing, investigating and dealing with incomplete and biased reporting of clinical research: from Francis Bacon to the WHO". J R Soc Med. 104 (12): 532–538. doi:10.1258/jrsm.2011.11k042. PMID 22179297.

External links

- The Truth Wears Off: Is there something wrong with the scientific method? -- Jonah Lehrer

- Register of clinical trials conducted in the US and around the world, maintained by the National Library of Medicine, Bethesda

- Skeptic's Dictionary: positive outcome bias.

- Skeptic's Dictionary: file-drawer effect.

- Journal of Negative Results in Biomedicine

- The All Results Journals

- Journal of Articles in Support of the Null Hypothesis

- Article on 'the decline effect' and the role of publication bias in that

- Psychfiledrawer.org: Archive for replication attempts in experimental psychology