Safety engineering

Safety engineering is an engineering discipline which assures that engineered systems provide acceptable levels of safety. It is strongly related to industrial engineering/systems engineering, and the subset system safety engineering. Safety engineering assures that a life-critical system behaves as needed, even when components fail.

Analysis techniques

Analysis techniques can be split into two categories: qualitative and quantitative methods. Both approaches share the goal of finding causal dependencies between a hazard on system level and failures of individual components. Qualitative approaches focus on the question "What must go wrong, such that a system hazard may occur?", while quantitative methods aim at providing estimations about probabilities, rates and/or severity of consequences.

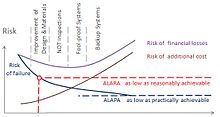

Making a technical system more complex through measures such as improved design and materials, planned inspections, fool-proof design, and backup redundancy decreases risk but increases cost. The risk can be decreased to ALARA (as low as reasonably achievable) or ALAPA (as low as practically achievable) levels.

Traditionally, safety analysis techniques rely solely on the skill and expertise of the safety engineer. More recently, model-based approaches have become prominent. In contrast to traditional methods, model-based techniques try to derive the relationships between causes and consequences from a model of the system.

Traditional methods for safety analysis

The two most common fault modeling techniques are failure mode and effects analysis and fault tree analysis. These techniques are ways of finding problems and of making plans to cope with failures, as in probabilistic risk assessment. One of the earliest complete studies applying probabilistic risk assessment to a commercial nuclear plant was the WASH-1400 study, also known as the Reactor Safety Study or the Rasmussen Report.

Failure mode and effects analysis

Failure Mode and Effects Analysis (FMEA) is a bottom-up, inductive analytical method which may be performed at either the functional or piece-part level. For functional FMEA, failure modes are identified for each function in a system or equipment item, usually with the help of a functional block diagram. For piece-part FMEA, failure modes are identified for each piece-part component (such as a valve, connector, resistor, or diode). The effects of each failure mode are described and assigned a probability based on the failure rate and failure mode ratio of the function or component. This quantification is difficult for software: a bug either exists or it does not, and the failure models used for hardware components do not apply. Temperature, age, and manufacturing variability affect a resistor; they do not affect software.

Failure modes with identical effects can be combined and summarized in a Failure Mode Effects Summary. When combined with criticality analysis, FMEA is known as Failure Mode, Effects, and Criticality Analysis or FMECA, pronounced "fuh-MEE-kuh".
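The quantification step of a piece-part FMEA can be sketched in a few lines. The following is a minimal illustration, not a standard worksheet format: the component names, failure rates, mode ratios, and effects are all hypothetical, and summing the rates of modes that share an effect assumes the rates are small and the modes independent.

```python
from collections import defaultdict

# Hypothetical piece-part data (illustrative assumptions, not handbook values):
# component -> (failure rate per hour, {failure mode: (mode ratio, effect)})
PARTS = {
    "valve": (2e-6, {"stuck open": (0.6, "loss of flow control"),
                     "stuck closed": (0.4, "loss of cooling")}),
    "pump": (5e-6, {"fails to run": (0.8, "loss of cooling"),
                    "leaks": (0.2, "loss of flow control")}),
}

def failure_mode_rates(parts):
    """One row per failure mode: mode rate = component failure rate * mode ratio."""
    rows = []
    for part, (rate, modes) in parts.items():
        for mode, (ratio, effect) in modes.items():
            rows.append((part, mode, effect, rate * ratio))
    return rows

def effects_summary(rows):
    """Combine failure modes with identical effects by summing their rates."""
    summary = defaultdict(float)
    for _part, _mode, effect, mode_rate in rows:
        summary[effect] += mode_rate
    return dict(summary)

rows = failure_mode_rates(PARTS)
summary = effects_summary(rows)
for effect, rate in sorted(summary.items()):
    print(f"{effect}: {rate:.2e} per hour")
```

With these assumed inputs, the two modes that lead to "loss of cooling" combine into a single summary entry of about 4.8e-6 per hour.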

Fault tree analysis

Fault tree analysis (FTA) is a top-down, deductive analytical method. In FTA, initiating primary events such as component failures, human errors, and external events are traced through Boolean logic gates to an undesired top event such as an aircraft crash or nuclear reactor core melt. The intent is to identify ways to make top events less probable, and verify that safety goals have been achieved.

Fault trees are a logical inverse of success trees, and may be obtained by applying de Morgan's theorem to success trees (which are directly related to reliability block diagrams).
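As a small worked illustration of this inversion, consider two components A and B, writing $S$ for success and $F = \lnot S$ for failure (the symbols are chosen here for illustration only). A series arrangement in the success tree becomes an OR gate in the fault tree, and a parallel arrangement becomes an AND gate:

```latex
\begin{align*}
\text{Series:}   \quad S_{\text{sys}} &= S_A \land S_B
  &\Longrightarrow\quad F_{\text{sys}} &= \lnot (S_A \land S_B) = F_A \lor F_B \\
\text{Parallel:} \quad S_{\text{sys}} &= S_A \lor S_B
  &\Longrightarrow\quad F_{\text{sys}} &= \lnot (S_A \lor S_B) = F_A \land F_B
\end{align*}
```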

FTA may be qualitative or quantitative. When failure and event probabilities are unknown, qualitative fault trees may be analyzed for minimal cut sets. For example, if any minimal cut set contains a single base event, then the top event may be caused by a single failure. Quantitative FTA is used to compute top event probability, and usually requires computer software such as CAFTA from the Electric Power Research Institute or SAPHIRE from the Idaho National Laboratory.
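Both kinds of analysis can be sketched for a very small tree. The example below is a simplified illustration and not how CAFTA or SAPHIRE work internally: the tree, event names, and probabilities are hypothetical, basic events are assumed independent, and the exact probability is found by brute-force enumeration, which is only feasible for a handful of events.

```python
from itertools import product

# Hypothetical fault tree: gate -> (gate type, inputs).
# Any name that is not a key of TREE is treated as a basic event.
TREE = {
    "TOP": ("OR", ["G1", "power_loss"]),
    "G1": ("AND", ["pump_a_fails", "pump_b_fails"]),
}
# Assumed, illustrative basic-event probabilities.
PROB = {"power_loss": 1e-4, "pump_a_fails": 1e-2, "pump_b_fails": 1e-2}

def cut_sets(node):
    """Return the cut sets of `node` as a set of frozensets of basic events."""
    if node not in TREE:
        return {frozenset([node])}
    kind, inputs = TREE[node]
    child_sets = [cut_sets(child) for child in inputs]
    if kind == "OR":
        # Any cut set of any input causes the gate output.
        return set().union(*child_sets)
    # AND: take one cut set from each input and merge them.
    return {frozenset().union(*combo) for combo in product(*child_sets)}

def minimal(sets):
    """Drop every cut set that strictly contains another cut set."""
    return {s for s in sets if not any(other < s for other in sets)}

def top_probability(mcs, prob):
    """Exact top-event probability by enumerating all basic-event states."""
    events = sorted(prob)
    total = 0.0
    for states in product([False, True], repeat=len(events)):
        failed = {e for e, s in zip(events, states) if s}
        if any(cs <= failed for cs in mcs):
            weight = 1.0
            for e, s in zip(events, states):
                weight *= prob[e] if s else 1.0 - prob[e]
            total += weight
    return total

mcs = minimal(cut_sets("TOP"))
single_points = [cs for cs in mcs if len(cs) == 1]  # single-failure causes
p_top = top_probability(mcs, PROB)
print(sorted(sorted(cs) for cs in mcs), p_top)
```

Here the qualitative result is that `power_loss` is a minimal cut set of size one, so a single failure can cause the top event, while the two pumps must fail together.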

Some industries use both fault trees and event trees. An event tree starts from an undesired initiator (loss of critical supply, component failure etc.) and follows possible further system events through to a series of final consequences. As each new event is considered, a new node on the tree is added with a split of probabilities of taking either branch. The probabilities of a range of "top events" arising from the initial event can then be seen.
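The branching described above can be sketched as follows. This is a simplified illustration with hypothetical barrier names and assumed numbers; it queries every barrier on every path, whereas practical event trees often prune branches where a later barrier is irrelevant, and it treats the branch probabilities as independent.

```python
from itertools import product

# Assumed, illustrative values.
INITIATOR_FREQ = 1e-2  # e.g. loss of critical supply, per year
# Safety functions challenged in order: (name, probability of failure on demand)
BARRIERS = [("backup supply starts", 0.05), ("operator recovers", 0.1)]

def event_tree(initiator_freq, barriers):
    """Enumerate every path through the tree.

    Each barrier adds a node with a success branch (True) and a failure
    branch (False); the path frequency is the initiator frequency times
    the probability of each branch taken.
    """
    paths = {}
    for outcome in product([True, False], repeat=len(barriers)):
        freq = initiator_freq
        for (_name, p_fail), ok in zip(barriers, outcome):
            freq *= (1.0 - p_fail) if ok else p_fail
        paths[outcome] = freq
    return paths

paths = event_tree(INITIATOR_FREQ, BARRIERS)
for outcome, freq in paths.items():
    print(outcome, f"{freq:.2e} per year")
```

The path frequencies always sum to the initiator frequency, which is a useful sanity check; the worst path here, where both barriers fail, has a frequency of about 5e-5 per year under the assumed inputs.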

Safety certification

Usually a failure in safety-certified systems is considered acceptable if, on average, fewer than one life per 10^9 hours of continuous operation is lost to failure (as per FAA Advisory Circular AC 25.1309-1A). Most Western nuclear reactors, medical equipment, and commercial aircraft are certified to this level. The cost versus loss of lives has been considered appropriate at this level (by the FAA for aircraft systems under the Federal Aviation Regulations).[2][3][4]

Preventing failure

Once a failure mode is identified, it can usually be mitigated by adding extra or redundant equipment to the system. For example, nuclear reactors contain dangerous radiation, and nuclear reactions can cause so much heat that no substance might contain them. Therefore, reactors have emergency core cooling systems to keep the temperature down, shielding to contain the radiation, and engineered barriers (usually several, nested, surmounted by a containment building) to prevent accidental leakage. Safety-critical systems are commonly required to permit no single event or component failure to result in a catastrophic failure mode.

Most biological organisms have a certain amount of redundancy: multiple organs, multiple limbs, etc.

For any given failure, a fail-over or redundancy can almost always be designed and incorporated into a system.

There are two categories of techniques to reduce the probability of failure: Fault avoidance techniques increase the reliability of individual items (increased design margin, de-rating, etc.). Fault tolerance techniques increase the reliability of the system as a whole (redundancies, barriers, etc.).[5]

Safety and reliability

Safety engineering and reliability engineering have much in common, but safety is not reliability. If a medical device fails, it should fail safely; other alternatives will be available to the surgeon. If the engine on a single-engine aircraft fails, there is no backup. Electrical power grids are designed for both safety and reliability; telephone systems are designed for reliability, which becomes a safety issue when emergency (e.g. US "911") calls are placed.

Probabilistic risk assessment has created a close relationship between safety and reliability. Component reliability, generally defined in terms of component failure rate, and external event probability are both used in quantitative safety assessment methods such as FTA. Related probabilistic methods are used to determine system Mean Time Between Failure (MTBF), system availability, or probability of mission success or failure. Reliability analysis has a broader scope than safety analysis, in that non-critical failures are considered. On the other hand, higher failure rates are considered acceptable for non-critical systems.

Safety generally cannot be achieved through component reliability alone. Catastrophic failure probabilities of 10^−9 per hour correspond to the failure rates of very simple components such as resistors or capacitors. A complex system containing hundreds or thousands of components might be able to achieve an MTBF of 10,000 to 100,000 hours, meaning it would fail at a rate of 10^−4 or 10^−5 per hour. If a system failure is catastrophic, usually the only practical way to achieve a failure rate of 10^−9 per hour is through redundancy.
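The order-of-magnitude argument can be made concrete with a short calculation. This is a rough sketch under strong assumptions: the function names are hypothetical, the per-hour failure rate is treated as a per-hour failure probability, channels are assumed fully independent (no common cause failures), and the system is assumed to fail only if every channel fails in the same hour.

```python
def failure_rate_from_mtbf(mtbf_hours):
    """Constant failure rate implied by an MTBF, in failures per hour."""
    return 1.0 / mtbf_hours

def channels_needed(channel_rate_per_hour, target_per_hour):
    """Smallest number n of independent redundant channels with rate**n <= target.

    Crude approximation: ignores common cause failures, repair, and
    exposure time, so it only illustrates the scaling.
    """
    if not 0.0 < channel_rate_per_hour < 1.0:
        raise ValueError("channel rate must be between 0 and 1 per hour")
    n = 1
    while channel_rate_per_hour ** n > target_per_hour:
        n += 1
    return n

for mtbf in (10_000, 100_000):
    rate = failure_rate_from_mtbf(mtbf)
    print(mtbf, rate, channels_needed(rate, 1e-9))
```

Under these assumptions a channel with an MTBF of 10,000 hours needs three-fold redundancy to reach 10^−9 per hour, and a channel with an MTBF of 100,000 hours needs two-fold redundancy. In practice common cause failures limit how much each added channel helps.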

When adding equipment is impractical (usually because of expense), then the least expensive form of design is often "inherently fail-safe". That is, change the system design so its failure modes are not catastrophic. Inherent fail-safes are common in medical equipment, traffic and railway signals, communications equipment, and safety equipment.

The typical approach is to arrange the system so that ordinary single failures cause the mechanism to shut down in a safe way (for nuclear power plants, this is termed a passively safe design, although more than ordinary failures are covered). Alternatively, if the system contains a hazard source such as a battery or rotor, then it may be possible to remove the hazard from the system so that its failure modes cannot be catastrophic. The U.S. Department of Defense Standard Practice for System Safety (MIL-STD-882) places the highest priority on elimination of hazards through design selection.[6]

One of the most common fail-safe systems is the overflow tube in baths and kitchen sinks. If the valve sticks open, rather than causing an overflow and damage, the tank spills into an overflow. Another common example is that in an elevator the cable supporting the car keeps spring-loaded brakes open. If the cable breaks, the brakes grab rails, and the elevator cabin does not fall.

Some systems can never be made fail-safe, because continuous availability is needed. For example, loss of engine thrust in flight is dangerous. Redundancy, fault tolerance, or recovery procedures are used in these situations (e.g. multiple independently controlled and fuel-fed engines). This also makes the system less sensitive to reliability prediction errors or quality-induced uncertainty in the individual items. On the other hand, failure detection and correction and the avoidance of common cause failures become increasingly important here to ensure system-level reliability.[7]

See also

- ARP4761

- Earthquake engineering

- Effective safety training

- Forensic engineering

- Hazard and operability study

- IEC 61508

- Loss-control consultant

- Nuclear safety

- Occupational medicine

- Occupational safety and health

- Process safety management

- Reliability engineering

- Risk assessment

- Risk management

- Safety life cycle

- Zonal safety analysis

References

Notes

- ^ Kokcharov I. Structural Safety http://www.kokch.kts.ru/me/t6/SIA_6_Structural_Safety.pdf

- ^ ANM-110 (1988). System Design and Analysis (pdf). Federal Aviation Administration. Advisory Circular AC 25.1309-1A. Retrieved 2011-02-20.

- ^ S–18 (2010). Guidelines for Development of Civil Aircraft and Systems. Society of Automotive Engineers. ARP4754A.

- ^ S–18 (1996). Guidelines and methods for conducting the safety assessment process on civil airborne systems and equipment. Society of Automotive Engineers. ARP4761.

- ^ Tommaso Sgobba. "Commercial Space Safety Standards: Let’s Not Re-Invent the Wheel". 2015.

- ^ Standard Practice for System Safety (pdf). E. U.S. Department of Defense. 1998. MIL-STD-882. Retrieved 2012-05-11.

- ^ Bornschlegl, Susanne (2012). Ready for SIL 4: Modular Computers for Safety-Critical Mobile Applications (pdf). MEN Mikro Elektronik. Retrieved 2015-09-21.

Sources

- Lees, Frank (2005). Loss Prevention in the Process Industries (3 ed.). Elsevier. ISBN 978-0-7506-7555-0.

- Kletz, Trevor (1984). Cheaper, safer plants, or wealth and safety at work: notes on inherently safer and simpler plants. I.Chem.E. ISBN 0-85295-167-1.

- Kletz, Trevor (2001). An Engineer’s View of Human Error (3 ed.). I.Chem.E. ISBN 0-85295-430-1.

- Kletz, Trevor (1999). HAZOP and HAZAN (4 ed.). Taylor & Francis. ISBN 0-85295-421-2.

- Lutz, Robyn R. (2000). Software Engineering for Safety: A Roadmap (PDF). The Future of Software Engineering. ACM Press. ISBN 1-58113-253-0. Retrieved 31 August 2006.

- Grunske, Lars; Kaiser, Bernhard; Reussner, Ralf H. (2005). "Specification and Evaluation of Safety Properties in a Component-based Software Engineering Process". Springer. CiteSeerX 10.1.1.69.7756.

- US DOD (10 February 2000). Standard Practice for System Safety (PDF). Washington, DC: US DOD. MIL-STD-882D. Retrieved 7 September 2013.

- US FAA (30 December 2000). System Safety Handbook. Washington, DC: US FAA. Retrieved 7 September 2013.

- NASA (16 December 2008). Agency Risk Management Procedural Requirements. NASA. NPR 8000.4A.

- Leveson, Nancy (2011). Engineering a Safer World - Systems Thinking Applied To Safety. Engineering Systems. The MIT Press. ISBN 978-0-262-01662-9. Retrieved 3 July 2012.