User talk:Janhp78

Facto Post – Issue 10 – 12 March 2018

Milestone for mix'n'match

Around the time in February when Wikidata clicked past item Q50000000, another milestone was reached: the mix'n'match tool uploaded its 1000th dataset. Concisely defined by its author, Magnus Manske, it works "to match entries in external catalogs to Wikidata". The total number of entries is now well into eight figures, and more are constantly being added: a couple of new catalogs each day is normal. Since the end of 2013, mix'n'match has gradually come to play a significant part in adding statements to Wikidata, particularly in areas with the flavour of digital humanities, though datasets can of course be about practically anything. There is a catalog on skyscrapers, and two on spiders. These days mix'n'match can be used in numerous modes, from the relaxed gamified click through a catalog looking for matches, with prompts, to the fantastically useful and often demanding search across all catalogs. I'll type that again: you can search 1000+ datasets from the simple box at the top right. The drop-down menu top left offers "creation candidates", Magnus's personal favourite. See m:Mix'n'match/Manual for more. For the Wikidatan, a key point is that these matches, however carried out, add statements to Wikidata if, and naturally only if, there is a Wikidata property associated with the catalog. For everyone, however, the hands-on experience of deciding what is a good match is an education in a scholarly area, biographical catalogs being particularly fraught. Underpinning recent rapid progress is an open infrastructure for scraping and uploading. Congratulations to Magnus, our data Stakhanovite!

Editor Charles Matthews, for ContentMine. Please leave feedback for him. Back numbers are here. Reminder: WikiFactMine pages on Wikidata are at WD:WFM. If you wish to receive no further issues of Facto Post, please remove your name from our mailing list. Alternatively, to opt out of all massmessage mailings, you may add Category:Wikipedians who opt out of message delivery to your user talk page.

Newsletter delivered by MediaWiki message delivery

MediaWiki message delivery (talk) 12:26, 12 March 2018 (UTC)

Facto Post – Issue 11 – 9 April 2018

The 100 Skins of the Onion

Open Citations Month, with its eminently guessable hashtag, is upon us. We should be utterly grateful that in the past 12 months, so much data on which papers cite which other papers has been made open, and that Wikidata is playing its part in hosting it as "cites" statements. At the time of writing, there are 15.3M Wikidata items that can do that. Pulling back to look at open access papers in the large, though, there is less reason for celebration. Access in theory does not yet equate to practical access. A recent LSE IMPACT blogpost puts that issue down to "heterogeneity". A useful euphemism to save us from thinking that the whole concept doesn't fall into the realm of the oxymoron. Some home truths: aggregation is not content management, if it falls short on reusability. The PDF file format is wedded to how humans read documents, not how machines ingest them. The salami-slicer is our friend in the current downloading of open access papers, but for a better metaphor, think about skinning an onion, laboriously, 100 times with diminishing returns. There are of the order of 100 major publisher sites hosting open access papers, and the predominant offer there is still a PDF. From the discoverability angle, Wikidata's bibliographic resources combined with the SPARQL query are superior in principle, by far, to existing keyword searches run over papers. Open access content should be managed into consistent HTML, something that is currently strenuous. The good news, such as it is, would be that much of it is already in XML. The organisational problem of removing further skins from the onion, with sensible prioritisation, is certainly not insuperable. The CORE group (the bloggers in the LSE posting) has some answers, but actually not all that is needed for the text and data mining purposes they highlight. The long tail, or in other words the onion heart when it has become fiddly beyond patience to skin, does call for a pis aller. But the real knack is to do more between the XML and the heart.

MediaWiki message delivery (talk) 16:25, 9 April 2018 (UTC)

Facto Post – Issue 12 – 28 May 2018

ScienceSource funded

The Wikimedia Foundation announced full funding of the ScienceSource grant proposal from ContentMine on May 18. See the ScienceSource Twitter announcement and 60-second video.

The proposal includes downloading 30,000 open access papers, aiming (roughly speaking) to create a baseline for medical referencing on Wikipedia. It leaves open the question of how these are to be chosen. The basic criteria of WP:MEDRS include a concentration on secondary literature. Attention has to be given to the long tail of diseases that receive less current research. The MEDRS guideline supposes that edge cases will have to be handled, and the premature exclusion of publications that would be in those marginal positions would reduce the value of the collection. Prophylaxis misses the point that gate-keeping will be done by an algorithm. Two well-known but rather different areas where such considerations apply are tropical diseases and alternative medicine. There are also a number of potential downloading troubles, and these were mentioned in Issue 11. There is likely to be a gap, even with the guideline, between conditions taken to be necessary but not sufficient, and conditions sufficient but not necessary, for candidate papers to be included. With around 10,000 recognised medical conditions in standard lists, being comprehensive is demanding. With all of these aspects of the task, ScienceSource will seek community help.

Reminder: WikiFactMine pages on Wikidata are at WD:WFM. ScienceSource pages will be announced there, and in this mass message.

MediaWiki message delivery (talk) 10:16, 28 May 2018 (UTC)

Facto Post – Issue 13 – 29 June 2018

The Editor is Charles Matthews, for ContentMine. Please leave feedback for him, on his User talk page.

To subscribe to Facto Post go to Wikipedia:Facto Post mailing list. For the ways to unsubscribe, see the footer.

Facto Post enters its second year, with a Cambridge Blue (OK, Aquamarine) background, a new logo, but no Cambridge blues. On-topic for the ScienceSource project is a project page here. It contains some case studies on how the WP:MEDRS guideline, for the referencing of articles at all related to human health, is applied in typical discussions. Close to home also, a template, called {{medrs}} for short, is used to express dissatisfaction with particular references. Technology can help with patrolling, and this Petscan query finds over 450 articles where there is at least one use of the template. Of course the template is merely suggesting there is a possible issue with the reliability of a reference. Deciding the truth of the allegation is another matter. This maintenance issue is one example of where ScienceSource aims to help. Where the reference is to a scientific paper, its type of algorithm could give a pass/fail opinion on such references. It could assist patrollers of medical articles, therefore, with the templated references and more generally. There may be more to proper referencing than that, indeed: context, quite what the statement supported by the reference expresses, prominence and weight. For that kind of consideration, case studies can help. But an algorithm might help to clear the backlog.

If you wish to receive no further issues of Facto Post, please remove your name from our mailing list. Alternatively, to opt out of all massmessage mailings, you may add Category:Wikipedians who opt out of message delivery to your user talk page.

MediaWiki message delivery (talk) 18:19, 29 June 2018 (UTC)

Facto Post – Issue 14 – 21 July 2018

Officially it is "bridging the gaps in knowledge", with Wikimania 2018 in Cape Town paying tribute to the southern African concept of ubuntu to implement it. Besides face-to-face interactions, Wikimedians do need their power sources. Facto Post interviewed Jdforrester, who has attended every Wikimania, and now works as Senior Product Manager for the Wikimedia Foundation. His take on tackling the gaps in the Wikimedia movement is that "if we were an army, we could march in a column and close up all the gaps". In his view though, that is a faulty metaphor, and it leads to a complete misunderstanding of the movement, its diversity and different aspirations, and the nature of the work as "fighting" to be done in the open sector. There are many fronts, and as an eventualist he feels the gaps experienced both by editors and by users of Wikimedia content are inevitable. He would like to see a greater emphasis on reuse of content, not simply its volume. If that may not sound like radicalism, the Decolonizing the Internet conference here, organized jointly with Whose Knowledge?, can redress the picture. It comes with the claim to be "the first ever conference about centering marginalized knowledge online".

MediaWiki message delivery (talk) 06:10, 21 July 2018 (UTC)

Facto Post – Issue 15 – 21 August 2018

To grasp the nettle, there are rare diseases, there are tropical diseases and then there are "neglected diseases". Evidently a rare enough disease is likely to be neglected, but neglected disease these days means a disease not rare, but tropical, and most often infectious or parasitic. Rare diseases as a group are dominated, in contrast, by genetic diseases. A major aspect of neglect is found in tracking drug discovery. Orphan drugs are those developed to treat rare diseases (rare enough not to have market-driven research), but there is some overlap in practice with the WHO's neglected diseases, where snakebite, a "neglected public health issue", is on the list. From an encyclopedic point of view, lack of research also may mean lack of high-quality references: the core medical literature differs from primary research, since it operates by aggregating trials. This bibliographic deficit clearly hinders Wikipedia's mission. The ScienceSource project is currently addressing this issue, on Wikidata. Its Wikidata focus list at WD:SSFL is trying to ensure that neglect does not turn into bias in its selection of science papers.

MediaWiki message delivery (talk) 13:23, 21 August 2018 (UTC)

Facto Post – Issue 16 – 30 September 2018

In an ideal world ... no, bear with your editor for just a minute ... there would be a format for scientific publishing online that was as much a standard as SI units are for the content. Likewise cataloguing publications would not be onerous, because part of the process would be to generate uniform metadata. Without claiming it could be the mythical free lunch, it might reasonably be argued that sandwiches can be packaged much alike and have barcodes, whatever the fillings. The best on offer, to stretch the metaphor, is the meal kit option, in the form of XML. Where scientific papers are delivered as XML downloads, you get all the ingredients ready to cook. But you have to prepare the actual meal of slow food yourself. See Scholarly HTML for a recent pass at heading off XML with HTML, in other words in the native language of the Web. The argument from real life is a traditional mixture of frictional forces, vested interests, and the classic irony of the principle of unripe time. On the other hand, discoverability actually diminishes with the prolific progress of science publishing. No, it really doesn't scale. Wikimedia as a movement can do something in such cases. We know from open access, we grok the Web, we have our own horse in the HTML race, we have Wikidata and WikiJournal, and we have the chops to act.

MediaWiki message delivery (talk) 17:57, 30 September 2018 (UTC)

Facto Post – Issue 17 – 29 October 2018

Around 2.7 million Wikidata items have an illustrative image. These files, you might say, are Wikimedia's stock images, and if the number is large, it is still only 5% or so of items that have one. All such images are taken from Wikimedia Commons, which has 50 million media files. One key issue is how to expand the stock. Indeed, there is a tool. WD-FIST exploits the fact that each Wikipedia is differently illustrated, mostly with images from Commons but also with fair use images. An item that has sitelinks but no illustrative image can be tested to see if the linked wikis have a suitable one. This works well for a volunteer who wants to add images at a reasonable scale, and a small amount of SPARQL knowledge goes a long way in producing checklists.  It should be noted, though, that there are currently 53 Wikidata properties that link to Commons, of which P18 for the basic image is just one. WD-FIST prompts the user to add signatures, plaques, pictures of graves and so on. There are a couple of hundred monograms, mostly of historical figures, and this query allows you to view all of them. commons:Category:Monograms and its subcategories provide rich scope for adding more. And so it is generally. The list of properties linking to Commons does contain a few that concern video and audio files, and rather more for maps. But it contains gems such as P3451 for "nighttime view". Over 1000 of those on Wikidata, but as for so much else, there could be yet more. Go on. Today is Wikidata's birthday. An illustrative image is always an acceptable gift, so why not add one? You can follow these easy steps: (i) log in at https://tools.wmflabs.org/widar/, (ii) paste the Petscan ID 6263583 into https://tools.wmflabs.org/fist/wdfist/ and click run, and (iii) just add cake.
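As a rough illustration of how a small amount of SPARQL can produce an image checklist, here is a minimal Python sketch that only builds the query text. It is not the query behind WD-FIST or the Petscan ID above. The function name `missing_image_checklist` is hypothetical, and the use of P31 ("instance of") and Q5 ("human") to pick a class of items is an assumption added for the example; only P18 comes from the text.

```python
import re


def missing_image_checklist(class_qid: str, limit: int = 100) -> str:
    """Build SPARQL text listing items of one class that lack a P18 image.

    Hypothetical helper for illustration; it only returns a string and
    does not contact any server.
    """
    # Accept only well-formed item identifiers such as "Q5".
    if not re.fullmatch(r"Q[1-9]\d*", class_qid):
        raise ValueError(f"not a Wikidata item ID: {class_qid!r}")
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return (
        "SELECT ?item ?itemLabel WHERE {\n"
        f"  ?item wdt:P31 wd:{class_qid} .\n"
        # Keep only items with no basic image statement.
        "  FILTER NOT EXISTS { ?item wdt:P18 ?image }\n"
        '  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }\n'
        "}\n"
        f"LIMIT {limit}"
    )
```

Calling `print(missing_image_checklist("Q5", 10))` gives text that can be pasted into the Wikidata query service. For very large classes a query of this shape may well time out, so a narrower class is probably a better starting point.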

MediaWiki message delivery (talk) 15:01, 29 October 2018 (UTC)

Facto Post – Issue 18 – 30 November 2018

GLAM ♥ data — what is a gallery, library, archive or museum without a catalogue? It follows that Wikidata must love librarians. Bibliography supports students and researchers in any topic, but open and machine-readable bibliographic data even more so, outside the silo. Cue the WikiCite initiative, which was meeting in conference this week, in the Bay Area of California.  In fact there is a broad scope: "Open Knowledge Maps via SPARQL" and the "Sum of All Welsh Literature", identification of research outputs, Library.Link Network and Bibframe 2.0, OSCAR and LUCINDA (who they?), OCLC and Scholia, all these co-exist on the agenda. Certainly more library science is coming Wikidata's way. That poses the question about the other direction: is more Wikimedia technology advancing on libraries? Good point. Wikimedians generally are not aware of the tech background that can be assumed, unless they are close to current training for librarians. A baseline definition is useful here: "bash, git and OpenRefine". Compare and contrast with pywikibot, GitHub and mix'n'match. Translation: scripting for automation, version control, data set matching and wrangling in the large, are on the agenda also for contemporary library work. Certainly there is some possible common ground here. Time to understand rather more about the motivations that operate in the library sector.

Account creation is now open on the ScienceSource wiki, where you can see SPARQL visualisations of text mining.

MediaWiki message delivery (talk) 11:20, 30 November 2018 (UTC)

Facto Post – Issue 19 – 27 December 2018

Zotero is free software for reference management by the Center for History and New Media: see Wikipedia:Citing sources with Zotero. It is also an active user community, and has broad-based language support. Besides the handiness of Zotero's warehousing of personal citation collections, the Zotero translator underlies the citoid service, at work behind the VisualEditor. Metadata from Wikidata can be imported into Zotero; and in the other direction the zotkat tool from the University of Mannheim allows Zotero bibliographies to be exported to Wikidata, by item creation. With an extra feature to add statements, that route could lead to much development of the focus list (P5008) tagging on Wikidata, by WikiProjects. There is also a large-scale encyclopedic dimension here. The construction of Zotero translators is one facet of Web scraping that has a strong community and open source basis. In that it resembles the less formal mix'n'match import community, and growing networks around other approaches that can integrate datasets into Wikidata, such as the use of OpenRefine. Looking ahead, the thirtieth birthday of the World Wide Web falls in 2019, and yet the ambition to make webpages routinely readable by machines can still seem an ever-retreating mirage. Wikidata should not only be helping Wikimedia integrate its projects, an ongoing process represented by Structured Data on Commons and lexemes. It should also be acting as a catalyst to bring scraping in from the cold, with institutional strengths as well as resourceful code.

Diversitech, the latest ContentMine grant application to the Wikimedia Foundation, is in its community review stage until January 2.

MediaWiki message delivery (talk) 19:08, 27 December 2018 (UTC)

Facto Post – Issue 20 – 31 January 2019

Recently Jimmy Wales has made the point that computer home assistants take much of their data from Wikipedia, one way or another. So as well as getting Spotify to play Frosty the Snowman for you, they may be able to answer the question "is the Pope Catholic?" Possibly by asking for disambiguation (Coptic?). Headlines about data breaches are now familiar, but the unannounced circulation of information raises other issues. One of those is Gresham's law stated as "bad data drives out good". Wikipedia and now Wikidata have been criticised on related grounds: what if their content, unattributed, is taken to have a higher standing than Wikimedians themselves would grant it? See Wikiquote on a misattribution to Bismarck for the usual quip about "law and sausages", and why one shouldn't watch them in the making. Wikipedia has now turned 18, so should act like an adult, as well as being treated like one. The Web itself turns 30 some time between March and November this year, per Tim Berners-Lee. If the Knowledge Graph by Google exemplifies Heraclitean Web technology gaining authority, contra GIGO, Wikimedians still have a role in its critique. But not just with the teenage skill of detecting phoniness. There is more to beating Gresham than exposing the factoid and urban myth, where WP:V does do a great job. Placeholders must be detected, and working with Wikidata is a good way to understand how having one statement as data can blind us to replacing it by a more accurate one. An example that is important to open access is that, firstly, the term itself needs considerable unpacking, because just being able to read material online is a poor relation of "open"; and secondly, trying to get Creative Commons license information into Wikidata shows up issues with classes of license (such as CC-BY) standing for the actual license in major repositories. Detailed investigation shows that "everything flows" exacerbates the issue. But Wikidata can solve it.

MediaWiki message delivery (talk) 10:53, 31 January 2019 (UTC)

Facto Post – Issue 21 – 28 February 2019

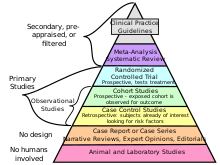

Systematic reviews are basic building blocks of evidence-based medicine, surveys of existing literature devoted typically to a definite question that aim to bring out scientific conclusions. They are principled in a way Wikipedians can appreciate, taking a critical view of their sources.  Ben Goldacre in 2014 wrote (link below) "[...] : the "information architecture" of evidence based medicine (if you can tolerate such a phrase) is a chaotic, ad hoc, poorly connected ecosystem of legacy projects. In some respects the whole show is still run on paper, like it's the 19th century." Is there a Wikidatan in the house? Wouldn't some machine-readable content that is structured data help? Most likely it would, but the arcana of systematic reviews and how they add value would still need formal handling. The PRISMA standard dates from 2009, with an update started in 2018. The concerns there include the corpus of papers used: how selected and filtered? Now that Wikidata has a 20.9 million item bibliography, one can at least pose questions. Each systematic review is a tagging opportunity for a bibliography. Could that tagging be reproduced by a query, in principle? Can it even be second-guessed by a query (i.e. simulated by a protocol which translates into SPARQL)? Homing in on the arcana, do the inclusion and filtering criteria translate into metadata? At some level they must, but are these metadata explicitly expressed in the articles themselves? The answer to that is surely "no" at this point, but can TDM find them? Again "no", right now. Automatic identification doesn't just happen. Actually these questions lack originality. It should be noted though that WP:MEDRS, the reliable sources guideline used here for health information, hinges on the assumption that the usefully systematic reviews of biomedical literature can be recognised. 
Its nutshell summary, normally the part of a guideline with the highest density of common sense, allows validity to literature reviews in general, but WP:MEDASSESS qualifies that indication heavily. Process wonkery about systematic reviews definitely has merit.

MediaWiki message delivery (talk) 10:02, 28 February 2019 (UTC)

Facto Post – Issue 22 – 28 March 2019

Half a century ago, it was the era of the mainframe computer, with its air-conditioned room, twitching tape-drives, and appearance in the title of a spy novel, Billion-Dollar Brain, then made into a Hollywood film. Now we have the cloud, with server farms and the client–server model as quotidian: this text is being typed on a Chromebook. The term Application Programming Interface or API is 50 years old, and refers to a type of software library as well as the interface to its use. While a compiler is what you need to get high-level code executed by a mainframe, an API out in the cloud somewhere offers a chance to perform operations on a remote server. For example, the multifarious bots active on Wikipedia have owners who exploit the MediaWiki API. APIs (called RESTful) that allow for the GET HTTP request are fundamental for what could colloquially be called "moving data around the Web"; from which Wikidata benefits 24/7. So the fact that the Wikidata SPARQL endpoint at query.wikidata.org has a RESTful API means that, in lay terms, Wikidata content can be GOT from it. The programming involved, besides the SPARQL language, could be in Python, younger by a few months than the Web. Magic words, such as occur in fantasy stories, are wishful (rather than RESTful) solutions to gaining access. You may need to be a linguist to enter Ali Baba's cave or the western door of Moria (French, in fact, in the case of "Open Sesame", and Sindarin, being the respective languages). Talking to an API requires a bigger toolkit, which first means you have to recognise the tools in terms of what they can do. On the way to the impactful or polymathic modern handling of facts, one must perhaps take only tactful notice of tech's endemic problem with documentation, and absorb the insightful point that the code in APIs does articulate the customary procedures now in place on the cloud for getting information. As Owl explained to Winnie-the-Pooh, it tells you The Thing to Do.
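To make "Wikidata content can be GOT" concrete, here is a minimal Python sketch using only the standard library. It assumes the endpoint path `https://query.wikidata.org/sparql` and the `query` and `format=json` parameters. The function names `build_get_url` and `run_query` and the User-Agent string are hypothetical, chosen for illustration only.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Assumed endpoint path on the host named in the text.
ENDPOINT = "https://query.wikidata.org/sparql"


def build_get_url(sparql: str, endpoint: str = ENDPOINT) -> str:
    """Return the URL for a GET request carrying a SPARQL query."""
    # urlencode percent-encodes the query so it is safe inside a URL.
    return endpoint + "?" + urlencode({"query": sparql, "format": "json"})


def run_query(sparql: str, user_agent: str = "FactoPostExample/0.1 (illustrative)"):
    """Send the GET request and return the list of result bindings."""
    request = Request(build_get_url(sparql), headers={"User-Agent": user_agent})
    with urlopen(request, timeout=30) as response:
        return json.load(response)["results"]["bindings"]
```

With network access, `run_query("SELECT ?item WHERE { ?item wdt:P31 wd:Q5 } LIMIT 3")` would be expected to return a short list of dictionaries, one per result row. Building the URL separately from sending it means the same string can be pasted into a browser, which is GET at its plainest.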

MediaWiki message delivery (talk) 11:45, 28 March 2019 (UTC)

Facto Post – Issue 23 – 30 April 2019

Talk of cloud computing draws a veil over hardware, but also, less obviously but more importantly, obscures such intellectual distinction as matters most in its use. Wikidata begins to allow tasks to be undertaken that were out of easy reach. The facility should not be taken as the real point. Coming in from another angle, the "executive decision" is more glamorous; but the "administrative decision" should be admired for its command of facts. Think of the attitudes ad fontes, so prevalent here on Wikipedia as "can you give me a source for that?", and being prepared to deal with complicated analyses into specified subcases. Impatience expressed as a disdain for such pedantry is quite understandable, but neither dirty data nor false dichotomies are at all good to have around. Issue 13 and Issue 21, respectively on WP:MEDRS and systematic reviews, talk about biomedical literature and computing tasks that would be of higher quality if they could be made more "administrative". For example, it is desirable that the decisions involved be consistent, explicable, and reproducible by non-experts from specified inputs. What gets clouded out is not impossibly hard to understand. You do need to put together the insights of functional programming, which is a doctrinaire and purist but clearcut approach, with the practicality of office software. Loopless computation can be conceived of as a seamless forward march of spreadsheet columns, each determined by the content of previous ones. Very well: to do a backward audit, when now we are talking about Wikidata, we rely on integrity of data and its scrupulous sourcing: and clearcut case analyses. The MEDRS example forces attention on purge attempts such as Beall's list.

MediaWiki message delivery (talk) 11:27, 30 April 2019 (UTC)

Facto Post – Issue 24 – 17 May 2019

Two dozen issues, and this may be the last, a valediction at least for a while. It's time for a two-year summation of ContentMine projects involving TDM (text and data mining). Wikidata and now Structured Data on Commons represent the overlap of Wikimedia with the Semantic Web. This common ground is helping to convert an engineering concept into a movement. TDM generally has little enough connection with the Semantic Web, being instead in the orbit of machine learning which is no respecter of the semantic. Don't break a taboo by asking bots "and what do you mean by that?" The ScienceSource project innovates in TDM, by storing its text mining results in a Wikibase site. It strives for compliance of its fact mining, on drug treatments of diseases, with an automated form of the relevant Wikipedia referencing guideline MEDRS. Where WikiFactMine set up an API for reuse of its results, ScienceSource has a SPARQL query service, with look-and-feel exactly that of Wikidata's at query.wikidata.org. It also now has a custom front end, and its content can be federated, in other words used in data mashups: it is one of over 50 sites that can federate with Wikidata. The human factor comes to bear through the front end, which combines a link to the HTML version of a paper, text mining results organised in drug and disease columns, and a SPARQL display of nearby drug and disease terms. Much software to develop and explain, so little time! Rather than telling the tale, Facto Post brings you ScienceSource links, starting from the how-to video, lower right.

The review tool requires a login on sciencesource.wmflabs.org, and an OAuth permission (bottom of a review page) to operate. It can be used in simple and more advanced workflows. Examples of queries for the latter are at d:Wikidata_talk:ScienceSource project/Queries#SS_disease_list and d:Wikidata_talk:ScienceSource_project/Queries#NDF-RT issue. Please be aware that this is a research project in development, and may have outages for planned maintenance. That will apply for the next few days, at least. The ScienceSource wiki main page carries information on practical matters. Email is not enabled on the wiki: use site mail here to Charles Matthews in case of difficulty, or if you need support. Further explanatory videos will be put into commons:Category:ContentMine videos.
