Volume rendering

Added {{reflist}} |

Converted all references to inline citations, making it possible to use the citation tool in Wikipedia's built in text editor. Put the two references that was not used in a new section: Bibliography |

||

| Line 18: | Line 18: | ||

==Direct volume rendering== |

==Direct volume rendering== |

||

A direct volume renderer |

A direct volume renderer<ref name=_188>Marc Levoy, "Display of Surfaces from Volume Data", IEEE CG&A, May 1988. [http://graphics.stanford.edu/papers/volume-cga88/ Archive of Paper]</ref><ref name=dch88>Drebin, R.A., Carpenter, L., Hanrahan, P., "Volume Rendering", Computer Graphics, SIGGRAPH88. [http://portal.acm.org/citation.cfm?doid=378456.378484 DOI citation link]</ref> requires every sample value to be mapped to opacity and a color. This is done with a "transfer function" which can be a simple ramp, a piecewise linear function or an arbitrary table. Once converted to an '''RGBA''' (for red, green, blue, alpha) value, the composed RGBA result is projected on correspondent pixel of the frame buffer. The way this is done depends on the rendering technique. |

||

A combination of these techniques is possible. For instance, a shear warp implementation could use texturing hardware to draw the aligned slices in the [[off-screen buffer]]. |

A combination of these techniques is possible. For instance, a shear warp implementation could use texturing hardware to draw the aligned slices in the [[off-screen buffer]]. |

||

| Line 33: | Line 33: | ||

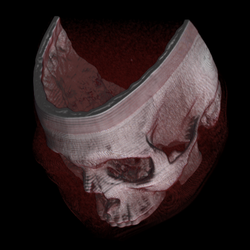

[[Image:volRenderShearWarp.gif|thumb|250px|Example of a mouse skull (CT) rendering using the shear warp algorithm]] |

[[Image:volRenderShearWarp.gif|thumb|250px|Example of a mouse skull (CT) rendering using the shear warp algorithm]] |

||

The shear warp approach to volume rendering was developed by Cameron and Undrill, popularized by Philippe Lacroute and [[Marc Levoy]]. |

The shear warp approach to volume rendering was developed by Cameron and Undrill, popularized by Philippe Lacroute and [[Marc Levoy]].<ref name=FVR>[http://graphics.stanford.edu/papers/shear/ "Fast Volume Rendering Using a Shear-Warp Factorization of the Viewing Transformation"]</ref> In this technique, the [[viewing transformation]] is transformed such that the nearest face of the volume becomes axis aligned with an off-screen [[image buffer]] with a fixed scale of voxels to pixels. The volume is then rendered into this buffer using the far more favorable memory alignment and fixed scaling and blending factors. Once all slices of the volume have been rendered, the buffer is then warped into the desired orientation and scaled in the displayed image. |

||

This technique is relatively fast in software at the cost of less accurate sampling and potentially worse image quality compared to ray casting. There is memory overhead for storing multiple copies of the volume, for the ability to have near axis aligned volumes. This overhead can be mitigated using run length encoding. |

This technique is relatively fast in software at the cost of less accurate sampling and potentially worse image quality compared to ray casting. There is memory overhead for storing multiple copies of the volume, for the ability to have near axis aligned volumes. This overhead can be mitigated using run length encoding. |

||

===Texture mapping=== |

===Texture mapping=== |

||

Many 3D graphics systems use [[texture mapping]] to apply images, or textures, to geometric objects. Commodity PC [[graphics cards]] are fast at texturing and can efficiently render slices of a 3D volume, with real time interaction capabilities. [[Workstation]] [[GPU]]s are even faster, and are the basis for much of the production volume visualization used in [[medical imaging]], oil and gas, and other markets (2007). In earlier years, dedicated 3D texture mapping systems were used on graphics systems such as [[Silicon Graphics]] [[InfiniteReality]], [[Hewlett-Packard|HP]] [[Visualize FX]] [[graphics accelerator]], and others. This technique was first described by [[Bill Hibbard]] and Dave Santek. |

Many 3D graphics systems use [[texture mapping]] to apply images, or textures, to geometric objects. Commodity PC [[graphics cards]] are fast at texturing and can efficiently render slices of a 3D volume, with real time interaction capabilities. [[Workstation]] [[GPU]]s are even faster, and are the basis for much of the production volume visualization used in [[medical imaging]], oil and gas, and other markets (2007). In earlier years, dedicated 3D texture mapping systems were used on graphics systems such as [[Silicon Graphics]] [[InfiniteReality]], [[Hewlett-Packard|HP]] [[Visualize FX]] [[graphics accelerator]], and others. This technique was first described by [[Bill Hibbard]] and Dave Santek.<ref name=HS89>Hibbard W., Santek D., [http://www.ssec.wisc.edu/~billh/p39-hibbard.pdf "Interactivity is the key"], ''Chapel Hill Workshop on Volume Visualization'', University of North Carolina, Chapel Hill, 1989, pp. 39–43.</ref> |

||

These slices can either be aligned with the volume and rendered at an angle to the viewer, or aligned with the viewing plane and sampled from unaligned slices through the volume. Graphics hardware support for 3D textures is needed for the second technique. |

These slices can either be aligned with the volume and rendered at an angle to the viewer, or aligned with the viewing plane and sampled from unaligned slices through the volume. Graphics hardware support for 3D textures is needed for the second technique. |

||

| Line 45: | Line 45: | ||

===Hardware-accelerated volume rendering=== |

===Hardware-accelerated volume rendering=== |

||

Due to the extremely parallel nature of direct volume rendering, special purpose volume rendering hardware was a rich research topic before [[GPU]] volume rendering became fast enough. The most widely cited technology was VolumePro |

Due to the extremely parallel nature of direct volume rendering, special purpose volume rendering hardware was a rich research topic before [[GPU]] volume rendering became fast enough. The most widely cited technology was VolumePro<ref name=phi99>Pfister H., Hardenbergh J., Knittel J., Lauer H., Seiler L.: ''The VolumePro real-time ray-casting system'' In Proceeding of SIGGRAPH99 [http://doi.acm.org/10.1145/311535.311563 DOI]</ref>, which used high memory bandwidth and brute force to render using the ray casting algorithm. |

||

A recently exploited technique to accelerate traditional volume rendering algorithms such as ray-casting is the use of modern graphics cards. Starting with the programmable [[pixel shader]]s, people recognized the power of parallel operations on multiple pixels and began to perform general purpose computations on the graphics chip ([[GPGPU]]). The [[pixel shader]]s are able to read and write randomly from video memory and perform some basic mathematical and logical calculations. These [[SIMD]] processors were used to perform general calculations such as rendering polygons and signal processing. In recent [[GPU]] generations, the pixel shaders now are able to function as [[MIMD]] processors (now able to independently branch) utilizing up to 1 GB of texture memory with floating point formats. With such power, virtually any algorithm with steps that can be performed in parallel, such as [[volume ray casting]] or [[tomographic reconstruction]], can be performed with tremendous acceleration. The programmable [[pixel shaders]] can be used to simulate variations in the characteristics of lighting, shadow, reflection, emissive color and so forth. Such simulations can be written using high level [[shading language]]s. |

A recently exploited technique to accelerate traditional volume rendering algorithms such as ray-casting is the use of modern graphics cards. Starting with the programmable [[pixel shader]]s, people recognized the power of parallel operations on multiple pixels and began to perform general purpose computations on the graphics chip ([[GPGPU]]). The [[pixel shader]]s are able to read and write randomly from video memory and perform some basic mathematical and logical calculations. These [[SIMD]] processors were used to perform general calculations such as rendering polygons and signal processing. In recent [[GPU]] generations, the pixel shaders now are able to function as [[MIMD]] processors (now able to independently branch) utilizing up to 1 GB of texture memory with floating point formats. With such power, virtually any algorithm with steps that can be performed in parallel, such as [[volume ray casting]] or [[tomographic reconstruction]], can be performed with tremendous acceleration. The programmable [[pixel shaders]] can be used to simulate variations in the characteristics of lighting, shadow, reflection, emissive color and so forth. Such simulations can be written using high level [[shading language]]s. |

||

| Line 53: | Line 53: | ||

===Empty space skipping=== |

===Empty space skipping=== |

||

Often, a volume rendering system will have a system for identifying regions of the volume containing no visible material. This information can be used to avoid rendering these transparent regions. |

Often, a volume rendering system will have a system for identifying regions of the volume containing no visible material. This information can be used to avoid rendering these transparent regions.<ref name=shn03>Sherbondy A., Houston M., Napel S.: ''Fast volume segmentation with simultaneous visualization using programmable graphics hardware.'' In Proceedings of IEEE Visualization (2003), pp. 171–176.</ref> |

||

===Early ray termination=== |

===Early ray termination=== |

||

This is a technique used when the volume is rendered in front to back order. For a ray through a pixel, once sufficient dense material has been encountered, further samples will make no significant contribution to the pixel and so may be [[Antiportal| |

This is a technique used when the volume is rendered in front to back order. For a ray through a pixel, once sufficient dense material has been encountered, further samples will make no significant contribution to the pixel and so may be [[Antiportal|neglected]]. |

||

===Octree and BSP space subdivision=== |

===Octree and BSP space subdivision=== |

||

| Line 68: | Line 68: | ||

===Pre-integrated volume rendering=== |

===Pre-integrated volume rendering=== |

||

Pre-integrated volume rendering |

Pre-integrated volume rendering<ref name=mhc90>Max N., Hanrahan P., Crawfis R.: ''Area and volume coherence for efficient visualization of 3D scalar functions.'' In Computer Graphics (San Diego Workshop on Volume Visualization, 1990) vol. 24, pp. 27–33.</ref><ref name=smb94>Stein C., Backer B., Max N.: ''Sorting and hardware assisted rendering for volume visualization.'' In Symposium on Volume Visualization (1994), pp. 83–90.</ref> is a method that can reduce sampling artifacts by pre-computing much of the required data. It is especially useful in hardware-accelerated applications<ref name=eke01>Engel K., Kraus M., Ertl T.: ''High-quality pre-integrated volume rendering using hardware-accelerated pixel shading.'' In Proceedings of Eurographics/SIGGRAPH Workshop on Graphics Hardware (2001), pp. 9–16.</ref><ref name=lwm04>Lum E., Wilson B., Ma K.: ''High-Quality Lighting and Efficient Pre-Integration for Volume Rendering.'' In Eurographics/IEEE Symposium on Visualization 2004.</ref> because it improves quality without a large performance impact. Unlike most other optimizations, this does not skip voxels. Rather it reduces the number of samples needed to accurately display a region of voxels. The idea is to render the intervals between the samples instead of the samples themselves. This technique captures rapidly changing material, for example the transition from muscle to bone with much less computation. |

||

===Image-based meshing=== |

===Image-based meshing=== |

||

| Line 76: | Line 76: | ||

For a complete display view, only one voxel per pixel (the front one) is required to be shown (although more can be used for smoothing the image), if animation is needed, the front voxels to be shown can be cached and their location relative to the camera can be recalculated as it moves. Where display voxels become too far apart to cover all the pixels, new front voxels can be found by ray casting or similar, and where two voxels are in one pixel, the front one can be kept. |

For a complete display view, only one voxel per pixel (the front one) is required to be shown (although more can be used for smoothing the image), if animation is needed, the front voxels to be shown can be cached and their location relative to the camera can be recalculated as it moves. Where display voxels become too far apart to cover all the pixels, new front voxels can be found by ray casting or similar, and where two voxels are in one pixel, the front one can be kept. |

||

== |

==References== |

||

{{reflist}} |

{{reflist}} |

||

== |

==Bibliography== |

||

# {{note|l88}}Marc Levoy, "Display of Surfaces from Volume Data", IEEE CG&A, May 1988. [http://graphics.stanford.edu/papers/volume-cga88/ Archive of Paper] |

|||

| ⚫ | |||

# {{note|dch88}}Drebin, R.A., Carpenter, L., Hanrahan, P., "Volume Rendering", Computer Graphics, SIGGRAPH88. [http://portal.acm.org/citation.cfm?doid=378456.378484 DOI citation link] |

|||

| ⚫ | # {{note|peng2010}} Peng H., Ruan, Z, Long, F, Simpson, JH, Myers, EW: ''V3D enables real-time 3D visualization and quantitative analysis of large-scale biological image data sets.'' Nature Biotechnology, 2010 (DOI: 10.1038/nbt.1612) [http://www.nature.com/nbt/journal/vaop/ncurrent/full/nbt.1612.html Volume Rendering of large high-dimensional image data]. |

||

# {{note|FVR}} [http://graphics.stanford.edu/papers/shear/ "Fast Volume Rendering Using a Shear-Warp Factorization of the Viewing Transformation"] |

|||

#{{note|HS89}}Hibbard W., Santek D., [http://www.ssec.wisc.edu/~billh/p39-hibbard.pdf "Interactivity is the key"], ''Chapel Hill Workshop on Volume Visualization'', University of North Carolina, Chapel Hill, 1989, pp. 39–43. |

|||

# {{note|pfi99}}Pfister H., Hardenbergh J., Knittel J., Lauer H., Seiler L.: ''The VolumePro real-time ray-casting system'' In Proceeding of SIGGRAPH99 [http://doi.acm.org/10.1145/311535.311563 DOI] |

|||

# {{note|shn03}}Sherbondy A., Houston M., Napel S.: ''Fast volume segmentation with simultaneous visualization using programmable graphics hardware.'' In Proceedings of IEEE Visualization (2003), pp. 171–176. |

|||

# {{note|mhc90}}Max N., Hanrahan P., Crawfis R.: ''Area and volume coherence for efficient visualization of 3D scalar functions.'' In Computer Graphics (San Diego Workshop on Volume Visualization, 1990) vol. 24, pp. 27–33. |

|||

# {{note|smb94}} Stein C., Backer B., Max N.: ''Sorting and hardware assisted rendering for volume visualization.'' In Symposium on Volume Visualization (1994), pp. 83–90. |

|||

# {{note|eke01}}Engel K., Kraus M., Ertl T.: ''High-quality pre-integrated volume rendering using hardware-accelerated pixel shading.'' In Proceedings of Eurographics/SIGGRAPH Workshop on Graphics Hardware (2001), pp. 9–16. |

|||

# {{note|lwm04}}Lum E., Wilson B., Ma K.: ''High-Quality Lighting and Efficient Pre-Integration for Volume Rendering.'' In Eurographics/IEEE Symposium on Visualization 2004. |

|||

| ⚫ | |||

| ⚫ | # {{note|peng2010}}Peng H., Ruan, Z, Long, F, Simpson, JH, Myers, EW: ''V3D enables real-time 3D visualization and quantitative analysis of large-scale biological image data sets.'' Nature Biotechnology, 2010 (DOI: 10.1038/nbt.1612) [http://www.nature.com/nbt/journal/vaop/ncurrent/full/nbt.1612.html Volume Rendering of large high-dimensional image data]. |

||

==External links== |

==External links== |

||

Revision as of 21:52, 5 August 2011

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set.

A typical 3D data set is a group of 2D slice images acquired by a CT, MRI, or MicroCT scanner. These slices are usually acquired in a regular pattern (e.g., one slice every millimeter), and each slice usually has the same number of pixels arranged in a regular grid. This is an example of a regular volumetric grid, with each volume element, or voxel, represented by a single value that is obtained by sampling the immediate area surrounding the voxel.

To render a 2D projection of the 3D data set, one first needs to define a camera in space relative to the volume. One also needs to define the opacity and color of every voxel. This is usually done with an RGBA (for red, green, blue, alpha) transfer function that defines the RGBA value for every possible voxel value.

A volume may be viewed either by extracting isosurfaces (surfaces of equal value) from the volume and rendering them as polygonal meshes, or by rendering the volume directly as a block of data. The marching cubes algorithm is a common technique for extracting an isosurface from volume data. Direct volume rendering is a computationally intensive task that may be performed in several ways.

Direct volume rendering

A direct volume renderer[1][2] requires every sample value to be mapped to an opacity and a color. This is done with a "transfer function", which can be a simple ramp, a piecewise linear function or an arbitrary table. Once the samples are converted to RGBA (red, green, blue, alpha) values, the composited RGBA result is projected onto the corresponding pixel of the frame buffer. How this is done depends on the rendering technique.
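A piecewise linear transfer function of the kind described above can be sketched as follows. This is a minimal illustration, not the method of any particular renderer: the control points (a CT-like scalar range where air is transparent, soft tissue faint red and bone opaque white) are hypothetical values chosen only for the example.

```python
from bisect import bisect_right
from typing import Callable, List, Tuple

RGBA = Tuple[float, float, float, float]


def make_transfer_function(points: List[Tuple[float, RGBA]]) -> Callable[[float], RGBA]:
    """Build a piecewise linear transfer function from (scalar, RGBA) control
    points sorted by scalar. Values outside the covered range are clamped."""
    xs = [x for x, _ in points]

    def tf(s: float) -> RGBA:
        if s <= xs[0]:
            return points[0][1]
        if s >= xs[-1]:
            return points[-1][1]
        i = bisect_right(xs, s)                  # xs[i - 1] <= s < xs[i]
        (x0, c0), (x1, c1) = points[i - 1], points[i]
        t = (s - x0) / (x1 - x0)                 # position within the segment
        return tuple(a + t * (b - a) for a, b in zip(c0, c1))

    return tf


# Hypothetical control points, for illustration only.
tf = make_transfer_function([
    (0.0,   (0.0, 0.0, 0.0, 0.0)),
    (80.0,  (0.8, 0.3, 0.2, 0.05)),
    (200.0, (1.0, 1.0, 1.0, 0.9)),
])
print(tf(140.0))
```

A lookup table is the other common form; it can be produced from this function by evaluating it once for every possible voxel value.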

The techniques described below can also be combined. For instance, a shear warp implementation could use texturing hardware to draw the aligned slices in the off-screen buffer.

Volume ray casting

The technique of volume ray casting can be derived directly from the rendering equation. It is usually considered to provide the best image quality. Volume ray casting is classified as an image-based volume rendering technique, because the computation emanates from the output image rather than from the input volume data, as is the case with object-based techniques. In this technique, a ray is generated for each desired image pixel. Using a simple camera model, the ray starts at the center of projection of the camera (usually the eye point) and passes through the image pixel on an imaginary image plane floating between the camera and the volume to be rendered. The ray is clipped by the boundaries of the volume in order to save time. The ray is then sampled at regular or adaptive intervals throughout the volume. At each sample point the data is interpolated, the transfer function is applied to form an RGBA sample, and the sample is composited onto the accumulated RGBA of the ray; this is repeated until the ray exits the volume. The RGBA color is then converted to an RGB color and deposited in the corresponding image pixel. The process is repeated for every pixel on the screen to form the completed image.
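The steps above (clip the ray to the volume, sample at regular intervals, interpolate, classify, composite) can be sketched in plain Python. This is a simplified sketch under stated assumptions: the volume is a nested list indexed `vol[z][y][x]` with voxel centres at integer coordinates, sampling uses a fixed step with trilinear interpolation, the camera is a hypothetical pinhole looking along +z, and opacity is not corrected for the step size.

```python
import math
from typing import Callable, List, Optional, Tuple

Vec = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]
Volume = List[List[List[float]]]  # assumed layout vol[z][y][x]


def clip_ray(origin: Vec, direction: Vec, upper: Vec) -> Optional[Tuple[float, float]]:
    """Slab test: parameter interval where the ray is inside the box [0, upper]."""
    t_enter, t_exit = 0.0, math.inf
    for o, d, hi in zip(origin, direction, upper):
        if abs(d) < 1e-12:                 # ray parallel to this pair of faces
            if o < 0.0 or o > hi:
                return None
            continue
        ta, tb = -o / d, (hi - o) / d
        t_enter = max(t_enter, min(ta, tb))
        t_exit = min(t_exit, max(ta, tb))
    return (t_enter, t_exit) if t_enter <= t_exit else None


def trilinear(vol: Volume, p: Vec) -> float:
    """Interpolate the volume at p = (x, y, z) in voxel coordinates."""
    nz, ny, nx = len(vol), len(vol[0]), len(vol[0][0])
    x = min(max(p[0], 0.0), nx - 1.0)
    y = min(max(p[1], 0.0), ny - 1.0)
    z = min(max(p[2], 0.0), nz - 1.0)
    x0, y0, z0 = int(x), int(y), int(z)
    x1, y1, z1 = min(x0 + 1, nx - 1), min(y0 + 1, ny - 1), min(z0 + 1, nz - 1)
    fx, fy, fz = x - x0, y - y0, z - z0

    def lerp(a: float, b: float, t: float) -> float:
        return a + t * (b - a)

    c00 = lerp(vol[z0][y0][x0], vol[z0][y0][x1], fx)
    c10 = lerp(vol[z0][y1][x0], vol[z0][y1][x1], fx)
    c01 = lerp(vol[z1][y0][x0], vol[z1][y0][x1], fx)
    c11 = lerp(vol[z1][y1][x0], vol[z1][y1][x1], fx)
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz)


def cast_ray(vol: Volume, origin: Vec, direction: Vec,
             tf: Callable[[float], RGBA], step: float = 0.5) -> Tuple[float, float, float]:
    """March one ray through the volume, compositing samples front to back."""
    norm = math.sqrt(sum(d * d for d in direction))
    direction = tuple(d / norm for d in direction)
    upper = (len(vol[0][0]) - 1.0, len(vol[0]) - 1.0, len(vol) - 1.0)
    hit = clip_ray(origin, direction, upper)      # only march inside the volume
    if hit is None:
        return (0.0, 0.0, 0.0)
    t, t_exit = hit
    r = g = b = a = 0.0
    while t <= t_exit:
        p = tuple(o + t * d for o, d in zip(origin, direction))
        sr, sg, sb, sa = tf(trilinear(vol, p))
        w = (1.0 - a) * sa                        # front-to-back "under" operator
        r, g, b, a = r + w * sr, g + w * sg, b + w * sb, a + w
        t += step
    # RGBA -> RGB: colours are premultiplied, so dropping alpha composites over black.
    return (r, g, b)


def render(vol: Volume, eye: Vec, width: int, height: int,
           tf: Callable[[float], RGBA], focal: float = 10.0,
           pixel_size: float = 1.0) -> List[List[Tuple[float, float, float]]]:
    """Hypothetical pinhole camera at `eye` looking along +z, image plane `focal` units ahead."""
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            px = (i - (width - 1) / 2.0) * pixel_size
            py = (j - (height - 1) / 2.0) * pixel_size
            row.append(cast_ray(vol, eye, (px, py, focal), tf))  # one ray per pixel
        image.append(row)
    return image
```

Production ray casters typically add gradient-based shading, opacity correction for the step size and the acceleration techniques described under optimization below.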

Splatting

Splatting trades quality for speed. Here, every volume element is splatted onto the viewing surface in back-to-front order, like a snowball, as Lee Westover put it. The splats are rendered as disks whose properties (color and transparency) vary across their diameter following a normal (Gaussian) distribution. Flat disks and disks with other property distributions are also used, depending on the application.[3][4]
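The back-to-front splatting loop can be sketched as follows. This is a deliberately simplified sketch, assuming an orthographic view along +z, voxels whose x and y are already image-plane pixel coordinates, and a truncated 2D Gaussian footprint evaluated directly; Westover's method instead uses precomputed footprint tables of a projected 3D kernel. The `sigma` and `radius` defaults are hypothetical.

```python
import math
from typing import Callable, List, Tuple

RGBA = Tuple[float, float, float, float]


def splat_render(voxels: List[Tuple[float, float, float, float]],
                 tf: Callable[[float], RGBA], width: int, height: int,
                 sigma: float = 0.7, radius: int = 2) -> List[List[List[float]]]:
    """Orthographic splatting along +z (viewer at z = -infinity).

    voxels: (x, y, z, value) tuples; x and y are image-plane pixel coordinates.
    """
    image = [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]
    # Back to front: the farthest voxels (largest z) are splatted first.
    for x, y, z, value in sorted(voxels, key=lambda v: -v[2]):
        r, g, b, a = tf(value)
        if a <= 0.0:
            continue                        # transparent voxels leave no mark
        cx, cy = int(round(x)), int(round(y))
        for py in range(max(cy - radius, 0), min(cy + radius + 1, height)):
            for px in range(max(cx - radius, 0), min(cx + radius + 1, width)):
                # Gaussian footprint: opacity falls off with distance from the centre.
                d2 = (px - x) ** 2 + (py - y) ** 2
                alpha = a * math.exp(-d2 / (2.0 * sigma ** 2))
                pix = image[py][px]
                # "over" operator: the new splat lies in front of what is already there.
                pix[0] = alpha * r + (1.0 - alpha) * pix[0]
                pix[1] = alpha * g + (1.0 - alpha) * pix[1]
                pix[2] = alpha * b + (1.0 - alpha) * pix[2]
    return image
```

Because each voxel is processed independently of the others, the work parallelizes naturally; the quality loss comes from approximating each voxel's contribution with a fixed footprint.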

Shear warp

[Figure: Example of a mouse skull (CT) rendering using the shear warp algorithm]

The shear warp approach to volume rendering was developed by Cameron and Undrill and popularized by Philippe Lacroute and Marc Levoy.[5] In this technique, the viewing transformation is factored so that the nearest face of the volume becomes axis-aligned with an off-screen image buffer with a fixed scale of voxels to pixels. The volume is then rendered into this buffer using the far more favorable memory alignment and fixed scaling and blending factors. Once all slices of the volume have been rendered, the buffer is warped into the desired orientation and scaled in the displayed image.
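For a parallel projection, the shear part of the factorization can be sketched in a few lines. This is a minimal sketch assuming an orthographic view and an object-space viewing direction; the perspective case and the final 2D warp are omitted. The principal axis k is the axis most parallel to the view, and slice number p[k] is translated by (s_i · p[k], s_j · p[k]) with s_i = -d_i / d_k and s_j = -d_j / d_k, which makes projecting straight down axis k equivalent to projecting along the original viewing direction.

```python
from typing import Tuple

Vec = Tuple[float, float, float]


def shear_factorization(view_dir: Vec) -> Tuple[int, Tuple[int, int], Tuple[float, float]]:
    """Parallel projection only. Returns (principal axis k, the other two axes (i, j),
    shear coefficients (s_i, s_j)) for an object-space viewing direction."""
    k = max(range(3), key=lambda a: abs(view_dir[a]))   # axis most parallel to the view
    i, j = [a for a in range(3) if a != k]
    return k, (i, j), (-view_dir[i] / view_dir[k], -view_dir[j] / view_dir[k])


def sheared_position(p: Vec, view_dir: Vec) -> Tuple[float, float]:
    """Intermediate-image position of object-space point p: the slice containing p
    is translated by (s_i * p[k], s_j * p[k]), then projected along axis k."""
    k, (i, j), (s_i, s_j) = shear_factorization(view_dir)
    return p[i] + s_i * p[k], p[j] + s_j * p[k]


print(sheared_position((3.0, 4.0, 5.0), (0.2, 0.1, 1.0)))
```

For the point (3, 4, 5) and viewing direction (0.2, 0.1, 1.0), projecting along the view onto the plane z = 0 gives (3 - 5·0.2, 4 - 5·0.1) = (2.0, 3.5), which is the same position the sheared slice produces.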

This technique is relatively fast in software, at the cost of less accurate sampling and potentially worse image quality compared to ray casting. There is memory overhead from storing multiple copies of the volume (one per principal axis), so that a nearly axis-aligned copy is always available. This overhead can be mitigated using run-length encoding.
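Run-length encoding of a voxel scanline can be sketched as follows. This is a sketch under an assumption: voxels with a value at or below `threshold` are treated as transparent (in practice the classification would come from the transfer function's opacity), so only non-transparent voxel values need to be stored, and a renderer can jump over a whole transparent run in one step.

```python
from typing import List, Sequence, Tuple

Run = Tuple[bool, int]  # (is_transparent, run length)


def rle_encode(scanline: Sequence[float], threshold: float = 0.0) -> Tuple[List[Run], List[float]]:
    """Split a scanline into alternating transparent / non-transparent runs.
    Only the non-transparent voxel values are stored."""
    runs: List[Run] = []
    data: List[float] = []
    for v in scanline:
        transparent = v <= threshold
        if not transparent:
            data.append(v)
        if runs and runs[-1][0] == transparent:
            runs[-1] = (transparent, runs[-1][1] + 1)   # extend the current run
        else:
            runs.append((transparent, 1))               # start a new run
    return runs, data


def rle_decode(runs: List[Run], data: List[float], fill: float = 0.0) -> List[float]:
    """Expand the runs back into a dense scanline; transparent voxels become `fill`."""
    out: List[float] = []
    it = iter(data)
    for transparent, length in runs:
        if transparent:
            out.extend([fill] * length)
        else:
            for _ in range(length):
                out.append(next(it))
    return out
```

The saving grows with the fraction of transparent voxels, which is typically large in medical data sets once air and background have been classified as transparent.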

Texture mapping

Many 3D graphics systems use texture mapping to apply images, or textures, to geometric objects. Commodity PC graphics cards are fast at texturing and can efficiently render slices of a 3D volume with real-time interaction. Workstation GPUs are even faster and, as of 2007, are the basis for much of the production volume visualization used in medical imaging, oil and gas, and other markets. In earlier years, dedicated 3D texture mapping systems were used on graphics systems such as the Silicon Graphics InfiniteReality, the HP Visualize FX graphics accelerator, and others. This technique was first described by Bill Hibbard and Dave Santek.[6]

These slices can either be aligned with the volume and rendered at an angle to the viewer, or aligned with the viewing plane and sampled from unaligned slices through the volume. Graphics hardware support for 3D textures is needed for the second technique.

Volume aligned texturing produces images of reasonable quality, though there is often a noticeable transition when the volume is rotated.
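Volume-aligned slicing can be sketched on the CPU as follows. This is a sketch under assumptions: slices are already classified into RGBA images, the stack is chosen by the axis most parallel to the view, and the loop imitates what alpha-blending hardware does when it draws each textured slice back to front with the "over" operator. Switching to a different slice stack when the dominant axis changes during rotation is one plausible source of the visible transition mentioned above.

```python
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]
Slice = List[List[RGBA]]  # one classified 2D slice, indexed [y][x]


def choose_slice_stack(view_dir: Tuple[float, float, float]) -> Tuple[int, bool]:
    """Pick the volume axis most parallel to the viewing direction, and whether the
    viewer looks toward increasing coordinates along that axis."""
    k = max(range(3), key=lambda a: abs(view_dir[a]))
    return k, view_dir[k] > 0


def back_to_front(n_slices: int, looking_positive: bool) -> List[int]:
    """Slice indices ordered from farthest to nearest."""
    return list(range(n_slices - 1, -1, -1)) if looking_positive else list(range(n_slices))


def composite_slices(slices: List[Slice]) -> List[List[List[float]]]:
    """Blend slices given back to front with the "over" operator."""
    h, w = len(slices[0]), len(slices[0][0])
    out = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for sl in slices:
        for y in range(h):
            for x in range(w):
                r, g, b, a = sl[y][x]
                px = out[y][x]
                px[0] = a * r + (1.0 - a) * px[0]
                px[1] = a * g + (1.0 - a) * px[1]
                px[2] = a * b + (1.0 - a) * px[2]
    return out
```

View-aligned slicing through a 3D texture avoids the stack switch, because the slices always face the viewer and are resampled from the volume by the texturing hardware.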

Hardware-accelerated volume rendering

Because direct volume rendering is extremely parallel, special-purpose volume rendering hardware was a rich research topic before GPU volume rendering became fast enough. The most widely cited technology was VolumePro,[7] which used high memory bandwidth and brute force to render using the ray casting algorithm.

A more recent way to accelerate traditional volume rendering algorithms such as ray casting is to use modern graphics cards. Starting with programmable pixel shaders, people recognized the power of parallel operations on multiple pixels and began to perform general-purpose computations on the graphics chip (GPGPU). Pixel shaders can read and write video memory at arbitrary locations and perform basic mathematical and logical calculations. These SIMD processors were used to perform general calculations such as rendering polygons and signal processing. In recent GPU generations, pixel shaders can function as MIMD processors (able to branch independently) with access to up to 1 GB of texture memory in floating-point formats. With such power, virtually any algorithm whose steps can be performed in parallel, such as volume ray casting or tomographic reconstruction, can be greatly accelerated. Programmable pixel shaders can be used to simulate variations in the characteristics of lighting, shadow, reflection, emissive color and so forth, and such simulations can be written in high-level shading languages.

Optimization techniques

The primary goal of optimization is to skip as much of the volume as possible. A typical medical data set can be 1 GB in size; reading every voxel of such a volume 30 times per second would require about 30 GB/s of memory bandwidth, which calls for an extremely fast memory bus. Skipping voxels means less memory has to be read.

Empty space skipping

Often, a volume rendering system will have a mechanism for identifying regions of the volume that contain no visible material. This information can be used to avoid rendering these transparent regions.[8]
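One common way to identify empty regions is a coarse grid of per-brick maximum values. The sketch below is an illustration, not the method of the cited paper: it assumes a hypothetical transfer function whose opacity is zero below `threshold`, so any brick whose maximum lies below it can be skipped. Each brick also covers one extra voxel layer, because a trilinearly interpolated sample near a brick's upper boundary reads voxels from the neighbouring brick.

```python
from typing import List, Tuple

Volume = List[List[List[float]]]  # assumed layout vol[z][y][x]


def build_brick_max(vol: Volume, brick: int) -> Volume:
    """Maximum scalar value per brick of brick^3 voxels (plus one overlap layer)."""
    nz, ny, nx = len(vol), len(vol[0]), len(vol[0][0])

    def count(n: int) -> int:
        return (n + brick - 1) // brick

    maxes = []
    for bz in range(count(nz)):
        plane = []
        for by in range(count(ny)):
            row = []
            for bx in range(count(nx)):
                row.append(max(
                    vol[z][y][x]
                    for z in range(bz * brick, min((bz + 1) * brick + 1, nz))
                    for y in range(by * brick, min((by + 1) * brick + 1, ny))
                    for x in range(bx * brick, min((bx + 1) * brick + 1, nx))))
            plane.append(row)
        maxes.append(plane)
    return maxes


def is_empty(maxes: Volume, brick: int, p: Tuple[float, float, float], threshold: float) -> bool:
    """True if the brick containing point p (non-negative voxel coordinates)
    holds no value at or above `threshold`, i.e. nothing visible."""
    x, y, z = (int(c) // brick for c in p)
    return maxes[z][y][x] < threshold
```

Inside a ray caster's sampling loop, a sample for which `is_empty` returns true can skip interpolation and classification; more elaborate schemes advance the ray directly to the exit point of the empty brick.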

Early ray termination

This technique is used when the volume is rendered in front-to-back order. For a ray through a pixel, once sufficiently dense material has been encountered, further samples make no significant contribution to the pixel and can be neglected.
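Early ray termination amounts to one extra test in the front-to-back compositing loop. In this minimal sketch the samples are RGBA tuples already ordered front to back, and the 0.99 cutoff is a hypothetical choice; once the accumulated opacity reaches it, the remaining samples could add at most 1 % of their color.

```python
from typing import Iterable, Tuple

RGBA = Tuple[float, float, float, float]


def composite_front_to_back(samples: Iterable[RGBA], cutoff: float = 0.99):
    """Returns ((r, g, b), accumulated alpha, number of samples actually used)."""
    r = g = b = a = 0.0
    used = 0
    for sr, sg, sb, sa in samples:
        used += 1
        w = (1.0 - a) * sa              # remaining transparency times sample opacity
        r, g, b, a = r + w * sr, g + w * sg, b + w * sb, a + w
        if a >= cutoff:
            break                       # early ray termination
    return (r, g, b), a, used


rgb, alpha, used = composite_front_to_back([(1.0, 1.0, 1.0, 0.5)] * 100)
print(used, alpha)
```

With samples of opacity 0.5, the accumulated opacity after k samples is 1 - 0.5^k, so the loop stops after 7 of the 100 samples.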

Octree and BSP space subdivision

Hierarchical structures such as octrees and BSP trees can be very helpful both for compressing volume data and for speeding up the volumetric ray casting process.
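The compression side of an octree can be sketched by collapsing every homogeneous region into a single leaf. This is a minimal sketch assuming a cubic volume whose edge length is a power of two; real systems usually also store per-node minimum and maximum values so that a ray can cross a node that maps to zero opacity in one step.

```python
from typing import List, Union

Volume = List[List[List[float]]]    # assumed layout vol[z][y][x]
Node = Union[float, List["Node"]]   # a leaf value, or a list of 8 children


def build_octree(vol: Volume, x0: int = 0, y0: int = 0, z0: int = 0,
                 size: int = -1) -> Node:
    """Octree over a cubic, power-of-two volume; uniform regions become one leaf."""
    if size < 0:
        size = len(vol)
    first = vol[z0][y0][x0]
    if all(vol[z][y][x] == first
           for z in range(z0, z0 + size)
           for y in range(y0, y0 + size)
           for x in range(x0, x0 + size)):
        return first                    # uniform region: store a single value
    half = size // 2
    return [build_octree(vol, x0 + dx, y0 + dy, z0 + dz, half)
            for dz in (0, half) for dy in (0, half) for dx in (0, half)]


def count_nodes(node: Node) -> int:
    """Total number of nodes, a rough measure of storage cost."""
    if isinstance(node, list):
        return 1 + sum(count_nodes(child) for child in node)
    return 1
```

A 4×4×4 volume of zeros collapses to a single node instead of 64 voxels; setting one corner voxel to a different value produces 17 nodes (the root, its 8 children, and 8 grandchildren under the one non-uniform child).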

Volume segmentation

By sectioning out large portions of the volume that are considered uninteresting before rendering, the amount of computation required by ray casting or texture blending can be reduced significantly. This reduction can be as much as from O(n) to O(log n) for n sequentially indexed voxels. Volume segmentation also has significant performance benefits for other ray tracing algorithms.
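Two simple ways of exploiting a segmentation before rendering are sketched below: computing the bounding box of the segmented voxels, which a ray caster could use as its clipping box instead of the whole volume, and replacing everything outside the mask with a background value. Both are illustrative assumptions rather than the method of any cited system; in particular, `background` is assumed to be a value the transfer function maps to full transparency.

```python
from typing import List, Optional, Tuple

Mask = List[List[List[bool]]]       # mask[z][y][x], True = voxel of interest
Volume = List[List[List[float]]]
Box = Tuple[Tuple[int, ...], Tuple[int, ...]]


def mask_bounds(mask: Mask) -> Optional[Box]:
    """Inclusive (x, y, z) bounds of the segmented voxels, or None if the mask is empty."""
    pts = [(x, y, z)
           for z, plane in enumerate(mask)
           for y, row in enumerate(plane)
           for x, m in enumerate(row) if m]
    if not pts:
        return None
    lo = tuple(min(p[a] for p in pts) for a in range(3))
    hi = tuple(max(p[a] for p in pts) for a in range(3))
    return lo, hi


def apply_mask(vol: Volume, mask: Mask, background: float = 0.0) -> Volume:
    """Replace voxels outside the segmentation with `background`."""
    return [[[v if m else background for v, m in zip(vrow, mrow)]
             for vrow, mrow in zip(vplane, mplane)]
            for vplane, mplane in zip(vol, mask)]
```

Combined with empty space skipping, the masked-out voxels then cost almost nothing at render time.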

Multiple and adaptive resolution representation

By representing less interesting regions of the volume at a coarser resolution, the data input overhead can be reduced. When these regions are examined more closely, their data can be populated either by reading from memory or disk, or by interpolation. The coarser-resolution volume is resampled to a smaller size in the same way as a 2D mipmap image is created from the original. These smaller volumes are also used on their own while the volume is being rotated to a new orientation.
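The 3D analogue of building a mipmap can be sketched by repeatedly averaging 2×2×2 blocks of voxels. This is a minimal sketch assuming box filtering and even dimensions at every level; other filters are possible, and a real system would store the levels so that coarse bricks can be swapped for finer ones on demand.

```python
from typing import List

Volume = List[List[List[float]]]  # assumed layout vol[z][y][x]


def downsample(vol: Volume) -> Volume:
    """Halve each dimension by averaging 2x2x2 blocks (dimensions assumed even)."""
    nz, ny, nx = len(vol), len(vol[0]), len(vol[0][0])
    return [[[sum(vol[2 * z + dz][2 * y + dy][2 * x + dx]
                  for dz in (0, 1) for dy in (0, 1) for dx in (0, 1)) / 8.0
              for x in range(nx // 2)]
             for y in range(ny // 2)]
            for z in range(nz // 2)]


def build_pyramid(vol: Volume) -> List[Volume]:
    """Level 0 is the original volume; each further level halves the resolution."""
    levels = [vol]
    while True:
        v = levels[-1]
        dims = (len(v), len(v[0]), len(v[0][0]))
        if not all(n >= 2 and n % 2 == 0 for n in dims):
            break
        levels.append(downsample(v))
    return levels
```

Each level holds one eighth of the voxels of the level below it, so the whole pyramid adds only about 14 % to the storage of the original volume.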

Pre-integrated volume rendering

Pre-integrated volume rendering[9][10] is a method that can reduce sampling artifacts by pre-computing much of the required data. It is especially useful in hardware-accelerated applications[11][12] because it improves quality without a large performance impact. Unlike most other optimizations, it does not skip voxels; rather, it reduces the number of samples needed to accurately display a region of voxels. The idea is to render the intervals between the samples instead of the samples themselves. This technique captures rapidly changing material, for example the transition from muscle to bone, with much less computation.
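The idea of rendering intervals rather than samples can be sketched with a brute-force lookup table. This is only an illustration, not the optimized schemes of the cited papers: it assumes the scalar varies linearly between the front and back sample of a ray segment of fixed length, assumes a transfer function that returns an extinction coefficient `tau` per unit length instead of an opacity, and integrates numerically with the midpoint rule.

```python
import math
from typing import Callable, List, Tuple

# Assumed transfer-function form: s -> (r, g, b, tau), tau = extinction per unit length.
TF = Callable[[float], Tuple[float, float, float, float]]


def preintegrate(tf: TF, s_front: float, s_back: float,
                 seg_len: float = 1.0, n: int = 64) -> Tuple[float, float, float, float]:
    """Integrate emission and absorption along one ray segment whose scalar varies
    linearly from s_front to s_back. Returns premultiplied RGBA."""
    dt = seg_len / n
    r = g = b = 0.0
    transmittance = 1.0
    for k in range(n):
        s = s_front + (s_back - s_front) * (k + 0.5) / n   # midpoint of sub-step k
        cr, cg, cb, tau = tf(s)
        a = 1.0 - math.exp(-tau * dt)                     # opacity of this sub-step
        r += transmittance * a * cr
        g += transmittance * a * cg
        b += transmittance * a * cb
        transmittance *= 1.0 - a
    return r, g, b, 1.0 - transmittance


def build_table(tf: TF, s_min: float, s_max: float, size: int,
                seg_len: float = 1.0) -> List[List[Tuple[float, float, float, float]]]:
    """Lookup table indexed [front][back]; at render time each ray segment needs a
    single table lookup instead of many samples."""
    vals = [s_min + (s_max - s_min) * i / (size - 1) for i in range(size)]
    return [[preintegrate(tf, sf, sb, seg_len) for sb in vals] for sf in vals]
```

A thin, dense material that lies strictly between two sample values illustrates the benefit: if the transfer function is opaque only for scalars in [0.45, 0.55], point samples at 0.0 and 1.0 both see full transparency, while the pre-integrated segment from 0.0 to 1.0 has an opacity above 0.9.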

Image-based meshing

Image-based meshing is the automated process of creating computer models from 3D image data (such as MRI, CT, Industrial CT or microtomography) for computational analysis and design, e.g. CAD, CFD, and FEA.

Temporal reuse of voxels

For a complete view, only one voxel per pixel (the front one) needs to be shown, although more can be used to smooth the image. If animation is needed, the front voxels to be shown can be cached, and their positions relative to the camera can be recalculated as the camera moves. Where display voxels become too far apart to cover all the pixels, new front voxels can be found by ray casting or a similar method; where two voxels fall in the same pixel, the front one is kept.
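The reuse step can be sketched as re-projecting the cached front voxels with a depth test. This is a sketch under assumptions: `project` is a hypothetical camera function returning (pixel x, pixel y, depth) or None, colors are opaque labels, and the holes it reports would be filled by casting new rays, which is not shown.

```python
import math
from typing import Callable, List, Optional, Tuple

Vec = Tuple[float, float, float]
# Hypothetical camera projection: point -> (pixel x, pixel y, depth), or None if not visible.
Project = Callable[[Vec], Optional[Tuple[float, float, float]]]


def reproject(cached: List[Tuple[Vec, object]], project: Project,
              width: int, height: int):
    """Re-project cached front voxels for a new camera position. Keeps the nearest
    voxel per pixel and returns the pixels that no cached voxel covers."""
    depth = [[math.inf] * width for _ in range(height)]
    color: List[List[object]] = [[None] * width for _ in range(height)]
    for pos, col in cached:
        proj = project(pos)
        if proj is None:
            continue
        px, py, d = proj
        ix, iy = math.floor(px), math.floor(py)
        if 0 <= ix < width and 0 <= iy < height and d < depth[iy][ix]:
            depth[iy][ix] = d           # two voxels in one pixel: keep the front one
            color[iy][ix] = col
    holes = [(x, y) for y in range(height) for x in range(width) if color[y][x] is None]
    return color, holes                 # holes would be filled by casting new rays
```

With an orthographic camera that uses z as depth, cached voxels "A" at (0.2, 0, 5) and "B" at (0.4, 0, 2) land in the same pixel and "B" is kept because it is nearer; after the camera shifts by one pixel, the pixel the voxels moved away from is reported as a hole.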

References

- ^ Marc Levoy, "Display of Surfaces from Volume Data", IEEE CG&A, May 1988. Archive of Paper

- ^ Drebin, R.A., Carpenter, L., Hanrahan, P., "Volume Rendering", Computer Graphics, SIGGRAPH88. DOI citation link

- ^ Westover, Lee Alan (July 1991). "SPLATTING: A Parallel, Feed-Forward Volume Rendering Algorithm" (PDF). Retrieved 5 August 2011.

- ^ Huang, Jian (Spring 2002). "Splatting" (PPT). Retrieved 5 August 2011.

- ^ "Fast Volume Rendering Using a Shear-Warp Factorization of the Viewing Transformation"

- ^ Hibbard W., Santek D., "Interactivity is the key", Chapel Hill Workshop on Volume Visualization, University of North Carolina, Chapel Hill, 1989, pp. 39–43.

- ^ Pfister H., Hardenbergh J., Knittel J., Lauer H., Seiler L.: The VolumePro real-time ray-casting system. In Proceedings of SIGGRAPH 99. DOI

- ^ Sherbondy A., Houston M., Napel S.: Fast volume segmentation with simultaneous visualization using programmable graphics hardware. In Proceedings of IEEE Visualization (2003), pp. 171–176.

- ^ Max N., Hanrahan P., Crawfis R.: Area and volume coherence for efficient visualization of 3D scalar functions. In Computer Graphics (San Diego Workshop on Volume Visualization, 1990) vol. 24, pp. 27–33.

- ^ Stein C., Backer B., Max N.: Sorting and hardware assisted rendering for volume visualization. In Symposium on Volume Visualization (1994), pp. 83–90.

- ^ Engel K., Kraus M., Ertl T.: High-quality pre-integrated volume rendering using hardware-accelerated pixel shading. In Proceedings of Eurographics/SIGGRAPH Workshop on Graphics Hardware (2001), pp. 9–16.

- ^ Lum E., Wilson B., Ma K.: High-Quality Lighting and Efficient Pre-Integration for Volume Rendering. In Eurographics/IEEE Symposium on Visualization 2004.

Bibliography

- ^ Barthold Lichtenbelt, Randy Crane, Shaz Naqvi, Introduction to Volume Rendering (Hewlett-Packard Professional Books), Hewlett-Packard Company 1998.

- ^ Peng H., Ruan, Z, Long, F, Simpson, JH, Myers, EW: V3D enables real-time 3D visualization and quantitative analysis of large-scale biological image data sets. Nature Biotechnology, 2010 (DOI: 10.1038/nbt.1612) Volume Rendering of large high-dimensional image data.

External links

- The Visualization Toolkit – VTK is a free, open source toolkit that implements several CPU and GPU volume rendering methods in C++ using OpenGL, and can be used through Python, Tcl and Java wrappers.

- Linderdaum Engine is a free open source rendering engine with GPU raycasting capabilities.

- Open Inventor by VSG is a 3D graphics toolkit for developing scientific and industrial applications.

- Avizo is a general-purpose commercial software application for scientific and industrial data visualization and analysis.

See also

- 3D Slicer - a free, open source software package for scientific visualization and image analysis.

- V3D – a free (as in gratis) and fast 3D, 4D and 5D volume rendering and image analysis platform for gigabytes of large images (based on OpenGL). It is cross-platform, with Mac, Windows, and Linux versions, and includes a comprehensive plugin interface that lets a plugin program control the behaviour of the 3D viewer. Source code is available; commercial use requires a different license.

- Ambivu 3D Workstation - free medical imaging workstation that offers a range of volume rendering modes (based on OpenGL)

- VoluMedic – a commercial volume slicing and rendering software

- Voreen – volume rendering engine (a framework for rapid prototyping of volume visualizations)

- ImageVis3D – an Open Source GPU volume slicing and ray casting implementation

- Avizo – a commercial software application for scientific and industrial data visualization and analysis.

- MeVisLab - cross-platform software for medical image processing and visualization (based on OpenGL and Open Inventor)

- MRIcroGL – an open source GPU-accelerated volume renderer for OS X, Windows, and Linux.