Wikipedia:Reference desk/Mathematics

Welcome to the mathematics section of the Wikipedia reference desk.


How can I get my question answered?

- Select the section of the desk that best fits the general topic of your question (see the navigation column to the right).

- Post your question to only one section, providing a short header that gives the topic of your question.

- Type '~~~~' (that is, four tilde characters) at the end – this signs and dates your contribution so we know who wrote what and when.

- Don't post personal contact information – it will be removed. Any answers will be provided here.

- Please be as specific as possible, and include all relevant context – the usefulness of answers may depend on the context.

- Note:

- We don't answer (and may remove) questions that require medical diagnosis or legal advice.

- We don't answer requests for opinions, predictions or debate.

- We don't do your homework for you, though we'll help you past the stuck point.

- We don't conduct original research or provide a free source of ideas, but we'll help you find information you need.

How do I answer a question?

Main page: Wikipedia:Reference desk/Guidelines

- The best answers address the question directly, and back up facts with wikilinks and links to sources. Do not edit others' comments and do not give any medical or legal advice.

November 27

I Can't Post!!!

The LaTeX code that I have input as part of a post that I have been working on for this reference desk won't display in the section creator's preview area most of the time!!! It doesn't matter whether I set my "Appearance → Math" preferences to either "Always render PNG" or use "MathJax (experimental; best for most browsers)" because the former setting results in an error message of "Failed to parse (Lexing error):" followed by my original LaTeX and the latter surrounds my code in dollar signs without rendering it. I've been trying to use my family's iMac, which is a mid-2007, 24" model running Safari 5.0.6 over the Intel version of Mac OS X 10.5.8 Leopard without a local LaTeX installation, to post my question for a few days now, so if anyone can help me figure out what's going on, that would be marvelous! BCG999 Out. (talk) 20:30, 27 November 2012 (UTC)

- There might be a problem with the LaTeX code you're using. Wikipedia only supports a subset of LaTeX and does not support any packages. See Help:Formula for Wikipedia-specific LaTeX help. If you post the LaTeX you're using here, either as plain text or with the errors, we might be able to help you.--Salix (talk): 21:04, 27 November 2012 (UTC)

I wasn't using any packages, and LaTeX usually renders for me even though I don't have it installed on my family's machine; that probably doesn't matter, since Wikipedia most likely renders LaTeX on its own servers. Here's the code that I was using in the other post that I was going to add to the reference desk as a new question:

- Failed to parse (syntax error): {\displaystyle P(E)={}^{\[\text{Number of outcomes in event}\]}/{}_{\[\text{Total number of outcomes in sample space}\]}}

- Failed to parse (syntax error): {\displaystyle \[\text{Rolling an even number}\]=\{2, 4, 6\}}

- Failed to parse (syntax error): {\displaystyle \[\text{Sample space over a 6-sided die}\]=\{1, 2, 3, 4, 5, 6\}}

- Failed to parse (syntax error): {\displaystyle \[\text{Number of outcomes in event}\]}

- Failed to parse (syntax error): {\displaystyle \[Total number of outcomes in sample space}\]}

- Failed to parse (syntax error): {\displaystyle \[\text{Number of outcomes in event}\]=\|A\| \[Total number of outcomes in sample space}\]=\|S\| }

- Shouldn't this render fine?

- Firstly, you need \left[ or just [ rather than \[. There are also a couple of unmatched {} brackets. The following should render fine.--Salix (talk): 22:25, 27 November 2012 (UTC)

- Thanks a lot, Salix; I should have double checked my code manually instead of just selecting the "Fix Math" command provided by WikiEd. I'll be posting my actual question with this revised LaTeX code soon, thanks again to you.
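For reference, here is one way the formulas above could be written so that they parse, applying the two fixes Salix describes: plain [ and ] instead of \[ and \], and balanced braces (including the \text{ missing from the last two formulas). This is a reconstruction for illustration, not the corrected code Salix originally posted. Each line would go inside its own <math> tag; single bars |A| are used for cardinality, since \| produces a double bar.

```latex
P(E)={}^{[\text{Number of outcomes in event}]}/{}_{[\text{Total number of outcomes in sample space}]}
[\text{Rolling an even number}]=\{2, 4, 6\}
[\text{Sample space over a 6-sided die}]=\{1, 2, 3, 4, 5, 6\}
[\text{Number of outcomes in event}]=|A|,\quad [\text{Total number of outcomes in sample space}]=|S|
```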

November 28

A question about generating correlated random variables with non-normal distributions.

I have a Monte-Carlo-type problem requiring that I generate a series of pairs (and maybe n-tuples) of correlated random variables with a correlation coefficient of rho. I know how to do this when they are normally distributed (by using a Cholesky decomposition of the covariance matrix). But what if they are not normally distributed? I’m particularly thinking about multivariate uniform distributions, t distributions, Laplace distributions and some fat-tailed distributions like the Cauchy or Levy.

I found a suggestion to generate two univariate series, X1 and X2, and then define a new series X3 as rho X1 + sqrt(1-rho^2) X2, so that X1 and X3 will be my correlated random variables. But doesn’t this method ONLY work for normally distributed random variables? Is there an equivalent sort of thing that I can do with other distributions, like uniform or t?

And what about fat-tailed distributions with no finite variance? Since they have no finite variance, I presume that the correlation coefficient rho, which is derived from variances and covariances, has no equivalent. How does correlation work in such cases? Thorstein90 (talk) 05:04, 28 November 2012 (UTC)
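As a quick check of the recipe in the question, the sketch below applies X3 = ρX1 + √(1 − ρ²)X2 to two independent Uniform(−1, 1) series. For any iid X1, X2 with finite variance, cov(X1, X3) = ρ·var(X1) and var(X3) = var(X1), so corr(X1, X3) = ρ whatever the distribution. What is lost outside the normal case is the shape of the marginal: X3 is a sum of two scaled uniforms, not a uniform. The function names and the choice of uniform inputs are illustrative assumptions; only the Python standard library is used.

```python
import math
import random


def sample_corr(u, v):
    """Pearson sample correlation of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    su = math.sqrt(sum((p - mu) ** 2 for p in u))
    sv = math.sqrt(sum((q - mv) ** 2 for q in v))
    return cov / (su * sv)


def mix_pair(rho, n=100_000, seed=1):
    """Return X1 and X3 = rho*X1 + sqrt(1 - rho^2)*X2, with X1, X2 iid Uniform(-1, 1)."""
    rng = random.Random(seed)
    x1 = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    x2 = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    s = math.sqrt(1.0 - rho * rho)
    x3 = [rho * a + s * b for a, b in zip(x1, x2)]
    return x1, x3


x1, x3 = mix_pair(0.6)
print(sample_corr(x1, x3))           # close to 0.6: the correlation is right
print(max(abs(v) for v in x3))       # exceeds 1, so X3 is not Uniform(-1, 1)
```

In other words, the recipe does deliver the requested correlation for any finite-variance distribution; it only preserves the marginal distribution when that distribution is closed under such linear combinations (as the normal is).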

- I think you're going to have to specify a bit better, and perhaps think a bit more about, what multivariate distribution you want. I doubt that the distribution of the individual variables, combined with a covariance matrix, is actually enough to determine the multivariate distribution. --Trovatore (talk) 03:16, 28 November 2012 (UTC)

- There are a few that I would like to try. I think that I can use an R function for the multivariate t distribution, and with one degree of freedom this will give me a multivariate Cauchy distribution (if I understand correctly). But I'd also like to try drawing randomly from multivariate uniform, Laplace, Pareto and Lévy distributions, and in the absence of any usable functions I was wondering if there is some way that I can make the univariate draws correlated. Thorstein90 (talk) 05:04, 28 November 2012 (UTC)

- According to stable distribution,

- a random variable is said to be stable (or to have a stable distribution) if it has the property that a linear combination of two independent copies of the variable has the same distribution, up to location and scale parameters. The stable distribution family is also sometimes referred to as the Lévy alpha-stable distribution.

- So if you use a stable distribution with finite variance, you could generate iid variables X and Z, and generate Y = a + bX + cZ. Then cov(Y, X) = cov(bX, X) = b×var(X), and var(Y) = b²var(X) + c²var(Z). Then corr(X, Y) = cov(X, Y) / [stddev(X)×stddev(Y)] = b×var(X) / sqrt{var(X)[b²var(X) + c²var(Z)]}. Set b and c to whatever values give you the desired corr(X, Y). Duoduoduo (talk) 16:04, 28 November 2012 (UTC)

- And the above gives you the two free parameters b and c, so you can pin down desired values of two things: var(Y) and corr(X, Y). Also the parameter a allows you to control the location of the Y distribution. Duoduoduo (talk) 16:09, 28 November 2012 (UTC)

- OR here: Draw N observations on X from, say, a uniform distribution. Now since the sequence in which these happened to be drawn does not alter the fact that they were drawn from a uniform distribution, you can shuffle these in any way you like into a data series Y that is also drawn from a uniform distribution. For example, using mod N arithmetic, you could generate Y_n = X_{n−k} for any lag value k. Then, experimenting with different values of k, you could find the one that gives you a corr(X, Y) closest to what you want. Whether or not this would be appropriate for the use to which you're going to put the simulated data would depend on what that use is.

- In fact, suppose you do the above with data drawn from an infinite-variance distribution. Since you're only drawing a finite number of data points, your sample variances and covariances will be finite, so the above procedure still applies. Duoduoduo (talk) 16:43, 28 November 2012 (UTC)

- You could ask at the Computing Reference Desk whether there are any packages that would have a simple command to draw from, say, a multi-uniform distribution. Duoduoduo (talk) 16:50, 28 November 2012 (UTC)

- As for situations with infinite variances when it can't be finessed with a finite sample covariance, our article Covariance#Definition says

- The covariance between two jointly distributed real-valued random variables x and y with finite second moments is defined as.... [bolding added]

- And I don't see any way to get around that boldfaced restriction by limiting ourselves to one particular definition that doesn't actually involve variances. So regarding your question "How does correlation work in such cases?", I think the answer is that it doesn't -- you can't generate or even conceptualize two infinite-variance random variables with a covariance of rho. Duoduoduo (talk) 17:33, 28 November 2012 (UTC)

- However, when variances and covariances don't exist, there can still be measures of the variables' dispersions and co-dispersion. See elliptical distribution -- in that class of distributions, in cases for which the variance does not exist, the on-diagonal parameters of the matrix are measures of dispersion of individual variables, and the off-diagonal parameters are measures of co-dispersion. Duoduoduo (talk) 17:45, 28 November 2012 (UTC)

- A little more OR here: Take a look at Normally distributed and uncorrelated does not imply independent#A symmetric example. It points out that if X is normally distributed, then so is Y=XW where P(W=1) = 1/2 = P(W= –1) and where W is independent of X; but cov(X,Y) = 0. I think this preservation of the form of the distribution holds for any symmetric distribution, not just the normal. And I think that if you specify P(W=1) = p and P(W= –1) = 1–p, then as p varies from 0 to 1/2 to 1 the correlation between X and Y varies from –1 to 0 to 1. If this is right, then this is probably the answer to your question for all finite-variance symmetric distributions. Duoduoduo (talk) 18:20, 28 November 2012 (UTC)

- X is centered on zero of course. Duoduoduo (talk) 18:40, 28 November 2012 (UTC)

- All very interesting suggestions, thank you. I will have a look into these. Am I correct in interpreting your 'stable distribution' suggestion with the X, Y and Z as being something that applies only for sufficiently large N, as in the case of the central limit theorem? I am also curious if you have any opinion on the usefulness of copulas for problems such as these. I don't properly understand copulas and am unsure whether they are necessary for my problem. Thorstein90 (talk) 23:50, 28 November 2012 (UTC)

- I don't know much of anything about copulas, but from a glance at the article I don't see how they could help.

- An analog to the central limit theorem would involve adding together a sufficiently large number of random variables. That's not involved here -- you can just add two random variables from a stable distribution and end up with the sum being of the same distribution. Now if by N you mean the number of simulated points, then even for X and Z, as well as for Y, if N is small then a plot of your simulated points won't look like it's from that distribution, even though you know it is. But for a large number of simulated points, a plot of the X data, the Z data, or the Y data will indeed give a good picture of the underlying distribution. Duoduoduo (talk) 17:34, 29 November 2012 (UTC)

- This describes some interesting methods. You might want to ask on stats.stackexchange.com and if you don't get an answer there, then mathoverflow.net. I don't actually understand what copulas are, but I think they have more to do with the reverse problem: you have an observed joint distribution of several correlated variables, and you want to decompose it to the component variables. 67.119.3.105 (talk) 08:30, 30 November 2012 (UTC) Added: here is an SE thread with some other suggestions. 67.119.3.105 (talk) 08:37, 30 November 2012 (UTC)

- The first of 67.119.3.105's links doesn't, as far as I can see, output variables that both have the same given type of distribution as each other. That objection also applies to some of the answers at his second link here, but I think the answer with the big number 4 beside it may be relevant -- not sure. Duoduoduo (talk) 18:47, 30 November 2012 (UTC)
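Duoduoduo's two-parameter construction (16:04 and 16:09, 28 November) can be solved explicitly. Assuming, as stated there, that X and Z are iid, write σ² for their common variance, and let ρ and τ² (symbols introduced here for illustration) be the desired corr(X, Y) and var(Y):

```latex
\operatorname{corr}(X, Y) = \frac{b\,\sigma^2}{\sqrt{\sigma^2\,(b^2\sigma^2 + c^2\sigma^2)}} = \frac{b}{\sqrt{b^2 + c^2}} = \rho
\operatorname{var}(Y) = (b^2 + c^2)\,\sigma^2 = \tau^2
\Longrightarrow\quad b = \frac{\rho\,\tau}{\sigma}, \qquad c = \frac{\sqrt{1 - \rho^2}\,\tau}{\sigma} \quad (c \ge 0)
```

With τ = σ this gives b = ρ and c = √(1 − ρ²), which is exactly the X3 = ρX1 + √(1 − ρ²)X2 recipe from the original question.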
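Duoduoduo's reordering suggestion (the "OR here" comment on 28 November) can be tried directly: keep N uniform draws, form Y by a cyclic lag, and search over k. This is a minimal sketch with hypothetical function names. One thing it makes visible: for independent draws, every nonzero lag gives a sample correlation close to zero (typically of order 1/√N), while k = 0 gives a correlation of 1, so a pure lag search can only reach small target correlations.

```python
import math
import random


def sample_corr(u, v):
    """Pearson sample correlation of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    su = math.sqrt(sum((p - mu) ** 2 for p in u))
    sv = math.sqrt(sum((q - mv) ** 2 for q in v))
    return cov / (su * sv)


def lagged(xs, k):
    """Cyclic lag: result[n] == xs[(n - k) % len(xs)]."""
    k %= len(xs)
    return xs[len(xs) - k:] + xs[:len(xs) - k]


def best_lag(xs, target):
    """Try every lag k in 0..N-1; return (k, corr) with corr closest to target."""
    best = (0, 1.0)  # k = 0 reproduces X itself, so its correlation is 1
    for k in range(1, len(xs)):
        r = sample_corr(xs, lagged(xs, k))
        if abs(r - target) < abs(best[1] - target):
            best = (k, r)
    return best


rng = random.Random(4)
xs = [rng.uniform(0.0, 1.0) for _ in range(400)]
k, r = best_lag(xs, 0.05)
print(k, r)  # some nonzero lag with a small sample correlation
```

Every lagged series is still an exact rearrangement of the uniform draws, so the marginal is untouched; the cost is that the attainable correlations are limited to sampling noise around zero.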
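On the elliptical-distribution point (17:45, 28 November) and the multivariate t mentioned by Thorstein90: a bivariate t can be built as a normal scale mixture, i.e. correlated normals divided by a shared factor √(W/ν) with W ~ χ²(ν). For ν > 2 the correlation equals the scale-matrix entry ρ; for ν = 1 (a bivariate Cauchy) no correlation exists, but ρ still governs co-dispersion. For example, because both coordinates are divided by the same positive number, the probability that they share a sign is the same as for the underlying normals, 1/2 + arcsin(ρ)/π. This is a sketch under those assumptions; function names are hypothetical, and it is not the R function Thorstein90 refers to.

```python
import math
import random


def sample_corr(u, v):
    """Pearson sample correlation of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    su = math.sqrt(sum((p - mu) ** 2 for p in u))
    sv = math.sqrt(sum((q - mv) ** 2 for q in v))
    return cov / (su * sv)


def bivariate_t(rho, nu, n=50_000, seed=3):
    """Draw n pairs from a bivariate t with scale matrix [[1, rho], [rho, 1]].

    Correlated normals (Cholesky factor [[1, 0], [rho, sqrt(1 - rho^2)]]) are
    divided by a shared sqrt(W / nu), W ~ chi-square(nu). nu = 1 is a bivariate Cauchy.
    """
    rng = random.Random(seed)
    s = math.sqrt(1.0 - rho * rho)
    pairs = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        g1, g2 = z1, rho * z1 + s * z2
        w = rng.gammavariate(nu / 2.0, 2.0)  # chi-square(nu) = Gamma(shape nu/2, scale 2)
        scale = math.sqrt(nu / w)
        pairs.append((g1 * scale, g2 * scale))
    return pairs


def same_sign_fraction(pairs):
    """Share of pairs whose two coordinates have the same sign."""
    return sum(1 for x, y in pairs if x * y > 0) / len(pairs)


rho = 0.6
t30 = bivariate_t(rho, nu=30)
print(sample_corr([x for x, _ in t30], [y for _, y in t30]))  # near rho (nu > 2)
cauchy = bivariate_t(rho, nu=1)
print(same_sign_fraction(cauchy), 0.5 + math.asin(rho) / math.pi)  # both near 0.705
```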
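Duoduoduo's sign-flip idea (18:20 and 18:40, 28 November) can be checked numerically. If X is symmetric about zero and W = ±1 is independent of X with P(W = 1) = p, then Y = XW has the same distribution as X, and E[XY] = E[W]·E[X²] = (2p − 1)·var(X), so corr(X, Y) = 2p − 1; to target a correlation ρ, take p = (1 + ρ)/2. The sketch uses Uniform(−1, 1) as an example symmetric distribution; the function names are hypothetical.

```python
import math
import random


def sample_corr(u, v):
    """Pearson sample correlation of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    su = math.sqrt(sum((p - mu) ** 2 for p in u))
    sv = math.sqrt(sum((q - mv) ** 2 for q in v))
    return cov / (su * sv)


def sign_flip_pair(rho, n=100_000, seed=2):
    """Return X ~ Uniform(-1, 1) and Y = X*W, where W = +1 with probability
    p = (1 + rho)/2 and -1 otherwise, drawn independently of X."""
    p = (1.0 + rho) / 2.0
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [x if rng.random() < p else -x for x in xs]
    return xs, ys


xs, ys = sign_flip_pair(0.6)
print(sample_corr(xs, ys))  # close to 0.6
```

Note that |Y| = |X| in every draw, so this joint distribution is quite special among those with these marginals; as with the other suggestions, whether that matters depends on what the simulation is for.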

Request for Help Deriving the Formula Describing the Probability that a Set of Event Outcomes Might Occur for Make-Up Homework

Hello again, could somebody help me figure out how to derive the actual equation for probability so that I can get started on some homework that I'm doing to catch up in my Probability and Statistics class? I would like to directly input the event or events and sample space associated with each probability I need to calculate for my homework (equations with fillable variables, after all, tend to trump those with words in mathematics), but my textbook, Elementary Statistics: Picturing the World, Fourth Edition, by Ron Larson and Betsy Farber, only gives me the formula in words, as displayed below using my own conventions:

P(E) = [Number of outcomes in event] / [Total number of outcomes in sample space]

Curiously, this same textbook writes the events whose probability one could find with this equation in set notation. For example, the event of rolling an even number on a standard six-sided die is written as follows:

[Rolling an even number] = {2, 4, 6}

Similarly, by my book's convention, the sample space for an experiment investigating how many even numbers one could roll with this six-sided die simply contains every value one could obtain from rolling the die:

[Sample space over a 6-sided die] = {1, 2, 3, 4, 5, 6}

It was obvious to me that one could not input the outcome sets given for each event and sample space in each assigned probability problem directly into the probability formula given by my text. Therefore, I assumed that I must convert this formula, which unnecessarily requires one to input the number of elements in each given outcome set, into one that accepts the outcome sets themselves as inputs. The number of elements in each outcome set is, of course, the cardinality of that set. If I assign the outcome set whose cardinality gives [Number of outcomes in event] to the set A, and the outcome set whose cardinality gives [Total number of outcomes in sample space] to the set S, then I can formalize these definitions before substituting the sets A and S for an event and the sample space that contains it. So:

[Number of outcomes in event] = |A|, [Total number of outcomes in sample space] = |S|

Plugging these two equations into the original formula given by my textbook for finding the probability of an event results in the following formula, which now accepts sets as arguments just like I wanted:

I now have the equation I was looking for in the first place. However, the sample space acts as a limit on this function's domain such that , and what my book calls the "Range of Probabilities Rule" likewise limits the range of this function to between 0 and 1, inclusive. I would like to explicitly define these limits which exist for the formula for finding probability if possible. The article on functions provides a notation which would allow me to do so, but it unfortunately spans multiple lines as shown below:

So, here's my question: does a single-line, f(x) notation that expresses the same information as the above mapping exist? I tried to create one myself…:

…but neither this nor the same function with the outermost parentheses from each side of the equation replaced with the brackets of set notation seems right to me. Could somebody help me out? Thanks in advance, BCG999 (talk) 20:00, 28 November 2012 (UTC).
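On the question of a single-line notation: one common convention (offered as a suggestion, not the textbook's notation) is to make the sample space a subscript, so that it is a fixed parameter rather than an argument, and to state the domain restriction after a comma. For a finite, non-empty sample space of equally likely outcomes, the range restriction then needs no separate statement, because E ⊆ S forces 0 ≤ |E| ≤ |S|. Here \mathcal{P}(S) denotes the power set of S:

```latex
P_S(E) = \frac{|E|}{|S|}, \quad E \subseteq S
P_S \colon \mathcal{P}(S) \to [0, 1], \quad E \mapsto \frac{|E|}{|S|}
P_{\{1, 2, 3, 4, 5, 6\}}(\{2, 4, 6\}) = \frac{3}{6} = \frac{1}{2}
```

The second line is the multi-line map notation from the function article written on a single line.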

- Not sure that I fully understand your question, but here goes. I think your function definition

- looks just fine. You could call the right hand side f(|A|, |S|) so

- Then

- f: N* × N → [0, 1] ∩ Q

- (|A|, |S|) ↦ |A| / |S|

- where Q is the set of rationals, N refers to the set {1, 2, ...}, and N* × N refers to the set of pairs (a, b) in ({0} ∪ N) × N such that a ≤ b. Probably a better notation exists than N* × N. I don't see how you could collapse the last two lines into a single line, though. Duoduoduo (talk) 18:22, 29 November 2012 (UTC)

- Hey, Duoduoduo; didn't you mean that I could call the left-hand side ? However, I don't think that this notation could be the basis of what I want to write down as part of my homework because I want the function to take the sets and as its inputs, not their cardinalities and . This is because the function takes these cardinalities internally all by itself. Therefore, shouldn't my basic function use the letter to represent the input event's set of outcomes instead of the letter while also taking the sample space that contains this input event as an input? If I were to make these changes, then the basic function–the one that you said looked fine–should read unless the standard notation used to denote functions needs modifications to take sets as arguments instead of variables. Also, I'm a bit confused about how you mapped the function's domain to its range because I'm not quite sure that I understand how you defined the set denoted by and the product of the sets and . I know that you could have explained that the set of the elements is equivalent to the set of all natural numbers except, but you've lost me after that. As for needing to collapse this map-based function definition into one using the standard notation, I need to do this because my Probability and Statistics class is actually a high school one, which means that my teacher wouldn't expect me to have ever even seen the former notation. Maybe we could express the limits that I need to place on the probability formula's domain and range as conditions on the function to the right of it and a comma? I really need to do this because I'm trying to understand where all of this probability stuff comes from and my book's original, word-based definition didn't really help me that much at all to do this so that I could make sure I have all of the formulas that I need for my homework assignment before I begin.

- P.S.: Is it okay if I fixed how some of your math looks? I made it LaTeX, like mine. Also, your name prompted a little Pokémon-related non sequitur, by the way…

- I'm sure you didn't realize it, but it's bad etiquette to change anyone's post. As for the Pokémon-related non sequitur, I'm from the older generation and, believe it or not, have no idea what Pokémon is. I'd be curious to know what the non sequitur is.

- The notation P(E) conventionally means the probability (P for probability) of event E happening. So I think the notation P(A, S) or P(|A|, |S|) is not good. That's why I used f. P(A, S) would mean the probability of both A and S occurring, which is not what is intended.

- You say "I'm not quite sure that I understand how you defined the set denoted by and the product of the sets and .". The notation is not a product -- it means that we're talking about a two-dimensional space we're mapping from; one of the dimensions is N* for one of the arguments of the function, and the other dimension is N for the other argument.

- I'm kind of reluctant to try my hand at helping out with this any more, for two reasons: your question really pushes the boundaries of what I understand about set notation, and we're not really supposed to be helping out with homework anyway. I'm impressed, though, by the sophistication of your high school homework! Duoduoduo (talk) 22:53, 29 November 2012 (UTC)

- I'm sorry I changed your math to look like mine, Duoduoduo. At the time I really only considered how much better this thread's math would all look if its styling was consistent. I also didn't realize that you had meant to change to so as to clarify the function's intentions. Please forgive me for my ignorance and naïveté, and thank you for explaining why you changed my function so that it would not take the probability of both the event and the sample space because of how it took both inputs to be events, which, as you guessed, is exactly what I did not want it to do! What I really intended, of course, was for this formula to take the probability of an event as an argument of a function that maps the sample space to the interval , which you explicitly extended to include all real numbers . Unfortunately, the way you expressed the limit which I wanted to act on the domain of the derived probability function initially confused me because I thought that you had somehow changed the limits which I had originally said that I wanted to place on the probability function 's domain and range. After all, the domain specified by the set didn't seem equivalent to the domain specified by the set because the sample space is a one-dimensional set having as its members every outcome of all events given by each problem in my homework assignment such that is the number of events involved in each of these said problems and is a set of indices which I can use to access all events defined by each problem in my homework assignment such that et cetera; whereas the set contains all integer points on the two-dimensional Euclidean space. 
Since each problem in my homework assignment may specify a different sample space by defining different sets of outcomes that make up each such problem's multiple, different, mutually-exclusive events , what I really wanted to do in the first place was find a way to input the sample space into my probability function without making it an argument of this function. If all of this confuses you, could you maybe ask somebody else who might be able to continue helping me with my homework, which this reference desk does allow so long as the user in question who asks for help shows significant effort in having already attempted answering their own question?

- Thanks, BCG999 (talk) 23:46, 30 November 2012 (UTC)

- P.S.: Your username reminded me of the names of the Pokémon Doduo and Duosion.

- I'm bothered by your notation P(A). As I understand it, A is the set of all possible affirmative outcomes and S is the set of all possible outcomes. Since A is not an outcome itself, you can't talk about its probability. E is then the event in which an outcome is in the affirmative set A. So I think the notation would be P(E). Equivalently, if you let O be the outcome of a drawing, then P(E) is defined as P(O ∈ A). Duoduoduo (talk) 13:29, 1 December 2012 (UTC)

- No, Duoduoduo; you misunderstand: is a general instance of the even more general event object such that I can work with multiple events if a problem requires me to do so. So, the function can take the event as an input because both of the events and are…well, events made up of all of the outcomes given by the problem to be members of the event. In other words, it makes sense to me that I can define the events as the set of their members, the outcomes . For example, an event comprises all of the even outcomes that one could possibly obtain by rolling a standard, six-sided die. I gave this event in my initial post as an example of how my textbook denotes events:

- As you can see, I'm defining each instance of this generic object to be the set of all outcomes making up this said event and/or of type , if I may use some computer science terminology to clarify my math even though it won't actually come into play when I do each problem in my homework assignment. I initially defined this object explicitly such that it would guarantee any input event to be a member of the power set of the sample space by attempting to limit the domain of my probability function (or , if you want to make the function's input more abstract; it doesn't matter) to this power set of the given sample space . The only problem is that I don't know how to limit the domain of to the sample space while still allowing this same function to use this sample space to compute the probability of the input event . In essence, knowing how to do this would allow me to do what I basically have been wanting to do since the beginning of this discussion: write a function that returns the probability of any input object of type 'event ()' given that this input event object is a subset of the sample space such that the function's return value lies within the interval between the real numbers 0 and 1, inclusive. Note as well that the input event object is a subset because it is a member of the power set of the sample space over which one must calculate the probability of this input event object . Now do you understand what I've been trying to ask this entire time, Duoduoduo?

- Here's hoping we get this right,

- BCG999 (talk) 18:03, 1 December 2012 (UTC)

- Sorry, I can't understand your most recent post at all. Since this seems to be beyond me, I'm afraid I can't help any more. But I wish you success in getting it figured out. Duoduoduo (talk) 21:22, 1 December 2012 (UTC)

- Maybe it would help you or whoever else is interested in helping me with this problem if I told you that I'm trying to write another function which restricts my original function, which I now clarify below through a few slight modifications which change the domain from the power set of the sample space to the set of all real numbers and remove the sample space from the mapping…:

- …, to the domain defined by the members of the sample space as explained here by the article on function domains, like so:

- The only problem is that, since this is a high-school Probability and Statistics class for which I'm doing my homework, my teacher wouldn't expect me to know how to define functions in terms of maps; in fact, I had never seen the map-based way of defining a function before I read about it in the article on functions. That's why I wanted to know if I could define a probability function restricted to the sample space of relevance to each problem in my homework that takes an event (or , if you're only using a single event; each of my problems wants me to solve for multiple probabilities in the context of a probability distribution) as its only argument while still allowing me to use this restricted domain to calculate its result in standard, notation. So, what do I do?

- BCG999 (talk) 20:19, 4 December 2012 (UTC)

- Wait a minute, I think I just figured this out myself! To verify my thinking, could I say that the following function:

- …is actually equivalent to when the event is either a subset of the sample space or a member of the power set of the sample space ?

- Here's hoping I'm right,
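To make the set-based version concrete, here is a small Python sketch (not from the textbook; the function names are hypothetical) that takes the event and the sample space as sets, enforces E ⊆ S, and returns an exact fraction. It assumes a finite sample space of equally likely outcomes. The second helper fixes the sample space once and returns a function of the event alone, which is one way to supply the sample space without making it an argument of the final function, as asked above.

```python
from fractions import Fraction


def probability(event, sample_space):
    """Classical probability |E| / |S| for a finite sample space of equally likely outcomes.

    Both arguments are sets. Requiring event <= sample_space (subset) also
    guarantees that the result lies in [0, 1].
    """
    if not sample_space:
        raise ValueError("the sample space must be non-empty")
    if not event <= sample_space:
        raise ValueError("the event must be a subset of the sample space")
    return Fraction(len(event), len(sample_space))


def probability_on(sample_space):
    """Fix the sample space once; return a one-argument function of the event (like P_S)."""
    def p(event):
        return probability(event, sample_space)
    return p


die = {1, 2, 3, 4, 5, 6}
p_die = probability_on(die)
print(p_die({2, 4, 6}))  # 1/2
print(p_die({5, 6}))     # 1/3
```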

Calculating an invariant ellipse

Hi. Suppose we have a linear map on the plane, given by a 2x2 matrix, which has complex eigenvalues and determinant equal to 1. The invariant sets in the plane under this transformation - if I'm not mistaken - should be ellipses. Does anyone know the easiest way to find the equations of these ellipses, given the matrix of the transformation? Thanks in advance. -68.185.201.210 (talk) 23:19, 28 November 2012 (UTC)
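A compact way to get the invariant conics, different from the coefficient matching in the replies below: for any 2×2 matrix M with det M = 1, the quantity q(v) = det[v, Mv] (the determinant of the matrix whose columns are v and Mv) is unchanged when v is replaced by Mv, because det[Mv, M²v] = det M · det[v, Mv]. Writing v = (x, y) and M = [[a, b], [c, d]] gives q = c x² + (d − a) xy − b y², so the invariant curves are c x² + (d − a) xy − b y² = const. Their discriminant (d − a)² + 4bc equals (a + d)² − 4, which is negative exactly when the eigenvalues are non-real, and then the curves are ellipses. The function names are illustrative.

```python
def invariant_form(a, b, c, d, tol=1e-12):
    """Coefficients (e, f, g) of a quadratic form e*x^2 + f*x*y + g*y^2 that is
    unchanged by M = [[a, b], [c, d]] when det M = 1.

    Uses q(v) = det[v, Mv] = c*x^2 + (d - a)*x*y - b*y^2, multiplied by -1
    if needed so that the x^2 coefficient is non-negative.
    """
    if abs(a * d - b * c - 1.0) > tol:
        raise ValueError("this construction assumes det M = 1")
    e, f, g = c, d - a, -b
    if e < 0:
        e, f, g = -e, -f, -g
    return e, f, g


def q(coeffs, x, y):
    """Evaluate e*x^2 + f*x*y + g*y^2."""
    e, f, g = coeffs
    return e * x * x + f * x * y + g * y * y


a, b, c, d = 1, 1, -1, 0          # det = 1, trace = 1, so the eigenvalues are non-real
coeffs = invariant_form(a, b, c, d)
e, f, g = coeffs
print(coeffs)                      # (1, 1, 1): the ellipses x^2 + x*y + y^2 = const
print(f * f - 4 * e * g < 0)       # True: negative discriminant, so ellipses
```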

- If the matrix is and the invariant ellipse family is , for any the LHS must be equal for and , thus the coefficients must be equal. By solving (and letting ) we get

- -- Meni Rosenfeld (talk) 08:51, 29 November 2012 (UTC)

- Thanks a million! -68.185.201.210 (talk) 15:14, 29 November 2012 (UTC)

- You might be interested to look at Matrix representation of conic sections and Conic section#Matrix notation. Duoduoduo (talk) 18:41, 29 November 2012 (UTC)

- Probably just brainlock on my part, but I don't understand Meni Rosenfeld's answer. If we write = R² and and equate coefficients, we get e=a, f=b+c, and g=d. (Generally b and c are taken as equal.) Duoduoduo (talk) 18:52, 29 November 2012 (UTC)

- I got the same answer as Meni Rosenfeld, but not using the equation you wrote down. I set , and then equated coefficients in , which I don't think is the same thing. This answer also agrees with the actual ellipses I'm looking at in my application, so I'm confident that it's right. -129.120.41.156 (talk) 23:23, 29 November 2012 (UTC) (O.P. at a different computer)

- Thanks. I misunderstood what the matrix was. Duoduoduo (talk) 23:29, 29 November 2012 (UTC)

- So, the other matrix you wrote down would be.... some kind of quadratic form associated to the transformation? Is there a nice bijection there, or do we get equivalence classes on one side? -68.185.201.210 (talk) 03:12, 30 November 2012 (UTC)

- There's one more degree of freedom in (2D) linear transformations than quadratic forms; changing b and c while keeping fixed is equivalent. But other than that, as far as I know the quadratic form represented by a rectangle of numbers has very little to do with the linear transformation represented by the same. -- Meni Rosenfeld (talk) 09:00, 30 November 2012 (UTC)

- (1) By Meni Rosenfeld's solutions for e and g in terms of f, note that if sgn(b)=sgn(c) then you get a hyperbola, not an ellipse. (2) If b=0=c you get a rectangular hyperbola. Duoduoduo (talk) 15:35, 30 November 2012 (UTC)

- See Conic section#Discriminant classification. Duoduoduo (talk) 15:38, 30 November 2012 (UTC)

- Note, however, that if the eigenvalues of the matrix are nonreal (as the OP hints at with the term "complex"), then it must be the case that bc < 0 and the solutions are ellipses. -- Meni Rosenfeld (talk) 20:26, 1 December 2012 (UTC)

- I interpreted complex eigenvalues as simply being in the complex plane but not necessarily on the real line. But I don't think that matters. I get the characteristic equation to be hence , with eigenvalues half of , and I can't see how this requires bc < 0 for the eigenvalues to be non-real. (E.g. consider the case a = 1.2, d = 1/1.1. Since ad-bc=1, bc=ad-1, which in my example is >0, yet the eigenvalues are complex.) So we can have non-real eigenvalues, bc>0, and a hyperbola.

- Note that even if bc<0, the conic could still be a hyperbola if the eigenvalues are real, since an ellipse requires ; using your solutions for e and g gives an ellipse iff , which does not always hold when bc < 0 (but does hold if the eigenvalues are non-real). Duoduoduo (talk) 22:18, 1 December 2012 (UTC)

- If then and the eigenvalues are real.

- If the eigenvalues are nonreal, the discriminant must be negative, . From this subtract to get so and .

- Put differently, for a given sum , is largest when , and then ; since , . -- Meni Rosenfeld (talk) 10:44, 2 December 2012 (UTC)

- Thanks. Bad mental math on my part. Maybe I should buy a calculator. Duoduoduo (talk) 12:41, 2 December 2012 (UTC)

- Good idea, it makes life so much easier. In the meantime, Win+R calc is also handy. -- Meni Rosenfeld (talk) 12:59, 2 December 2012 (UTC)

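A quick numeric check of the exchange above, assuming (as in Duoduoduo's example) that det A = ad − bc = 1, so the characteristic equation is λ² − (a + d)λ + 1 = 0. The sketch evaluates the example a = 1.2, d = 1/1.1 (with b = 1 as a hypothetical choice and c set so that ad − bc = 1), and scans a grid of (a, d) to confirm that a negative discriminant always comes with bc < 0. The function names are illustrative only.

```python
import cmath


def eigen_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] and the discriminant of
    lambda^2 - (a + d) * lambda + (ad - bc) = 0."""
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    root = cmath.sqrt(disc)  # complex square root, so a negative disc is fine
    return (trace + root) / 2, (trace - root) / 2, disc


def duoduoduo_example():
    """a = 1.2, d = 1/1.1; b = 1 is a hypothetical choice, c makes ad - bc = 1."""
    a, d, b = 1.2, 1 / 1.1, 1.0
    c = (a * d - 1) / b  # so bc = ad - 1, which is > 0 here
    return eigen_2x2(a, b, c, d)


def nonreal_implies_bc_negative(steps=41, lo=-2.0, hi=2.0):
    """Grid over (a, d) with b = 1 and c = ad - 1 (so det = 1).

    Returns True if every clearly negative discriminant comes with bc < 0."""
    for i in range(steps):
        a = lo + (hi - lo) * i / (steps - 1)
        for j in range(steps):
            d = lo + (hi - lo) * j / (steps - 1)
            b = 1.0
            c = a * d - 1
            _, _, disc = eigen_2x2(a, b, c, d)
            if disc < -1e-9 and not b * c < 0:
                return False
    return True
```

For the example, the discriminant (a + d)² − 4 is about 0.45 > 0, so both eigenvalues are real, which is consistent with Meni Rosenfeld's correction.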

November 29

Reflected image of a point in a plane, R^3

Hello. What is the quickest analytic method to find the reflected image P' of a point P(x0,y0,z0) across an arbitrary plane π: ax+by+cz+d=0 in R³? I solved the problem by picking an arbitrary point on π then considering vectors and projections but this is a lengthy and ugly process. Thanks. 24.92.74.238 (talk) 02:58, 29 November 2012 (UTC)

- You want to find the point which is the same distance from the plane, on the far side, along the normal to the plane through the original point ? If so, once you find the normal projection of the point onto the plane, finding the point on the opposite side is almost trivial. If point 0 is the original, point 1 is its normal projection onto the plane, and point 2 is the reflected point behind the plane, find point 2 as follows:

x2 = x0 - 2(x0 - x1); y2 = y0 - 2(y0 - y1); z2 = z0 - 2(z0 - z1)

- Yes; see http://www.9math.com/book/projection-point-plane for a more explicit answer. Looie496 (talk) 19:47, 29 November 2012 (UTC)
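The projection-then-reflect recipe above can be sketched in a few lines. It uses the standard foot-of-perpendicular formula P1 = P0 − t·(a, b, c) with t = (a·x0 + b·y0 + c·z0 + d)/(a² + b² + c²), so the whole thing collapses to P' = P − 2t·(a, b, c). The function name is a hypothetical choice for illustration.

```python
def reflect_point_in_plane(p, plane):
    """Reflect p = (x0, y0, z0) across the plane a*x + b*y + c*z + d = 0."""
    x0, y0, z0 = p
    a, b, c, d = plane
    nn = a * a + b * b + c * c
    if nn == 0:
        raise ValueError("the normal (a, b, c) must be nonzero")
    t = (a * x0 + b * y0 + c * z0 + d) / nn
    # point 1: normal projection (foot of the perpendicular) onto the plane
    x1, y1, z1 = x0 - t * a, y0 - t * b, z0 - t * c
    # point 2: same distance on the far side, x2 = x0 - 2(x0 - x1), etc.
    return (x0 - 2 * (x0 - x1), y0 - 2 * (y0 - y1), z0 - 2 * (z0 - z1))


print(reflect_point_in_plane((1, 2, 3), (0, 0, 1, 0)))  # mirror in the plane z = 0
```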

November 30

the R^2 of (X,Y) aggregated samples is much larger than the R^2 of individual samples of (X,Y)

I collect (or calculate) velocity and curvature data for about 15 fruit flies of a certain genotype per experiment. Each experiment samples new data points at some frequency for 8000 frames, and I have N experiments. Also, these are actually log velocity and log curvature data so that I can fit a power law, but I'm just going to call them v and k.

If I aggregate the data, i.e. concatenate the velocity time series for one fly with another fly's time series, and then with those of all the flies of a genotype (say, 135), and do the same for the curvature time series, so that the nth element of the concatenated velocity array corresponds to the nth element of the concatenated curvature array, I get a pretty high R^2: 0.694.

But when I take the average of the individual R^2's, the R^2 average is as low as 0.193.

Roughly the same magnitude of difference happens with every other genotype. How do I explain this? I don't think it's Simpson's Paradox, which I don't think would give a higher R^2 if there were two different distributions, and I don't see two different distributions when I look at the log-log plot. John Riemann Soong (talk) 05:30, 30 November 2012 (UTC)

- Can you give us an example ? I don't understand how you can concatenate different flies' data together. Isn't there a discrepancy at the joins (a discontinuity in the curve) ? StuRat (talk) 05:39, 30 November 2012 (UTC)

- I'm not plotting against time. I have the following pseudocode (summarising)

    speed = []; kurv = [];
    for p = 1:numExperiments        % numExperiments, numFlies: hypothetical counts
        for n = 1:numFlies(p)       % flies in experiment p
            % concatenate along the 2nd dimension, i.e. join arrays end to end
            speed = cat(2, speed, cor(n,p).logx);
            kurv  = cat(2, kurv,  cor(n,p).logy);
        end
    end

- The result is that I have two very long arrays with all the time series joined together. However, I am plotting the nth element in one array (i.e. velocity) against the nth element of the other (i.e. curvature). The order is kept because both arrays were joined in the same way. For example, say I have the following velocity and curvature arrays taken in time, for a first fly and a second fly:

- k1 = [a b c d] v1 =[e f g h]

- k2 = [i j k l] v2 =[m n o p]

- the nth element of k1 (the first fly) corresponds to the same moment of time as the nth element of k2, v1 or v2. i.e. a, e, i, m all represent data taken at some common frame.

- When we join the arrays together we get k = [a b c d i j k l] and v = [e f g h m n o p]. The first element in k corresponds to the first element in v and represents data from fly 1 at that time. The 5th element of k corresponds to the 5th element in v, and is the data for fly 2 at the same time. Correspondence is maintained. You can add flies from other experiments (i.e. data taken on a different day) and the correspondence is maintained.

- It doesn't matter that there's a time discontinuity, because we are not plotting against time. The neighborhood of some point (k_x,v_x) on the collective plot might have points from times spaced far apart, or from a different experiment. We just have to ensure that v(n) and k(n) represent the same fly at the same moment. Then we can see how correlated that fly's curvature is to its velocity. Repeat for all n, which might be the same fly at a different moment, a different fly at the same moment, or a different fly at a different moment.

- I've done autocorrelation and Fourier transform plots, plots of v(t) against v(t+1) or v(t+k) (a recurrence plot). In general, two data points separated in time for the same fly are not very well-correlated, i.e. the velocity at frame 1501 has very little predictive power for the velocity at frame 1512. Thus I think the time discontinuities aren't of a big concern and we can ignore the correlation of the observed variables with time. John Riemann Soong (talk) 06:09, 30 November 2012 (UTC)

- I think I now understand your concern. There would be a discontinuity if each fly (or each experiment) sampled from a different distribution. However when I look at the aggregate plots, the data is very smooth, and I don't see any apparent subdistributions. (I suppose a quantitative way would be to run an ANOVA. But surely running an ANOVA for the time series of 135 flies would make it likely to report a low p-value?) When I compare data from different genotypes, then subdistributions emerge. John Riemann Soong (talk) 06:30, 30 November 2012 (UTC)

- It would be helpful if you'd link to the concept you're referring to. I'm guessing that by "R^2" you're referring to the coefficient of determination, which seems to measure "goodness of fit". I'm also supposing that you mean to linear model. I'm not a statistician, but I can see at least one potential problem (and I'm hoping that simply dealing with data sets of different sizes is not a contributor). Even though you're dealing with one genotype, you're dealing with distinct flies within the genotype. There may be random variation in the phenotype from fly to fly: one fly may be slightly faster, and accelerate more rapidly, than another. This will produce a different distribution for each fly. As soon as the data points are not drawn from the same distribution (each fly will have its own), you should start seeing effects such as you are observing. I would expect the large discrepancy in the values that you observe only if there is relatively little moment-to-moment correlation between velocity and acceleration, but a readily measurable difference between the behaviour of individual flies within a genotype. If you take the average velocity and average acceleration for each fly (i.e. you're not looking at correlation for the single fly at all), and determine the correlation between the two sets of averages over the flies of a genotype, I am guessing you'll find this is where your large R2 comes from. — Quondum 08:38, 30 November 2012 (UTC)

- Well yes, I'm aware the phenotype will be slightly different, but this is measuring from the same population (no one said that all the members of the population had to be identical!) Also, the individual linear estimators (slope and intercept) when averaged out, are very close to the aggregately-calculated slope and intercept. The 95% confidence intervals for the slope and intercept (where one sample = one fly's slope and intercept, and the population being all the flies) are small enough that I can cleanly separate genotypes with different slopes and intercepts. The 95% confidence interval for data containing time series data (both individual and aggregate) isn't very useful I think, but I have calculated it, with the result that the 95% confidence interval is something on the order of 0.002 for say, a slope and intercept of 0.39 and 0.66 (the 95% CI based on the population (not time series) average of the flies' slopes and intercepts are on the order of 0.02). 128.143.1.41 (talk) 13:15, 30 November 2012 (UTC)

- If I understand the problem correctly, in your concatenated series the first n data points are from one fly, then the next n data points are from another fly, etc. If there is a lot more variation across flies in the mean of whichever is your dependent variable than there is variation within any one fly of its observed dependent variable values, then your aggregated regression is trying to explain dependent variable variance that is mostly due to the former, and you'll get a good R squared with the aggregate data if the flies are included in say increasing order of the mean of the dependent variable. To see what I mean, look at a plot of the aggregated independent and dependent data (always a good idea in data analysis). Maybe in the lower left of the plot you have a cloud of fly 1's data points, then farther to the right and a lot higher up you have a cloud of the second fly's data, then still farther up and to the right you have another cloud, etc. If you hand draw an approximate regression line, you'll explain the vertical variable's variance very well just because you have a large slope coefficient.

- So try shuffling the order in which the flies appear in the aggregated series. If you try a large number of such shuffles, on average your aggregated slope should be about the same as the slope of the typical unaggregated regression.

- Also, you could try putting in a dummy variable for all but one of the flies, which has the effect of shifting all your clouds of data down to where they have the same vertical mean as each other. My guess is that all the dummy variables will be significant, meaning that your aggregated regression is inappropriate in the absence of the dummies.

- Given that you include dummies, you could also try a Chow test of whether all the slope coefficients are the same for all the flies. Duoduoduo (talk) 14:51, 30 November 2012 (UTC)

- Actually I messed up in my script. For the individual R^2's, the program was calculating the correlation between one type of curvature and another type! The new differences are more like 0.66 for the individual and 0.69 for the aggregate (for the intercept). 137.54.1.168 (talk) 15:07, 30 November 2012 (UTC)

- Are you John Riemann Soong ? If so, can we mark this Q resolved ? StuRat (talk) 23:19, 1 December 2012 (UTC)
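A small synthetic sketch (made-up numbers, not the flies' data) of the mechanism Quondum and Duoduoduo describe: if flies differ in their average x and y along a common trend, pooling the time series can give a much higher R² than the average per-fly R². Subtracting each fly's means gives the same slope as a regression with one dummy per fly, and the R² of what remains (the "within" R²) falls back to roughly the per-fly level. The parameters (20 flies, 500 frames, spread 5, slope 0.4) are arbitrary assumptions.

```python
import random


def r_squared(x, y):
    """Coefficient of determination of a least-squares line of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def simulate(n_flies=20, n_frames=500, spread=5.0, slope=0.4, seed=1):
    """Synthetic flies: each fly has its own mean x (varying between flies);
    within a fly, y = slope * x + unit Gaussian noise."""
    rng = random.Random(seed)
    flies = []
    for _ in range(n_flies):
        mean_x = rng.gauss(0.0, spread)
        xs = [mean_x + rng.gauss(0.0, 1.0) for _ in range(n_frames)]
        ys = [slope * v + rng.gauss(0.0, 1.0) for v in xs]
        flies.append((xs, ys))
    return flies


def compare(flies):
    """Return (mean per-fly R^2, pooled R^2, within R^2 after demeaning per fly)."""
    per_fly = [r_squared(xs, ys) for xs, ys in flies]
    pooled_x = [v for xs, _ in flies for v in xs]
    pooled_y = [v for _, ys in flies for v in ys]
    # Demeaning within each fly gives the same slope as one dummy per fly.
    dm_x, dm_y = [], []
    for xs, ys in flies:
        mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
        dm_x += [v - mx for v in xs]
        dm_y += [v - my for v in ys]
    mean_individual = sum(per_fly) / len(per_fly)
    return mean_individual, r_squared(pooled_x, pooled_y), r_squared(dm_x, dm_y)


print(compare(simulate()))
```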

Martingale system works if you have more than 50% of winning?

Yes, the Martingale betting system doesn't work in casinos; the article explains why. But let's imagine some game where you have more than a 50% chance of winning. Would the martingale work in this game? — Preceding unsigned comment added by 187.58.189.95 (talk) 13:01, 30 November 2012 (UTC)

- Inasmuch as it would produce the same expected value as any other method of play.--80.109.106.49 (talk) 18:15, 30 November 2012 (UTC)

- Let's provide a link: Martingale betting system. StuRat (talk) 00:04, 1 December 2012 (UTC)

- Also, you probably mean "winning", as "whining" is only a successful strategy for children. :-) StuRat (talk) 00:07, 1 December 2012 (UTC)

- Surely the OP meant "wining" as in "winning and celebrating by drinking wine". -- Meni Rosenfeld (talk) 20:44, 1 December 2012 (UTC)

- The martingale system is bad enough for games where the chance to win is <50%, but it's even worse for games where it is >50%. Its insanely high risk squanders your opportunity to profit from the game.

- Instead you should repeatedly bet small amounts, say 1% of your net worth. After enough times you're guaranteed to become rich (several assumptions apply, of course). -- Meni Rosenfeld (talk) 20:44, 1 December 2012 (UTC)

- Agreed, assuming that the payoff when you win is at least twice your bet, and also assuming that the game will continue forever. However, that last assumption is impossible, in the real world. That is, if the house is steadily losing money, then they can't continue doing so for long. They will either go bankrupt or come to their senses in short order. So, in that case, you might want to make larger bets, to get their money before somebody else does. StuRat (talk) 23:17, 1 December 2012 (UTC)

- How is the martingale even worse for games where the chance to win is >50%? I don't get it. Let's imagine a roulette wheel that has 0 and 00, where if you bet on even and the ball falls on 0 or 00 you win (and you get 2x the amount you bet, as with even/odd bets). How would using the martingale system in this case be bad, or worse than betting in some normal way (betting on numbers, black/red...)? 201.78.162.15 (talk) 02:41, 2 December 2012 (UTC)

- If you make 10 $1 bets in this game you will have the same expected earnings as with one $10 bet. But the variance is much smaller in the former case, because variance is quadratic in the bet size but linear in the number of independent bets. More variance means, among other things, more likelihood to go bankrupt before having a chance to profit from this money-making machine.

- The martingale system dictates that you make very large bets, and is thus bad. You need to carefully choose the optimal bet size taking into account your net worth, the cost of the time required to play the game, the minimal bet size (the lower the minimal bet, the higher your bet should be because you can recover from greater losses) and so on. Martingale doesn't take any of this into account, it just arbitrarily pumps up the bet size. -- Meni Rosenfeld (talk) 09:45, 2 December 2012 (UTC)

- See Kelly criterion for how much to bet if you find a nice little earner paying out on average more than you put in and you want to exploit it for all it's worth as fast as possible. Dmcq (talk) 16:32, 2 December 2012 (UTC)
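A minimal sketch of the two calculations mentioned above, assuming the hypothetical wheel from the question: 38 pockets, where an even bet also wins on 0 and 00 (18 even numbers plus 0 and 00, so p = 20/38), paying even money (net odds b = 1). It uses the Kelly fraction f* = p − (1 − p)/b and the variance of a two-outcome bet, and shows that ten $1 bets have the same mean profit as one $10 bet but one tenth of the variance. Exact fractions avoid rounding noise.

```python
from fractions import Fraction


def kelly_fraction(p, b=1):
    """Kelly stake as a fraction of bankroll for a bet that wins b per unit
    staked with probability p and loses the stake otherwise."""
    f = p - (1 - p) / b
    return f if f > 0 else 0  # no edge, no bet


def profit_mean_variance(n_bets, stake, p, b=1):
    """Mean and variance of total profit from n independent, equal-sized bets."""
    q = 1 - p
    mean_one = stake * (p * b - q)
    # two-point outcome (+stake*b or -stake): variance = p*q*(stake*(b + 1))**2
    var_one = stake ** 2 * p * q * (b + 1) ** 2
    return n_bets * mean_one, n_bets * var_one


p = Fraction(20, 38)
print(kelly_fraction(p))               # 1/19, about 5.3% of the bankroll
print(profit_mean_variance(10, 1, p))  # ten $1 bets
print(profit_mean_variance(1, 10, p))  # one $10 bet
```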

Regression estimators on X as a function of Y have a much smaller confidence interval (spread) than Y as a function of X

How do I explain the following data? The v-k slope/intercept is based on the linear regression with velocity as the y-variable and curvature as the x-variable; the k-v regression just switches the two. Why can the v-k parameters be used to separate genotypes much more efficiently than the k-v parameters? The Wilcoxon Mann–Whitney U test comparing the w1118 and C5v2 v-k slopes gives a p-value of 8.6×10^-4; the p-value comparing the k-v slopes is 0.0206.

The following are my results; the 95% CI is in parentheses:

| Genotype | Average v-k slope | Average v-k intercept | Average individual R^2 | Collective R^2 | Average k-v slope | Average k-v intercept |
|---|---|---|---|---|---|---|
| w1118 | 0.391291 (0.007820) | 0.658777 (0.015886) | 0.667761 | 0.694240 | 1.713136 (0.021954) | -1.099095 (0.026176) |
| C5v2 | 0.359071 (0.017672) | 0.574655 (0.029339) | 0.626207 | 0.689704 | 1.740820 (0.035692) | -1.003023 (0.031987) |
| fumin | 0.398489 (0.007759) | 0.691860 (0.016663) | 0.670038 | 0.688467 | 1.681955 (0.026843) | -1.118567 (0.023364) |
| NorpA | 0.366310 (0.020219) | 0.627074 (0.025159) | 0.642634 | 0.696703 | 1.758842 (0.035529) | -1.056938 (0.031151) |
| CantonS | 0.289517 (0.033790) | 0.330509 (0.056035) | 0.505073 | 0.648629 | 1.781094 (0.121968) | -0.742059 (0.049598) |

137.54.1.168 (talk) 15:12, 30 November 2012 (UTC)

- Which variable to put on the left versus the right side of the regression has to depend on underlying theoretical assumptions. If you believe the curvature causes speed (i.e., when the fly wants to curve more sharply it changes its speed), then the regression of curvature on speed is meaningless. If vice versa, then vice versa. Possibly one of the regressions is giving poor results because it specifies causation going in the wrong direction. Duoduoduo (talk) 15:50, 30 November 2012 (UTC)

- Regression doesn't determine anything about causation. Right? I thought the Y and X stuff was just for data presentation. 137.54.11.174 (talk) 15:56, 30 November 2012 (UTC)

- If you only look at the correlation coefficient, then you can do that without mentioning causation. But if you try to interpret anything else about the regression, you have to specify the regression in a valid way in advance. You assume y = a + bx + error and to engage in any kind of inference you assume the errors are uncorrelated with the x data. Without that assumption you run into trouble. But assuming the errors are uncorrelated with the x data is equivalent to assuming causation from x and the errors to y (although the strength of the causation from x may or may not turn out to be zero). So you are right that regression doesn't determine anything about causation; but regression results make no sense unless the regression has been specified in accordance with an assumption about causation. Duoduoduo (talk) 16:20, 30 November 2012 (UTC)
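One fact that may help when comparing the two fits: in least squares the k-v slope is not the reciprocal of the v-k slope; instead, the product of the y-on-x slope and the x-on-y slope equals R² for the same data, so the two lines coincide only when R² = 1. A minimal sketch with a toy data set (the helper names are hypothetical):

```python
def _centered_sums(x, y):
    """Sxx, Syy and Sxy about the means."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxx, syy, sxy


def slope_y_on_x(x, y):
    """Least-squares slope when y is the dependent variable."""
    sxx, _, sxy = _centered_sums(x, y)
    return sxy / sxx


def r_squared(x, y):
    sxx, syy, sxy = _centered_sums(x, y)
    return sxy * sxy / (sxx * syy)


x = [1, 2, 3, 4, 5]
y = [2, 1, 4, 3, 5]
b_yx = slope_y_on_x(x, y)  # y regressed on x
b_xy = slope_y_on_x(y, x)  # x regressed on y
print(b_yx, b_xy, b_yx * b_xy, r_squared(x, y))
```

With the w1118 averages in the table, 0.391 × 1.713 ≈ 0.670, close to the average individual R² of 0.668; since those are averages over flies, the identity only holds approximately there.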

December 1

Function defining a gravity well?

I've been thinking about this question recently, yet I can't quite figure it out. How would we define the graph of a gravity well in terms of G and the mass of the central object?

The force of gravity on an object on an inclined plane is F = m g sin θ, and at the same time θ = arctan(dy / dx), so if I set the force due to incline to equal the force due to "gravity" (the force we want to simulate), I think I get

Where m is my little mass (the planet or marble), M is the big mass (the "sun", I suppose, except it's a point mass), and x is how far the little mass is from the point mass. However, I don't know if I'm right or if that equation even has a clear-cut solution.

I hope my explanation of my question is understandable. Thanks. 66.27.77.225 (talk) 02:56, 1 December 2012 (UTC)

- To me it's not obvious what problem you are discussing. We have the equation for the force on a mass m on an inclined plane at the surface of the earth, F = m g sin θ, and the relation of that angle to the gradient, θ = arctan(dy / dx), but then we equate this to Newton's law of gravitation? It seems what you have done is try to equate two different models of gravitation: the Newtonian inverse-square law (F = G M m / r²) and an approximation for constant acceleration due to gravity at the earth's surface (F = m g). Overlooking other mistakes in this process, this is at least uninformative.

- To elucidate the dynamics of a marble on a slope (if that is the problem) we need an equation for the force, F_y = m g sin θ, and to know how an object responds to a force, for which we use Newton's second law F = m a. Then we have a_y = g sin θ with a_y = d²y/dt². This is the dynamical equation of motion for your marble.

- An interesting link if you enjoy thinking about such things might be Brachistochrone curve. — Preceding unsigned comment added by 182.55.244.11 (talk) 03:41, 1 December 2012 (UTC)

- I believe the OP is thinking about a popular visualization for gravity wells, where the behavior of an object in a gravitational potential is analogous to the behavior of a ball rolling on a surface with the shape of the potential graph.

- However, this is only an inaccurate visual analogy - there is no physical truth behind it. The linked article describes some of the flaws.

- The "real" gravity well - the gravitational potential energy at every point - is given simply by . -- Meni Rosenfeld (talk) 20:33, 1 December 2012 (UTC)
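For reference, a short derivation under the assumption that the intended equation sets the along-slope component of weight on a surface z(r) equal to Newton's attraction, with k = GM/g. It shows that the exact sin θ condition has a real slope only for r > √k, and that the surface shaped like the Newtonian potential divided by g satisfies the weaker condition tan θ = k/r² instead.

```latex
\begin{aligned}
m g \sin\theta &= \frac{G M m}{r^{2}}, \qquad \tan\theta = \frac{dz}{dr}, \qquad k \equiv \frac{G M}{g},\\
\frac{z'}{\sqrt{1 + z'^{2}}} &= \frac{k}{r^{2}}
\;\Longrightarrow\; z'^{2}\Bigl(1 - \frac{k^{2}}{r^{4}}\Bigr) = \frac{k^{2}}{r^{4}}
\;\Longrightarrow\; \frac{dz}{dr} = \frac{k}{\sqrt{r^{4} - k^{2}}}, \quad r > \sqrt{k},\\
z(r) &= -\frac{G M}{g\,r} = -\frac{k}{r}
\;\Longrightarrow\; \frac{dz}{dr} = \frac{k}{r^{2}} \quad (\text{i.e. } \tan\theta = k/r^{2}).
\end{aligned}
```

The two agree only where the slope is shallow (r much larger than √k, so that sin θ ≈ tan θ).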

Confidence interval with detected sampling bias

According to this dining-hall survey (p. 16, Fig. 1b), 47% of MIT students (n=236) were virgins as of fall 2001. The rates were 70%, 32%, 28% and 33% for undergrad years 1, 2, 3 and 4-5 respectively; 35% for grads; and 49% for undergrads combined. But according to this report from the MIT Office of the Registrar, the enrolments by year were 1033, 1039, 1037 and 1104 undergrads and 5984 grads. By my calculation, the stratified percentage for undergrads would have been 40.6%, which clearly indicates a sampling bias. Can confidence intervals still be estimated, without knowing the number of students surveyed at each level? NeonMerlin 14:34, 1 December 2012 (UTC)

- If you mean confidence intervals for the true percentages, no, not really, not without additional information. Looie496 (talk) 17:12, 1 December 2012 (UTC)
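The OP's stratified figure can be reproduced as an enrolment-weighted average of the per-year rates; the same weighting with the 35% graduate rate gives an all-student figure to set beside the survey's 47%. This is only the point estimate; as noted above, it does not by itself give a confidence interval. The function name is a hypothetical choice.

```python
def stratified_rate(rates, sizes):
    """Population-weighted average of per-stratum rates."""
    total = sum(sizes)
    return sum(r * n for r, n in zip(rates, sizes)) / total


undergrad_rates = [0.70, 0.32, 0.28, 0.33]  # survey: years 1, 2, 3, 4-5
undergrad_sizes = [1033, 1039, 1037, 1104]  # registrar enrolments

undergrad = stratified_rate(undergrad_rates, undergrad_sizes)
everyone = stratified_rate(undergrad_rates + [0.35], undergrad_sizes + [5984])
print(round(undergrad, 3), round(everyone, 3))
```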

The solution of the equation x^y = y^x, for x ≠ y, {x,y} ≠ {2,4}, in natural numbers.

Any suggestions? HOOTmag (talk) 20:51, 1 December 2012 (UTC)

- How about -2 and -4 ? StuRat (talk) 22:16, 1 December 2012 (UTC)

- Oops, you did say natural numbers. Are we allowing zero ? StuRat (talk) 22:18, 1 December 2012 (UTC)

- Taking natural logs, y ln(x) = x ln(y) so ln(x) / x = ln(y) / y. Plot ln(z) / z and you get it increasing in the range (0, e), a single max at z=e, and decreasing for z > e. So the only possible solutions have x < e, and since x=1 doesn't work, x = 2 is the only solution in natural numbers (with y=4 giving the same value of the function). Duoduoduo (talk) 22:36, 1 December 2012 (UTC)

- I ran a simulation, which agrees that there are no other solutions, in the range tested (up to 1600). StuRat (talk) 23:21, 1 December 2012 (UTC)
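A brute-force check in the spirit of the simulation above, using Python's exact integer arithmetic rather than floating-point logarithms. The bound of 100 is arbitrary; it is the ln(z)/z argument that rules out solutions beyond any finite search.

```python
def equal_power_pairs(limit):
    """Pairs 1 <= x < y <= limit of natural numbers with x**y == y**x.

    Python integers are exact, so this is a true equality test."""
    return [
        (x, y)
        for x in range(1, limit + 1)
        for y in range(x + 1, limit + 1)
        if x ** y == y ** x
    ]


print(equal_power_pairs(100))
```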

December 2

December 3

random sampling, control and case

Kind of an esoteric question; I'm modeling something rare, about 1000 cases in about a million population. (SAS, proc genmod, poisson distribution). Intending to do the more or less routine thing; model sample of 1/3, run that model on second third to check for overfitting, use final third to validate/score. Works fine, but.... i sampled cases and controls separately then combined each (i.e. 1/3 of cases + 1/3 of controls), rather than taking 1/3 sample of total controls+cases together, because I was worried that 1/3 of the whole thing might get a small number of cases. Anyway, practicality aside, I'd like to hear any arguments re the theoretical validity or lack of same of the aforementioned separate sampling of controls and cases and combining? Thanks. Gzuckier (talk) 04:46, 3 December 2012 (UTC)
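A minimal sketch of the split described in the question: cases and controls are shuffled separately and each group is cut into thirds, so every third receives the same share of the cases up to rounding. With equal sampling fractions in both strata this is a proportionally allocated stratified split, so each third keeps the overall case proportion (up to rounding). The function name and seed are hypothetical.

```python
import random


def stratified_thirds(case_ids, control_ids, seed=0):
    """Split cases and controls into thirds separately, then combine."""
    rng = random.Random(seed)
    parts = ([], [], [])
    for group in (case_ids, control_ids):
        ids = list(group)
        rng.shuffle(ids)
        k = len(ids)
        cuts = [0, k // 3, 2 * k // 3, k]
        for i in range(3):
            parts[i].extend(ids[cuts[i]:cuts[i + 1]])
    return parts


cases = list(range(1000))
controls = list(range(1000, 10000))
model_part, overfit_check_part, validation_part = stratified_thirds(cases, controls)
```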

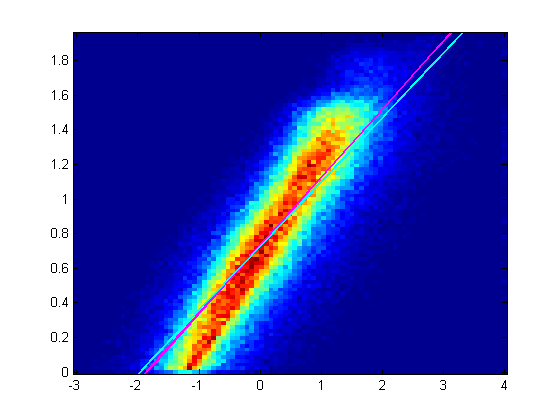

How do I choose a better method for robust regression?

The following is a heat map 2D histogram of X,Y values. It's a linear heat map. Magenta is the bisquare robust fit, cyan is the least squares fit. The robust fit makes the previous least squares fit only slightly better. How do I make it fit the trend? The plot is a log-log plot comparing log velocity (on the Y axis) against log of curvature (on the X-axis), where the data apparently follows a power law. However, using linear regression seems to obtain the wrong slope. John Riemann Soong (talk) 05:18, 3 December 2012 (UTC)
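For readers unfamiliar with how a bisquare fit differs from least squares, here is a minimal sketch of iteratively reweighted least squares with Tukey bisquare weights. The tuning constant 4.685 and the MAD/0.6745 scale are common defaults; library implementations such as MATLAB's robustfit differ in details (for example, leverage adjustments), so this is an illustration, not a reproduction of that function. The toy data put one large outlier at the end of an otherwise straight line.

```python
import statistics


def wls_line(x, y, w):
    """Weighted least-squares intercept and slope for y = b0 + b1 * x."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    b1 = sxy / sxx
    return my - b1 * mx, b1


def bisquare_fit(x, y, c=4.685, max_iter=50, tol=1e-10):
    """Tukey bisquare robust line, starting from ordinary least squares."""
    b0, b1 = wls_line(x, y, [1.0] * len(x))
    for _ in range(max_iter):
        r = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
        med = statistics.median(r)
        s = statistics.median([abs(ri - med) for ri in r]) / 0.6745  # MAD scale
        if s == 0:
            break  # perfect fit, nothing to reweight
        u = [ri / (c * s) for ri in r]
        w = [(1 - ui * ui) ** 2 if abs(ui) < 1 else 0.0 for ui in u]
        nb0, nb1 = wls_line(x, y, w)
        done = abs(nb0 - b0) < tol and abs(nb1 - b1) < tol
        b0, b1 = nb0, nb1
        if done:
            break
    return b0, b1


xs = list(range(21))
ys = [2 * xi + 1 + (0.1 if xi % 2 == 0 else -0.1) for xi in xs]
ys[20] = 500.0  # one gross outlier
print(wls_line(xs, ys, [1.0] * len(xs)), bisquare_fit(xs, ys))
```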

![{\displaystyle P(E)={}^{\left[{\text{Number of outcomes in event}}\right]}/{}_{\left[{\text{Total number of outcomes in sample space}}\right]}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ad369ae153d59b888c4a6bd7962b9459182d0b7a)

![{\displaystyle \left[{\text{Rolling an even number}}\right]=\{2,4,6\}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/39de78b7e03ec2a03dd6e44872ba21d6ef440db9)

![{\displaystyle \left[{\text{Sample space over a 6-sided die}}\right]=\{1,2,3,4,5,6\}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8cd0540bcb51a6617c02b0e30395ffa8f563ede5)

![{\displaystyle \left[{\text{Number of outcomes in event}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d88a8f42333093e7ea8c8eed02def137cab61842)

![{\displaystyle \left[{\text{Total number of outcomes in sample space}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/eb737d2b9392ab2065d4e5eefeda6e81ed739bee)

![{\displaystyle \left[{\text{Number of outcomes in event}}\right]=\|A\|\left[{\text{Total number of outcomes in sample space}}\right]=\|S\|}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0515d65adcb136efc154d485379cd1fd85cb157f)

![{\displaystyle {\begin{array}{ll}\left[{\text{Number of outcomes in event}}\right]&=\left|A\right|,\\\left[{\text{Total number of outcomes in sample space}}\right]&=\left|S\right|\end{array}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/766b2379f03181b920da4409abe0817f937a2840)

![{\displaystyle {\begin{aligned}P\colon {\mathcal {P}}(S)&\to \left[0,1\right]\\A,S&\mapsto {}^{\left|A\right|}/_{\left|S\right|}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/05af2fa390ff2c05af8bb2478a9f2aa3024b9b09)

![{\displaystyle P(A:A\in {\mathcal {P}}(S))=({}^{\left|A\right|}/_{\left|S\right|}:P(A)\in \left[0,1\right])}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7cfa7947370ab2c1b526b9333cccacbdf2c7d974)

![{\displaystyle \left[0,1\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c57121c2b6c63c0b2f38eb96b1f7a543b5d1c522)

![{\displaystyle \left\{\forall \mathbb {R} :\mathbb {R} \in \left[0,1\right]\right\}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/67b5c91370a026aeeba47b11b00048c87856efab)

![{\displaystyle \left[{\text{Rolling an even number}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/44101554d71559b6184a54f90167f8d4b25db4db)

![{\displaystyle \left[{\text{Rolling an even number}}\right]=\left\{2,4,6\right\}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/57104e4c581a8c2968fb8737b648263a71ffc70d)

![{\displaystyle {\begin{aligned}P\colon \mathbb {R} &\to \left[0,1\right]\\A&\mapsto {}^{\left|A\right|}/_{\left|\mathbb {R} \right|}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f05458f493ef70572a5c6a38870a33bd299d2ef9)

![{\displaystyle {\begin{aligned}\left.P\right|_{S}\colon S&\to \left[0,1\right]\\A&\mapsto {}^{\left|A\right|}/_{\left|S\right|},A\in {\mathcal {P}}(S)\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/00d7bc9a1513d0986115f22db6fab17611341496)

![{\displaystyle A=\left[{\begin{array}{cc}a&b\\c&d\end{array}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/73786efacf2c0e7944d40123c867350b06f73f12)
