Visual perception

Revision as of 15:47, 16 April 2012


Visual perception is the ability to interpret information and surroundings from the effects of visible light reaching the eye. The resulting perception is also known as eyesight, sight, or vision (adjectival form: visual, optical, or ocular). The various physiological components involved in vision are referred to collectively as the visual system, and are the focus of much research in psychology, cognitive science, neuroscience, and molecular biology.

Visual system

The visual system in humans and animals allows individuals to assimilate information from their environment. The act of seeing starts when the lens of the eye focuses an image of the surroundings onto a light-sensitive membrane at the back of the eye, called the retina. The retina is actually part of the brain, isolated to serve as a transducer that converts patterns of light into neuronal signals: its photoreceptive cells detect the photons of light and respond by producing neural impulses. These signals are processed in a hierarchical fashion by different parts of the brain, from the retina upstream to central ganglia in the brain.

Much of the above paragraph could apply equally to octopuses, other molluscs, worms, insects and simpler animals: anything with a more concentrated nervous system and better eyes than, say, a jellyfish. The following, however, applies to mammals generally and, in modified form, to birds. In these more complex animals, the retina sends fibers (the optic nerve) to the lateral geniculate nucleus and from there to the primary and secondary visual cortex of the brain. Signals can also travel directly from the retina to the superior colliculus.

Study of visual perception

The major problem in visual perception is that what people see is not simply a translation of retinal stimuli (i.e., the image on the retina). Thus people interested in perception have long struggled to explain what visual processing does to create what is actually seen.

Early studies

There were two major ancient Greek schools of thought, each offering a primitive explanation of how vision is carried out in the body.

The first was the "emission theory", which maintained that vision occurs when rays emanate from the eyes and are intercepted by visual objects. An object seen directly was seen by means of rays that came out of the eyes and fell on the object. A refracted image was likewise seen by means of rays that came out of the eyes, traversed the air and, after refraction, fell on the visible object, which was sighted as a result of the movement of the rays from the eye. This theory was championed by scholars such as Euclid and Ptolemy and their followers.

The second school advocated the so-called "intromission" approach, which sees vision as coming from something representative of the object entering the eyes. Championed mainly by Aristotle, Galen and their followers, this theory seems to have some contact with modern theories of what vision really is, but it remained only speculation lacking any experimental foundation.

Both schools of thought relied upon the principle that "like is only known by like", and thus upon the notion that the eye was composed of some "internal fire" which interacted with the "external fire" of visible light and made vision possible. Plato makes this assertion in his dialogue Timaeus, as does Aristotle, in his De Sensu.[1]

Alhazen (965 – c. 1040) carried out many investigations and experiments on visual perception, extended the work of Ptolemy on binocular vision, and commented on the anatomical works of Galen.[2][3]

Leonardo da Vinci (1452–1519) was the first to recognize the special optical qualities of the eye. He wrote "The function of the human eye ... was described by a large number of authors in a certain way. But I found it to be completely different." His main experimental finding was that there is only distinct and clear vision at the line of sight, the optical line that ends at the fovea. Although he did not use these words, he can be regarded as the father of the modern distinction between foveal and peripheral vision.[citation needed]

Unconscious inference

Hermann von Helmholtz is often credited with the first study of visual perception in modern times. Helmholtz examined the human eye and concluded that it was, optically, rather poor. The poor-quality information gathered via the eye seemed to him to make vision impossible. He therefore concluded that vision could only be the result of some form of unconscious inference: a matter of making assumptions and drawing conclusions from incomplete data, based on previous experience.

Inference requires prior experience of the world.

Examples of well-known assumptions, based on visual experience, are:

- light comes from above

- objects are normally not viewed from below

- faces are seen (and recognized) upright[4]

- closer objects can block the view of more distant objects, but not vice versa

The study of visual illusions (cases when the inference process goes wrong) has yielded much insight into what sort of assumptions the visual system makes.

A probabilistic version of the unconscious inference hypothesis has recently been revived in so-called Bayesian studies of visual perception. Proponents of this approach hold that the visual system performs some form of Bayesian inference to derive a perception from sensory data. Models based on this idea have been used to describe various visual subsystems, such as the perception of motion or the perception of depth.[5][6] The "wholly empirical theory of perception" is a related and newer approach that rationalizes visual perception without explicitly invoking Bayesian formalisms.[7]
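The core computation such models assume can be sketched briefly. The example below is a minimal illustration, not a model taken from the cited studies. It assumes a single quantity (the speed of a moving object), a Gaussian prior and a Gaussian likelihood, so that Bayes' rule has a closed-form answer. The slow-speed prior, the function name `gaussian_posterior` and all numbers are hypothetical.

```python
import math


def gaussian_posterior(prior_mean, prior_sd, measurement, likelihood_sd):
    """Combine a Gaussian prior with a Gaussian likelihood (Bayes' rule).

    The posterior is Gaussian too: its mean is a precision-weighted average
    of the prior mean and the measurement, and it is narrower than either.
    """
    prior_precision = 1.0 / prior_sd ** 2
    likelihood_precision = 1.0 / likelihood_sd ** 2
    posterior_precision = prior_precision + likelihood_precision
    posterior_mean = (prior_precision * prior_mean
                      + likelihood_precision * measurement) / posterior_precision
    return posterior_mean, math.sqrt(1.0 / posterior_precision)


# Hypothetical prior favouring slow speeds (deg/s); all numbers are illustrative.
PRIOR_MEAN, PRIOR_SD = 0.0, 4.0
measured_speed = 8.0

# Noisier sensory evidence lets the prior pull the estimate further toward 0.
for label, noise_sd in [("reliable evidence", 1.0), ("noisy evidence", 4.0)]:
    estimate, _ = gaussian_posterior(PRIOR_MEAN, PRIOR_SD, measured_speed, noise_sd)
    print(f"{label}: estimated speed {estimate:.2f} deg/s")
# reliable evidence: estimated speed 7.53 deg/s
# noisy evidence: estimated speed 4.00 deg/s
```

In this sketch the prior's influence is not fixed. It grows automatically as the sensory evidence becomes less reliable, which is one way such models can produce systematic perceptual biases.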

Gestalt theory

Gestalt psychologists working primarily in the 1930s and 1940s raised many of the research questions that are studied by vision scientists today.

The Gestalt Laws of Organization have guided the study of how people perceive visual components as organized patterns or wholes, rather than as many separate parts. Gestalt is a German word that translates roughly as "configuration" or "pattern", as well as "whole" or "emergent structure". According to this theory, there are six main factors that determine how the visual system automatically groups elements into patterns: Proximity, Similarity, Closure, Symmetry, Common Fate (i.e. common motion) and Continuity.
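Of the six factors, proximity is the easiest to state computationally. The sketch below is a hypothetical illustration rather than a Gestalt model. It assumes that elements are 2-D points and that two elements are "near" when they are closer than an arbitrary threshold `max_gap`, then collects chains of near neighbours into groups (single-linkage clustering).

```python
import math


def group_by_proximity(points, max_gap):
    """Group 2-D points so that any two points closer than max_gap, directly
    or through a chain of close neighbours, end up in the same group."""
    group_of = [None] * len(points)  # group index assigned to each point
    groups = []
    for start in range(len(points)):
        if group_of[start] is not None:
            continue
        group_id = len(groups)
        group_of[start] = group_id
        members, to_visit = [start], [start]
        # Flood fill: keep absorbing unassigned points near any current member.
        while to_visit:
            current = to_visit.pop()
            for other in range(len(points)):
                if group_of[other] is None and math.dist(points[current], points[other]) < max_gap:
                    group_of[other] = group_id
                    members.append(other)
                    to_visit.append(other)
        groups.append(sorted(members))
    return groups


# Hypothetical display: two clusters of three dots separated by a wide gap.
dots = [(0, 0), (1, 0), (2, 0), (6, 0), (7, 0), (8, 0)]
print(group_by_proximity(dots, max_gap=1.5))  # [[0, 1, 2], [3, 4, 5]]
```

The result depends entirely on the chosen threshold: with `max_gap=10` all six dots fall into a single group.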

Analysis of eye movement

During the 1960s, technical developments made it possible to record eye movements continuously during reading[8] and picture viewing,[9] later during visual problem solving,[10] and, once headset cameras became available, during driving.[11]

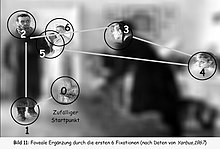

The picture to the left shows what may happen during the first two seconds of visual inspection. While the background is out of focus, representing peripheral vision, the first eye movement goes to the boots of the man (simply because they are very near the starting fixation and have reasonable contrast).

The following fixations jump from face to face. They might even permit comparisons between faces.

It may be concluded that the face is a very attractive search icon within the peripheral field of vision. Foveal vision then adds detailed information to the peripheral first impression.

There are three different types of eye movements: vergence movements, saccadic movements and pursuit movements. Vergence movements involve the cooperation of both eyes so that an image falls on the same area of both retinas, resulting in a single focused image. Saccadic movements are the rapid eye movements used to scan a particular scene or image. Pursuit movements are used to follow objects in motion.[12]
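Eye-tracking recordings are often segmented by eye-movement type before analysis. Below is a minimal sketch of one simple approach, velocity-threshold classification (often called I-VT). The 30 deg/s threshold, the 100 Hz sampling rate, the one-dimensional trace and the function name are illustrative assumptions. A single threshold cannot reliably separate pursuit from saccades, and vergence needs recordings from both eyes, so this only distinguishes rapid saccadic jumps from slower gaze.

```python
def classify_intervals(positions_deg, sample_rate_hz, threshold_deg_per_s=30.0):
    """Label each interval between consecutive gaze samples as 'saccade' or
    'fixation' by comparing its angular velocity with a fixed threshold."""
    dt = 1.0 / sample_rate_hz  # seconds between samples
    labels = []
    for previous, current in zip(positions_deg, positions_deg[1:]):
        velocity = abs(current - previous) / dt  # degrees of visual angle per second
        labels.append("saccade" if velocity > threshold_deg_per_s else "fixation")
    return labels


# Hypothetical 100 Hz horizontal gaze trace: steady gaze, a 10-degree jump, steady gaze.
trace = [0.0, 0.05, 0.1, 4.0, 8.0, 10.0, 10.05, 10.1]
print(classify_intervals(trace, sample_rate_hz=100))
# ['fixation', 'fixation', 'saccade', 'saccade', 'saccade', 'fixation', 'fixation']
```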

The cognitive and computational approaches

The major problem with the Gestalt laws (and the Gestalt school generally) is that they are descriptive, not explanatory. For example, one cannot explain how humans see continuous contours simply by stating that the brain "prefers good continuity". Computational models of vision have had more success in explaining visual phenomena and have largely superseded Gestalt theory. More recently, computational models of visual perception have been developed for virtual reality systems; these are closer to real-life situations because they account for the motion and activities prevalent in the real world.[13] Regarding the Gestalt influence on the study of visual perception, Bruce, Green & Georgeson conclude:

- "The physiological theory of the Gestaltists has fallen by the wayside, leaving us with a set of descriptive principles, but without a model of perceptual processing. Indeed, some of their "laws" of perceptual organisation today sound vague and inadequate. What is meant by a "good" or "simple" shape, for example?" [14]

In the 1970s David Marr developed a multi-level theory of vision, which analysed the process of vision at different levels of abstraction. To focus on specific problems in vision, he identified three levels of analysis: the computational, algorithmic and implementational levels. Many vision scientists, including Tomaso Poggio, have embraced these levels of analysis and employed them to further characterize vision from a computational perspective.[citation needed]

The computational level addresses, at a high level of abstraction, the problems that the visual system must overcome. The algorithmic level attempts to identify the strategy that may be used to solve these problems. Finally, the implementational level attempts to explain how solutions to these problems are realized in neural circuitry.

Marr suggested that it is possible to investigate vision at any of these levels independently. Marr described vision as proceeding from a two-dimensional visual array (on the retina) to a three-dimensional description of the world as output. His stages of vision include:

- a 2-D or primal sketch of the scene, based on feature extraction of fundamental components of the scene, including edges, regions, etc. Note the similarity in concept to a pencil sketch drawn quickly by an artist as an impression.

- a 2½-D sketch of the scene, where textures are acknowledged, etc. Note the similarity in concept to the stage in drawing where an artist highlights or shades areas of a scene to provide depth.

- a 3-D model, where the scene is visualized in a continuous, three-dimensional map.[15]
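For the first stage, Marr and Hildreth proposed locating edges as the zero crossings of the image after filtering it with a Laplacian of Gaussian. The sketch below shows that idea in one dimension only. The `sigma` value, the truncation at three standard deviations, the `min_jump` threshold used to discard tiny numerical sign flips, and all function names are assumptions for illustration; this is not Marr's full primal-sketch computation.

```python
import math


def log_kernel(sigma):
    """Sampled 1-D Laplacian of Gaussian (second derivative of a Gaussian),
    truncated at +/- 3 sigma and shifted to sum to zero so flat input gives ~0."""
    radius = math.ceil(3 * sigma)
    raw = [(x * x / sigma**4 - 1 / sigma**2) * math.exp(-x * x / (2 * sigma**2))
           for x in range(-radius, radius + 1)]
    mean = sum(raw) / len(raw)
    return [k - mean for k in raw]


def filter_signal(signal, kernel):
    """Correlate signal with kernel, padding by repeating the end values.
    (Correlation equals convolution here because the kernel is symmetric.)"""
    r = len(kernel) // 2
    padded = [signal[0]] * r + list(signal) + [signal[-1]] * r
    return [sum(k * padded[i + j] for j, k in enumerate(kernel))
            for i in range(len(signal))]


def zero_crossings(response, min_jump):
    """Positions (between samples) where the filtered signal changes sign steeply."""
    return [i + 0.5 for i in range(len(response) - 1)
            if response[i] * response[i + 1] < 0
            and abs(response[i] - response[i + 1]) > min_jump]


# A step edge: dark (0) for samples 0-9, bright (1) for samples 10-19.
step = [0.0] * 10 + [1.0] * 10
print(zero_crossings(filter_signal(step, log_kernel(sigma=2.0)), min_jump=0.05))  # [9.5]
```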

Transduction

Transduction is the process through which energy from environmental stimuli is converted into neural activity that the brain can understand and process. The back of the eye contains three different cell layers: the photoreceptor layer, the bipolar cell layer and the ganglion cell layer. The photoreceptor layer lies at the very back and contains rod photoreceptors and cone photoreceptors. Cones are responsible for colour perception, and there are three different cones: red, green and blue. Photoreceptors contain photopigments, each composed of two molecules. There are four specific photopigments (each with its own colour) that respond to specific wavelengths of light. When the appropriate wavelength of light hits a photoreceptor, its photopigment splits into two. This sends a message to the bipolar cell layer, which in turn sends a message to the ganglion cells, which then send the information through the optic nerve to the brain. If the appropriate photopigment is not in the proper photoreceptor (for example, a green photopigment inside a red cone), the result is a condition called colour blindness.[16]
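The wavelength selectivity of the three cone types can be illustrated with a simple model. The sketch below assumes Gaussian tuning curves centred on approximate peak wavelengths. The peak values are rounded textbook approximations, while the curve shape, the 40 nm width and the function name are hypothetical simplifications.

```python
import math

# Approximate wavelengths (nm) of peak sensitivity for the three cone types.
# Modelling each curve as a Gaussian with a 40 nm standard deviation is a
# simplifying assumption; real cone sensitivity curves are not Gaussian.
CONE_PEAKS_NM = {"blue": 420.0, "green": 530.0, "red": 560.0}
TUNING_SD_NM = 40.0


def cone_responses(wavelength_nm):
    """Relative activation (0 to 1) of each cone type for monochromatic light."""
    return {
        cone: math.exp(-((wavelength_nm - peak) ** 2) / (2 * TUNING_SD_NM ** 2))
        for cone, peak in CONE_PEAKS_NM.items()
    }


for wavelength in (450, 550, 600):
    responses = cone_responses(wavelength)
    most_active = max(responses, key=responses.get)
    rounded = {cone: round(value, 2) for cone, value in responses.items()}
    print(wavelength, "nm:", rounded, "most active:", most_active)
```

Because every cone responds over a broad band of wavelengths, no single cone's output identifies a colour on its own; the colour is carried by the pattern of activation across the three types.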

Opponent Process

Transduction involves chemical messages sent from the photoreceptors to the bipolar cells and on to the ganglion cells. Several photoreceptors may send their information to one ganglion cell. There are two types of colour-opponent ganglion cells: red/green and yellow/blue. These neurons fire continuously, even when not stimulated, and the brain interprets different colours (and, with enough information, an image) from changes in their firing rate. Red light stimulates the red cone, which in turn stimulates the red/green ganglion cell. Likewise, green light stimulates the green cone, which also acts on the red/green ganglion cell, and blue light stimulates the blue cone, which acts on the yellow/blue ganglion cell. A ganglion cell's firing rate increases when it is signalled by one cone and decreases (is inhibited) when it is signalled by the other. The first colour in the name of the ganglion cell is the colour that excites it and the second is the colour that inhibits it: for example, a red cone excites the red/green ganglion cell and a green cone inhibits it. This is an opponent process. If the firing rate of a red/green ganglion cell increases, the brain registers the light as red; if the rate decreases, it registers the light as green.[17]
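The opponent coding described above can be expressed as a toy rate model. The sketch assumes linear cone-to-ganglion-cell weights, a hypothetical baseline rate (`BASELINE_HZ`) and gain (`GAIN_HZ`), and treats the "yellow" signal as the average of the red and green cone activations; none of these values come from the source.

```python
BASELINE_HZ = 20.0  # hypothetical spontaneous firing rate of an unstimulated cell
GAIN_HZ = 40.0      # hypothetical rate change per unit of cone activation


def opponent_rates(red_cone, green_cone, blue_cone):
    """Toy firing rates (Hz) of a red/green and a yellow/blue ganglion cell.

    Cone activations are in [0, 1]. The first colour in each cell's name
    raises its rate above baseline, the second lowers it; rates cannot fall
    below 0 Hz.
    """
    yellow = (red_cone + green_cone) / 2  # assumption: yellow = pooled red + green cones
    red_green = BASELINE_HZ + GAIN_HZ * (red_cone - green_cone)
    yellow_blue = BASELINE_HZ + GAIN_HZ * (yellow - blue_cone)
    return max(red_green, 0.0), max(yellow_blue, 0.0)


def read_out(rate, excitatory_colour, inhibitory_colour):
    """Interpret a firing rate relative to the baseline rate."""
    if rate > BASELINE_HZ:
        return excitatory_colour
    if rate < BASELINE_HZ:
        return inhibitory_colour
    return "neither"


# Hypothetical cone activations for reddish light.
rg, yb = opponent_rates(red_cone=0.9, green_cone=0.3, blue_cone=0.05)
print(f"red/green cell: {rg:.1f} Hz -> {read_out(rg, 'red', 'green')}")
print(f"yellow/blue cell: {yb:.1f} Hz -> {read_out(yb, 'yellow', 'blue')}")
# red/green cell: 44.0 Hz -> red
# yellow/blue cell: 42.0 Hz -> yellow
```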

Artificial visual perception

Theories and observations of visual perception have been the main source of inspiration for computer vision (also called machine vision or computational vision). Special hardware structures and software algorithms give machines the ability to interpret images coming from a camera or other sensor. Artificial visual perception has long been used in industry and is now entering the automotive and robotics domains.

See also

- Naked eye

- Color vision

- Depth perception

- Entoptic phenomenon

- Lateral masking

- Motion perception

- Visual illusion

- Machine vision

- Computer vision

- Gestalt Psychology

Disorders/dysfunctions

- Achromatopsia

- Akinetopsia

- Astigmatism

- Color blindness

- Scotopic sensitivity syndrome

- Recovery from blindness


References

- ^ Finger, Stanley. Origins of Neuroscience. A History of Explorations into Brain Function. New York: Oxford University Press, USA, 1994.

- ^ Howard, I (1996). "Alhazen's neglected discoveries of visual phenomena". Perception. 25 (10): 1203–1217. doi:10.1068/p251203. PMID 9027923.

- ^ Omar Khaleefa (1999). "Who Is the Founder of Psychophysics and Experimental Psychology?". American Journal of Islamic Social Sciences. 16 (2).

- ^ Hunziker, Hans-Werner (2006). Im Auge des Lesers: foveale und periphere Wahrnehmung - vom Buchstabieren zur Lesefreude [In the eye of the reader: foveal and peripheral perception - from letter recognition to the joy of reading]. Zürich: Transmedia Stäubli Verlag. ISBN 978-3-7266-0068-6

- ^ Mamassian, Landy & Maloney (2002)

- ^ A Primer on Probabilistic Approaches to Visual Perception

- ^ The Wholly Empirical Theory of Perception

- ^ Taylor, St.: Eye Movements in Reading: Facts and Fallacies. American Educational Research Association, 2 (4), 1965, 187-202.

- ^ Yarbus, A. L. (1967). Eye movements and vision, Plenum Press, New York

- ^ Hunziker, H. W. (1970). Visuelle Informationsaufnahme und Intelligenz: Eine Untersuchung über die Augenfixationen beim Problemlösen [Visual information intake and intelligence: a study of eye fixations in problem solving]. Schweizerische Zeitschrift für Psychologie und ihre Anwendungen, 29 (1/2).

- ^ Cohen, A. S. (1983). Informationsaufnahme beim Befahren von Kurven [Information intake when driving through curves]. Psychologie für die Praxis 2/83, Bulletin der Schweizerischen Stiftung für Angewandte Psychologie.

- ^ Carlson, Neil R. (2010). Psychology the Science of Behaviour. Toronto Ontario: Pearson Canada Inc. pp. 140–141.

- ^ Beeharee, A. K. http://www.cs.ucl.ac.uk/staff/A.Beeharee/research.htm

- ^ Bruce, V., Green, P. & Georgeson, M. (1996). Visual perception: Physiology, psychology and ecology (3rd ed.). LEA. p. 110.

- ^ Marr, D. (1982). Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. MIT Press.

- ^ Carlson, Neil R. (2010). "5". Psychology the science of behaviour (2nd ed.). Upper Saddle River, New Jersey, USA: Pearson Education Inc. pp. 138–145. ISBN 978-0-205-64524-4.

- ^ Carlson, Neil R. (2010). "5". Psychology the science of behaviour (2nd ed.). Upper Saddle River, New Jersey, USA: Pearson Education Inc. pp. 138–145. ISBN 978-0-205-64524-4.

External links

- Visual Perception 3 - Cultural and Environmental Factors

- Gestalt Laws

- Summary of Kosslyn et al.'s theory of high-level vision

- The Organization of the Retina and Visual System

- Reference info on artificial visual perception

- Dr Trippy's Sensorium A website dedicated to the study of the human sensorium and organisational behaviour

- Effect of Detail on Visual Perception by Jon McLoone, the Wolfram Demonstrations Project.

- The Joy of Visual Perception An excellent resource on the eye's perception abilities.

- VisionScience. An Internet Resource for Research in Human and Animal Vision A most comprehensive collection of resources in vision science and perception.

- Vision and Psychophysics. A quality account of many aspects of vision. However, some parts are missing.