PCI Express

| | |

|---|---|

| Year created | 2004 |

| Created by | Intel, Dell, IBM, HP |

| Supersedes | AGP, PCI, PCI-X |

| Width in bits | 1–32 |

| No. of devices | One device each on each endpoint of each connection. PCI Express switches can be used to create multiple endpoints out of one endpoint to allow sharing of one endpoint with multiple devices. |

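| Speed | Per lane, in each direction: 250 MB/s (v1.x), 500 MB/s (v2.0). 16 lane slot, in each direction: 4 GB/s (v1.x), 8 GB/s (v2.0) |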

| Style | Serial |

| Hotplugging interface | Yes, if ExpressCard or PCI Express ExpressModule |

| External interface | Yes, with PCI Express External Cabling |

| Website | pcisig |

PCI Express (Peripheral Component Interconnect Express), officially abbreviated as PCIe (or PCI-E, as it is commonly called), is a computer expansion card standard designed to replace the older PCI, PCI-X, and AGP standards. PCIe 3.0 is the latest standard for expansion cards that is available on mainstream personal computers.[1][2]

PCI Express is used in consumer, server, and industrial applications, as a motherboard-level interconnect (to link motherboard-mounted peripherals) and as an expansion card interface for add-in boards. A key difference between PCIe and earlier buses is a topology based on point-to-point serial links, rather than a shared parallel bus architecture.

The PCIe electrical interface is also used in a variety of other standards, most notably the ExpressCard laptop expansion card interface.

Conceptually, the PCIe bus can be thought of as a high-speed serial replacement of the older (parallel) PCI/PCI-X bus.[3] At the software level, PCIe preserves compatibility with PCI; a PCIe device can be configured and used in legacy applications and operating systems which have no direct knowledge of PCIe's newer features (though PCIe cards cannot be inserted into PCI slots). In terms of bus protocol, PCIe communication is encapsulated in packets. The work of packetizing and depacketizing data and status-message traffic is handled by the transaction layer of the PCIe port (described later). Radical differences in electrical signaling and bus protocol require the use of a different mechanical form factor and expansion connectors (and thus, new motherboards and new adapter boards).

Architecture

Unlike previous PC expansion standards, which used a shared parallel bus, PCIe is structured around point-to-point serial links; a pair of such links (one in each direction) makes up a lane. These lanes are routed by a hub on the mainboard acting as a crossbar switch. This dynamic point-to-point behavior allows more than one pair of devices to communicate with each other at the same time. In contrast, older PC interfaces had all devices permanently wired to the same bus, so only one device could send information at a time. This format also allows channel grouping, where multiple lanes are bonded to a single device pair in order to provide higher bandwidth.

The number of lanes is negotiated during power-up or explicitly during operation. By making the lane count flexible, a single standard can provide for the needs of high-bandwidth cards (e.g., graphics, 10 Gigabit Ethernet and multiport Gigabit Ethernet cards) while being economical for less demanding cards.

Unlike preceding PC expansion interface standards, PCIe is a network of point-to-point connections. This removes the need for bus arbitration or waiting for the bus to be free, and enables full duplex communication. While standard PCI-X (133 MHz 64 bit) and PCIe ×4 have roughly the same data transfer rate, PCIe ×4 will give better performance if multiple device pairs are communicating simultaneously or if communication between a single device pair is bidirectional.

Format specifications are maintained and developed by the PCI-SIG (PCI Special Interest Group), a group of more than 900 companies that also maintains the Conventional PCI specifications.

Interconnect

PCIe devices communicate via a logical connection called an interconnect[4] or link. A link is a point-to-point communication channel between 2 PCIe ports, allowing both to send/receive ordinary PCI-requests (configuration read/write, I/O read/write, memory read/write) and interrupts (INTx, MSI, MSI-X). At the physical level, a link is composed of 1 or more lanes.[4] Low-speed peripherals (such as an 802.11 Wi-Fi card) use a single-lane (×1) link, while a graphics adapter typically uses a much wider (and thus, faster) 16-lane link.

Lane

A lane is composed of a transmit and a receive pair of differential lines, for a total of 4 wires or signal paths. Conceptually, each lane is a full-duplex byte stream, transporting data packets in 8-bit 'byte' format between the endpoints of a link, in both directions simultaneously.[5] Physical PCIe slots may contain from one to thirty-two lanes, in powers of two (1, 2, 4, 8, 16 and 32).[4] Lane counts are written with an × prefix (e.g., ×16 represents a sixteen-lane card or slot), with ×16 being the largest size in common use.[6]

Serial Bus

The bonded serial format was chosen over a traditional parallel format because of timing skew. Timing skew is a direct result of the finite speed at which an electrical signal travels down a wire. Because signal paths across an interface have different lengths, parallel signals transmitted simultaneously arrive at their destinations at slightly different times. When the interface clock rate increases to the point where the length a single bit occupies on the wire becomes comparable to the difference between path lengths, the bits of a single word no longer arrive at their destination simultaneously, making parallel recovery of the word difficult. Thus, the speed of the electrical signal, combined with the difference in length between the longest and shortest path in a parallel interconnect, leads to a naturally imposed maximum bandwidth. Serial channel bonding avoids this issue by not requiring the bits to arrive simultaneously. PCIe is just one example of a general trend away from parallel buses to serial interconnects. Other examples include Serial ATA, USB, SAS, FireWire and RapidIO. Multichannel serial design increases flexibility by allowing slow devices to be allocated fewer lanes than fast devices.
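
The skew argument can be made concrete with a little arithmetic. The following is a minimal sketch, not taken from the specification: the function names are illustrative, and the propagation speed of 1.5×10^8 m/s (roughly half the speed of light) is an assumed round figure for a circuit-board trace.

```python
# Assumed propagation speed on a PCB trace (illustrative round figure).
ASSUMED_VELOCITY_M_S = 1.5e8


def bit_length_m(bit_rate_hz, velocity_m_s=ASSUMED_VELOCITY_M_S):
    """Physical length that one bit occupies on the wire."""
    return velocity_m_s / bit_rate_hz


def skew_fraction_of_bit(path_difference_m, bit_rate_hz,
                         velocity_m_s=ASSUMED_VELOCITY_M_S):
    """Arrival-time skew between two paths, as a fraction of one bit time."""
    return path_difference_m / bit_length_m(bit_rate_hz, velocity_m_s)


if __name__ == "__main__":
    # The same 3 cm path mismatch at two different bit rates.
    for rate in (133e6, 2.5e9):
        frac = skew_fraction_of_bit(0.03, rate)
        print(f"{rate / 1e6:.0f} Mbit/s: skew is {frac:.1%} of a bit time")
```

Under these assumptions, a mismatch that is negligible at a parallel-bus clock rate becomes a large fraction of a bit time at multi-gigabit rates, which is the limit the paragraph describes.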

Form factors

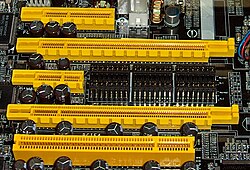

PCI Express (standard)

A PCIe card will fit into a slot of its physical size or larger, but may not fit into a smaller PCIe slot. Some slots use open-ended sockets to permit physically longer cards and will negotiate the best available electrical connection. The number of lanes actually connected to a slot may also be less than the number supported by the physical slot size. An example is a ×8 slot that actually only runs at ×1; these slots will allow any ×1, ×2, ×4 or ×8 card to be used, though only running at ×1 speed. This type of socket is described as a ×8 (×1 mode) slot, meaning it physically accepts up to ×8 cards but only runs at ×1 speed. The advantage gained is that a larger range of PCIe cards can still be used without requiring the motherboard hardware to support the full transfer rate, which keeps design and implementation costs down.

Pinout

| Pin | Side B | Side A | Comments |

|---|---|---|---|

| 1 | +12V | PRSNT1# | Pulled low to indicate card inserted |

| 2 | +12V | +12V | |

| 3 | Reserved | +12V | |

| 4 | Ground | Ground | |

| 5 | SMCLK | TCK | SMBus and JTAG port pins |

| 6 | SMDAT | TDI | |

| 7 | Ground | TDO | |

| 8 | +3.3V | TMS | |

| 9 | TRST# | +3.3V | |

| 10 | +3.3Vaux | +3.3V | Standby power |

| 11 | WAKE# | PWRGD | Link reactivation, power good. |

| Key notch | |||

| 12 | Reserved | Ground | |

| 13 | Ground | REFCLK+ | Reference clock differential pair |

| 14 | HSOp(0) | REFCLK- | Lane 0 transmit data, + and − |

| 15 | HSOn(0) | Ground | |

| 16 | Ground | HSIp(0) | Lane 0 receive data, + and − |

| 17 | PRSNT2# | HSIn(0) | |

| 18 | Ground | Ground | |

| 19 | HSOp(1) | Reserved | Lane 1 transmit data, + and − |

| 20 | HSOn(1) | Ground | |

| 21 | Ground | HSIp(1) | Lane 1 receive data, + and − |

| 22 | Ground | HSIn(1) | |

| 23 | HSOp(2) | Ground | Lane 2 transmit data, + and − |

| 24 | HSOn(2) | Ground | |

| 25 | Ground | HSIp(2) | Lane 2 receive data, + and − |

| 26 | Ground | HSIn(2) | |

| 27 | HSOp(3) | Ground | Lane 3 transmit data, + and − |

| 28 | HSOn(3) | Ground | |

| 29 | Ground | HSIp(3) | Lane 3 receive data, + and − |

| 30 | Ground | HSIn(3) | |

| 31 | PRSNT2# | Ground | |

| 32 | Ground | Reserved | |

An ×1 slot is a shorter version of this, ending after pin 18. ×8 and ×16 slots extend the pattern.

| Ground pin | Zero volt reference |

|---|---|

| Power pin | Supplies power to the PCIe card |

| Output pin | Signal from the card to the motherboard |

| Input pin | Signal from the motherboard to the card |

| Open drain | May be pulled low and/or sensed by multiple cards |

| Sense pin | Pulled up by the motherboard, pulled low by the card |

| Reserved | Not presently used, do not connect |

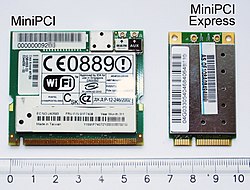

PCI Express Mini Card

PCI Express Mini Card (also known as Mini PCI Express, Mini PCIe, and Mini PCI-E) is a replacement for the Mini PCI form factor based on PCI Express. It is developed by the PCI-SIG. The host device supports both PCI Express and USB 2.0 connectivity, and each card uses whichever the designer feels most appropriate to the task. Most laptop computers built after 2005 are based on PCI Express and can have several Mini Card slots.

Physical dimensions

PCI Express Mini Cards are 30×50.95 mm. There is a 52-pin edge connector, consisting of two staggered rows on a 0.8 mm pitch. Each row has 8 contacts, a gap equivalent to 4 contacts, then a further 18 contacts. A half-length card, measuring 30×26.8 mm, is also specified. Cards have a thickness of 1.0 mm (excluding components).

Electrical interface

PCI Express Mini Card edge connectors provide multiple connections and buses:

- PCIe×1

- USB 2.0

- SMBus

- GPS

- Wires to diagnostic LEDs for wireless network (e.g., Wi-Fi) status on the computer's chassis

- SIM card for GSM and WCDMA applications

- Future extension for another PCIe lane

- 1.5 and 3.3 volt power

Mini PCIe SSD connector

Some netbooks (notably the Asus EeePC, MacBook Air, and Dell mini9 and mini10) use a variant of the PCI Express Mini Card as an SSD. This variant uses the reserved and several non-reserved pins to implement SATA and IDE interface passthrough, keeping only USB, ground lines, and sometimes the core PCIe ×1 bus intact.[7] This makes the 'miniPCIe' flash and solid state drives sold for netbooks largely incompatible with true PCI Express Mini implementations. Also, the typical Asus miniPCIe SSD is 71 mm long, causing the Dell 51 mm model to often be (incorrectly) referred to as half length. A true 51 mm Mini PCIe SSD was announced in 2009, with two stacked PCB layers, which allows for higher storage capacity. The announced design preserves the PCIe interface, making it compatible with the standard mini PCIe slot. No working product has yet been developed, likely as a result of the popularity of the alternative variant.

PCI Express External Cabling

PCI Express External Cabling (also known as External PCI Express or Cabled PCI Express) specifications were released by the PCI-SIG in February 2007.[8][9]

Standard cables and connectors have been defined for ×1, ×4, ×8, and ×16 link widths, with a transfer rate of 250 MB/s per lane. The PCI-SIG also expects the standard to evolve to reach the 500 MB/s per lane found in PCI Express 2.0. The maximum cable length has not yet been determined.

Derivative forms

There are several other expansion card types derived from PCIe. These include:

- Low height card

- ExpressCard: successor to the PC card form factor (with ×1 PCIe and USB 2.0; hot-pluggable)

- PCI Express ExpressModule: a hot-pluggable modular form factor defined for servers and workstations

- XMC: similar to the CMC/PMC form factor (with ×4 PCIe or Serial RapidIO)

- AdvancedTCA: a complement to CompactPCI for larger applications; supports serial based backplane topologies

- AMC: a complement to the AdvancedTCA specification; supports processor and I/O modules on ATCA boards (×1, ×2, ×4 or ×8 PCIe).

- FeaturePak: a tiny expansion card format (43×65 mm) for embedded and small form factor applications; it implements two ×1 PCIe links on a high-density connector along with USB, I2C, and up to 100 points of I/O.

- Universal IO: A variant from Super Micro Computer Inc designed for use in low profile rack mounted chassis. It has the connector bracket reversed so it cannot fit in a normal PCI Express socket, but is pin compatible and may be inserted if the bracket is removed.

History

While in development, PCIe was initially referred to as HSI (for High Speed Interconnect), and underwent a name change to 3GIO (for 3rd Generation I/O) before finally settling on its PCI-SIG name, PCI Express. It was first drawn up by a technical working group named the Arapaho Work Group (AWG) which, for initial drafts, consisted of an Intel-only team of architects. Subsequently the AWG was expanded to include industry partners.

PCIe is a technology under constant development and improvement. The current PCI Express implementation is version 3.0.

PCI Express 1.0a

In 2003, PCI-SIG introduced PCIe 1.0a, with a per-lane data rate of 250 MB/s and a transfer rate of 2.5 GT/s.

PCI Express 2.0

PCI-SIG announced the availability of the PCI Express Base 2.0 specification on 15 January 2007.[10] The PCIe 2.0 standard doubles the per-lane throughput from the PCIe 1.0 standard's 250 MB/s to 500 MB/s. This means a 32-lane PCIe connector (×32) can support throughput up to 16 GB/s aggregate. The PCIe 2.0 standard uses a signaling rate of 5.0 GT/s, while the first version operates at 2.5 GT/s.

PCIe 2.0 motherboard slots are fully backward compatible with PCIe v1.x cards. PCIe 2.0 cards are also generally backward compatible with PCIe 1.x motherboards, using the available bandwidth of PCI Express 1.1. Overall, a graphics card or motherboard designed for v2.0 will work with a counterpart designed for v1.1 or v1.0.

The PCI-SIG also said that PCIe 2.0 features improvements to the point-to-point data transfer protocol and its software architecture.[11]

In June 2007 Intel released the specification of the Intel P35 chipset, which supports only PCIe 1.1, not PCIe 2.0.[12] The P35 block diagram can cause confusion because it states that the Intel P35 has a PCIe x16 graphics link (8 GB/s) and 6 PCIe x1 links (500 MB/s each).[13] The P965 block diagram shows the same number of lanes and the same bandwidth, although that chipset was released before PCIe 2.0 was finalized. Intel's first PCIe 2.0 capable chipset was the X38, and boards began to ship from various vendors (Abit, Asus, Gigabyte) as of October 21, 2007.[14] AMD started supporting PCIe 2.0 with its AMD 700 chipset series and nVidia started with the MCP72.[15] The specification of the Intel P45 chipset includes PCIe 2.0.

PCI Express 2.1

PCI Express 2.1 supports a large proportion of the management, support, and troubleshooting systems planned to be fully implemented in PCI Express 3.0. However, the speed is the same as PCI Express 2.0. Most motherboards sold currently come with PCI Express 2.0 connectors.

PCI Express 3.0

The PCI Express Base specification revision 3.0 was made available in November 2010, after multiple delays. In August 2007, PCI-SIG announced that PCI Express 3.0 would carry a bit rate of 8 gigatransfers per second, and that it would be backwards compatible with existing PCIe implementations. At that time, it was also announced that the final specification for PCI Express 3.0 would be delayed until 2011,[16] although more recent sources (see below) stated that it might be available towards the end of 2010. New features for the PCIe 3.0 specification include a number of optimizations for enhanced signaling and data integrity, including transmitter and receiver equalization, PLL improvements, clock data recovery, and channel enhancements for currently supported topologies.[17]

Following a six-month technical analysis of the feasibility of scaling the PCIe interconnect bandwidth, PCI-SIG found that 8 gigatransfers per second can be manufactured in mainstream silicon process technology and deployed with existing low-cost materials and infrastructure, while maintaining full compatibility (with negligible impact) with the PCIe protocol stack.

PCIe 2.0 delivers 5 GT/s, but employs an 8b/10b encoding scheme which results in a 20 percent overhead on the raw bit rate. PCIe 3.0 removes the requirement for 8b/10b encoding and instead uses a technique called "scrambling", in which "a known binary polynomial is applied to a data stream in a feedback topology. Because the scrambling polynomial is known, the data can be recovered by running it through a feedback topology using the inverse polynomial".[18] It combines scrambling with a 128b/130b encoding scheme, reducing the overhead to approximately 1.5%. As a result, PCIe 3.0's 8 GT/s bit rate effectively delivers double the PCIe 2.0 bandwidth. According to an official press release by PCI-SIG on 8 August 2007:

"The final PCIe 3.0 specifications, including form factor specification updates, may be available by late 2009, and could be seen in products starting in 2010 and beyond."[19]

As of January 2010, the release of the final specifications had been delayed until Q2 2010.[20] PCI-SIG expects the PCIe 3.0 specifications to undergo rigorous technical vetting and validation before being released to the industry. This process, which was followed in the development of prior generations of the PCIe Base and various form factor specifications, includes the corroboration of the final electrical parameters with data derived from test silicon and other simulations conducted by multiple members of the PCI-SIG.

On May 31, 2010, it was announced that the 3.0 specification would be coming in 2010, but not until the second half of the year.[21] Then, on June 23, 2010, the PCI Special Interest Group released a timetable showing the final 3.0 specification due in the fourth quarter of 2010.[22]

Finally, on November 18, 2010, the PCI Special Interest Group officially published the finalized PCI Express 3.0 specification to its members, allowing them to build devices based on this new version of PCI Express.[23]

Current status

PCI Express has replaced AGP as the default interface for graphics cards on new systems. With a few exceptions, all graphics cards being released as of 2009 and 2010 from AMD (ATI) and NVIDIA use PCI Express. NVIDIA uses the high bandwidth data transfer of PCIe for its Scalable Link Interface (SLI) technology, which allows multiple graphics cards of the same chipset and model number to be run in tandem, allowing increased performance. ATI also has developed a multi-GPU system based on PCIe called CrossFire. AMD and NVIDIA have released motherboard chipsets which support up to four PCIe ×16 slots, allowing tri-GPU and quad-GPU card configurations.

Uptake for other forms of PC expansion has been much slower and conventional PCI remains dominant. PCI Express is commonly used for disk array controllers, onboard gigabit Ethernet and Wi-Fi but add-in cards are still generally conventional PCI, particularly at the lower end of the market. Sound cards, TV/capture-cards, modems, serial port/USB/Firewire cards, network/WiFi cards and other cards with low-speed interfaces are still nearly all conventional PCI. For this reason most motherboards supporting PCI Express offer conventional PCI slots as well. As of 2010 many of these cards are starting to make their way over to x8, x4, or x1 PCIe slots which are present in motherboards. For instance, almost all new sound cards from the second half of 2010 are now PCIe.[citation needed]

ExpressCard has been introduced on several mid- to high-range laptops such as Apple's MacBook Pro line. Unlike desktops, however, laptops frequently only have one expansion slot. Replacing the PC card slot with ExpressCard slot means a loss in compatibility with PC-card devices.

Hardware protocol summary

The PCIe link is built around dedicated unidirectional pairs of serial (1-bit), point-to-point connections known as lanes. This is in sharp contrast to the conventional PCI connection, which is a bus-based system where all the devices share the same bidirectional, 32-bit (or 64-bit), parallel bus.

PCI Express is a layered protocol, consisting of a transaction layer, a data link layer, and a physical layer. The Data Link Layer is subdivided to include a media access control (MAC) sublayer. The Physical Layer is subdivided into logical and electrical sublayers. The Physical logical-sublayer contains a physical coding sublayer (PCS). (Terms borrowed from the IEEE 802 model of networking protocol.)

Physical layer

The PCIe Physical Layer (PHY, PCIEPHY, PCI Express PHY, or PCIe PHY) specification is divided into two sub-layers, corresponding to electrical and logical specifications. The logical sublayer is sometimes further divided into a MAC sublayer and a PCS, although this division is not formally part of the PCIe specification. A specification published by Intel, the PHY Interface for PCI Express (PIPE),[24] defines the MAC/PCS functional partitioning and the interface between these two sub-layers. The PIPE specification also identifies the physical media attachment (PMA) layer, which includes the serializer/deserializer (SerDes) and other analog circuitry; however, since SerDes implementations vary greatly among ASIC vendors, PIPE does not specify an interface between the PCS and PMA.

At the electrical level, each lane consists of two unidirectional LVDS or PCML pairs at 2.5 Gbit/s. Transmit and receive are separate differential pairs, for a total of 4 data wires per lane.

A connection between any two PCIe devices is known as a link, and is built up from a collection of 1 or more lanes. All devices must minimally support single-lane (x1) link. Devices may optionally support wider links composed of 2, 4, 8, 12, 16, or 32 lanes. This allows for very good compatibility in two ways:

- a PCIe card will physically fit (and work correctly) in any slot that is at least as large as it is (e.g., an ×1 sized card will work in any sized slot);

- a slot of a large physical size (e.g., ×16) can be wired electrically with fewer lanes (e.g., ×1, ×4, ×8, or ×12) as long as it provides the ground connections required by the larger physical slot size.

In both cases, PCIe will negotiate the highest mutually supported number of lanes. Many graphics cards, motherboards and BIOS versions are verified to support ×1, ×4, ×8 and ×16 connectivity on the same connection.
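
The two compatibility rules and the width negotiation can be sketched as follows. This is a simplified illustration rather than the actual link-training procedure: the function names are hypothetical, and each port's capabilities are assumed to be given as a plain set of supported widths.

```python
# Link widths named in the text; every device must support x1.
VALID_WIDTHS = {1, 2, 4, 8, 12, 16, 32}


def card_fits(card_size, slot_size, open_ended=False):
    """A card fits any slot at least as large as itself, or an open-ended slot."""
    return open_ended or card_size <= slot_size


def negotiate_link_width(card_widths, slot_widths, wired_lanes):
    """Return the widest lane count both ends support within the wired lanes.

    card_widths / slot_widths: sets of widths each port supports.
    wired_lanes: lanes electrically connected to the slot, which may be
    fewer than the physical slot size suggests.
    """
    for name, widths in (("card", card_widths), ("slot", slot_widths)):
        if not widths <= VALID_WIDTHS:
            raise ValueError(f"{name} lists an invalid width")
        if 1 not in widths:
            raise ValueError(f"{name} must support x1")
    if wired_lanes < 1:
        raise ValueError("at least one lane must be wired")
    common = {w for w in card_widths & slot_widths if w <= wired_lanes}
    return max(common)  # never empty: x1 is always common
```

For example, a ×16 card in a ×16-sized slot that is wired for only four lanes would come up as ×4 in this model, provided both ends list ×4 among their supported widths, and as ×1 otherwise.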

Even though the two would be signal-compatible, it is not usually possible to place a physically larger PCIe card (e.g., a ×16 sized card) into a smaller slot, though if the PCIe slots are open-ended, by design or by hack, some motherboards will allow this.[citation needed]

The width of a PCIe connector is 8.8 mm, while the height is 11.25 mm, and the length is variable. The fixed section of the connector is 11.65 mm in length and contains 2 rows of 11 (22 pins total), while the length of the other section is variable depending on the number of lanes. The pins are spaced at 1 mm intervals, and the thickness of the card going into the connector is 1.8 mm.[25][26]

| Lanes | Pins (total) | Pins (variable section) | Length (total) | Length (variable section) |

|---|---|---|---|---|

| ×1 | 2×18=36[27] | 2×7=14 | 25 mm | 7.65 mm |

| ×4 | 2×32=64 | 2×21=42 | 39 mm | 21.65 mm |

| ×8 | 2×49=98 | 2×38=76 | 56 mm | 38.65 mm |

| ×16 | 2×82=164 | 2×71=142 | 89 mm | 71.65 mm |

Data transmission

PCIe sends all control messages, including interrupts, over the same links used for data. The serial protocol can never be blocked, so latency is still comparable to conventional PCI, which has dedicated interrupt lines.

Data transmitted on multiple-lane links is interleaved, meaning that each successive byte is sent down successive lanes. The PCIe specification refers to this interleaving as "data striping". While requiring significant hardware complexity to synchronize (or deskew) the incoming striped data, striping can significantly reduce the latency of the nth byte on a link. Due to padding requirements, striping may not necessarily reduce the latency of small data packets on a link.
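
The byte interleaving described above can be illustrated with a short sketch. The function names are illustrative; padding, framing symbols and lane deskew are deliberately not modeled.

```python
def stripe(data: bytes, lanes: int) -> list:
    """Send each successive byte down the next lane (round-robin)."""
    if lanes < 1:
        raise ValueError("a link has at least one lane")
    # Lane i carries bytes i, i + lanes, i + 2*lanes, ...
    return [data[i::lanes] for i in range(lanes)]


def unstripe(lane_data: list) -> bytes:
    """Re-interleave the per-lane byte streams into the original order."""
    lanes = len(lane_data)
    out = bytearray(sum(len(d) for d in lane_data))
    for i, d in enumerate(lane_data):
        out[i::lanes] = d
    return bytes(out)


if __name__ == "__main__":
    per_lane = stripe(b"ABCDEFGH", 4)
    print(per_lane)            # [b'AE', b'BF', b'CG', b'DH']
    print(unstripe(per_lane))  # b'ABCDEFGH'
```

On a ×4 link, the eighth byte arrives after two byte times per lane instead of eight, which is the latency benefit the text refers to.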

As with all high data rate serial transmission protocols, clocking information must be embedded in the signal. At the physical level, PCI Express utilizes the very common 8b/10b encoding scheme[18] to ensure that strings of consecutive ones or consecutive zeros are limited in length. This is necessary to prevent the receiver from losing track of where the bit edges are. In this coding scheme every 8 (uncoded) payload bits of data are replaced with 10 (encoded) bits of transmit data, causing a 20% overhead in the electrical bandwidth. To improve the available bandwidth, PCI Express version 3.0 employs 128b/130b encoding instead: similar but with much lower overhead.
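
The overhead figures follow directly from the ratio of payload bits to transmitted bits. A minimal sketch of that arithmetic (the function name is illustrative and not part of any specification):

```python
from fractions import Fraction


def encoding_overhead(payload_bits: int, coded_bits: int) -> Fraction:
    """Fraction of transmitted bits that carry no payload."""
    if not 0 < payload_bits <= coded_bits:
        raise ValueError("expected 0 < payload_bits <= coded_bits")
    return Fraction(coded_bits - payload_bits, coded_bits)


if __name__ == "__main__":
    for name, (payload, coded) in {"8b/10b": (8, 10),
                                   "128b/130b": (128, 130)}.items():
        overhead = encoding_overhead(payload, coded)
        print(f"{name}: {float(overhead):.2%} of the line rate is overhead")
    # 8b/10b: 20.00% of the line rate is overhead
    # 128b/130b: 1.54% of the line rate is overhead
```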

Many other protocols (such as SONET) use a different form of encoding known as scrambling to embed clock information into data streams. The PCIe specification also defines a scrambling algorithm, but it is used to reduce electromagnetic interference (EMI) by preventing repeating data patterns in the transmitted data stream.
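
To show how scrambling breaks up repeating patterns, here is a sketch of an additive scrambler built on a 16-bit linear-feedback shift register. The polynomial x^16 + x^5 + x^4 + x^3 + 1 and the seed 0xFFFF are assumed here as the values commonly cited for PCIe 1.x/2.0; the bit ordering is simplified and the rules for which symbols are scrambled are ignored, so the output is not bit-exact with real hardware.

```python
def lfsr_keystream(n_bytes, seed=0xFFFF, taps=(16, 5, 4, 3)):
    """Yield n_bytes pseudo-random bytes from a 16-bit Fibonacci LFSR."""
    state = seed & 0xFFFF
    if state == 0:
        raise ValueError("an all-zero LFSR state never changes")
    for _ in range(n_bytes):
        byte = 0
        for bit in range(8):
            # Feedback is the XOR of the tapped register bits.
            feedback = 0
            for t in taps:
                feedback ^= (state >> (t - 1)) & 1
            # The bit shifted out of the register becomes keystream.
            byte |= ((state >> 15) & 1) << bit
            state = ((state << 1) | feedback) & 0xFFFF
        yield byte


def scramble(data: bytes, seed=0xFFFF) -> bytes:
    """XOR data with the keystream; applying it twice restores the data."""
    return bytes(b ^ k for b, k in zip(data, lfsr_keystream(len(data), seed)))


if __name__ == "__main__":
    idle = bytes(16)                 # a highly repetitive pattern
    on_wire = scramble(idle)
    print(on_wire.hex())
    print(scramble(on_wire) == idle)  # True: same LFSR, same seed
```

Because the receiver runs an identical register from the same seed, XOR-ing the received stream with the same keystream recovers the original data.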

Data link layer

The Data Link Layer implements the sequencing of the transaction layer packets (TLPs) that are generated by the transaction layer, data protection via a 32-bit cyclic redundancy check code (CRC, known in this context as LCRC) and an acknowledgement protocol (ACK and NAK signaling). TLPs that pass an LCRC check and a sequence number check result in an acknowledgement, or ACK, while those that fail these checks result in a negative acknowledgement, or NAK. TLPs that result in a NAK, or timeouts that occur while waiting for an ACK, result in the TLPs being replayed from a special buffer in the transmit data path of the data link layer. This guarantees delivery of TLPs in spite of electrical noise, barring any malfunction of the device or transmission medium.
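
The sequencing, LCRC check and replay behavior can be sketched as below. This is a toy model under stated assumptions: class and function names are hypothetical, `zlib.crc32` stands in for the LCRC computation, sequence numbers are assumed to be 12 bits wide, and a receiver that sees any unexpected sequence number simply answers NAK.

```python
import zlib
from collections import OrderedDict

SEQ_MODULO = 4096  # assumption: 12-bit sequence numbers


def lcrc(seq: int, payload: bytes) -> int:
    """Stand-in 32-bit CRC over the sequence number and payload."""
    return zlib.crc32(seq.to_bytes(2, "big") + payload)


def make_frame(seq: int, payload: bytes):
    return (seq, payload, lcrc(seq, payload))


class Transmitter:
    def __init__(self):
        self.next_seq = 0
        self.replay_buffer = OrderedDict()  # seq -> payload, oldest first

    def send(self, payload: bytes):
        seq = self.next_seq
        self.next_seq = (seq + 1) % SEQ_MODULO
        self.replay_buffer[seq] = payload  # keep a copy until acknowledged
        return make_frame(seq, payload)

    def on_ack(self, seq: int):
        """An ACK for seq also acknowledges every older buffered TLP."""
        if seq not in self.replay_buffer:
            return
        for buffered in list(self.replay_buffer):
            del self.replay_buffer[buffered]
            if buffered == seq:
                break

    def replay(self):
        """On a NAK or ACK timeout, resend all unacknowledged TLPs in order."""
        return [make_frame(s, p) for s, p in self.replay_buffer.items()]


class Receiver:
    def __init__(self):
        self.expected_seq = 0
        self.delivered = []

    def receive(self, frame):
        seq, payload, crc = frame
        if crc != lcrc(seq, payload) or seq != self.expected_seq:
            return ("NAK", None)
        self.delivered.append(payload)
        self.expected_seq = (seq + 1) % SEQ_MODULO
        return ("ACK", seq)
```

If the second of three TLPs is corrupted in transit, the receiver in this model answers NAK for it and for the third one, and a single call to `replay()` resends both in order, so the payloads are still delivered exactly once and in sequence.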

ACK and NAK signals are communicated via a low-level packet known as a data link layer packet (DLLP). DLLPs are also used to communicate flow control information between the transaction layers of two connected devices, as well as some power management functions.

Transaction layer

PCI Express implements split transactions (transactions with request and response separated by time), allowing the link to carry other traffic while the target device gathers data for the response.

PCI Express utilizes credit-based flow control. In this scheme, a device advertises an initial amount of credit for each of the receive buffers in its transaction layer. The device at the opposite end of the link, when sending transactions to this device, will count the number of credits consumed by each TLP from its account. The sending device may only transmit a TLP when doing so does not result in its consumed credit count exceeding its credit limit. When the receiving device finishes processing the TLP from its buffer, it signals a return of credits to the sending device, which then increases the credit limit by the restored amount. The credit counters are modular counters, and the comparison of consumed credits to credit limit requires modular arithmetic. The advantage of this scheme (compared to other methods such as wait states or handshake-based transfer protocols) is that the latency of credit return does not affect performance, provided that the credit limit is not encountered. This assumption is generally met if each device is designed with adequate buffer sizes.
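
The credit accounting, including the modular comparison, can be sketched from the sender's point of view. The class name and the 8-bit counter width are assumptions made for illustration; the model covers a single receive buffer and assumes the receiver never has more than half the counter range outstanding.

```python
class CreditGate:
    """Sender-side credit accounting for one receive buffer."""

    def __init__(self, initial_credits: int, field_bits: int = 8):
        self.modulus = 1 << field_bits
        self.half = self.modulus // 2
        if not 0 < initial_credits < self.half:
            raise ValueError("initial credits must be below half the counter range")
        self.credit_limit = initial_credits   # advertised by the receiver
        self.credits_consumed = 0             # counted by the sender

    def can_send(self, cost: int = 1) -> bool:
        if not 0 < cost < self.half:
            raise ValueError("cost must be below half the counter range")
        # Modular comparison: both counters wrap, so compare their difference.
        headroom = (self.credit_limit
                    - (self.credits_consumed + cost)) % self.modulus
        return headroom <= self.half

    def send(self, cost: int = 1) -> bool:
        """Consume credits for one TLP; return False if it must wait."""
        if not self.can_send(cost):
            return False
        self.credits_consumed = (self.credits_consumed + cost) % self.modulus
        return True

    def credits_returned(self, amount: int) -> None:
        """The receiver freed buffer space, so the limit moves forward."""
        self.credit_limit = (self.credit_limit + amount) % self.modulus
```

As long as credits are returned before the sender runs out, `send()` never returns False and the round-trip time of the credit update stays off the critical path, which is the advantage the text describes.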

PCIe 1.x is often quoted as supporting a data rate of 250 MB/s in each direction, per lane. This figure is calculated from the physical signaling rate (2.5 Gbaud) divided by the encoding overhead (10 bits per byte). This means a sixteen-lane (×16) PCIe card would then be theoretically capable of 16×250 MB/s = 4 GB/s in each direction. While this is correct in terms of data bytes, more meaningful calculations will be based on the usable data payload rate, which depends on the profile of the traffic, which is a function of the high-level (software) application and intermediate protocol levels.
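
The calculation above can be written out as a short sketch. The function names are illustrative, and MB/s is taken to mean 10^6 bytes per second.

```python
def lane_bandwidth_mb_s(transfer_rate_gt_s, payload_bits=8, coded_bits=10):
    """Payload bandwidth of one lane in one direction, in MB/s."""
    payload_bits_per_s = transfer_rate_gt_s * 1e9 * payload_bits / coded_bits
    return payload_bits_per_s / 8 / 1e6


def link_bandwidth_mb_s(transfer_rate_gt_s, lanes, **encoding):
    """Payload bandwidth of a multi-lane link in one direction, in MB/s."""
    return lanes * lane_bandwidth_mb_s(transfer_rate_gt_s, **encoding)


if __name__ == "__main__":
    print(lane_bandwidth_mb_s(2.5))      # 250.0  (PCIe 1.x lane, 8b/10b)
    print(link_bandwidth_mb_s(2.5, 16))  # 4000.0 (PCIe 1.x x16 link)
    print(lane_bandwidth_mb_s(5.0))      # 500.0  (PCIe 2.0 lane, 8b/10b)
```

Passing `payload_bits=128, coded_bits=130` with a rate of 8 GT/s gives the corresponding figure for the 128b/130b encoding used by PCIe 3.0, a little under 1 GB/s per lane.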

Like other high data rate serial interconnect systems, PCIe has a protocol and processing overhead due to the additional transfer robustness (CRC and acknowledgements). Long continuous unidirectional transfers (such as those typical in high-performance storage controllers) can reach more than 95% of PCIe's raw (lane) data rate. These transfers also benefit the most from an increased number of lanes (×2, ×4, etc.). But in more typical applications (such as a USB or Ethernet controller), the traffic profile is characterized as short data packets with frequent enforced acknowledgements.[28] This type of traffic reduces the efficiency of the link, due to overhead from packet parsing and forced interrupts (either in the device's host interface or the PC's CPU). Being a protocol for devices connected to the same printed circuit board, it does not require the same tolerance for transmission errors as a protocol for communication over longer distances, and thus, this loss of efficiency is not particular to PCIe.
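
The effect of packet size on link efficiency can be estimated with a simple ratio. In this sketch the function name is illustrative and the default of 20 bytes of per-packet overhead (framing, sequence number, header and LCRC) is an assumption; acknowledgement and flow-control packets are not modeled.

```python
def payload_efficiency(payload_bytes: int, overhead_bytes: int = 20) -> float:
    """Share of transmitted bytes that is payload, for one packet."""
    if payload_bytes <= 0 or overhead_bytes < 0:
        raise ValueError("payload must be positive, overhead non-negative")
    return payload_bytes / (payload_bytes + overhead_bytes)


if __name__ == "__main__":
    for size in (16, 64, 256, 4096):
        print(f"{size:5d}-byte payload: {payload_efficiency(size):.1%}")
```

Under this assumption, large payloads leave only a small share of the link to overhead, while short packets spend a much larger share of the link on it.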

Uses

External PCIe cards

Theoretically, external PCIe could give a notebook the graphics power of a desktop, by connecting a notebook with any PCIe desktop video card (enclosed in its own external housing, with strong power supply and cooling). This is possible with an ExpressCard interface, which provides single lane v1.1 performance.

IBM/Lenovo has also included a PCI-Express slot in their Advanced Docking Station 250310U. It provides a half sized slot with an x16 length slot, but only x1 connectivity.[34] However, docking stations with expansion slots are becoming less common as the laptops are getting more advanced video cards and either DVI-D interfaces, or DVI-D pass through for port replicators and docking stations.

Additionally, Nvidia has developed Quadro Plex external PCIe Video Cards that can be used for advanced graphic applications. These video cards require a PCI Express ×8 or ×16 slot for the interconnection cable.[35] AMD has recently announced the ATI XGP technology based on proprietary cabling solution which is compatible with PCIe x8 signal transmissions.[36] This connector is available on the Fujitsu Amilo and the Acer Ferrari One notebooks. Only Fujitsu has an actual external box available, which also works on the Ferrari One. Recently Acer launched the Dynavivid graphics dock for XGP. Shuttle introduced their own external graphics solutions, GXT.

There are now card hubs in development that one can connect to a laptop through an ExpressCard slot, though they are currently rare, obscure, or unavailable on the open market. These hubs can have full-sized cards placed in them.

Competing protocols

Several communications standards have emerged based on high bandwidth serial architectures. These include but are not limited to InfiniBand, RapidIO, StarFabric and HyperTransport.

Essentially, the differences are based on the tradeoffs between flexibility and extensibility versus latency and overhead. An example of such a tradeoff is adding complex header information to a transmitted packet to allow for complex routing (PCI Express is not capable of this). The additional overhead reduces the effective bandwidth of the interface and complicates bus discovery and initialization software. Also, making the system hot-pluggable requires that software track network topology changes. Examples of buses suited for this purpose are InfiniBand and StarFabric.

Another example is making the packets shorter to decrease latency (as is required if a bus is to be operated as a memory interface). Smaller packets mean that the packet headers consume a higher percentage of the packet, thus decreasing the effective bandwidth. Examples of bus protocols designed for this purpose are RapidIO and HyperTransport.

PCI Express falls somewhere in the middle, targeted by design as a system interconnect (local bus) rather than a device interconnect or routed network protocol. Additionally, its design goal of software transparency constrains the protocol and raises its latency somewhat.

Development tools

When developing or troubleshooting a PCI Express bus, examining the hardware signals can be essential to finding problems. Logic analyzers and bus analyzers collect, analyze, decode, and store signals so that engineers can examine the high-speed waveforms afterward.

See also

- Accelerated Graphics Port (AGP)

- Active State Power Management (ASPM)

- Audio/modem riser (AMR)

- Extended Industry Standard Architecture (EISA)

- Industry Standard Architecture (ISA)

- List of device bandwidths (includes PCI Express)

- Micro Channel architecture (MCA)

- NuBus

- PCI Configuration Space

- PCI Local Bus

- PCI-X

- Root complex

- VESA Local Bus (VLB)

- Zorro II and Zorro III (Amiga Autoconfig Bus)

References

- ^ "PCI Express 2.0 (Training)". MindShare. Retrieved 2009-12-07.

- ^ "PCI Express Base specification". PCI-SIG. Retrieved 2010-10-18.

- ^ "HowStuffWorks "How PCI Express Works"". Computer.howstuffworks.com. Retrieved 2009-12-07.

- ^ a b c "PCI Express Architecture Frequently Asked Questions". PCI-SIG. Retrieved 23 November 2008.

- ^ "PCI Express Bus". Retrieved 2010-06-12.

- ^ "PCI Express – An Overview of the PCI Express Standard - Developer Zone - National Instruments". Zone.ni.com. 2009-08-13. Retrieved 2009-12-07.

- ^ "Eee PC Research". Retrieved 26 October 2009.

- ^ "PCI Express External Cabling 1.0 Specification". Retrieved 9 February 2007.

- ^ "February 7, 2007". PCI-SIG. 2007-02-07. Retrieved 2010-09-11.

- ^ "PCI Express Base 2.0 specification announced" (PDF) (Press release). PCI-SIG. 15 January 2007. Retrieved 9 February 2007. — note that in this press release the term aggregate bandwidth refers to the sum of incoming and outgoing bandwidth; using this terminology the aggregate bandwidth of full duplex 100BASE-TX is 200 Mbit/s

- ^ Tony Smith (11 October 2006). "PCI Express 2.0 final draft spec published". The Register. Retrieved 9 February 2007.

- ^ "Intel P35 Express Chipset Product Brief" (PDF). Intel. Retrieved 5 September 2007.

- ^ Richard Swinburne (21 May 2007). "First look — Intel P35 chipset". bit-tech.net. Retrieved 19 June 2007.

- ^ Gary Key & Wesley Fink (21 May 2007). "Intel P35: Intel's Mainstream Chipset Grows Up". AnandTech. Retrieved 21 May 2007.

- ^ Anh Huynh (8 February 2007). "NVIDIA "MCP72" Details Unveiled". AnandTech. Retrieved 9 February 2007.

- ^ Hachman, Mark. "PC Magazine". Pcmag.com. Retrieved 2010-09-11.

- ^ "PCI Express 3.0 Bandwidth: 8.0 Gigatransfers/s". ExtremeTech. 9 August 2007. Retrieved 5 September 2007.

- ^ a b "PCI Express 3.0 Frequently Asked Questions". PCI-SIG. Retrieved 23 November 2010.

- ^ "PCI-SIG Announces PCI Express 3.0 Bit Rate For Products In 2011 And Beyond". 8 August 2007. Retrieved 24 March 2008.

- ^ "PCI Express 3.0 Spec Pushed Out to 2010". 5 August 2009. Retrieved 30 August 2009.

- ^ "PCI-Express 3.0 specs to finally be released in 2H". 31 May 2010. Retrieved 7 June 2010.

- ^ "PCI Express 3.0 Nears Completion". 23 Jun 2010. Retrieved 16 August 2010. "The PCI Special Interest Group released a detailed timetable for the next-generation PCI Express 3.0 specification on Wednesday, with a final specification due by the fourth quarter and products possibly in early 2011."

- ^ "PCI Special Interest Group Publishes PCI Express 3.0 Standard". 18 November 2010. Retrieved 18 November 2010.

- ^ "PHY Interface for the PCI Express Architecture, version 2.00" (PDF). Retrieved 21 May 2008.

- ^ "Mechanical Drawing for PCI Express Connector". Retrieved 7 December 2007.

- ^ "FCi schematic for PCIe connectors" (PDF). Retrieved 7 December 2007.

- ^ "PCI Express 1x, 4x, 8x, 16x bus pinout and wiring". Pinouts.ru. Retrieved 2009-12-07.

- ^ "Computer Peripherals And Interfaces". Technical Publications Pune. Retrieved 23 July 2009.

- ^ "Magma ExpressBox1: Cabled PCI Express for Desktops and Laptops". Magma.com. Retrieved 2010-09-11.

- ^ "TheInquirer". TheInquirer. Retrieved 2010-09-11.

- ^ "Custompcmag.co.uk". Custompcmag.co.uk. Retrieved 2010-09-11.

- ^ ASUSTeK Computer

- ^ "Technology Beats. News and Reviews". VR-Zone. 1995-09-09. Retrieved 2010-09-11.

- ^ "IBM/Lenovo Thinkpad Advanced Dock Overview". 307.ibm.com. 2010-03-07. Retrieved 2010-09-11.

- ^ "NVIDIA Quadro Plex VCS – Advanced visualization and remote graphics". Nvidia.com. Retrieved 2010-09-11.

- ^ "Advanced Micro Devices, AMD – Global Provider of Innovative Microprocessor, Graphics and Media Solutions". Ati.amd.com. Retrieved 2010-09-11.

External links

- Introduction to PCI Protocol

- "PCI Express Base Specification Revision 1.0". PCI-SIG. 29 April 2002. (Requires PCI-SIG membership)

- PCI-SIG, the industry organization that maintains and develops the various PCI standards

- Intel Developer Network for PCI Express Architecture