Pixel

In digital imaging, a pixel, pel,[1] dot, or picture element[2] is a physical point in a raster image, or the smallest addressable element in an all-points-addressable display device; it is therefore the smallest controllable element of a picture represented on the screen. The address of a pixel corresponds to its physical coordinates. LCD pixels are manufactured in a two-dimensional grid, and are often represented using dots or squares, but CRT pixels correspond to their timing mechanisms and sweep rates.

Each pixel is a sample of an original image; more samples typically provide more accurate representations of the original. The intensity of each pixel is variable. In color imaging systems, a color is typically represented by three or four component intensities such as red, green, and blue, or cyan, magenta, yellow, and black.

In some contexts (such as descriptions of camera sensors), the term pixel is used to refer to a single scalar element of a multi-component representation (more precisely called a photosite in the camera sensor context, although the neologism sensel is sometimes used to describe the elements of a digital camera's sensor),[3] while in yet other contexts the term may be used to refer to the set of component intensities for a spatial position, though this is more accurately termed a sample. Drawing a distinction between pixels, photosites, and samples avoids confusion when describing color systems that use chroma subsampling or cameras that use a Bayer filter to produce color components via upsampling.

The word pixel is based on a contraction of pix (from the word "pictures", where it is shortened to "pics", and "cs" in "pics" sounds like "x") and el (for "element"); similar formations with 'el' include the words voxel,[4] texel[4] and maxel (for magnetic pixel).[5]

Etymology

The word "pixel" was first published in 1965 by Frederic C. Billingsley of JPL, to describe the picture elements of video images from space probes to the Moon and Mars.[6] Billingsley had learned the word from Keith E. McFarland, at the Link Division of General Precision in Palo Alto, who in turn said he did not know where it originated. McFarland said simply it was "in use at the time" (circa 1963).[7]

The word is a combination of pix, for picture, and element. The word pix appeared in Variety magazine headlines in 1932, as an abbreviation for the word pictures, in reference to movies.[8] By 1938, "pix" was being used in reference to still pictures by photojournalists.[7]

The concept of a "picture element" dates to the earliest days of television, for example as "Bildpunkt" (the German word for pixel, literally 'picture point') in the 1888 German patent of Paul Nipkow. According to various etymologies, the earliest publication of the term picture element itself was in Wireless World magazine in 1927,[9] though it had been used earlier in various U.S. patents filed as early as 1911.[10]

Some authors explain pixel as picture cell, as early as 1972.[11] In graphics and in image and video processing, pel is often used instead of pixel.[12] For example, IBM used it in their Technical Reference for the original PC.

Pixilation, spelled with a second i, is an unrelated filmmaking technique that dates to the beginnings of cinema, in which live actors are posed frame by frame and photographed to create stop-motion animation. An archaic British word meaning "possession by spirits (pixies)," the term has been used to describe the animation process since the early 1950s; various animators, including Norman McLaren and Grant Munro, are credited with popularizing it.[13]

Technical

A pixel is generally thought of as the smallest single component of a digital image. However, the definition is highly context-sensitive. For example, there can be "printed pixels" in a page, or pixels carried by electronic signals, or represented by digital values, or pixels on a display device, or pixels in a digital camera (photosensor elements). This list is not exhaustive and, depending on context, synonyms include pel, sample, byte, bit, dot, and spot. Pixels can be used as a unit of measure such as: 2400 pixels per inch, 640 pixels per line, or spaced 10 pixels apart.

The measures dots per inch (dpi) and pixels per inch (ppi) are sometimes used interchangeably, but have distinct meanings, especially for printer devices, where dpi is a measure of the printer's density of dot (e.g. ink droplet) placement.[14] For example, a high-quality photographic image may be printed with 600 ppi on a 1200 dpi inkjet printer.[15] Even higher dpi numbers, such as the 4800 dpi quoted by printer manufacturers since 2002, do not mean much in terms of achievable resolution.[16]

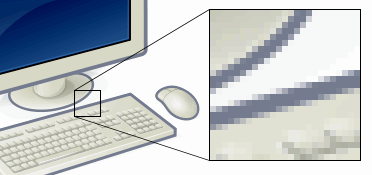

The more pixels used to represent an image, the closer the result can resemble the original. The number of pixels in an image is sometimes called the resolution, though resolution has a more specific definition. Pixel counts can be expressed as a single number, as in a "three-megapixel" digital camera, which has a nominal three million pixels, or as a pair of numbers, as in a "640 by 480 display", which has 640 pixels from side to side and 480 from top to bottom (as in a VGA display), and therefore has a total number of 640×480 = 307,200 pixels or 0.3 megapixels.
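
The arithmetic above can be sketched in a few lines of Python. The function names `pixel_count` and `megapixels` are illustrative only and do not come from any particular library.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a width-by-height raster."""
    return width * height


def megapixels(width: int, height: int) -> float:
    """Pixel count expressed in millions of pixels."""
    return pixel_count(width, height) / 1_000_000


# The VGA example from the text: 640 pixels across, 480 pixels down.
print(pixel_count(640, 480))           # 307200
print(round(megapixels(640, 480), 1))  # 0.3
```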

The pixels, or color samples, that form a digitized image (such as a JPEG file used on a web page) may or may not be in one-to-one correspondence with screen pixels, depending on how a computer displays an image. In computing, an image composed of pixels is known as a bitmapped image or a raster image. The word raster originates from television scanning patterns, and has been widely used to describe similar halftone printing and storage techniques.

Sampling patterns

For convenience, pixels are normally arranged in a regular two-dimensional grid. By using this arrangement, many common operations can be implemented by uniformly applying the same operation to each pixel independently. Other arrangements of pixels are possible, with some sampling patterns even changing the shape (or kernel) of each pixel across the image. For this reason, care must be taken when acquiring an image on one device and displaying it on another, or when converting image data from one pixel format to another.

For example:

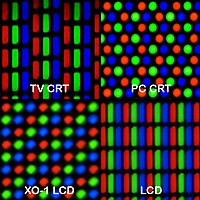

- LCD screens typically use a staggered grid, where the red, green, and blue components are sampled at slightly different locations. Subpixel rendering is a technology which takes advantage of these differences to improve the rendering of text on LCD screens.

- The vast majority of color digital cameras use a Bayer filter, resulting in a regular grid of pixels where the color of each pixel depends on its position on the grid.

- A clipmap uses a hierarchical sampling pattern, where the size of the support of each pixel depends on its location within the hierarchy.

- Warped grids are used when the underlying geometry is non-planar, such as images of the earth from space.[17]

- The use of non-uniform grids is an active research area, attempting to bypass the traditional Nyquist limit.[18]

- Pixels on computer monitors are normally "square" (that is, having equal horizontal and vertical sampling pitch); pixels in other systems are often "rectangular" (that is, having unequal horizontal and vertical sampling pitch, and so oblong in shape), as are digital video formats with diverse aspect ratios, such as the anamorphic widescreen formats of the Rec. 601 digital video standard.
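
For the regular two-dimensional grid described at the start of this section, applying the same operation to each pixel independently can be sketched as follows. This is a minimal illustration that assumes a grayscale image stored as a list of rows of 8-bit values; the helper names `map_pixels` and `invert` are hypothetical.

```python
def map_pixels(image, op):
    """Apply op to every pixel of a row-major grid, independently of its neighbors."""
    return [[op(p) for p in row] for row in image]


def invert(p, max_value=255):
    """Invert one 8-bit intensity value."""
    return max_value - p


# A tiny 2-row by 3-column grayscale image (values are made up).
image = [[0, 64, 128],
         [192, 255, 32]]

inverted = map_pixels(image, invert)
print(inverted)  # [[255, 191, 127], [63, 0, 223]]
```

Because each output pixel depends only on the input pixel at the same position, the same loop works for any per-pixel operation; the non-regular sampling patterns listed above would need position-dependent handling instead.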

Resolution of computer monitors

Computers can use pixels to display an image, often an abstract image that represents a GUI. The resolution of this image is called the display resolution and is determined by the video card of the computer. LCD monitors also use pixels to display an image, and have a native resolution. Each pixel is made up of a triad of subpixels, and the number of these triads determines the native resolution. On some CRT monitors, the beam sweep rate may be fixed, resulting in a fixed native resolution. Most CRT monitors do not have a fixed beam sweep rate, meaning they do not have a native resolution at all; instead, they have a set of resolutions that are equally well supported. To produce the sharpest images possible on an LCD, the user must ensure the display resolution of the computer matches the native resolution of the monitor.

Resolution of telescopes

The pixel scale used in astronomy is the angular distance between two objects on the sky that fall one pixel apart on the detector (CCD or infrared chip). The scale s measured in radians is the ratio of the pixel spacing p to the focal length f of the preceding optics, s = p/f. (The focal length is the product of the focal ratio and the diameter of the associated lens or mirror.) Because s is usually expressed in units of arcseconds per pixel, because 1 radian equals (180/π)×3600 ≈ 206,265 arcseconds, and because diameters, and hence focal lengths, are often given in millimeters and pixel sizes in micrometers, which yields another factor of 1,000, the formula is often quoted as s = 206p/f.
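
As a worked illustration of the formula, the following sketch converts a pixel size in micrometers and a focal length in millimeters into a pixel scale in arcseconds per pixel. The function names and the example instrument values (a 9 µm pixel behind a 200 mm f/10 telescope) are assumptions chosen for illustration, not data from a real instrument.

```python
import math

# Arcseconds in one radian: (180 / pi) degrees times 3600 arcseconds per degree.
ARCSEC_PER_RADIAN = 180 / math.pi * 3600  # about 206,265


def focal_length_mm(focal_ratio: float, diameter_mm: float) -> float:
    """Focal length as the product of focal ratio and aperture diameter."""
    return focal_ratio * diameter_mm


def pixel_scale_arcsec(pixel_um: float, focal_mm: float) -> float:
    """Pixel scale s = p / f, converted from radians to arcseconds per pixel."""
    p_m = pixel_um * 1e-6  # micrometers to meters
    f_m = focal_mm * 1e-3  # millimeters to meters
    return ARCSEC_PER_RADIAN * p_m / f_m


f = focal_length_mm(focal_ratio=10, diameter_mm=200)  # hypothetical telescope
s = pixel_scale_arcsec(pixel_um=9, focal_mm=f)        # hypothetical detector
print(f"{s:.3f} arcsec/pixel")
```

With p in micrometers and f in millimeters, the result agrees with the shorthand s ≈ 206p/f to within the rounding of the constant.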

Bits per pixel

The number of distinct colors that can be represented by a pixel depends on the number of bits per pixel (bpp). A 1 bpp image uses 1 bit for each pixel, so each pixel can be either on or off. Each additional bit doubles the number of colors available, so a 2 bpp image can have 4 colors, and a 3 bpp image can have 8 colors:

- 1 bpp, 2^1 = 2 colors (monochrome)

- 2 bpp, 2^2 = 4 colors

- 3 bpp, 2^3 = 8 colors

...

- 8 bpp, 2^8 = 256 colors

- 16 bpp, 2^16 = 65,536 colors ("Highcolor")

- 24 bpp, 2^24 = 16,777,216 colors ("Truecolor")

For color depths of 15 or more bits per pixel, the depth is normally the sum of the bits allocated to each of the red, green, and blue components. Highcolor, usually meaning 16 bpp, normally has five bits for red and blue, and six bits for green, as the human eye is more sensitive to errors in green than in the other two primary colors. For applications involving transparency, the 16 bits may be divided into five bits each of red, green, and blue, with one bit left for transparency. A 24-bit depth allows 8 bits per component. On some systems, 32-bit depth is available: this means that each 24-bit pixel has an extra 8 bits to describe its opacity (for purposes of combining with another image).
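
The relationship between bit depth and color count, and the five-six-five split used by Highcolor, can be sketched as below. The bit order (red in the most significant bits, blue in the least) is an assumption made for this example; the text above specifies only how many bits each component receives. The function names are illustrative.

```python
def color_count(bpp: int) -> int:
    """Number of distinct values representable with bpp bits per pixel."""
    return 2 ** bpp


def pack_rgb565(r: int, g: int, b: int) -> int:
    """Pack 8-bit R, G, B into 16 bits: 5 bits red, 6 bits green, 5 bits blue.

    Assumed layout: red in the top bits, blue in the bottom bits.
    The low bits of each 8-bit component are discarded.
    """
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def unpack_rgb565(value: int) -> tuple:
    """Split a 16-bit value back into its 5-, 6-, and 5-bit components."""
    return (value >> 11) & 0x1F, (value >> 5) & 0x3F, value & 0x1F


print(color_count(16))                      # 65536
print(color_count(24))                      # 16777216
print(hex(pack_rgb565(255, 0, 0)))          # 0xf800
print(unpack_rgb565(pack_rgb565(255, 255, 255)))  # (31, 63, 31)
```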

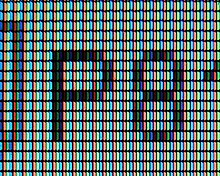

Subpixels

Many display and image-acquisition systems are, for various reasons, not capable of displaying or sensing the different color channels at the same site. Therefore, the pixel grid is divided into single-color regions that contribute to the displayed or sensed color when viewed at a distance. In some displays, such as LCD, LED, and plasma displays, these single-color regions are separately addressable elements, which have come to be known as subpixels.[19] For example, LCDs typically divide each pixel horizontally into three subpixels. When a square pixel is divided into three subpixels, each subpixel is necessarily rectangular. In display industry terminology, subpixels are often referred to as pixels, as they are the basic addressable elements from a hardware point of view, and hence the term pixel circuits rather than subpixel circuits is used.

Most digital camera image sensors use single-color sensor regions, for example using the Bayer filter pattern, and in the camera industry these are known as pixels just like in the display industry, not subpixels.

For systems with subpixels, two different approaches can be taken:

- The subpixels can be ignored, with full-color pixels being treated as the smallest addressable imaging element; or

- The subpixels can be included in rendering calculations, which requires more analysis and processing time, but can produce apparently superior images in some cases.

This latter approach, referred to as subpixel rendering, uses knowledge of pixel geometry to manipulate the three colored subpixels separately, producing an increase in the apparent resolution of color displays. CRT displays also use red-green-blue-masked phosphor areas, dictated by a mesh grid called the shadow mask, but aligning these areas with the displayed pixel raster would require a difficult calibration step, and so CRTs do not currently use subpixel rendering.

The concept of subpixels is related to samples.

Megapixel

A megapixel (MP) is a million pixels; the term is used not only for the number of pixels in an image, but also to express the number of image sensor elements of digital cameras or the number of display elements of digital displays. For example, a camera that makes a 2048×1536 pixel image (3,145,728 finished image pixels) typically uses a few extra rows and columns of sensor elements and is commonly said to have "3.2 megapixels" or "3.4 megapixels", depending on whether the number reported is the "effective" or the "total" pixel count.[20]

Digital cameras use photosensitive electronics, either charge-coupled device (CCD) or complementary metal–oxide–semiconductor (CMOS) image sensors, consisting of a large number of single sensor elements, each of which records a measured intensity level. In most digital cameras, the sensor array is covered with a patterned color filter mosaic having red, green, and blue regions in the Bayer filter arrangement, so that each sensor element can record the intensity of a single primary color of light. The camera interpolates the color information of neighboring sensor elements, through a process called demosaicing, to create the final image. These sensor elements are often called "pixels", even though they only record one channel (only red, or green, or blue) of the final color image. Thus, two of the three color channels for each sensor element must be interpolated, and a so-called N-megapixel camera that produces an N-megapixel image provides only one-third of the information that an image of the same size could get from a scanner. As a result, certain color contrasts may look fuzzier than others, depending on the allocation of the primary colors (green has twice as many elements as red or blue in the Bayer arrangement).
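
The allocation of primary colors in a Bayer mosaic can be illustrated with a short sketch. It assumes the RGGB tile ordering (red and green on even rows, green and blue on odd rows), which is one common ordering but not the only one in use; the function names are hypothetical, and the code only counts filter colors rather than performing demosaicing.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col) for an assumed RGGB 2x2 tile:
        R G
        G B
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def count_colors(height: int, width: int) -> Counter:
    """Count how many sensor elements record each primary color."""
    return Counter(bayer_color(r, c) for r in range(height) for c in range(width))


counts = count_colors(4, 6)  # a toy 4-row by 6-column sensor
print(counts["R"], counts["G"], counts["B"])  # 6 12 6
```

Each sensor element records only one of the three channels, so the other two values at that position have to be interpolated from neighbors, and green is sampled twice as densely as red or blue.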

DxO Labs invented the Perceptual MegaPixel (P-MPix) to measure the sharpness that a camera produces when paired with a particular lens, as opposed to the MP figure a manufacturer states for a camera product, which is based only on the camera's sensor. DxO Labs claims that P-MPix is a more accurate and relevant value for photographers to consider when weighing up camera sharpness.[21] As of mid-2013, the Sigma 35mm F1.4 DG HSM mounted on a Nikon D800 has the highest measured P-MPix. However, with a value of 23 MP, it still loses more than one-third of the D800's 36.3 MP sensor resolution.[22]

A camera with a full-frame image sensor and a camera with an APS-C image sensor may have the same pixel count (for example, 16 MP), but the full-frame camera may allow better dynamic range, less noise, and improved low-light shooting performance than the APS-C camera. This is because the full-frame camera has a larger image sensor than the APS-C camera, so more information can be captured per pixel. A full-frame camera that shoots photographs at 36 megapixels has roughly the same pixel size as an APS-C camera that shoots at 16 megapixels.[23]
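
The pixel-size comparison can be checked with a rough calculation. The sketch below assumes square pixels that tile the whole sensor, a 36 × 24 mm full-frame sensor, and a 23.6 × 15.7 mm APS-C sensor; APS-C dimensions vary between manufacturers, so these figures are illustrative assumptions rather than values from the cited source.

```python
import math


def pixel_pitch_um(width_mm: float, height_mm: float, megapixels: float) -> float:
    """Approximate side length of one square pixel, in micrometers."""
    area_um2 = (width_mm * 1000) * (height_mm * 1000)  # sensor area in square micrometers
    return math.sqrt(area_um2 / (megapixels * 1_000_000))


full_frame_36mp = pixel_pitch_um(36.0, 24.0, 36)  # about 4.90 micrometers
aps_c_16mp = pixel_pitch_um(23.6, 15.7, 16)       # about 4.81 micrometers
full_frame_16mp = pixel_pitch_um(36.0, 24.0, 16)  # about 7.35 micrometers

print(f"{full_frame_36mp:.2f} {aps_c_16mp:.2f} {full_frame_16mp:.2f}")
```

Under these assumptions, the 36 MP full-frame and 16 MP APS-C sensors have nearly the same pixel pitch, while a 16 MP full-frame sensor has noticeably larger pixels.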

One new method of adding megapixels has been introduced in a Micro Four Thirds System camera that uses only a 16 MP sensor but can produce a 64 MP RAW (40 MP JPEG) image by shifting the sensor half a pixel in each direction between successive exposures. With the camera mounted on a tripod, the multiple 16 MP exposures are taken in quick succession and then combined into a single 64 MP image.[24]

References

- ^ Foley, J. D.; Van Dam, A. (1982). Fundamentals of Interactive Computer Graphics. Reading, MA: Addison-Wesley. ISBN 0201144689.

- ^ Rudolf F. Graf (1999). Modern Dictionary of Electronics. Oxford: Newnes. p. 569. ISBN 0-7506-4331-5.

- ^ Michael Goesele (2004). New Acquisition Techniques for Real Objects and Light Sources in Computer Graphics. Books on Demand. ISBN 3-8334-1489-8.

- ^ a b Foley, James D.; Andries van Dam; John F. Hughes; Steven K. Feiner (1990). "Spatial-partitioning representations; Surface detail". Computer Graphics: Principles and Practice. The Systems Programming Series. Addison-Wesley. ISBN 0-201-12110-7. "These cells are often called voxels (volume elements), in analogy to pixels."

- ^ "Just When You Thought Magnets Weren't Magic; Magnets Are Mechanisms". Hackaday. Retrieved 2016-03-22.

- ^ Fred C. Billingsley, "Processing Ranger and Mariner Photography," in Computerized Imaging Techniques, Proceedings of SPIE, Vol. 0010, pp. XV-1–19, Jan. 1967 (Aug. 1965, San Francisco).

- ^ a b Lyon, Richard F. (2006). A brief history of 'pixel'. IS&T/SPIE Symposium on Electronic Imaging.

- ^ "Online Etymology Dictionary".

- ^ "On language; Modem, I'm Odem", The New York Times, April 2, 1995. Accessed April 7, 2008.

- ^ Sinding-Larsen, Alf Transmission of Pictures of Moving Objects, US Patent 1,175,313, issued March 14, 1916.

- ^ Robert L. Lillestrand (1972). "Techniques for Change Detection". IEEE Trans. Comput. C-21 (7).

- ^ Lewis, Peter H. (February 12, 1989). The Executive Computer; Compaq Sharpens Its Video Option. The New York Times.

- ^ Tom Gasek. Frame by Frame Stop Motion: NonTraditional Approaches to Stop Motion Animation.

- ^ Derek Doeffinger (2005). The Magic of Digital Printing. Lark Books. p. 24. ISBN 1-57990-689-3.

- ^ "Experiments with Pixels Per Inch (PPI) on Printed Image Sharpness". ClarkVision.com. July 3, 2005.

- ^ Harald Johnson (2002). Mastering Digital Printing. Thomson Course Technology. p. 40. ISBN 1-929685-65-3.

- ^ "Image registration of blurred satellite images". Retrieved 2008-05-09.

- ^ "ScienceDirect - Pattern Recognition: Image representation by a new optimal non-uniform morphological sampling:". Retrieved 2008-05-09.

- ^ "Subpixel in Science". dictionary.com. Retrieved 4 July 2015.

- ^ Now a megapixel is really a megapixel

- ^ http://www.dxomark.com/en/Reviews/Looking-for-new-photo-gear-DxOMark-s-Perceptual-Megapixel-can-help-you

- ^ http://www.dxomark.com/index.php/Lenses/Camera-Lens-Ratings/Optical-Metric-Scores

- ^ "Camera sensor size: Why does it matter and exactly how big are they?". March 21, 2013.

- ^ Damien Demolder (February 14, 2015). "Soon, 40MP without the tripod: A conversation with Setsuya Kataoka from Olympus". Retrieved March 8, 2015.

External links

- A Pixel Is Not A Little Square: Microsoft Memo by computer graphics pioneer Alvy Ray Smith.

- Video of talk on pixel history at the Computer History Museum

- Square and non-Square Pixels: Technical info on pixel aspect ratios of modern video standards (480i, 576i, 1080i, 720p), plus software implications.

- 120 Megapixel is here now: A lot of information about MegaPixel and Gigapixel.