Wikipedia:Reference desk/Science

Welcome to the science section of the Wikipedia reference desk.

Main page: Help searching Wikipedia

How can I get my question answered?

- Select the section of the desk that best fits the general topic of your question (see the navigation column to the right).

- Post your question to only one section, providing a short header that gives the topic of your question.

- Type '~~~~' (that is, four tilde characters) at the end – this signs and dates your contribution so we know who wrote what and when.

- Don't post personal contact information – it will be removed. Any answers will be provided here.

- Please be as specific as possible, and include all relevant context – the usefulness of answers may depend on the context.

- Note:

- We don't answer (and may remove) questions that require medical diagnosis or legal advice.

- We don't answer requests for opinions, predictions or debate.

- We don't do your homework for you, though we'll help you past the stuck point.

- We don't conduct original research or provide a free source of ideas, but we'll help you find information you need.

How do I answer a question?

Main page: Wikipedia:Reference desk/Guidelines

- The best answers address the question directly, and back up facts with wikilinks and links to sources. Do not edit others' comments and do not give any medical or legal advice.

May 20

What would be the first and last function to fail if?

You teleported an unshielded smartphone to low Earth orbit? (of course that's science fiction, do it in a thought experiment physics simulator if you hate teleportation thought experiments so much) What if you teleported a smartphone to a Trojan asteroid of Earth orbit? How damaging is sunlight and solar wind compared to the radiation of the rest of the universe? How many pixels fail per second? When would the screen turn off if it's set to always on? Also I'm wondering when a contemporary smartphone's GPS would stop working well if you could put it in a climate-controlled shield that only lets non-damaging radiations through and hitchhike it on a spaceprobe. Can it work outside the constellation? What velocities does GPS work at? Would it give crummy readings if you could make the receiver move at ~100 km/s relative to the satellites or a thousand or 10K or 0.999c? Sagittarian Milky Way (talk) 16:54, 20 May 2019 (UTC)

- The cold would do damage before radiation in a number of ways: shrinking parts would crack, the battery would fail, internal moisture would turn into ice, the LCD would freeze and stop responding to electrical signals, the chip would fail...

- Gem fr (talk) 18:14, 20 May 2019 (UTC)

- The average temperature of the Earth without the greenhouse effect would be about 0 °F, if I recall correctly, so the phone's average temperature might be bearable in sunlight; but whatever temperature differential conduction can't remove will of course still stress the parts. Sagittarian Milky Way (talk) 01:23, 21 May 2019 (UTC)

- This is wrong. Without an atmosphere, the parts of the Moon exposed to the Sun get close to 400 K (this value depends on albedo, which is why astronauts wear white suits, so they do not roast in the sun); plastic parts will not withstand that. In the shade it drops to 3 K. Average temperature does not apply to an object as small as a smartphone, which lacks thermal inertia. Gem fr (talk) 09:42, 21 May 2019 (UTC)

- Could it work for seconds while the sun side hadn't heated up fully yet and vice versa? Sagittarian Milky Way (talk) 12:36, 21 May 2019 (UTC)

- I dunno. Here are my 2 cents. The energy gain when lit would be on the order of kW/m², and a typical smartphone is ~100 cm², so it gains ~10 W = ~10 J/s. Specific heat capacity would be on the order of 1 J/(g·K), but the result depends a lot on whether the energy spreads over the entire 200 g of a typical smartphone (in which case it would heat at ~1/20 K/s and could keep working for tens of minutes), or first concentrates in a small, directly exposed 2 g part (in which case that part would heat at 5 K/s and be destroyed in less than half a minute). Probably somewhere in between, meaning it could work for some seconds.

- For freezing, the energy loss would be 1/3 of the aforementioned gain, so the time needed would be three times as long.

- but don't take my word for it

- And check the answer by Nimur (talk) below. I underestimated soft errors from radiation, which wouldn't destroy the smartphone but would cause critical malfunctions. The mean time between such errors is not clear from his reference, though, so it all depends on whether they occur within minutes or take longer. Gem fr (talk) 21:08, 21 May 2019 (UTC)
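
Gem fr's back-of-envelope heating estimate can be reproduced in a few lines. This is a minimal sketch under the comment's own assumptions (~1 kW/m² of sunlight on ~100 cm², specific heat ~1 J/(g·K), losses at 1/3 of the sunlit gain), plus two simplifications of mine: all incident light is absorbed and nothing is re-radiated. The 60 K "failure" temperature swing is a hypothetical threshold chosen only for illustration; it is not from the thread.

```python
# Back-of-envelope heating/cooling times for a sunlit smartphone in vacuum.
# Assumptions (illustrative): all incident flux is absorbed, no heat is
# re-radiated, and the heat stays within the given mass.

FLUX_W_PER_M2 = 1000.0          # "kW/m² magnitude" sunlight, as in the comment
AREA_M2 = 0.01                  # ~100 cm² phone face, in m²
SPECIFIC_HEAT_J_PER_G_K = 1.0   # order-of-magnitude specific heat

def heating_rate_k_per_s(mass_g: float,
                         flux_w_per_m2: float = FLUX_W_PER_M2,
                         area_m2: float = AREA_M2,
                         c_j_per_g_k: float = SPECIFIC_HEAT_J_PER_G_K) -> float:
    """Temperature rise per second when the absorbed power heats `mass_g` grams."""
    absorbed_w = flux_w_per_m2 * area_m2           # ~10 W
    heat_capacity_j_per_k = mass_g * c_j_per_g_k   # joules needed per kelvin
    return absorbed_w / heat_capacity_j_per_k

def seconds_to_change(delta_k: float, rate_k_per_s: float) -> float:
    """Time to change temperature by delta_k at a constant rate."""
    return delta_k / rate_k_per_s

HYPOTHETICAL_DELTA_K = 60.0     # assumed tolerable swing; NOT from the thread

for label, mass_g in (("whole 200 g phone", 200.0), ("exposed 2 g part", 2.0)):
    heat = heating_rate_k_per_s(mass_g)
    cool = heat / 3.0           # comment's estimate: losses ~1/3 of the sunlit gain
    print(f"{label}: heats at {heat:.2f} K/s "
          f"({seconds_to_change(HYPOTHETICAL_DELTA_K, heat):.0f} s), "
          f"cools at {cool:.3f} K/s "
          f"({seconds_to_change(HYPOTHETICAL_DELTA_K, cool):.0f} s)")
```

With these inputs the whole phone needs about 20 minutes for a 60 K rise and the 2 g part about 12 seconds, consistent with "tens of minutes" and "less than half a minute" above. Because a real phone also radiates heat away, this sketch overstates how fast it heats.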

- GPS satellites direct their antenna beams towards the Earth's surface. An unprepared GPS receiver needs several minutes of continuous reception to acquire the ephemeris, and can then obtain a location in seconds if it receives simultaneously from 4 satellites. NASA reports that GPS can be used (with proper equipment) as far out as geosynchronous orbit, at 36,000 km altitude. Simple domestic GPS receivers that have only two channels multiplexed amongst satellites lack the processing bandwidth to handle speeds of more than about 100 mph. DroneB (talk) 20:34, 20 May 2019 (UTC)
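
The OP's question about receiver speeds can be made concrete by computing the Doppler shift on the GPS L1 carrier (1575.42 MHz). This is a sketch under simplifying assumptions: purely head-on (radial) motion relative to a single satellite, the relativistic longitudinal Doppler formula, and no attempt to model any particular receiver's Doppler search range, which varies by design and is not given in the thread.

```python
import math

C = 299_792_458.0   # speed of light, m/s
F_L1 = 1575.42e6    # GPS L1 carrier frequency, Hz

def received_frequency(f_emit: float, v_radial: float) -> float:
    """Relativistic longitudinal Doppler shift.

    v_radial > 0 means transmitter and receiver approach each other head-on;
    transverse motion and the satellites' own orbital velocity are ignored.
    """
    beta = v_radial / C
    return f_emit * math.sqrt((1.0 + beta) / (1.0 - beta))

def doppler_shift_hz(v_radial: float, f_emit: float = F_L1) -> float:
    """Received minus emitted frequency for the given radial speed."""
    return received_frequency(f_emit, v_radial) - f_emit

# Speeds from the thread: MTCR's 600 m/s, then the OP's 100, 1,000 and
# 10,000 km/s, and 0.999c.
for v in (600.0, 100e3, 1e6, 1e7, 0.999 * C):
    shift = doppler_shift_hz(v)
    print(f"{v / 1e3:>12.1f} km/s -> shift {shift / 1e3:>16.1f} kHz")
```

At 100 km/s the shift is already about 0.5 MHz, and at 0.999c the received carrier is multiplied by roughly 44.7 (to around 70 GHz), far outside the band a GPS antenna and front end are built for. Whether a receiver copes with a given shift depends on how wide a frequency range its acquisition and tracking loops search, which is the processing-bandwidth issue described in the comment above.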

- As I understand it, while GPS may be able to work that far out, many GPS devices you're able to buy probably won't. They still enforce the Coordinating Committee for Multilateral Export Controls (COCOM) limits (in an OR fashion), meaning they won't work above 18 km altitude, even though as I understand it the COCOM rules are dead and their replacements don't actually have an altitude limit. See e.g. [1] [2] [3]. P.S. Some people suggest some devices obey COCOM in an AND fashion, although I'm confused by this since the COCOM text seems to be clearly OR. Possibly these devices are only complying with the Missile Technology Control Regime (MTCR) speed limit and people are simply confused. I think people actually encounter the speed limit in practice far more rarely than the altitude limit, so many probably don't know whether a device is obeying COCOM in an OR fashion or only obeying a speed limit with no altitude limit. Since MTCR seems to clearly require a speed limit, I would be surprised if devices instead obey COCOM in an OR fashion, although then again I wouldn't be that surprised if whoever is in charge of implementing these limits is as confused about what they're supposed to do as random commentators, despite the fact that they should have lawyers etc. to tell them. Nil Einne (talk) 02:12, 21 May 2019 (UTC)

- P.P.S. It is obviously possible that one or more governments, e.g. the US, still had the COCOM limits in law or regulation despite the treaty establishing them having ended, so manufacturers did have to obey the limits or risk trouble. Nil Einne (talk) 02:17, 21 May 2019 (UTC)

- Consumer GPS had a COCOM or other limit of 999mph as of some years back, but at least some of them worked fine at 500+ mph. I remember taking mine (a cheap Garmin) on an airliner just to try it out. 67.164.113.165 (talk) 06:08, 21 May 2019 (UTC)

- From what I can tell, the actual COCOM limit was 1,000 knots. The MTCR limit is 600 m/s. I suspect some devices were conservative because they weren't sure of their accuracy at such high speeds and didn't think it mattered, or because (as with the altitude limit) whoever was in charge of implementing the limit wasn't given proper guidance, but I'm not sure there was ever any legal reason for such a low limit. Nil Einne (talk) 07:29, 22 May 2019 (UTC)
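
The speed limits quoted in this thread use three different units, which makes them hard to compare by eye. This small conversion sketch relies only on the exact unit definitions (1 knot = 1852 m per hour, 1 mph = 1609.344 m per hour); the four limits are the figures given by the commenters above and are not independently checked.

```python
# Exact unit definitions: 1 international knot = 1852 m/h, 1 mph = 1609.344 m/h.
KNOT_M_PER_S = 1852.0 / 3600.0
MPH_M_PER_S = 1609.344 / 3600.0

def knots_to_ms(knots: float) -> float:
    return knots * KNOT_M_PER_S

def mph_to_ms(mph: float) -> float:
    return mph * MPH_M_PER_S

def ms_to_mph(ms: float) -> float:
    return ms / MPH_M_PER_S

# Limits as quoted by commenters in this thread (not independently verified).
limits_ms = {
    "COCOM, 1000 knots": knots_to_ms(1000.0),
    "MTCR, 600 m/s": 600.0,
    "consumer GPS, 999 mph": mph_to_ms(999.0),
    "two-channel receiver, ~100 mph": mph_to_ms(100.0),
}

# Print from fastest to slowest, in both m/s and mph.
for name, v in sorted(limits_ms.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:32s} {v:7.1f} m/s {ms_to_mph(v):7.1f} mph")
```

This puts 1,000 knots at about 514 m/s (roughly 1,151 mph), below the 600 m/s MTCR figure (roughly 1,342 mph). The 999 mph consumer cutoff, at about 447 m/s, is lower still.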

- How about we avoid speculation, and point our OP to a real resource?

- Radiation Effects on Integrated Circuits and Systems for Space Applications, with a new second-edition published this year, is written by Raoul Velazco, research director at the TIMA Lab in Grenoble. He's a world expert on a subject near to my own personal interests: the infamous single-event upset.

- In 2011, several iPhones and other consumer telephones flew on STS-135. Here's the NASA "What's Going Up" article. Some technical details are published by the experiment investigators, Odyssey Space Research, and summarized in this press release. Here's the NASA Blog article, too: SpaceLab for iOS. Among the research, the LFI (Lifecycle Flight Instrumentation) characterized the effects of radiation on the device.

- To excite and inspire the community at large, Apollo astronaut Buzz Aldrin gave a presentation at Apple's Worldwide Developers Conference in 2011 to show off some ideas for consumer electronics and promote enthusiasm for spaceflight.

- Here's another NASA article, Socializing Science with Smartphones in Space. They also point to a follow-on experiment, SPHERES, in which a team of MIT student-researchers planned to emplace the smartphone-powered Nanoracks satellite outside the space station; that is to say, a smartphone, outside, in low Earth orbit.

- Perhaps to the surprise of everyone commenting in the thread - but sort of expected by anyone with a little background in this area - the first thing to fail was the application software. The hardware itself was qualified for spaceflight.

- If I may take a moment to grump out at this late stage of the morning, the real metaphorical take-away here is that as of this decade, the limiting factor in spaceflight is software-quality.

- Interested future engineers should spend more time learning fundamentals of math, science, and engineering, and then spend a lot more time engaged in formal education relating to software engineering and computer science, so that our next generation can have exponential improvement in software quality.

- Some people say that "everyone can code." The truth of the matter is, very few people can code, and even fewer people are good enough at math and science and logical thinking to code well enough for a mission-critical space-flight application.

- Nimur (talk) 16:30, 21 May 2019 (UTC)

- Another possible failure mode is that the battery would be ejected. That is, if the air inside the cell phone is at 15 PSI, and the battery and its cover are maybe 2x3 inches, or 6 square inches, that would be about 90 lbs of instantaneous force, first on the cover and, once it blows off, on the battery. Whether this actually happens depends on the specifics of the cover design, though, and how well sealed the rest of the phone is. Ironically, a waterproof model might be more prone to this than one with gaps, like the ill-fated Galaxy Fold. SinisterLefty (talk) 22:12, 27 May 2019 (UTC)
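
SinisterLefty's 90 lb figure is pressure-times-area arithmetic. Here is a minimal sketch, assuming air on one side of a flat 2 × 3 inch panel and vacuum on the other. The comment rounds to 15 psi; 14.7 psi is the standard sea-level value, shown for comparison.

```python
# Net force on a flat panel with air on one side and vacuum on the other.
LBF_TO_N = 4.4482216152605   # exact definition of the pound-force in newtons

def panel_force_lbf(pressure_psi: float, width_in: float, height_in: float) -> float:
    """psi multiplied by square inches gives pound-force directly."""
    return pressure_psi * width_in * height_in

for p in (15.0, 14.7):
    f_lbf = panel_force_lbf(p, 2.0, 3.0)
    print(f"{p:4.1f} psi on 2 x 3 in: {f_lbf:5.1f} lbf = {f_lbf * LBF_TO_N:6.1f} N")
```

That is roughly 90 lbf (about 400 N) at 15 psi. As the comment notes, whether the cover actually lets go depends on its design and on how quickly the internal air can leak out elsewhere.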

May 22

Photons

    |......................................
    |--------------------------------------
    | < thinnest and best aimed beam that <
    | < doesn't violate laws of physics < <
    |--------------------------------------
    |......................................
    |
    |
    |< a bullseye

Where will half the photons fall? A wavelength wide circle? (open edit window for unscrambled ASCII art) (asked by 107.242.117.33 14:25, 22 May 2019)

- "ASCII art" is not enough (at least for me) to understand what you mean,

- but anyway, it looks to me like you'll find what you're after in Diffraction-limited system.

- Gem fr (talk) 14:51, 22 May 2019 (UTC)

- Photon waves are used for equations. Light doesn't bounce up and down in a wave as it flies through the air. 12.207.168.3 (talk) 16:01, 22 May 2019 (UTC)

- If the OP is interested in modern theory and practice to describe where we will detect photons, An Introduction to Modern Optics is a good introductory book. After reading through a book like that, let us know if you have more specific questions. Nimur (talk) 17:10, 22 May 2019 (UTC)

- Basically I want to know how wide the photon equivalent of an electron cloud is. The part of the cloud that has the photon half of the time that is. Maybe the cloud won't look like a spherical galaxy since photons can't go faster than c so I made the photons fall on a 2D target. No matter how wide and perfect a laser and lens are you can't focus a laser spot sharper than this photon equivalent of an electron cloud right? 107.242.117.17 (talk) 20:01, 22 May 2019 (UTC)

- You're looking for a specific technical parameter - something like a collision cross-section - to describe the interaction between a photon and some other object; but that's not a common way to model a photon. Most physicists don't talk about the photon's position in the same way that they talk about the electron cloud. And what's worse - you've somehow concluded that this number, if you could deduce it, would tell you something about "beam width." With all due respect, that's just wrong physics! So ... if you ask a wrong-physics question, there's no way to get a right-answer. In fact, this "characteristic" length scale of a photon - if we could even concoct it - would not be the thing that limits how well-focused a beam of light is, nor how wide the beam would appear if you looked at it or photographed it or otherwise sampled it.

- The position of an individual photon is not well-defined until after it interacts. Instead, we use a probability density function to estimate where the photon may be, and we use experimental measurements of a large population of photons to describe where most of them will be, most of the time. This is the whole premise of quantum-mechanically correct physics. There is a small but finite probability that the photon is anywhere. We can write a wave-function to describe how improbable it would be for us to find the photon at any specific coordinate, and we can even provide characteristic scale-lengths for the wave function.

- It would be wise to do some homework: study the common models that physicists use to study the behavior of light, so that you can make sure that you're asking a well-formed question.

- If you're deep in it, here's Solutions of the Maxwell equations and photon wave functions (2010), which was also published in the peer-reviewed journal Annals of Physics, authored by Peter Mohr, a professional physicist employed at NIST. This is 75 pages of very math-heavy, advanced quantum-mechanically-correct physics that answers your question. If you are not already deep into the physics, I recommend that you start with a much simpler book, like the introductory text I linked earlier. A simpler geometric model would be the Airy disk, or the beam profile or geometry for a beam of collimated light; but these models are applicable to ensembles and not to individual photons.

- Part of me wants to remind you to go back and review every permuted variation of the slit experiment... but part of me wonders if you've ever seen those before. If this picture doesn't jog your memory (or, if you're seeing it for the first time, doesn't enlighten you a little bit), then you really, really need to go back to studying the basic physics material.

- Nimur (talk) 20:19, 22 May 2019 (UTC)

- I think the OP is looking for an area containing 50% probability, if such a thing exists.--Wikimedes (talk) 22:12, 22 May 2019 (UTC)
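
The 50%-area reading of the question can be answered for the ensemble Airy disk model mentioned earlier in the thread. For an ideal, uniformly illuminated circular aperture of diameter D in the far field, the standard encircled-energy result gives the fraction of light inside normalized radius v as 1 − J0(v)² − J1(v)², with v = πDθ/λ. The sketch below solves for the radius holding half the light. It describes where half of many photons land under those idealized assumptions. It is not a "size" for a single photon, and it does not cover near-field or superlens techniques. The Bessel functions are computed from their power series, which I am assuming is adequate only for the small arguments used here (v below about 10).

```python
import math

def bessel_j(n: int, x: float, terms: int = 40) -> float:
    """J_n(x) from its power series; adequate for |x| below about 10."""
    half = x / 2.0
    total = 0.0
    for k in range(terms):
        total += (-1) ** k * half ** (2 * k + n) / (math.factorial(k) * math.factorial(k + n))
    return total

def airy_encircled_energy(v: float) -> float:
    """Fraction of an ideal Airy pattern's power inside normalized radius v."""
    return 1.0 - bessel_j(0, v) ** 2 - bessel_j(1, v) ** 2

def radius_for_fraction(fraction: float, lo: float = 1e-9, hi: float = 3.8317) -> float:
    """Bisection for v with encircled energy == fraction.

    Encircled energy rises monotonically from 0 to about 0.838 at the first
    dark ring (v ~ 3.8317), so this works for 0 < fraction < 0.838.
    """
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if airy_encircled_energy(mid) < fraction:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

v50 = radius_for_fraction(0.5)
# v = pi * D * theta / lambda, so the angular radius is theta = (v / pi) * (lambda / D).
print(f"v50 = {v50:.3f}; half the light lies within theta = {v50 / math.pi:.3f} * lambda/D")
```

This converges to v of roughly 1.68, so half the light falls within an angular radius of about 0.53 λ/D, compared with 1.22 λ/D for the first dark ring. At the focus of a lens with focal length f, the linear radius is that angle multiplied by f.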

- The diffraction limit is kind of toast -- see superlens. I won't claim to know exactly when and how well you can beat the limit with what technologies. I suppose the fundamental Heisenberg uncertainty principle governs how well you can say where a photon will strike, relative to the uncertainty in its momentum ... however, if you are willing to tolerate substantial uncertainty in the left-right direction only (you don't know when the photon will hit and you don't know what wavelength it is) I don't see a way to use that to constrain the accuracy of the targeting. Wnt (talk) 22:26, 22 May 2019 (UTC)

- If you want to build machines that defy physical limitations and push the boundaries of optical performance, it's a good idea to understand all aspects of the physical limitations, in theory and in practice ... this is why cameras and optical equipment companies employ cross-functional teams comprised of physicists, electronic engineers, materials and mechanical engineers, and other experts... shameless plug: we're hiring on multiple continents! And per my usual advice - it'd be a real competitive advantage if the skilled optical physics candidate is additionally a software expert, because the truth of the matter is, most of the time, software plays a huge role in controlling and interpreting the real-world limits imposed by the laws of physics.

- Anyway,... I have to return to my day-job, laboriously counting incident photons ... if only I could train some kind of machine to do this excruciating work for me... I'd have more time to pontificate about physics on the internet! But, I've got bills to pay... Good luck with the suggested-readings!

- Nimur (talk) 16:54, 23 May 2019 (UTC)

- Or I could just look up some news stories about how good they've gotten. This proposal wants 30 nm resolution from 193 nm light... in 2007. But it's for the military, which may not have published. This one from 2012 got 45 nm out of 365 nm light, and says that 193 nm light was down to 22 nm resolution for lithography. This one from 2014 predicts 10 nm resolution. I don't doubt the skill of the practitioners, but I do doubt the diffraction limit is going to stop them soon. Wnt (talk) 03:13, 24 May 2019 (UTC)

Peach's substance

What substance in peach causes slightly burning or acrid sensation when eaten (especially on lips, similar to citruses)? 212.180.235.46 (talk) 19:33, 22 May 2019 (UTC)

- Via PubMed search, I found Response to major peach and peanut allergens in a population of children allergic to peach (2015). "We study the sensitization to relevant allergens, such as peach and peanut LTP, Prup3 and Arah9, and seed storage protein, Arah2."

- Pru p 3 seems to be the biological chemical name for the most common allergen in peach. This is a lipid transfer protein.

- In at least one case, Anaphylaxis induced by nectarine, a peach-allergy patient reacted to nectarine, suffering a severe reaction including flush, wheals, and anaphylactic shock.

- It is important to emphasize that there's a huge variety among the population. Any individual might be sensitive to other compounds, or there could be another cause for sensitivity that is totally unrelated to exposure to the peach fruit.

- Nimur (talk) 19:47, 22 May 2019 (UTC)

- I would be very hesitant to diagnose an allergy. Naturally peaches often have an acidic "tang" that simply results from low pH. The comparison to citruses invites this interpretation. I looked up the peach trichomes thinking there might be some silica in them (I think, could be wrong, that kiwi fruit can annoy people's lips and tongue by this means) but this paper, which goes into absolutely pornographic detail on peach skin, doesn't mention any silica I could see. Wnt (talk) 22:46, 22 May 2019 (UTC)

- If you're concerned, see your doctor. ←Baseball Bugs What's up, Doc? carrots→ 23:40, 22 May 2019 (UTC)

- I mean that tangy taste mentioned by Wnt. I'm healthy, but noticed it may slightly burn the lips. Probably that tangy substance also causes that subtle burning sensation. 212.180.235.46 (talk) 16:51, 23 May 2019 (UTC)

- I've never experienced that. So if you're concerned, see your doctor. ←Baseball Bugs What's up, Doc? carrots→ 17:32, 23 May 2019 (UTC)

- Agreed with Bugs. It's probably nothing, but that tingling sensation is potentially a sign of a food allergy. I certainly get it from time to time when I eat something I shouldn't. No harm in getting it checked out. Matt Deres (talk) 19:00, 23 May 2019 (UTC)

- Most likely citric acid, which causes the low pH (acidity), AKA "tartness". If you happen to have small cracks on your lips, which is common in the case of chapped lips, or small tears from accidentally biting your lip or from sharp food, like pretzels with salt crystals on the outside, that can sting. Of course, as the name implies, citric acid is common to all citrus fruit, with lemons and limes being perhaps the tartest. SinisterLefty (talk) 21:27, 27 May 2019 (UTC)

May 23

What color is a safelight?

On TV it is usually red, and I'm wondering if this is the same red which is one of the three primary colors of light?— Vchimpanzee • talk • contributions • 21:44, 23 May 2019 (UTC)

- We have an article on safelights. The exact type and exact color depend on the purpose. Many old-fashioned photographic development labs used a red or reddish colored light. Many modern industrial labs that work with photosensitive photoresists in the electronics industry use a yellow or amber light. Professionals who work with specific photographic or photoreactive film chemicals usually get specialized technical data from their chemical vendor to guide their light color and fixture choices. Here's technical information for DuPont RISTON photopolymer, including spectra and lamp recommendations. Here are safelight filter recommendations for KODAK motion picture film, and here's a guide to dark room illumination. Nimur (talk) 21:59, 23 May 2019 (UTC)

- The Wikipedia article didn't get very specific, but does any of that relate to the red that is a primary color of light? In fact, Wikipedia doesn't even seem to cover that subject.— Vchimpanzee • talk • contributions • 22:28, 23 May 2019 (UTC)

- I don't think any of those even mentioned the color red. I can't imagine how people develop film in total darkness, but that was the recommendation for certain cases.— Vchimpanzee • talk • contributions • 22:33, 23 May 2019 (UTC)

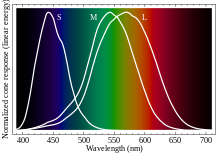

- There is no "red that is a primary color of light", or at least not exactly. There are three different kind of cone cells, which have different response curves to wavelengths of light in the visible range. One of the three types has the strongest response in the longer wavelengths, and in some (probably oversimplified) sense, that is what defines what we call "red". But lots of different wavelengths will provoke at least some response from those cells.

- So "red as a primary color" is more about the human eye than it is about light per se.

- I expect there's more information at color vision. --Trovatore (talk) 23:13, 23 May 2019 (UTC)

- Photographic film is sensitive to red light. But it only has to be in total darkness when you open the camera and transfer the film to the developer spindle; once it is inside the developer it is safe from light, and once the fixer has done its job it is safe to open. Black and white photographic paper was safe in red light. Graeme Bartlett (talk) 23:18, 23 May 2019 (UTC)

- It's unrelated to primary colours (except when it isn't...).

- A safelight can be any colour (if there are any) to which the photographic emulsion is insensitive. In the "classic" example, this was red light. That's because red is long wavelength, thus low energy photons (see Photon#Physical properties). Early emulsions were orthochromatic and insensitive to red light (still an improvement, because earlier ones had only been sensitive to blue light). This had given rise to photographic grey, a deliberate painting of some objects (such as brand new steam locomotives) in a pale grey which photographed well with these early emulsions.

- This sensitivity is a linear series, based on the quantum behaviour of the photon (long wavelength, low energy) and these red photons simply not having enough energy to activate the emulsion.

- Primary colours are an artefact of the human eye and its three [sic] types of colour sensitive cones. Speaking in terms of physics, monochrome emulsions and safelights, there are no such things. Andy Dingley (talk) 23:22, 23 May 2019 (UTC)
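
Andy Dingley's point that longer wavelengths mean lower-energy photons follows from E = hc/λ. The wavelengths in this sketch are illustrative picks for each colour. They are not values from the thread or from any emulsion datasheet, and the code says nothing about where a particular emulsion's sensitivity actually cuts off.

```python
# Photon energy E = h*c/lambda, expressed in electronvolts.
H = 6.62607015e-34     # Planck constant, J*s (exact SI value)
C = 299_792_458.0      # speed of light, m/s (exact)
EV = 1.602176634e-19   # joules per electronvolt (exact)

def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon of the given vacuum wavelength, in eV."""
    return H * C / (wavelength_nm * 1e-9) / EV

# Illustrative wavelengths only; real safelights and emulsions are specified by spectra.
for name, nm in (("violet/blue", 420.0), ("green", 530.0), ("amber", 590.0), ("red", 650.0)):
    print(f"{name:12s} {nm:5.0f} nm -> {photon_energy_ev(nm):.2f} eV")
```

A red photon carries only about two-thirds of the energy of a violet-blue one. This is the physical basis for a red safelight working with emulsions that need more energetic photons to be exposed.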

- There are two types of emulsion in use for typical processes: film (usually a negative film) and then photographic paper used to produce the print. Safelights are (usually) only applicable to the darkroom printing process onto paper. The film is handled in total darkness. Usually this is in a small light-proof developing tank which is loaded in the dark, then allows the chemical handling to be done in daylight.

- The film could be "black and white", more precisely "monochromatic", but it's still sensitive to a wide range of colours. Early emulsions (to mid 20th century) were orthochromatic, but panchromatic emulsions were developed later to give a more realistic (i.e. matching the behaviour of the rods in the human eye) mapping of colour onto brightness. These were red-sensitive and although even ortho film was usually handled in darkness, these couldn't be used with a human-friendly safelight. They were however insensitive to infra-red light and some auto-processing machines used IR LEDs and detectors to read information coded on the film edge, even while it was in the camera or during processing.

- In Victorian times, with wet glass plate processes, emulsions were sensitive only to blue light, and weren't very sensitive at all. A dim light, such as a candle or oil lamp, could be used as a safe worklight. Although this would eventually have fogged the plate, it would have taken too long to be a problem.

- Printing paper emulsions didn't have to be daylight sensitive, or even very sensitive at all, as they're exposed via an enlarger with a bright lamp of any desired colour.

- For black & white processing, red safelights are generally used. Some processes have a wider range of sensitivity, so a "deep red" (i.e. longer wavelength) safelight is needed, which is awkward as it's getting to the range where humans don't see too well either. Some processes vary the colour of the enlarger light (with a set of yellow filters) in order to control the contrast ratio of the printing process (Paper used to be made in a range of fixed contrast ratios, but the multigrade papers allowed one paper to be used for all of them). These could though be fussy about safelights.

- Colour printing paper obviously needs to respond to a range of colours. It's made from a laminate of differently responsive layers (see Color photography#Three-color processes): usually the complementary colours though, cyan, magenta and yellow, not RGB (see Color photography#Subtractive color and CMYK color model). Safelights are difficult, but a dim yellow-green one was usable. As the triplet colours here were chosen to match human colour perception, and the safelight is having to match a gap between those, then we could now say that safelight colours are in fact derived from human eye responses.

- A further sort of safelight is used in microelectronics fabrication, mostly IC production but also sometimes for circuitry. The photoresists used here are UV-sensitive by design, but their sensitivity also extends into the blue end of the visible spectrum. The safelight here is a rather bilious bright yellow, much brighter than those used for photography. It could just as well be red (the photoresists don't care), but humans see poorly in red and these are precision labs, where good vision is needed. Andy Dingley (talk) 23:34, 23 May 2019 (UTC)

In B&W darkrooms you usually use red (sometimes amber) safelight with enlarging paper that's not sensitive to it. For film you use total darkness. It's not too bad--you shut off the lights and load the developing reel by feel, and you can do it with a changing bag if you don't have a darkroom. Just practice loading reels once or twice with the lights on and with scrap film to pick up the technique and you're good to go. Then you put the reel into the developing tank (which is light tight) and you can turn on the lights.

For color enlarging you do the enlargement in darkness but then you usually load the exposed enlarging paper into a machine or light-tight drum for processing, so you don't have to fumble around with trays of liquid chemicals in the dark. You do use the chemical trays for B&W paper, but with safelight so you can see what you're doing. 67.164.113.165 (talk) 18:45, 24 May 2019 (UTC)

- Anyway, all I really want is to ask whether the color I saw in a darkroom on TV was the color that is considered a primary color of light, in the sense that green and a certain shade of blue are added to this red to produce white light, or in the sense that old televisions have these three colors. I don't know what modern TVs do. I saw what I am describing in World Book Encyclopedia but I don't know if Wikipedia has anything comparable. In World Book, three colored circles were projected on a wall or screen in a manner similar to this. Where the red I am describing overlapped with green, the light was yellow. Where it overlapped with blue, the light was magenta. And when green and blue overlapped, the light was cyan. In a small area all three colors overlapped and the result was white light.— Vchimpanzee • talk • contributions • 16:32, 25 May 2019 (UTC)

- There's no single exact wavelength triplet that makes up the primary colors. It might not work as well if your primaries have a touch of cyan or yellow or indigo or magenta to the naked eye. Sagittarian Milky Way (talk) 18:05, 25 May 2019 (UTC)

- See Color vision for more.

- In the "classic" case for printing B&W paper, the safelight is red because that's the longest wavelength in the human visible range, thus the lowest energy photons, thus the least chance of affecting the paper. It's not a "primary colour".

- In the rarer case of a colour-safe-safelight for some more sensitive colour paper emulsions, the safelight is the yellow-green colour which is at the peak of the L curve here. That's because it's both a minimum of the paper's sensitivity (between two colours it is sensitive to) and also something of a peak for what humans can see. But those are pretty useless safelights, as they're so dim. This still isn't a primary colour, but it is now related to the human eye's behaviour, not just quantum physics. Andy Dingley (talk) 10:17, 26 May 2019 (UTC)
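The "longest wavelength, lowest energy photons" point can be checked with E = hc/λ. A minimal Python sketch; the example wavelengths are illustrative picks, not measured safelight or paper values, and it shows only energy per photon, not the paper's actual spectral sensitivity. It gives roughly 1.9 eV for red light against roughly 2.8 eV for blue.

```python
# Photon energy E = h*c/lambda, converted to electronvolts.
H = 6.62607015e-34      # Planck constant, J*s (exact in SI)
C = 299_792_458.0       # speed of light, m/s (exact)
EV = 1.602176634e-19    # joules per electronvolt (exact)


def photon_energy_ev(wavelength_nm):
    """Energy of a single photon of the given wavelength, in eV."""
    return H * C / (wavelength_nm * 1e-9) / EV


# Illustrative wavelengths only.
for name, nm in [("red", 650), ("yellow-green", 555), ("blue", 450), ("near-UV", 365)]:
    print(f"{name:>12}: {nm} nm -> {photon_energy_ev(nm):.2f} eV")
```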

May 24

Which species of spider?

Can anyone identify this species of spider? Bubba73 You talkin' to me? 00:14, 24 May 2019 (UTC)

- The yellow and black one is an orb spider. They are very common and go by many different local names. 97.82.165.112 (talk) 01:12, 24 May 2019 (UTC)

- Thanks, I forgot to say that the second photo is its web. Bubba73 You talkin' to me? 01:25, 24 May 2019 (UTC)

- Amazing web! In that case I would suggest it's an orb-weaver spider.--Shantavira|feed me 07:46, 24 May 2019 (UTC)

- See web decoration. Mikenorton (talk) 10:59, 24 May 2019 (UTC)

- The top image shows the underside of possibly a wasp spider. Mikenorton (talk) 12:42, 24 May 2019 (UTC)

- If the spider is not causing a problem for you, I strongly suggest leaving it alone. They are very friendly spiders that do a great job in controlling ground pests like grasshoppers. Plus, if you can get over the often grotesque appearance, they make very pretty webs. 12.207.168.3 (talk) 12:44, 24 May 2019 (UTC)

- I doubt they object to being photographed. ←Baseball Bugs What's up, Doc? carrots→ 14:45, 24 May 2019 (UTC)

My wife called my attention to the interesting web. The spider behind the web (hardly visible) is the same one in the other photo. I have not bothered it. The photo turned out to not be as sharp as I wanted. I think I had the shutter speed too low. Bubba73 You talkin' to me? 19:00, 24 May 2019 (UTC)

Is a single photon of light emitted in every direction?

Or is a photon particle directed in one direction?

The question that my friend pondered is if we look at the sun, are we receiving the same photon particles or if he is receiving separate photons.

I read many pages on the subject but am still unsure.

Thank you.

107.199.78.194 (talk) 01:26, 24 May 2019 (UTC)

- The probability of encountering the photon spreads out in all directions. Whether the photon "itself" is emitted in all directions seems to be a matter of interpretation. Some interpretations of quantum mechanics, like Bohmian mechanics, give a very clear no, while others that satisfy the same mathematical requirements (like the Copenhagen interpretation) say yes.

- However, in either case, the individual photon is a particle that might hit your retina or his, but not both. Wnt (talk) 02:00, 24 May 2019 (UTC)

- As Wnt has hinted at, this is a question that sounds simple but is surprisingly deep. To restate your question: can the same photon interact with both your friend's eye and your own eye? If you look at things based on the classical photon model, which models the photon as a particle, the answer is a pretty simple "no". Things can't be in two places at once, silly! The problem is, we now know that classical mechanics is not "fundamentally" correct; it's just an approximation that works well under certain conditions. Quantum mechanics (QM) more accurately describes the world, but it does so in ways that defy our intuition. In QM, a photon is described by a wavefunction, and the wavefunction simply gives you a probability of finding the photon at any given point in space. So does that mean the photon is everywhere at once? It depends on how you interpret QM. All the math does is give you numbers. This is what the famous wave–particle duality is about: you can view a photon as either a wave or a particle, but in QM a photon isn't really either: sometimes it can behave like a wave, and sometimes like a particle. Some of the responses here may help give some more detail: [4] [5]. This might seem unsatisfying; you might want to know what a photon "really is". Well, it's a photon, which according to quantum field theory is an excitation in the electromagnetic field. It shouldn't really be surprising that it's difficult to visualize such things. Our intuition developed to survive on the savannah, not to figure out how photons behave. If you want to learn more about QM in general, try introduction to quantum mechanics for a starting point. There are some good books listed there in the bibliography. I also recommend PBS Space Time on YouTube. --47.146.63.87 (talk) 03:34, 24 May 2019 (UTC)

- Oops, the bibliography is actually in the main quantum mechanics article. --47.146.63.87 (talk) 06:54, 24 May 2019 (UTC)

- "The question that my friend pondered is if we look at the sun, are we receiving the same photon particles or if he is receiving separate photons." The question is, as noted, quite interesting if you ask it in different ways. If a photon of light did hit your eye, it cannot also have hit your friend's eye. That is a different question from "will a particular photon hit your eye or your friend's eye?" The answer to that is not intuitively similar to the answer to the past-tense question, and it gets down to what may be the fundamental difference between classical mechanics and quantum mechanics. Classical mechanics presumes certain (what seem to us) rather obvious, axiomatic assumptions: objects have a defined location in space and time, and if you know an object's location, and you know the forces on that object, you can predict reliably how that object's motion will change over time. That is perhaps the fundamental set of axioms for classical mechanics; it is the assumption that one makes in nearly all first- and second-year basic physics classes. The key bit that makes quantum mechanics different from classical mechanics is that very first part, "objects have a defined location in space and time". Imagine a world where they didn't. I don't mean "imagine a world where you can't know an object's location because you don't have good enough instrumentation." I mean "Imagine a world where an object's location is not itself a well-defined concept." Now, try to predict the results of interactions between those objects, or the results of forces acting on those objects. Imagine trying to predict the flight of a soccer ball you are about to kick where you don't know where the ball is, where your foot is, and when your foot will strike the ball. You do know when you have struck the ball because, let's say, you can see the mark the ball leaves on a wall. But can you tell what will happen before the ball hits the wall and makes the mark? That's what quantum mechanics looks like in macroscopic terms.
If you want to understand anything about your system (like how kicking soccer balls works) in your quantum world, you need to kick a bunch of soccer balls, look where they hit the wall, and extrapolate some equations that give some predictive power over the behavior of the soccer ball. That's basically what quantum physicists do: take observations of quantum behavior and devise mathematics that reliably predicts it. In simpler terms, you need to define your question carefully. Are you picking a photon that is leaving the sun and trying to predict whose eye it will hit? Or are you picking a photon that has already hit your eye, and asking if it already hit your friend's eye too? Only the second question has a single, defined answer: it hit your eye. The first can only be answered in terms of probabilities. --Jayron32 11:21, 24 May 2019 (UTC)
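The "kick a bunch of soccer balls and look at the wall" idea can be mimicked with a toy Monte Carlo in Python. This is a deliberately crude sketch, assuming fixed, made-up detection probabilities for two hypothetical detectors (your eye, your friend's eye) in place of a real wavefunction, and it ignores interference entirely. What it does show is that each individual photon is registered in exactly one place, while only the long-run fractions are predictable.

```python
import random

# Toy model only: the "wavefunction" is replaced by fixed detection
# probabilities for two hypothetical detectors; everything else is a miss.
P_YOUR_EYE = 0.3     # hypothetical probability, chosen for illustration
P_FRIEND_EYE = 0.2   # hypothetical probability, chosen for illustration


def detect_one_photon(rng):
    """Return where a single photon is registered: 'you', 'friend' or 'miss'."""
    r = rng.random()
    if r < P_YOUR_EYE:
        return "you"
    if r < P_YOUR_EYE + P_FRIEND_EYE:
        return "friend"
    return "miss"


def run(n_photons, seed=0):
    """Tally detections; each photon contributes to exactly one outcome."""
    rng = random.Random(seed)
    counts = {"you": 0, "friend": 0, "miss": 0}
    for _ in range(n_photons):
        counts[detect_one_photon(rng)] += 1
    return counts


if __name__ == "__main__":
    n = 100_000
    counts = run(n)
    print(counts)
    print({k: v / n for k, v in counts.items()})  # approaches the probabilities
```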

- Not to add to my rambling above, but there is another implied question here: "What is the photon doing as it travels between the sun and us before it hits one of our eyes?" The answer to that question is entirely unknowable, and it gets to the heart of all of the debates regarding the interpretations of quantum mechanics: unless and until you make a measurement of that photon, you don't know what it is doing. And you can only make a measurement by bouncing that photon off of something. And that only tells you when and where that photon was at a particular point in time; all you did was move your eyes to a different location, you didn't gain any insight on what the photon did between the sun and your eyes. --Jayron32 11:34, 24 May 2019 (UTC)

- In for a dime, in for a dollar: adding to this yet again, above I use the words "location" and "localization" in a physical, Cartesian sense, defining them as (for example) a set of four coordinates (x, y, z, and time) in space and time. But in quantum physics, the term "locality" refers more generally to any measurement one can make of a quantum particle or of a quantum system. Location in space and time is a convenient one to discuss, but any measurable quantity is subject to the same indeterminacy. So when you read some of the articles I linked above, they tend to speak in more general terms about what "local" means; I use it above meaning "a physical location in space", but it really means "any measurement you could make". --Jayron32 11:43, 24 May 2019 (UTC)

Do not eat raw batter!

I bought a lemon-poppyseed muffin mix from Martha White at the store yesterday. At the bottom of the packaging, near the manufacturing company's mailing address and telephone number, is a disclaimer: Do Not Eat Raw Batter. Why? It's dry mix with nothing perishable (ingredients), "best by" some time next year, and one creates batter by taking muffin mix and adding milk. (If your milk's gone bad, it's your fault; but are they afraid of spoiled-milk-drinkers suing?) I can't envision anything in the ingredients that could be dangerous but that would be neutralized by fifteen minutes in a 400°F oven. Nyttend (talk) 05:00, 24 May 2019 (UTC)

- Presumably because we're not supposed to eat raw flour.[6] DMacks (talk) 05:32, 24 May 2019 (UTC)

- (EC) A quick search finds the FDA and the CDC recommend against the consumption of raw flour, raw dough and raw batter, apparently due to the risk of E. coli from the flour (and, in some cases which probably don't apply here, Salmonella from the eggs). [7] [8] BTW, for anything that isn't too thick, fifteen minutes in a 400°F oven is more than enough to kill most bacteria, although maybe not their spores, and it probably also won't do much for toxins already produced. If the internal temperature reaches even 75°C for a short while, that's enough for most things [9], so it really depends on the thickness and other factors as to how long it takes for the internal temperature to reach that. To be clear, this is only in relation to bacteria; there are other things that need to be considered for some foods, e.g. antinutrients or toxins that sometimes need to be dealt with, sometimes by cooking, and I'm not sure whether an internal temperature of 75°C for a short while is enough for them. (Think cassava etc.) So it's not intended as general food safety advice, simply a comment on the "can't envision anything in the ingredients that could be dangerous but that would be neutralized by fifteen minutes in a 400°F oven" bit. Nil Einne (talk) 05:41, 24 May 2019 (UTC)
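For comparing the oven setting with the 75°C internal target mentioned above, a trivial Python conversion sketch. It says nothing about how long the centre of a muffin takes to get there, which, as the comment notes, depends on thickness and other factors.

```python
def f_to_c(deg_f):
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (deg_f - 32) * 5 / 9


def c_to_f(deg_c):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return deg_c * 9 / 5 + 32


print(f"oven air: 400 F = {f_to_c(400):.1f} C")
print(f"internal target: 75 C = {c_to_f(75):.1f} F")
```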

- I believe the first major recall that brought this issue to prominence was the 2009 Nestle Toll House cookie dough recall where flour was the source [10]. I can't prove this but I don't think the possibility that E. coli could live in flour was something that had really been considered in the industry prior to this. Interesting side note, Nestle started heat treating their flour afterwards because they are aware that even though their packages say "Do not consume raw cookie dough", a large percentage of their customers will do it anyways. shoy (reactions) 13:59, 24 May 2019 (UTC)

- I'm not convinced that was true, e.g. [11]. It may be true it wasn't believed there would be anything to cause health concerns if you're just eating small amounts of raw flour (considering quantity and type expected to be present). Definitely the fact that there would probably be some bacteria in your flour doesn't seem particularly surprising, even if the assumption may be that there won't be enough of anything of concern to be a problem. Remember that the non-perishability of something like flour most likely comes not so much from an absence of microorganisms, but from the water activity being far too low to support any growth. If you were to wet your flour, e.g. by putting it in a container with water that had been autoclaved, I don't think any competent microbiologist, even from the 1960s, would have been surprised to find bacteria from the flour growing in it. Nil Einne (talk) 15:19, 24 May 2019 (UTC)

- The answer is: because the cost of ink on a label is cheaper than the cost of a lawsuit. --Jayron32 11:01, 24 May 2019 (UTC)

I am trying to find a way to cut 5/16" stainless steel rod at work. At present we are using disc grinders. This is time consuming and needs a large working area. I am hoping to modify a nut splitter by adding a boron nitride cutting edge. The nut splitter I have ordered is: https://www.otctools.com/products/universal-c-frame-nut-splitter The CBN material I have ordered is: https://www.spyderco.com/catalog/details/204CBN/891 The CBN knife sharpeners appear to be hollow and thus will probably crack. Plan A is to fill them with powdered glass or ceramic glaze and then heat to melting. Issues: Will the glass/glaze expand and crack the CBN rods? How do I 'snap' the rods to approx. 1/2" length to fit the nut splitter? I thought of putting them in a vise and either whacking them with a hammer or snapping them off with stainless steel pipe, both methods using soft metal padding. Any other wise ideas about modifying a nut splitter or cutting stainless rod would be very helpful, and please feel free to invent this gadget so I can just buy it from you next time. 96.55.104.236 (talk) 16:53, 24 May 2019 (UTC)

- I know nothing nearly practical enough to answer your question, but I can link to a cute video of a water jet cutter. Website [12] says the coefficient of expansion of cubic boron nitride is 3.8 × 10⁻⁶ °C⁻¹. But figuring out the coefficient of expansion for a ceramic glaze looks like a fine art [13], complete with a reprise of our non-ideal solution issues from a week ago. I don't know what's on your table for this. Wnt (talk) 00:18, 25 May 2019 (UTC)
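To get a feel for the numbers, a rough Python sketch of linear thermal expansion, ΔL = α·L·ΔT, using the CBN figure quoted above. The glaze coefficient and the temperature swing are hypothetical placeholders, since real glaze values vary widely. What loads a bonded joint is the mismatch strain (α_glaze − α_CBN)·ΔT, not the absolute growth of either part.

```python
ALPHA_CBN = 3.8e-6      # 1/degC, figure quoted above for cubic boron nitride
ALPHA_GLAZE = 6.0e-6    # 1/degC, HYPOTHETICAL glaze value, for illustration only
LENGTH_MM = 12.7        # ~1/2 inch piece, as in the question
DELTA_T = 1000.0        # degC, HYPOTHETICAL swing between room temperature and a melt


def expansion_mm(alpha, length_mm, delta_t):
    """Linear thermal expansion: dL = alpha * L * dT."""
    return alpha * length_mm * delta_t


def mismatch_strain(alpha_a, alpha_b, delta_t):
    """Strain mismatch between two bonded materials after a temperature change."""
    return (alpha_a - alpha_b) * delta_t


print(f"CBN piece grows by {expansion_mm(ALPHA_CBN, LENGTH_MM, DELTA_T):.4f} mm")
print(f"glaze vs CBN mismatch strain: {mismatch_strain(ALPHA_GLAZE, ALPHA_CBN, DELTA_T):.2e}")
```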

- If you think filling the void with whatever will prevent the CBN part from cracking, that is simply NOT true. Interference fit is serious mechanical business; done improperly, you have a higher chance of weakening the thing than of strengthening it. So I wouldn't bother, and would use it as is, the way it is used in a diamond anvil cell (that is, with softer material around it).

- But then again, if I were you, I would refrain from reinventing a manual disc grinder, because this is what you are doing now (and I see no way your intended tool, turned manually at ~10 rpm, can compete with a 3000 rpm grinder). If disc grinders are really consuming so much working time that some investment is worth it, consider the aforementioned water jet cutter or a laser cutter, but I guess they are too expensive for you.

- Sorry to ruin your groove, but good luck with it.

- Gem fr (talk) 06:23, 25 May 2019 (UTC)

- Thank you both for your input. The time and space factors are because the stainless steel rod breaks regularly on our dough proofer conveyor belts which causes an alarm and the belt stops. We only have 15 minutes to get the belt moving or we lose all the product in the proofer. The water jet would be a good idea if we could make one small enough to fit in tight areas. I will ask why the broken conveyor link sensors aren't mounted outside the machine and close to the maintenance shop or a place where we can keep a disc grinder and face shield stationed. 96.55.104.236 (talk) 15:18, 25 May 2019 (UTC)

- Would a portable shear, such as an alligator shear, be too large? It does the job in a matter of seconds https://www.youtube.com/watch?v=5QSfqHld-XY Gem fr (talk) 20:09, 25 May 2019 (UTC)

May 25

Photons2

How many photons does the universe actually need to work in the way we observe it? 213.205.242.170 (talk) 04:04, 25 May 2019 (UTC)

- [14]--Jayron32 04:37, 25 May 2019 (UTC)

- Or, if you want to put your noggin through the wringer, John Wheeler proposed an answer of "one". He was talking about electrons, not photons, but the same concept applies. --47.146.63.87 (talk) 05:22, 25 May 2019 (UTC)

- Yes, I could have asked about electrons instead. They're both pure balls of energy.--213.205.242.170 (talk) 21:51, 25 May 2019 (UTC)

- Not true. Electrons are matter. They have a rest mass. Photons are not matter because they do not.--Jayron32 22:50, 26 May 2019 (UTC)

- "Pure energy" is a term that is essentially meaningless, and one physicists generally don't use, though it tends to show up sometimes in pop science stuff. It is true that electrons and photons are both described by current mainstream physics as point particles, with no known internal structure. --47.146.63.87 (talk) 07:43, 27 May 2019 (UTC)

- "Pure energy" is physically equivalent to "massless particle" and "moves at speed c", so this is totally meaningful, and it applies to photons and not to electrons. Gem fr (talk) 21:39, 27 May 2019 (UTC)
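The link between "massless" and "moves at c" comes from the energy-momentum relation E² = (pc)² + (mc²)² together with v = pc²/E. A small Python sketch; the momentum value is arbitrary and chosen only for illustration. With m = 0 the speed comes out as c (up to floating-point rounding), while an electron with the same momentum, having rest mass, moves slower.

```python
import math

C = 299_792_458.0                 # speed of light, m/s
ELECTRON_MASS = 9.1093837015e-31  # kg


def energy(mass_kg, momentum):
    """Relativistic energy from E^2 = (pc)^2 + (mc^2)^2, in joules."""
    return math.hypot(momentum * C, mass_kg * C**2)


def speed(mass_kg, momentum):
    """Speed v = p c^2 / E; equals c when the mass is zero."""
    return momentum * C**2 / energy(mass_kg, momentum)


p = 1e-22  # kg*m/s, arbitrary illustrative momentum

print(speed(0.0, p) / C)            # massless (photon-like): 1 up to rounding
print(speed(ELECTRON_MASS, p) / C)  # electron with the same momentum: below 1
```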

Hawking Radiation

I'm sure that I'm not understanding the concept, so please correct me where I'm wrong. If, due to quantum fluctuations within the QED vacuum, positive-mass/negative-mass particle pairs are momentarily created, then it only makes sense for the singularity to lose mass when more negative-mass particles than positive-mass particles fall in. Is there such a dynamic equilibrium whereby negative-mass particles preferentially, or exclusively, fall in? Plasmic Physics (talk) 07:50, 25 May 2019 (UTC)

- No, not "negative-mass". Both particles and antiparticles have positive mass (if any mass at all, that is).

- The singularity loses mass because it loses energy: since E=mc², losing energy E means losing mass E/c². And it loses energy because of Hawking radiation.

- Remember this is still an unproven theory.

- Gem fr (talk) 13:05, 25 May 2019 (UTC)
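The E = mc² bookkeeping in the comment above can be made concrete: radiating energy E removes mass E/c². A minimal Python sketch; the 1 keV photon is a hypothetical example, not a claim about the actual spectrum of any black hole's Hawking radiation.

```python
C = 299_792_458.0       # speed of light, m/s
EV = 1.602176634e-19    # joules per electronvolt


def mass_equivalent_kg(energy_joules):
    """Mass equivalent of an energy via E = m c^2, i.e. m = E / c^2."""
    return energy_joules / C**2


# Hypothetical example: one 1 keV photon carried away as radiation.
e_photon = 1e3 * EV
print(f"{mass_equivalent_kg(e_photon):.3e} kg removed per 1 keV of radiated energy")
```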

- Remember that YOU are still an unproven theory. You are still consistent and explainable though. --Jayron32 15:33, 25 May 2019 (UTC)

- Explainable, not so much (lots of hidden variables), but observable for sure, whereas we have only just got our first image of a black hole. So we are still some way from observing Hawking radiation and a vanishing black hole. Gem fr (talk) 20:20, 25 May 2019 (UTC)

- Wait a minute, how can both particles have positive mass/energy when net mass/energy cannot be created or destroyed? One particle must cancel the other for the law of conservation to be upheld. It is not enough for simply the charges to cancel. Plasmic Physics (talk) 22:40, 25 May 2019 (UTC)

- Again, E=mc². Conversion of energy to mass allows mass to be created at the expense of some energy. This has been observed many times (for instance at CERN). Gem fr (talk) 12:03, 26 May 2019 (UTC)

- Moreover, if the singularity absorbs a particle with positive mass/energy, shouldn't its mass increase instead of decrease? Plasmic Physics (talk) 02:54, 26 May 2019 (UTC)

- Not if the original energy comes from it. Also, I smell some confusion: Hawking radiation comes from the apparent horizon, not the singularity itself. Gem fr (talk) 12:03, 26 May 2019 (UTC)

- Time and energy are conjugate variables and are therefore related by the Heisenberg Uncertainty Principle. That means that energy is not well defined over very short time periods. A crude way to express it is that violations of conservation of energy are allowed if they occur over a short enough time. Virtual particles normally exist for a short enough time that their existence does not conflict with conservation of energy. CodeTalker (talk) 03:47, 26 May 2019 (UTC)
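To put a number on "a short enough time", a rough order-of-magnitude sketch in Python, assuming the heuristic ΔE·Δt ≈ ħ/2 and taking the borrowed energy to be the rest energy of an electron-positron pair (2mₑc²). This is the same crude reading described in the comment, not a rigorous derivation; it lands at a few times 10⁻²² s.

```python
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
C = 299_792_458.0                 # speed of light, m/s
ELECTRON_MASS = 9.1093837015e-31  # kg


def max_lifetime_s(delta_e_joules):
    """Heuristic time scale from Delta E * Delta t ~ hbar / 2."""
    return HBAR / (2 * delta_e_joules)


# Energy "borrowed" to make a virtual electron-positron pair: 2 m_e c^2.
delta_e = 2 * ELECTRON_MASS * C**2
print(f"borrowed energy: {delta_e:.3e} J")
print(f"time scale: {max_lifetime_s(delta_e):.2e} s")
```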

- OK, but what about the question of my addendum? Plasmic Physics (talk) 04:35, 26 May 2019 (UTC)

- This is approaching the limits of my understanding, so perhaps someone with more knowledge can chime in, but there are a couple of points to consider. When the virtual particle escapes the black hole, it is no longer slipping under the "short enough time" loophole in the law of conservation of energy, so to speak. It is now a real particle, and the energy embodied in that particle must have come from somewhere. The only place it can come from is the black hole's mass. Also, you are objecting that a virtual particle falling into the black hole should increase its mass. But the virtual particle isn't a real particle -- it didn't get created because there was enough energy to embody in a particle somewhere. Since it's virtual, it's not carrying energy/mass. Finally, I think the "virtual pair where one escapes and one falls in" model can't be pushed too far. It's not exactly what's really happening; it's an approximate model to help one understand the event without resorting to quantum mechanics calculations. CodeTalker (talk) 16:07, 26 May 2019 (UTC)

- I remember seeing the same explanation in virtually the same words in something I read. But my mind boggles at a notion of a universe that "notices" that the bookkeeping has been cheated and "finds" mass to deduct. It seems like a very sloppy idea. What is stranger is that the Unruh effect dictates that the virtual particles seen by the accelerating observer are not there for the free-falling observer, i.e. for the person falling in the hole there is no Hawking radiation. I feel like there has to be a saner way to interpret this, using the same math. Wnt (talk) 21:36, 26 May 2019 (UTC)

- A similar phenomenon actually occurs in classical physics. Consider the case of an accelerating charged particle, which is predicted by Maxwell's equations to emit electromagnetic waves. Now ask, is a charged particle at rest with respect to the surface of the Earth accelerating? Classical mechanics says no, but general relativity says yes. Electromagnetic waves are not observed to emanate from one. Now ask, is a charged particle free-falling to the Earth's surface accelerating? Classical mechanics says yes, but general relativity says no. Electromagnetic waves are observed. So did we disprove general relativity? No. Maxwell's laws are formulated for an inertial observer. In the case of an accelerating observer, inertial charged particles are expected to emit electromagnetic waves, but co-accelerating particles are not. So wtf? Well, a stationary charged particle is associated with a static electric field. To an inertial observer who is not in the same reference frame, that field is distorted, but still stationary relative to the particle. To an accelerating observer, that field is changing, and has a wave-like component. Someguy1221 (talk) 22:07, 26 May 2019 (UTC)
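For a sense of scale, the power radiated by an accelerating charge as seen by an inertial observer is given, non-relativistically, by the Larmor formula P = q²a²/(6πε₀c³). A minimal Python sketch, assuming an electron with an acceleration of one standard gravity; which observer counts as "inertial" is exactly the subtlety discussed above, so treat this only as a magnitude estimate. The answer is around 5 × 10⁻⁵² W.

```python
import math

EPS0 = 8.8541878128e-12     # vacuum permittivity, F/m
C = 299_792_458.0           # speed of light, m/s
E_CHARGE = 1.602176634e-19  # elementary charge, C


def larmor_power_w(charge_c, accel_ms2):
    """Non-relativistic Larmor formula: P = q^2 a^2 / (6 pi eps0 c^3)."""
    return charge_c**2 * accel_ms2**2 / (6 * math.pi * EPS0 * C**3)


# An electron accelerating at one standard gravity (9.80665 m/s^2).
print(f"{larmor_power_w(E_CHARGE, 9.80665):.2e} W")
```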

- Fascinating point. How about observers at the Equator and North Pole? They feel acceleration in perpendicular directions, so they can scarcely be "co-accelerating", can they? But they remain at the same position relative to one another and, I assume, see no electromagnetic wave emission... Wnt (talk) 02:22, 27 May 2019 (UTC)

- I do believe you would observe electromagnetic waves, and this is easier to see from the perspective of the observer at the north pole. Ignoring the rest of the Earth, the particle on the equator is essentially making a slow orbit around the particle at the north pole, though offset by a few thousand miles. Of course, charged particles following a circular trajectory emit electromagnetic waves, which incidentally is why the idea of electrons orbiting a nucleus was so bizarre to physicists prior to the development of quantum mechanics. Comparing again to Unruh radiation, what I find so peculiar about this type of classical phenomenon is that while all observers record the same interactions, perspectives differ as to who is actually emitting the radiation. I believe the focus on the electric field itself, and how it can change from static to dynamic simply from a change in reference frame, can also make the quantum version seem less outrageous, even without understanding the math. The quantum vacuum state is of course occupied by quantum fields. If a boring old electric field can interact with inertial particles differently than it does with accelerating particles, it's suddenly not so weird that quantum fields might do the same thing. And if a particle is just an excitation in a quantum field, maybe it's also not so weird that even the existence of a particle could be a matter of perspective. Someguy1221 (talk) 10:19, 27 May 2019 (UTC)
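How big is the "slow orbit" acceleration of the equator observer? For uniform circular motion a = ω²r. A short Python sketch, assuming one rotation per sidereal day and the WGS 84 equatorial radius; it gives about 0.034 m/s², roughly a third of a percent of g.

```python
import math

SIDEREAL_DAY_S = 86_164.0905       # Earth's rotation period relative to the stars, s
EQUATORIAL_RADIUS_M = 6_378_137.0  # WGS 84 equatorial radius, m


def centripetal_accel(radius_m, period_s):
    """a = omega^2 * r for uniform circular motion."""
    omega = 2 * math.pi / period_s
    return omega**2 * radius_m


a_eq = centripetal_accel(EQUATORIAL_RADIUS_M, SIDEREAL_DAY_S)
print(f"omega = {2 * math.pi / SIDEREAL_DAY_S:.4e} rad/s")
print(f"equator centripetal acceleration = {a_eq:.4f} m/s^2")
```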

- Ditto, why should mass be deducted from the black hole to square the imbalance? The black hole isn't intrinsically connected to the escaping particle, because their existence is independent. Only when the captured particle is actually assimilated by the singularity does it become connected. Secondly, why does the escaping particle become real, but not the captured one? This is all assuming that the virtual-to-real particle-pair transition model is accurate. If this is only an approximation of what is happening, what is actually happening? Lay it on me, I studied cosmology and quantum physics, including the likes of the Schrödinger equation. Plasmic Physics (talk) 06:06, 27 May 2019 (UTC)

- An observer outside the event horizon of a black hole can't "see" anything beyond the event horizon. You can't "see" how much of the black hole's mass is in the singularity and how much is still infalling. For an outside observer, as soon as something passes the event horizon, its mass is added to the black hole's mass. In the case of Hawking radiation, the particle that falls in has negative energy according to a distant observer. (Basing this off the article.) Remember, mass and energy are the same thing, and in particle physics are routinely interchanged. Note that you can also extract mass–energy from a spinning black hole outside its event horizon, in the ergosphere. --47.146.63.87 (talk) 08:36, 27 May 2019 (UTC)

- This makes a whole lot of sense actually. So, I was sort of right by considering the particle pair as being negative-mass/positive-mass, except that the captured particle only attains hypothetical negative mass, and only at the instant of capture, due to the relativistic nature of a black hole. Furthermore, due to the above-stated no-hair theorem, the conversion of the particle to negative mass is not actually required, since the assimilation of mass/energy by a black hole across the horizon is instantaneous from the outside perspective. Is this correct? Except now we've got a contradiction: what about the internal perspective? Shouldn't the internal observer measure captured particles as still having positive mass, causing the singularity to gain mass/energy? Plasmic Physics (talk) 09:56, 27 May 2019 (UTC)

- An observer outside the event horizon of a black hole can't "see" anything beyond the event horizon. You can't "see" how much of the black hole's mass is in the singularity and how much is still infalling. For an outside observer, as soon as something passes the event horizon, its mass is added to the black hole's mass. In the case of Hawking radiation, the particle that falls in has negative energy according to a distant observer. (Basing this off the article.) Remember, mass and energy are the same thing, and in particle physics are routinely interchanged. Note that you can also extract mass–energy from a spinning black hole outside its event horizon, in the ergosphere. --47.146.63.87 (talk) 08:36, 27 May 2019 (UTC)

- A similar phenomenon actually occurs in classical physics. Consider the case of an accelerating charged particle, which is predicted by Maxwell's equations to emit electromagnetic waves. Now ask, is a charged particle at rest with respect to the surface of the Earth accelerating? Classical mechanics says no, but general relativity says yes. Electromagnetic waves are not observed to emanate from one. Now ask, is a charged particle free-falling to the Earth's surface accelerating? Classical mechanics says yes, but general relativity says no. Electromagnetic waves are observed. So did we disprove general relativity? No. Maxwell's laws are formulated for an inertial observer. In the case of an accelerating observer, inertial charged particles are expected to emit electromagnetic waves, but co-accelerating particles are not. So wtf? Well, a stationary charged particle is associated with a static electric field. To an inertial observer who is not in the same reference frame, that field is distorted, but still stationary relative to the particle. To an accelerating observer, that field is changing, and has a wave-like component. Someguy1221 (talk) 22:07, 26 May 2019 (UTC)

- I remember seeing the same explanation in virtually the same words in something I read. But my mind boggles at the notion of a universe that "notices" that the bookkeeping has been cheated and "finds" mass to deduct. It seems like a very sloppy idea. What is stranger is that the Unruh effect dictates that the virtual particles seen by the accelerating observer are not there for the free-falling observer, i.e., for the person falling into the hole there is no Hawking radiation. I feel like there has to be a saner way to interpret this, using the same math. Wnt (talk) 21:36, 26 May 2019 (UTC)

- This is approaching the limits of my understanding, so perhaps someone with more knowledge can chime in, but there are a couple of points to consider. When the virtual particle escapes the black hole, it is no longer slipping under the "short enough time" loophole in the law of conservation of energy, so to speak. It is now a real particle, and the energy embodied in that particle must have come from somewhere. The only place it can come from is the black hole's mass. Also, you are objecting that a virtual particle falling into the black hole should increase its mass. But the virtual particle isn't a real particle -- it didn't get created because there was enough energy to embody in a particle somewhere. Since it's virtual, it's not carrying energy/mass. Finally, I think the "virtual pair where one escapes and one falls in" model can't be pushed too far. It's not exactly what's really happening; it's an approximate model to help one understand the event without resorting to quantum mechanics calculations. CodeTalker (talk) 16:07, 26 May 2019 (UTC)

- OK, but what about the question in my addendum? Plasmic Physics (talk) 04:35, 26 May 2019 (UTC)

- Remember that YOU are still an unproven theory. You are still consistent and explainable though. --Jayron32 15:33, 25 May 2019 (UTC)

- This isn't my field, but I've read on occasion that virtual particles can be avoided in favor of an explanation relying on quantum tunneling. Some papers (of unknown quality) include [15] and [16]. The second suggests there is a close connection between the two ideas. I wonder if the classic textbook illustration of quantum tunneling through a barrier could be done with a "pair of virtual particles" appearing inside the barrier and leaving to opposite sides? I looked that up and came up with this paper just now. My experience in physics, though, is that anything I can speculate, I'll find a source for, which people will 95% of the time tell me is bogus. :) Wnt (talk) 21:29, 25 May 2019 (UTC)

- Our virtual particle article does say "Quantum tunnelling may be considered a manifestation of virtual particle exchanges." CodeTalker (talk) 17:48, 26 May 2019 (UTC)

May 26

Special ops helicopter

When the MH-53 Pave Low was retired in 2008, which aircraft was its replacement? Is the replacement still in service in the same role, or has it, in turn, been replaced? 2601:646:8A00:A0B3:D572:F62A:ECB8:F316 (talk) 04:25, 26 May 2019 (UTC)

- MH-X Silent Hawk seems to be a replacement -- but specifics are not publicly known. The 'X' designation suggests that it is not widely in service. —2606:A000:1126:28D:D8C8:217E:6F53:9D9 (talk) 07:28, 26 May 2019 (UTC)

- Looking through the various articles on Wikipedia, the Bell Boeing V-22 Osprey and the MH-60M seem the most likely replacements. WegianWarrior (talk) 08:40, 26 May 2019 (UTC)

- The Bell Boeing V-22 Osprey#variant section indicates it replaced the MH-53

- and the internet agrees: https://www.military.com/defensetech/2008/10/08/bye-bye-pave-low-hello-osprey

- Gem fr (talk) 15:05, 26 May 2019 (UTC)

- Thanks! Follow-up question: is it possible to fast-rope from an Osprey as it is from a helo? 2601:646:8A00:A0B3:D572:F62A:ECB8:F316 (talk) 00:34, 27 May 2019 (UTC)

- Yes, from the rear ramp, as our article indicates. Here is a link to an image: [17]. Rmhermen (talk) 02:48, 27 May 2019 (UTC)

- Thanks! That's just what I wanted to hear! 2601:646:8A00:A0B3:D572:F62A:ECB8:F316 (talk) 03:35, 27 May 2019 (UTC)

- It's worth asking - what is a "special" operation?

- My local air guard wing - the 129th Rescue Wing - uses the combination HC-130J and HH-60G for low-level insertion and rescue, including long-range search and rescue. The active-duty Coast Guard Air Station San Francisco, up the road, uses the distinctive MH-65D Dolphin in bright orange, and every once in a while I see a Black Hawk in Coast Guard livery. Air Station Sacramento has some unique C-27 aircraft, in various paint schemes, which are used for coastal search and rescue and other operations.

- At the Salinas Air Show, we saw some special Navy Sea Hawks out of North Island, San Diego, equipped with buoys used for antisubmarine warfare.

- While flying out over the Olympic Mountains, not far from Joint Base Lewis–McChord, I heard some active duty United States Army training flights in Black Hawks. They were doing some strange things in the lonely darkness.

- And while I was visiting San Diego on vacation, I saw the regular Marines (almost surely 3MAW) flying a lot of V-22 Osprey aircraft, on training missions.

- Every one of these represents a unique, hard-working, and highly-specialized group of air crews. And all of this jibes with the official Air Force story: MH-53s fly final combat missions (2008), quoting Lt. Col. Gene Becker, "the 20th Expeditionary Special Operations Squadron commander and a MH-53 pilot of 13 years": "Most of the MH-53 crewmembers will head to AFSOC's new weapons systems like the CV-22 (Osprey), AC-130 (Gunship) ... and (MQ-1) Predators. Some will head over to Air Combat Command and fly the HH-60G (Pave Hawk), and a few will retire."

- Now, which among these are "special" operations? Are they only "special" if they're organized under the auspices of the United States Special Operations Command - which is usually a temporary administrative action, anyway?

- I'd like to think that the various and more irregularly-organized irregulars - the sort who run around and set things on fire without any paperwork or permission or "special" titles - are the really real Special Operations Executive. I would hazard that those special operations don't do search-and-rescue: if an operative is caught or killed... well, let's just say, I would rather be on a regular operation. If I go down, I want three Black Hawks, a C-130, and the whole civil air patrol looking for me.

- Nimur (talk) 17:24, 27 May 2019 (UTC)