Transformer (deep learning architecture): Difference between revisions


Revision as of 17:23, 12 August 2023


A transformer is a deep learning architecture that relies on the parallel multi-head attention mechanism.[1] The modern transformer was proposed in the 2017 paper "Attention Is All You Need" by Ashish Vaswani et al. of the Google Brain team. It is notable for requiring less training time than earlier recurrent neural architectures, such as long short-term memory (LSTM),[2] and later variants have been widely adopted for training large language models on large (language) datasets, such as the Wikipedia corpus and Common Crawl, because they process the input sequence in parallel.[3] Input text is split into n-grams encoded as tokens, and each token is converted into a vector by looking it up in a word embedding table. At each layer, each token is then contextualized within the scope of the context window with other (unmasked) tokens via a parallel multi-head attention mechanism, allowing the signal for key tokens to be amplified and less important tokens to be diminished. Although the transformer paper came out in 2017, the softmax-based attention mechanism had been proposed earlier, in 2014, by Bahdanau, Cho, and Bengio for machine translation,[4][5] and transformers with linearized attention (without softmax) had already been introduced in 1992 by Schmidhuber.[6][7][8]

This architecture is now used not only in natural language processing and computer vision,[9] but also in audio[10] and multi-modal processing. It has also led to the development of pre-trained systems, such as generative pre-trained transformers (GPTs)[11] and BERT[12] (Bidirectional Encoder Representations from Transformers).

Timeline

- In 1990, the Elman network, a recurrent network trained on simple sentences like "dog chases man", was proposed. The (pre-)trained model was used to convert each word into a vector, and the whole vocabulary into a vector database. Groups of vectors were clustered by closeness into a tree, which was then found to have a meaningful structure: the vectors representing verbs and nouns each belonged to a different large cluster, and the noun cluster was further found to have its own sub-clusters for inanimates and animates.[13]

- In 1992, the first kind of transformer was published by Jürgen Schmidhuber under the name "fast weight controller".[6] It learns to answer queries by programming the attention weights of another neural network through outer products of key vectors and value vectors called FROM and TO. This is now called a linear transformer without normalization.[8][7][14] The term "learning internal spotlights of attention" was introduced in 1993.[15] An advantage of fast linear transformers is that their computational complexity grows linearly with sequence length, whereas that of modern transformers grows quadratically.
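To make the "linear transformer without normalization" reading concrete, here is a minimal NumPy sketch; it is an illustration of the modern linear-attention view, not Schmidhuber's original formulation or code. It assumes a fast weight matrix that is written to by adding the outer product of a value vector and a key vector at each step and is read by multiplying it with a query. The function names are hypothetical. The two functions compute the same outputs: one as a running fast weight matrix, the other as causal attention with raw dot-product scores and no softmax.

```python
import numpy as np

def fast_weight_outputs(keys, values, queries):
    """Fast weights: write v_t k_t^T into W at each step, then read W q_t."""
    W = np.zeros((values.shape[1], keys.shape[1]))   # fixed-size fast weight matrix
    outputs = []
    for k, v, q in zip(keys, values, queries):
        W += np.outer(v, k)                           # rank-1 "programming" update
        outputs.append(W @ q)                         # answer the query
    return np.array(outputs)

def causal_linear_attention(keys, values, queries):
    """out_t = sum_{j <= t} (q_t . k_j) v_j: dot-product attention with no softmax."""
    scores = np.tril(queries @ keys.T)                # zero out future positions j > t
    return scores @ values

rng = np.random.default_rng(0)
K, V, Q = rng.normal(size=(5, 3)), rng.normal(size=(5, 4)), rng.normal(size=(5, 3))
fw = fast_weight_outputs(K, V, Q)
la = causal_linear_attention(K, V, Q)
```

Because the fast weight matrix has a fixed size (value dimension by key dimension), each step costs the same no matter how many tokens came before, which is where the linear scaling with sequence length comes from.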

- In 1993, the IBM alignment models were used for statistical machine translation.[16]

- In 1997, a precursor of large language models, using recurrent neural networks such as long short-term memory, was proposed.

- In 2001, a one-billion-word text corpus scraped from the Internet, referred to as "very very large" at the time, was used for word disambiguation.[17]

- In 2012, AlexNet demonstrated the effectiveness of large neural networks for image recognition, encouraging the use of large artificial neural networks instead of older, statistical approaches.

- In 2014, a 380M-parameter seq2seq model using two LSTM networks was proposed by Sutskever et al.[18]

- In 2014, gating proved useful in a 130M-parameter seq2seq model by Cho et al. that used simplified gated recurrent units (GRUs).[19] GRUs were shown to be neither better nor worse than gated LSTMs.[20][21]

- In 2014, Bahdanau et al. proposed improving the previous model by placing an "additive" attention mechanism between two LSTM networks.[22] It was, however, not yet the parallelizable (scaled "dot product") kind of attention later proposed in the 2017 transformer paper.

- In 2016, Google Translate gradually replaced the older statistical machine translation approach with a newer neural-network-based approach that combined a seq2seq model built from LSTMs with the "additive" attention mechanism. The neural approach surpassed the statistical approach, which had taken ten years to develop, after only nine months of development.[23][24]

- In 2017, the original (100M-sized) transformer model, with a faster (parallelizable or decomposable) attention mechanism, was proposed in the "Attention Is All You Need" paper. Because the model had difficulty converging, it was suggested that the learning rate be scaled up linearly from 0 to its maximal value during the first part of training (e.g. the first 2% of the total number of training steps).[1]

- In 2018, in the ELMo paper, an entire sentence was processed before an embedding vector was assigned to each word in the sentence. A bidirectional LSTM was used to calculate such deep contextualized embeddings for each word.

- In 2020, the transformer was successfully applied to other modalities, outperforming architectures specialized to those modalities: the Vision Transformer[26] on images and the Conformer[27] on speech.

- In 2020, Xiong et al. resolved the convergence difficulties of the original transformer by applying layer normalization before (instead of after) multi-head attention.[28]

- In 2023, unidirectional ("autoregressive") transformers were being used in GPT-3 (more than 100B parameters) and other OpenAI GPT models.[29][30]

Predecessors

Before transformers, precursors of the attention mechanism were added to gated RNNs, such as LSTMs and gated recurrent units (GRUs), which processed datasets sequentially. Their dependency on previous token computations prevented them from parallelizing the attention mechanism. In theory, the information from one token can propagate arbitrarily far down the sequence, but in practice the vanishing gradient problem leaves the model's state at the end of a long sentence without precise, extractable information about preceding tokens. In 1992, the fast weight controller was proposed as an alternative to RNNs that can learn "internal spotlights of attention".[15][6]

The performance of older models was enhanced by adding an attention mechanism, which allowed a model to access any preceding point along the sequence. The attention layer weighs all previous states according to a learned measure of relevance, providing relevant information about far-away tokens. This proved especially useful in language translation, where far-away context can be essential for the meaning of a word in a sentence. In an LSTM model translating, for example, from English to French, the state vector was accessible only after the last English word had been processed. Although in theory such a vector retains information about the whole original sentence, in practice that information is poorly preserved. If an attention mechanism is added, the decoder is given access to the state vectors of every input word, not just the last, and can learn attention weights that dictate how much to attend to each input state vector. The augmentation of seq2seq models with the attention mechanism was first implemented in the context of machine translation by Bahdanau, Cho, and Bengio in 2014.[4][5]
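A minimal sketch of how an "additive" attention layer scores each input state against the current decoder state. It assumes the scoring form v · tanh(W_s s + W_h h_j) associated with Bahdanau et al.; the parameter names are hypothetical and the random matrices stand in for learned weights.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())          # subtract the max for numerical stability
    return e / e.sum()

def additive_attention(s, H, W_s, W_h, v):
    """Score every encoder state h_j against decoder state s as v . tanh(W_s s + W_h h_j),
    normalize the scores with softmax, and return the weights and the context vector."""
    scores = np.array([v @ np.tanh(W_s @ s + W_h @ h) for h in H])
    weights = softmax(scores)        # one weight per input word
    context = weights @ H            # weighted sum of encoder states
    return weights, context

rng = np.random.default_rng(0)
H = rng.normal(size=(6, 4))          # 6 encoder states of size 4
s = rng.normal(size=3)               # current decoder state of size 3
W_s, W_h, v = rng.normal(size=(5, 3)), rng.normal(size=(5, 4)), rng.normal(size=5)
weights, context = additive_attention(s, H, W_s, W_h, v)
```

Each score here needs its own small feed-forward evaluation, whereas the later dot-product form obtains all scores from a single matrix product.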

Decomposable attention

In 2016, highly parallelizable decomposable attention was successfully combined with a feedforward network.[31] This indicated that attention mechanisms were powerful in themselves and that sequential recurrent processing of data was not necessary to achieve the quality gains of RNNs with attention. Soon afterwards, Jakob Uszkoreit of Google Research proposed replacing RNNs with self-attention and started the effort to evaluate that idea.[32] Transformers use an attention mechanism that processes all tokens simultaneously, calculating "soft" weights between them in successive layers. Since the attention mechanism only uses information about other tokens from lower layers, it can be computed for all tokens in parallel, which leads to improved training speed.

Training

Methods for stabilizing training

The plain transformer architecture had difficulty converging. In the original paper,[32] the authors recommended using learning rate warmup: the learning rate is scaled up linearly from 0 to its maximal value for the first part of training (usually recommended to be 2% of the total number of training steps), before decaying again.
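A minimal sketch of such a schedule, assuming a linear ramp to a hypothetical `peak_lr` over `warmup_steps`, followed by inverse-square-root decay. That decay is one common choice (it has the same shape as the schedule in the original paper, which derives the peak from the model width and warmup length); other setups decay linearly or with a cosine curve.

```python
import math

def warmup_lr(step, peak_lr, warmup_steps):
    """Linear warmup from 0 to peak_lr, then inverse-square-root decay (assumed)."""
    if step <= 0:
        return 0.0
    if step < warmup_steps:
        return peak_lr * step / warmup_steps           # linear ramp-up
    return peak_lr * math.sqrt(warmup_steps / step)    # decay after the peak

# Hypothetical run: 100,000 total steps with a 2% warmup gives warmup_steps = 2,000.
lr_at_peak = warmup_lr(2000, peak_lr=1e-3, warmup_steps=2000)
```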

A 2020 paper found that using layer normalization before (instead of after) multiheaded attention and feedforward layers stabilizes training, not requiring learning rate warmup.[33]
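The two orderings can be compared in a short NumPy sketch. Here `sublayer` is a hypothetical stand-in for either the attention or the feed-forward sublayer, and the layer normalization omits the learned gain and bias for brevity.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize each row (token) to zero mean and unit variance; no learned gain/bias."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def post_ln_block(x, sublayer):
    # Original ordering: add the residual first, then normalize.
    return layer_norm(x + sublayer(x))

def pre_ln_block(x, sublayer):
    # Pre-LN ordering: normalize the sublayer input; the residual path stays unnormalized.
    return x + sublayer(layer_norm(x))

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
W = rng.normal(size=(8, 8))
f = lambda h: h @ W                  # toy linear "sublayer"
post, pre = post_ln_block(x, f), pre_ln_block(x, f)
```

In the pre-LN form the residual stream is an unnormalized running sum, so the identity path from input to output is never rescaled; this is the property usually credited for the more stable training.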

Pretrain-finetune

Transformers typically undergo self-supervised learning involving unsupervised pretraining followed by supervised fine-tuning. Pretraining is typically done on a larger dataset than fine-tuning, due to the limited availability of labeled training data. Tasks for pretraining and fine-tuning commonly include:

- language modeling[12]

- next-sentence prediction[12]

- question answering[3]

- reading comprehension

- sentiment analysis[1]

- paraphrasing[1]

Applications

The transformer has had great success in natural language processing (NLP), for example in machine translation, and has also been applied to time series prediction. Many large language models such as GPT-2, GPT-3, GPT-4, BERT, XLNet, RoBERTa and ChatGPT demonstrate the ability of transformers to perform a wide variety of NLP-related tasks, and have the potential to find real-world applications. These may include:

- machine translation

- document summarization

- document generation

- named entity recognition (NER)[34]

- biological sequence analysis

- video understanding

In addition to NLP applications, it has also been successful in other fields, such as computer vision and protein folding (for example, AlphaFold).

Implementations

The transformer model has been implemented in standard deep learning frameworks such as TensorFlow and PyTorch.

Transformers is a library produced by Hugging Face that supplies transformer-based architectures and pretrained models.[11]

Architecture

All transformers have the same primary components:

- Tokenizers, which convert text into tokens

- Embedding layers, which convert tokens into semantically meaningful representations

- Transformer layers, which provide the model's reasoning capabilities and consist of attention and MLP layers

Transformer layers can be one of two types, encoder and decoder. The original paper used both, while many later models include only one type. BERT is an example of an encoder-only model; GPT models are decoder-only.

Input

The input text is parsed into tokens by a byte pair encoding tokenizer, and each token is converted into a vector by looking it up in a word embedding table. Then, positional information about the token is added to the word embedding.
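The core of byte pair encoding can be sketched as a toy trainer. It assumes a corpus given as a plain list of words and starts from single characters; production tokenizers add details omitted here, such as a byte-level base alphabet and word-frequency counts. The function name `learn_bpe` is hypothetical.

```python
from collections import Counter

def learn_bpe(words, num_merges):
    """Toy BPE trainer: repeatedly merge the most frequent adjacent symbol pair."""
    corpus = [list(w) for w in words]              # each word as a list of symbols
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols in corpus:
            pairs.update(zip(symbols, symbols[1:]))
        if not pairs:
            break
        best = max(pairs, key=pairs.get)           # ties go to the first pair seen
        merges.append(best)
        merged = best[0] + best[1]
        new_corpus = []
        for symbols in corpus:
            out, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    out.append(merged)             # replace the pair by one new symbol
                    i += 2
                else:
                    out.append(symbols[i])
                    i += 1
            new_corpus.append(out)
        corpus = new_corpus
    return merges, corpus

merges, tokens = learn_bpe(["low", "lower", "lowest"], num_merges=2)
```

On this tiny corpus the first two merges are ("l", "o") and then ("lo", "w"), so all three words begin with the single token "low".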

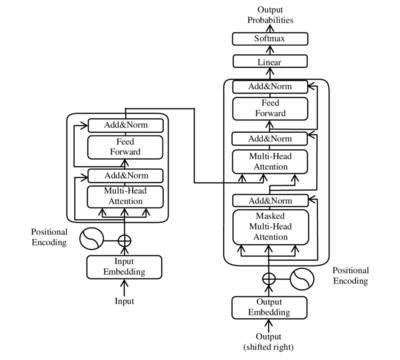

Encoder/decoder architecture

Like earlier seq2seq models, the original transformer model used an encoder/decoder architecture. The encoder consists of encoding layers that process the input tokens iteratively one layer after another, while the decoder consists of decoding layers that iteratively process the encoder's output as well as the decoder's output tokens generated so far.

The function of each encoder layer is to generate contextualized token representations, where each representation corresponds to a token that "mixes" information from other input tokens via the self-attention mechanism. Each decoder layer contains two attention sublayers: (1) cross-attention for incorporating the output of the encoder (contextualized input token representations), and (2) self-attention for "mixing" information among the input tokens to the decoder (i.e., the tokens generated so far during inference time).[35][36]

Both the encoder and decoder layers have a feed-forward neural network for additional processing of the outputs and contain residual connections and layer normalization steps.[36]

Scaled dot-product attention

The transformer building blocks are scaled dot-product attention units. For each attention unit, the transformer model learns three weight matrices: the query weights $W_Q$, the key weights $W_K$, and the value weights $W_V$. For each token $i$, the input token representation $x_i$ is multiplied with each of the three weight matrices to produce a query vector $q_i = x_i W_Q$, a key vector $k_i = x_i W_K$, and a value vector $v_i = x_i W_V$. Attention weights are calculated using the query and key vectors: the attention weight $a_{ij}$ from token $i$ to token $j$ is the dot product between $q_i$ and $k_j$. The attention weights are divided by the square root of the dimension of the key vectors, $\sqrt{d_k}$, which stabilizes gradients during training, and passed through a softmax which normalizes the weights. The fact that $W_Q$ and $W_K$ are different matrices allows attention to be non-symmetric: if token $i$ attends to token $j$ (i.e. $q_i \cdot k_j$ is large), this does not necessarily mean that token $j$ will attend to token $i$ (i.e. $q_j \cdot k_i$ could be small). The output of the attention unit for token $i$ is the weighted sum of the value vectors of all tokens, weighted by $a_{ij}$, the attention from token $i$ to each token.

The attention calculation for all tokens can be expressed as one large matrix calculation using the softmax function, which is useful for training because optimized matrix-multiplication routines compute it quickly. The matrices $Q$, $K$ and $V$ are defined as the matrices where the $i$th rows are vectors $q_i$, $k_i$, and $v_i$ respectively. Then we can represent the attention as

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\mathrm{T}}{\sqrt{d_k}}\right)V$$

where softmax is taken over the horizontal axis (i.e. applied to each row).
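The matrix form translates almost line for line into NumPy. This is a minimal single-head sketch; the random projection matrices `W_Q`, `W_K` and `W_V` stand in for learned weights, and the max-subtraction inside `softmax` is a standard numerical-stability step that does not change the result.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # stabilize exp without changing the result
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with the softmax applied to each row."""
    d_k = K.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)   # row i: attention from token i
    return weights @ V, weights

# Self-attention: queries, keys and values all come from the same token matrix X.
rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))                                # 4 tokens, embedding size 8
W_Q, W_K, W_V = (rng.normal(size=(8, 8)) / np.sqrt(8) for _ in range(3))
out, A = scaled_dot_product_attention(X @ W_Q, X @ W_K, X @ W_V)
```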

Multi-head attention

One set of $(W_Q, W_K, W_V)$ matrices is called an attention head, and each layer in a transformer model has multiple attention heads. While each attention head attends to the tokens that are relevant to each token, multiple attention heads let the model do this for different definitions of "relevance". In addition, the influence field representing relevance can become progressively dilated in successive layers. Many transformer attention heads encode relevance relations that are meaningful to humans. For example, some attention heads attend mostly to the next word, while others mainly attend from verbs to their direct objects.[37] The computations for each attention head can be performed in parallel, which allows for fast processing. The outputs of the attention heads are concatenated to pass into the feed-forward neural network layers.

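Concretely, let the multiple attention heads be indexed by $i$. Then we have

$$\text{MultiheadedAttention}(Q, K, V) = \text{Concat}_{i \in [\#\text{heads}]}\left(\text{Attention}(QW^Q_i, KW^K_i, VW^V_i)\right) W^O$$

where, for self-attention, $Q = K = V = X$ and the matrix $X$ is the concatenation of word embeddings; the matrices $W^Q_i, W^K_i, W^V_i$ are "projection matrices" owned by individual attention head $i$; and $W^O$ is a final projection matrix owned by the whole multi-head attention layer.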
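A minimal sketch of multi-head self-attention, assuming each head has its own query, key and value projection and that the per-head width is `d_model` divided by the number of heads (a common choice, not a requirement). Biases are omitted and the random matrices stand in for learned weights.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)     # row-wise stabilization
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def attention(Q, K, V):
    return softmax(Q @ K.T / np.sqrt(K.shape[-1])) @ V

def multihead_self_attention(X, W_Q, W_K, W_V, W_O):
    """W_Q, W_K, W_V: lists holding one projection matrix per head; W_O: output projection.
    Each head attends independently; head outputs are concatenated, then projected by W_O."""
    heads = [attention(X @ wq, X @ wk, X @ wv) for wq, wk, wv in zip(W_Q, W_K, W_V)]
    return np.concatenate(heads, axis=-1) @ W_O

rng = np.random.default_rng(0)
n_tokens, d_model, n_heads = 5, 16, 4
d_head = d_model // n_heads                   # assumed: split d_model evenly across heads
X = rng.normal(size=(n_tokens, d_model))
W_Q = [rng.normal(size=(d_model, d_head)) for _ in range(n_heads)]
W_K = [rng.normal(size=(d_model, d_head)) for _ in range(n_heads)]
W_V = [rng.normal(size=(d_model, d_head)) for _ in range(n_heads)]
W_O = rng.normal(size=(n_heads * d_head, d_model))
Y = multihead_self_attention(X, W_Q, W_K, W_V, W_O)
```

With a single head and an identity output projection, the function reduces to ordinary scaled dot-product attention.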

Masked attention

It may be necessary to cut out attention links between some word-pairs. For example, the decoder for token position $t$ should not have access to token position $t+1$. This may be accomplished before the softmax stage by adding a mask matrix $M$ that is $-\infty$ at entries where the attention link must be cut, and $0$ at other places:

$$\text{MaskedAttention}(Q, K, V) = \text{softmax}\left(M + \frac{QK^\mathrm{T}}{\sqrt{d_k}}\right)V$$
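A minimal NumPy sketch of a causal mask of this kind: the mask holds negative infinity above the diagonal and zero elsewhere, so after exponentiation the cut links receive exactly zero weight. Function names are hypothetical.

```python
import numpy as np

def causal_mask(n):
    """M[i, j] = -inf where j > i (future positions), 0 elsewhere."""
    return np.triu(np.full((n, n), -np.inf), k=1)

def masked_attention(Q, K, V, M):
    scores = Q @ K.T / np.sqrt(K.shape[-1]) + M         # add the mask before the softmax
    scores = scores - scores.max(axis=-1, keepdims=True) # diagonal is always finite
    weights = np.exp(scores)                             # exp(-inf) = 0 cuts the link
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 6))
out, A = masked_attention(X, X, X, causal_mask(4))
```

The first token can only attend to itself, so its output is exactly its own value vector.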

Encoder

Each encoder consists of two major components: a self-attention mechanism and a feed-forward neural network. The self-attention mechanism accepts input encodings from the previous encoder and weights their relevance to each other to generate output encodings. The feed-forward neural network further processes each output encoding individually. These output encodings are then passed to the next encoder as its input, as well as to the decoders.

The first encoder takes positional information and embeddings of the input sequence as its input, rather than encodings. The positional information is necessary for the transformer to make use of the order of the sequence, because no other part of the transformer makes use of this.[32]

The encoder is bidirectional. Attention can be placed on tokens before and after the current token. Tokens are used instead of words to account for polysemy.

Positional encoding

A positional encoding is a fixed-size vector representation that encapsulates the relative positions of tokens within a target sequence: it provides the transformer model with information about where the words are in the input sequence.

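The positional encoding is defined as a function of type $f: \mathbb{R} \to \mathbb{R}^d$, where $d$ is a positive even integer. The full positional encoding, as defined in the original paper, is given by the equation:

$$(f(t)_{2k}, f(t)_{2k+1}) = (\sin(\theta), \cos(\theta)) \quad \forall k \in \{0, 1, \ldots, d/2 - 1\}$$

where $\theta = \frac{t}{r^k}$ and $r = N^{2/d}$.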

Here, $N$ is a free parameter that should be significantly larger than the biggest $t$ that would be input into the positional encoding function. In the original paper,[32] the authors chose $N = 10000$.

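The function is in a simpler form when written as a complex function of type $f: \mathbb{R} \to \mathbb{C}^{d/2}$:

$$f(t) = \left(e^{it/r^k}\right)_{k = 0, 1, \ldots, \frac{d}{2} - 1}$$

where $r = N^{2/d}$.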

The main reason the authors chose this as the positional encoding function is that it allows one to perform shifts as linear transformations:

$$f(t + \Delta t) = \mathrm{diag}(f(\Delta t))\, f(t)$$

where $\Delta t \in \mathbb{R}$ is the distance one wishes to shift. This allows the transformer to take any encoded position and find the encoding of the position n steps ahead or n steps behind by a matrix multiplication.

By taking a linear sum, any convolution can also be implemented as a linear transformation:

$$\sum_j c_j f(t + \Delta t_j) = \left(\sum_j c_j\, \mathrm{diag}(f(\Delta t_j))\right) f(t)$$

for any constants $c_j$. This allows the transformer to take any encoded position and find a linear sum of the encoded locations of its neighbors. This sum of encoded positions, when fed into the attention mechanism, would create attention weights on its neighbors, much like what happens in a convolutional neural network language model. In the authors' words, "we hypothesized it would allow the model to easily learn to attend by relative position".

In typical implementations, all operations are done over the real numbers, not the complex numbers, but since complex multiplication can be implemented as real 2-by-2 matrix multiplication, this is a mere notational difference.
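The shift property can be checked numerically using the real-valued form. In real coordinates, multiplication by diag(f(Δt)) becomes a block-diagonal matrix of 2-by-2 rotations, one per (sin, cos) pair; the sketch builds it and confirms that it maps f(t) to f(t + Δt) for several t. It assumes N = 10000 as in the original paper; the function names are hypothetical.

```python
import numpy as np

def positional_encoding(t, d, N=10000.0):
    """f(t)_{2k} = sin(t / r^k), f(t)_{2k+1} = cos(t / r^k), with r = N^(2/d)."""
    k = np.arange(d // 2)
    theta = t / N ** (2 * k / d)
    pe = np.empty(d)
    pe[0::2] = np.sin(theta)
    pe[1::2] = np.cos(theta)
    return pe

def shift_matrix(dt, d, N=10000.0):
    """Block-diagonal matrix mapping f(t) to f(t + dt); it does not depend on t."""
    k = np.arange(d // 2)
    phi = dt / N ** (2 * k / d)
    R = np.zeros((d, d))
    for i, p in enumerate(phi):
        # [sin(a+p), cos(a+p)] = [[cos p, sin p], [-sin p, cos p]] @ [sin a, cos a]
        R[2 * i:2 * i + 2, 2 * i:2 * i + 2] = [[np.cos(p), np.sin(p)],
                                               [-np.sin(p), np.cos(p)]]
    return R

d = 16
R = shift_matrix(3, d)               # one fixed matrix that shifts any position by 3
```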

Decoder

Each decoder consists of three major components: a self-attention mechanism, an attention mechanism over the encodings, and a feed-forward neural network. The decoder functions in a similar fashion to the encoder, but an additional attention mechanism is inserted which instead draws relevant information from the encodings generated by the encoders. This mechanism can also be called the encoder-decoder attention.[32][36]
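In the encoder-decoder attention, queries come from the decoder while keys and values come from the encoder's output, so the attention matrix has one row per decoder position and one column per input position. A minimal NumPy sketch with hypothetical names and random weights; no causal mask is applied here, since every decoder position may look at the whole input.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def cross_attention(decoder_x, encoder_out, W_Q, W_K, W_V):
    """Queries from the decoder; keys and values from the encoder output."""
    Q = decoder_x @ W_Q                  # (T_dec, d_k)
    K = encoder_out @ W_K                # (T_enc, d_k)
    V = encoder_out @ W_V                # (T_enc, d_v)
    weights = softmax(Q @ K.T / np.sqrt(K.shape[-1]))   # (T_dec, T_enc)
    return weights @ V, weights

rng = np.random.default_rng(0)
enc = rng.normal(size=(7, 8))            # 7 encoded input tokens
dec = rng.normal(size=(3, 8))            # 3 decoder positions so far
W_Q, W_K, W_V = (rng.normal(size=(8, 4)) for _ in range(3))
out, A = cross_attention(dec, enc, W_Q, W_K, W_V)
```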

Like the first encoder, the first decoder takes positional information and embeddings of the output sequence as its input, rather than encodings. The transformer must not use the current or future output to predict an output, so the output sequence must be partially masked to prevent this reverse information flow.[32] This allows for autoregressive text generation. For all attention heads, attention can't be placed on following tokens. The last decoder is followed by a final linear transformation and softmax layer, to produce the output probabilities over the vocabulary.

All members of OpenAI's GPT series have a decoder-only architecture.

Terminology

In large language models, the terminology is somewhat different from that used in the original Transformer paper:[38]

- "encoder only": full encoder, full decoder.

- "encoder-decoder": full encoder, autoregressive decoder.

- "decoder only": autoregressive encoder, autoregressive decoder.

Here "autoregressive" means that a mask is inserted in the attention head to zero out all attention from one token to all tokens following it, as described in the "masked attention" section.

Subsequent work

Alternative functions

The original transformer uses the ReLU activation function. Other activation functions have since been developed, such as SwiGLU.[39]
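For comparison, a minimal sketch of the original ReLU feed-forward sublayer next to a SwiGLU variant, assuming the commonly used form (Swish(xW) ⊙ xV)W2 with Swish(z) = z·sigmoid(z). Biases are omitted and the weight shapes are illustrative.

```python
import numpy as np

def swish(x):
    return x / (1.0 + np.exp(-x))            # x * sigmoid(x), also called SiLU

def ffn_relu(x, W1, W2):
    return np.maximum(0.0, x @ W1) @ W2      # original position-wise FFN (no biases)

def ffn_swiglu(x, W, V, W2):
    return (swish(x @ W) * (x @ V)) @ W2     # gated: Swish branch times linear branch

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))                  # 4 tokens, model width 8
W, V, W1 = (rng.normal(size=(8, 32)) for _ in range(3))
W2 = rng.normal(size=(32, 8))
y_relu, y_swiglu = ffn_relu(x, W1, W2), ffn_swiglu(x, W, V, W2)
```

The gated variant uses three weight matrices instead of two, so implementations often shrink the hidden width to keep the parameter count comparable.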

Alternative positional encodings

Transformers may use other positional encoding methods than sinusoidal.[40]

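RoPE (rotary positional embedding)[41] is best explained by considering a list of 2-dimensional vectors $[(x^{(1)}_1, x^{(2)}_1), (x^{(1)}_2, x^{(2)}_2), (x^{(1)}_3, x^{(2)}_3), \ldots]$. Now pick some angle $\theta$. Then RoPE encoding is

$$\text{RoPE}\big(x^{(1)}_m, x^{(2)}_m, m\big) = \begin{pmatrix} \cos m\theta & -\sin m\theta \\ \sin m\theta & \cos m\theta \end{pmatrix} \begin{pmatrix} x^{(1)}_m \\ x^{(2)}_m \end{pmatrix}$$

For a list of $2n$-dimensional vectors, a RoPE encoder is defined by a sequence of angles $\theta^{(1)}, \ldots, \theta^{(n)}$. Then the RoPE encoding is applied to each pair of coordinates.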

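The benefit of RoPE is that the dot product between two vectors depends on their relative location only:

$$\text{RoPE}(x, m)^\mathrm{T}\, \text{RoPE}(y, n) = \text{RoPE}(x, m + k)^\mathrm{T}\, \text{RoPE}(y, n + k)$$

for any integer $k$.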
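The relative-position property can be verified numerically. This sketch rotates each coordinate pair by m times its angle, using angles θ_i = 10000^(-2i/d) as an assumed (commonly used) choice, and checks that shifting both positions by the same k leaves the dot product unchanged.

```python
import numpy as np

def rope(x, m, thetas):
    """Rotate each coordinate pair (x[2i], x[2i+1]) by the angle m * thetas[i]."""
    out = np.empty_like(x)
    for i, theta in enumerate(thetas):
        c, s = np.cos(m * theta), np.sin(m * theta)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = c * a - s * b           # first row of the 2x2 rotation
        out[2 * i + 1] = s * a + c * b       # second row
    return out

rng = np.random.default_rng(0)
d = 8
thetas = 10000.0 ** (-np.arange(d // 2) * 2 / d)   # assumed angle schedule
x, y = rng.normal(size=d), rng.normal(size=d)
base = rope(x, 3, thetas) @ rope(y, 5, thetas)     # positions 3 and 5
```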

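ALiBi (Attention with Linear Biases)[42] is not a replacement for the positional encoder on the original transformer. Instead, it is an additional positional encoder that is directly plugged into the attention mechanism. Specifically, the ALiBi attention mechanism is

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\mathrm{T}}{\sqrt{d_k}} + sB\right)V$$

Here, $s$ is a real number ("scalar"), and $B$ is the linear bias matrix defined by

$$B = \begin{pmatrix} 0 & 1 & 2 & 3 & \cdots \\ -1 & 0 & 1 & 2 & \cdots \\ -2 & -1 & 0 & 1 & \cdots \\ -3 & -2 & -1 & 0 & \cdots \\ \vdots & \vdots & \vdots & \vdots & \ddots \end{pmatrix}$$

in other words, $B_{i,j} = j - i$.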

ALiBi allows pretraining on short context windows, then finetuning on longer context windows. Since it is directly plugged into the attention mechanism, it can be combined with any positional encoder that is plugged into the "bottom" of the entire network (which is where the sinusoidal encoder on the original transformer, as well as RoPE and many others, are located).
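A minimal NumPy sketch of the bias matrix B with B[i, j] = j - i and of attention with the scaled bias added before the softmax. It assumes a causal (decoder-style) mask, so only the non-positive entries of B are used and more distant earlier tokens are penalized more; the slope 0.5 is an arbitrary example, since in practice each head gets its own slope.

```python
import numpy as np

def alibi_bias(n):
    """B[i, j] = j - i: zero on the diagonal, increasingly negative for earlier keys."""
    idx = np.arange(n)
    return idx[None, :] - idx[:, None]

def alibi_attention(Q, K, V, s):
    """softmax(Q K^T / sqrt(d_k) + s * B) V, with an assumed causal mask."""
    n = Q.shape[0]
    scores = Q @ K.T / np.sqrt(K.shape[-1]) + s * alibi_bias(n)
    causal = np.tril(np.ones((n, n), dtype=bool))
    scores = np.where(causal, scores, -np.inf)          # hide future positions
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w /= w.sum(axis=-1, keepdims=True)
    return w @ V

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 4))
out = alibi_attention(X, X, X, s=0.5)                   # hypothetical slope
```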

Efficient implementation

FlashAttention

FlashAttention[43] is an algorithm that implements the transformer attention mechanism efficiently on a GPU. It performs matrix multiplications in blocks, such that each block fits within the GPU's fast on-chip memory (SRAM), and by careful management of the blocks it minimizes data copying between that memory and the GPU's slower high-bandwidth memory (as data movement is slow).
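Blockwise computation is possible because a softmax-weighted sum can be accumulated one block of keys at a time, provided the running maximum and normalizer are rescaled as new blocks arrive (often called an online softmax). The sketch below shows only that arithmetic for a single query in NumPy; it does not model GPU memory or reproduce the actual FlashAttention kernels, and the function names are hypothetical.

```python
import numpy as np

def naive_attention(q, K, V):
    s = K @ q / np.sqrt(q.size)
    w = np.exp(s - s.max())
    return (w / w.sum()) @ V

def tiled_attention(q, K, V, block=4):
    """Visit keys/values block by block, keeping a running max m,
    a running softmax denominator l and a running weighted sum acc."""
    m, l = -np.inf, 0.0
    acc = np.zeros(V.shape[1])
    for start in range(0, K.shape[0], block):
        Kb, Vb = K[start:start + block], V[start:start + block]
        s = Kb @ q / np.sqrt(q.size)         # scores for this block only
        m_new = max(m, s.max())
        scale = np.exp(m - m_new)            # rescale old statistics to the new max
        p = np.exp(s - m_new)
        l = l * scale + p.sum()
        acc = acc * scale + p @ Vb
        m = m_new
    return acc / l

rng = np.random.default_rng(0)
K, V, q = rng.normal(size=(10, 8)), rng.normal(size=(10, 3)), rng.normal(size=8)
tiled = tiled_attention(q, K, V, block=4)
```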

An improved version, FlashAttention-2,[44][45][46] was developed to meet the rising demand for language models capable of handling longer context lengths. It offers enhancements in work partitioning and parallelism, enabling it to achieve up to 230 TFLOPS on A100 GPUs (FP16/BF16), a 2x speedup over the original FlashAttention.

Key advancements in FlashAttention-2 include the reduction of non-matmul FLOPs, improved parallelism over the sequence length dimension, better work partitioning between GPU warps, and added support for head dimensions up to 256 and multi-query attention (MQA) and grouped-query attention (GQA).

Benchmarks revealed FlashAttention-2 to be up to 2x faster than FlashAttention and up to 9x faster than a standard attention implementation in PyTorch. Future developments include optimization for new hardware like H100 GPUs and new data types like FP8.

Multi-Query Attention

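Multi-Query Attention changes the multiheaded attention mechanism.[47] Whereas normally,

$$\text{MultiheadedAttention}(Q, K, V) = \text{Concat}_{i \in [\#\text{heads}]}\left(\text{Attention}(QW^Q_i, KW^K_i, VW^V_i)\right) W^O$$

with Multi-Query Attention, there is just one $W^K, W^V$, thus:

$$\text{MultiQueryAttention}(Q, K, V) = \text{Concat}_{i \in [\#\text{heads}]}\left(\text{Attention}(QW^Q_i, KW^K, VW^V)\right) W^O$$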

This has a neutral effect on model quality and training speed, but increases inference speed.
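A minimal sketch of multi-query self-attention: every head keeps its own query projection, while one key projection `W_K` and one value projection `W_V` are shared by all heads. The inference speedup is usually attributed to this sharing, since the cached keys and values per token shrink by a factor equal to the number of heads. Names and shapes are illustrative.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def multi_query_attention(X, W_Q_heads, W_K, W_V, W_O):
    """One query projection per head; a single key/value projection shared by all heads."""
    K, V = X @ W_K, X @ W_V                  # computed (and cacheable) once for all heads
    heads = [softmax((X @ wq) @ K.T / np.sqrt(K.shape[-1])) @ V for wq in W_Q_heads]
    return np.concatenate(heads, axis=-1) @ W_O

rng = np.random.default_rng(0)
n, d_model, n_heads, d_head = 6, 16, 4, 4
X = rng.normal(size=(n, d_model))
W_Q_heads = [rng.normal(size=(d_model, d_head)) for _ in range(n_heads)]
W_K, W_V = rng.normal(size=(d_model, d_head)), rng.normal(size=(d_model, d_head))
W_O = rng.normal(size=(n_heads * d_head, d_model))
Y = multi_query_attention(X, W_Q_heads, W_K, W_V, W_O)
```

With 4 heads of width 4, for example, standard multi-head attention caches 2 × 4 × 4 = 32 key/value numbers per token and layer, while this variant caches 2 × 4 = 8.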

Sub-quadratic transformers

Training transformer-based architectures can be expensive, especially for long inputs.[48] Alternative architectures include the Reformer, which reduces the computational load from $O(N^2)$ to $O(N \ln N)$,[48] and models like ETC/BigBird, which can reduce it to $O(N)$,[49] where $N$ is the length of the sequence. The Reformer achieves this using locality-sensitive hashing and reversible layers.[50][51]

Ordinary transformers require a memory size that is quadratic in the size of the context window. Attention-free transformers[52] reduce this to a linear dependence while still retaining the advantages of a transformer by linking the key to the value.

Long Range Arena (2020)[53] is a standard benchmark for comparing the behavior of transformer architectures over long inputs.

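Random Feature Attention (2021)[54] uses Fourier random features:

$$\varphi(x) = \frac{1}{\sqrt{D}}\left[\cos\langle w_1, x\rangle, \sin\langle w_1, x\rangle, \cdots, \cos\langle w_D, x\rangle, \sin\langle w_D, x\rangle\right]^\mathrm{T}$$

where $w_1, \ldots, w_D$ are independent samples from the normal distribution $N(0, \sigma^{-2} I)$. This choice of parameters satisfies $\mathbb{E}[\langle \varphi(x), \varphi(y)\rangle] = e^{-\frac{\|x - y\|^2}{2\sigma^2}}$, or

$$e^{\langle x, y\rangle/\sigma^2} = \mathbb{E}\left[\left\langle e^{\|x\|^2/2\sigma^2}\varphi(x), e^{\|y\|^2/2\sigma^2}\varphi(y)\right\rangle\right] \approx \left\langle e^{\|x\|^2/2\sigma^2}\varphi(x), e^{\|y\|^2/2\sigma^2}\varphi(y)\right\rangle$$

Consequently, the one-headed attention, with one query, can be written as

$$\text{Attention}(q, K, V) = \text{softmax}\left(\frac{qK^\mathrm{T}}{\sqrt{d_k}}\right)V \approx \frac{\varphi(q)^\mathrm{T}\sum_i e^{\|k_i\|^2/2\sigma^2}\varphi(k_i)v_i^\mathrm{T}}{\varphi(q)^\mathrm{T}\sum_i e^{\|k_i\|^2/2\sigma^2}\varphi(k_i)}$$

where $\sigma = d_k^{1/4}$. Similarly for multiple queries, and for multiheaded attention.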

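This approximation can be computed in linear time, as we can compute the matrix $\sum_i e^{\|k_i\|^2/2\sigma^2}\varphi(k_i)v_i^\mathrm{T}$ first, then multiply it with the query. In essence, we have managed to obtain a more precise version of

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\mathrm{T}}{\sqrt{d_k}}\right)V \approx Q\left(K^\mathrm{T}V/\sqrt{d_k}\right)$$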
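A numerical sketch of the random-feature approximation for one query, in NumPy. It samples the w_i with standard deviation 1/σ, the scaling under which the cosine features reproduce exp(−‖x − y‖²/(2σ²)) in expectation, and sets σ = d_k^(1/4) so the recovered kernel is exp(q·k/√d_k). The key-side sums are computed once, independent of the query. The feature count D = 50,000 and the small input scale are arbitrary choices that keep the sampling error small; function names are hypothetical.

```python
import numpy as np

def random_features(x, w):
    """phi(x) = (1/sqrt(D)) [cos<w_1,x>, sin<w_1,x>, ..., cos<w_D,x>, sin<w_D,x>]."""
    proj = w @ x
    return np.stack([np.cos(proj), np.sin(proj)], axis=1).ravel() / np.sqrt(w.shape[0])

def rfa_attention(q, K, V, w, sigma):
    """Approximate softmax(q K^T / sigma^2) V; with sigma = d_k^(1/4) this is sqrt(d_k) scaling."""
    feats = np.array([np.exp(k @ k / (2 * sigma**2)) * random_features(k, w) for k in K])
    S = feats.T @ V                  # sum_i c_i phi(k_i) v_i^T, built once for all queries
    z = feats.sum(axis=0)            # sum_i c_i phi(k_i)
    phi_q = random_features(q, w)
    return (phi_q @ S) / (phi_q @ z)

rng = np.random.default_rng(0)
d, n, D = 4, 6, 50_000
sigma = d ** 0.25
q, K = 0.5 * rng.normal(size=d), 0.5 * rng.normal(size=(n, d))
V = rng.normal(size=(n, 3))
w = rng.normal(scale=1 / sigma, size=(D, d))        # w_i ~ N(0, sigma^-2 I)
approx = rfa_attention(q, K, V, w, sigma)
```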

Performer (2022)[55] uses the same Random Feature Attention, but $w_1, \ldots, w_D$ are first independently sampled from the same normal distribution and then orthogonalized by Gram-Schmidt processing.

Multimodality

Transformers can also be used or adapted for modalities (input or output) beyond text, usually by finding a way to "tokenize" the modality.

Vision transformers[56] adapt the transformer to computer vision by breaking input images down into a series of patches, turning them into vectors, and treating them like tokens in a standard transformer.
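A minimal NumPy sketch of the patch-tokenization step: the image is cut into non-overlapping square patches, each patch is flattened, and a (here random) matrix projects it to a token vector. The class token and position embeddings that vision transformers add afterwards are omitted; names and sizes are illustrative.

```python
import numpy as np

def image_to_patch_tokens(image, patch, W_embed):
    """Split an (H, W, C) image into patch x patch squares, flatten each, and project it."""
    H, W, C = image.shape
    assert H % patch == 0 and W % patch == 0, "image size must be divisible by patch size"
    patches = (image.reshape(H // patch, patch, W // patch, patch, C)
                    .transpose(0, 2, 1, 3, 4)          # gather the pixels of each patch
                    .reshape(-1, patch * patch * C))   # one row per patch, row-major order
    return patches @ W_embed

rng = np.random.default_rng(0)
img = rng.normal(size=(32, 32, 3))                     # toy 32x32 RGB image
E = rng.normal(size=(8 * 8 * 3, 64))                   # hypothetical embedding matrix
tokens = image_to_patch_tokens(img, 8, E)              # 16 patch tokens of width 64
```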

Conformer[57] and later Whisper[58] follow the same pattern for speech recognition, first turning the speech signal into a spectrogram, which is then treated like an image, i.e. broken down into a series of patches, turned into vectors and treated like tokens in a standard transformer.

Perceivers by Andrew Jaegle et al. (2021)[59][60] can learn from large amounts of heterogeneous data.

Regarding image outputs, Peebles et al. introduced a diffusion transformer (DiT) which facilitates use of the transformer architecture for diffusion-based image production.[61] Also, Google released a transformer-centric image generator called "Muse" based on parallel decoding and masked generative transformer technology.[62] (Transformers played a less-central role with prior image-producing technologies,[63] albeit still a significant one.[64])

See also

- Perceiver – Variant of Transformer designed for multimodal data

- BERT (language model) – Series of language models developed by Google AI

- GPT-3 – 2020 text-generating language model

- GPT-4 – 2023 text-generating language model

- ChatGPT – Chatbot developed by OpenAI

- Wu Dao – Chinese multimodal artificial intelligence program

- Vision transformer – Variant of Transformer designed for vision processing

- BLOOM (language model) – Open-access multilingual language model

References

- ^ a b c d Vaswani, Ashish; Shazeer, Noam; Parmar, Niki; Uszkoreit, Jakob; Jones, Llion; Gomez, Aidan N; Kaiser, Łukasz; Polosukhin, Illia (2017). "Attention is All you Need". Advances in Neural Information Processing Systems. 30. Curran Associates, Inc.

- ^ Hochreiter, Sepp; Schmidhuber, Jürgen (1 November 1997). "Long Short-Term Memory". Neural Computation. 9 (8): 1735–1780. doi:10.1162/neco.1997.9.8.1735. ISSN 0899-7667. PMID 9377276. S2CID 1915014.

- ^ a b "Better Language Models and Their Implications". OpenAI. 2019-02-14. Archived from the original on 2020-12-19. Retrieved 2019-08-25.

- ^ a b Bahdanau, Dzmitry; Cho, Kyunghyun; Bengio, Yoshua (September 1, 2014). "Neural Machine Translation by Jointly Learning to Align and Translate". arXiv:1409.0473 [cs.CL].

- ^ a b Luong, Minh-Thang; Pham, Hieu; Manning, Christopher D. (August 17, 2015). "Effective Approaches to Attention-based Neural Machine Translation". arXiv:1508.04025 [cs.CL].

- ^ a b c Schmidhuber, Jürgen (1992). "Learning to control fast-weight memories: an alternative to recurrent nets". Neural Computation. 4 (1): 131–139. doi:10.1162/neco.1992.4.1.131. S2CID 16683347.

- ^ a b Schlag, Imanol; Irie, Kazuki; Schmidhuber, Jürgen (2021). "Linear Transformers Are Secretly Fast Weight Programmers". ICML 2021. Springer. pp. 9355–9366.

- ^ a b Katharopoulos, Angelos; Vyas, Apoorv; Pappas, Nikolaos; Fleuret, François (2020). "Transformers are RNNs: Fast autoregressive Transformers with linear attention". ICML 2020. PMLR. pp. 5156–5165.

- ^ He, Cheng (31 December 2021). "Transformer in CV". Transformer in CV. Towards Data Science. Archived from the original on 16 April 2023. Retrieved 19 June 2021.

- ^ Radford, Alec; Kim, Jong Wook; Xu, Tao; Brockman, Greg; McLeavey, Christine; Sutskever, Ilya (2022). "Robust Speech Recognition via Large-Scale Weak Supervision". arXiv:2212.04356 [eess.AS].

- ^ a b Wolf, Thomas; Debut, Lysandre; Sanh, Victor; Chaumond, Julien; Delangue, Clement; Moi, Anthony; Cistac, Pierric; Rault, Tim; Louf, Remi; Funtowicz, Morgan; Davison, Joe; Shleifer, Sam; von Platen, Patrick; Ma, Clara; Jernite, Yacine; Plu, Julien; Xu, Canwen; Le Scao, Teven; Gugger, Sylvain; Drame, Mariama; Lhoest, Quentin; Rush, Alexander (2020). "Transformers: State-of-the-Art Natural Language Processing". Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations. pp. 38–45. doi:10.18653/v1/2020.emnlp-demos.6. S2CID 208117506.

- ^ a b c "Open Sourcing BERT: State-of-the-Art Pre-training for Natural Language Processing". Google AI Blog. 2 November 2018. Archived from the original on 2021-01-13. Retrieved 2019-08-25.

- ^ Elman, Jeffrey L. (March 1990). "Finding Structure in Time". Cognitive Science. 14 (2): 179–211. doi:10.1207/s15516709cog1402_1. S2CID 2763403.

- ^ Choromanski, Krzysztof; Likhosherstov, Valerii; Dohan, David; Song, Xingyou; Gane, Andreea; Sarlos, Tamas; Hawkins, Peter; Davis, Jared; Mohiuddin, Afroz; Kaiser, Lukasz; Belanger, David; Colwell, Lucy; Weller, Adrian (2020). "Rethinking Attention with Performers". arXiv:2009.14794 [cs.CL].

- ^ a b Schmidhuber, Jürgen (1993). "Reducing the ratio between learning complexity and number of time-varying variables in fully recurrent nets". ICANN 1993. Springer. pp. 460–463.

- ^ Brown, Peter F. (1993). "The mathematics of statistical machine translation: Parameter estimation". Computational Linguistics. 19 (2): 263–311.

- ^ Banko, Michele; Brill, Eric (2001). "Scaling to very very large corpora for natural language disambiguation". Proceedings of the 39th Annual Meeting on Association for Computational Linguistics - ACL '01. Morristown, NJ, USA: Association for Computational Linguistics: 26–33. doi:10.3115/1073012.1073017. S2CID 6645623.

- ^ Sutskever, Ilya; Vinyals, Oriol; Le, Quoc V (2014). "Sequence to Sequence Learning with Neural Networks". Advances in Neural Information Processing Systems. 27. Curran Associates, Inc. arXiv:1409.3215.

- ^ Cho, Kyunghyun; van Merrienboer, Bart; Bahdanau, Dzmitry; Bengio, Yoshua (2014). "On the Properties of Neural Machine Translation: Encoder–Decoder Approaches". Proceedings of SSST-8, Eighth Workshop on Syntax, Semantics and Structure in Statistical Translation. Stroudsburg, PA, USA: Association for Computational Linguistics: 103–111. doi:10.3115/v1/w14-4012. S2CID 11336213.

- ^ Chung, Junyoung; Gulcehre, Caglar; Cho, KyungHyun; Bengio, Yoshua (2014). "Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling". arXiv:1412.3555 [cs.NE].

- ^ Gruber, N.; Jockisch, A. (2020). "Are GRU cells more specific and LSTM cells more sensitive in motive classification of text?". Frontiers in Artificial Intelligence. 3: 40. doi:10.3389/frai.2020.00040. PMC 7861254. PMID 33733157. S2CID 220252321.

- ^ Bahdanau, Dzmitry; Cho, Kyunghyun; Bengio, Yoshua (2014-09-01). "Neural Machine Translation by Jointly Learning to Align and Translate". arXiv:1409.0473 [cs.CL].

- ^ Lewis-Kraus, Gideon (2016-12-14). "The Great A.I. Awakening". The New York Times. ISSN 0362-4331. Archived from the original on 24 May 2023. Retrieved 2023-06-22.

- ^ Wu, Yonghui; et al. (2016-09-01). "Google's Neural Machine Translation System: Bridging the Gap between Human and Machine Translation". arXiv:1609.08144 [cs.CL].

- ^ Devlin, Jacob; Chang, Ming-Wei; Lee, Kenton; Toutanova, Kristina (11 October 2018). "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding". arXiv:1810.04805v2 [cs.CL].

- ^ Dosovitskiy, Alexey; Beyer, Lucas; Kolesnikov, Alexander; Weissenborn, Dirk; Zhai, Xiaohua; Unterthiner, Thomas; Dehghani, Mostafa; Minderer, Matthias; Heigold, Georg; Gelly, Sylvain; Uszkoreit, Jakob (2021-06-03). "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale". arXiv:2010.11929 [cs.CV].

- ^ Gulati, Anmol; Qin, James; Chiu, Chung-Cheng; Parmar, Niki; Zhang, Yu; Yu, Jiahui; Han, Wei; Wang, Shibo; Zhang, Zhengdong; Wu, Yonghui; Pang, Ruoming (2020). "Conformer: Convolution-augmented Transformer for Speech Recognition". arXiv:2005.08100 [eess.AS].

- ^ Xiong, Ruibin; Yang, Yunchang; He, Di; Zheng, Kai; Zheng, Shuxin; Xing, Chen; Zhang, Huishuai; Lan, Yanyan; Wang, Liwei; Liu, Tie-Yan (2020-06-29). "On Layer Normalization in the Transformer Architecture". arXiv:2002.04745 [cs.LG].

- ^ "Improving language understanding with unsupervised learning". openai.com. June 11, 2018. Archived from the original on 2023-03-18. Retrieved 2023-03-18.

- ^ finetune-transformer-lm. OpenAI. June 11, 2018. Retrieved 2023-05-01.

- ^ "Papers with Code – A Decomposable Attention Model for Natural Language Inference". paperswithcode.com.

- ^ a b c d e f Vaswani, Ashish; Shazeer, Noam; Parmar, Niki; Uszkoreit, Jakob; Jones, Llion; Gomez, Aidan N.; Kaiser, Lukasz; Polosukhin, Illia (2017-06-12). "Attention Is All You Need". arXiv:1706.03762 [cs.CL].

- ^ Xiong, Ruibin; Yang, Yunchang; He, Di; Zheng, Kai; Zheng, Shuxin; Xing, Chen; Zhang, Huishuai; Lan, Yanyan; Wang, Liwei; Liu, Tie-Yan (2020-06-29). "On Layer Normalization in the Transformer Architecture". arXiv:2002.04745 [cs.LG].

- ^ Kariampuzha, William; Alyea, Gioconda; Qu, Sue; Sanjak, Jaleal; Mathé, Ewy; Sid, Eric; Chatelaine, Haley; Yadaw, Arjun; Xu, Yanji; Zhu, Qian (2023). "Precision information extraction for rare disease epidemiology at scale". Journal of Translational Medicine. 21 (1): 157. doi:10.1186/s12967-023-04011-y. PMC 9972634. PMID 36855134.

- ^ "Sequence Modeling with Neural Networks (Part 2): Attention Models". Indico. 2016-04-18. Archived from the original on 2020-10-21. Retrieved 2019-10-15.

- ^ a b c Alammar, Jay. "The Illustrated Transformer". jalammar.github.io. Archived from the original on 2020-10-18. Retrieved 2019-10-15.

- ^ Clark, Kevin; Khandelwal, Urvashi; Levy, Omer; Manning, Christopher D. (August 2019). "What Does BERT Look at? An Analysis of BERT's Attention". Proceedings of the 2019 ACL Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP. Florence, Italy: Association for Computational Linguistics: 276–286. doi:10.18653/v1/W19-4828. Archived from the original on 2020-10-21. Retrieved 2020-05-20.

- ^ LeCun, Yann (Apr 28, 2023). "A survey of LLMs with a practical guide and evolutionary tree". Twitter. Archived from the original on 23 Jun 2023. Retrieved 2023-06-23.

- ^ Shazeer, Noam (2020-02-01). "GLU Variants Improve Transformer". arXiv:2002.05202.

- ^ Dufter, Philipp; Schmitt, Martin; Schütze, Hinrich (2022-06-06). "Position Information in Transformers: An Overview". Computational Linguistics. 48 (3): 733–763. doi:10.1162/coli_a_00445. ISSN 0891-2017. S2CID 231986066.

- ^ Su, Jianlin; Lu, Yu; Pan, Shengfeng; Murtadha, Ahmed; Wen, Bo; Liu, Yunfeng (2021-04-01). "RoFormer: Enhanced Transformer with Rotary Position Embedding". arXiv:2104.09864.

- ^ Press, Ofir; Smith, Noah A.; Lewis, Mike (2021-08-01). "Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation". arXiv:2108.12409.

- ^ Dao, Tri; Fu, Dan; Ermon, Stefano; Rudra, Atri; Ré, Christopher (2022-12-06). "FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness". Advances in Neural Information Processing Systems. 35: 16344–16359. arXiv:2205.14135.

- ^ "Stanford CRFM". crfm.stanford.edu. Retrieved 2023-07-18.

- ^ "FlashAttention-2: Faster Attention with Better Parallelism and Work Partitioning". Princeton NLP. 2023-06-17. Retrieved 2023-07-18.

- ^ "Introducing Together AI Chief Scientist Tri Dao, as he releases FlashAttention-2 to speed up model training and inference". TOGETHER. Retrieved 2023-07-18.

- ^ Chowdhery, Aakanksha; Narang, Sharan; Devlin, Jacob; Bosma, Maarten; Mishra, Gaurav; Roberts, Adam; Barham, Paul; Chung, Hyung Won; Sutton, Charles; Gehrmann, Sebastian; Schuh, Parker; Shi, Kensen; Tsvyashchenko, Sasha; Maynez, Joshua; Rao, Abhishek (2022-04-01). "PaLM: Scaling Language Modeling with Pathways". arXiv:2204.02311.

- ^ a b Kitaev, Nikita; Kaiser, Łukasz; Levskaya, Anselm (2020). "Reformer: The Efficient Transformer". arXiv:2001.04451 [cs.LG].

- ^ "Constructing Transformers For Longer Sequences with Sparse Attention Methods". Google AI Blog. 25 March 2021. Archived from the original on 2021-09-18. Retrieved 2021-05-28.

- ^ "Tasks with Long Sequences – Chatbot". Coursera. Archived from the original on 2020-10-26. Retrieved 2020-10-22.

- ^ "Reformer: The Efficient Transformer". Google AI Blog. 16 January 2020. Archived from the original on 2020-10-22. Retrieved 2020-10-22.

- ^ Zhai, Shuangfei; Talbott, Walter; Srivastava, Nitish; Huang, Chen; Goh, Hanlin; Zhang, Ruixiang; Susskind, Josh (2021-09-21). "An Attention Free Transformer". arXiv:2105.14103 [cs.LG].

- ^ Tay, Yi; Dehghani, Mostafa; Abnar, Samira; Shen, Yikang; Bahri, Dara; Pham, Philip; Rao, Jinfeng; Yang, Liu; Ruder, Sebastian; Metzler, Donald (2020-11-08). "Long Range Arena: A Benchmark for Efficient Transformers". arXiv:2011.04006 [cs.LG].

- ^ Peng, Hao; Pappas, Nikolaos; Yogatama, Dani; Schwartz, Roy; Smith, Noah A.; Kong, Lingpeng (2021-03-19). "Random Feature Attention". arXiv:2103.02143 [cs.CL].

- ^ Choromanski, Krzysztof; Likhosherstov, Valerii; Dohan, David; Song, Xingyou; Gane, Andreea; Sarlos, Tamas; Hawkins, Peter; Davis, Jared; Belanger, David; Colwell, Lucy; Weller, Adrian (2020-09-30). "Masked Language Modeling for Proteins via Linearly Scalable Long-Context Transformers". arXiv:2006.03555 [cs.LG].

- ^ Dosovitskiy, Alexey; Beyer, Lucas; Kolesnikov, Alexander; Weissenborn, Dirk; Zhai, Xiaohua; Unterthiner, Thomas; Dehghani, Mostafa; Minderer, Matthias; Heigold, Georg; Gelly, Sylvain; Uszkoreit, Jakob (2021-06-03). "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale". arXiv:2010.11929 [cs.CV].

- ^ Gulati, Anmol; Qin, James; Chiu, Chung-Cheng; Parmar, Niki; Zhang, Yu; Yu, Jiahui; Han, Wei; Wang, Shibo; Zhang, Zhengdong; Wu, Yonghui; Pang, Ruoming (2020). "Conformer: Convolution-augmented Transformer for Speech Recognition". arXiv:2005.08100 [eess.AS].

- ^ Radford, Alec; Kim, Jong Wook; Xu, Tao; Brockman, Greg; McLeavey, Christine; Sutskever, Ilya (2022). "Robust Speech Recognition via Large-Scale Weak Supervision". arXiv:2212.04356 [eess.AS].

- ^ Jaegle, Andrew; Gimeno, Felix; Brock, Andrew; Zisserman, Andrew; Vinyals, Oriol; Carreira, Joao (2021-06-22). "Perceiver: General Perception with Iterative Attention". arXiv:2103.03206 [cs.CV].

- ^ Jaegle, Andrew; Borgeaud, Sebastian; Alayrac, Jean-Baptiste; Doersch, Carl; Ionescu, Catalin; Ding, David; Koppula, Skanda; Zoran, Daniel; Brock, Andrew; Shelhamer, Evan; Hénaff, Olivier (2021-08-02). "Perceiver IO: A General Architecture for Structured Inputs & Outputs". arXiv:2107.14795 [cs.LG].

- ^ Peebles, William; Xie, Saining (March 2, 2023). "Scalable Diffusion Models with Transformers". arXiv:2212.09748 [cs.CV].

- ^ "Google AI Unveils Muse, a New Text-to-Image Transformer Model". InfoQ.

- ^ "Using Diffusion Models to Create Superior NeRF Avatars". January 5, 2023.

- ^ Islam, Arham (November 14, 2022). "How Do DALL·E 2, Stable Diffusion, and Midjourney Work?".

Further reading

- Hubert Ramsauer et al. (2020), "Hopfield Networks is All You Need" Archived 2021-09-18 at the Wayback Machine, preprint submitted for ICLR 2021. arXiv:2008.02217; see also authors' blog Archived 2021-09-18 at the Wayback Machine. Discusses the effect of a transformer layer as equivalent to a Hopfield update, bringing the input closer to one of the fixed points (representable patterns) of a continuous-valued Hopfield network.

- Alexander Rush, The Annotated Transformer Archived 2021-09-22 at the Wayback Machine, Harvard NLP group, 3 April 2018

- Phuong, Mary; Hutter, Marcus (2022), Formal Algorithms for Transformers, arXiv:2207.09238

$$\text{MultiheadedAttention}(Q,K,V) = \text{Concat}_{i \in [\#\text{heads}]}\left(\text{Attention}(XW_i^Q, XW_i^K, XW_i^V)\right) W^O$$

$$[(x_1^{(1)}, x_1^{(2)}), (x_2^{(1)}, x_2^{(2)}), (x_3^{(1)}, x_3^{(2)}), \ldots]$$
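The list above groups coordinates into 2-D pairs, one per position, which is the form rotary position embedding (RoPE; Su et al., cited above) operates on. As a minimal sketch, assuming each position m rotates its pair by the angle mθ for a single hypothetical frequency θ (RoPE as published assigns a different frequency to each pair of a higher-dimensional vector), the following shows why rotation encodes relative position: the dot product of a rotated query and a rotated key depends only on the offset between their positions. The function names are illustrative.

```python
import math

def rotate(pair, angle):
    """Rotate a 2-D vector (a, b) counterclockwise by `angle` radians."""
    a, b = pair
    c, s = math.cos(angle), math.sin(angle)
    return (c * a - s * b, s * a + c * b)

def rope(pairs, theta):
    """Rotate the 2-D vector at position m (1-based, as in the list above) by m * theta."""
    return [rotate(p, m * theta) for m, p in enumerate(pairs, start=1)]

def dot2(u, v):
    return u[0] * v[0] + u[1] * v[1]

theta = 0.1  # hypothetical rotation frequency, chosen only for illustration
q, k = (0.5, -1.2), (0.7, 0.3)

def score(m, n):
    """Attention logit between a query at position m and a key at position n."""
    return dot2(rotate(q, m * theta), rotate(k, n * theta))

# Both position pairs are 3 apart, so the two logits agree up to rounding.
print(score(2, 5), score(10, 13))
```

The reason is that rotations compose: rotating q by mθ and k by nθ and taking the dot product equals the dot product of q with k rotated by (n − m)θ, so the absolute positions cancel.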

$$\text{MultiQueryAttention}(Q,K,V) = \text{Concat}_{i \in [\#\text{heads}]}\left(\text{Attention}(XW_i^Q, XW^K, XW^V)\right) W^O$$
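The MultiheadedAttention and MultiQueryAttention formulas above differ only in whether the key and value projections carry a head index i. The sketch below computes both in plain Python with list-of-lists matrices, an illustrative choice (practical implementations use tensor libraries and batched kernels). It assumes self-attention, so queries, keys, and values are all projections of one input X, and standard scaled dot-product attention softmax(QKᵀ/√d_k)V; the function names and toy sizes are hypothetical. In multi-query attention, K and V are computed once and shared by every head, so giving every head of the multi-head version the same key and value matrices reproduces it exactly.

```python
import math
import random

def matmul(a, b):
    """Multiply an n x m matrix by an m x p matrix (lists of rows)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def softmax_rows(a):
    out = []
    for row in a:
        m = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)

def concat_heads(heads):
    """Concatenate per-head outputs along the feature axis."""
    return [sum((h[i] for h in heads), []) for i in range(len(heads[0]))]

def multihead(x, w_q, w_k, w_v, w_o):
    """Each head i has its own W_i^Q, W_i^K and W_i^V."""
    heads = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
             for wq, wk, wv in zip(w_q, w_k, w_v)]
    return matmul(concat_heads(heads), w_o)

def multiquery(x, w_q, w_k, w_v, w_o):
    """Heads have separate W_i^Q but share a single W^K and W^V."""
    k, v = matmul(x, w_k), matmul(x, w_v)  # computed once, reused by all heads
    heads = [attention(matmul(x, wq), k, v) for wq in w_q]
    return matmul(concat_heads(heads), w_o)

def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# Hypothetical toy sizes: 3 tokens, model width 4, 2 heads of width 2.
rng = random.Random(0)
n_heads, d_model, d_head = 2, 4, 2
x = rand_matrix(3, d_model, rng)
w_q = [rand_matrix(d_model, d_head, rng) for _ in range(n_heads)]
w_k = rand_matrix(d_model, d_head, rng)
w_v = rand_matrix(d_model, d_head, rng)
w_o = rand_matrix(n_heads * d_head, d_model, rng)

out_mqa = multiquery(x, w_q, w_k, w_v, w_o)
# Sharing one key/value projection across all heads turns multi-head into multi-query.
out_mha = multihead(x, w_q, [w_k] * n_heads, [w_v] * n_heads, w_o)
```

The design trade-off is memory: with h heads, multi-query attention stores one set of key/value projections (and, during decoding, one key/value cache) instead of h, at the cost of less per-head flexibility.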

$$\varphi(x) = \frac{1}{\sqrt{D}}\left[\cos\langle w_1, x\rangle, \sin\langle w_1, x\rangle, \cdots, \cos\langle w_D, x\rangle, \sin\langle w_D, x\rangle\right]^T$$

$$\mathbb{E}[\langle \varphi(x), \varphi(y)\rangle] = e^{-\|x-y\|^2/2\sigma^2}$$

$$e^{\langle x,y\rangle/\sigma^2} = \mathbb{E}\left[\langle e^{\|x\|^2/2\sigma^2}\varphi(x), e^{\|y\|^2/2\sigma^2}\varphi(y)\rangle\right] \approx \langle e^{\|x\|^2/2\sigma^2}\varphi(x), e^{\|y\|^2/2\sigma^2}\varphi(y)\rangle$$
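The random-feature identities above can be checked numerically. The sketch below is a plain-Python Monte Carlo check, not an implementation of any particular linear-attention method. It assumes the frequencies w_1, ..., w_D are drawn independently from N(0, σ⁻²I), the choice under which the expectation of ⟨φ(x), φ(y)⟩ equals the Gaussian kernel e^{−‖x−y‖²/2σ²}; the vectors, σ, D, and function names are hypothetical values chosen for illustration.

```python
import math
import random

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def sample_frequencies(num_features, dim, sigma, rng):
    """Draw D frequency vectors w_i, assuming w_i ~ N(0, sigma^-2 I)."""
    return [[rng.gauss(0.0, 1.0 / sigma) for _ in range(dim)] for _ in range(num_features)]

def phi(x, ws):
    """Feature map (1/sqrt(D)) [cos<w_1,x>, sin<w_1,x>, ..., cos<w_D,x>, sin<w_D,x>]."""
    scale = 1.0 / math.sqrt(len(ws))
    feats = []
    for w in ws:
        t = dot(w, x)
        feats.extend([scale * math.cos(t), scale * math.sin(t)])
    return feats

def approx_gaussian_kernel(x, y, ws):
    """Estimate of exp(-||x - y||^2 / (2 sigma^2)) as <phi(x), phi(y)>."""
    return dot(phi(x, ws), phi(y, ws))

def approx_exp_kernel(x, y, ws, sigma):
    """Estimate of exp(<x, y> / sigma^2): rescale each feature vector by exp(||.||^2 / (2 sigma^2))."""
    sx = math.exp(dot(x, x) / (2 * sigma ** 2))
    sy = math.exp(dot(y, y) / (2 * sigma ** 2))
    return dot([sx * f for f in phi(x, ws)], [sy * f for f in phi(y, ws)])

rng = random.Random(42)
sigma = 1.0
x = [0.3, -0.2, 0.5]
y = [0.1, 0.4, -0.3]
ws = sample_frequencies(20000, len(x), sigma, rng)

exact_gauss = math.exp(-sum((a - b) ** 2 for a, b in zip(x, y)) / (2 * sigma ** 2))
exact_exp = math.exp(dot(x, y) / sigma ** 2)
print(exact_gauss, approx_gaussian_kernel(x, y, ws))
print(exact_exp, approx_exp_kernel(x, y, ws, sigma))
```

Because cos² + sin² = 1, ⟨φ(x), φ(x)⟩ is exactly 1 for every x; off the diagonal the Monte Carlo error shrinks roughly as 1/√D. The rescaling step works because e^{‖x‖²/2σ²} · e^{‖y‖²/2σ²} · e^{−‖x−y‖²/2σ²} = e^{⟨x,y⟩/σ²}, and since the estimate is an inner product of a vector depending only on x with one depending only on y, a sum over many keys can be accumulated once and reused for every query.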