Wikipedia:Reference desk/Science

Welcome to the science section of the Wikipedia reference desk.

Main page: Help searching Wikipedia

How can I get my question answered?

- Select the section of the desk that best fits the general topic of your question (see the navigation column to the right).

- Post your question to only one section, providing a short header that gives the topic of your question.

- Type '~~~~' (that is, four tilde characters) at the end – this signs and dates your contribution so we know who wrote what and when.

- Don't post personal contact information – it will be removed. Any answers will be provided here.

- Please be as specific as possible, and include all relevant context – the usefulness of answers may depend on the context.

- Note:

- We don't answer (and may remove) questions that require medical diagnosis or legal advice.

- We don't answer requests for opinions, predictions or debate.

- We don't do your homework for you, though we'll help you past the stuck point.

- We don't conduct original research or provide a free source of ideas, but we'll help you find information you need.

How do I answer a question?

Main page: Wikipedia:Reference desk/Guidelines

- The best answers address the question directly, and back up facts with wikilinks and links to sources. Do not edit others' comments and do not give any medical or legal advice.

January 14

Electric motors and hybrid vehicles

Hybrid in the sense of a flying car or a car boat, something along those lines. If you were to make these things using electric motors, do you need separate electric motors to drive the wheels and the propeller/jets? Or could you in theory use a single electric motor to provide power to both the wheels and the propellers/jets? ScienceApe (talk) 04:32, 14 January 2016 (UTC)

- Yes you could use just one motor and a complex transmission, or you could devote one motor to each wheel/prop. If your design never uses both forms of propulsion at once then you may come in cheaper/lighter with a single motor solution. Greglocock (talk) 05:13, 14 January 2016 (UTC)

- "propeller/jets" - probably not jets. The hard part for small planes is payload weight is traded for fuel. --DHeyward (talk) 05:35, 14 January 2016 (UTC)

- I took "jets" to mean the boat kind, as in a Jet Ski, not the airplane kind. StuRat (talk) 05:45, 14 January 2016 (UTC)

- Terrafugia has been pitching their "Transition" aircraft, which uses one engine: "Running on premium unleaded automotive gasoline, the same engine powers the propeller in flight or the rear wheels on the ground." Their proposed TF-X will have multiple electric engines. They just need somebody to design it, build it, program it, test it, operate it, fund it, ... Nimur (talk) 17:33, 14 January 2016 (UTC)

- For the flying car, weight is critical, so you really don't want an electric motor to start with, because they, along with the required batteries, have a lower power-to-weight ratio than gasoline, jet fuel, etc. Also, a car can't be designed with the same lightweight components you use in a plane, or it would be too fragile for the road. So, an electric-powered flying car is quite impractical (it might be possible, but that's not the same as practical).

- For the amphibious vehicle, electric power would be a bit more practical, as power-to-weight ratio is less of a concern. There are basically two types I am aware of, hovercraft and ducks. The power-to-weight ratio would be less of a concern in the second type. StuRat (talk) 05:42, 14 January 2016 (UTC)

- Electric motors can be split up very easily and effectively into multiple smaller motors instead of one big one, because they are very simple constructions in essence. Combustion engines, on the other hand, are far more complicated because they need many additional parts, such as an injection system, a starter, and often special parts like a choke for cold starts. Thus it's much harder to replace one big combustion engine with multiple small ones.

- In addition, it's much easier and more effective to just lay two electric cables than to add a transmission at every location where you want a central motor's power to be split up. That's why, for example, each of the six wheels of the Curiosity (rover) has its own motor. --Kharon (talk) 00:18, 15 January 2016 (UTC)

- There's also quite a bonus in torque if you drive the wheels and propeller of that vehicle directly with electric motors instead of using a transmission (where you get friction losses, perhaps even losses in torque owing to the gearing or teleflex cables used to transmit power).

- Speed and torque can both be controlled at each motor by pulse-width modulation circuitry, which is cheaper and simpler than a physical transmission. If the point of the exercise is to get more motion per watt, separate electric motors driving the wheels and the propeller could well be lighter than a transmission doing the same thing from a single motor. loupgarous (talk) 19:58, 15 January 2016 (UTC)
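A minimal sketch of the averaging idea behind PWM speed control, assuming an ideal switch and a motor whose winding inductance and inertia smooth the pulses so that it responds roughly to the average voltage. The 12 V supply, the sample counts and the function names are illustrative assumptions, not taken from the thread.

```python
def pwm_waveform(supply_v, duty, steps_per_period=100, periods=10):
    """Sample an ideal PWM signal: high for a `duty` fraction of each period, low otherwise."""
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty cycle must be between 0 and 1")
    on_steps = round(duty * steps_per_period)
    one_period = [supply_v] * on_steps + [0.0] * (steps_per_period - on_steps)
    return one_period * periods


def average_voltage(samples):
    """Mean of the sampled waveform, i.e. the effective DC level the motor responds to."""
    return sum(samples) / len(samples)


# Idealized model: the average voltage scales linearly with the duty cycle.
for duty in (0.25, 0.5, 0.75):
    print(f"duty {duty:.2f}: {average_voltage(pwm_waveform(12.0, duty)):.2f} V")
```

In this idealized model the average voltage is simply the duty cycle times the supply voltage; a real controller also has to deal with switching losses and current ripple.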

- An electric drive does not need a transmission or gearbox. Electric motors have a high and nearly constant efficiency. Batteries cannot store as much energy as fuel does; weight and volume are limiting factors for traction batteries in a vehicle. Combustion engines have a brake-specific fuel consumption that varies with load and rotational speed, and lower efficiency than electric motors. A transmission or gearbox converts the combustion engine's rotational speed and torque so as to keep it operating at the point of lowest possible fuel consumption. There are several variants of hybrid vehicles, and patents protect the use of some solutions. The one used in Japan connects two motor-generators (electric motors which can also be used as generators) through a planetary gearbox, using it to sum or subtract torque between the motors and the drive axle. The combustion engine is attached to the smaller of the motor-generators. A clutch is installed only to protect the gears. The combustion engine just adds torque to the system; this torque is used to charge the traction battery and to drive the vehicle. The ratio is controlled by the two motor-generators. Charging the battery from a generator loses energy according to the efficiency of each converter in the chain, and batteries must be charged at a higher voltage than their output voltage. Driving the vehicle directly from the combustion engine is more efficient when the combustion engine is operated in its optimal mode. The motor-generators also maintain the ratio between vehicle speed and engine operating mode. This video shows it. The combustion engine speeds up to the speed of its maximum power output, not to its highest rpm. When the accelerator is floored, the vehicle keeps speeding up while the combustion engine runs at constant rpm, which can make a garage mechanic think at first that something is wrong with the clutch; that is not the case. --Hans Haase (有问题吗) 01:52, 16 January 2016 (UTC)
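The speed relation of a simple planetary gear set (the Willis equation, S·ω_sun + R·ω_ring = (S + R)·ω_carrier, with S and R the sun and ring tooth counts) shows how the engine can hold a constant rpm while the vehicle accelerates. This sketch assumes the layout usually described for this type of power-split hybrid (engine on the carrier, the smaller motor-generator on the sun gear, the wheels and the larger motor-generator on the ring gear) and uses illustrative tooth counts of 30 and 78; none of these numbers come from the thread.

```python
SUN_TEETH = 30   # illustrative tooth counts (assumed, not from the thread)
RING_TEETH = 78


def sun_speed(carrier_rpm, ring_rpm, s=SUN_TEETH, r=RING_TEETH):
    """Solve the Willis equation s*w_sun + r*w_ring = (s + r)*w_carrier for w_sun."""
    return ((s + r) * carrier_rpm - r * ring_rpm) / s


# Engine on the carrier held at 2000 rpm while the ring gear (road speed) rises.
for ring_rpm in (0, 1000, 2000, 3000):
    print(f"ring {ring_rpm:5d} rpm -> sun {sun_speed(2000, ring_rpm):8.0f} rpm")

# Engine stopped (carrier at 0 rpm): the sun gear must spin backwards, faster as speed rises.
print(f"engine off, ring 3000 rpm -> sun {sun_speed(0, 3000):8.0f} rpm")
```

With the engine fixed at 2000 rpm, the sun-gear motor-generator slows down and eventually reverses as the ring gear (road speed) rises, so the ratio is set electrically rather than by a clutch. With the engine stopped, the sun gear has to spin backwards at R/S times the ring speed, which may be related to the remark later in the thread that the engine does not switch off above about 50 km/h.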

- Uhh just so you know, that video is from a video game. You can tell by the "grass". ScienceApe (talk) 20:10, 16 January 2016 (UTC)

- Sorry ScienceApe, indeed, great simulation. Here are some from a real camera: [1][2][3][4]. This violent driving [5] (which I do not support) shows how the combustion engine reduces its rotational speed when it is not needed. Above 50 km/h (~30 mph) it never turns off, due to high rotational speeds in the planetary gear. --Hans Haase (有问题吗) 12:51, 18 January 2016 (UTC)

- Similar to the Japanese patent using motor-generators on a planetary gear, a German patent uses a hydraulic drive through a planetary gear in machines for food production [6]. It also shows how the Japanese hybrid operates in reverse gear with an empty battery or a cold combustion engine. --Hans Haase (有问题吗) 12:16, 16 January 2016 (UTC)

History of puberty blockers

When was the first one developed? When did they become widely available? -- Brainy J ~✿~ (talk) 04:44, 14 January 2016 (UTC)

- This article from 2006 [7] is the earliest ref in our current article. It cites this 1983 article [8] describing a different treatment of precocious puberty in 1983. I do not know if that was the first puberty blocker, but it's a start. If you need access to these articles, ask at WP:REX. Sorting through the citations of the 1983 paper and our precocious puberty article (or even just reading them carefully, which I did not do) may answer your questions more conclusively. SemanticMantis (talk) 16:17, 14 January 2016 (UTC)

Platinum electrode

What electrochemical cell voltage would be considered a safe upper limit, below which oxidation, and hence dissolution, of the platinum electrode is negligible? I'm running an electrowinning setup using a complex leachate containing multiple components. I've been using 4.0 V, but that is a complete guess. Plasmic Physics (talk) 10:53, 14 January 2016 (UTC)

- Standard electrode potential (data page) indicates the standard reduction potential for Pt2+ --> Pt is +1.188 volts. --Jayron32 16:08, 14 January 2016 (UTC)

- I don't think it works that way, besides, I've had no obvious degradation of the anode. Plasmic Physics (talk) 20:37, 14 January 2016 (UTC)
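For reference, the standard potential quoted above applies only at unit activity; the Nernst equation shifts it with the Pt2+ activity. A minimal sketch, assuming 25 °C, ideal behaviour and no complexation of Pt2+ by other components of the leachate. Note that this gives an electrode potential versus the standard hydrogen electrode, not the voltage across the whole cell, which also includes the counter-electrode reaction, overpotentials and resistive losses, so it cannot be compared directly with a 4.0 V cell voltage.

```python
import math

R = 8.314462618   # J/(mol*K), molar gas constant
F = 96485.33212   # C/mol, Faraday constant


def nernst_potential(e_standard, n_electrons, activity_ion, temperature_k=298.15):
    """Equilibrium potential (V vs SHE) for M^n+ + n e- -> M(s), solid activity taken as 1."""
    return e_standard + (R * temperature_k) / (n_electrons * F) * math.log(activity_ion)


E_STANDARD_PT = 1.188  # V, Pt2+/Pt standard reduction potential quoted above
for activity in (1.0, 1e-3, 1e-6):
    print(f"a(Pt2+) = {activity:g}: E = {nernst_potential(E_STANDARD_PT, 2, activity):.3f} V")
```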

What's the difference between transvestites and transsexuals?

When you see one can you tell, this is a transvestite but not transsexual? Are transvestites just part-time transsexuals?--Scicurious (talk) 13:57, 14 January 2016 (UTC)

- We do have articles transvestite and transsexual; the former could use some work, though. It sounds from that as if "transvestite" was coined not that long ago to mean cross-dressing, but its creator used it to refer to longer-term transsexuality? Wnt (talk) 14:35, 14 January 2016 (UTC)

- I'd say transvestism is about behavior and transsexualism about gender identity. --Llaanngg (talk) 15:27, 14 January 2016 (UTC)

- When you just see anyone, you can't necessarily tell that much about them. Sexual orientation and gender identity are not inherently visible. Note also that trans people can be homosexual or heterosexual or bisexual or even asexual. Gender identity and sexual orientation and other factors combine to a lot of different sorts of people. You may also enjoy background reading on notions of gender and sex, as well as cisgender, transgender, genderqueer, or attraction to transgender people.

- Some people choose to present a visual image that helps signal their status to others, while other people do not. The general notion of this in biology is signalling theory. Depending on where you live, you may have seen many transsexual or transgender people and not known it, e.g. passing (gender).

- To answer your question directly: if you see someone who you think is cross-dressing or transsexual, the only way to know for sure if they identify as either is to ask them. But that is very rude. If I were to meet you as a stranger in public, I wouldn't ask who you like to fuck or why you wear the clothes you wear, and I'd think you'd prefer it that way :) In the USA at least, the polite thing to do is to reserve questions of this nature for people with whom we are already closely familiar. SemanticMantis (talk) 15:59, 14 January 2016 (UTC)

- Our article on Transvestism says that it is, regardless of its underlying motivation, a behavior, dressing as a member of the opposite sex. Our article Transsexual defines the term as referring to those who identify with a gender inconsistent with their physiological sex, and wish to physically change to the gender with which they identify.

- How to tell the two apart? You can't. Transsexuals at some point often are transvestites, especially after sex reassignment therapy or other medical interventions aimed at changing the patient's ostensible sex to the one the patient identifies with.

- Transvestites are not "part-time" transsexuals. A transsexual is always a transsexual, never "part-time." Now, the reverse is possible - a transsexual may cross-dress only part of the time. Or, like the movie director Ed Wood, a transvestite may be heterosexual but have comfort issues which compel him or her to dress as the opposite sex. It's worth mentioning that women in Western countries often wear male attire without being called "transvestites." In some other countries today, this is not only transvestism, but culturally deprecated and even illegal. loupgarous (talk) 21:54, 14 January 2016 (UTC)

- I wouldn't say that transsexuals are often transvestites after sex reassignment. Quite the contrary: they are physically, mentally and legally members of their target sex, and will very probably dress like typical members of that sex.

- I also would not say that Western women wear male attire. Attire once worn by men has become unisex, with no gender marking. Women in trousers, boxer shorts, shirts or suits are all wearing such unisex clothes. Denidi (talk) 01:55, 15 January 2016 (UTC)

- Well, pantaloons weren't always considered unisex - see women and trousers. Which brings us to an important point that since the definition is cultural, it changes, and there may be varying motives, pragmatism ranking very high on the list. Even non-transvestites might be affected when the other sex clothing is simply unpleasant. Wnt (talk) 17:10, 15 January 2016 (UTC)

popping zits

In nature are zits supposed to be popped or left alone? Which is most beneficial from an evolutionary standpoint? — Preceding unsigned comment added by 62.37.237.15 (talk) 20:15, 14 January 2016 (UTC)

- Check out danger triangle of the face. It is rare, but popping acne or furuncles in that area can lead to infections that spread to other areas, causing cavernous sinus thrombosis, or to the brain, causing meningitis. SemanticMantis (talk) 20:41, 14 January 2016 (UTC)

- This is definitely one of those "No Medical Advice" questions. We aren't allowed to offer advice on diagnosis, prognosis or treatment of medical conditions...so we can't advise you on how to treat pimples - even if the treatment is something as seemingly mundane as popping/not-popping them. Beware of arguments from an evolutionary standpoint - evolution may not care whether you wind up with smooth skin or something that looks like the surface of the moon. These things tend to be cultural in nature. SteveBaker (talk) 20:57, 14 January 2016 (UTC)

- I'm not asking for medical advice, I'm just asking what nature intended. How is this any more medical advice than asking if nature intended broken bones to heal or not? I don't have zits, I'm not asking this for myself. I'm asking generally, as a scientific question. 62.37.237.15 (talk) 21:08, 14 January 2016 (UTC)

- Or to put it a different way; have the millions of years of human evolution favored popping or not popping zits, from a purely evolutionary (therefore survival) standpoint. No cultural issues needed. 62.37.237.15 (talk) 21:12, 14 January 2016 (UTC)

- Nature does not intend anything.

- And apparently both zit-poppers and zit-non-poppers managed to survive, so evolution did not make up her mind about this. But as said above, this is a bad perspective: you can be in a social environment that strongly prefers one or the other, in the same way as people might have preferences for deformed or normal feet, tanned or fair skin, thin or fat bodies. --Scicurious (talk) 21:52, 14 January 2016 (UTC)

- Quick and dirty answer? Pop your zits in the presence of a potential mate, and your chances of passing your genes on through sex drop precipitously. This answers your question regarding evolution. Popping zits would seem to reduce the popper's chances of transmitting his or her genes to future generations. That being said, previous posters' remarks about "zit-poppers and zit-non-poppers" managing to survive imply a genetic basis for the behavior which hasn't, as far as I'm aware, been investigated, much less proven. It's just a gross habit that would only get you laid if you found someone who thought it was attractive and was aroused somehow by it. Think that's ever going to happen? loupgarous (talk) 22:03, 14 January 2016 (UTC)

- lol, I'm pretty sure that I could pop my zits (I rarely have them, yay yay for testosterone blockers!) in front of most of my mates (male or female) and they wouldn't really care. My ex-girlfriend didn't really have zits either, but I'm sure that she would have popped them in front of me, and we would still have great sex afterwards. Yanping Nora Soong (talk) 22:21, 14 January 2016 (UTC)

- De gustibus non est disputandum (there's no accounting for taste)... loupgarous (talk) 23:50, 14 January 2016 (UTC)

- Regarding the claim that "Pop your zits in the presence of a potential mate, and your chances of passing your genes on through sex drop precipitously. [...] Popping zits would seem to reduce the popper's chances of transmitting his or her genes to future generations": the second statement does not follow from the first, because the smart zit-popper doesn't pop zits in the presence of a potential mate, but before meeting a potential mate, thereby accomplishing the zit-popping purpose of minimizing the zit's appearance, thus increasing the popper's chance of transmitting genes. —SeekingAnswers (reply) 05:26, 16 January 2016 (UTC)

- From an evolutionary standpoint, nothing is "supposed" to do anything. Nature is not a conscious agent. The only question that makes sense is, "How does this behavior affect the fitness of the organism?" I suspect popping pimples might slightly decrease an organism's fitness, because it can lead to infection, but the risk is not that great, so I imagine the overall selection pressure is pretty tiny. --71.119.131.184 (talk) 22:16, 14 January 2016 (UTC)

- I doubt that popping zits can lead to a non-treatable infection nowadays. Scicurious (talk) 22:32, 14 January 2016 (UTC)

- This is a ref desk, what you doubt or don't doubt is completely irrelevant. Vespine (talk) 23:23, 14 January 2016 (UTC)

- That's just an expression. I could have said: I don't have any ref at hand right now, but I don't believe popping zits can lead to a non-treatable infection nowadays. Scicurious (talk) 23:40, 14 January 2016 (UTC)

- That's exactly the problem: not having any references, just an opinion. A reference like Before You Pop a Pimple (www.webmd.com/skin-problems-and-treatments/teen-acne-13/pop-a-zit) from WebMD, a website I normally trust, contradicts your assumptions. Denidi (talk) 23:54, 14 January 2016 (UTC)

- I have myself on occasion offered an "I doubt something", but ONLY if the thread had not already had some relevant replies leading towards the opposite conclusion AND, even more critically, the question was not related to something that could have serious negative health repercussions. Vespine (talk) 00:51, 15 January 2016 (UTC)

Eka-, dvi-, tri-, and chatur-

"Eka-" is sometimes used as a prefix meaning "one row below in the periodic table". For example, "ununtrium" is sometimes called "eka-thallium". Have "dvi-", "tri-", and "chatur-" been used in similar ways? These are the corresponding prefixes for 2, 3, and 4. Georgia guy (talk) 22:07, 14 January 2016 (UTC)

- See Mendeleev's predicted elements. At least rhenium was called dvi-manganese (because eka-manganese, i.e. technetium, wasn't known either). Icek~enwiki (talk) 00:22, 15 January 2016 (UTC)

- There is and was no reason to use the higher numbers when the lower number could be used; hence you might see element 115 called eka-bismuth today, but certainly not dvi-antimony. The only cases where they were ever used AFAIK was exactly as Icek suggests: e.g. dvi-manganese, since eka-manganese was not known either. Given the rows of blanks in Mendeleyev's 1871 table where the rare earths should go, though, it's conceivable that rhenium would have become tri-manganese instead until the rare earths were separated out of the main body of the 8-group table. But there is no reason at all AFAICS to go to "chatur-" and beyond. Double sharp (talk) 08:03, 16 January 2016 (UTC)
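The counting rule behind these names can be sketched as a lookup of the Sanskrit numeral prefixes (eka = 1, dvi = 2, tri = 3, chatur = 4) against an element's row offset below a known element in the same group. The group 7 column and the function name are illustrative; only eka-manganese (technetium) and dvi-manganese (rhenium) are the historical names mentioned above.

```python
# Sanskrit numeral prefixes used in Mendeleev-style provisional element names.
PREFIXES = {1: "eka", 2: "dvi", 3: "tri", 4: "chatur"}

# Group 7 of the periodic table, top to bottom (illustrative column for this example).
GROUP_7 = ["manganese", "technetium", "rhenium", "bohrium"]


def provisional_name(column, reference, target):
    """Name `target` by its row offset below `reference` in the same group."""
    rows_below = column.index(target) - column.index(reference)
    if rows_below not in PREFIXES:
        raise ValueError("target must be 1 to 4 rows below the reference element")
    return f"{PREFIXES[rows_below]}-{reference}"


print(provisional_name(GROUP_7, "manganese", "technetium"))  # eka-manganese
print(provisional_name(GROUP_7, "manganese", "rhenium"))     # dvi-manganese
```

In practice, as noted above, the name is taken relative to the nearest known element higher in the group, so the higher prefixes only come into play when the intervening element was also unknown.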

solubility of zwitterions in organic or lipophilic solvents

What are the guidelines for whether a zwitterion (especially an alpha amino acid -- but not necessarily one of the 20) will be soluble in solvents like dichloromethane or cyclohexanol? (Chloroform or ether is also fine, I guess, since solubilities in them are tested more often.) For example, L-DOPA at its isoelectric point is weakly soluble in water, but insoluble in ether or chloroform. Which puzzles me -- how are you supposed to do acid-base extraction of an amino acid into an organic solvent if an amino acid at its pI is weakly soluble in water but even less soluble in an organic phase? Would adding a phase transfer catalyst like tetrabutylammonium bromide increase the solubility of AAs in organic solvents at the pI -- or would it reverse as soon as you tried to separate the two phases? Yanping Nora Soong (talk) 22:27, 14 January 2016 (UTC)

- This article refers to the use of lanthanide complexes to not only extract zwitterionic amino acids, but to do so by chirality of the desired amino acid.

- And this article discusses reverse micellar extraction of zwitterionic amino acids.

- The Google search term I used to locate these articles is "extraction of amino acids zwitterions," and many articles came up which you may want to look at for more information. loupgarous (talk) 00:05, 15 January 2016 (UTC)

- Thanks! That's a more useful keyword. I'm not sure if I'm understanding these "ion exchange" methods correctly (especially since I don't have any ion exchange resins at the moment). I'm looking at patents such as this one. [9] Does this patent imply I can dissolve zwitterionic amino acids in most weakly polar or non-polar organic solvents by dissolving 2M (or 1% w/w) quaternary ammonium salts (like TBAB) into the organic solvent? Then I add the sodium salt of my amino acid (whether in the aqueous layer or as a dry salt), sodium bromide precipitates, and I get a solution of the tetrabutylammonium salt of my amino acid in cyclohexanol or dichloromethane? I'm trying to make sure I'm understanding these procedures correctly. Yanping Nora Soong (talk) 01:14, 15 January 2016 (UTC)

- The patent specifies "a substantially immiscible extractant phase comprising an amine and an acid, both of which are substantially water immiscible in both free and salt forms." Now, you're proposing (if I understand you correctly), to use quaternary ammonium salts, which are generally cationic detergents and very miscible in water. So you've already departed from the procedure defined in the patent, I think.

- I'd encourage you to read the sources the Google search "extraction of amino acids zwitterions" turns up for ideas that fit your specific requirements more closely. loupgarous (talk) 05:29, 15 January 2016 (UTC)

- In the "prior art" section they discussed the use of trioctylmethylammonium chloride. There is also mention of others using Aliquat 336, which AFAIK is often interchangeable with TBAB for phase transfer catalysis reactions. I have tetrabutylammonium bromide (TBAB). AFAIK, TBAB has a high critical micelle concentration, so it's pretty inaccurate to call it a detergent, and that is also why it's favored for PTC. They actually mention the use of quats in that patent (as well as their organophosphate anionic counterparts), and I think they actually use them; they just combine quats, lipophilic anions and other surfactant stabilizers in the same organic phase. Yanping Nora Soong (talk) 08:48, 15 January 2016 (UTC)
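On the pI question in the original post: a minimal sketch of the species distribution for an amino acid with only two ionizable groups, assuming commonly tabulated pKa values for glycine (2.34 and 9.60) and treating the singly protonated form as the zwitterion, which is the predominant tautomer for simple amino acids in water. At the pI the molecule is almost entirely the doubly charged zwitterion rather than an uncharged species, which fits its poor solubility in non-polar solvents. L-DOPA has additional ionizable catechol groups, so this two-pKa model does not apply to it directly.

```python
def species_fractions(ph, pka1, pka2):
    """Fractions of cation (H2A+), zwitterion (HA+-) and anion (A-) for a two-pKa amino acid."""
    h = 10.0 ** (-ph)
    ka1, ka2 = 10.0 ** (-pka1), 10.0 ** (-pka2)
    denom = h * h + h * ka1 + ka1 * ka2
    return h * h / denom, h * ka1 / denom, ka1 * ka2 / denom


def isoelectric_point(pka1, pka2):
    """pI of an amino acid with no ionizable side chain: the mean of its two pKa values."""
    return (pka1 + pka2) / 2


PKA_COOH, PKA_NH3 = 2.34, 9.60  # glycine, commonly tabulated values (assumed here)
glycine_pi = isoelectric_point(PKA_COOH, PKA_NH3)
for ph in (1.0, glycine_pi, 12.0):
    cation, zwitterion, anion = species_fractions(ph, PKA_COOH, PKA_NH3)
    print(f"pH {ph:5.2f}: cation {cation:.3f}  zwitterion {zwitterion:.3f}  anion {anion:.3f}")
```

This only describes acid-base speciation in water; it says nothing about ion pairing with quaternary ammonium salts in the organic phase.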

Do amino acids sometimes combine in water?

I mean without all the hardware that a cell has, that allows to combine them into proteins, do amino acids sometimes combine by chance just by bumping into each other if you shake the water they float in for long enough? --Lgriot (talk) 22:32, 14 January 2016 (UTC)

- If you ask whether that happens at all: Definitely, even if you don't shake. The molecules are in thermal motion anyway. And conversely, peptides sometimes break apart (i.e. are hydrolyzed) without enzymes catalyzing this reaction.

- Maybe more interesting is the question of what fraction of amino acid molecules can be expected to be free amino acids and which fraction will be in peptides. See chemical equilibrium for a general introduction. For a dilute solution of amino acids, the equilibrium state has far more free amino acid molecules than peptide-bound ones. In a very concentrated solution, water for hydrolysis is not so abundant, and you'll find a higher fraction of peptides at the equilibrium. Icek~enwiki (talk) 00:31, 15 January 2016 (UTC)
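The concentration dependence described above can be illustrated with the simplest mass-action model, 2 A ⇌ P (dipeptide) with K = [P]/[A]². This is a sketch under stated assumptions: K is an arbitrary hypothetical value, only dipeptides are considered, and water activity is folded into K and treated as constant, so the model captures mass action but not the extra push toward peptides from water becoming scarce in very concentrated solutions.

```python
import math


def fraction_in_dipeptide(total_conc, k_assoc):
    """Fraction of amino acid bound as dipeptide for 2 A <=> P with K = [P]/[A]^2.

    Mass balance: total = [A] + 2[P] = a + 2*K*a^2. Solving for the free
    concentration a and rearranging gives the bound fraction 2[P]/total as
    8Kc / (1 + sqrt(1 + 8Kc))^2, a form that avoids cancellation when Kc is small.
    """
    x = 8.0 * k_assoc * total_conc
    return x / (1.0 + math.sqrt(1.0 + x)) ** 2


K_HYPOTHETICAL = 1e-3  # 1/M, arbitrary illustrative value, not a measured constant
for conc in (0.001, 0.1, 1.0):
    print(f"{conc:g} M: {fraction_in_dipeptide(conc, K_HYPOTHETICAL):.2e} bound")
```

The bound fraction grows roughly in proportion to concentration (about 2Kc while it is small), which is why dilute solutions are dominated by free amino acids.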

- Short answer: they don't. Peptide bond formation is much harder than simple Fischer esterification, because if you try to catalyze the reaction by deprotonating the amino group (activating the nucleophile), you deactivate the carboxylic acid as an electrophile (it becomes a carboxylate), and if you try to catalyze the reaction with acid (activating the carboxylic acid group), you deactivate the nucleophile (the amino group gets protonated, losing its lone pair). You can create an amide or peptide bond by heating your reagents to 250 °C, but these harsh conditions often cause a lot of side reactions, with a risk of degradation or oxidation of the side chain. Yanping Nora Soong (talk) 00:57, 15 January 2016 (UTC)

- Selective peptide coupling is an expensive task and an entire industry of its own: see peptide synthesis. Yanping Nora Soong (talk) 00:59, 15 January 2016 (UTC)

- I'm reminded of the Urey-Miller experiment. If there were a simple way to string those amino acids into peptides, that would be very exciting, but it's not really so. Now by contrast, I remember hearing that polymerizing hydrogen cyanide gives rise to actual polypeptides (once water is added) - here's a source [10] but I'm not sure it's the definitive one. Now as peptide bond says, water will spontaneously hydrolyze the bond on a very slow time scale, liberating substantial energy; and this implies that the reverse of the reaction does occur, but because of the energy difference, it would occur in a very, very small proportion of the total molecules (I'm afraid I'd have to reread Gibbs free energy, at least, to try to guesstimate what proportion that is.... but AFAIR 8 kJ/mol is a biological way to say "fuggedaboudit") Wnt (talk) 01:26, 15 January 2016 (UTC)

- Polyaspartic acid can be made by simple pyrolysis of aspartic acid followed by hydrolysis (an easy undergrad lab experiment). The pathway for this polypeptide is not general for all the encoded amino acids, requiring at least some aspartic acid- or glutamic acid-like component (see also [11], which proposes this sort of reaction as relevant to prebiotic processes). DMacks (talk) 04:29, 15 January 2016 (UTC)

- Remember, thermodynamic energy release is different from the kinetic activation barrier.

- But if ΔG = -RT ln K = -8 kJ/mol, then K = e^(-ΔG/RT) = e^(8000 J/mol / (8.314 J/(mol·K) × 298 K)) = e^3.23 ≈ 25.

- So the equilibrium constant for hydrolysis is around 25, and the equilibrium constant for the reverse reaction would thus be around 1/25 ≈ 0.04. This is actually kind of impressive, but the activation energy barrier is much higher: I would guesstimate around 20-30 kcal/mol, or about 84-126 kJ/mol. Yanping Nora Soong (talk) 08:56, 15 January 2016 (UTC)
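The arithmetic above can be checked with a few lines, using ΔG = -8 kJ/mol for hydrolysis as recalled earlier in the thread (not a verified value) and T = 298 K. This only gives the thermodynamic equilibrium constant; as noted, the rate is governed separately by the activation barrier.

```python
import math

R = 8.314462618   # J/(mol*K), molar gas constant
KJ_PER_KCAL = 4.184


def equilibrium_constant(delta_g_j_per_mol, temperature_k=298.0):
    """Dimensionless K = exp(-dG/RT) for a standard Gibbs energy change dG in J/mol."""
    return math.exp(-delta_g_j_per_mol / (R * temperature_k))


k_hydrolysis = equilibrium_constant(-8000.0)  # dG = -8 kJ/mol, the figure recalled above
k_condensation = 1.0 / k_hydrolysis           # the reverse reaction
print(f"K(hydrolysis) ~ {k_hydrolysis:.1f}, K(condensation) ~ {k_condensation:.3f}")
print("barrier estimate in kJ/mol:", [round(x * KJ_PER_KCAL) for x in (20, 30)])
```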

- If you want to understand the equilibrium dynamics of solutions of amino acids, perhaps our article on Michaelis-Menten kinetics would be helpful. loupgarous (talk) 20:31, 15 January 2016 (UTC)

- This was a quick and dirty calculation. I'm well-aware of MMK. Of course, polymerization beyond forming more than just 1 peptide bond would be a different story... Yanping Nora Soong (talk) 00:57, 16 January 2016 (UTC)

- The OP said "long enough", which is a pretty broad license to ignore kinetics altogether for purposes of the answer. Wnt (talk) 03:02, 17 January 2016 (UTC)

January 15

Is there such as "aspiration center" in nerve system?

(I'm not surely back) Like sushi 49.135.2.215 (talk) 00:48, 15 January 2016 (UTC)

- I don't quite understand your question; further information about the context would be needed. Maybe what you are looking for is an aspiration center. This is a physical location in a hospital where some medical procedure (such as a needle aspiration biopsy) is performed. --Denidi (talk) 01:20, 15 January 2016 (UTC)

- I don't know what's up either, but this paper [12] discusses "fine needle aspiration biopsies of the central nervous system", so it might be helpful to OP. SemanticMantis (talk) 16:09, 15 January 2016 (UTC)

- "Aspiration" has three very different meanings. Aspiration can mean "ambition" (as in "my son aspires to be a scientist"). Aspiration can also mean "drawing in" or "removing by suction" by creating a negative pressure difference in a hollow surgical instrument. And, finally, aspiration can mean the act of drawing in breath. Which one is it? :) Dr Dima (talk) 17:52, 15 January 2016 (UTC)

- Aspiration in the first sense (that is, ambition) is thought to be functionally associated with the frontal lobe; or at least, one of the symptoms associated with prefrontal cortex / frontal lobe damage is a lack of ambition. Aspiration in the third sense, breathing in, is governed by the respiratory center in the brain stem. --Dr Dima (talk) 18:17, 15 January 2016 (UTC)

Is there such a thing as "motive entropy"?

(I'm not surely back) Like sushi 49.135.2.215 (talk) 00:49, 15 January 2016 (UTC)

- As above, the question is difficult to understand. Motive is usually a term used in law or psychology while entropy is a term usually used in physics, specifically thermodynamics. I have not come across those two words being used together to describe anything. If this misses your question entirely, you could try asking the question in your native language and someone might be able to translate it for you. Vespine (talk) 04:59, 15 January 2016 (UTC)

- Two seconds of googling reveals it to be a phrase used by Carnot, hence something to do with thermodynamics. Greglocock (talk) 08:28, 15 January 2016 (UTC)

- Motive can also be an adjective meaning "relating to motion and/or to its cause". Just like a locomotive is not generally a reason why loco people commit crimes, it's unlikely that "motive entropy" has anything at all to do with the law or psychology term. ;) --Link (t•c•m) 11:42, 15 January 2016 (UTC)

- Of course it can! Fail on my part. Vespine (talk) 22:50, 17 January 2016 (UTC)

- Hm, electromotive force, magnetomotive force, and the motive force section of Projectile all speak to the usage in physics of "motive" in a different sense than the psychological/behavioral sense of motivation. Some of these terms (esp. motive force) may be losing currency, but were very popular not that long ago. SemanticMantis (talk) 16:06, 15 January 2016 (UTC)

- Here [13] is a 2003 article, freely accessible, that explains a concept of "motive entropy", with references, thusly:

“Only the potential energy and zero-point vibrational energies, E₀, and the degeneracy term, [math notation for terms] contribute to A so Frank suggested they should be called ‘motive enthalpy’ and ‘motive entropy’ after Carnot to distinguish work terms from the heat terms now called ‘thermal’ terms.”

- Here's another recent (2008) paper that defines the concept formally (eq. A20). The idea seems to be to separate "thermal" and "motive" portions of entropy, the latter of which is independent of temperature (or perhaps adiabatic?). The physical scope of these articles is a bit over my head, but these should provide good sources for anyone who wants to explain further. (Also, if anyone wants to help me out on a related sub-question: is this distinction at all analogous to latent heat vs. sensible heat? I'm fine with the math but fairly ignorant of thermodynamics.) SemanticMantis (talk) 16:06, 15 January 2016 (UTC)

Norovirus and Pathogenic Escherichia coli

Does immersion in boiling water inactivate these two pathogens, and how long does it take? Edison (talk) 05:09, 15 January 2016 (UTC)

- Yes, I found this article, which has some details. All common enteric pathogens are readily inactivated by heat, although the heat sensitivity of microorganisms varies. It appears that most viruses take just several seconds (~10 seconds) to be inactivated in boiling water. Just keep in mind that putting a potato (for example) into boiling water for 10 seconds doesn't guarantee that all viruses will be inactivated. There could be a virus caught in a crack or cavity that is too small for water to get into, and the potato can act like a heatsink long enough to keep the virus from reaching a high enough temperature. Vespine (talk) 05:53, 15 January 2016 (UTC)

- Agreed. This is why food has to be cooked right through. One more thing to mention is that even though cooking may kill the pathogenic organisms, it won't necessarily inactivate their toxins. -- The Anome (talk) 08:42, 15 January 2016 (UTC)

- Douglas Baldwin's rather wonderful book on sous vide has both the full pasteurisation time/temperature tables for the internal temperature of the food (in the section linked), and timings for how long it would take to reach those temperatures given the meat, thickness, and water bath temperature (in the individual sections for the meats). E coli is considered in the beef, lamb and pork section. The point about toxins is a very valid one - and there are some pathogens (such as Clostridium botulinum) which will just spore up at cooking temperatures, and then continue to reproduce when the temperature drops. MChesterMC (talk) 09:22, 15 January 2016 (UTC)

- The general recommendation in food preparation is to avoid the "Danger zone", which is reported as 5-60 °C, which would imply that holding food above 60 °C is generally regarded as safe; since boiling is at 100 °C, you're probably good. --Jayron32 13:22, 15 January 2016 (UTC)

- Except that food in boiling water is not at 100C (or if it is, it's horribly overcooked!). Looking at my ref above, the pasteurisation times for actual cuts of meat are much higher than the government pasteurisation tables, because it can take several hours for the centre of a joint to get to a safe temperature. This will be a lot quicker at boiling than at typical sous vide temperatures, but the boiling water is the heat source, not the thing you're trying to kill bacteria in. Your best bet is to get a meat thermometer and measure the internal temperature of your food: if it's above 70C, you're good. If it's been above 60C for 10 minutes, or 65C for 1 minute, you're good. Add 5C to each of those for poultry. Of course, full pasteurisation is overkill for most foods: steak cooked at medium or below won't be pasteurised throughout unless it's done very slowly, but for the most part it doesn't matter, since most of the bacteria are on the surface, which gets hot enough to kill them.

- Also, while holding food at 60C is safe (since most dangerous bacteria can't grow), just getting food to 60 and then eating it may not be - the pasteurisation time at 60C is about half an hour for fatty poultry, so a short time at 60C isn't going to change much. MChesterMC (talk) 14:43, 18 January 2016 (UTC)
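The rule of thumb in the post above can be written as a small check over a series of core-temperature readings. This is a hypothetical Python sketch: the function name and the reading format are illustrative, the thresholds are only the ones quoted in the post (70 °C reached, 65 °C held for 1 minute, or 60 °C held for 10 minutes, each 5 °C higher for poultry), and it assumes the food stays above a threshold between consecutive readings that are both above it. Real pasteurisation tables, such as those in Baldwin's book, vary with the food and its fat content.

```python
from typing import List, Optional, Sequence, Tuple

# (core temperature threshold in deg C, minimum continuous hold in minutes),
# taken from the rule of thumb in the post; 0 minutes means reaching it is enough.
RULES: List[Tuple[float, float]] = [(70.0, 0.0), (65.0, 1.0), (60.0, 10.0)]
POULTRY_OFFSET_C = 5.0


def meets_rule_of_thumb(readings: Sequence[Tuple[float, float]], poultry: bool = False) -> bool:
    """Check time-ordered (minutes, core temperature in deg C) readings against RULES.

    Assumes the food stayed above a threshold between two consecutive readings
    that were both above it; a reading below the threshold resets the hold timer.
    """
    offset = POULTRY_OFFSET_C if poultry else 0.0
    for base_threshold, hold_minutes in RULES:
        threshold = base_threshold + offset
        run_start: Optional[float] = None  # first reading of the current run above threshold
        for minutes, temp_c in readings:
            if temp_c >= threshold:
                if run_start is None:
                    run_start = minutes
                if minutes - run_start >= hold_minutes:
                    return True
            else:
                run_start = None
    return False


if __name__ == "__main__":
    # Hypothetical log: slow climb, then about 11 minutes above 60 deg C, peaking at 63.
    log = [(0, 20.0), (30, 45.0), (60, 61.0), (65, 62.0), (71, 63.0)]
    print(meets_rule_of_thumb(log))                # satisfies the 60 C / 10 min rule
    print(meets_rule_of_thumb(log, poultry=True))  # 65 C (60 + 5) is never reached
```

The check tries the stricter-temperature, shorter-hold rules first, but the order does not change the result: the function returns True as soon as any one rule is satisfied.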

- I see that a restaurant chain plans to reduce the incidence of E. coli and norovirus illness as follows: "Onions will be dipped in boiling water to kill germs before they're chopped.... Cilantro will be added to freshly cooked rice so the heat gets rid of microbes in the garnish." Past outbreaks of food-borne illness have identified possibly contaminated irrigation water or produce-washing water as a source of germs, and presumably, if the contamination is only on the surface of the plant material, this would decrease the likelihood of illness, if not guarantee safety. Edison (talk) 16:06, 15 January 2016 (UTC)

Question about Quarks

Hello! I was thinking about particle physics when a thought occurred to me: "How did Quarks form in the early universe? I know that we are pretty certain that they are elementary, so why were they there in the universe at that time? Are they little balls of energy, or did some force (like Gravity) cause space-time to collapse on itself to form Quarks?" So how did they form? By the way, I only know basic physics, so please explain any "complicated stuff" to me. Megaraptor12345 (talk) 15:53, 15 January 2016 (UTC)

- I'm not sure we have a very good idea. You might want to read up on baryogenesis (and by that I mean not just the Wikipedia article, but as a concept to focus your research on outside of Wikipedia as well), but the main take-away I've always had is that we have very general, broad, and not-at-all in focus ideas about what went on during this epoch of the early universe, and that there are several competing (consistent but as yet not well supported) theories on what really went down. --Jayron32 17:09, 15 January 2016 (UTC)

- You actually have to page back quite a few epochs from the quark epoch to reach the legendary grand unification epoch, which lies somewhat west of the Wicked Witch of the West, perhaps. Our article is not very informative due to the lack of an accepted grand unification theory. But it wasn't until the quark epoch that they (mostly) lacked more massive competition like W and Z, I suppose. Wnt (talk) 17:15, 15 January 2016 (UTC)

- Sorry, what is W and Z? Megaraptor12345 (talk) 22:05, 15 January 2016 (UTC)

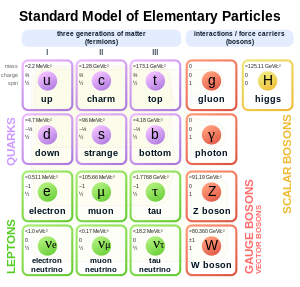

- The bosons in the image below, I think. SemanticMantis (talk) 22:28, 15 January 2016 (UTC)

- I'll have to check it out on my next trip to the Big Bang Burger Bar. --Jayron32 17:30, 15 January 2016 (UTC)

- @Megaraptor12345: The W and Z bosons are mentioned in the previous epoch before the quark epoch, the Electroweak epoch. Note that electroweak theory has been tested and is pretty well agreed upon ... whether that means that period of the universe is well agreed on, I don't know. Wnt (talk) 23:09, 15 January 2016 (UTC)

- It is believed that in the period prior to 10⁻⁶ seconds after the Big Bang (the quark epoch), the universe was filled with quark–gluon plasma, as the temperature was too high for hadrons to be stable. Such a quark–gluon plasma was reportedly produced in the Large Hadron Collider in June 2015. However, the quark has not been directly observed in isolation. It is a category of Elementary particle introduced in the Standard model as part of an ordering scheme for hadrons that appears to give verifiable predictions of particle interactions, but as yet we have no agreed explanation of why there are three generations of quarks and leptons, nor can we explain the masses of particular quarks and leptons from first principles. AllBestFaith (talk) 18:25, 15 January 2016 (UTC)

- This is all very well, but I am not sure I put my question right. Let me start again. How did matter form? I thought in my original question that quarks were the original particle, but they were not, were they? So how did any sort of matter form? Did it appear as a personification of energy or as globs of some force? Megaraptor12345 (talk) 22:14, 15 January 2016 (UTC)

- Quarks will appear spontaneously through pair production and other interactions when enough energy is available. They are just a particular mode of vibration of the quantum field, and all of the vibrational modes are coupled together in complicated ways, so if there's enough energy there will unavoidably be quarks. If inflationary cosmology is correct (which it may not be), that energy came from the inflaton [sic] field, and where that came from is anyone's guess. -- BenRG (talk) 22:50, 15 January 2016 (UTC)

- What is a "quantum field"? Megaraptor12345 (talk) 16:49, 16 January 2016 (UTC)

- It's a field (physics), like the electromagnetic field but with more oscillatory degrees of freedom that correspond to particles other than the photon. It isn't really the field that's quantum, it's the world that's quantum. Because the world is quantum, oscillations of any field are quantized, and each quantum of oscillation energy is called a particle. The properties of the field tell you everything about physics at ordinary energies; all particles and interactions are oscillations of the field. The physics is described by the Standard Model Lagrangian and (the quantum version of) Lagrangian mechanics. -- BenRG (talk) 21:50, 16 January 2016 (UTC)

- More specifically, it's one of the set of fields predicted by quantum field theory. This video focuses on the Higgs mechanism, but touches a bit on QFT, as you need to understand the basics to understand why physicists care about the Higgs. --71.119.131.184 (talk) 08:09, 18 January 2016 (UTC)

- This isn't my field, but here's the perception I have: The universe is a bit like a three ring circus. New kinds of physics come in, stay a while, and eventually are ushered offstage. The way this ushering occurs is that there is physics for very high-energy particles that occurs on a very short time scale and physics for lower energy particles that occurs on a longer time scale. If proton decay occurs, and there is no end of cosmic expansion/heat death of the universe etc., then one day all of our protons and neutrons may be considered just some weird high energy physics that happened during the first few moments after the Big Bang, before the universe settled into steadier assemblages of neutrinos interacting at a more measured pace. Whereas if you look far enough back, there was a time when an ordinary photon of radiated heat had enough energy to make Z and W particles, and perhaps quarks were a minor constituent - something like neutrinos to us in that they didn't carry as much mass and so might have seemed relatively irrelevant ... though I'm not sure the analogy really makes sense due to quarks' charge - certainly I have no guess of how relevant charge was in the era before electromagnetic and weak forces were distinguishable. While free quarks can't exist in our space, back then there was so much energy crammed so close together that the quarks were in quark-gluon plasma, which is tolerable, so basically any thermal photon could make quarks and antiquarks spontaneously. But I don't think anyone knows just how many acts there were at the beginning of this circus, or even if it had a beginning, rather than just smaller and smaller intervals of time in a convergent series. Wnt (talk) 23:09, 15 January 2016 (UTC)

Crude Oil

Will the crude oil rate slash down further? — Preceding unsigned comment added by 59.88.196.26 (talk) 17:44, 15 January 2016 (UTC)

- Maybe, maybe not. Please see WP:CRYSTAL; we cannot speculate on this. Here [14] is a relevant article from the Economist published today that you might be interested in. Other respondents may choose to provide references that speculate on this, but they should not speculate here. SemanticMantis (talk) 17:50, 15 January 2016 (UTC)

- The report I heard on NPR said that prices may well drop further, yes. They based that on current stockpile levels and the slow rate at which oil producers (and producers of substitutes, like natural gas) are currently reducing production. Eventually, production levels will be brought down to match demand, and then you can expect prices to stabilize. StuRat (talk) 22:10, 15 January 2016 (UTC)

- The price of oil, or of any commodity for that matter, will either go up, or down, or remain stable. Guaranteed. ←Baseball Bugs What's up, Doc? carrots→ 07:58, 16 January 2016 (UTC)

- Today sanctions against Iran were dropped, allowing them to sell their substantial oil reserves on the world market. This can be predicted to lower prices due to increased supply. StuRat (talk) 22:05, 16 January 2016 (UTC)

If a person has an MBA, is it wrong to say he's an economist?

Questions about EM radiation

I've been wondering about some things and I'd appreciate any insight into them.

1) I often see visible light described as electromagnetic radiation. If I have a green light lit and had a radio capable of receiving at 563 THz, would I receive anything? I assume that I wouldn't, since a radio deals with electrons and not photons.

2) I expect that in any case where a device is powered entirely from RF energy (e.g. a crystal radio), the current draw of the device must be somehow apparent at the transmitter. If this is true, is any conductive object which is connected to ground also drawing power from the transmitter? Does a powered, tunable radio draw less current from the transmitter when not tuned to the transmitter versus when it is tuned to it? Is the load actually measurable?

3) I recall a time when I was transmitting an AM signal into a dummy load and monitoring it with a handheld receiver. As I moved around, I noticed places in the room where the carrier was strong, but the modulation was weak. I observed the same when transmitting an FM signal using an antenna. If the signal wasn't simply just too strong for the receiver, what would cause this? SphericalShape (talk) 00:16, 16 January 2016 (UTC)

- A radio that receives at 563 terahertz is a photodetector. You can't build whip-antennas that small; your "receiver" would have to look and act like a photodiode.

- Yes, everything affects everything else; the electromagnetic impedance as seen by a transmitter is minutely affected by every natural and man-made "receiver" in the environment, all the way out to infinite distance; but the farther away, the smaller the effect; and the farther away, the longer the propagation delay. (The behavior of an object a trillion light-years away has a trillion-year propagation delay in effecting any change to the impedance observed at the transmitter.) For almost all practical purposes, these effects are so small that they are negligible; the ensemble averages out into the background, which is the impedance of free space. Sometimes, engineers use tuned, matched coils: this is about the only case where the effect of the receiver-antenna's loading is non-negligible, because those systems are designed for power transfer. Chances are, the effective load caused by your crystal radio (drawing nanowatts of power) did not register on the VU meter tracking the power loading at the AM station fifty miles away. When you start throwing amplified circuits and tunable radios into the mix, well, the formal scientific method to analyze this is electrical engineering. The effective load on an amplifier's output is isolated from the input, but there is still some tiny, tiny effect: when you power on your powered radio, the antenna's impedance gets a slight nudge one way or the other; and just as before, that change propagates all the way back as a difference in the load for the radio transmitter. On the whole, these effects are absolutely tiny. The transmitter sees more load-variance based on the wind blowing molecules of air around than due to all the cumulative effects of all the radio receivers out to infinity and back, and even that is smaller than the load-variance due to a hundred other effects, like the thermal noise inside the transmitter's power supply.

- Any number of effects might explain this subjective observation; everything from frequency-dependent tuning, to faulty equipment; but it's futile to speculate based on an anecdote.

- Nimur (talk) 01:24, 16 January 2016 (UTC)
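To put point 1 in scale, the free-space wavelength λ = c/f and the photon energy E = hf can be compared across broadcast and optical frequencies. This is a minimal Python sketch: the half-wave dipole length λ/2 is the idealised free-space figure and ignores end effects and the very different behaviour of metals at optical frequencies, and the 1 MHz and 100 MHz entries are simply representative AM and FM broadcast frequencies chosen for illustration.

```python
# Exact SI values: speed of light, Planck constant, and joules per electronvolt.
C = 299_792_458.0             # m/s
H = 6.626_070_15e-34          # J*s
E_CHARGE = 1.602_176_634e-19  # J per eV


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength, lambda = c / f, in metres."""
    return C / freq_hz


def photon_energy_ev(freq_hz: float) -> float:
    """Energy of one photon, E = h * f, expressed in electronvolts."""
    return H * freq_hz / E_CHARGE


EXAMPLES = {
    "AM broadcast, 1 MHz": 1e6,
    "FM broadcast, 100 MHz": 100e6,
    "green light, 563 THz": 563e12,
}

if __name__ == "__main__":
    for label, f in EXAMPLES.items():
        lam = wavelength_m(f)
        print(
            f"{label}: wavelength {lam:.3g} m, "
            f"ideal half-wave dipole {lam / 2:.3g} m, "
            f"photon energy {photon_energy_ev(f):.3g} eV"
        )
```

For 563 THz this gives a wavelength of about 532 nm (the green DPSS laser line mentioned later in the thread), an ideal half-wave length of roughly 266 nm, and about 2.3 eV per photon, versus a 150 m half-wave dipole and nanoelectronvolt photons at 1 MHz.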

- I always thought (on an intuitive level, since I don't have any systematic knowledge in the area) that radios didn't load the transmitter at all, that this had something to do with "near" and "far" fields and that it was the chief difference between radio transmission and stuff like transformers, induction chargers and NFC tags. Asmrulz (talk) 05:53, 16 January 2016 (UTC)

- This is a matter of practical semantics. If the theoretical effect is so small that even our best measurement equipment can't see it ... does this constitute "no effect"? Well, in the same sense that there is "no chance" that you could win the lottery... we're overloading the meaning of "zero" to mean both "zero" and "almost zero." The semantic problem is very subtle: there is a practical meaning of "almost zero," and there is an even more rigorously-defined theoretical mathematical definition of almost zero; and I admit some guilt in conflating both cases with "exactly zero." (This is because the English language is an inapt choice for certain specific kinds of descriptions.) This ambiguity of natural language leads to a very common semantic problem, and if we aren't very careful with our terminology, it can lead us to believe an incorrect conclusion, or to find a paradox where none really exists.

- So, to directly address Asmrulz's concern: receiver-antennas in the far field have almost zero effect on the transmitter. How tiny is that effect? It is so small that we probably can't measure it; but we can surely calculate it by solving for the complete electromagnetic field equations, at all points, and then performing some mental and algebraic gymnastics to mathematically transform the result into something that looks like an impedance.

- This is where real radio engineering intuition is needed: if we start from pure theory of physics, it might take us days to perform such a calculation, only to find out at the end that our result can be safely ignored. Instead, if we start from practical experience, we know the approximate result of the difficult calculation, and therefore we don't actually perform it. This intuitive leap - knowing the answer by gut-feel - is a constantly-recurring theme in applied electromagnetics. It is one reason that other engineers call high frequency radio and antenna work a "black art."

- Nimur (talk) 17:10, 16 January 2016 (UTC)

- My intuition is that of a hobbyist who loves electronics über alles but struggles with quadratic equations, not to mention PDEs. Perhaps in my next life... Asmrulz (talk) 19:16, 16 January 2016 (UTC)

- Does a TV detector van work by this method or Van Eck phreaking (or is it even real?) Wnt (talk) 11:26, 16 January 2016 (UTC)

- From the picture it looks to be real. The antenna would be detecting UHF or VHF transmissions from the TV local oscillator. The TV is a Superheterodyne receiver. Graeme Bartlett (talk) 11:32, 16 January 2016 (UTC)

- I tend to disbelieve the practical utility or historicity of the TV Detector Van; but these vans are an important part of the common culture and mythos amongst paranoids and tin-foil-hat wearers. Surely, the technology could have existed; and in all seriousness, it probably was used at some time or other. But, proposing that it was, or is, part of an ongoing mass-surveillance effort - well, that's much more tenuous a claim.

- Of all the effects one could use to remotely determine if a television set is presently powered, the effect of its antenna would be far from the easiest. There are so many stronger signals, where do we begin enumerating them? The conventional cathode ray tube emits all kinds of characteristic radio energy: the flyback transformer carries a lot of power, and some of that is radiated outward. That signal, or the high voltage generated by the electron gun, is probably the easiest signal to detect. Heck, the time-varying load on the AC power supply mains would be easier to spot.

- Obviously, new televisions do not use cathode ray tubes: if there really is a TV detector van, and it really does operate in this century, then it probably uses "some other method." Were I in charge of designing such an invasive technology, I'd simply remark that it is incredibly easy to hide a "bug" on a circuit board these days; a sophisticated integrated circuit in today's technology is so small, it could piggy-back on the back side of the solder-pad of a passive component and nobody would even look for it; or you could hide it inside the software in any of the dozens of independently-programmable computers that can be found on any modern electronic device. Your television's power cable probably has more compute-power than a PDP-11; and it's being built by some third-party vendor of bargain-basement commodity electronics technologies: such commodity vendors might be in a dire financial way and therefore susceptible to outside "funding opportunities." The evil genius at Mass Surveillance, Inc., could simply repurpose the fuel budgets from the surveillance vans, use the funds to pay off the vendor, and presto: every television could be carrying a cooperative surveillance device, broadcasting a tiny unique identifier to self-report its activity via wireless link. The days of being able to detect, let alone counter, such electronic surveillance technologies are long over. If the baddies wanted to put you under surveillance, they could do so, and you wouldn't even notice it. When is the last time anybody ran a malware check, or inspected the open-source software, on their TV's power supply controller?

- Nimur (talk) 17:47, 16 January 2016 (UTC)

- Quibbles with the above are that the high voltage in a CRT television is not "generated by the electron gun" but instead is rectified via a Voltage multiplier from a pulse winding on the Flyback transformer, and that identifying payment evaders of a decreed TV licence fee is a legitimate part of law enforcement. The article Television licence shows that funding sources in different countries vary between licence fee and advertising. A rhetoric that licence funding is imposed by "evil...baddies" is, at best, ignorant that TV broadcasting always needs to be funded somehow or, at worst, unsourceable covert conspiracy speculation. AllBestFaith (talk) 20:24, 16 January 2016 (UTC)

- For what it's worth, I do not believe that the UK's television license fee is evil; it's a complex policy that might actually promote better-quality, less-biased broadcasting, and I have often wondered if that policy could be implemented in the United States. Broadcast economics has always worked very differently on this side of the pond, and our government has a different legal and historical relationship with broadcasters, and with respect to our "free press" in general; so the issue is not simple at all. That's a conversation for another time.

- Nor do I actually believe that any government is conducting mass surveillance in the fashion described above - certainly not for the purposes of verifying television-usage. I hope my comments are not construed in that way. To clarify my intended point: if any malicious entity - government or otherwise - wished to conduct mass surveillance, for any purpose, the most efficacious methods in this century probably need not involve driving around in vans.

- With respect to your other quibbles - point conceded; I was a bit sloppy in my paraphrased description of the CRT. Interested readers should refer to our article for more details. Nimur (talk) 04:17, 17 January 2016 (UTC)

- Every new broadcasting station has to conduct field measurements throughout its coverage area to establish its radiated signal strength and the relative strength of interfering signals. This work is naturally done by engineers in a vehicle in the USA, and in the UK in a prominently labelled TV detector van.

- UK detector vans are typically equipped with panoramic display receivers such as this Eddystone combo, on which the internal oscillator of a nearby TV receiver is traceable as a radiation spike at 38.9 MHz below a vision carrier frequency. A number of these vans must also be kept in service for investigating reports of illegal transmitters (including espionage devices) and interference. I can attest that during the British GPO monopoly control of broadcasting, my complaint about interference to TV reception was met by visits from an enthusiastic engineer carrying a range of signal tracing and filtering equipment, all covered by the standard licence fee. It is obvious that licence collecting authorities find it cost effective to maximise the public expectation that unlicensed receivers will be traced while seldom actually expending resources on general surveillance. A non-technical lay person in 1950s Britain might not easily distinguish between a dipole or a ladder on the roof of the ubiquitous telephone service vans and suspect that they were all TV detector vans! On Swedish TV I have seen placards that say "We are inspecting <name of town> with a receiver detector. Thank you for keeping your TV licence renewed."

- This thread drifted into politically charged speculation about malicious surveillance hardware, but the article Conditional access describes non-covert methods and hardware used to protect broadcast TV content pre-emptively. AllBestFaith (talk) 16:51, 17 January 2016 (UTC)

- 1) Your assumption that a radio deals in electrons, not photons, is incorrect. There is no dividing line between light and radio waves: they are both types of EM radiation and are both made up of photons. Although, as Nimur said, a standard radio antenna won't pick up light-frequency photons, you could in theory build a nano-scale antenna that would do just that. See the experimental device called the nantenna. It's very inefficient and impractical, but in principle it does what you describe. --Heron (talk) 13:25, 16 January 2016 (UTC)

- 2) In practice, no to all your questions. The transmitter launches radio waves into free space and doesn't know what happens to them afterwards. The rest of the world acts as an almost perfect absorber unless the transmitter has the misfortune of being surrounded by tinfoil. It doesn't matter whether the energy is absorbed by a crystal set, a transistor radio or a tree; the transmitter just sees its energy disappearing into a bottomless pit. Incidentally, I have to quibble with Nimur's statement that the impedance of free space is an average of the impedances of all the stuff in the universe: it's not, it's the impedance of any piece of empty space. The wave from the antenna immediately sees the impedance of free space when it leaves the antenna, not after it's had time to bounce around the universe and average everything out. --Heron (talk) 13:44, 16 January 2016 (UTC)

- In case there was any confusion about my statement: the impedance of free space is not caused by the loading effects of all objects in the far field. Rather, the antenna sees a load, which is a superposition of free space plus any other loading effects. This value averages out to the impedance of free space because the additional loading effects are generally negligible. I apologize that my statement was confusing. Nimur (talk) 15:13, 16 January 2016 (UTC)
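A minimal sketch of why small extra loading is negligible, assuming the textbook case of a plane wave meeting a flat boundary at normal incidence, where the reflected fraction depends only on the impedance mismatch, Γ = (Z − Z0)/(Z + Z0). The helper names are hypothetical. It computes Z0 = μ0c0 and shows that a 1% impedance perturbation reflects only about 0.0025% of the power, while ordinary glass (wave impedance Z0/1.5 for refractive index 1.5) reflects about 4% and a perfect conductor reflects everything. The same formula, with a feedline's characteristic impedance in place of Z0, gives the SWR a meter on the feeder reports.

```python
import math

# mu0 was exactly 4*pi*1e-7 H/m before the 2019 SI redefinition; today it is
# measured but agrees with that value to about 1 part in 1e10.
MU_0 = 4 * math.pi * 1e-7      # vacuum permeability, H/m
C_0 = 299_792_458.0            # speed of light in vacuum, m/s (exact)
Z_0 = MU_0 * C_0               # impedance of free space, ~376.73 ohms


def reflection_coefficient(z_load: complex, z_ref: float = Z_0) -> complex:
    """Normal-incidence reflection coefficient of z_load seen from a medium (or line) of impedance z_ref."""
    return (z_load - z_ref) / (z_load + z_ref)


def swr(gamma: complex) -> float:
    """Standing wave ratio from the reflection coefficient magnitude."""
    mag = abs(gamma)
    if mag >= 1:
        return math.inf        # total reflection: pure standing wave
    return (1 + mag) / (1 - mag)


def reflected_power_fraction(gamma: complex) -> float:
    """Fraction of incident power that is reflected back."""
    return abs(gamma) ** 2


if __name__ == "__main__":
    print(f"Z0 = {Z_0:.2f} ohm")
    cases = {
        "free space": Z_0,
        "1% perturbation": Z_0 * 1.01,
        "glass, n = 1.5": Z_0 / 1.5,
        "perfect conductor": 0.0,
    }
    for name, z in cases.items():
        g = reflection_coefficient(z)
        print(f"{name:18s} |Gamma| = {abs(g):.4f}  SWR = {swr(g):.3f}  "
              f"reflected = {100 * reflected_power_fraction(g):.4f} %")
```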

- Heron is correct to point out that the impedance of free space is a physical constant that is fixed by definition relative to the S.I. base units. It is seen by a transmitting antenna both "immediately" and over prolonged time as radiation propagates away, never to return; I mention exceptions to this scenario in response no. 2 below.

- 1. 563 THz, or 563×10¹² Hz, is the frequency of green monochromatic light emitted by a common DPSS laser pointer. Many other frequency distributions that stimulate the medium-wavelength (M) cones of the retina can give the same perception of green, because the eye is not a precise spectroscope. A few specialized radio receivers used in radio telescopes detect electromagnetic radiation at millimeter and submillimeter wavelengths, see [15] and [16], but these are far below visible or infrared light frequencies. Many types of photodetector respond to green light; photoresistors, photovoltaic cells, photomultipliers, photodiodes, phototransistors and others convert incoming photons to an electron current, which could constitute a non-tunable receiver.

- 2. The antenna of a transmitter is designed to deliver electromagnetic energy into the impedance of free space (i.e. the wave impedance of a plane wave in free space), which equals the product of the vacuum permeability (magnetic constant) μ0 and the speed of light in vacuum c0, i.e. Z0 = μ0c0 ≈ 376.73 ohms. Theoretically any object with a different impedance that intrudes into the space around the transmitter causes a reflection at the point of mismatch, and therefore a mismatch effect at the input to the transmitter antenna. Usually in broadcasting the powers reflected by receiver antennas are negligible and undetectable in comparison with the power delivered to space. Exceptions include large metal structures near a transmitter, which may necessitate adjusting the antenna matching circuit with the aid of an SWR meter, and the deliberate analysis of reflected radio waves, which is the basis of radar. The instruments known as the grid dip oscillator and gate dip oscillator may be regarded as small transmitters with inductive antennas that are sensitive to power absorbed in any nearby tuned circuit, and are useful for finding its resonant frequency by trial-and-error tuning. A tuned receiver front-end draws most power from the antenna when its resonant frequency equals the transmitted frequency. The power dissipated in an RLC circuit can be calculated if its Q factor is known.

- 3. The situation where a signal is detected near a dummy load suggests the load is imperfectly matched to the transmitter or imperfectly screened, so the OP detected residual leakage. It is unlikely to have reduced AM reception, though the sound volume from most AM radios decreases when the signal strength is weakened, e.g. by rotating the handheld radio. Broadcast FM uses wavelengths of about 3 m (roughly 2.8 to 3.4 m across the common 88 to 108 MHz band), see FM broadcasting, so where there are reflecting conductive surfaces, moving a receiver by a fraction of that distance can change the relative phasing of multipath interference, which may locally distort, weaken or cancel reception. AllBestFaith (talk) 14:06, 16 January 2016 (UTC)
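A minimal sketch of the multipath point in item 3, assuming a single flat reflector hit at normal incidence by a plane wave (real rooms have many reflections and the geometry is rarely this clean). `wavelength` and `field_magnitude` are hypothetical helper names, and the reflection coefficient of -0.8 is an illustrative value, not a measurement. The direct and reflected waves add with a phase set by the round-trip distance, so nulls repeat every half wavelength; at 100 MHz, a shift of about 0.75 m (a quarter wavelength) can take the receiver from a null to a peak.

```python
import cmath
import math

C_0 = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength(freq_hz: float) -> float:
    """Free-space wavelength in metres."""
    return C_0 / freq_hz


def field_magnitude(distance_m: float, freq_hz: float, gamma: complex = -0.8) -> float:
    """Relative field strength at distance_m in front of a flat reflector.

    Simplified two-wave model (assumption): incident wave of unit amplitude plus
    a reflected wave scaled by gamma (-1 for a perfect conductor), delayed by the
    round trip 2 * distance_m to the reflector and back.
    """
    k = 2 * math.pi / wavelength(freq_hz)  # wavenumber, rad/m
    return abs(1 + gamma * cmath.exp(-2j * k * distance_m))


if __name__ == "__main__":
    f = 100e6  # a mid-band FM broadcast frequency (illustrative)
    lam = wavelength(f)
    print(f"wavelength at {f / 1e6:.0f} MHz: {lam:.2f} m")
    # Walk the receiver away from the reflector in lambda/8 steps.
    for step in range(9):
        d = 1.0 + step * lam / 8
        print(f"d = {d:5.2f} m  relative field = {field_magnitude(d, f):.2f}")
```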

- Just let me add that Near and far field is our article about what Asmrulz mentioned above. – b_jonas 12:52, 18 January 2016 (UTC)

January 16

Cartilaginous fish outcompeting bony fish as predators

Bony fish (Osteichthyes) vastly outnumber cartilaginous fish (Chondrichthyes) in terms of both number of species and biomass. But in the ocean, more cartilaginous fish than bony fish are apex predators. Why would cartilaginous fish have outcompeted bony fish in that particular ecological niche? —SeekingAnswers (reply) 03:12, 16 January 2016 (UTC)

- You may be cherry picking your data a bit. The apex predators in the sea would have to include whales, but you excluded them by specifying fish. So, once you eliminated whales, then sharks are a fairly large portion of the apex predators that remain, and they happen to be cartilaginous fish. StuRat (talk) 03:33, 16 January 2016 (UTC)

- That's not cherry picking, since I never asked about bony or cartilaginous fish vis-à-vis mammals; I asked specifically about bony and cartilaginous fish vis-à-vis each other. The question remains why cartilaginous fish would outcompete bony fish in that niche. —SeekingAnswers (reply) 05:03, 16 January 2016 (UTC)

- Predators are typically vastly outnumbered by their prey. If they weren't, the predators would die out. Evolutionary pressure may have favored bony fish for survival of the prey, while sharks were just fine as they were. And are. ←Baseball Bugs What's up, Doc? carrots→ 07:53, 16 January 2016 (UTC)


- Look at r/K selection theory. Sharks have a low reproductive rate with small clutches fertilized internally, while bony fish produce a huge number of eggs, with the newborns often being planktonic. This means that in niches filled by smaller fishes, bony fish will tend to have an advantage. You can see the exact same thing comparing the more primitive conifers, which comprise the tallest and oldest trees, with the angiosperms with their advanced systems of pollination and seed dispersal. If you consider plant succession, angiosperm "weeds" will colonize open land first, but conifers like the Douglas fir will tend to be among the apex species. μηδείς (talk) 17:55, 16 January 2016 (UTC)
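The r and K in r/K selection theory are the two parameters of the logistic growth equation, dN/dt = rN(1 − N/K): r is the intrinsic rate of increase and K is the carrying capacity. As a rough sketch with made-up parameter values (not data for any real fish or tree), the closed-form solution shows why a high-r population refills an emptied niche much faster than a low-r one, even when both level off at the same K. The function names are hypothetical.

```python
import math


def logistic(t: float, n0: float, r: float, k: float) -> float:
    """Closed-form solution of dN/dt = r*N*(1 - N/K) with N(0) = n0."""
    a = (k - n0) / n0
    return k / (1 + a * math.exp(-r * t))


def time_to_fraction(n0: float, r: float, k: float, frac: float = 0.9) -> float:
    """Time for the population to grow from n0 to frac*K (requires n0 < frac*K)."""
    a = (k - n0) / n0
    return math.log(frac * a / (1 - frac)) / r


if __name__ == "__main__":
    K = 1000.0  # hypothetical carrying capacity of a newly opened niche
    N0 = 10.0   # hypothetical founding population
    for label, r in (("high r (weed-like)", 2.0), ("low r (shark-like)", 0.2)):
        t90 = time_to_fraction(N0, r, K)
        print(f"{label}: reaches 90% of K after t = {t90:.1f} time units")
```

The model only captures the colonization-speed half of the argument; it says nothing about which strategy ends up holding the apex niches.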

- @Medeis: Yes r/K may come into it, as may size at birth. But IMO that alone doesn't really explain the issue (if there even is an issue to explain ;). You might be interested to know that plant succession can go the other way too. E.g. Loblolly pines in the Carolinas come in first after clear cuts or fires, and the climax community has many more hardwood broadleaf species (see e.g. Christensen and Peet, 1984, or most any of the studies on the Piedmont or Duke Forest). The fast growth of many conifers is tied to early successional status, and is also why they are such an important timber source. A professor of forestry once told me that this can be seen as a broad, general trend: east of the Rockies, conifers tend to be earlier successional, while in the west they tend to be late successional. As to the OP @SeekingAnswers: I think this is a very interesting question, but I do think the premise should be clarified and perhaps challenged a bit. Are you saying that most cartilaginous fish species are top predators? Or that most top predator species are cartilaginous fish? Or are you claiming that, among species, a higher percentage of cartilaginous fish are top predators, as compared to that figure for bony fish? Some of these might be true, but none of them are obviously true to me, and the hypothetical reasons should differ for each one. I'm fairly busy this week, but if you contact me on my talk page I can send more refs later. SemanticMantis (talk) 16:06, 18 January 2016 (UTC)

- What I was suggesting (and really to make the point you'd need an essay) is that there was an earlier stage at which most of the existing "fish" niches were occupied by chondrichthyes, i.e. sharks, rays and chimeras, all of which have internal fertilization and produce relatively large eggs compared to bony fish. (I am not sure about the reproduction habits of the extinct spiny sharks and placoderms.)

- When the teleosts arose (comprising the vast majority of bony fishes), they had largely external reproduction (see Teleost: Reproduction and lifecycle) with a huge number of strategies for dispersal. While the teleost Mola mola lays up to 300,000,000 eggs, sharks like the great white (see Great white shark: Reproduction) have only a few young after a very long gestational period.

- True sharks existed in the Silurian period, and animals like the six-foot predator Cladoselache appeared in the Devonian, while teleosts only appeared some 100 million years later, in the Triassic. Sharks already filled K-selected niches at that point, hence the teleosts spread like "weeds" (see my angiosperm analogy above) into many r-selected niches, a great number of which might never before have been occupied.

- My point about conifers was not that they are only apex-succession flora; they are not. Where I grew up, a sight like that at the left would be typical of a plot 5-10 years after colonization, but eventually the oaks will encroach. I am quite familiar with pine barrens, and the fact that if fires are suppressed in those areas the pines will be replaced by oaks and other late-succession hardwoods. My point is that there are no conifer weeds, just like there is no shark equivalent of duckweed or crabgrass. μηδείς (talk) 18:32, 18 January 2016 (UTC)

- There are perhaps a few edge cases, but I agree that there aren't any squarely ruderal conifers today. However, there used to be [17], [18]. SemanticMantis (talk) 19:18, 18 January 2016 (UTC)

Why are women bad at chess?

In chess rankings there are only 1 or 2 women in the top 100 players. Does anybody know why? 2.102.185.25 (talk) 06:07, 16 January 2016 (UTC)

- Maybe all but those 1 or 2 simply don't like chess. That doesn't mean that women are inherently "bad" at chess. ←Baseball Bugs What's up, Doc? carrots→ 07:49, 16 January 2016 (UTC)

- Maybe the OP's phrasing of women being 'bad' at chess was misleading, but it still raises the question of why there are so few elite chess players who are women. Isn't it also true that men's brains are generally wired to be more logical than women's brains, or has that theory been debunked now? 95.146.213.181 (talk) 18:59, 16 January 2016 (UTC)

- If it was simply a difference in interest, then you would need something like 50 to 100 times as many men interested in chess as women to predict that 1 or 2 out of 100 figure. Is there really this much of a discrepancy ? Somehow I doubt it. StuRat (talk) 22:10, 16 January 2016 (UTC)
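StuRat's arithmetic can be checked with a toy model. Assuming both groups draw ratings from the same distribution (here a normal curve with mean 1500 and standard deviation 200, chosen arbitrarily), every top-100 slot is equally likely to go to any player, so the smaller group's expected share is 1/(1 + ratio): about 2% for a 50:1 participation ratio and about 1% for 100:1. The helper names are hypothetical, and the model deliberately ignores any difference in the distributions themselves, which is the very point under debate.

```python
import heapq
import random


def expected_minority_share(ratio: float) -> float:
    """Expected share of the top-N held by the smaller group, assuming identical
    rating distributions and a larger group `ratio` times as big."""
    return 1.0 / (1.0 + ratio)


def simulate_top_n(n_small: int, ratio: float, top_n: int = 100, seed: int = 0) -> int:
    """Count how many of the top_n simulated ratings belong to the smaller group."""
    rng = random.Random(seed)
    n_large = int(n_small * ratio)
    # Assumption: both groups share one rating distribution; only group size differs.
    ratings = [(rng.gauss(1500, 200), True) for _ in range(n_small)]
    ratings += [(rng.gauss(1500, 200), False) for _ in range(n_large)]
    top = heapq.nlargest(top_n, ratings, key=lambda item: item[0])
    return sum(1 for _, is_small in top if is_small)


if __name__ == "__main__":
    for ratio in (10, 50, 100):
        print(f"{ratio:>3}:1 participation -> expected share "
              f"{100 * expected_minority_share(ratio):.1f}%, one simulated top 100 has "
              f"{simulate_top_n(2_000, ratio)} from the smaller group")
```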

This debate is as old as the hills, with many hypotheses and speculations, but there are no definite answers (and I don't expect there will ever be). Here is a Scientific American article blaming it on the negative effect of stereotypes, while chess grandmaster Nigel Short claims that girls are not "hard-wired" to play chess well (while himself having a 3:8 score against Judit Polgar). Personally I'm most sympathetic to the hypothesis that 100% confrontational and 0% cooperative games are far more attractive to men than to women: without a broad base, the pyramid of female chess players will not grow high, so most of the leading chess players will always be men, far beyond the gender ratio in total players. --KnightMove (talk) 08:34, 16 January 2016 (UTC)

- It's difficult to disentangle "not interested in" and "not good at". If one group of people are dramatically less interested in some activity than some other group, then, inevitably, they will appear to be less good at it because of the lower probability of the most talented members of the group being involved. On the other hand, if some group is less good at something, they'll be less likely to participate in it. Sorting out which of those is the case is extremely difficult.

- This applies in varying degrees to chess, mathematics, physics, computer programming and a range of other activities in which women are severely under-represented. One might argue about correlation and causation here - are they being actively discouraged in some manner - are they being passively left out in some manner - or are they simply less interested in those subjects for some reason of genetic pre-disposition - or are they (perhaps) actually less good at it? It may be a combination of such things.

- It's equally possible to find groups where men are under-represented, or you can pick any other social, ethnic or religious group and come up with similar biases in similar areas of human interest.

Actually I play in Union Square, Manhattan a lot, where I've met some pretty genius-y women chess players. In fact, I met a nine- or ten-year-old girl who has been training for less than a year and holds a rating of around 1600. Yanping Nora Soong (talk) 05:03, 18 January 2016 (UTC)

Follicular lymphoma

[it looks like a new user posted this without a heading] Wnt (talk) 13:23, 16 January 2016 (UTC)

Information on follicular lymphoma — Preceding unsigned comment added by 24.210.25.88 (talk) 12:54, 16 January 2016 (UTC)

- Well, we have an article on follicular lymphoma. Please say what aspect you're most interested in. Wnt (talk) 13:23, 16 January 2016 (UTC)

Is the “ankle” on both sides of the foot?

A little above the foot, on both sides, there are projections: one on the inside of the leg and one on the outside of the leg. My question is whether both are called the "ankle" or just one of them. 92.249.70.153 (talk) 12:56, 16 January 2016 (UTC)

- The ankle is a broad region that includes them both and much more. You're thinking of the medial malleolus (on the inside) and the lateral malleolus (on the outside). Wnt (talk) 13:27, 16 January 2016 (UTC)

Radio tuning by analog-to-digital conversion?